AI-Powered Cybersecurity: The End of Human-Only Security Teams?

Post Summary

AI is reshaping cybersecurity in healthcare by addressing rising threats, including AI-driven cyberattacks. Here's the key takeaway: AI tools can detect, analyze, and respond to threats far faster than human teams alone. But it’s not about replacing humans - it’s about letting AI handle repetitive tasks so security teams can focus on decision-making and oversight.

Key Points:

- AI's Role: AI systems monitor networks, detect anomalies, and respond to threats in real-time, reducing breach costs and improving patient safety.

- Dual Use: Cybercriminals also exploit AI for advanced attacks like phishing, data tampering, and algorithm manipulation.

- Regulation Updates: U.S. healthcare regulations now require AI systems to meet strict compliance standards (e.g., HHS AI strategy unveiled Dec. 2025).

- Human Oversight: AI supports, but doesn’t replace, human expertise in managing complex incidents and maintaining ethical standards.

AI is transforming cybersecurity strategies, but human judgment remains critical. The future lies in combining AI’s speed with human insight to protect sensitive healthcare data effectively.

What AI Means for Healthcare Cybersecurity

What AI-Powered Cybersecurity Is

AI-powered cybersecurity uses advanced technologies like machine learning, user behavior analytics, and security automation to protect healthcare systems. These tools safeguard digital infrastructure, electronic protected health information (ePHI), and medical devices from cyber threats with minimal human intervention [5][7][4]. Unlike traditional methods that rely on predefined rules, AI learns continuously, identifying normal system behavior and flagging any anomalies.
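
To make "learning normal behavior and flagging anomalies" concrete, here is a minimal sketch that uses a simple per-user z-score instead of a trained model. The user names, access counts, and the threshold of 3 standard deviations are all illustrative assumptions, not values from any real product or dataset.

```python
from statistics import mean, stdev

def flag_anomalies(baseline, today, threshold=3.0):
    """Return {user: z_score} for users whose activity today is far above
    their own historical baseline (hypothetical helper for illustration)."""
    flagged = {}
    for user, count in today.items():
        history = baseline.get(user, [])
        if len(history) < 2:
            # Not enough history to judge; a real system would handle
            # new users separately rather than skipping them.
            continue
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            z = 0.0 if count == mu else float("inf")
        else:
            z = (count - mu) / sigma
        if z > threshold:
            flagged[user] = z
    return flagged

# Illustrative data: daily record-access counts per user (made up).
baseline = {
    "nurse_a": [40, 42, 38, 41, 39],
    "clerk_b": [12, 15, 11, 14, 13],
}
today = {"nurse_a": 43, "clerk_b": 95}

anomalies = flag_anomalies(baseline, today)
print(sorted(anomalies))  # only clerk_b exceeds the threshold
```

Production tools typically model many signals at once (time of day, location, record type), but the principle is the same: compare current behavior with a learned baseline and surface the outliers for review.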

This approach is especially crucial in healthcare, where AI plays a pivotal role in Industry 4.0. Beyond cybersecurity, it’s reshaping clinical workflows, patient engagement processes, and how data is managed [6]. AI’s ability to process massive amounts of event data across intricate IT systems allows it to operate at speeds and scales far beyond human capabilities.

This evolving technology is becoming a cornerstone of healthcare IT systems.

Where AI Is Used in Healthcare IT

AI tools are integrated across healthcare IT systems to protect sensitive data and infrastructure. For example, they secure Electronic Health Records (EHRs) by monitoring access patterns and detecting unauthorized attempts to view or alter patient information [3]. In medical device security, AI identifies potential vulnerabilities in connected devices that hackers might exploit. Cloud platforms hosting patient data also rely on AI to analyze network traffic and detect breaches in real time [2].

AI is also transforming third-party risk management. Healthcare organizations often work with numerous vendors, such as billing services and telehealth providers. AI automates the evaluation of these partners’ security practices, flagging high-risk vendors and monitoring new vulnerabilities. It also simplifies HIPAA compliance by offering automated assessments and real-time monitoring [2].
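
As a rough illustration of automated vendor flagging, the sketch below assigns a risk tier from questionnaire answers. The control names, the cutoffs, and the rule that vendors handling PHI are held to a stricter threshold are hypothetical choices made for this example; they do not describe any specific platform's scoring method.

```python
def vendor_risk_tier(controls, handles_phi):
    """Assign a coarse risk tier from questionnaire answers.

    controls: dict mapping a control name to True (in place) or False.
    handles_phi: whether the vendor touches protected health information.
    All thresholds here are illustrative assumptions.
    """
    if not controls:
        return "high"  # no evidence submitted, so assume the worst
    missing = sum(1 for in_place in controls.values() if not in_place)
    ratio = missing / len(controls)
    high_cutoff = 0.25 if handles_phi else 0.5  # stricter when PHI is involved
    if ratio >= high_cutoff:
        return "high"
    return "medium" if missing else "low"

billing_vendor = {
    "mfa": True,
    "encryption_at_rest": False,
    "incident_response_plan": True,
    "annual_pen_test": True,
}
print(vendor_risk_tier(billing_vendor, handles_phi=True))   # high
print(vendor_risk_tier(billing_vendor, handles_phi=False))  # medium
```

A tier like this is only a starting point for prioritization; the final assessment of a vendor still belongs to a human reviewer.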

In addition to guarding against external threats, AI keeps an eye on internal systems, tracking data flows to prevent unauthorized sharing. This is particularly important as healthcare organizations expand their digital footprint with initiatives like hospital-at-home programs, mobile health apps, and remote patient monitoring.

U.S. Regulations and Compliance Requirements

As AI becomes central to healthcare cybersecurity, U.S. regulations are evolving to ensure these technologies meet strict compliance standards. On December 4, 2025, the U.S. Department of Health and Human Services (HHS) unveiled its AI strategy, focusing on governance, risk management, ethics, transparency, security, civil rights, and privacy laws for AI use in healthcare [8]. By April 3, 2026, HHS requires all AI systems to adhere to minimum risk management practices, including bias mitigation, outcome monitoring, security, and human oversight. AI tools that fail to meet these standards could face discontinuation [8].

Shortly after, on December 12, 2025, an Executive Order established a unified federal AI framework to prevent discrepancies between state regulations [9][10]. Meanwhile, the Health Sector Coordinating Council (HSCC) is preparing 2026 guidance to address AI cybersecurity risks in alignment with HIPAA, FDA rules, and the NIST AI Risk Management Framework [11]. For organizations using AI-enabled medical devices, compliance involves following "Security-by-Design" principles, as outlined by the FDA, NIST, and CISA recommendations [11]. Additionally, in 2025, the Office for Civil Rights (OCR) proposed updates to HIPAA regulations, raising cybersecurity standards and impacting how AI tools are integrated and monitored in healthcare systems [12].

How AI Works for Both Attackers and Defenders

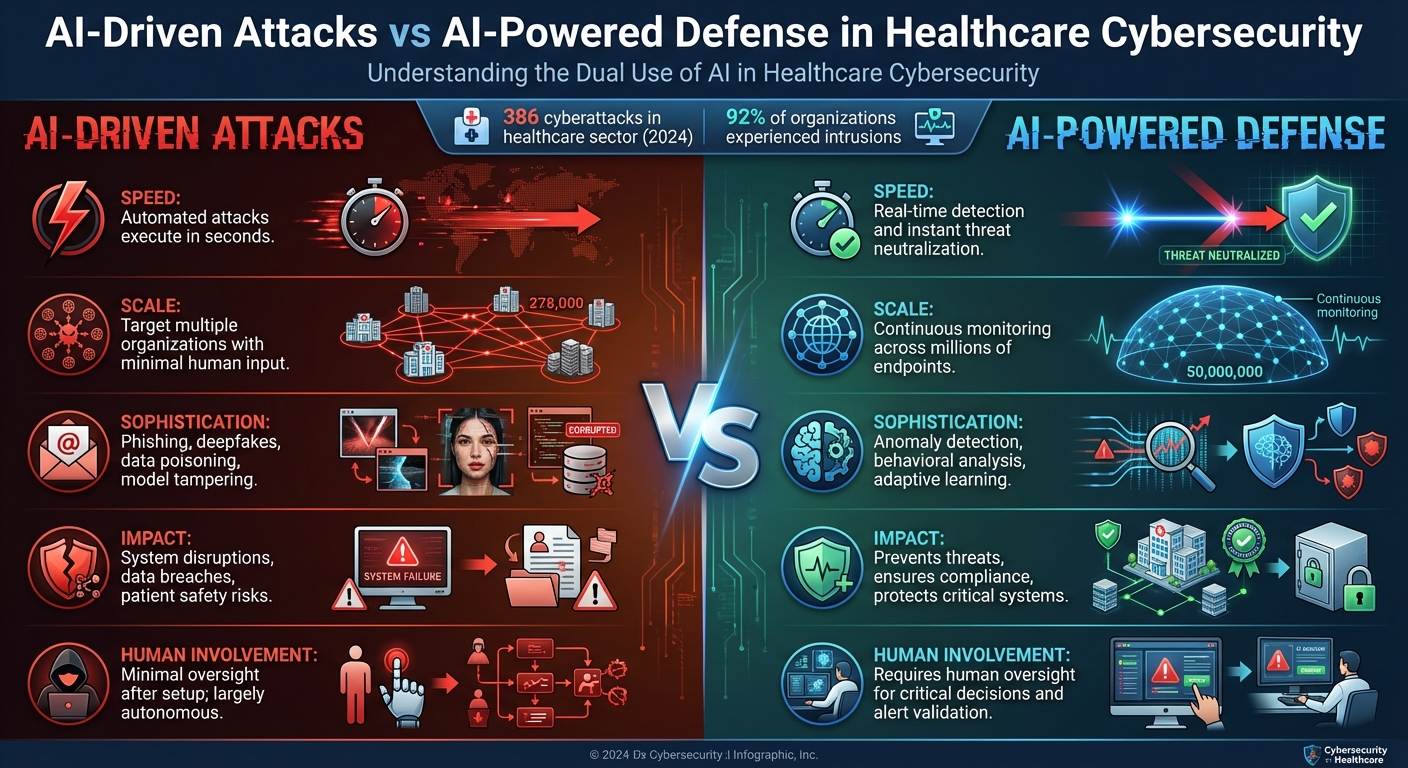

AI-Driven Cyberattacks vs AI-Powered Defense in Healthcare

AI is a game-changer in healthcare cybersecurity, empowering both defenders and attackers. While defenders harness it to safeguard systems and protect sensitive patient data, attackers exploit it to launch increasingly sophisticated threats. Understanding this dual use is crucial for healthcare organizations navigating today’s complex threat landscape.

AI-Enabled Cyber Attacks

Cybercriminals are leveraging AI to carry out attacks with alarming speed and precision. Generative AI allows them to craft phishing emails that are highly convincing and capable of bypassing traditional security filters. This makes it easier to target multiple healthcare organizations at once [6][14].

But the threats don’t stop there. Emerging tactics like data poisoning and algorithm manipulation pose additional risks. In these scenarios, attackers tamper with the training data or models used by AI-powered medical devices and diagnostic tools, potentially leading to harmful outcomes [11]. Deepfakes and AI-driven social engineering campaigns have also become significant concerns for cybersecurity professionals [13].

The numbers paint a stark picture. In 2024 alone, the healthcare sector reported 386 cyberattacks, with 92% of organizations experiencing some form of intrusion [14]. International hacking collaborations have further increased the complexity of these attacks [14]. One particularly tragic case involved a ransomware attack on a hospital, causing system failures that delayed emergency care and resulted in a fatality [6].

As attackers continue to innovate with AI, defenders are stepping up, using the same technology to counter these threats.

AI for Defense and Protection

On the defensive side, healthcare organizations are deploying AI to keep pace with the speed and scale of these advanced attacks. AI-powered threat detection systems analyze network traffic in real time, spotting anomalies that could signal a breach [15]. These systems work around the clock, monitoring millions of events across intricate IT infrastructures - tasks that would be impossible for human analysts alone.

Automated response tools take things a step further. They can isolate compromised devices or block unauthorized access attempts instantly, helping prevent threats from escalating [18]. This swift action is critical for ensuring patient safety and maintaining the uninterrupted operation of healthcare systems [15].

Modern AI defenses are also evolving. They don’t just detect patterns; they learn from every incident, adapting to new attack methods and improving accuracy over time. Despite this automation, human oversight remains irreplaceable. Security teams validate alerts, make key decisions, and ensure compliance with regulations, all while addressing the unique challenges of protecting patient data.
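
One way to picture the split between automated response and human oversight is a small triage rule. The sketch below is an assumption-laden example: the `Alert` fields, the action names, and the 0.9 confidence threshold are hypothetical, and a real policy would be set by the security and clinical teams.

```python
from dataclasses import dataclass

@dataclass
class Alert:
    device_id: str
    confidence: float       # model's confidence that this is a real threat, 0..1
    patient_critical: bool  # True for devices tied to direct patient care

def decide_action(alert, auto_threshold=0.9):
    """Pick a response path for an alert (illustrative policy only)."""
    if alert.patient_critical:
        # Never isolate a care-critical device without a person deciding.
        return "escalate_to_human"
    if alert.confidence >= auto_threshold:
        return "auto_isolate"
    return "queue_for_review"

print(decide_action(Alert("workstation-17", 0.97, False)))  # auto_isolate
print(decide_action(Alert("infusion-pump-3", 0.99, True)))  # escalate_to_human
print(decide_action(Alert("workstation-22", 0.55, False)))  # queue_for_review
```

The design choice worth noting is that patient-critical devices bypass automation entirely, regardless of model confidence, so speed never overrides patient safety.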

This contrast between how attackers and defenders use AI is summarized below.

Attack vs. Defense: A Comparison

| Factor | AI-Driven Attacks | AI-Powered Defense |
|---|---|---|
| Speed | Automated attacks execute in seconds [16][17] | Real-time detection and responses neutralize threats instantly [16][18] |
| Scale | Attacks can target multiple organizations with little human input [14] | Continuous monitoring across millions of endpoints [15] |
| Sophistication | Includes phishing, deepfakes, data poisoning, and model tampering [6][11][13] | Uses anomaly detection, behavioral analysis, and adaptive learning [15][18] |
| Impact | Causes system disruptions, data breaches, and patient safety risks [6][14] | Prevents threats, ensures compliance, and protects critical systems [15] |
| Human Involvement | Requires minimal oversight after setup; attacks are largely autonomous [19] | Relies on human oversight for critical decisions and alert validation [18] |

How Security Teams Are Changing

AI is reshaping the way healthcare security teams operate, shifting their focus from repetitive manual tasks to more strategic responsibilities. Instead of spending countless hours combing through logs or handling routine alerts, these teams are now concentrating on overseeing AI outputs, analyzing risks, and coordinating incident responses. This shift calls for a deeper understanding of how AI systems make decisions, the ability to validate their findings, and the readiness to step in when human judgment is essential.

New Job Roles and Required Skills

This transformation highlights a key challenge: the need for specialized skills outweighs the need for increased headcount, especially as AI adoption continues to grow [20].

To meet this demand, teams need to develop expertise in areas like machine learning fundamentals, AI risk evaluation, and regulatory compliance. These skills include understanding how machine learning models behave, interpreting AI-generated risk analyses, and ensuring adherence to healthcare regulations such as HIPAA. Additionally, expertise in managing AI governance frameworks and assessing the reliability of automated recommendations has become increasingly important.

AI doesn’t replace human expertise - it enhances it. The evolving responsibilities emphasize the importance of maintaining strong human oversight.

Keeping Humans in Control

As AI systems take on more tasks, human oversight becomes even more critical. While automated systems are excellent at identifying potential threats, only human judgment can provide the necessary context and ethical considerations.

Humans are essential for validating AI-generated alerts to minimize false positives, making key decisions during critical security incidents, and ensuring that automated responses align with patient safety standards. They also handle complex scenarios where regulatory, legal, or organizational factors require careful interpretation. In essence, AI delivers speed and scalability, but human insight ensures accountability and safeguards sensitive health data and patient care.

How Censinet Uses AI with Human Oversight

Censinet provides a practical example of balancing automation with human oversight. Their platform, Censinet AI™, simplifies third-party risk assessments by automating tasks like security questionnaires, evidence summarization, and risk report generation. However, human oversight remains central to the process.

Risk teams maintain control through configurable rules and review mechanisms, ensuring that automation supports, rather than replaces, critical decisions. Censinet AI™ also promotes collaboration by facilitating advanced routing and task orchestration across Governance, Risk, and Compliance (GRC) teams. Acting as a centralized control point for AI governance and risk management, the platform routes key findings and tasks to the appropriate stakeholders, including members of the AI governance committee, for review and approval.

With real-time data consolidated in an intuitive AI risk dashboard, Censinet RiskOps serves as a hub for managing AI-related policies, risks, and tasks. This setup ensures that the right teams address the right issues at the right time, maintaining the balance between automation and human expertise.

Adding AI to Your Cyber Risk Management Program

As cybersecurity continues to evolve, organizations must now incorporate AI into their risk management strategies to stay ahead of potential threats.

To effectively integrate AI into your cybersecurity framework, it’s crucial to align these systems with existing regulations and risk management standards. For example, healthcare organizations should treat AI systems with the same level of scrutiny as any process involving Protected Health Information (PHI) [21]. In December 2025, the U.S. Department of Health and Human Services released its AI Strategy, emphasizing the importance of governance, thorough risk management practices, and respect for Americans' health data to build public trust [22].

Matching AI with Risk Management Standards

AI tools should be designed to align with established frameworks like the NIST Cybersecurity Framework, while also meeting industry-specific regulatory requirements. In the healthcare sector, the Health Sector Coordinating Council (HSCC) is working on 2026 guidance for managing AI cybersecurity risks. This guidance focuses on governance, secure-by-design principles, and third-party risk management, all while ensuring compliance with HIPAA, FDA regulations, and the NIST AI Risk Management Framework [11]. Additionally, Clinical Risk Management frameworks can provide valuable insights for securely implementing AI technologies while maintaining regulatory compliance and strong controls [6].

To effectively integrate AI within these frameworks, organizations should map AI capabilities to the NIST Cybersecurity Framework functions: Identify, Protect, Detect, Respond, and Recover (version 2.0 of the framework adds a sixth function, Govern). Key measures include input filtering, data anonymization, and verifying the integrity of training data. These steps lay the groundwork for a more efficient evaluation of third-party risks.
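
Of the measures listed above, verifying training-data integrity is the easiest to sketch. The example below records a SHA-256 digest for each file when a dataset is approved and later reports any file that has changed or appeared since. The function names and the sample file are hypothetical, and the manifest itself would need to be stored somewhere an attacker cannot modify.

```python
import hashlib
import tempfile
from pathlib import Path

def sha256_of(path):
    """Hash a file in chunks so large datasets do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()

def build_manifest(data_dir):
    """Map each file name in data_dir to its SHA-256 digest."""
    return {p.name: sha256_of(p) for p in sorted(data_dir.iterdir()) if p.is_file()}

def find_tampered(data_dir, manifest):
    """Return names of files that changed, went missing, or were added."""
    current = build_manifest(data_dir)
    changed = [name for name, digest in manifest.items() if current.get(name) != digest]
    added = [name for name in current if name not in manifest]
    return sorted(changed + added)

# Demo with a throwaway directory and made-up training data.
with tempfile.TemporaryDirectory() as tmp:
    data_dir = Path(tmp)
    (data_dir / "scans.csv").write_text("id,label\n1,benign\n")
    manifest = build_manifest(data_dir)           # taken at approval time
    clean_result = find_tampered(data_dir, manifest)
    (data_dir / "scans.csv").write_text("id,label\n1,malignant\n")  # simulated poisoning
    tampered_result = find_tampered(data_dir, manifest)

print(clean_result)     # []
print(tampered_result)  # ['scans.csv']
```

A hash check only detects changes made after the manifest was created; it cannot tell whether the data was trustworthy in the first place, which is why provenance review remains a human task.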

Using AI for Third-Party Risk Management

AI can significantly streamline vendor due diligence and monitoring processes. Platforms like Censinet RiskOps demonstrate this by automating tasks such as security questionnaires, summarizing evidence, and generating risk reports through tools like Censinet AI™. For example, vendors can complete security questionnaires in seconds, while the platform automatically captures critical details about product integrations and fourth-party risk exposures. This level of automation allows healthcare organizations to reduce risk faster without sacrificing vendor oversight.

Setting Up AI Governance and Oversight

Before deploying AI in cybersecurity operations, organizations need to establish strong governance structures. Start by clearly defining roles, responsibilities, and oversight mechanisms for every stage of the AI lifecycle [11]. Begin with an inventory of all AI systems, documenting their purposes, data sources, and associated risks. An AI governance committee should oversee system performance, validate outputs, and ensure alignment with patient safety standards. This committee should include representatives from security, compliance, clinical, and IT leadership to address technical risks while keeping patient care at the forefront.
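
The inventory step can start as a very small data structure. The sketch below shows one possible shape for an inventory record and a check a governance committee might run to find undocumented systems. The field names and the sample entries are assumptions made for illustration only.

```python
from dataclasses import dataclass

@dataclass
class AISystemRecord:
    name: str
    purpose: str
    data_sources: list
    risks: list
    human_reviewer: str = ""  # empty string means no oversight owner assigned

def governance_gaps(inventory):
    """Return {system name: [problems]} for records missing key documentation."""
    gaps = {}
    for record in inventory:
        problems = []
        if not record.data_sources:
            problems.append("no data sources documented")
        if not record.risks:
            problems.append("no risks documented")
        if not record.human_reviewer:
            problems.append("no human reviewer assigned")
        if problems:
            gaps[record.name] = problems
    return gaps

inventory = [
    AISystemRecord(
        name="network-anomaly-detector",
        purpose="Flag unusual traffic for analyst review",
        data_sources=["firewall logs", "netflow"],
        risks=["false positives", "training data drift"],
        human_reviewer="security operations lead",
    ),
    AISystemRecord(
        name="vendor-questionnaire-summarizer",
        purpose="Summarize vendor security evidence",
        data_sources=["vendor submissions"],
        risks=[],
    ),
]

print(governance_gaps(inventory))
```

Running a check like this on a schedule gives the committee a simple, reviewable list of systems that lack documented risks or a named human owner.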

Conclusion: Building Security Teams with AI

The integration of AI into cybersecurity isn’t about replacing human teams - it’s about reshaping how they operate. With 92% of healthcare organizations reporting cyber intrusions in 2024 and 52% identifying internal weaknesses as a major concern [14], it’s evident that the status quo is no longer sufficient. As Tapan Mehta, Healthcare and Pharma Life Sciences Executive, aptly states:

"It's no longer a question of if a healthcare organization will be targeted, it's a question of when they will be targeted" [1].

Evaluate Your Current Capabilities

The first step in strengthening your cybersecurity defenses is understanding where your vulnerabilities lie. Start by examining outdated systems, legacy software, and unmanaged IoT devices that might expose your organization to threats. Conduct thorough vulnerability assessments and penetration tests to simulate attacks and uncover potential weak points. Additionally, review your data governance policies, access control mechanisms, and visibility across both on-premises and cloud networks. Don’t overlook cybersecurity awareness training - employee errors remain a significant risk factor. By pinpointing these gaps, you can identify where AI tools can make the biggest impact.

Start with High-Value AI Applications

When implementing AI, focus on use cases that deliver immediate, measurable improvements. For instance, automated risk assessments can bring together fragmented risk data and use predictive analytics to identify threats before they escalate [23]. AI-driven anomaly detection can flag unusual patterns in network activity or user behavior that might signal a breach. Similarly, automated tools can streamline vendor assessments and consolidate risk data efficiently. Prioritize applications that address your most critical challenges, whether it’s ensuring regulatory compliance, protecting patient data, or bolstering workforce security [23].

Why Human Judgment Still Matters

While AI offers significant advantages, it cannot replace the expertise and critical thinking of skilled professionals. AI systems excel at processing large volumes of data, but they lack the nuanced understanding and ethical considerations essential in healthcare cybersecurity. Additionally, some AI algorithms operate as "black boxes," making their decision-making processes difficult to interpret. AI systems are also susceptible to attacks of their own, such as data poisoning and model manipulation, which could lead to failures or even patient harm. As Tapan Mehta highlights:

"Healthcare organizations don't necessarily have the bench for this level of talent. Where cybersecurity is a very specific skill set that you need, if you're trying to layer that with AI, that pool gets even narrower" [1].

The most effective strategy combines the speed and scalability of AI with the expertise and judgment of human professionals. By working together, automation can enhance decision-making without replacing the critical role of human oversight.

FAQs

How does AI improve the effectiveness of human cybersecurity teams in healthcare?

AI plays a key role in supporting cybersecurity teams in healthcare by providing real-time threat detection and automated network monitoring. It can spot unusual activities and potential risks much faster than traditional methods, helping organizations stay a step ahead of cyber threats.

By taking over repetitive tasks like routine alert triage and compliance checks, AI not only speeds up response times but also reduces the chances of human error. This frees up cybersecurity professionals to concentrate on tackling more complex and strategic challenges, boosting both the effectiveness and security of healthcare systems.

What risks do healthcare organizations face if cybercriminals use AI in attacks?

The increasing use of AI by cybercriminals poses serious challenges for healthcare organizations. One major threat is data poisoning, where attackers deliberately tamper with training data to manipulate AI systems. Another growing concern is the rise of advanced phishing and social engineering attacks, which, powered by AI, become more convincing and far harder to identify. On top of that, AI enables attackers to adapt quickly to security measures, helping them evade detection in real time.

Healthcare IoT devices are especially at risk. AI-driven attacks can exploit vulnerabilities in these devices, leading to data breaches, interruptions in operations, and even risks to patient safety. These challenges underscore the need for healthcare organizations to adopt strong AI-driven cybersecurity measures to stay ahead of these evolving threats.

What role do U.S. regulations play in shaping AI use in healthcare cybersecurity?

U.S. regulations are key to promoting the responsible use of AI in healthcare cybersecurity. These rules set compliance standards, emphasize transparency in how AI tools operate, and demand clear disclosures about AI's involvement in decision-making. States like California, Illinois, Nevada, and Texas enforce oversight through licensing boards to ensure accountability and prevent misuse.

At the federal level, efforts focus on building a unified framework to resolve potential conflicts between state laws. These initiatives also encourage strong cybersecurity practices and provide guidance for the ethical development of AI technologies. Together, these measures enable healthcare organizations to integrate AI solutions while staying compliant and protecting sensitive patient information.