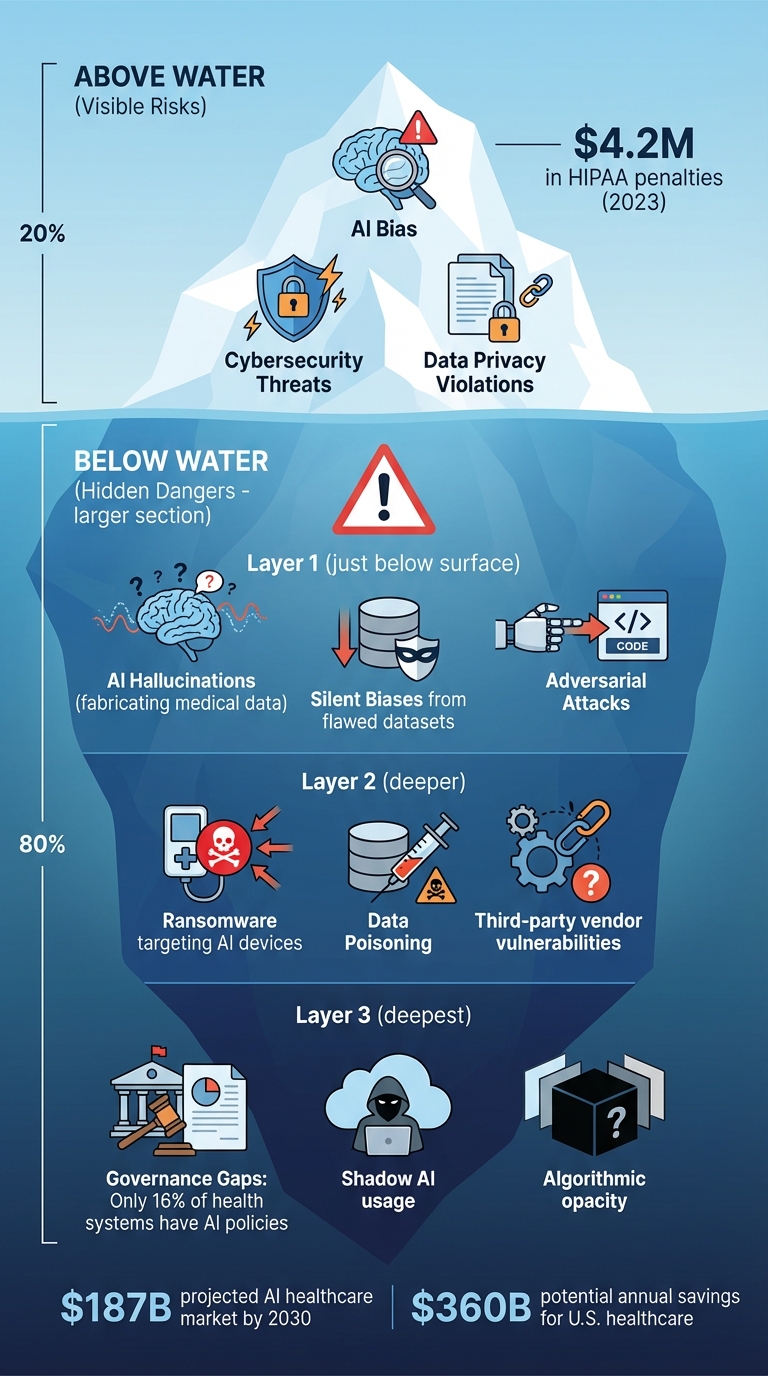

The AI Risk Iceberg: What Lies Beneath the Surface of Machine Learning Deployments

Post Summary

The idea that visible AI risks - like bias - are only a small part of deeper, hidden threats such as adversarial attacks, data poisoning, and AI hallucinations.

It leads to misdiagnoses, inequitable care, and systemic disparities.

Interconnected data pipelines, model manipulation, IoMT devices, and weak third‑party controls.

Factually incorrect outputs that appear credible, potentially harming clinical decisions.

Many lack HIPAA compliance, BAAs, or safe data‑handling practices, creating privacy and regulatory exposure.

It automates AI vendor assessments, centralizes evidence, identifies vulnerabilities, and enables strong AI governance.

Takeaway: Effective risk management requires structured governance, transparency, and continuous human oversight to ensure AI supports, rather than harms, patient care.

AI Risk Iceberg in Healthcare: Visible and Hidden Threats

Surface-Level Risks: Data Biases and Algorithmic Opacity

AI in healthcare comes with its fair share of challenges, and two of the most pressing ones are biased training data and opaque decision-making processes. These issues are often the first to catch the attention of healthcare providers and organizations, as they can have immediate and potentially harmful effects. Let’s dive into these risks, starting with data biases and then exploring the complications of opaque algorithms.

Data Biases in Healthcare AI

AI systems are only as good as the data they’re trained on. When that data is unbalanced, incomplete, or unrepresentative, biases creep in. These biases can stem from a variety of sources, such as unequal sample sizes, missing information, overlooked social factors, or flaws in labeling and design.

The consequences? AI models that perpetuate or even worsen existing inequalities in healthcare. For example, if a diagnostic tool is trained primarily on data from one demographic group, it might perform poorly when used on patients from underrepresented populations. This can result in misdiagnoses, inappropriate treatments, or even denial of critical care - essentially creating a healthcare system where some groups receive better care than others.

Algorithmic Opacity and Decision-Making

Even when the training data is representative, the complexity of many AI systems - especially deep learning models - introduces another problem: opacity. These "black box" models, often used in tasks like medical image analysis, are notorious for their lack of transparency. They make decisions in ways that even experts struggle to fully understand [9].

This lack of clarity creates serious challenges. When errors occur, it’s hard to pinpoint what went wrong or who is responsible. It also undermines patient trust and informed consent. If doctors can’t explain how an AI system arrived at a particular decision, how can patients feel confident about undergoing an AI-assisted procedure? Without transparency, both patients and healthcare providers are left in the dark, which can erode trust in these technologies [9] [11] [6] [10].

Hidden Vulnerabilities in AI Models

In healthcare, the risks of AI go beyond biased data and opaque algorithms. Two particularly concerning issues - adversarial attacks and AI hallucinations - pose serious challenges during clinical operations, where patient safety must always come first.

Adversarial Attacks on AI Models

Adversarial attacks exploit AI systems by introducing malicious inputs, leading to incorrect or harmful outputs. In the healthcare setting, these attacks can override safety protocols, tamper with the functionality of AI-controlled medical devices, or even expose sensitive patient data [12]. Common methods include prompt injection, jailbreaks, and data poisoning [12].

One alarming example involved a chat-based medical app for iOS, where researchers from Payatu demonstrated how a compromised vector database could turn an AI assistant into a liability. By uploading a seemingly harmless PDF embedded with a malicious injection string, an attacker manipulated the AI's responses. In one instance, when a patient asked about taking ibuprofen with blood pressure medication, the AI provided unauthorized advice. This advice led to dizziness and gastrointestinal bleeding due to a dangerous drug interaction. Such cases underline how maliciously injected content can directly endanger patients [12].

"A seemingly benign question can be manipulated to bypass safeguards or elicit unauthorised advice. Worse, LLMs can 'hallucinate,' fabricating confident but incorrect information, potentially leading to dangerous recommendations in a high-stakes domain like medicine." - Payatu

Adversarial attacks can also interfere with AI-controlled medical devices. By altering their operations, these attacks can create immediate, life-threatening scenarios [5].

AI Hallucinations in Decision-Making

AI hallucinations are another silent but serious threat. These occur when an AI system generates factually incorrect or misleading outputs [1]. Unlike adversarial attacks, which stem from external manipulation, hallucinations happen spontaneously as the AI "invents" information that appears credible. In clinical settings, such errors can mislead clinicians who rely too heavily on AI systems, resulting in misdiagnoses or improper treatments. The opaque, black-box nature of many AI models makes it difficult to identify and correct these errors before they impact patient care [4][6].

Cybersecurity Threats in AI Deployments

Healthcare organizations are navigating a rapidly evolving cybersecurity landscape, where AI systems have become high-value targets. These systems often integrate with critical components like medical devices, patient databases, and third-party platforms, making them attractive to attackers. Addressing these threats requires embedding cybersecurity into the broader risk management strategy for healthcare AI.

Data Poisoning and Model Manipulation

Data poisoning attacks involve tampering with the training data used by AI systems. By injecting malicious inputs, attackers can distort the AI's learning process, leading to dangerous outcomes. In healthcare, this could mean incorrect diagnoses, flawed treatment recommendations, or even systemic failures. The consequences of such manipulation can be devastating, both for individual patients and the healthcare system as a whole.

Ransomware Targeting AI-Controlled Medical Devices

AI-controlled medical devices are increasingly targeted by ransomware attacks, exploiting their vulnerabilities. Many older devices lack adequate security measures and often rely on outdated software, making them easy prey for attackers. When ransomware strikes, it can disrupt essential medical services by locking providers out of critical equipment or altering device functionality. The fallout can be severe - delayed procedures, compromised patient safety, and a reliance on manual processes while systems are restored. These scenarios highlight the pressing need for strengthened security protocols across all connected devices and systems.

Third-Party Vendor Risks in AI Integrations

The growing reliance on external vendors for AI solutions, especially cloud-based platforms, introduces additional vulnerabilities. These third-party integrations can open the door to data breaches and unauthorized access to sensitive patient information [6]. Moreover, the lack of transparency in vendor AI models and incomplete training data can lead to inaccurate predictions and flawed treatment recommendations. This is particularly concerning for underrepresented patient groups, who may face disproportionately negative outcomes due to these inaccuracies [13]. Addressing these risks requires careful scrutiny of vendor practices and robust safeguards to protect patient data and ensure accurate AI performance.

Real-World AI Failures in Healthcare

Case Studies of AI Deployment Failures

AI in healthcare has faced serious challenges, with issues like bias, data breaches, system errors, and weak governance causing harm to patients and disrupting operations.

One major problem is algorithmic bias. When training datasets fail to include diverse populations, AI systems can unfairly deny care to certain groups. This has led to delays in treatment and, in some cases, life-threatening situations that could have been avoided with more thorough validation.

Another concern is data privacy breaches. Sensitive patient information has been exposed due to inadequate security measures, leaving healthcare organizations vulnerable to legal troubles and reputational damage. Interconnected AI systems, if not properly secured, become attractive targets for cyberattacks.

System malfunctions also pose risks by disrupting critical procedures and jeopardizing patient safety. Clinicians often struggle to identify and correct errors because AI systems lack transparency in their decision-making processes.

These examples highlight the pressing need for stronger oversight and governance to prevent such failures from recurring.

Lessons Learned From Failures

The failures mentioned reveal serious gaps in governance and oversight. Without proper controls, AI systems can produce inaccurate results, biased recommendations, and increased liabilities - ultimately harming patient care [7]. Traditional governance methods fall short for modern AI, which operates in ways that differ significantly from earlier medical software [7].

A growing issue is "shadow AI", where unauthorized AI tools are used within healthcare organizations. This creates functionality problems, legal risks, and increases the likelihood of data breaches [7][8].

To tackle these challenges, healthcare organizations need to set up dedicated AI governance committees. These committees should include experts from legal, compliance, IT, clinical operations, and risk management. Their role would be to oversee the entire AI lifecycle, rigorously testing tools for bias and accuracy while ensuring they meet regulatory standards [10][6].

Equally critical is maintaining continuous human oversight. Over-reliance on AI without human judgment has repeatedly resulted in patient harm. AI should support clinical decision-making, not replace it, ensuring that human expertise remains central to healthcare.

sbb-itb-535baee

Risk Assessment Frameworks for Healthcare AI

Once potential risks - both visible and hidden - are identified, the next step is applying a solid risk assessment framework. This step is essential for managing AI effectively in healthcare. Organizations need to tailor their risk assessments to match their specific objectives and tolerance for risk. By doing so, they can avoid safety issues and steer clear of compliance violations. These frameworks act as a guide for evaluating AI systems at every stage of their lifecycle.

Choosing the right framework is not a one-size-fits-all process. A framework designed for general technology might fall short when applied to healthcare AI. In this field, decisions made by algorithms and strict regulatory requirements directly affect patient outcomes [14][15]. Effective frameworks help translate theoretical risks into practical measures by addressing identified vulnerabilities.

Using the NIST AI Risk Management Framework

One option for healthcare organizations is the NIST AI Risk Management Framework. While its implementation can vary, the framework provides guidelines to establish clear governance. It focuses on identifying, validating, and mitigating risks associated with AI, offering a structured approach to managing these challenges.

ECRI Hazard Evaluation for AI Systems

Another option is the ECRI Hazard Evaluation, which is tailored to clinical environments. This method helps organizations identify hazards that may arise from integrating AI into healthcare workflows. By examining how AI errors or implementation issues could impact patient safety, organizations can proactively monitor systems and address risks as part of a broader strategy. This approach ensures that patient safety remains a top priority while leveraging AI advancements.

Mitigation Strategies with Censinet RiskOps™

Healthcare organizations need actionable tools to address risks effectively. With the global AI healthcare market expected to exceed $187 billion by 2030[1], the stakes are higher than ever. Recent HIPAA fines, totaling $4.2 million, underscore the financial risks tied to non-compliance[2].

Building on established frameworks, these strategies aim to deliver timely and effective risk management.

Streamlining AI Risk Assessments with Censinet AITM

Censinet AITM simplifies and speeds up the third-party risk assessment process. Vendors can instantly submit their security data, which the tool then analyzes and summarizes. It captures essential information, such as product integration details and potential fourth-party risks, and generates detailed risk reports. For healthcare organizations juggling relationships with dozens - or even hundreds - of AI vendors, this automation is a game-changer. It addresses one of the biggest challenges: the lengthy timelines of traditional assessments. By automating these processes, organizations can significantly reduce risk management delays while maintaining thorough evaluations.

Human-in-the-Loop Oversight for AI Governance

While automation is essential, human oversight remains irreplaceable. Censinet’s approach ensures that expert judgment stays central to the process. Through predefined rules and review steps, risk teams maintain control over automated systems, ensuring that automation complements rather than replaces human decision-making. This balance is particularly important as AI governance emerges as a top priority in healthcare[2]. Effective governance also requires integrating compliance programs tailored to AI’s unique challenges, including adherence to the Office of Inspector General's seven elements of an effective compliance program[6]. Human oversight ensures that risk assessments align with both regulatory demands and the practical realities of clinical environments.

Centralized AI Risk Dashboard for GRC Teams

A centralized AI risk dashboard acts as the command center for governance, risk, and compliance (GRC) teams. It provides a single platform for monitoring, auditing, and assessing AI systems in real time[6]. Key findings and tasks are routed to the appropriate stakeholders for review and action, streamlining the entire process. This "air traffic control" model ensures that issues are addressed promptly and by the right teams. Additionally, the dashboard supports the creation of an AI governance committee, offering a comprehensive view of clinical, administrative, and legal data[3]. This fosters accountability for AI-related decisions while enabling healthcare leaders to oversee policies, procurement, deployment, and ongoing management - all through one unified system.

These strategies bridge the gap between identifying risks and establishing strong AI governance in healthcare.

Conclusion: Managing AI Risks in Healthcare

The integration of AI in healthcare holds transformative potential, with projections suggesting it could save the U.S. healthcare system up to $360 billion annually[17]. However, while the benefits are substantial, the current lack of oversight is concerning - only 16% of health systems have systemwide governance policies in place to regulate AI usage[17]. This gap underscores the urgent need for structured risk management.

To harness AI's potential responsibly, healthcare organizations must establish strong governance frameworks. Expert committees should be formed, bringing together professionals from clinical, legal, compliance, and IT backgrounds. These groups would oversee AI tools, address biases, ensure alignment with regulations, and provide continuous monitoring. Importantly, these committees must have clear decision-making authority and escalation protocols for managing emerging risks[6][19][16].

Transparency is another critical factor. Patients have a right to know when AI influences their care, and clinicians need clear insights into how algorithms arrive at decisions. As one expert emphasized:

"Transparency is critical and failure to disclose such use could undermine trust in the healthcare system as a whole and lead to ethical and legal challenges for the user"

.

With regulatory guidelines still evolving[18], waiting for absolute clarity isn’t an option. Healthcare organizations must act now by implementing adaptable compliance programs. These programs should address changing regulations while prioritizing data quality, security, and patient safety. Proactive risk management across the AI lifecycle is not just a best practice - it’s essential for safeguarding trust and ensuring ethical AI implementation.

FAQs

What are AI hallucinations, and how can they affect healthcare decisions?

AI hallucinations happen when an artificial intelligence system produces false or misleading information but presents it as if it were correct. In healthcare, these errors can have severe implications, such as contributing to misdiagnoses, suggesting unsuitable treatments, or introducing mistakes in patient care.

Such errors don’t just put patient safety at risk - they can also diminish trust in AI tools and pose serious challenges for healthcare providers. To tackle this problem, it’s essential to focus on thorough testing, continuous monitoring, and establishing safeguards that ensure AI systems provide accurate and dependable outcomes.

What steps can healthcare organizations take to safeguard AI systems from adversarial threats?

Healthcare organizations can protect AI systems from potential threats by using a layered security strategy. This involves measures like input validation and sanitization to block harmful data, continuous monitoring to spot unusual activity, and frequent model validation to maintain system reliability. Adding safeguards to address issues such as hallucinations, prompt injection, and bias is equally important.

Other key steps include enforcing strict access controls, encrypting sensitive information, and performing regular security audits to prevent vulnerabilities. On top of that, creating a strong governance framework with real-time oversight and clear protocols for incident response can significantly reduce risks, ensuring AI systems are deployed safely and responsibly in healthcare environments.

Why is transparency in AI crucial for building patient trust?

Transparency in AI plays a key role in building patient trust by providing clear insights into how these tools influence healthcare decisions. When patients have a grasp of how these systems operate - what they can do and where they might fall short - they’re more likely to feel assured about the care they’re receiving.

Being upfront about potential biases, errors, and the processes behind AI-driven decisions isn’t just ethical; it also shows a commitment to regulatory standards. This kind of open communication helps ease concerns, builds confidence, and strengthens the connection between patients, healthcare providers, and AI technologies.

Related Blog Posts

- The New Risk Frontier: Navigating AI Uncertainty in an Automated World

- AI Cyber Risk: When Your Smart Defense Becomes the Attack Vector

- The Healthcare AI Paradox: Better Outcomes, New Risks

- The Governance Gap: Why Traditional Risk Management Fails with AI

{"@context":"https://schema.org","@type":"FAQPage","mainEntity":[{"@type":"Question","name":"What are AI hallucinations, and how can they affect healthcare decisions?","acceptedAnswer":{"@type":"Answer","text":"<p>AI hallucinations happen when an artificial intelligence system produces false or misleading information but presents it as if it were correct. In healthcare, these errors can have severe implications, such as contributing to <strong>misdiagnoses</strong>, suggesting unsuitable treatments, or introducing mistakes in patient care.</p> <p>Such errors don’t just put <strong>patient safety</strong> at risk - they can also diminish trust in AI tools and pose serious challenges for healthcare providers. To tackle this problem, it’s essential to focus on thorough testing, continuous monitoring, and establishing safeguards that ensure AI systems provide accurate and dependable outcomes.</p>"}},{"@type":"Question","name":"What steps can healthcare organizations take to safeguard AI systems from adversarial threats?","acceptedAnswer":{"@type":"Answer","text":"<p>Healthcare organizations can protect AI systems from potential threats by using a layered security strategy. This involves measures like <strong>input validation and sanitization</strong> to block harmful data, <strong>continuous monitoring</strong> to spot unusual activity, and frequent <strong>model validation</strong> to maintain system reliability. Adding safeguards to address issues such as hallucinations, prompt injection, and bias is equally important.</p> <p>Other key steps include enforcing strict access controls, encrypting sensitive information, and performing regular security audits to prevent vulnerabilities. On top of that, creating a strong governance framework with real-time oversight and clear protocols for incident response can significantly reduce risks, ensuring AI systems are deployed safely and responsibly in healthcare environments.</p>"}},{"@type":"Question","name":"Why is transparency in AI crucial for building patient trust?","acceptedAnswer":{"@type":"Answer","text":"<p>Transparency in AI plays a key role in building patient trust by providing clear insights into how these tools influence healthcare decisions. When patients have a grasp of how these systems operate - what they can do and where they might fall short - they’re more likely to feel assured about the care they’re receiving.</p> <p>Being upfront about potential biases, errors, and the processes behind AI-driven decisions isn’t just ethical; it also shows a commitment to regulatory standards. This kind of open communication helps ease concerns, builds confidence, and strengthens the connection between patients, healthcare providers, and AI technologies.</p>"}}]}

Key Points:

What visible risks sit at the top of the “AI risk iceberg” in healthcare?

- Biased training data leading to inequitable patient outcomes

- Opaque, black‑box algorithms that clinicians cannot validate

- Poor model explainability, undermining trust and informed consent

- Immediate safety risks, including misdiagnosis and inconsistent performance

What hidden AI vulnerabilities pose the greatest patient‑safety risk?

- Adversarial attacks that change model behavior

- Data poisoning that corrupts training sets

- Prompt‑injection exploits that bypass safeguards

- AI hallucinations, producing confident but incorrect outputs

- Manipulation of AI‑controlled medical devices

Why do AI‑driven cyber threats spread faster in healthcare?

- Interconnected EHRs, IoMT devices, APIs, and cloud platforms

- Weak supply‑chain controls across vendors and sub‑processors

- Legacy medical devices lacking modern security hardening

- High‑value PHI, making healthcare a top target

- Real‑time clinical dependencies, amplifying operational impact

How does algorithmic bias erode trust and clinical quality?

- Unequal performance across demographics

- Amplification of historic inequities in training data

- Unreliable predictions for underrepresented groups

- Reduced clinician confidence in AI‑assisted workflows

- Potential regulatory scrutiny for harmful bias

What privacy and compliance concerns do generative AI tools create?

- Lack of HIPAA‑compliant data handling

- No Business Associate Agreements (BAAs)

- Risk of PHI leakage into model training

- Unclear data‑retention practices

- High risk of unauthorized sharing or re‑identification

How does Censinet RiskOps™ strengthen AI governance and safety?

- Automates AI‑specific risk assessments across vendors

- Centralizes policies, evidence, and risk registers

- Routes issues to AI governance committees

- Tracks model vulnerabilities and fourth‑party risks

- Provides a real‑time AI risk dashboard for clinicians, compliance, and security teams