AI Risk Insurance: The Emerging Market for Algorithmic Protection

Post Summary

AI risk insurance is becoming essential as artificial intelligence tools, particularly in healthcare, introduce risks traditional insurance doesn't cover. These risks include algorithm failures, biased outputs, and cybersecurity breaches, which can lead to lawsuits, regulatory fines, and reputational harm. Standard policies like medical malpractice or cyber insurance often miss these exposures, leaving organizations vulnerable.

Healthcare providers face unique challenges, such as misdiagnoses from AI systems, data breaches involving patient information, and compliance with evolving regulations. AI risk insurance addresses these gaps by covering liabilities tied to algorithmic errors, security vulnerabilities, and compliance penalties.

Key takeaways:

- Cybersecurity risks: AI-specific threats such as data poisoning or compromised algorithms.

- Liability gaps: Misdiagnoses, biased decisions, and system failures.

- Compliance penalties: Regulatory fines due to AI governance issues.

Organizations can reduce risks by performing thorough AI assessments, maintaining human oversight, and collaborating with vendors. Automated risk management tools may also help lower insurance costs and improve coverage terms. As AI adoption grows, integrating AI risk insurance with broader risk management strategies is critical for safeguarding operations and patient outcomes.

AI Risks Covered by Insurance Policies

AI risk insurance policies are designed to shield healthcare organizations from three major threats: cybersecurity vulnerabilities, liability and operational failures, and reputational and compliance penalties. These risks often fall outside the scope of traditional insurance, leaving critical gaps in coverage. Let’s dive into each category to understand how tailored policies address these challenges.

Cybersecurity Vulnerabilities

AI systems introduce unique cybersecurity risks that go beyond typical phishing or ransomware attacks. For instance, attackers can manipulate training data, compromise algorithms, or exploit system weaknesses, putting both patient safety and sensitive data at risk. A breach involving Protected Health Information (PHI) could expose a vast number of patient records, given the volume of data AI tools process.

Moreover, traditional cyber insurance policies often don't account for the specific risks tied to AI systems. Insurers are now crafting policies that explicitly cover these exposures, such as data leakage from AI-driven processes. These tailored solutions aim to bridge the gap between standard cyber coverage and the evolving threats AI systems face.

Liability and Operational Failures

Errors in AI algorithms can lead to severe consequences in healthcare, such as biased diagnoses, incorrect treatment plans, or unexpected system breakdowns. These failures not only jeopardize patient care but also expose organizations to costly lawsuits - liabilities that standard malpractice insurance often doesn’t cover.

To address this, insurers are introducing specialized AI policies that focus on risks like algorithmic bias and model failures. Professional liability insurance is also being updated to include AI-related errors. However, determining accountability - whether it lies with the healthcare provider, the AI developer, or the data scientist - remains a complex challenge. These specialized policies help mitigate uncertainties by covering operational disruptions and legal claims tied to AI failures.

Reputational and Compliance Penalties

When AI governance falls short - through biased outcomes, mishandling of data, or opaque decision-making - it can erode public trust and invite regulatory scrutiny. Healthcare organizations, bound by strict standards for AI transparency and fairness, can face hefty fines, legal costs, and reputational damage.

To address these risks, insurers are now offering coverage that specifically targets reputational harm and compliance penalties. These policies can help organizations manage costs tied to regulatory fines, legal defense, remediation efforts, and reputation repair. As AI oversight frameworks continue to evolve, this type of coverage becomes increasingly critical for healthcare providers navigating the complexities of AI governance.

Types of AI Risk Insurance Policies

Three Types of AI Risk Insurance Coverage for Healthcare Organizations

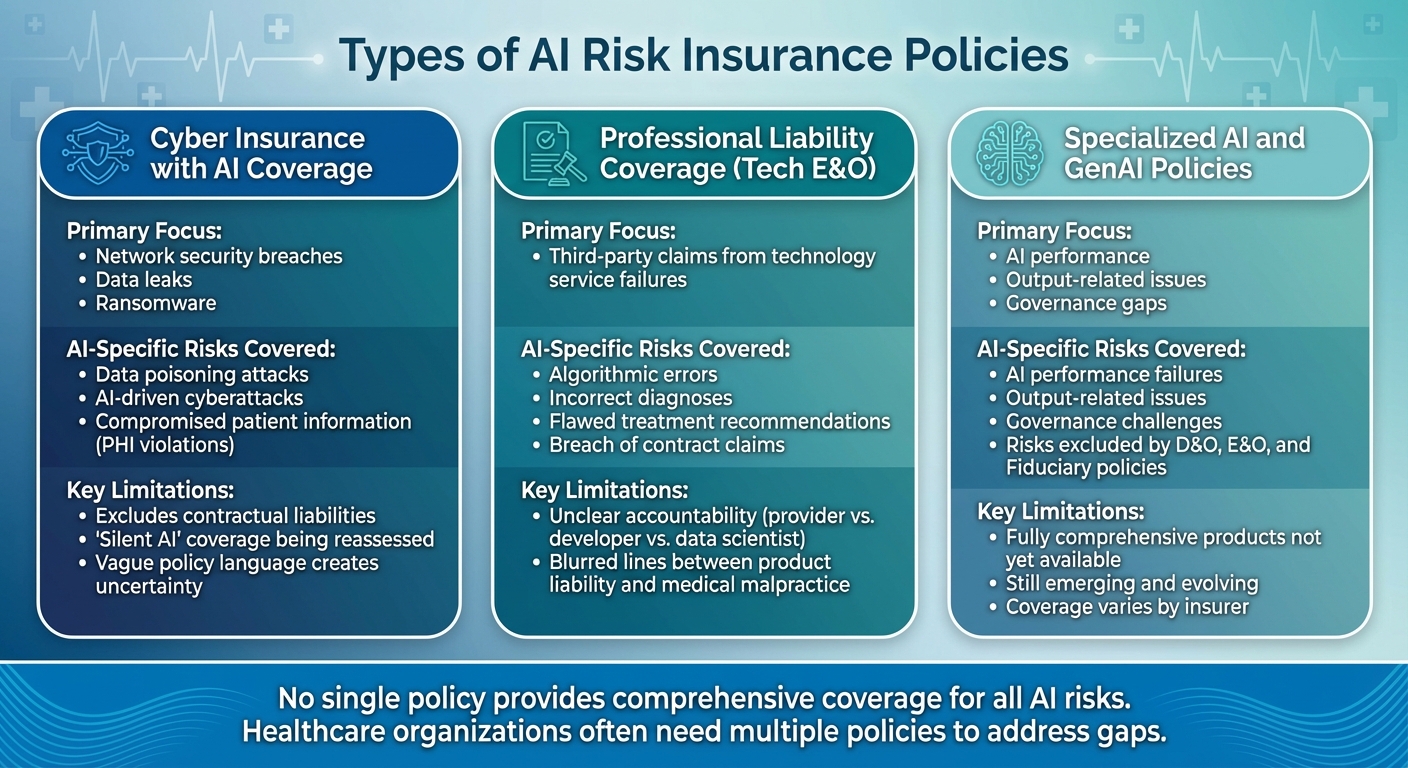

Healthcare organizations have three main options for AI risk insurance. Each policy type addresses specific aspects of AI-related risks, but none currently provides all-encompassing coverage for every potential exposure. These policies reflect the growing need for AI governance in healthcare.

Cyber Insurance with AI Coverage

Cyber insurance, which has traditionally covered network security breaches, data leaks, and ransomware attacks, is now adapting to include AI-specific risks. These policies are evolving to address threats like data poisoning and AI-driven cyberattacks, both of which could compromise sensitive patient information and violate privacy laws concerning Protected Health Information (PHI). However, most policies exclude contractual liabilities, and insurers are reassessing "silent AI" coverage - cases where a policy never mentions AI, yet its vague language could still end up covering an AI-related loss [2][3].

Professional Liability Coverage

Professional liability insurance, often referred to as Technology Errors and Omissions (Tech E&O), covers third-party claims arising from mistakes or failures in technology services. In healthcare, this might include damages caused by algorithmic errors, such as incorrect diagnoses or flawed treatment recommendations. These policies typically cover breach of contract claims and alleged wrongful acts, but AI-related claims raise difficult questions about accountability. The question of whether liability rests with the healthcare provider, the AI developer, or the data scientist blurs the line between product liability and medical malpractice [1][3].

Specialized AI and GenAI Policies

Specialized AI and GenAI policies are emerging to fill gaps left by traditional insurance. These policies focus on risks tied to AI performance, output, and governance - areas that standard cyber or professional liability insurance often exclude. For example, the rise of "Absolute" AI exclusions in Directors and Officers (D&O), Errors and Omissions (E&O), and Fiduciary Liability policies highlights the demand for coverage tailored to AI-related challenges [4]. While a fully comprehensive AI-specific insurance product has yet to hit the market, insurers are developing targeted solutions as more executives recognize the risks associated with AI [1][2].

Although existing insurance policies may provide partial coverage for AI-related risks, significant gaps and uncertainties remain. As healthcare increasingly relies on AI, the insurance industry is working to adapt and create policies that better address these emerging challenges [2][3].

Assessing and Reducing AI Risks Before Purchasing Insurance

Before healthcare organizations purchase AI risk insurance, they need to take a close look at their AI systems, potential points of failure, and the risks tied to their use. Since different applications of AI come with varying levels of risk, insurance needs will differ depending on how the technology is deployed [5]. With insurers expected to introduce AI exclusion clauses by early 2026, being proactive in managing risks isn't just smart - it's necessary [6]. These initial assessments lay the groundwork for more focused strategies down the line.

Cybersecurity Benchmarking and Risk Assessment

Understanding where AI systems might be vulnerable starts with a thorough enterprise risk assessment. Tools like Censinet RiskOps™ provide a centralized way to evaluate AI cybersecurity across platforms, devices, and vendors. This platform helps organizations spot weaknesses in their AI security setup before insurers do. By addressing these gaps early, organizations can prepare the documentation insurers require for better coverage terms. Plus, showing a strong commitment to risk assessment can help when negotiating policies.
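To make the idea of an enterprise AI risk assessment more concrete, here is a minimal, hypothetical risk register using the common likelihood × impact scoring approach. It is not a depiction of how Censinet RiskOps™ works; the system names, threats, 1-to-5 scales, and the review threshold of 12 are all illustrative assumptions.

```python
from dataclasses import dataclass


# Hypothetical risk register entry; the 1-5 scales are an assumption, not an industry standard.
@dataclass(frozen=True)
class AIRisk:
    system: str      # AI system or vendor tool being assessed
    threat: str      # e.g. "data poisoning", "PHI leakage"
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (minor) to 5 (severe patient or financial harm)

    @property
    def score(self) -> int:
        # Classic likelihood x impact scoring, giving a value from 1 to 25.
        return self.likelihood * self.impact


def prioritize(risks: list[AIRisk], threshold: int = 12) -> list[AIRisk]:
    """Return risks at or above the (assumed) review threshold, highest score first."""
    flagged = [r for r in risks if r.score >= threshold]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


register = [
    AIRisk("sepsis-alert-model", "data poisoning", likelihood=2, impact=5),
    AIRisk("radiology-triage", "model drift", likelihood=4, impact=4),
    AIRisk("scheduling-chatbot", "PHI leakage", likelihood=3, impact=5),
    AIRisk("billing-coder", "biased output", likelihood=2, impact=2),
]

for risk in prioritize(register):
    print(f"{risk.system}: {risk.threat} (score {risk.score})")
```

Running it prints the two risks at or above the threshold, `radiology-triage: model drift (score 16)` followed by `scheduling-chatbot: PHI leakage (score 15)`. A scored, prioritized list like this is one way to produce the kind of documentation insurers may ask for.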

Human-in-the-Loop AI Validation

Keeping human oversight in AI-driven processes is a smart way to reduce errors and biases that could lead to insurance claims. In healthcare, AI outputs should serve as recommendations - not final decisions. For critical tasks like diagnosing conditions or developing treatment plans, human review and approval remain essential. Regular audits can flag and correct algorithmic biases, ensuring that the system remains fair and reliable. This "human-in-the-loop" approach not only minimizes risks but also demonstrates to insurers that the organization practices responsible AI management.
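The human-in-the-loop principle can be expressed as a simple gate in software. The sketch below assumes a hypothetical model that returns a task category and a confidence score; the `CRITICAL_TASKS` set, the 0.9 confidence threshold, and all class and function names are illustrative, not part of any specific product.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical task categories that always require clinician sign-off.
CRITICAL_TASKS = {"diagnosis", "treatment_plan"}


@dataclass
class AIRecommendation:
    task: str
    suggestion: str
    model_confidence: float  # 0.0 to 1.0, as reported by a hypothetical model


@dataclass
class Decision:
    final: str
    reviewed_by: Optional[str]  # None means the output was used without human review


def decide(rec: AIRecommendation, reviewer: Optional[str] = None,
           reviewer_override: Optional[str] = None, min_confidence: float = 0.9) -> Decision:
    """Treat AI output as a recommendation; critical or low-confidence cases need a human."""
    needs_review = rec.task in CRITICAL_TASKS or rec.model_confidence < min_confidence
    if not needs_review:
        return Decision(final=rec.suggestion, reviewed_by=None)
    if reviewer is None:
        # Refuse to finalize a critical or uncertain output without a named reviewer.
        raise PermissionError(f"{rec.task!r} output requires human review before use")
    # The reviewer either accepts the suggestion or substitutes their own decision.
    return Decision(final=reviewer_override or rec.suggestion, reviewed_by=reviewer)


rec = AIRecommendation(task="diagnosis", suggestion="community-acquired pneumonia", model_confidence=0.97)
print(decide(rec, reviewer="dr_lee"))
```

Recording `reviewed_by` on every decision also leaves an audit trail, which supports the point above about demonstrating responsible AI management to insurers.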

Collaborative Risk Networks

AI vendors bring additional risks, and healthcare organizations must account for these third-party exposures. Censinet Connect™ makes it easier to work with vendors on joint risk assessments, uncovering potential vulnerabilities in third- and even fourth-party relationships. By consolidating risk data across all vendor interactions into one platform, organizations can provide insurers with a clear, comprehensive picture of their AI risk management efforts. This transparency can influence both the availability of coverage and its pricing.
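Third- and fourth-party exposure can be thought of as a dependency graph: the organization's direct AI vendors are third parties, and the providers those vendors rely on are fourth parties. The following sketch is a generic breadth-first search over a hypothetical vendor map, not a description of how Censinet Connect™ works. It assigns each vendor a tier and flags providers that several parties depend on.

```python
from collections import Counter, deque


def map_vendor_tiers(dependencies: dict[str, list[str]], org: str) -> dict[str, int]:
    """Breadth-first search: tier 1 = direct (third-party) vendors, tier 2 = fourth parties, and so on."""
    tiers: dict[str, int] = {}
    seen = {org}
    queue = deque([(org, 0)])
    while queue:
        node, depth = queue.popleft()
        for vendor in dependencies.get(node, []):
            if vendor not in seen:  # BFS reaches each vendor first by its shortest path
                seen.add(vendor)
                tiers[vendor] = depth + 1
                queue.append((vendor, depth + 1))
    return tiers


def shared_dependencies(dependencies: dict[str, list[str]]) -> dict[str, int]:
    """Providers that more than one party depends on directly (possible concentration risk)."""
    counts = Counter(v for vendors in dependencies.values() for v in vendors)
    return {vendor: n for vendor, n in counts.items() if n > 1}


# Hypothetical supply chain for illustration only.
supply_chain = {
    "hospital": ["imaging-ai-vendor", "ehr-copilot-vendor"],
    "imaging-ai-vendor": ["cloud-gpu-host"],
    "ehr-copilot-vendor": ["llm-api-provider", "cloud-gpu-host"],
    "llm-api-provider": ["data-labeling-firm"],
}

print(map_vendor_tiers(supply_chain, "hospital"))
print(shared_dependencies(supply_chain))
```

Here `cloud-gpu-host` sits at tier 2 and is relied on by both AI vendors, a concentration point that a vendor-by-vendor review could easily miss.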


How Healthcare Organizations Can Implement AI Risk Insurance

To effectively secure AI risk insurance, healthcare organizations need to embed it within a well-rounded risk management strategy. This means treating insurance as just one part of a broader system that includes technology platforms, automation tools, and continuous oversight. The idea is to create a setup where risk management and insurance coverage work together, making the organization stronger and potentially reducing costs.

Integrating AI Risk Insurance with Risk Management Platforms

Combining insurance coverage with a proactive risk management platform strengthens an organization's ability to handle AI-related risks. For instance, Censinet RiskOps™ merges AI risk data with broader cybersecurity metrics, offering a clear record of ongoing risk management efforts. This documentation becomes invaluable when it’s time to renew policies or negotiate terms, showing insurers that the organization is committed to managing risks continuously - not just conducting a one-time review.

Given the current uncertainty in AI risk insurance - where general liability and medical malpractice policies often lack clear AI-specific coverage and standalone AI insurance products are rare [1] - this kind of proactive approach becomes even more critical. Demonstrating due diligence through a comprehensive platform can help healthcare organizations navigate this uncertain landscape more effectively.

Leveraging Automation to Reduce Insurance Costs

Automation tools, like Censinet AITM™, can help lower risk exposure and, in turn, insurance premiums. These tools speed up vendor assessments and evidence validation, completing tasks like security questionnaires in seconds while automatically summarizing documentation. This efficiency saves time and improves data accuracy, which is crucial for underwriting and claims decisions.

When insurers see that an organization uses automated controls and continuous monitoring, they’re more likely to offer better terms. For example, a well-structured AI liability insurance market would naturally favor organizations using proven, safe AI systems [7]. By adopting automation, healthcare organizations can reduce their risk profile, save money, and ensure smoother compliance with insurance requirements.

Ensuring Continuous AI Risk Oversight

Securing AI risk insurance isn’t a one-and-done process - it requires ongoing compliance. Healthcare organizations should set up multidisciplinary review teams to evaluate AI tools before implementation, helping to prevent unexpected outcomes [7]. Platforms like Censinet RiskOps act as a central hub for managing AI-related policies, risks, and tasks. With real-time data displayed in an easy-to-understand dashboard, key findings can be routed to stakeholders - such as AI governance committees - for review and approval.
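Continuous oversight usually involves watching deployed models for performance degradation and routing anything unusual to the right reviewers. A minimal sketch, assuming clinicians later confirm whether each AI output was correct, is a rolling-accuracy monitor. The baseline, tolerance, window size, and routing label below are all hypothetical values, and this is not how any particular platform implements monitoring.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Finding:
    model: str
    metric: str
    value: float
    route_to: str  # stakeholder group expected to review the finding (hypothetical label)


class AccuracyMonitor:
    """Tracks rolling accuracy of clinician-confirmed AI outputs and flags degradation."""

    def __init__(self, model: str, baseline: float, tolerance: float = 0.05, window: int = 200):
        self.model = model
        self.baseline = baseline    # accuracy measured during pre-deployment validation (assumed known)
        self.tolerance = tolerance  # allowed drop below baseline before escalating (assumed)
        self.outcomes: deque = deque(maxlen=window)

    def record(self, was_correct: bool) -> Optional[Finding]:
        """Add one confirmed outcome; return a Finding if rolling accuracy falls too far."""
        self.outcomes.append(was_correct)
        if len(self.outcomes) < self.outcomes.maxlen:
            return None  # not enough confirmed outcomes yet to judge fairly
        accuracy = sum(self.outcomes) / len(self.outcomes)
        if accuracy < self.baseline - self.tolerance:
            return Finding(self.model, "rolling_accuracy", accuracy, "AI governance committee")
        return None


monitor = AccuracyMonitor("radiology-triage", baseline=0.92, tolerance=0.05, window=10)
for outcome in [True] * 8 + [False] * 2:
    finding = monitor.record(outcome)
print(finding)
```

Waiting for a full window before judging avoids raising alarms on a handful of early cases; the trade-off is that a real degradation is detected only after enough confirmed outcomes accumulate.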

Continuous oversight is essential because AI cannot replace human judgment [1][9]. It also helps organizations stay compliant with regulatory requirements and insurance conditions. As new state laws, such as California's AB 489 and Illinois's Wellness and Oversight for Psychological Resources Act (set to take effect in 2025), begin to shape the legal landscape [8], maintaining this level of oversight will be crucial for staying eligible for insurance coverage.

Conclusion: The Future of AI Risk Insurance in Healthcare

The adoption of AI in healthcare is advancing at a pace 2.2 times faster than the broader economy, with use of domain-specific tools growing roughly sevenfold over 2024 levels [10]. In 2025 alone, $1.4 billion is expected to flow into healthcare AI investments [10]. However, this rapid expansion highlights a critical issue - existing insurance policies fail to address the unique risks posed by AI, leaving significant gaps in protection.

The insurance industry is adapting to these challenges by shifting toward real-time risk management, which includes dynamic risk pricing and embedding insurance coverage directly into healthcare operations [12]. To meet regulatory demands for transparency and accountability, insurers are turning to explainable AI (XAI) models [11][13]. These changes respond to pressing concerns, such as the rise in UnitedHealthcare's denial rates from 10.9% in 2020 to 22.7% in 2022, which has been linked to AI-driven automation in prior authorization processes [14]. Ethical AI standards and robust governance frameworks are intended to keep such risks in check.
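For scale, the UnitedHealthcare denial-rate figures cited above imply a relative increase of roughly 108%, meaning the denial rate more than doubled over the period:

```latex
\frac{22.7\% - 10.9\%}{10.9\%} = \frac{11.8}{10.9} \approx 1.08
```

In absolute terms, that is a rise of 11.8 percentage points.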

Healthcare organizations must take a proactive approach to align insurance with ongoing risk management efforts. For example, integrating AI risk insurance with platforms like Censinet RiskOps™ can help document due diligence, potentially lowering premiums while ensuring continuous oversight. This strategy not only demonstrates accountability to insurers but also helps bridge coverage gaps, offering stronger protection.

As the healthcare landscape evolves, payers are expected to develop their own AI capabilities to match those of providers [10]. To stay ahead, organizations should invest in strategies like human-in-the-loop validation, automated risk assessments using tools such as Censinet AITM™, and ongoing monitoring. These efforts complement the risk transfer that insurance provides and strengthen the overall AI strategy, reinforcing the risk mitigation practices described earlier. The future of AI risk insurance lies in building resilience, safeguarding patient outcomes, and staying aligned with regulatory shifts.

FAQs

What are the specific risks in AI systems that traditional insurance policies often overlook?

Traditional insurance policies often fall short when it comes to addressing the specific risks associated with AI systems. For instance, algorithmic failures can lead to decisions that are incorrect or even harmful, while biases embedded within AI models might produce unfair or skewed outcomes. On top of that, issues like copyright infringement, discrimination, and defamation stemming from AI-generated content are generally outside the scope of traditional coverage.

AI systems are also vulnerable to cybersecurity threats, including model manipulation, data poisoning, and broader systemic attacks. These challenges underline the growing importance of specialized AI risk insurance to safeguard organizations against the financial and operational fallout from these emerging risks.

How can healthcare organizations incorporate AI risk insurance into their current risk management plans?

Healthcare organizations can strengthen their risk management strategies by incorporating AI risk insurance alongside robust governance frameworks. These frameworks should clearly define accountability and ensure compliance with regulations. Regular audits of AI models - focusing on bias, accuracy, and reliability - are essential for spotting vulnerabilities. Additionally, having human oversight for critical AI-driven decisions provides an extra safeguard against potential errors.
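As one concrete example of an audit focused on bias, an organization might compare a diagnostic model's sensitivity (true positive rate) across patient groups, sometimes called an equal-opportunity check. The sketch below is illustrative only: the group labels, the records, and the 0.05 maximum gap are hypothetical, and a single metric is not a complete fairness audit.

```python
from collections import defaultdict

# Each record: (patient_group, actually_positive, model_predicted_positive). Data is hypothetical.
Record = tuple[str, bool, bool]


def true_positive_rates(records: list[Record]) -> dict[str, float]:
    """Sensitivity per group: share of actual positives the model correctly flagged."""
    positives: dict[str, int] = defaultdict(int)
    detected: dict[str, int] = defaultdict(int)
    for group, actual, predicted in records:
        if actual:
            positives[group] += 1
            if predicted:
                detected[group] += 1
    return {group: detected[group] / positives[group] for group in positives}


def sensitivity_gap(records: list[Record], max_gap: float = 0.05) -> tuple[dict[str, float], float, bool]:
    """Return per-group rates, the largest gap between groups, and whether it exceeds max_gap."""
    rates = true_positive_rates(records)
    gap = max(rates.values()) - min(rates.values())
    return rates, gap, gap > max_gap


records: list[Record] = (
    [("A", True, True)] * 9 + [("A", True, False)] * 1
    + [("B", True, True)] * 7 + [("B", True, False)] * 3
    + [("A", False, False)] * 20 + [("B", False, True)] * 2  # negatives do not affect sensitivity
)

rates, gap, flagged = sensitivity_gap(records)
print(rates, round(gap, 2), flagged)
```

With this data the model catches 90% of true cases in group A but only 70% in group B, so the 0.2 gap exceeds the assumed tolerance and the model would be flagged for review.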

To stay ahead of risks like data breaches, algorithmic failures, and cybersecurity threats, organizations must regularly update their policies to align with the rapidly changing AI landscape. When combined with AI risk insurance, these measures can help healthcare providers better protect sensitive data, minimize disruptions, and avoid costly compliance issues.

How can organizations minimize AI-related risks before getting insurance?

To address potential risks associated with AI before purchasing insurance, organizations should focus on strengthening the security and reliability of their systems. Begin with regular risk assessments to pinpoint any weaknesses in your AI models or operational processes. This helps you stay ahead of potential issues.

It's also essential to put in place strong governance frameworks to oversee how AI is used and how decisions are made. Regularly auditing your AI models is another key step. This can help identify and correct biases, ensuring fair outcomes. Protecting data privacy is equally important, which can be achieved through robust security protocols. Lastly, make sure there’s human oversight at critical decision-making points to maintain accountability.

By aligning your safety measures with regulatory requirements, you can stay compliant and reduce the risk of legal or financial consequences. These actions not only help mitigate risks but also show a dedication to using AI responsibly.