AI Risk Management: Why Traditional Frameworks Are Failing in the Machine Learning Era

Post Summary

Traditional frameworks rely on static, periodic reviews that don’t work for AI systems that evolve continuously.

AI introduces new risks: black‑box algorithms, biased outputs, data poisoning, and AI‑driven cyberattacks.

Attackers use AI for model manipulation, ransomware targeting, and automated network scanning.

Legacy frameworks offer no coverage for algorithmic bias, model drift, AI hallucinations, or adversarial manipulation.

Organizations can modernize by adopting frameworks like NIST AI RMF, deploying continuous monitoring, and adding human oversight.

Censinet RiskOps™ automates AI risk assessments, centralizes evidence, and enables real‑time monitoring with human‑in‑the‑loop governance.

In healthcare, AI is transforming diagnostics, operations, and patient care, but it also introduces risks that outdated risk management frameworks cannot handle. These frameworks were built for static, predictable software - not dynamic, evolving AI. Key challenges include black-box algorithms, biased outputs, data poisoning, and AI-driven cyberattacks.

Healthcare organizations must shift to modern, flexible approaches, combining real-time monitoring, human oversight, and cross-functional teams. For example, tools like Censinet RiskOps™ automate risk assessments while keeping humans in control, addressing gaps left by older methods. Failure to update risk strategies could jeopardize patient safety, data security, and compliance.

Why Traditional Risk Management Frameworks Fall Short for AI

Traditional risk management frameworks were designed with predictable software systems in mind. They rely on periodic assessments - evaluating risks, documenting them, and revisiting later. But AI systems don’t play by those rules. They learn, adapt, and change in real time, making static assessments ineffective. This shift demands a fresh approach to handling risks.

Static Risk Assessments vs. Dynamic AI Threats

In traditional systems like electronic health records (EHRs) or billing software, periodic risk assessments work because these systems remain relatively stable over time. AI systems, on the other hand, are constantly evolving. For instance, an AI model predicting patient readmissions in January might behave differently by March due to changes in patient demographics or updated care protocols. These shifts can introduce new vulnerabilities that static frameworks fail to catch.
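One way to make this kind of shift visible is to compare the distribution of an input feature between two periods. The sketch below uses the Population Stability Index (PSI), a common drift statistic; the age bands, the sample counts, and the 0.2 alert level are illustrative assumptions, not figures from this article or from any particular framework.

```python
import math
from collections import Counter

def psi(baseline, current, bins):
    """Population Stability Index between two samples of a binned feature."""
    eps = 1e-6  # floor so an empty bin does not produce log(0)
    base_counts = Counter(baseline)
    cur_counts = Counter(current)
    total = 0.0
    for b in bins:
        p = max(base_counts[b] / len(baseline), eps)  # share in baseline period
        q = max(cur_counts[b] / len(current), eps)    # share in current period
        total += (q - p) * math.log(q / p)
    return total

# Hypothetical age-band mix of patients scored by a readmission model.
bins = ["18-44", "45-64", "65+"]
january = ["18-44"] * 50 + ["45-64"] * 30 + ["65+"] * 20
march = ["18-44"] * 30 + ["45-64"] * 30 + ["65+"] * 40

drift = psi(january, march, bins)
ALERT_LEVEL = 0.2  # assumed rule-of-thumb threshold; tune per model
needs_review = drift > ALERT_LEVEL
print(f"PSI = {drift:.3f}, needs review: {needs_review}")
```

A check like this only flags that the population has changed. Whether the model's predictions have actually degraded still has to be confirmed against observed outcomes.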

AI algorithms adjust their outputs based on new data, meaning their risks and performance can vary dramatically. Take a model trained on data from one hospital system - it might not perform as expected when deployed across a network with entirely different patient populations [1]. Traditional frameworks, built for systems with steady and predictable behavior, simply can’t keep up with this level of fluidity. This mismatch becomes even more problematic when you factor in the opacity of AI algorithms and their unique vulnerabilities.

Black-Box Algorithms and the Transparency Problem

Many AI models function as black boxes, producing results based on statistical patterns rather than clear, understandable rules [1]. This lack of transparency creates major hurdles for risk management. For example, when a diagnostic AI recommends a treatment, traditional frameworks expect clear documentation of the decision-making process. But with AI, even developers often struggle to explain how the model reached its conclusion. This can undermine patient trust and complicate informed consent.

Transparency issues make it incredibly difficult to explain how AI models work, what data they rely on, or why they make specific decisions [1]. In healthcare, this becomes a serious problem. How do you assure patients their data is being used responsibly when the AI’s logic is opaque and its methods evolve over time [1][2]? Traditional frameworks assume systems can be fully documented and understood - an assumption that simply doesn’t hold with black-box AI.

Missing Coverage for AI-Specific Vulnerabilities

Traditional frameworks also fall short in addressing risks unique to AI, such as algorithmic bias, data poisoning, and AI-generated errors. For instance, a malicious actor could manipulate training data to cause an AI model to misdiagnose certain conditions. Or an algorithm might unintentionally reinforce historical biases, leading to unequal healthcare outcomes for specific groups.

These vulnerabilities demand new risk categories that go beyond the scope of existing frameworks [1]. The interplay between humans and AI introduces failure modes that traditional error classifications don’t account for. When AI systems malfunction or produce a "hallucination" - generating content that seems plausible but is completely incorrect - there’s no guidance in traditional frameworks on how to address or mitigate these issues. These gaps highlight the urgent need for risk management strategies tailored to the complexities of AI-driven healthcare.
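A risk category for algorithmic bias needs something measurable behind it. As a minimal sketch, the example below compares the false negative rate (missed diagnoses) of a model across two patient groups. The group labels, the records, and the choice of metric are assumptions made for illustration; real audits typically look at several fairness metrics.

```python
from collections import defaultdict

def false_negative_rate_by_group(records):
    """records: iterable of (group, actual, predicted), where 1 = condition present."""
    positives = defaultdict(int)  # patients who truly have the condition
    misses = defaultdict(int)     # of those, how many the model missed
    for group, actual, predicted in records:
        if actual == 1:
            positives[group] += 1
            if predicted == 0:
                misses[group] += 1
    return {g: misses[g] / positives[g] for g in positives}

# Hypothetical validation results for two patient groups, A and B.
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 0), ("B", 1, 0), ("B", 1, 1), ("B", 1, 1), ("B", 0, 1),
]

rates = false_negative_rate_by_group(records)
gap = max(rates.values()) - min(rates.values())
print(rates, "gap:", gap)
```

A large gap between groups does not prove the model is unfair, but it is the kind of signal a reviewer would want surfaced before the tool reaches patients.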

New Cybersecurity Risks AI Brings to Healthcare

As AI continues to advance, so do the risks it introduces - many of which outpace the capabilities of traditional cybersecurity frameworks. AI doesn't just amplify existing threats; it opens the door to entirely new vulnerabilities that healthcare systems may not be prepared to handle. These risks directly threaten patient safety, data security, and the overall integrity of healthcare operations. Here’s a closer look at some specific attack methods that demand urgent attention.

Data Poisoning and Model Manipulation

One of the most alarming risks is data poisoning, where attackers deliberately inject harmful data into AI training datasets. This tampering can corrupt the AI models, leading to dangerous outcomes like consistent misdiagnoses - imagine tumors being overlooked or lab results being misclassified. These attacks target the training phase, exploiting weaknesses in how data is sourced and vetted. Without proper oversight or third-party validation, compromised training data can go unnoticed, leaving healthcare systems vulnerable to catastrophic errors.
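One basic control is to fingerprint training records at the point they are vetted, then re-check those fingerprints before every training run. The sketch below does this with SHA-256 hashes from Python's standard library; the record IDs and labels are made up for illustration. It catches changes made after vetting, but it cannot detect data that was already poisoned when it was first approved.

```python
import hashlib

def fingerprint(record: bytes) -> str:
    """SHA-256 digest of one training record."""
    return hashlib.sha256(record).hexdigest()

def build_manifest(records):
    """Map each record ID to its fingerprint at vetting time."""
    return {record_id: fingerprint(data) for record_id, data in records.items()}

def find_tampering(records, manifest):
    """Compare the current dataset against the vetted manifest."""
    changed = sorted(
        rid for rid, data in records.items()
        if rid in manifest and fingerprint(data) != manifest[rid]
    )
    added = sorted(set(records) - set(manifest))
    removed = sorted(set(manifest) - set(records))
    return {"changed": changed, "added": added, "removed": removed}

# Hypothetical vetted dataset and a later, tampered copy.
vetted = {"scan-001": b"label=malignant", "scan-002": b"label=benign"}
manifest = build_manifest(vetted)

current = dict(vetted)
current["scan-001"] = b"label=benign"  # label flipped after vetting
current["scan-003"] = b"label=benign"  # record injected after vetting

report = find_tampering(current, manifest)
print(report)
```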

AI-Powered Ransomware and Automated Attacks

Cybercriminals are now using AI to supercharge ransomware attacks, making them more destructive and harder to detect. AI enables attackers to pinpoint high-value targets, such as electronic health record (EHR) systems and critical imaging servers, and launch attacks at moments designed for maximum disruption. The weak points these attacks look for are common even without an attacker’s involvement: in 2025, a major U.S. health insurance provider accidentally exposed 4.7 million customer PHI records over three years due to a misconfigured cloud storage bucket [5].

These AI-enhanced attacks are particularly dangerous because they can dynamically alter their code to evade detection. Additionally, AI tools can rapidly scan vast hospital networks, identifying outdated devices, vulnerable APIs, and other weak points much faster than human hackers ever could [3]. This level of automation dramatically increases the potential scale and impact of cyberattacks in healthcare.

Algorithmic Bias and Clinical Errors

Beyond direct attacks, AI systems can also introduce significant risks through algorithmic bias and errors. These issues can lead to clinical harm, billing fraud, and even legal trouble. When healthcare providers rely too heavily on AI outputs without fully understanding their limitations, the consequences can be severe. In 2024, for instance, the Texas attorney general reached a settlement with a company that marketed a generative AI tool as "highly accurate" for creating patient documentation and treatment plans. The attorney general alleged that the company’s claims about the tool’s accuracy were false, misleading, and deceptive [2].

That same year, the U.S. Department of Justice subpoenaed several pharmaceutical and digital health companies to investigate whether their use of generative AI in electronic medical record systems had resulted in excessive or unnecessary care [2]. These cases highlight how flawed algorithms can quickly expose healthcare organizations to regulatory scrutiny and legal risks, underscoring the need for careful oversight and accountability in AI deployment.

The Gap Between Traditional Frameworks and AI Requirements

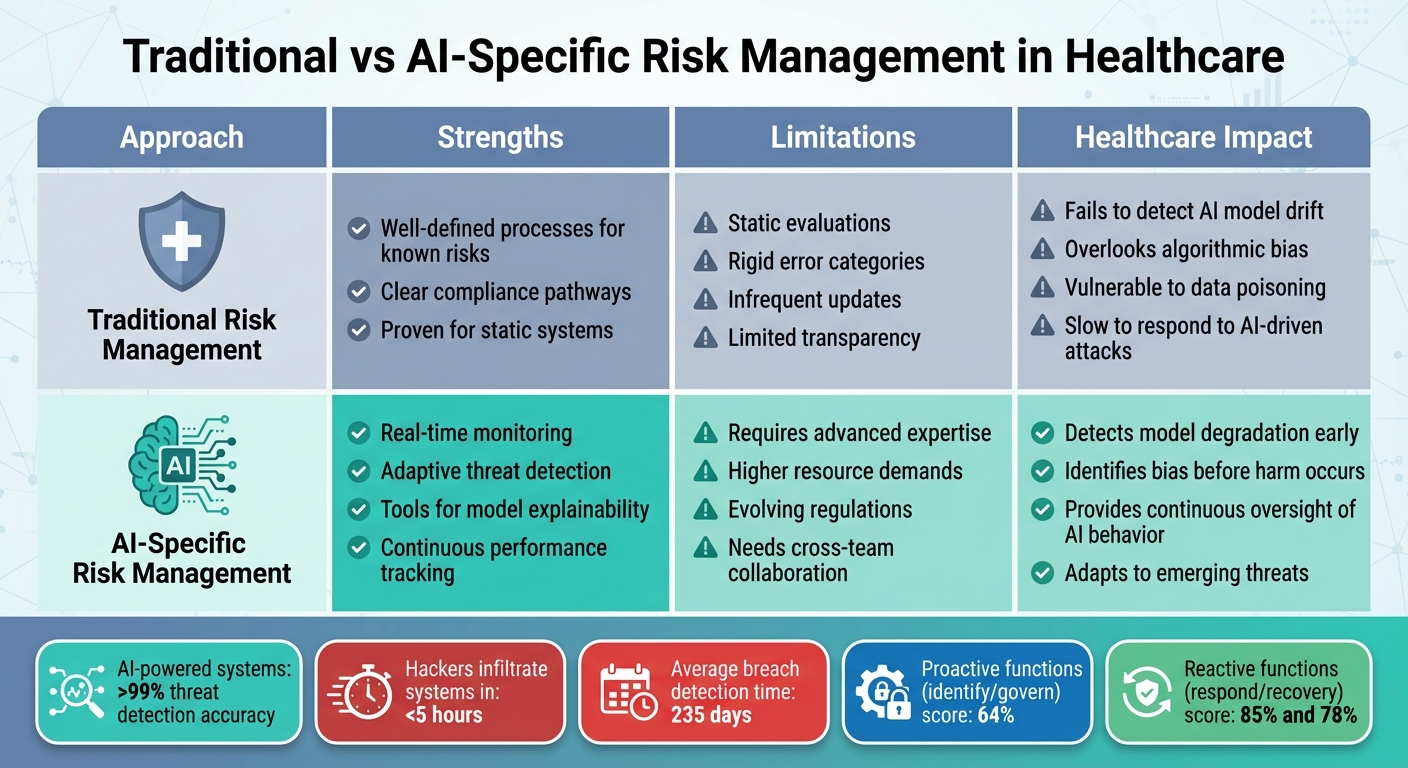

Traditional vs AI-Specific Risk Management in Healthcare: Key Differences

Traditional risk management systems were created for a world that moves at a much slower pace than today’s AI-driven landscape. These frameworks were designed for static environments - where risks could be identified, assessed, and monitored periodically. But AI operates in a completely different realm. It’s dynamic and adaptive, constantly evolving as it processes new data and adjusts its behavior. This creates a continuously shifting risk profile that traditional systems simply can’t keep up with.

The challenges are clear. Legacy frameworks struggle to address failure modes that are unique to AI. For instance, algorithmic biases can shift over time, predictive models often lack transparency, and automated systems can fail in unpredictable ways. Add to that the complex interactions between human operators and AI, and you’ve got a recipe for unforeseen vulnerabilities [1]. These frameworks weren’t built to handle AI-specific issues, leaving critical gaps in areas like healthcare risk management [1][2].

To put this into perspective, a benchmarking study using the NIST Cybersecurity Framework 2.0 revealed some eye-opening numbers. While the reactive functions "Respond" and "Recover" scored 85% and 78%, respectively, the proactive functions "Identify" and "Govern" lagged behind at just 64% [7]. This reactive posture is especially risky in the world of AI, where threats can emerge and escalate in a matter of hours - not days.

Traditional Frameworks vs. AI-Specific Needs

The table below highlights the stark differences between traditional risk management approaches and the requirements of AI-specific systems in healthcare:

| Approach | Strengths | Limitations | Effect on AI risk |
| --- | --- | --- | --- |
| Traditional frameworks | Well-defined processes for known risks; clear compliance pathways; proven for static systems | Static evaluations; rigid error categories; infrequent updates; limited transparency | Fails to detect AI model drift; overlooks algorithmic bias; vulnerable to data poisoning; slow to respond to AI-driven attacks |
| AI-specific risk management | Real-time monitoring; adaptive threat detection; tools for model explainability; continuous performance tracking | Requires advanced expertise; higher resource demands; evolving regulations; needs cross-team collaboration | Detects model degradation early; identifies bias before harm occurs; provides continuous oversight of AI behavior; adapts to emerging threats |

The numbers paint a stark picture of why traditional methods fall short. AI-powered cybersecurity systems, for example, can detect threats with over 99% accuracy [6]. Yet, most hackers can infiltrate systems in less than five hours, while it takes organizations an average of 235 days to even detect the breach [6]. Traditional frameworks, with their periodic reviews and reactive stance, are simply too slow for the fast-moving world of AI. Healthcare organizations need systems that can keep pace with threats that evolve by the hour, not by the quarter.


Modern Strategies for Managing AI Risks in Healthcare

As traditional frameworks show their limitations, modern strategies offer a clearer path to securing AI in healthcare. To manage the rapidly evolving risks associated with AI, healthcare organizations must embrace real-time, adaptable approaches. Proven frameworks are already available - now is the time to put them into action.

Adapting NIST AI RMF for Healthcare

The NIST AI Risk Management Framework (NIST AI RMF) provides a strong starting point for managing AI risks. However, healthcare organizations need to customize it to address their specific challenges. This means embedding principles like fairness, transparency, explainability, accountability, privacy, and safety into every phase of AI development. These adjustments ensure compliance with regulations like HIPAA and other healthcare standards.

Healthcare-specific adaptations include rigorous validation processes, scenario testing, and continuous monitoring to detect and mitigate issues like model drift before they affect patient care. For instance, deploying tools for bias detection and real-time model monitoring early on can help identify problems as they arise. The Department of Health and Human Services reported 271 active or planned AI use cases in fiscal year 2024, with a projected 70% increase in new use cases by FY 2025 [8]. This rapid growth highlights the urgent need for structured and flexible risk management strategies. While automated risk assessments are valuable, they must be complemented by human oversight to ensure patient safety.
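Continuous monitoring can start simply. The sketch below tracks a model's accuracy over a rolling window of confirmed outcomes and raises a flag when it falls below a floor. The class name, window size, and threshold are hypothetical choices for illustration; this is not part of NIST AI RMF or of any specific product.

```python
from collections import deque

class RollingAccuracyMonitor:
    """Tracks accuracy over the most recent `window` confirmed outcomes."""

    def __init__(self, window: int, threshold: float):
        self.outcomes = deque(maxlen=window)  # oldest results drop off automatically
        self.threshold = threshold

    def record(self, prediction, actual) -> bool:
        """Store one confirmed outcome; return True if an alert should be raised."""
        self.outcomes.append(prediction == actual)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough data yet to judge
        accuracy = sum(self.outcomes) / len(self.outcomes)
        return accuracy < self.threshold

# Hypothetical stream of (prediction, confirmed outcome) pairs.
monitor = RollingAccuracyMonitor(window=5, threshold=0.8)
stream = [(1, 1), (0, 0), (1, 1), (1, 1), (0, 0), (1, 0), (0, 1)]
alerts = [monitor.record(p, a) for p, a in stream]
print(alerts)
```

In this example the alert fires only on the last observation, once two of the five most recent predictions are wrong. In practice, the alert would open a review by a clinician or risk owner rather than change the model automatically.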

Human-in-the-Loop Governance for AI Oversight

Automation can improve efficiency, but it cannot replace human judgment. A human-in-the-loop approach ensures that clinicians retain ultimate control over AI-assisted decisions, reducing overreliance on algorithms and maintaining professional accountability. Studies show that without human oversight, clinicians face unrealistic expectations when managing AI-generated recommendations.

The 2025 Wolters Kluwer Health Future Ready Healthcare report revealed a disconnect: while 80% of respondents prioritized workflow optimization, only 18% were aware of their organization’s policies for generative AI use [9]. Among those with policies, 64% had rules for data privacy, 55% emphasized transparency, 51% addressed ethical concerns, and 42% outlined processes for integrating AI tools into workflows [9]. These findings underscore the critical role of human oversight in ensuring that AI tools are safely and effectively integrated into patient care.

Building Cross-Functional AI Risk Teams

To keep pace with the dynamic nature of AI risks, healthcare organizations must rely on cross-functional teams. These teams bring together diverse expertise from IT, compliance, clinical care, ethics, data science, and community advocacy to safeguard patient outcomes. A multidisciplinary AI governance committee can oversee activities such as vetting AI tools, addressing biases (like those stemming from data poisoning or algorithmic flaws), and ensuring regulatory compliance.

Collaboration between technical, clinical, and ethical teams from the outset helps identify vulnerabilities early and ensures accountability. This approach not only aligns technical capabilities with clinical needs but also minimizes risks that might otherwise go unnoticed. Considering that the healthcare sector experiences an average cost of $9.8 million per cybersecurity incident [9], cross-functional teams are essential for fostering a culture of continuous improvement and accountability.

How Censinet RiskOps™ Addresses AI Risk Management at Scale

Censinet RiskOps™ offers a forward-thinking approach to managing the unique challenges AI introduces to healthcare. As AI technology evolves rapidly, healthcare organizations require tools that can keep pace while maintaining critical human oversight. RiskOps™ bridges the gap between the traditional methods of risk management and the dynamic needs of AI systems by blending automation with human expertise.

Automated AI Risk Assessments and Dashboards

Censinet RiskOps™ consolidates AI-related policies, risks, and tasks into a single platform with real-time updates. Its intuitive AI risk dashboard provides up-to-the-minute visibility into emerging threats.

The platform’s Censinet AI™ feature streamlines the third-party risk assessment process. Vendors can complete security questionnaires in seconds, while the system automatically summarizes vendor evidence, captures key product integration details, identifies fourth-party risks, and generates comprehensive risk reports. This level of automation addresses the speed limitations of traditional frameworks without sacrificing the thoroughness needed to meet healthcare compliance standards.

Human-Guided Automation for Risk Management

Censinet AI™ doesn’t replace human judgment - it enhances it. Automated processes are designed to work alongside human oversight at critical stages, such as evidence validation, policy creation, and risk mitigation. Configurable rules and workflows ensure that risk teams remain in control, while automation takes care of repetitive tasks, enabling healthcare leaders to scale their efforts without compromising patient safety or care quality.

The platform strikes a balance between automation and human insight by allowing organizations to set clear boundaries for automated processes. When the system identifies potential risks, it routes them to designated reviewers who can apply clinical and ethical considerations before taking action. This collaborative approach also extends to coordinating multidisciplinary teams for thorough AI oversight.
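The general pattern of bounded automation with human review can be sketched in a few lines. The example below is a hypothetical illustration of that pattern, not Censinet's implementation or API: the `Finding` fields, the severity scale, and the queue names are all assumptions.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    title: str
    severity: int               # 1 (low) to 5 (critical); assumed scale
    affects_patient_care: bool

def route(finding: Finding, auto_max_severity: int = 2) -> str:
    """Decide which queue handles a finding; automation is bounded by severity."""
    if finding.affects_patient_care:
        return "clinical_review"   # a human always reviews care-related findings
    if finding.severity <= auto_max_severity:
        return "auto_remediate"    # low-risk, repetitive work stays automated
    return "risk_team_review"      # everything else goes to a human reviewer

findings = [
    Finding("Vendor questionnaire overdue", 1, False),
    Finding("Unencrypted API endpoint", 4, False),
    Finding("Diagnostic model accuracy drop", 3, True),
]
queues = [route(f) for f in findings]
print(queues)
```

The design choice worth noting is the order of the checks: anything touching patient care is routed to a person first, regardless of how low its severity score is.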

Coordinating GRC Teams for AI Oversight

RiskOps™ ensures seamless communication across governance, risk, and compliance (GRC) teams. Acting as a central hub, Censinet RiskOps™ distributes critical risk findings to the appropriate stakeholders - whether they’re technical teams, clinical professionals, compliance officers, or ethics advisors. This ensures that everyone involved has timely access to the information they need to make well-informed decisions.

Conclusion

Traditional frameworks simply weren’t built to handle the fast-moving, ever-changing nature of AI. Static evaluations can’t keep up with evolving risks, and older models fail to address AI-specific threats like data poisoning, model manipulation, or AI-driven ransomware. As Gianmarco Di Palma and colleagues observed, “The introduction of AI in healthcare implies unconventional risk management, and risk managers must develop new mitigation strategies to address emerging risks” [1].

Given these challenges, healthcare organizations need to rethink their strategies. Modern approaches must combine automation with human oversight to tackle AI risks effectively. In October 2024, the HSCC Cybersecurity Working Group launched an AI Cybersecurity Task Group, bringing together 115 healthcare organizations to provide operational guidance [4]. This effort highlights the growing understanding that managing AI risks requires collaboration across disciplines, constant monitoring, and frameworks tailored to AI’s unique demands.

Proactive governance plays a critical role here. Establishing AI committees, implementing thorough procurement policies, conducting regular audits, and tightening controls on third-party vendors are all essential steps. These measures align with new models like the NIST AI Risk Management Framework, addressing the broader attack surface AI introduces.

Tools like Censinet RiskOps™ offer a practical, scalable solution. By combining automated risk assessments with human oversight, it bridges the gap left by traditional frameworks. Features like a centralized dashboard and streamlined cross-team coordination ensure technical teams, clinical staff, and compliance officers can work together to manage AI risks more effectively.

The divide between outdated frameworks and AI’s demands is undeniable, but there’s a clear path forward. By embracing AI-specific governance, using advanced risk management tools, and maintaining strong oversight, healthcare organizations can protect patient safety while making the most of AI’s transformative potential.

FAQs

What steps can healthcare organizations take to manage AI-related risks effectively?

Healthcare organizations can tackle AI-related risks by taking a proactive, well-rounded approach. This means regularly assessing risks specific to AI systems, using high-quality, unbiased training data, and being transparent about how AI models make decisions. Setting up clear governance and accountability structures is another key step in managing potential challenges.

To ensure safety, organizations should implement strict data privacy and security measures, continuously monitor AI outputs for accuracy, and comply with regulations like HIPAA. Training staff, developing incident response plans, and encouraging teamwork across departments are also crucial for addressing risks like data manipulation or adversarial attacks. By blending these strategies, healthcare providers can strengthen their AI systems and better protect patient information.

Why are traditional risk management frameworks inadequate for managing AI risks in healthcare?

Traditional risk management frameworks often fall short when it comes to navigating the complexities of AI in healthcare. These frameworks are typically built to handle static and predictable risks, which makes them ill-equipped to tackle AI-specific threats like data poisoning, model manipulation, or algorithmic bias.

The problem is that these traditional approaches are more reactive than proactive, leaving them unable to keep up with the fast-changing risks associated with AI technologies. On top of that, they usually lack the adaptability needed to address the ethical, privacy, and security challenges unique to AI. This gap leaves healthcare organizations vulnerable to serious risks. To safeguard sensitive healthcare systems, it's crucial to shift toward modern, AI-focused risk management strategies.

Why is human oversight essential for managing AI risks in healthcare?

Human oversight plays a critical role in managing AI risks in healthcare, ensuring these systems are used responsibly and deliver effective results. While AI excels at processing large volumes of data quickly, it often falls short when it comes to grasping the complexities of clinical situations or addressing ethical dilemmas. Human involvement is key to spotting and correcting errors, biases, or potential system weaknesses that could otherwise jeopardize patient safety.

Healthcare also demands nuanced decision-making that AI simply cannot achieve on its own. By combining the analytical power of AI with human expertise, organizations can better address risks like data breaches, system malfunctions, or unintended outcomes, ultimately creating safer and more dependable solutions for patients.


Key Points:

Why can’t traditional risk frameworks manage modern AI systems?

- Static assessments don’t match AI’s constantly changing behavior

- Periodic reviews miss real‑time vulnerabilities created by new data

- Legacy controls assume predictable, rules‑based systems

- No guidance for model drift, hallucinations, or inference‑time risks

- Inadequate documentation models for opaque, black‑box algorithms

What AI‑specific vulnerabilities threaten healthcare systems?

- Data poisoning that corrupts training datasets

- Adversarial inputs triggering misdiagnosis or harmful outputs

- Prompt injection targeting clinical chatbots or workflow agents

- Model drift that degrades accuracy without warning

- Opaque decision pathways that make errors difficult to trace

How does AI amplify cybersecurity threats?

- AI‑powered ransomware identifies high‑value assets automatically

- Automated reconnaissance scans hospital networks at scale

- Manipulation of AI‑enabled medical devices, altering operations

- API exploitation in AI‑connected systems

- Faster propagation of attacks across vendor and supply‑chain ecosystems

Where do legacy frameworks fall short in healthcare AI oversight?

- No classification for algorithmic bias or clinical safety errors

- No mitigation controls for hallucinations or autonomy risks

- Incomplete coverage for data lineage and training‑data integrity

- Lack of continuous monitoring, relying on annual reviews

- Assumptions of transparency that do not apply to neural networks

How should organizations modernize AI risk management?

- Adopt NIST AI RMF and healthcare‑specific adaptations

- Implement continuous monitoring for behavior changes

- Use human‑in‑the‑loop governance for clinical AI decisions

- Build cross‑functional AI oversight teams across clinical, IT, legal, and compliance functions

- Conduct adversarial testing and scenario‑based validation

How does Censinet RiskOps™ support scalable AI governance?

- Automates AI vendor assessments with healthcare‑specific criteria

- Centralizes risk registers, controls, and evidence

- Identifies fourth‑party risks through integrated mapping

- Routes findings to governance committees for review

- Provides real‑time AI risk dashboards to track vulnerabilities and compliance