The AI Risk Manager: Human Intuition Meets Machine Intelligence

Post Summary

Healthcare cybersecurity is evolving, and integrating artificial intelligence (AI) with human expertise is becoming a necessity. AI helps healthcare organizations process vast amounts of data, detect threats, and manage risks faster than ever. However, human oversight remains vital for ethical, strategic, and patient-centered decision-making. Together, this partnership strengthens defenses against cyber threats while ensuring compliance with regulations like HIPAA and FDA guidelines.

Key Takeaways:

- AI’s Role: Detects anomalies, monitors systems 24/7, and automates tasks like vulnerability scanning and incident response.

- Human Expertise: Adds ethical context, strategic decision-making, and clinical understanding.

- Challenges: AI risks include data poisoning, bias, and system errors, requiring structured governance and oversight.

- Regulations: Frameworks like NIST AI RMF and HHS’s 2025 AI strategy guide the safe deployment of AI in healthcare.

- Third-Party Risks: In 2025, over 80% of stolen PHI records came from breaches involving third-party vendors, business associates, and non-hospital providers, emphasizing the need for rigorous assessments.

AI tools like Censinet RiskOps™ streamline risk management, but human involvement ensures critical decisions align with patient safety and organizational goals. Balancing automation with oversight is the key to effective healthcare cybersecurity.

Basics of AI Risk Management in Healthcare

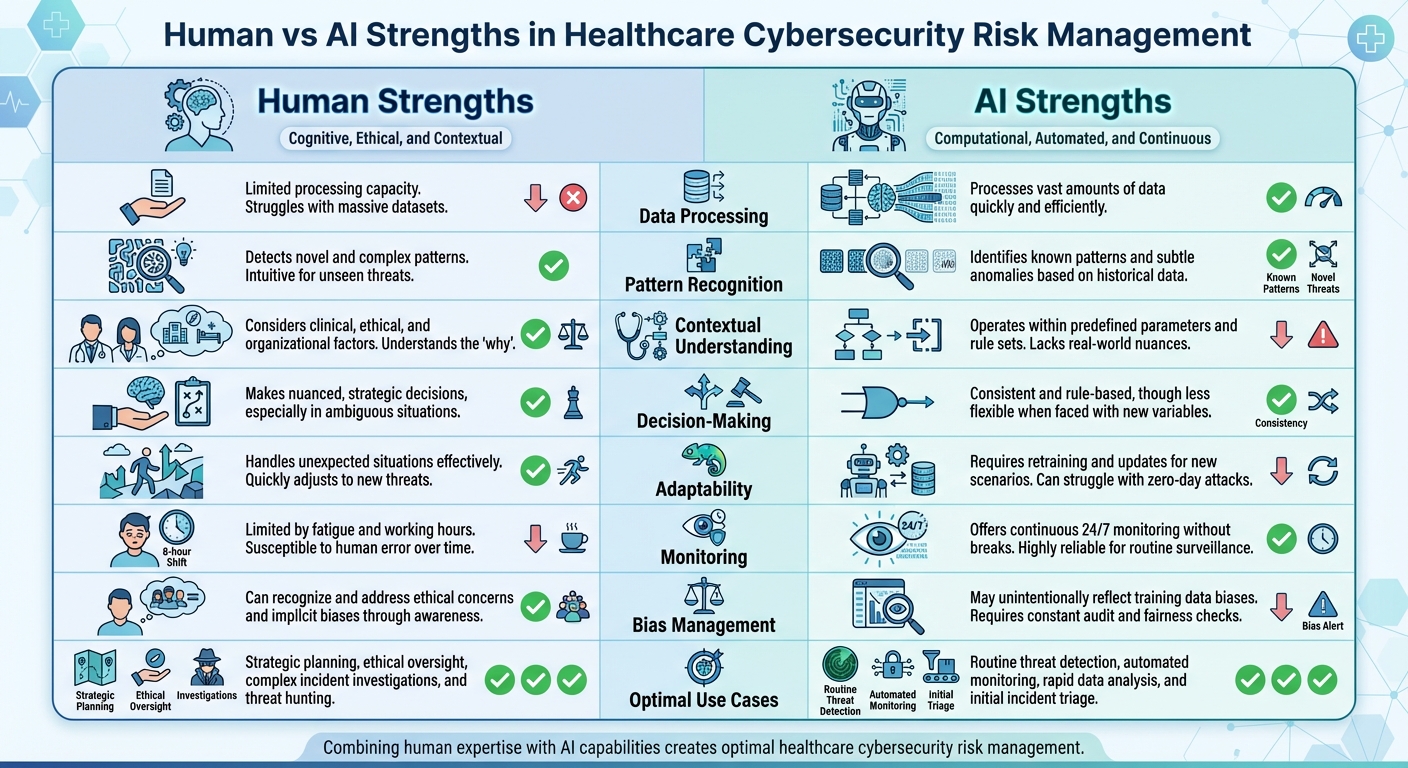

Human vs AI Strengths in Healthcare Cybersecurity Risk Management

Managing AI risks in U.S. healthcare involves tackling challenges like adversarial attacks, data poisoning, and model drift - issues that set AI apart from traditional cybersecurity concerns. Some of these risks come from deliberate attackers, while others, such as drift, emerge as real-world data shifts away from what a model was trained on, degrading performance over time.

Key Concepts and U.S. Regulatory Frameworks

To navigate these challenges, several frameworks guide healthcare organizations in managing AI risks effectively. One central resource is the NIST AI Risk Management Framework (AI RMF), which emphasizes creating and deploying trustworthy AI systems. According to NIST:

"The NIST AI Risk Management Framework (AI RMF) is intended for voluntary use and to improve the ability to incorporate trustworthiness considerations into the design, development, use, and evaluation of AI products, services, and systems" [5][8].

In response to the growing influence of generative AI, NIST released the Artificial Intelligence Risk Management Framework: Generative Artificial Intelligence Profile (NIST-AI-600-1) on July 26, 2024. This companion profile specifically addresses risks tied to generative AI systems [5][8]. Healthcare organizations are also guided by frameworks like the Health Industry Cybersecurity Practices (HICP) and recommendations from the HSCC's AI Cybersecurity Task Group, which align AI governance with existing standards such as HIPAA, FDA regulations, and NIST guidelines [6].

Building on these efforts, the U.S. Department of Health and Human Services (HHS) introduced an AI strategy on December 4, 2025, focusing on "Governance and Risk Management for Public Trust." This strategy requires HHS divisions to identify high-impact AI systems and implement baseline risk management measures - such as bias mitigation, outcome monitoring, security protocols, and human oversight - by April 3, 2026. Any systems failing to meet these standards must be discontinued or phased out [4].

With these regulatory measures in place, the next step is understanding how AI and human expertise can work together to manage risks effectively.

Human Versus Machine Strengths in Risk Management

AI and human experts bring complementary strengths to risk management. AI is exceptional at processing large datasets and performing continuous monitoring, making it ideal for automating repetitive tasks like log analysis or vulnerability scanning. On the other hand, human professionals excel at interpreting these technical findings within the broader context of patient care, ethical considerations, and operational goals.

By combining AI's efficiency with human judgment, healthcare organizations can adopt a balanced approach to risk management. AI handles the heavy lifting in data-intensive tasks, freeing up human experts to focus on complex, high-value activities that require strategic thinking.

| Capability | Human Experts | AI Systems |
|---|---|---|
| Data Processing | Limited processing capacity | Processes vast amounts of data quickly |
| Pattern Recognition | Detects novel and complex patterns | Identifies known patterns and subtle anomalies |
| Contextual Understanding | Considers clinical, ethical, and organizational factors | Operates within predefined parameters |
| Decision-Making | Makes nuanced, strategic decisions | Consistent and rule-based, though less flexible |
| Adaptability | Handles unexpected situations effectively | Requires retraining for new scenarios |
| Monitoring | Limited by fatigue and working hours | Offers continuous 24/7 monitoring |
| Bias Management | Can recognize and address ethical concerns | May unintentionally reflect training data biases |
| Optimal Use Cases | Strategic planning, ethical oversight, investigations | Routine threat detection, automated monitoring |

This synergy between AI and human expertise allows organizations to maximize efficiency while maintaining a strong focus on ethical and strategic priorities.

What AI Can Do For Healthcare Cybersecurity

AI is transforming healthcare cybersecurity by pairing machine precision with human judgment. Large U.S. healthcare organizations face more than 10,000 security alerts daily, leaving human teams overwhelmed [12]. AI steps in by monitoring logs, user behavior, and network traffic in real time, quickly identifying unusual patterns that could signal potential threats [9].

AI For Threat Detection And Monitoring

Traditional security approaches often rely on signature-based detection, which focuses on known risks. AI, on the other hand, goes further by adapting to uncover emerging threats and subtle irregularities [9][10]. Tools like User and Entity Behavior Analytics (UEBA) and advanced Security Information and Event Management (SIEM) systems use AI to establish a baseline of normal activity across users and devices. For example, if patient records are accessed at odd hours or a medical device starts communicating with an unfamiliar external server, AI can flag these anomalies in near real time [10]. These capabilities highlight how AI complements human expertise by catching what might otherwise go unnoticed.
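To make the baseline idea concrete, here is a minimal sketch in Python. It assumes a single hypothetical signal (the number of patient records one user opens per day) and a simple z-score threshold. Real UEBA products use far richer models, so the function names, sample data, and threshold here are illustrative assumptions only.

```python
from statistics import mean, stdev

def build_baseline(daily_counts):
    """Summarize a user's historical daily record-access counts as (mean, std dev)."""
    return mean(daily_counts), stdev(daily_counts)

def is_anomalous(count, baseline, threshold=3.0):
    """Flag a count that sits more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        # A perfectly flat history: any deviation at all is unusual.
        return count != mu
    return abs(count - mu) / sigma > threshold

# Hypothetical history: records opened per day by one clinician.
history = [20, 22, 19, 21, 23, 20, 18, 22, 21, 20]
baseline = build_baseline(history)

print(is_anomalous(21, baseline))   # False: within the normal range
print(is_anomalous(140, baseline))  # True: far outside the baseline
```

A flag like this is only a starting point. An analyst still decides whether 140 record views reflects a compromised account or a clinician covering an unusually busy shift.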

AI For Vulnerability And Exposure Management

AI also excels at identifying weak points in healthcare's increasingly complex digital landscape. From misconfigured cloud-based patient portals to insecure telehealth platforms, AI pinpoints vulnerabilities that could be exploited [3]. Machine learning models continuously monitor medical devices, detecting unusual behavior or potential breaches in real time [11]. By gathering data from as many as 80 intelligence sources, AI-powered vulnerability management tools create a detailed cybersecurity profile for devices [11]. Crucially, these tools don't treat all risks equally - they assess and prioritize vulnerabilities based on their potential clinical impact and the broader threat environment [11].

| Feature | Traditional Vulnerability Management | AI-Augmented Vulnerability Management |
|---|---|---|
| Monitoring Capabilities | Periodic assessments with limited insights | Real-time monitoring of device behavior and traffic |
| Risk Prioritization | Generalized severity scores | Dynamic scoring based on clinical impact and context |
| Threat Detection | Focused on known vulnerabilities | Detects both known and emerging threats through pattern recognition |
| Response Speed | Manual review and remediation | Automated flagging and instant adjustments |

By dynamically managing vulnerabilities, AI ensures faster, more efficient responses to potential risks.
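The difference between a generalized severity score and context-aware prioritization can be sketched in a few lines of Python. The device classes, weights, and the 1.5x multiplier for active exploitation below are hypothetical values chosen for illustration; they are not drawn from any specific product or standard.

```python
from dataclasses import dataclass

# Hypothetical weights: how much a device class matters to patient care.
CLINICAL_WEIGHT = {"life_sustaining": 3.0, "diagnostic": 2.0, "administrative": 1.0}

@dataclass
class Finding:
    device: str
    base_severity: float      # generic 0-10 severity score
    device_class: str         # key into CLINICAL_WEIGHT
    actively_exploited: bool  # threat-intelligence signal

def contextual_score(finding):
    """Scale the generic severity by clinical impact and current threat activity."""
    score = finding.base_severity * CLINICAL_WEIGHT[finding.device_class]
    if finding.actively_exploited:
        score *= 1.5  # assumed uplift for vulnerabilities exploited in the wild
    return score

findings = [
    Finding("billing-workstation", 9.0, "administrative", False),
    Finding("infusion-pump", 6.5, "life_sustaining", True),
    Finding("mri-console", 7.0, "diagnostic", False),
]

ranked = sorted(findings, key=contextual_score, reverse=True)
for f in ranked:
    print(f.device, contextual_score(f))
```

In this sketch the infusion pump ranks first even though its generic severity is the lowest of the three, which is the behavior the "Risk Prioritization" row describes.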

AI For Incident Response And Recovery

AI dramatically improves incident response by automating key steps in detection, investigation, and remediation. When a potential threat arises, AI analyzes threat intelligence, reviews logs, updates tickets, sends alerts, and even resolves some incidents without human input [12]. This process typically unfolds in six automated stages:

- Detection: AI collects data from sources like SIEMs, firewalls, and endpoint detection tools to identify suspicious activity.

- Triage and Enrichment: Alerts are supplemented with contextual details, including asset information and user behavior, to filter out false positives and assess severity.

- Prioritization: Machine learning models rank threats, ensuring the most critical issues are addressed first.

- Response: Predefined playbooks automatically execute containment actions, such as isolating devices, blocking IPs, or disabling compromised accounts.

- Notification and Escalation: Human analysts are alerted when necessary, and all actions are documented.

- Post-Incident Analysis: Logs are reviewed, reports are generated, and case management systems are updated to improve future response efforts [12].

This streamlined process significantly reduces mean-time-to-resolution (MTTR), helping to minimize disruptions to patient care. AI can also assign tasks to team members based on the type of threat, required skills, and availability [13]. This allows security teams to focus on proactive threat hunting rather than being bogged down by routine alerts.
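The six stages listed above can be sketched as a small pipeline. This is a simplified illustration under stated assumptions: the asset names, playbook mapping, severity values, and escalation rule are all hypothetical, and a production SOAR platform would integrate with real SIEM, EDR, and ticketing systems.

```python
from dataclasses import dataclass, field

@dataclass
class Alert:
    source: str
    asset: str
    signal: str
    severity: int = 0
    context: dict = field(default_factory=dict)
    actions: list = field(default_factory=list)

# Hypothetical configuration.
CRITICAL_ASSETS = {"ehr-db", "infusion-pump-12"}
PLAYBOOKS = {"malware_beacon": "isolate_device", "credential_stuffing": "disable_account"}

def detect(events):
    """Stage 1: keep only events that look suspicious."""
    return [Alert(**e) for e in events if e["signal"] != "benign"]

def triage(alert):
    """Stage 2: enrich the alert with asset context."""
    alert.context["critical_asset"] = alert.asset in CRITICAL_ASSETS
    return alert

def prioritize(alerts):
    """Stage 3: rank alerts (a fixed rule stands in for an ML model here)."""
    for a in alerts:
        a.severity = 9 if a.context["critical_asset"] else 4
    return sorted(alerts, key=lambda a: a.severity, reverse=True)

def respond(alert):
    """Stage 4: run the predefined playbook for this signal, if one exists."""
    action = PLAYBOOKS.get(alert.signal)
    if action:
        alert.actions.append(action)
    return alert

def notify(alert):
    """Stage 5: escalate to a human when severity is high or no playbook applied."""
    needs_human = alert.severity >= 8 or not alert.actions
    alert.actions.append("escalate_to_analyst" if needs_human else "log_only")
    return alert

def post_incident(alerts):
    """Stage 6: summarize what was done for later review."""
    return {a.asset: a.actions for a in alerts}

events = [
    {"source": "edr", "asset": "front-desk-pc", "signal": "malware_beacon"},
    {"source": "siem", "asset": "ehr-db", "signal": "credential_stuffing"},
    {"source": "firewall", "asset": "guest-wifi", "signal": "benign"},
]

alerts = prioritize([triage(a) for a in detect(events)])
report = post_incident([notify(respond(a)) for a in alerts])
print(report)
```

Note that the escalation step keeps a human in the picture for the critical asset even though containment has already run automatically.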

Platforms like Censinet RiskOps™ use AI to speed up third-party risk assessments. Vendors can complete security questionnaires quickly, while the platform automatically summarizes evidence, captures integration details, and generates risk reports. With tools like Censinet AI™, organizations can automate evidence validation, policy drafting, and risk mitigation while maintaining critical human oversight. This balance of automation and human input ensures efficient and effective risk management.

Adding Human Oversight to AI Systems

Combining the efficiency of AI with the discernment of human expertise is essential for managing risks effectively. While AI can enhance the speed and accuracy of risk management, human judgment ensures decisions align with patient safety, regulatory compliance, and ethical values. The challenge lies in striking the right balance - determining when AI can act independently and when human intervention is crucial. Achieving this balance requires structured frameworks, strong governance, and ongoing monitoring of third-party AI tools.

Human-In-The-Loop Models and AI Autonomy Levels

Oversight models play a critical role in creating governance structures that protect AI systems. Not all AI systems need the same level of human involvement. The Health Sector Coordinating Council (HSCC) is working on 2026 guidance that introduces a five-level autonomy scale, designed to align the level of human oversight with the risks posed by specific AI tools [6]. This framework helps organizations tailor oversight to different applications.

Human-in-the-loop models involve direct human approval before AI takes action. For instance, if AI identifies a potential security threat and suggests isolating a medical device, a security analyst reviews the recommendation and decides whether to proceed. This approach is ideal for high-stakes decisions where actions could disrupt clinical operations.

Human-on-the-loop models allow AI to act independently while humans monitor its performance and step in if needed. For example, AI might block suspicious IP addresses automatically but alert the security team for review. This model works well in situations like adjusting detection thresholds, where AI handles routine changes, but humans validate them periodically.

Human-in-command models keep humans in full control, with AI serving as an advisory tool. For example, AI might analyze vulnerability data and suggest priorities, but humans make all final decisions. This level of oversight is critical in scenarios where AI errors, such as generating incorrect but plausible information (known as "hallucinations"), could lead to flawed security decisions [6].
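One way to picture the three oversight models is as a policy table that gates each automated action. The sketch below is an assumption-laden illustration: the action names and their assigned oversight modes are hypothetical, and it does not represent the HSCC autonomy scale, whose levels are not detailed here.

```python
from enum import Enum

class Oversight(Enum):
    IN_THE_LOOP = "human approves before the action runs"
    ON_THE_LOOP = "AI acts, human reviews afterwards"
    IN_COMMAND = "AI advises, human decides"

# Hypothetical mapping of actions to oversight modes.
POLICY = {
    "isolate_medical_device": Oversight.IN_THE_LOOP,
    "block_ip": Oversight.ON_THE_LOOP,
    "rank_vulnerabilities": Oversight.IN_COMMAND,
}

def dispatch(action, approved_by_human=False):
    """Decide what happens to an AI-proposed action under the configured policy."""
    # Unknown actions fall back to the most restrictive mode.
    mode = POLICY.get(action, Oversight.IN_COMMAND)
    if mode is Oversight.ON_THE_LOOP:
        return "executed; queued for human review"
    if mode is Oversight.IN_THE_LOOP:
        return "executed" if approved_by_human else "pending human approval"
    return "recommendation only"

print(dispatch("block_ip"))
print(dispatch("isolate_medical_device"))
print(dispatch("isolate_medical_device", approved_by_human=True))
print(dispatch("rank_vulnerabilities"))
```

Defaulting unlisted actions to the advisory-only mode is a deliberate design choice in this sketch: anything the governance team has not explicitly classified gets the most human control.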

Censinet RiskOps™ offers a real-world example of this balanced approach. With Censinet AI™, tasks like evidence validation, policy drafting, and risk mitigation are automated, but human oversight remains integral. Risk teams configure rules and review processes to ensure that automation supports decision-making rather than replacing it. Key findings and tasks are routed to the appropriate stakeholders for review, maintaining centralized control over AI oversight.

AI Governance and Risk Committees

These oversight models guide the establishment of formal committees tasked with continuously evaluating AI performance and associated risks. Effective oversight depends on structured governance processes that define roles and responsibilities throughout the AI lifecycle [6]. These committees, made up of IT, clinical, compliance, and risk experts, ensure AI systems meet organizational standards.

Committees maintain a comprehensive inventory of AI systems, documenting their functions, data sources, and potential risks. They establish relevant standards and implement AI-specific security and data controls, using frameworks like the NIST AI Risk Management Framework [6]. Regular evaluations ensure AI tools deliver benefits without introducing unacceptable risks.

| AI Lifecycle Stage | Human Oversight Responsibilities |
|---|---|
| Planning & Procurement | Define use cases, assess vendor security, establish performance metrics, review contracts |
| Development & Integration | Validate data quality, test for bias, ensure interoperability, document architecture |
| Deployment & Monitoring | Approve go-live decisions, track performance metrics, review anomalies, audit decisions |
| Maintenance & Updates | Evaluate model changes, retrain algorithms, assess vulnerabilities, update policies |
| Decommissioning | Plan data migration, archive records, terminate vendor access, document lessons learned |
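An AI system inventory of the kind a governance committee maintains can start as a simple structured record. The following Python sketch is illustrative only: the field names, sample systems, and the 90-day review interval are assumptions, not requirements taken from NIST or HSCC guidance.

```python
from dataclasses import dataclass
from datetime import date, timedelta

@dataclass
class AISystemRecord:
    name: str
    function: str
    data_sources: list
    known_risks: list
    lifecycle_stage: str  # e.g. a stage name from the lifecycle table
    last_reviewed: date

def overdue_for_review(inventory, today, max_age_days=90):
    """Return the names of systems whose last committee review is too old."""
    cutoff = today - timedelta(days=max_age_days)
    return [r.name for r in inventory if r.last_reviewed < cutoff]

# Hypothetical inventory entries.
inventory = [
    AISystemRecord(
        "triage-alert-ranker", "ranks SIEM alerts", ["SIEM logs"],
        ["model drift"], "Deployment & Monitoring", date(2025, 1, 10),
    ),
    AISystemRecord(
        "vendor-questionnaire-summarizer", "summarizes vendor evidence",
        ["vendor documents"], ["hallucinated summaries"],
        "Deployment & Monitoring", date(2025, 5, 20),
    ),
]

print(overdue_for_review(inventory, today=date(2025, 6, 1)))
# ['triage-alert-ranker']
```

Passing `today` explicitly, rather than reading the system clock inside the function, keeps the check reproducible for audits.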

Censinet RiskOps™ enhances governance by centralizing real-time AI data, policies, risks, and tasks in one dashboard. Findings are routed to the appropriate team members, ensuring continuous oversight and accountability. This approach ensures even automated systems remain under human guidance, a critical factor in strengthening cybersecurity.

Managing Third-Party and Supply-Chain AI Risks

Third-party AI tools bring unique vulnerabilities, making it essential to extend risk assessment frameworks to these systems. In 2025, over 80% of stolen protected health information (PHI) records came from breaches involving third-party vendors, business associates, and non-hospital providers [14]. Cybercriminals often use a "hub-and-spoke" strategy, targeting a single vendor to access multiple healthcare organizations [14]. When third-party AI tools are compromised, the resulting ransomware attacks can disrupt hospitals and patient care, even if the hospital itself wasn’t the direct target [14].

To address this, the HSCC is developing 2026 guidance on "Third-Party AI Risk and Supply Chain Transparency" to improve security and trust within healthcare supply chains [6]. In the meantime, organizations need to adopt rigorous due diligence processes.

Vendors should be thoroughly vetted using security questionnaires, contract clauses, and ongoing monitoring. This includes evaluating data usage, PHI handling, breach notification protocols, and liability terms. Organizations should assess third-party AI systems for security, privacy, and bias risks, aligning evaluations with frameworks like the NIST AI Risk Management Framework, HICP, and HIPAA [6]. Standardized processes for procurement, monitoring, and decommissioning ensure consistent oversight across all third-party AI tools [6].

Censinet AI™ simplifies third-party risk assessments by enabling vendors to quickly complete security questionnaires. It automatically summarizes evidence, captures integration details, identifies fourth-party risks, and generates summary reports. This speeds up the evaluation process while preserving the human oversight needed to navigate complex vendor relationships and make informed decisions about third-party AI risks.

This layered approach ensures that human intuition complements machine intelligence, creating a resilient foundation for healthcare cybersecurity.

Building A Plan For AI-Enabled Cyber Risk Management

Rolling out AI-driven cybersecurity demands a well-organized strategy: evaluate existing systems, focus on high-priority use cases, and tailor the approach to the unique challenges of U.S. operations. This groundwork sets the stage for a phased, measurable plan.

Assessing Current Maturity and Identifying Gaps

Start by gauging your organization's readiness for AI-powered risk management using a maturity model. In November 2025, the Health Sector Coordinating Council (HSCC) Cybersecurity Working Group shared an early look at its 2026 AI cybersecurity guidance. This includes an AI Governance Maturity Model designed to help healthcare organizations assess their capabilities, pinpoint weaknesses, and prioritize upgrades.

Create a detailed inventory of all AI systems in use, documenting their functions, data dependencies, and security implications. Beyond simply cataloging, ensure you have clean, structured, and labeled data for training models, real-time data pipelines for ongoing monitoring, and governance frameworks that promote transparency and trust in AI outputs [1].

Address data fragmentation by integrating risk data across critical systems. Evaluate your cybersecurity infrastructure to confirm it includes HIPAA-compliant cloud environments, strong encryption, audit trails, role-based access controls, and tools for managing regulatory updates [1]. Identify vulnerabilities such as outdated software, weak hardware, and insecure system interoperability. Expand traditional risk assessments to address AI-specific challenges like algorithmic bias, opaque predictive models, system malfunctions, and complex human-technology interactions [2]. For every gap identified, create a remediation plan with clear metrics for success.

Phased Implementation and Measuring Success

Adopt a step-by-step approach, starting with low-risk, high-impact use cases. The HSCC recommends rolling out AI in phases, first establishing strong policies and best practices before scaling [6]. Begin with education and training programs to build awareness and ensure proper use of AI tools in daily operations [6].

Next, focus on cyber defense by developing playbooks for handling AI-related cyber incidents - covering preparation, detection, response, and recovery. Introduce AI-driven threat intelligence processes and operational safeguards to support these efforts [6]. Finally, formalize governance by defining clear roles, responsibilities, and oversight throughout the AI lifecycle, ensuring alignment with regulations like HIPAA and FDA guidelines [6].

Track progress using performance metrics that demonstrate real-world improvements. Key indicators include Mean Time to Detect (MTTD) and Mean Time to Respond (MTTR) for security incidents, fewer high-severity events, quicker vulnerability fixes, and prompt third-party risk assessments. Additionally, measure the accuracy of AI-generated risk predictions compared to actual incidents and the time saved on tasks like evidence validation. Throughout, maintain strong human oversight to complement AI's capabilities.
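As a rough illustration of how MTTD and MTTR might be computed from incident records, consider the Python sketch below. The timestamps are made up, and the field names (`occurred`, `detected`, `responded`) are hypothetical; actual definitions of when each clock starts and stops vary between organizations and should be agreed on before comparing numbers.

```python
from datetime import datetime
from statistics import mean

# Hypothetical incident timeline data.
incidents = [
    {
        "occurred": datetime(2025, 3, 1, 8, 0),
        "detected": datetime(2025, 3, 1, 8, 30),
        "responded": datetime(2025, 3, 1, 9, 30),
    },
    {
        "occurred": datetime(2025, 3, 5, 14, 0),
        "detected": datetime(2025, 3, 5, 15, 30),
        "responded": datetime(2025, 3, 5, 16, 0),
    },
]

def mean_minutes(records, start, end):
    """Average elapsed minutes between two timestamp fields across incidents."""
    return mean((r[end] - r[start]).total_seconds() / 60 for r in records)

mttd = mean_minutes(incidents, "occurred", "detected")   # (30 + 90) / 2
mttr = mean_minutes(incidents, "detected", "responded")  # (60 + 30) / 2

print(f"MTTD: {mttd:.0f} min, MTTR: {mttr:.0f} min")
# MTTD: 60 min, MTTR: 45 min
```

Tracking these values before and after each rollout phase gives a simple way to check whether AI-assisted workflows are actually shortening detection and response times.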

Tools like Censinet RiskOps™ simplify phased implementation by allowing organizations to focus on specific risk areas and expand as they grow. Meanwhile, Censinet AI™ speeds up third-party risk assessments by automating security questionnaires, summarizing evidence, identifying fourth-party risks, and generating concise reports. This lets healthcare organizations manage risks more efficiently while keeping critical human oversight through configurable rules and review processes.

Operational and Cultural Challenges in the U.S.

Scaling AI-enabled cybersecurity in U.S. healthcare means tackling staffing shortages, budget constraints, and legacy systems. Clear communication about how AI supports risk management while keeping key decisions human-driven is essential. The shortage of cybersecurity talent makes it critical to upskill staff at all levels to safely use AI tools and understand their limitations [7][15]. Tight budgets further complicate efforts to build robust cybersecurity programs [15].

Legacy systems and complex IT setups add another hurdle. Many outdated infrastructures are difficult to secure and may not integrate easily with modern AI tools, requiring careful planning. In multi-hospital systems, coordinating AI adoption across different technology stacks and workflows only heightens the complexity.

Building trust and transparency is crucial for successful AI adoption. Clinical staff, administrators, and board members need a clear understanding of how AI supports risk management and where human judgment remains essential. Open communication channels, transparent performance metrics, and feedback systems for reporting concerns or unexpected behavior are vital. This cultural shift ensures AI becomes a trusted ally in protecting patient safety and strengthening organizational resilience.

To address these challenges, Censinet AI™ acts as a centralized hub for managing AI-related policies, risks, and tasks. Key findings and action items are routed to the appropriate stakeholders for timely review and resolution. An intuitive AI risk dashboard provides continuous oversight, ensuring the right teams are addressing the right issues at the right time. This approach helps healthcare organizations balance operational realities with the demands of modern cybersecurity.

Conclusion: The Future Of AI Risk Management In Healthcare

The future of cybersecurity in healthcare lies in blending AI's data-crunching abilities with human judgment. AI is unparalleled when it comes to processing vast amounts of information, spotting patterns, and automating repetitive tasks. But it lacks the nuanced understanding, ethical considerations, and strategic thinking that only humans can provide. Together, this partnership shifts organizations from merely reacting to threats to actively preventing them.

That said, AI isn't without its challenges. Issues like algorithmic opacity and the potential for generating incorrect outputs, often referred to as "hallucinations," highlight the need for human oversight [2][3][16]. The strongest approach leverages AI's efficiency and scale while relying on humans to interpret results, question assumptions, and make the most critical decisions.

Tools such as Censinet RiskOps™ and Censinet AI™ demonstrate how AI can streamline risk management without sacrificing human control. These platforms allow for faster third-party risk assessments and better vendor relationship management, all while ensuring continuous monitoring. Configurable rules ensure that the final analysis is always reviewed and validated by human teams.

To make the most of these advancements, organizations need to invest in upskilling their workforce, establishing strong governance practices, and fostering collaboration across teams. As AI continues to evolve, healthcare will require professionals who understand both the technology and the intricacies of patient safety. These individuals will play a crucial role in guiding AI systems to deliver better outcomes while catching potential errors before they affect patient care. This balanced approach mirrors earlier strategies that successfully combined technical expertise with human oversight to create safer healthcare environments.

FAQs

How does AI enhance threat detection in healthcare cybersecurity?

AI plays a crucial role in strengthening cybersecurity in healthcare by keeping a constant watch on networks and identifying unusual patterns or suspicious activities as they happen. With the help of predictive analytics, it can pinpoint potential weak spots before they become serious problems, giving organizations a proactive edge against new threats.

On top of that, AI can handle automated responses to security risks, speeding up mitigation efforts and minimizing the risk of human mistakes. When advanced machine intelligence is paired with human expertise, healthcare organizations can build a more responsive and efficient cybersecurity approach.

What challenges come with using AI for managing healthcare cybersecurity risks?

Integrating AI into healthcare cybersecurity risk management is no small feat. One of the primary hurdles is managing fragmented and disconnected risk data. When data is scattered across systems, gaining a full picture of vulnerabilities becomes a daunting task. On top of that, navigating strict regulatory and legal requirements adds another layer of complexity, particularly when it comes to safeguarding patient safety and protecting sensitive information.

Another significant challenge lies in achieving seamless data integration while ensuring transparency in how AI systems make decisions. Without clear oversight, trust in these systems can waver. This is where human involvement becomes crucial - not just to monitor AI performance but to address concerns and reassure stakeholders.

Lastly, there's the human element to consider. Organizations need to strike a balance between leveraging AI's benefits and addressing workforce dynamics. This includes training employees to collaborate effectively with AI tools and considering how these advancements might reshape job roles within the industry.

How does human oversight improve AI in healthcare risk management?

Human involvement is crucial in refining AI systems within healthcare. It ensures precision, minimizes mistakes, and keeps patient safety at the forefront. Experts play a key role in verifying AI-generated insights, spotting potential biases or inaccuracies, and upholding accountability in clinical decisions.

By keeping an eye on how AI systems perform over time, healthcare professionals can catch irregularities, avoid excessive dependence on automated results, and step in when needed. This partnership between human expertise and machine intelligence strengthens trust, improves decision-making, and ensures AI tools are applied responsibly and effectively in critical healthcare settings.