AI Risk at Scale: Managing Machine Learning Threats in Fortune 500 Companies

Post Summary

AI expands attack surfaces, increases supply chain exposure, and introduces model failures and data‑toxicity risks.

The most severe AI-related threats are data poisoning, adversarial attacks, supply chain compromises, and AI‑powered ransomware.

Fortune 500 healthcare organizations face heightened exposure because of complex vendor ecosystems, interconnected cloud environments, and large-scale PHI processing.

Governance typically follows centralized or federated models, supported by cross‑functional AI governance committees.

Organizations manage these risks through NIST AI RMF alignment, continuous monitoring, bias testing, and lifecycle controls.

Censinet RiskOps™ automates AI risk assessments, tracks fourth‑party risk, routes tasks to governance committees, and centralizes real‑time dashboards.

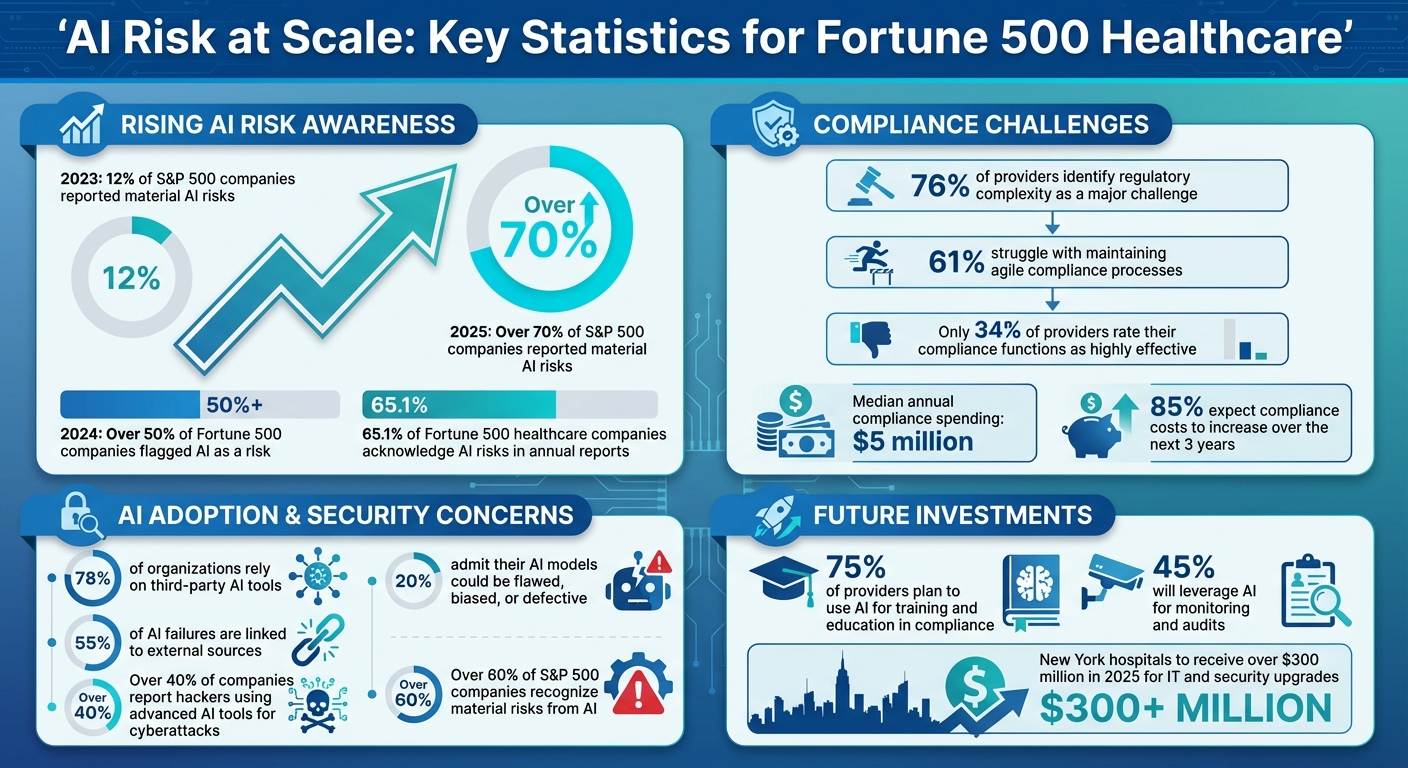

AI systems are transforming operations in Fortune 500 healthcare companies but come with risks that need careful management. From adversarial attacks to data poisoning, these threats can compromise patient safety, privacy, and regulatory compliance. By 2024, over half of Fortune 500 companies flagged AI as a risk, a sharp rise from previous years. This highlights the urgent need for robust governance, security measures, and risk management strategies.

Key Takeaways:

Effective AI risk management requires clear governance, technical safeguards, and continuous monitoring to protect patient data and maintain trust.

AI Risk Statistics in Fortune 500 Healthcare Companies 2023-2025

AI Threat Landscape in Fortune 500 Healthcare Organizations

Technical Threats to AI Systems

AI systems in healthcare face a range of technical threats, including supply chain attacks and data poisoning, both of which can severely undermine their performance and reliability. Consider a case from August 2025, when a Fortune 500 managed healthcare provider was targeted in a supply chain attack. Threat actors tracked as UNC6395 abused OAuth tokens tied to a Salesloft Drift chatbot integration, which put sensitive customer data, including private patient information, within their reach. The organization's cybersecurity team detected the intrusion early, secured the environment, and suspended vendor access, averting a full-scale breach [2].

Another pressing issue is data poisoning, where training datasets are deliberately corrupted to manipulate AI outputs. This can lead to diagnostic algorithms providing incorrect treatment recommendations, putting patient safety at risk. Additionally, model exploitation is a growing concern, as attackers can extract or replicate proprietary AI models, threatening the intellectual property of healthcare organizations. Adversarial attacks, on the other hand, involve crafting malicious inputs to trick AI systems into making errors. These threats exploit the very mechanisms that allow AI systems to learn and function, making them particularly challenging to address.

While technical breaches pose significant risks, operational challenges add another layer of complexity.

Operational and Business Risks

AI systems also introduce operational risks that can have far-reaching consequences for healthcare organizations. One such example involved a Fortune 500 healthcare company that suffered a breach where attackers exfiltrated 60 million sensitive files, including patient data from across the nation. This breach not only raised national security alarms but also attracted the attention of Congress and the FBI [6]. Incidents like these highlight how AI-related breaches can compromise patient safety, violate privacy, and result in severe regulatory penalties.

The fallout from such breaches isn't limited to compliance issues - it can also cause significant reputational harm. Salesforce, in its annual report, acknowledged the potential risks tied to AI failures:

"If we enable or offer solutions that draw controversy due to their perceived or actual impact on human rights, privacy, employment, or in other social contexts, we may experience new or enhanced governmental or regulatory scrutiny, brand or reputational harm, competitive harm or legal liability."

In the healthcare sector, where trust is a cornerstone of patient relationships, even a single AI-related failure can erode confidence and lead to expensive legal disputes.

Addressing these risks requires a structured approach to understanding and mitigating potential threats.

Threat Mapping for Healthcare Systems

An effective strategy for managing AI risks begins with threat mapping, which involves assessing threats based on their likelihood and potential impact. Common issues like model bias and poor data quality demand continuous monitoring and improvement. Supply chain attacks, on the other hand, require rigorous oversight and proactive vendor management. Although adversarial attacks are less frequent, they necessitate specialized detection methods, such as anomaly detection and advanced monitoring systems.
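To make the likelihood-and-impact idea concrete, here is a minimal Python sketch of a risk matrix: each threat is scored on two ordinal scales, the scores are multiplied, and the results are ranked and tiered. The 1-to-5 scales, the tier cut-offs, and the example scores are hypothetical placeholders, not figures from this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threat:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain); hypothetical scale
    impact: int      # 1 (negligible) to 5 (severe patient or PHI harm); hypothetical scale

    @property
    def score(self) -> int:
        # Classic risk-matrix score: likelihood multiplied by impact (range 1-25)
        return self.likelihood * self.impact


def tier(score: int) -> str:
    """Map a 1-25 score to a tier; these cut-offs are illustrative assumptions."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


def threat_map(threats: list[Threat]) -> list[tuple[str, int, str]]:
    """Return (name, score, tier) tuples ordered from highest to lowest score."""
    ranked = sorted(threats, key=lambda t: t.score, reverse=True)
    return [(t.name, t.score, tier(t.score)) for t in ranked]


# Illustrative inputs only; replace with your organization's own assessment
threats = [
    Threat("adversarial input attack", likelihood=2, impact=4),
    Threat("supply chain compromise", likelihood=3, impact=5),
    Threat("model bias / poor data quality", likelihood=4, impact=4),
]

for name, score, level in threat_map(threats):
    print(f"{level:<6} {score:>2}  {name}")
```

In practice the scores would come from a documented assessment and be revisited as monitoring data arrives; the code only makes the ranking explicit and repeatable.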

AI Governance and Compliance Frameworks

Current AI Governance Standards

Fortune 500 healthcare companies face a maze of AI governance requirements, largely driven by the critical need to protect sensitive patient data and address emerging risks. At the heart of these efforts are compliance mandates like HIPAA and HITECH. In September 2025, The Joint Commission introduced the "Responsible Use of AI in Healthcare (RUAIH)" framework. This framework emphasizes safeguards like ongoing quality monitoring, voluntary reporting of AI-related safety issues, and strategies to address bias.

The urgency for such measures is clear. A staggering 76% of providers identify regulatory complexity as a major challenge, while 61% struggle with maintaining agile compliance processes [7]. Additionally, over 70% of S&P 500 companies reported material AI risks in 2025, a sharp rise from just 12% in 2023 [9]. These numbers highlight the pressing need for well-structured governance practices.

AI Governance Operating Models

When it comes to managing AI governance, healthcare organizations tend to follow one of two primary models: centralized or federated.

Centralized governance consolidates oversight, ensuring consistent policies and streamlined compliance efforts. In September 2025, Adnan Masood, PhD, proposed a detailed governance framework tailored for Fortune 500 healthcare companies. Key elements of this model include:

- A board-led AI governance program

- A cross-functional AI Governance Committee with representatives from technology, clinical, legal, compliance, privacy, ethics, and operations teams

- A dedicated Responsible AI office that manages oversight, typically led by a VP/Head of AI Governance or a Chief AI Officer (CAIO)

In contrast, federated models distribute governance responsibilities across various business units while retaining centralized oversight for critical decisions. This approach can accelerate innovation but demands strong coordination to avoid compliance gaps, and that coordination cannot be taken for granted: only 34% of providers currently rate their compliance functions as highly effective.

Regardless of the chosen model, integrating a unified risk management platform can enhance accountability and streamline operations.

How Censinet Supports AI Governance

Censinet plays a pivotal role in helping healthcare organizations operationalize these governance models by centralizing AI risk management. Its platform, Censinet RiskOps™ with AITM, simplifies the process by routing assessment findings to key stakeholders - such as members of AI governance committees - for review and approval.

Censinet AITM also speeds up third-party risk assessments. Vendors can complete security questionnaires more efficiently, while the platform automatically summarizes evidence, captures integration details, identifies fourth-party risks, and generates comprehensive risk reports. Given that healthcare providers spend a median of $5 million annually on compliance [4], with 85% expecting increased costs over the next three years [4], tools like Censinet are becoming essential.

The platform’s real-time AI risk dashboard centralizes policies, risks, and tasks, ensuring transparency and accountability across the organization. With 75% of providers planning to use AI for training and education in compliance functions, and 45% leveraging it for monitoring and audits [7], Censinet’s human-in-the-loop approach strikes a balance. Automation supports critical decision-making rather than replacing it, allowing risk teams to retain control through configurable rules and review processes. This ensures healthcare organizations can scale their operations while maintaining the oversight required to safeguard patient safety and meet regulatory demands.
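The routing-plus-review pattern described above can be illustrated with a few configurable rules. This is a generic Python sketch, not Censinet's API or implementation: the finding categories, severity scale, thresholds, and queue names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    vendor: str
    category: str   # e.g. "phi_exposure", "bias", "security", "documentation"
    severity: int   # 1 (informational) to 5 (critical); hypothetical scale


# Hypothetical, configurable rules: which team owns each finding category
ROUTING_RULES = {
    "phi_exposure": "privacy_office",
    "bias": "ai_governance_committee",
    "security": "security_team",
}
AUTO_LOG_MAX_SEVERITY = 1   # routine findings at or below this are logged without review
ESCALATE_MIN_SEVERITY = 4   # findings at or above this always go to the committee


def route(finding: Finding) -> str:
    """Return the queue a finding should land in."""
    if finding.severity <= AUTO_LOG_MAX_SEVERITY:
        return "auto_logged"
    if finding.severity >= ESCALATE_MIN_SEVERITY:
        return "ai_governance_committee"
    # Unknown categories default to the committee rather than being dropped
    return ROUTING_RULES.get(finding.category, "ai_governance_committee")


for f in [
    Finding("VendorA", "documentation", 1),
    Finding("VendorB", "phi_exposure", 3),
    Finding("VendorC", "security", 5),
]:
    print(f.vendor, "->", route(f))
```

Keeping the rules as data (a lookup table and two thresholds) is what makes them configurable; in a real deployment they would live in configuration that risk teams can adjust, with critical findings always reaching a person.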

Cybersecurity and Operational Controls for AI Systems

Core AI Security Controls

Securing AI systems in healthcare demands a multi-layered approach that tackles both traditional IT vulnerabilities and threats unique to AI. A solid identity and access management system is key, ensuring that only authorized personnel can interact with AI models, training data, and production environments. Additionally, secure development pipelines should incorporate risk assessments, design checks, and independent reviews before any AI system is deployed to production[1].
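The access-control principle above boils down to a deny-by-default check: a user may act on a model, dataset, or production environment only if one of their roles explicitly grants that action. The Python sketch below also requires a completed MFA step, one of the core controls listed in this post's key points. Role names, resources, and the `mfa_verified` flag are hypothetical; in practice these decisions are delegated to an identity provider or policy engine.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission map; anything not listed is denied by default
PERMISSIONS: dict[str, set[tuple[str, str]]] = {
    "data_scientist": {("training_data", "read"), ("model", "read"), ("model", "write")},
    "ml_engineer": {("model", "read"), ("production", "deploy")},
    "clinician": {("model", "invoke")},
}


@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset[str]
    mfa_verified: bool  # assumed to be set by the identity provider after an MFA challenge


def is_authorized(user: User, resource: str, action: str) -> bool:
    """Allow only MFA-verified users whose role explicitly grants (resource, action)."""
    if not user.mfa_verified:
        return False
    return any((resource, action) in PERMISSIONS.get(role, set()) for role in user.roles)


alice = User("alice", frozenset({"data_scientist"}), mfa_verified=True)
print(is_authorized(alice, "training_data", "read"))   # True
print(is_authorized(alice, "production", "deploy"))    # False: no role grants deploy
```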

Data encryption plays a crucial role when dealing with sensitive patient information. Since AI systems handle vast amounts of protected health information (PHI), encrypting data both at rest and during transit is non-negotiable. Infrastructure hardening must also extend to the computing environments used for training and running AI models. The stakes are high: over 40% of companies report that hackers are leveraging advanced AI tools for cyberattacks[8]. This makes it imperative for healthcare organizations to stay vigilant and continuously refine their security measures. With 65.1% of Fortune 500 healthcare companies acknowledging AI risks in their annual reports[3], and 20% admitting their AI models could be flawed, biased, or defective[8], the need for resilient infrastructure is clear. Reflecting this urgency, New York hospitals are set to receive over $300 million in 2025 for IT and security upgrades[5].

To complement these essential controls, specialized security measures tailored to AI systems are necessary to protect model integrity.

AI-Specific Security and Monitoring Techniques

AI systems face unique threats that demand customized defenses. Adversarial robustness testing, for instance, is critical for uncovering vulnerabilities where attackers could manipulate inputs to deceive AI models. Healthcare organizations should employ advanced tools to safeguard large language model (LLM) applications against both current and emerging cyber threats[10].
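Adversarial robustness testing typically perturbs each input in the direction that most increases the model's loss and checks whether the prediction flips. The Python sketch below applies the fast gradient sign method (FGSM) to a toy logistic-regression model with made-up weights, where the gradient can be written by hand; real clinical models would need a framework with automatic differentiation and a perturbation budget that is meaningful for the data type.

```python
import math


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def predict_proba(w: list[float], b: float, x: list[float]) -> float:
    """Logistic regression: sigmoid(w . x + b)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def fgsm(w: list[float], b: float, x: list[float], y: int, eps: float) -> list[float]:
    """One FGSM step: x + eps * sign(dLoss/dx). For log-loss, dLoss/dx = (p - y) * w."""
    p = predict_proba(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def robust_accuracy(w, b, data, eps: float) -> float:
    """Share of correctly classified examples that remain correct after the attack."""
    correct = [(x, y) for x, y in data if (predict_proba(w, b, x) >= 0.5) == bool(y)]
    if not correct:
        return 0.0
    survived = sum(
        (predict_proba(w, b, fgsm(w, b, x, y, eps)) >= 0.5) == bool(y) for x, y in correct
    )
    return survived / len(correct)


# Toy weights and labeled inputs (hypothetical values, not a clinical model)
w, b = [2.0, -1.0], 0.0
data = [([1.0, 0.2], 1), ([0.3, 0.1], 1), ([-1.0, 0.5], 0)]
for eps in (0.0, 0.1, 0.5):
    print(f"eps={eps}: robust accuracy {robust_accuracy(w, b, data, eps):.2f}")
```

With these toy values, accuracy holds at a perturbation of 0.1 but drops to 0.67 at 0.5, because the second example sits close to the decision boundary. Tracking that curve from one release to the next turns robustness testing into a repeatable check.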

Ensuring the integrity of training datasets is just as important. Poisoned data can compromise model outputs, potentially leading to incorrect clinical decisions. Continuous monitoring of model performance is essential, including anomaly detection and output validation. This should involve structured post-deployment reviews with clearly defined thresholds for bias testing, as well as watermarking techniques to verify model authenticity[11]. Real-time cyber threat intelligence tools can further help healthcare organizations detect security incidents early, enabling swift responses to protect sensitive data and critical systems.
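One basic integrity control for training data is a digest manifest: record a SHA-256 hash for every file when a dataset is approved, then re-verify before each training run so that silent modifications, one possible sign of poisoning, are caught. This is a minimal standard-library sketch; the directory layout is an assumption, and a matching manifest only shows the data is unchanged, not that it was clean to begin with.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks (1 MiB by default) so large files need not fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Map each file's path (relative to data_dir) to its SHA-256 digest."""
    return {
        str(p.relative_to(data_dir)): sha256_file(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def verify_manifest(data_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Return paths that were added, removed, or modified since the manifest was built."""
    current = build_manifest(data_dir)
    return sorted(k for k in manifest.keys() | current.keys() if manifest.get(k) != current.get(k))


with tempfile.TemporaryDirectory() as d:
    root = Path(d)
    (root / "labels.csv").write_text("id,label\n1,benign\n")
    baseline = build_manifest(root)  # captured when the dataset is approved
    (root / "labels.csv").write_text("id,label\n1,malignant\n")  # simulated tampering
    print(verify_manifest(root, baseline))  # ['labels.csv']
```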

Another critical layer of defense is monitoring for potential misuse of AI systems. By tracking usage patterns and flagging suspicious queries or attempts to extract sensitive data, organizations can quickly identify and address emerging threats[10]. Transitioning from manual, sample-based testing to automated transaction-level testing can also significantly enhance monitoring coverage[4]. These practices enable comprehensive tracking of AI performance and provide early warnings for anomalies, ensuring systems remain secure and reliable.
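Misuse monitoring can start with something as simple as a per-account sliding-window counter, since bulk, systematic querying is a common signal of model-extraction or data-harvesting attempts. The Python sketch below covers volume only; the class name, window, and limit are hypothetical, and a production system would pair it with checks on what is being asked.

```python
from collections import defaultdict, deque


class QueryMonitor:
    """Flag accounts whose query count within a sliding time window exceeds a limit.

    High-volume, systematic querying is one common signal of model-extraction or
    data-harvesting attempts. Window and limit values are illustrative assumptions.
    """

    def __init__(self, window_seconds: float = 60.0, max_queries: int = 100):
        self.window = window_seconds
        self.max_queries = max_queries
        self._events = defaultdict(deque)  # account -> timestamps of recent queries

    def record(self, account: str, timestamp: float) -> bool:
        """Record one query; return True if the account is now over the limit."""
        events = self._events[account]
        events.append(timestamp)
        # Evict queries that have fallen outside the window
        while events and events[0] <= timestamp - self.window:
            events.popleft()
        return len(events) > self.max_queries


monitor = QueryMonitor(window_seconds=60, max_queries=3)
flags = [monitor.record("svc-account-7", t) for t in (0, 1, 2, 3, 70)]
print(flags)  # [False, False, False, True, False]
```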

Third-Party AI Risk Management

The use of third-party AI tools introduces significant risks. Research shows that 78% of organizations rely on these tools, and more than half (55%) of AI failures are linked to external sources[13]. Traditional risk management frameworks often fall short when it comes to addressing AI-specific challenges like model training, bias mitigation, and data lineage controls[12].

Healthcare organizations must perform thorough due diligence before adopting third-party AI solutions. This includes evaluating vendors’ AI practices, understanding their model development processes, and assessing their security measures. Continuous monitoring is equally important - organizations should actively manage third-party exposure to sensitive data and be ready to suspend vendor access if their response to security incidents is inadequate[2].

Tools like Censinet RiskOps™ with AITM can simplify third-party risk management by allowing vendors to quickly complete security questionnaires while automatically summarizing evidence, identifying integration details, pinpointing fourth-party risks, and generating detailed risk reports. With healthcare providers spending a median of $5 million annually on compliance and 85% expecting these costs to rise over the next three years[4], efficient solutions like these are becoming indispensable for balancing security with operational demands.

Contracts with third-party vendors should explicitly address AI-related risks. This includes requirements for ongoing quality monitoring, voluntary reporting of AI-related safety events, and bias mitigation, as recommended by The Joint Commission's Responsible Use of AI framework[4]. Clear protocols for incident response, data handling, and model updates are vital to maintaining control over AI risk exposure. These measures form the foundation of a scalable AI risk management strategy in healthcare.


Building a Scalable AI Risk Management Lifecycle

Phases of the AI Risk Management Lifecycle

Creating a structured AI risk management lifecycle - spanning intake, risk tiering, design controls, independent review, controlled release, continuous monitoring, retraining, and decommissioning - is crucial to maintaining consistent checkpoints before deploying AI models into production environments[1]. This lifecycle ensures that every model undergoes thorough evaluation at each stage, reducing the chances of unforeseen risks.

The NIST AI Risk Management Framework provides a foundation for these phases, organized into four key functions: Govern, Map, Measure, and Manage. For example, during the "Govern" stage, a healthcare organization might establish an AI Governance Committee to address critical concerns like bias, privacy, and unintended outcomes[10]. Similarly, McKinsey suggests involving legal, risk, and data science teams from the very beginning, ensuring models not only meet business objectives but also comply with regulatory standards.

Each phase demands specific steps. During intake and risk tiering, models are classified based on their impact on patient care and the sensitivity of the data they handle. Design controls focus on embedding core principles such as fairness, accountability, and transparency into the model's structure. An independent review offers an unbiased evaluation before deployment, while a controlled release minimizes exposure during initial rollout to mitigate risks. Continuous monitoring ensures real-world performance remains consistent, retraining addresses issues like model drift, and decommissioning ensures outdated models are retired securely and effectively.
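The gated lifecycle can be modeled as a small state machine in which a model moves only along allowed transitions and certain moves require evidence. In this Python sketch the phase names mirror the lifecycle listed above, while the specific gates (a risk tier before design controls, reviewer sign-off before release) and the loops back to earlier phases are illustrative assumptions rather than requirements of the NIST AI RMF.

```python
from enum import Enum


class Phase(Enum):
    INTAKE = "intake"
    RISK_TIERING = "risk tiering"
    DESIGN_CONTROLS = "design controls"
    INDEPENDENT_REVIEW = "independent review"
    CONTROLLED_RELEASE = "controlled release"
    CONTINUOUS_MONITORING = "continuous monitoring"
    RETRAINING = "retraining"
    DECOMMISSIONED = "decommissioned"


# Allowed moves between phases. Routing retrained models back through independent
# review (and failed reviews back to design) are assumptions of this sketch.
TRANSITIONS = {
    Phase.INTAKE: {Phase.RISK_TIERING},
    Phase.RISK_TIERING: {Phase.DESIGN_CONTROLS},
    Phase.DESIGN_CONTROLS: {Phase.INDEPENDENT_REVIEW},
    Phase.INDEPENDENT_REVIEW: {Phase.CONTROLLED_RELEASE, Phase.DESIGN_CONTROLS},
    Phase.CONTROLLED_RELEASE: {Phase.CONTINUOUS_MONITORING},
    Phase.CONTINUOUS_MONITORING: {Phase.RETRAINING, Phase.DECOMMISSIONED},
    Phase.RETRAINING: {Phase.INDEPENDENT_REVIEW},
    Phase.DECOMMISSIONED: set(),
}


class ModelRecord:
    def __init__(self, name: str):
        self.name = name
        self.phase = Phase.INTAKE
        self.risk_tier = None          # e.g. "high" for models that affect patient care
        self.review_approved = False   # set by the independent reviewer

    def advance(self, target: Phase) -> None:
        """Move to `target`, enforcing allowed transitions and gate checks."""
        if target not in TRANSITIONS[self.phase]:
            raise ValueError(f"{self.phase.value} -> {target.value} is not allowed")
        if target is Phase.DESIGN_CONTROLS and self.risk_tier is None:
            raise ValueError("gate: assign a risk tier before design controls")
        if target is Phase.CONTROLLED_RELEASE and not self.review_approved:
            raise ValueError("gate: independent review sign-off required before release")
        if target is Phase.INDEPENDENT_REVIEW:
            self.review_approved = False  # every review, including after retraining, starts unapproved
        self.phase = target


m = ModelRecord("sepsis-alert-v2")  # hypothetical model name
m.advance(Phase.RISK_TIERING)
m.risk_tier = "high"
m.advance(Phase.DESIGN_CONTROLS)
m.advance(Phase.INDEPENDENT_REVIEW)
try:
    m.advance(Phase.CONTROLLED_RELEASE)
except ValueError as err:
    print(err)  # gate: independent review sign-off required before release
m.review_approved = True
m.advance(Phase.CONTROLLED_RELEASE)
print(m.phase.value)  # controlled release
```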

Key Metrics and Indicators for AI Risk

Tracking the right metrics is essential to managing AI risk. Monitoring model drift, where performance declines due to changes in data patterns, is one key area. Healthcare organizations also need to keep an eye on incident rates, such as false positives in clinical tools or attempts at unauthorized access. Vendor-related issues, like compliance gaps, should also be on the radar.
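Drift monitoring usually compares the distribution of a model input or output score in production against a baseline captured at validation time. One common statistic is the Population Stability Index, PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the baseline and recent shares of observations in bin i. The plain-Python sketch below uses equal-width bins; the 0.1 and 0.25 cut-offs are a widely used rule of thumb, not a regulatory threshold.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline sample and a recent sample.

    Equal-width bin edges come from the baseline's range; values outside it are
    clamped into the edge bins, and eps stands in for empty bins to avoid log(0).
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_status(score: float) -> str:
    # Common rule-of-thumb cut-offs, not a regulatory standard
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate drift"
    return "significant drift"


baseline = [i / 100 for i in range(100)]      # scores seen at validation time
recent = [0.5 + i / 200 for i in range(100)]  # scores shifted upward in production
print(drift_status(psi(baseline, baseline)))  # stable
print(drift_status(psi(baseline, recent)))    # significant drift
```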

Real-time dashboards play a vital role here, showcasing metrics such as investigation timelines, denial rates, and privacy-related incidents[4]. Automation enhances this process by continuously monitoring key risk indicators (KRIs), flagging anomalies, and delivering actionable insights for decision-making[4]. Moving from manual sampling to automated, transaction-level testing allows for broader and more accurate monitoring, helping organizations address problems before they escalate.
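Transaction-level testing means running a control check against every record rather than a sample, then rolling the exception rate up into a key risk indicator that a dashboard can alert on. In the Python sketch below, the control (AI-recommended denials must have documented human review), the record fields, and the 1% threshold are hypothetical examples.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    id: str
    ai_recommendation: str  # "approve" or "deny"
    human_reviewed: bool


def control_passes(d: Decision) -> bool:
    # Hypothetical control: every AI-recommended denial needs documented human review
    return d.ai_recommendation != "deny" or d.human_reviewed


def run_control_test(decisions: list[Decision]) -> tuple[list[str], float]:
    """Test every transaction (not a sample); return exception IDs and the exception rate."""
    exceptions = [d.id for d in decisions if not control_passes(d)]
    rate = len(exceptions) / len(decisions) if decisions else 0.0
    return exceptions, rate


KRI_MAX_EXCEPTION_RATE = 0.01  # hypothetical KRI threshold: at most 1% exceptions

decisions = [
    Decision("c-001", "approve", False),
    Decision("c-002", "deny", True),
    Decision("c-003", "deny", False),   # denial without human review
    Decision("c-004", "approve", True),
]
exceptions, rate = run_control_test(decisions)
if rate > KRI_MAX_EXCEPTION_RATE:
    print(f"KRI breach: {rate:.1%} exception rate, records {exceptions}")
```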

The urgency of these measures is underscored by statistics: over 60% of S&P 500 companies recognize material risks from AI, and more than 40% cite AI as a factor in cybersecurity challenges[8]. Metrics like time-to-detect threats, compliance violations, and bias testing results are critical for scaling risk management efforts effectively.
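Bias testing results become trackable metrics once a fairness measure and a tolerance are agreed. A simple, widely used measure is the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. The Python sketch below assumes binary predictions and a single group attribute, and the 0.10 tolerance is a hypothetical policy value; for clinical models, error-rate measures such as equalized odds are often more informative than parity alone.

```python
from collections import defaultdict


def selection_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive predictions (1s) within each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(predictions: list[int], groups: list[str]) -> float:
    """Demographic parity difference: highest minus lowest group selection rate."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


MAX_PARITY_GAP = 0.10  # hypothetical tolerance set by the governance committee

preds = [1, 1, 0, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
gap = parity_gap(preds, groups)
print(selection_rates(preds, groups), f"gap={gap:.2f}", "FAIL" if gap > MAX_PARITY_GAP else "PASS")
```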

Scaling Risk Management with Censinet

Censinet RiskOps™ and Censinet AI provide comprehensive support for the AI risk management lifecycle through automated dashboards, complemented by human oversight. Censinet AITM simplifies third-party risk assessments, enabling vendors to quickly complete security questionnaires while automatically summarizing evidence, capturing integration details, and identifying fourth-party risks. This is especially important as healthcare providers increasingly face regulatory challenges - 76% cite regulatory complexity as their top compliance hurdle, and 85% expect compliance costs to rise in the coming years[4].

The platform acts as a central hub, organizing risk assessment findings and routing them to the right stakeholders for timely review. By consolidating real-time data into a unified AI risk dashboard, Censinet ensures that policies, risks, and tasks are managed efficiently, with the right teams addressing issues promptly and with accountability.

Censinet AI strikes a balance between automation and human oversight by using customizable workflows. Routine tasks are automated, while critical decisions remain in the hands of experts. This approach allows healthcare leaders to scale their risk management operations, address complex enterprise challenges with greater precision, and align their practices with industry standards - all while prioritizing patient safety and care delivery.

Conclusion: Strengthening AI Resilience in Fortune 500 Healthcare Enterprises

Managing AI risks on a large scale requires a structured approach that combines governance, technical safeguards, and ongoing lifecycle management. In the healthcare sector, the stakes couldn't be higher - sensitive patient information and clinical outcomes leave no room for mistakes. With a growing number of Fortune 500 healthcare companies identifying AI as a material risk, the need for a comprehensive risk management strategy has become urgent.

To tackle these challenges, healthcare organizations need a clear and focused strategy. The solution lies in three core areas: establishing board-level AI governance with input from multiple departments, implementing standardized AI lifecycle processes from inception to retirement, and embedding ethical principles - such as fairness, accountability, and transparency - into every AI model[1]. These measures must be supported by gated controls and real-time monitoring to ensure they are effectively operationalized.

Relying on manual processes is no longer sufficient. For many healthcare providers, navigating complex regulations and managing rising compliance costs make automation an essential tool, not just a convenience[4]. Transitioning from reactive compliance to proactive risk management means rethinking operational models and incorporating AI-powered tools that provide both immediate protection and long-term insights.

Key Takeaways

Healthcare organizations that excel in managing AI risks share several common practices. They treat governance as a strategic asset, automate repetitive tasks while reserving human oversight for critical decisions, and maintain continuous monitoring across their AI systems. Platforms like Censinet RiskOps™ and Censinet AI play a pivotal role in achieving this balance by simplifying third-party risk assessments, directing findings to the right stakeholders, and consolidating real-time data into centralized dashboards. These tools enhance accountability and reinforce the importance of centralized risk management platforms, as discussed earlier.

Building resilience in AI systems doesn’t happen overnight. As highlighted in our earlier discussion on scalable AI lifecycles, it demands structured frameworks, the right tools, and a commitment to ongoing improvement. Organizations that adopt this approach are not only equipped to handle existing risks but are also better prepared to adapt to the ever-changing landscape of AI threats - making it clear that the real challenge lies in how quickly these governance and control mechanisms can be implemented to safeguard patients and operations.

FAQs

What are the biggest AI risks for Fortune 500 healthcare companies?

Fortune 500 healthcare companies are navigating a range of serious challenges tied to AI, starting with cybersecurity threats like adversarial attacks and data breaches. These risks can jeopardize sensitive information and undermine trust. Another pressing concern is data poisoning, where bad actors tamper with training datasets, leading to flawed AI performance.

Beyond these, operational vulnerabilities pose a risk to seamless workflows, while bias and fairness issues could result in unethical or inequitable outcomes. There's also the matter of privacy concerns, particularly regarding the misuse of sensitive patient data. On top of all this, companies must tackle regulatory compliance hurdles and prepare for the potential reputational fallout that can come from AI-related failures. Addressing these risks calls for thoughtful governance and risk management strategies designed for the complexities of large organizations.

How do centralized and federated governance models help reduce AI risks in large organizations?

Centralized governance plays a crucial role in maintaining consistent policies, ensuring accountability, and providing oversight throughout an organization. By enforcing unified standards, it helps reduce AI-related risks, such as data breaches or model misuse, while also simplifying compliance efforts. This clear and structured framework makes managing potential threats more straightforward and effective.

On the other hand, federated governance brings adaptability into the mix. It allows individual teams or business units to tailor risk management practices to their specific needs. This approach not only supports local compliance requirements but also enables faster responses to unique challenges, all while staying aligned with the organization’s overall strategy.

By blending these two approaches, organizations can strike a balance between centralized control and localized flexibility, creating a robust system for managing AI risks on a larger scale.

What are the essential security measures to safeguard AI systems in healthcare?

To keep AI systems secure in healthcare, it's essential to put strong cybersecurity measures in place. This includes enforcing strict access controls, encrypting sensitive data, and constantly monitoring for potential threats. Regularly conducting vulnerability assessments is also key to spotting and fixing any security gaps.

On top of that, implementing operational safeguards is critical. This involves validating models, detecting biases, and maintaining comprehensive audit trails to ensure the system remains transparent and dependable. Clear governance policies should also be enforced to support ethical AI practices and stay compliant with the ever-changing regulatory standards in the healthcare field.



Key Points:

What AI‑related cyber threats are most severe for Fortune 500 healthcare organizations?

- Supply chain attacks, such as OAuth token abuse and vendor platform compromises

- Data poisoning, corrupting training sets and producing unsafe clinical outputs

- Adversarial inputs, forcing misdiagnosis or misclassification

- Model theft or replication, risking intellectual property exposure

- AI‑enhanced ransomware, automating reconnaissance and targeting high‑value systems

What operational risks does AI introduce in large healthcare enterprises?

- Massive PHI exfiltration, resulting in national‑level security incidents

- Regulatory investigations, including DOJ, OCR, and Congressional scrutiny

- Reputational damage, particularly when AI tools are inaccurate or unsafe

- Financial liability, with AI‑related breaches leading to lawsuits and penalties

- Supply chain disruption, impacting devices, imaging systems, and cloud platforms

How do governance frameworks address AI risks?

- NIST AI RMF for fairness, validity, transparency, and lifecycle controls

- RUAIH guidelines from The Joint Commission for safe clinical AI

- HSCC workstreams, covering operations, secure-by-design, third‑party transparency, and education

- Centralized governance models led by Responsible AI offices

- Federated models enabling domain-specific innovation with centralized oversight

What are the core security controls required for enterprise AI systems?

- Identity and access management with MFA for model environments

- Secure SDLC pipelines for training, validating, and deploying models

- Data encryption, for both training and inference workloads

- Infrastructure hardening of AI compute clusters and cloud environments

- Adversarial robustness testing, drift detection, and continuous performance validation

Why does third‑party AI increase enterprise risk?

- 78% of organizations rely on external AI vendors, increasing attack surfaces

- 55% of AI failures originate from third‑party tools

- Opaque AI models limit visibility into provider‑side risk

- Fourth‑party dependencies, especially cloud providers, complicate oversight

- Inadequate contractual controls fail to address AI training data, bias mitigation, or model updates

How does Censinet RiskOps™ support AI governance at the Fortune 500 scale?

- Automated third‑party AI assessments, reducing review time

- AI‑driven evidence summarization, highlighting integration issues

- Fourth‑party mapping, revealing hidden data flows

- Real‑time risk dashboards, grouping AI risk, PHI exposure, and vendor maturity

- Human‑in‑the-loop workflows, enabling governance committees to review AI findings