The AI Safety Culture: Building Organizations That Put Safety First

Post Summary

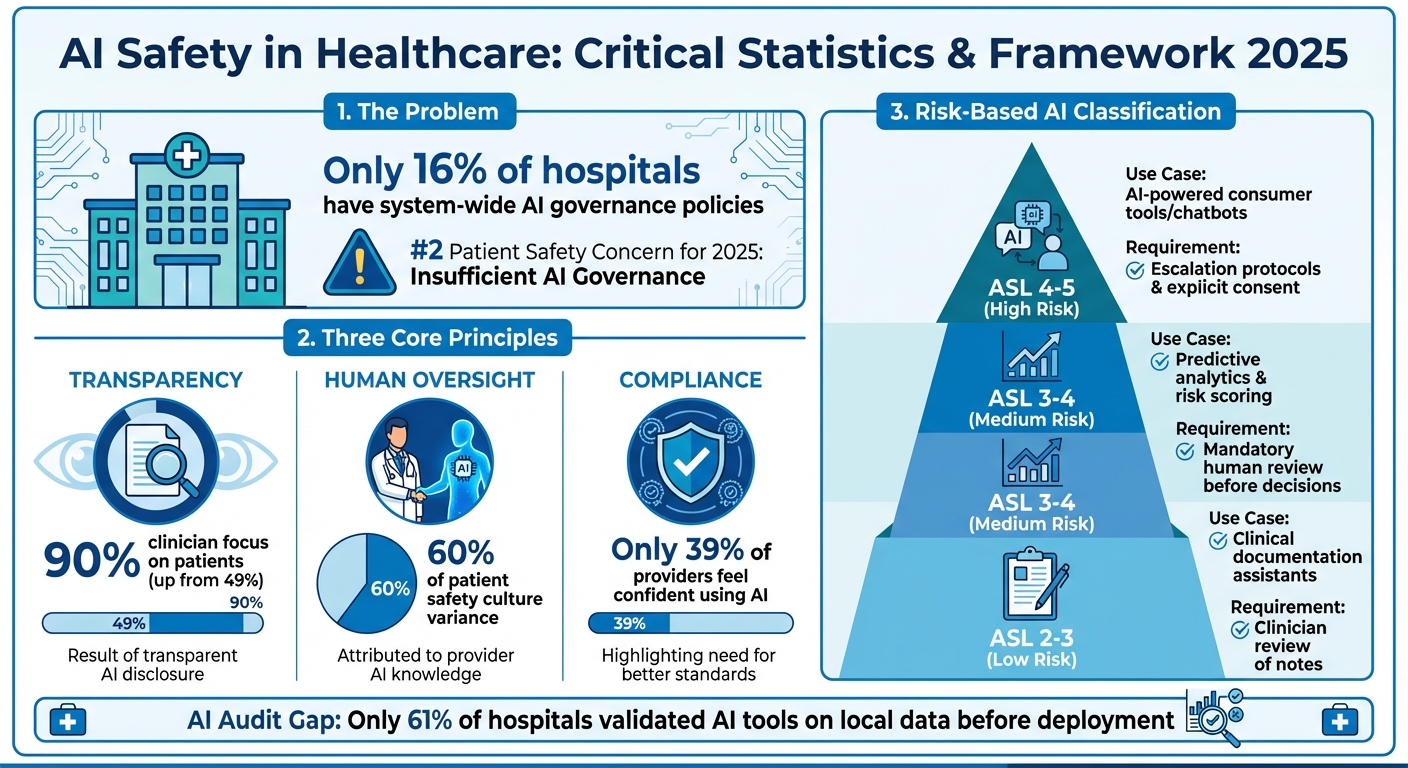

Artificial intelligence is reshaping healthcare, but without proper oversight, it poses serious risks. A 2025 report ranks "Insufficient Governance of AI in Healthcare" as the second-highest patient safety concern, yet as of 2023 only 16% of hospitals had system-wide AI governance policies. Misdiagnoses, treatment delays, and data breaches are among the dangers of poorly managed AI systems.

To address these risks, healthcare organizations must adopt a safety-first approach focusing on:

- Transparency: Informing patients about AI use and ensuring clear disclosure policies.

- Human Oversight: Involving clinicians to catch errors like biases or misclassifications.

- Compliance: Aligning with regulations such as HIPAA and establishing governance frameworks.

AI safety and cybersecurity are interconnected. Managing both requires robust governance committees, risk-based classifications, and tools like Censinet RiskOps™ for streamlined risk management. Training clinicians, conducting regular audits, and fostering collaboration across teams are also key strategies for ensuring safe AI adoption in healthcare.

[Figure: AI Safety in Healthcare: Key Statistics and Risk Framework 2025]

Core Principles of Safety-First AI Organizations

Creating a safety-first AI culture hinges on three key principles: transparency, human oversight, and compliance. These elements act as safeguards, minimizing the risks associated with AI in healthcare.

Transparency and Explainability in AI

Transparency is the foundation of trust, and trust is crucial in healthcare. Yet, many organizations fall short when it comes to clear disclosure policies, even though most patients prefer to be informed about AI use[3].

Consider a primary care clinic that introduced an AI scribe in September 2025 to document doctor-patient conversations and generate clinical notes. To make patients comfortable with the change, the clinic posted notices in exam rooms, updated its paperwork, and added detailed information to the patient portal. Doctors also explained the AI's role during visits, reassuring patients that recordings were kept confidential and deleted after use. The result? Surveys showed that clinicians were able to focus entirely on patients 90% of the time, up from 49%[3]. By being upfront, the clinic turned potential skepticism into acceptance.

Healthcare organizations can benefit from a tiered transparency approach[3]:

- For routine AI applications, like assisting radiologists, general policy updates may be enough.

- When AI interacts directly with patients, clear notifications at the point of care are crucial.

- For high-risk or autonomous AI systems, explicit informed consent - similar to consent for invasive procedures - is necessary.
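The three tiers above amount to a simple decision rule. The Python sketch below encodes it under stated assumptions: the `AIUseCase` class, its fields, and the tier labels are hypothetical names chosen for illustration rather than part of any standard, and the rule assumes the strictest applicable tier always wins.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIUseCase:
    """Hypothetical description of a single AI deployment."""
    name: str
    patient_facing: bool           # does the AI interact directly with patients?
    high_risk_or_autonomous: bool  # high-risk, or acting with little human review?


def required_disclosure(use_case: AIUseCase) -> str:
    """Return the minimum disclosure step for a use case.

    The strictest applicable tier wins, so a high-risk system that is also
    patient-facing still requires explicit informed consent.
    """
    if use_case.high_risk_or_autonomous:
        return "explicit informed consent"
    if use_case.patient_facing:
        return "point-of-care notification"
    return "general policy update"


if __name__ == "__main__":
    examples = [
        AIUseCase("radiology assistant", patient_facing=False, high_risk_or_autonomous=False),
        AIUseCase("patient-facing tool", patient_facing=True, high_risk_or_autonomous=False),
        AIUseCase("autonomous system", patient_facing=True, high_risk_or_autonomous=True),
    ]
    for uc in examples:
        print(f"{uc.name}: {required_disclosure(uc)}")
```

Ordering the checks from strictest to most lenient keeps the rule easy to audit: a reviewer only needs to confirm that no higher-risk condition is skipped.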

Tom Herzog, Chief Operating Officer at Netsmart, emphasizes the importance of transparency:

"That's why transparency, clinical accuracy and provider oversight aren't optional for healthcare leaders - they're foundational."[4]

Transparency lays the groundwork, but it’s human oversight that ensures AI insights are safely applied.

Human Oversight and Bias Reduction

AI systems are only as good as the data they are trained on. Flawed or biased data can lead to errors, such as misdiagnoses or delays in treatment[1]. This makes human oversight indispensable.

Healthcare providers bring something AI cannot: empathy, intuition, and the ability to pick up on subtle cues like tone, behavior, and body language[5]. These human qualities are critical for catching AI errors, whether they stem from data bias, overfitting, or software glitches that could lead to incorrect medication dosages[2]. Because a widely used AI system can repeat the same error across many patients, a single flaw could cause far more widespread harm than an isolated human mistake[2].

To address this, human review must be seamlessly built into workflows. For example:

- Predictive analytics tools should include clear documentation, explainable outputs, and mandatory human review before clinical decisions are made[4].

- Role-specific training should focus on the strengths and limitations of AI, using real-world scenarios to prepare clinicians for practical use[3][4].

The goal is clear: AI should complement clinical expertise, not replace it[1].

Compliance with Industry Standards

For AI in healthcare, aligning with regulations like HIPAA is essential to protect patient safety and data privacy. Developing an AI Transparency Policy is a key step. This policy should outline when AI is used, how patients are informed, consent requirements, privacy protections, and human oversight protocols. Securing board approval for the policy helps ensure the necessary resources are in place[3]. Updating Notices of Privacy Practices to describe how AI is used, while emphasizing clinician oversight, can further build patient trust[3].

A risk-based consent framework is another critical tool[3]. For lower-risk AI applications, general disclosure may suffice. However, high-risk, autonomous systems require explicit informed consent. Establishing an oversight committee to monitor AI adoption, ensure compliance, gather feedback, and provide ongoing training is equally important.

Research also shows that healthcare providers’ knowledge of and attitudes toward AI play a significant role in shaping a safety-focused culture: in one study, these factors accounted for 60% of the variance in patient safety culture[6]. When providers understand how AI enhances safety and efficiency, they are more likely to use it responsibly. Yet the same research found that only 39% of healthcare providers in Saudi Arabia feel confident using AI tools, underscoring the pressing need for better education and clearer standards[6].

Building AI Governance Frameworks in Healthcare

A governance framework is the backbone for deploying AI safely in healthcare, monitoring its performance, and assigning clear accountability for its outcomes. Without it, organizations face fragmented oversight, compliance gaps, and potential risks to patient safety. "Insufficient Governance of Artificial Intelligence in Healthcare" has been identified as the #2 Patient Safety Concern for 2025, yet as of 2023 only 16% of hospitals had system-wide AI governance policies in place [1].

To build an effective framework, healthcare organizations need clear accountability structures, risk-based classifications, and cybersecurity measures integrated at every level of oversight. Establishing these principles ensures a more structured and safety-focused approach to AI implementation.

Creating AI Governance Committees

AI governance committees play a central role in overseeing AI adoption, managing risks, and enforcing ethical practices. These committees should include representatives from key areas such as clinical leadership, IT, legal, compliance, risk management, and data privacy [3].

Their responsibilities include monitoring AI deployments, ensuring compliance with regulations like HIPAA, gathering feedback from frontline users, and keeping training programs up to date [3]. For high-risk AI systems that operate with minimal human intervention, having clear escalation protocols is essential. These protocols provide a defined process for addressing issues like data bias or system malfunctions [4].

To give the committee the authority and resources it needs, its role should be formalized through board endorsement. This step reinforces the importance of governance and helps cultivate a culture where safety comes first.

Risk-Based Classification of AI Systems

Once governance committees are in place, the next step is to classify AI systems based on their risk levels. A tiered framework, such as the AI Safety Levels (ASL) Framework, helps categorize systems by their autonomy and potential impact. Higher-risk systems require stricter oversight and human involvement [4].

| AI Safety Level | Use Case | Key Risks | Requirements |
|---|---|---|---|
| ASL 2–3 | Clinical documentation assistants | Misinterpreting tone or intent in notes | Clinician review of all generated notes; transparency about AI's role |
| ASL 3–4 | Predictive analytics and risk scoring | Misclassification, biased data, overreliance by staff | Clear documentation, explainable outputs, and mandatory human review before clinical decisions |
| ASL 4–5 | AI-powered consumer tools (e.g., chatbots) | Emotional dependency, misinterpreting AI as a real presence | Escalation protocols, user education, defined scope of use, and transparency about limitations |
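One way to make the table actionable is to treat its Requirements column as a pre-deployment checklist. The sketch below is a hypothetical illustration: the control names paraphrase the table, the level keys use plain hyphens, and the rule that a system may not go live until every control for its level is in place is an assumption, not part of the ASL framework itself.

```python
# Hypothetical control names paraphrasing the "Requirements" column of the table.
REQUIRED_CONTROLS: dict[str, frozenset[str]] = {
    "ASL 2-3": frozenset({"clinician_reviews_all_notes", "ai_role_disclosed"}),
    "ASL 3-4": frozenset({
        "clear_documentation",
        "explainable_outputs",
        "human_review_before_decision",
    }),
    "ASL 4-5": frozenset({
        "escalation_protocol",
        "user_education",
        "defined_scope_of_use",
        "limitations_disclosed",
    }),
}


def missing_controls(level: str, controls_in_place: set[str]) -> set[str]:
    """Return the required controls not yet in place for a system at `level`."""
    if level not in REQUIRED_CONTROLS:
        raise ValueError(f"unknown safety level: {level!r}")
    return set(REQUIRED_CONTROLS[level] - controls_in_place)


def ready_to_deploy(level: str, controls_in_place: set[str]) -> bool:
    """Assumed gate: a system may go live only when no required control is missing."""
    return not missing_controls(level, controls_in_place)


if __name__ == "__main__":
    gaps = missing_controls("ASL 3-4", {"clear_documentation", "explainable_outputs"})
    print(sorted(gaps))  # ['human_review_before_decision']
```

Returning the missing controls, rather than only a yes/no answer, gives a governance committee a concrete to-do list for each system under review.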

Routine AI tools, like radiologist-assisting software, typically require only general policy updates [3]. However, high-risk or autonomous systems demand more rigorous measures, including explicit informed consent and detailed discussions similar to those for invasive medical procedures [3]. This ensures that patients and clinicians understand the implications of these systems.

To be trustworthy, AI systems must also meet key standards: they should be explainable, unbiased, transparent, reproducible, and sustainable [8]. These qualities not only reduce errors but also build trust among both clinicians and patients.

Adding Cybersecurity to AI Governance

Cybersecurity is a critical component of AI governance, providing additional safeguards for patient data and ensuring compliance with healthcare regulations [9][10][11]. This includes protecting datasets from breaches, managing third-party risks, and addressing vulnerabilities introduced by AI vendors [9][12].

Tools like Censinet RiskOps™ can centralize AI policy, risk, and task management. By routing key assessment findings to the appropriate stakeholders - such as members of the AI governance committee - this platform ensures that risks are addressed efficiently. A real-time AI risk dashboard further integrates cybersecurity with governance, creating a seamless approach to managing risks.

When cybersecurity is embedded into AI governance, healthcare organizations can proactively identify and mitigate risks, ensuring that patient safety remains the top priority. This unified approach strengthens both trust and operational resilience in the use of AI.

Using Censinet RiskOps™ for Risk Management

Censinet RiskOps™ provides healthcare organizations with an effective way to manage risks, particularly in the realm of AI governance. With patient safety as a top priority, organizations need tools that not only address AI-related risks but also ensure compliance and operational efficiency. Censinet RiskOps™ delivers a centralized platform that combines risk assessments with compliance management, creating a streamlined system that enhances how risks are identified, evaluated, and mitigated - all while maintaining essential human oversight.

Healthcare organizations often struggle with challenges like tracking risks across their operations, evaluating vendor security, and maintaining continuous monitoring. Censinet RiskOps™ tackles these pain points with features designed to simplify and improve risk management.

Key Features of Censinet RiskOps™

One standout feature is Censinet AI™, which automates vendor assessments. It simplifies the process by handling security questionnaires, summarizing vendor evidence, detailing product integrations, and identifying fourth-party risks. The platform then compiles this information into concise risk summary reports, making it easier for teams to act on assessment findings.

The platform also includes a centralized risk dashboard that provides real-time visibility into potential threats. By aggregating data from multiple sources, this dashboard gives governance committees and risk teams a comprehensive view of emerging risks. Automated workflows ensure that findings are routed to the right stakeholders, helping organizations address critical issues quickly and efficiently.
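Routing findings to the right stakeholders can be sketched as a lookup from finding category to stakeholder groups. This is not Censinet's API: the categories, group names, severity scale, and the rule that critical findings always reach the AI governance committee are all assumptions for illustration, with the groups borrowed from the committee membership described earlier.

```python
from dataclasses import dataclass

# Hypothetical routing table: finding category -> stakeholder groups to notify.
ROUTES: dict[str, tuple[str, ...]] = {
    "vendor_security": ("it_security", "risk_management"),
    "data_privacy": ("data_privacy", "compliance"),
    "model_performance": ("ai_governance_committee", "clinical_leadership"),
    "regulatory": ("compliance", "legal"),
}
# Fallback so that a finding with an unrecognized category is never dropped.
DEFAULT_ROUTE: tuple[str, ...] = ("risk_management",)


@dataclass(frozen=True)
class Finding:
    title: str
    category: str
    severity: str  # assumed scale: "low", "medium", "high", "critical"


def route(finding: Finding) -> list[str]:
    """Return the stakeholder groups that should receive a finding."""
    recipients = list(ROUTES.get(finding.category, DEFAULT_ROUTE))
    # Assumed escalation rule: critical findings always reach the committee.
    if finding.severity == "critical" and "ai_governance_committee" not in recipients:
        recipients.append("ai_governance_committee")
    return recipients


if __name__ == "__main__":
    f = Finding("Vendor stores PHI unencrypted", "vendor_security", "critical")
    print(route(f))
```

The explicit fallback route reflects a design choice: an unclassified finding should land with someone accountable rather than disappear.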

Balancing Automation with Human Oversight

RiskOps™ strikes a thoughtful balance between automation and human judgment. While the platform automates repetitive tasks like data collection and initial analysis, human experts step in to make critical decisions. These experts review risks, determine appropriate mitigation strategies, and oversee escalation processes when needed. This combination ensures that automated efficiency is complemented by the nuanced understanding only humans can provide.

Enhancing Team Collaboration

By integrating automated insights with expert reviews, RiskOps™ fosters better collaboration across teams. Risk insights are routed to key stakeholders, such as governance committees, IT security teams, and compliance officers, ensuring everyone is on the same page. The platform’s centralized data source allows all teams to work from a unified set of information. For example, security issues flagged by clinical staff or vulnerabilities identified by IT can be addressed seamlessly. This approach not only breaks down silos but also promotes coordinated responses, building a culture of accountability and strong oversight throughout the organization.


Practical Strategies for Building AI Safety Culture

Creating a safety-first approach to AI in healthcare isn't just a lofty ideal - it requires deliberate, organization-wide action. Healthcare leaders must focus on equipping their teams with the right tools, fostering continuous oversight, and breaking down departmental silos. The strategies below provide actionable steps to integrate AI safety into everyday operations.

Training and Education for Clinicians

Clinicians need practical, role-specific training that focuses on how to use AI effectively, understand its limitations, and apply it in real-world situations. This type of training also reinforces the critical need for human oversight, as highlighted in governance frameworks. To make the process engaging and manageable for busy staff, consider using interactive workshops, simulations, or short video tutorials.

Another effective approach is designating "AI champions" within your team. These individuals receive advanced training and act as in-house experts, helping their colleagues navigate AI tools with confidence. By fostering peer-to-peer learning, this strategy accelerates adoption while maintaining the human connection necessary for effective and responsible AI use.

Regular AI Safety Audits and Escalation Protocols

AI systems aren’t static - they can drift in performance as clinical practices change or patient demographics shift. Alarmingly, only 61% of hospitals using predictive AI tools validated them on local data before deployment, and fewer than half tested for bias [13][15]. Regular audits are essential to catch performance issues before they affect patient care.

Rather than creating entirely new processes, embed AI governance into your existing quality assurance programs. Define clear thresholds for when tools need retraining or retirement, and set up intelligent alert systems to monitor critical metrics like unusual access patterns, documentation gaps, or repeated authentication failures. For instance, an alert for abnormal access patterns could trigger an immediate review. Integrating these audits with your AI risk management framework can enhance the system's overall reliability [14].
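The retrain/retire thresholds and alert rules described above can be sketched in a few lines of Python. Everything here is assumed for illustration: the metric is any higher-is-better score such as sensitivity, the 0.05 and 0.15 absolute-drop thresholds are placeholders a governance committee would set from local validation, and `RepeatedFailureAlert` is a hypothetical sliding-window rule for repeated login failures.

```python
from collections import deque


def audit_decision(baseline: float, current: float,
                   retrain_drop: float = 0.05, retire_drop: float = 0.15) -> str:
    """Compare a model's current score with its locally validated baseline.

    Assumes a higher-is-better metric (e.g. sensitivity) and absolute-drop
    thresholds; both defaults are placeholders, not recommendations.
    """
    if not 0 <= retrain_drop < retire_drop:
        raise ValueError("expected 0 <= retrain_drop < retire_drop")
    drop = baseline - current
    if drop >= retire_drop:
        return "retire"
    if drop >= retrain_drop:
        return "retrain"
    return "ok"


class RepeatedFailureAlert:
    """Flag a user after `limit` failed logins within `window_s` seconds.

    Assumes timestamps for each user arrive in non-decreasing order.
    """

    def __init__(self, limit: int = 5, window_s: float = 300.0) -> None:
        self.limit = limit
        self.window_s = window_s
        self._failures: dict[str, deque[float]] = {}

    def record_failure(self, user: str, ts: float) -> bool:
        """Record one failure and return True if the alert should fire."""
        window = self._failures.setdefault(user, deque())
        window.append(ts)
        # Drop failures that have fallen outside the sliding time window.
        while window and ts - window[0] > self.window_s:
            window.popleft()
        return len(window) >= self.limit


if __name__ == "__main__":
    print(audit_decision(baseline=0.90, current=0.80))  # retrain
    alert = RepeatedFailureAlert(limit=3, window_s=60)
    for t in (0, 10, 20):
        fired = alert.record_failure("user-17", t)
    print(fired)  # True
```

Tying the thresholds to a locally validated baseline, rather than to a vendor's published figures, follows the point above that many tools were never validated on local data.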

"AI is transforming health care faster than traditional evaluation frameworks can keep up. Our goal is to help health systems adopt AI responsibly, guided by pragmatic, risk-tiered evidence generation that ensures innovation truly improves care." - Sneha S. Jain, M.D., M.B.A., Volunteer Vice Chair for the American Heart Association AI Science Advisory writing group, Clinical Assistant Professor of Medicine in Cardiovascular Medicine at Stanford Health Care and the Director of the GUIDE-AI Lab [15]

Building Cross-Functional Collaboration

Successful AI governance relies on shared responsibility across IT, clinical, and administrative teams. Each group brings unique expertise: technical teams understand the systems, while clinicians bring insights into care delivery that technical staff might miss. To bridge this gap, establish high-level AI Governance Boards that include leaders from cybersecurity, data privacy, clinical operations, and strategic planning. These boards should meet regularly to oversee major AI-related decisions, while individual departments handle specific concerns like workflow adjustments, budgeting, and interoperability.

"To get value from AI, you need to tie insight to action. That requires collaboration across all teams - clinical, operational, and technical. It also requires listening to front-line users, because they're the ones who actually know what works and what doesn't." - Press Ganey [16]

Encourage collaboration from the start by involving clinicians in workflow design and usability testing. Create opportunities for knowledge sharing through internal AI forums, webinars, or informal "lunch and learn" sessions where teams can exchange experiences and insights. This kind of collaboration ensures that AI safety becomes an organization-wide, scalable practice [17].

Case Studies: AI Safety Culture in Action

The following examples highlight how AI safety culture can be practically applied, showcasing both the hurdles and opportunities that come with real-world implementation. These cases build on the principles of governance and risk management discussed earlier.

Centralizing Governance with Censinet RiskOps™

Healthcare organizations leveraging Censinet RiskOps™ have taken significant strides in centralizing AI risk management. By creating a unified command center for policies, assessments, and compliance, they’ve streamlined oversight processes. Human-guided automation plays a key role here, simplifying vendor evaluations and system reviews while maintaining critical human involvement. Findings are routed to the relevant governance stakeholders, so the right teams address the right risks at the right time and accountability stays clear. This model, likened to "air traffic control", enhances transparency and minimizes oversight gaps, reinforcing the importance of visibility and accountability in managing AI initiatives.

Enhancing Patient Safety with AI Risk Dashboards

AI risk dashboards provide real-time insights into system performance and potential biases, enabling swift action when issues arise. These dashboards monitor key metrics like performance drift, bias indicators, and compliance levels, allowing organizations to intervene before problems escalate. By integrating AI-related risks with traditional cybersecurity and operational concerns, these tools offer a comprehensive view that supports informed decision-making. Moreover, shared dashboards improve collaboration across departments, connecting clinical and IT data. This integration bridges the gap between technical execution and patient care, ensuring that patient safety remains the top priority throughout the AI lifecycle. The unified perspective also lays the groundwork for scalable and effective oversight.
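As a concrete example of a bias indicator such a dashboard might track, the sketch below compares sensitivity (true-positive rate) across patient subgroups and flags the model when the gap exceeds a threshold. The choice of metric, the 0.10 threshold, and the record format are assumptions; the article does not specify which indicators a dashboard should use.

```python
from collections import defaultdict
from collections.abc import Iterable

# Each record: (subgroup label, patient actually positive?, model predicted positive?)
Record = tuple[str, bool, bool]


def sensitivity_by_group(records: Iterable[Record]) -> dict[str, float]:
    """True-positive rate per subgroup; groups with no actual positives are skipped."""
    positives: dict[str, int] = defaultdict(int)
    true_positives: dict[str, int] = defaultdict(int)
    for group, actual, predicted in records:
        if actual:
            positives[group] += 1
            if predicted:
                true_positives[group] += 1
    return {g: true_positives[g] / positives[g] for g in positives}


def bias_flag(records: Iterable[Record], max_gap: float = 0.10) -> tuple[bool, dict[str, float]]:
    """Flag the model if subgroup sensitivities differ by more than `max_gap`."""
    sens = sensitivity_by_group(records)
    if len(sens) < 2:
        return False, sens  # nothing to compare
    gap = max(sens.values()) - min(sens.values())
    return gap > max_gap, sens


if __name__ == "__main__":
    sample = ([("A", True, True)] * 4
              + [("B", True, True)] * 2
              + [("B", True, False)] * 2)
    print(bias_flag(sample))  # (True, {'A': 1.0, 'B': 0.5})
```

Sensitivity is only one possible indicator; a real dashboard would likely track several metrics per subgroup, and small subgroups need enough cases before a gap is meaningful.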

Scaling AI Oversight in Healthcare

As healthcare organizations adopt more AI tools, scaling oversight processes becomes increasingly important. Recent industry data shows that 25% of organizations have already implemented generative AI solutions, presenting new challenges for governance [19]. Successful approaches combine automated workflows with targeted human review, allowing small teams to manage larger AI portfolios effectively. For instance, Censinet AI uses automation to handle routine tasks like evidence validation and policy drafting, reserving human expertise for complex risk evaluations. This approach ensures that oversight keeps pace with AI adoption, which is critical given that AI has been identified as the top hazard in ECRI's Top 10 Health Technology Hazards for 2025 Report [18]. By adopting these streamlined methods, healthcare organizations can maintain patient safety while unlocking AI’s potential to deliver up to $1 trillion in improvements across the industry [19].

Conclusion: Building a Safer Future for AI in Healthcare

Establishing a strong culture of AI safety in healthcare is crucial to protecting patients and ensuring the ethical use of technology. With medical errors ranking, by some estimates, as the third leading cause of death in the U.S. (behind heart disease and cancer) and affecting 1 in 4 hospitalized patients, the need for structured oversight of AI systems cannot be overstated [7]. This reality calls for decisive, strategic action.

The way forward lies in combining transparency, human oversight, and effective governance frameworks with tools that make safety manageable at scale. Dr. Patrick Tighe of University of Florida Health emphasizes this approach:

"AI is short for artificial intelligence, but I think more conventionally these days, we talk about it as augmented intelligence - keeping human beings at the center as much as possible" [20].

This perspective is central to creating AI solutions that complement, rather than replace, the clinical judgment, empathy, and intuition of healthcare professionals.

Platforms like Censinet RiskOps™ help put these frameworks into practice by streamlining AI risk management. They support accountability by directing key alerts to governance, risk, and compliance teams while automating routine tasks like evidence validation. This approach allows smaller teams to handle larger AI portfolios without compromising safety or oversight.

Maintaining AI safety also depends on continuous education, clear communication with patients, and collaboration across disciplines. One study found that healthcare providers’ knowledge of and attitudes toward AI account for 60% of the variance in patient safety culture outcomes [6]. This underscores the need for comprehensive training programs. Additionally, tiered transparency strategies should be implemented to keep patients informed about AI's role in their care, something most patients value [3].

The potential for AI in healthcare is immense. According to McKinsey, AI could contribute up to $1 trillion in healthcare improvements [19]. Realizing this potential requires immediate steps: forming governance committees, adopting risk-based AI classifications, and utilizing tools like Censinet RiskOps™ to build a unified oversight structure. These actions pave the way for safe, effective AI adoption that prioritizes patient well-being.

FAQs

What steps can healthcare organizations take to create a safety-first culture for AI?

Healthcare organizations can nurture a safety-first approach to AI by focusing on clear governance and accountability measures. This begins with establishing well-defined policies that outline safety standards and promote transparency with both staff and patients. Leadership plays a key role here - by demonstrating a strong commitment to safety, they build trust and encourage a culture of shared responsibility across all levels.

Another critical step is offering role-specific training to ensure teams understand how to manage AI responsibly. Combining this with human oversight in AI systems helps identify and address risks early on. Open communication channels also play a big part in mitigating potential issues. Lastly, leveraging trusted safety tools and frameworks ensures that AI systems meet ethical guidelines and compliance requirements, paving the way for safer healthcare outcomes.

How does human oversight help minimize AI risks in healthcare?

Human involvement plays a key role in reducing AI-related risks in healthcare. It brings qualities that machines simply can't replicate, like intuition, empathy, and the ability to make decisions in real time. Humans can pick up on subtle cues, contextual nuances, and situational factors that algorithms might overlook. This added layer of attention helps minimize mistakes and keeps patient safety at the forefront.

Beyond that, having humans in the loop ensures transparency, accountability, and ethical use of AI tools. By addressing the gaps in AI systems and making thoughtful adjustments, healthcare professionals build trust in AI-driven solutions. This approach not only reinforces compliance but also prioritizes the well-being of patients.

Why is it important to prioritize transparency when using AI in healthcare?

Transparency plays a crucial role in healthcare AI, as it helps build trust among patients, healthcare providers, and organizations. When it's clear how and when AI is applied in patient care, it promotes accountability and gives clinicians the confidence to make well-informed decisions while incorporating AI insights. This approach not only boosts safety but also reinforces ethical practices, reducing potential risks and contributing to better patient outcomes.