AI Safety Governance: Creating Frameworks That Actually Work

Post Summary

Artificial intelligence (AI) is reshaping healthcare, offering tools for faster diagnoses, patient monitoring, and operational efficiency. However, its rapid adoption has introduced risks like bias, data breaches, and performance degradation. Only 61% of hospitals that use predictive AI tools validate them on local data, and fewer than half test for bias. This lack of oversight can harm patients, especially in underserved communities.

To address these challenges, effective AI safety governance is crucial. A solid framework should include:

- Clear policies for AI use, testing, and monitoring.

- Cross-functional teams for oversight, including clinical, IT, and compliance experts.

- Risk assessments at every stage of AI deployment.

- Training programs to ensure staff understand AI limitations and responsibilities.

Tools like Censinet RiskOps™ can centralize risk management, automate assessments, and provide real-time monitoring to streamline governance efforts. With proper structures, healthcare organizations can balance innovation with patient safety and regulatory compliance.

Core Elements of AI Safety Governance in Healthcare

Effective AI governance in healthcare is about turning ethical principles into actionable policies that protect patients while allowing room for innovation. These policies act as safeguards, ensuring that advancements in AI align with patient safety. Regulatory frameworks then build on these principles, shaping how AI systems are managed in the healthcare sector.

Principles of AI Safety Governance

At the heart of AI governance in healthcare are several key principles that guide its safe and ethical use:

Patient safety and well-being must always take precedence. Before being deployed, healthcare AI systems need thorough testing, followed by regular monitoring to ensure they don’t cause harm. This includes preventing biased outcomes or a decline in performance over time [3].

Fairness and equity require that AI tools work for all patients, regardless of background. This means auditing training datasets, testing on diverse populations, and monitoring for disparities in outcomes. For instance, in March 2025, Kaiser Permanente introduced Abridge, an AI tool for clinical documentation. Before rolling it out widely, they conducted extensive quality checks, collected feedback from thousands of doctors, and ensured the system supported all patients, including non-English speakers. Their results were even published in a peer-reviewed journal [5].

Transparency and explainability are essential for trust. AI systems should provide clear explanations for their recommendations, allowing clinicians to cross-check with their own clinical expertise. This includes documenting confidence levels, addressing uncertainties, and obtaining patient consent when needed [3].

Accountability and human oversight ensure that someone is always responsible for AI-driven decisions. Healthcare organizations must define oversight roles, document decisions made with AI, and establish clear frameworks for liability [3].

Data privacy and security are critical to safeguarding sensitive patient information. This involves strict governance practices, such as consent management, de-identification of data, access controls, and maintaining audit trails.

US Regulatory Requirements for AI

AI in healthcare operates under an existing web of regulations, including medical device rules, clinical trial guidelines, GxP controls, HIPAA, and payer oversight [4]. The FDA oversees AI systems used for medical purposes - like diagnosing or treating diseases - under the Software as a Medical Device (SaMD) framework. This requires clinical validation, software testing, and robust cybersecurity measures [4]. As noted by MIT researchers Dan Huttenlocher, Asu Ozdaglar, and David Goldston:

"The first step in AI governance should be to ensure that current regulations must equally apply to AI. If human activity without the use of AI is regulated, then the use of AI should similarly be regulated" [1].

Under HIPAA and HITECH, organizations must handle protected health information with extreme care. Additionally, the NIST AI Risk Management Framework provides a structured approach for managing AI risks throughout its lifecycle, emphasizing the need for documentation, quality controls, and post-market monitoring for high-risk AI systems [4].

The importance of compliance was highlighted in August 2025, when the U.S. Department of Justice resolved a criminal case involving a healthcare insurer that misused AI to make improper payments for pharmacy referrals. This incident demonstrates the real-world consequences of failing to meet regulatory standards [1].

Identifying AI Risk Areas

The principles of governance help pinpoint high-impact risk areas in healthcare AI. These areas demand careful attention to ensure patient safety, equitable outcomes, and data security.

Cybersecurity vulnerabilities are a major concern. AI systems processing large amounts of patient data are tempting targets for cyberattacks, making strong security measures and constant monitoring essential.

Data privacy risks go beyond HIPAA requirements. AI systems could unintentionally expose sensitive patient information through their outputs or require data-sharing agreements that pose new privacy challenges. Clear policies on data usage, storage, and retention are crucial.

Algorithmic bias is another critical risk. Without proper validation, AI tools could worsen healthcare disparities. A survey found that only 61% of hospitals using predictive AI tools validated them on local data, and fewer than half conducted bias testing [2].

Clinical safety risks include issues like performance degradation, inaccurate recommendations, and failure to handle unique or rare cases. Regular validation, real-world monitoring, and incorporating feedback into risk assessments are necessary to address these challenges [4].

Treating AI as inherently high-risk ensures that safeguards - like bias checks, outcome monitoring, enhanced security, and human oversight - are built into the system from the start [4][7]. In fiscal year 2024, the Department of Health and Human Services (HHS) reported 271 active or planned AI use cases, with a 70% increase expected in FY 2025. This rapid growth underscores the urgent need for comprehensive risk management strategies [7]. Together, these elements create a solid foundation for AI safety governance in healthcare.

How to Build an AI Safety Governance Framework

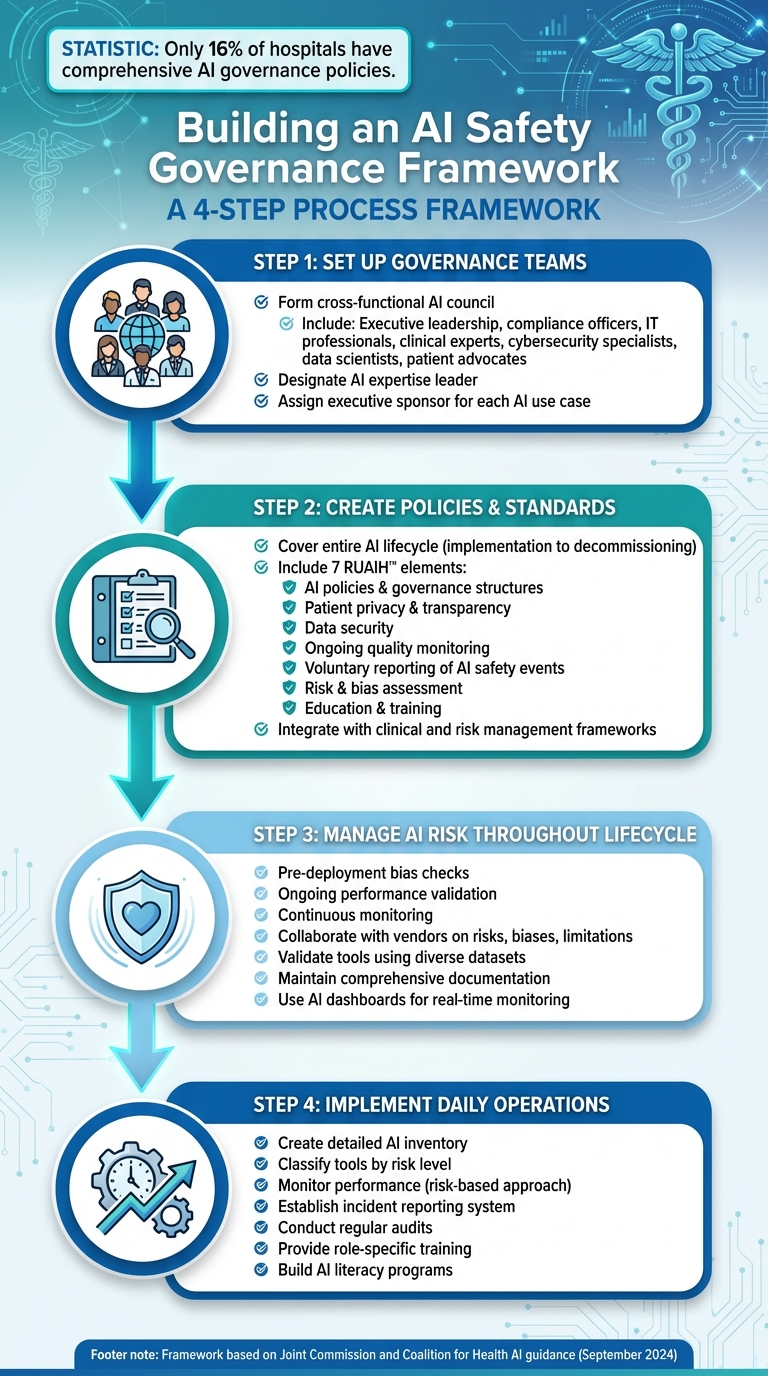

4-Step Framework for Building AI Safety Governance in Healthcare

Creating an AI safety governance framework involves aligning people, policies, and processes to ensure responsible and effective use of AI. By focusing on structured teams, clear policies, and risk management, organizations can operationalize AI safety with confidence.

Setting Up Governance Teams and Responsibilities

Start by forming a cross-functional AI council to regularly review projects and establish policies [8]. This team should bring together diverse expertise, including executive leadership, compliance officers, IT professionals, clinical experts, cybersecurity specialists, data scientists, and patient advocates [8]. The mix of perspectives ensures well-rounded decision-making.

Designate a leader with AI expertise to oversee the implementation and use of AI tools within the organization [8]. Additionally, assign an executive sponsor for each AI use case to ensure strategic alignment and accountability [1]. This centralized structure streamlines coordination and maintains clear accountability [6].

Creating Policies and Standards for AI Use

Policies should address every stage of the AI lifecycle, from implementation to decommissioning. In September 2025, the Joint Commission and the Coalition for Health AI introduced guidance titled "The Responsible Use of AI in Healthcare (RUAIH™)", which outlines seven essential elements: AI policies and governance structures, patient privacy and transparency, data security, ongoing quality monitoring, voluntary reporting of AI safety events, risk and bias assessment, and education and training [8]. This framework also supports a voluntary Joint Commission certification program for responsible AI use.

Procedures should integrate with existing clinical and risk management frameworks, covering areas such as AI approval, patient notification, consent, data protection, and confidential incident reporting [8]. These policies set the foundation for managing risks and ensuring ethical AI use.

Managing AI Risk Throughout the Lifecycle

To maintain safety, organizations must implement risk assessments at every stage of the AI lifecycle. This includes pre-deployment bias checks, ongoing performance validation, and continuous monitoring [3][8]. Collaborate with vendors to gather detailed information about known risks, biases, and limitations, and validate AI tools using diverse and representative datasets.
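As a rough illustration of what a pre-deployment bias check on local data might look like, the sketch below compares sensitivity (true-positive rate) across patient subgroups and flags a large gap for review. The function names, the sample records, and the 0.10 gap threshold are assumptions made for this example; they do not come from any cited framework, and a real validation would use larger samples and metrics chosen by the governance team.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return {group: true-positive rate} from (group, y_true, y_pred) tuples."""
    true_pos = defaultdict(int)
    actual_pos = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            actual_pos[group] += 1
            if y_pred == 1:
                true_pos[group] += 1
    # Only groups with at least one actual positive case appear in the result.
    return {g: true_pos[g] / n for g, n in actual_pos.items()}


def flag_disparity(rates, max_gap=0.10):
    """True when the best- and worst-served groups differ by more than max_gap.

    max_gap is an assumed, illustrative threshold, not a regulatory value.
    """
    return max(rates.values()) - min(rates.values()) > max_gap


# Hypothetical local validation sample: (subgroup, actual outcome, model prediction)
sample = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0),
    ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0), ("group_b", 1, 1),
    ("group_a", 0, 0), ("group_b", 0, 1),
]

rates = sensitivity_by_group(sample)
needs_review = flag_disparity(rates)
print(rates, needs_review)
```

In this made-up sample the tool catches three of four positive cases in one group but only two of four in the other, so the check flags it for review before deployment.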

Amy Winecoff and Miranda Bogen from the Center for Democracy & Technology emphasize the importance of documentation in AI oversight:

"Effective risk management and oversight of AI hinge on a critical, yet underappreciated tool: comprehensive documentation" [1].

Keep thorough records of validation processes, testing results, data quality evaluations, and performance outcomes. Use AI dashboards for real-time monitoring and establish feedback channels for vendors to address issues as they arise [8]. This level of documentation is crucial for audits and resolving performance problems.

Implementing AI Safety Governance in Daily Operations

Once a governance framework is in place, the next step is weaving it into daily operations. This means embedding oversight into existing workflows. For healthcare organizations, this involves practical tools to track AI systems, monitor their effectiveness, and clearly assign responsibilities to staff.

Creating an AI Inventory and Risk Classification System

Start by maintaining a detailed inventory of all AI tools in use within your organization [6]. For each tool, document key details: its purpose, the data it processes, expected outputs, associated costs, and a plan for monitoring its performance. Use a standardized format, such as a "Purpose and Request Form", to ensure consistency across the organization [1].

Classify tools based on the level of risk they pose. Many healthcare AI systems fall into the high-risk category, especially those used for critical medical decisions, and require close oversight [3]. Ask vendors to provide comprehensive information about the tool’s risks, biases, and limitations. Tools like CHAI's Applied Model Card can help track and monitor these factors [8]. Additionally, ensure that AI models are trained on representative datasets and undergo bias assessments to minimize disparities [8].

This inventory system not only supports ongoing performance reviews but also facilitates incident reporting when needed.
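To make the inventory idea concrete, here is a minimal sketch of one inventory record and a simple tiering rule. The field names mirror the details listed above (purpose, data processed, expected outputs, cost, monitoring plan), but the `AIToolRecord` class, the two boolean flags, and the three-tier rule are assumptions for illustration only. They are not a published standard, and each organization would define its own criteria.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    HIGH = "high"
    MODERATE = "moderate"
    LOW = "low"


@dataclass
class AIToolRecord:
    """One row in a hypothetical AI inventory."""
    name: str
    purpose: str
    data_processed: list[str]
    expected_outputs: str
    annual_cost_usd: float
    monitoring_plan: str
    informs_clinical_decisions: bool  # assumed classification input
    uses_patient_data: bool           # assumed classification input


def classify(tool: AIToolRecord) -> RiskTier:
    """Assumed rule: clinical decision support is high risk; other tools
    that touch patient data are moderate; everything else is low."""
    if tool.informs_clinical_decisions:
        return RiskTier.HIGH
    if tool.uses_patient_data:
        return RiskTier.MODERATE
    return RiskTier.LOW


# Hypothetical entries, not real products
inventory = [
    AIToolRecord("sepsis-alert", "Early sepsis warning", ["vitals", "labs"],
                 "Risk score per patient", 120000.0, "Monthly local validation",
                 informs_clinical_decisions=True, uses_patient_data=True),
    AIToolRecord("supply-forecast", "Forecast supply orders", ["purchase history"],
                 "Weekly order quantities", 15000.0, "Semiannual accuracy review",
                 informs_clinical_decisions=False, uses_patient_data=False),
]

tiers = {tool.name: classify(tool) for tool in inventory}
print(tiers)
```

Keeping the classification inputs on the record itself means a tool's tier can be recomputed whenever its use changes, rather than being set once and forgotten.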

Monitoring AI Performance and Reporting Incidents

Adopt a risk-based approach to monitoring. Tools involved in clinical decision-making should be evaluated more frequently than those handling administrative tasks [8]. Develop clear metrics and structured evaluations that cover every stage - from pre-deployment testing to post-deployment monitoring. Have a system in place for incident response, with defined processes for reporting harm and escalating issues. Regular audits should check for biases and security vulnerabilities, with all findings documented and addressed without delay.
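One way to picture risk-based monitoring is a review schedule keyed to risk tier, paired with a simple check for performance degradation. The sketch below is illustrative only: the review intervals, the 0.05 tolerance, and the function names are assumptions, and actual cadences and thresholds would be set by the governance team for each tool.

```python
from datetime import date, timedelta

# Assumed review cadence per risk tier, in days (illustrative values only)
REVIEW_INTERVAL_DAYS = {"high": 30, "moderate": 90, "low": 180}


def next_review(last_review: date, tier: str) -> date:
    """Date of the next scheduled evaluation for a tool in the given tier."""
    return last_review + timedelta(days=REVIEW_INTERVAL_DAYS[tier])


def should_escalate(baseline: float, current: float, tolerance: float = 0.05) -> bool:
    """Flag a tool when its tracked metric falls more than `tolerance`
    below the baseline recorded at validation time."""
    return (baseline - current) > tolerance


# A clinical decision-support tool is reviewed monthly under these assumptions,
# while an administrative tool is reviewed roughly twice a year.
clinical_due = next_review(date(2025, 1, 1), "high")
admin_due = next_review(date(2025, 1, 1), "low")

# Hypothetical accuracy readings compared against a validated baseline of 0.90
escalate_now = should_escalate(baseline=0.90, current=0.82)
within_tolerance = should_escalate(baseline=0.90, current=0.88)
print(clinical_due, admin_due, escalate_now, within_tolerance)
```

A flagged result would feed into the incident response process described above rather than trigger any automatic action, keeping a human reviewer in the loop.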

Effective monitoring also depends on having well-trained staff who are prepared to oversee AI tools.

Training Staff and Building Accountability

Currently, only 16% of hospitals have comprehensive AI governance policies, and fewer than half have tested predictive AI tools for bias [9][2]. This highlights the pressing need for robust training programs. Define and document role-specific training for all users of AI tools. Staff should understand the limitations of these tools and adhere to proper usage guidelines [8].

Lee H. Schwamm, M.D., a leader in digital health and AI governance, stresses the importance of responsible practices:

"Responsible AI use is not optional, it's essential. This guidance provides practical steps for health systems to evaluate and monitor AI tools, ensuring they improve patient outcomes and support equitable, high-quality care." [2]

To support this, implement AI literacy programs to familiarize staff with fundamental AI concepts, potential risks, and benefits [8]. Create a standardized glossary of terms and ensure staff know where to find information about AI tools and related policies. Training should emphasize that AI is a tool to enhance, not replace, clinical judgment, fostering collaboration between human expertise and AI systems [8][9].

Using Censinet RiskOps™ for Scalable AI Governance

Healthcare organizations can simplify their risk management efforts by using centralized platforms that streamline oversight and governance. Censinet RiskOps™ is designed to provide a unified view of risk management, keeping AI-related risks consistently monitored and aligned with governance standards. The platform offers specialized tools for managing inventories, automating assessments, and maintaining real-time oversight.

Centralized AI Risk Inventory Management

Censinet RiskOps™ takes the hassle out of managing risk inventories by consolidating all essential metrics into one platform. This approach ensures that governance teams can efficiently track and evaluate AI systems, staying aligned with established frameworks for effective oversight.

Streamlined Risk Assessments Through Automation

With its centralized inventory as a foundation, the platform simplifies risk assessments by automating routine processes. This automation allows teams to focus on deeper analysis and decision-making while still keeping human expertise at the core. The result? Faster, more efficient workflows without compromising on accuracy or judgment.

Real-Time Oversight with Dashboards and Workflows

Censinet RiskOps™ enhances collaboration across Governance, Risk, and Compliance teams through intuitive dashboards and workflows. These tools provide clear, real-time visibility into risk statuses and ensure that updates are timely and actionable. By integrating seamlessly into daily operations, the platform supports continuous oversight throughout the entire AI lifecycle, helping teams stay ahead of potential risks.

Conclusion: Next Steps for AI Safety Governance

Revisiting Core Governance Principles

Strong AI governance is essential to maintaining security, compliance, ethics, and trust. It's important to regularly revisit these principles to ensure that all AI systems consistently meet safety and regulatory standards. Recent regulatory developments emphasize the need for a solid governance foundation, which serves as the starting point for putting practical measures into action.

Steps to Begin Implementation

Start by forming a cross-functional AI governance team that includes experts in clinical care, IT, compliance, and risk management. Then, create a detailed inventory of all AI systems currently in use within your organization, and classify each system based on its risk level and its potential impact on clinical outcomes. This risk-based approach matches the American Heart Association's recommendation of November 10, 2025, that health systems adopt straightforward, risk-based guidelines for using AI in patient care [2]. Next, develop policies that clearly define AI use cases, approval processes, and monitoring practices, and provide regular training so that all staff understand their roles in maintaining AI safety. These steps align with the core governance principles and position your organization to manage AI responsibly while embracing new advancements.

Once your framework is in place, ensure it is well-documented and ready to adapt to future regulatory changes and emerging risks.

Preparing for the Future of AI Governance in Healthcare

With a governance framework established, it's vital to stay ahead of regulatory shifts and adapt as necessary. The regulatory landscape for AI is evolving quickly, with varying approaches across jurisdictions. Many healthcare organizations face a growing "foundational trust deficit" in AI, largely due to insufficient vetting and monitoring of AI tools after they are deployed [10]. This highlights the need for ongoing oversight. Tools like Censinet RiskOps™ can help by providing centralized visibility, automated assessments, and real-time monitoring throughout the AI lifecycle. As AI models evolve and regulations change, your governance framework must remain flexible and capable of evolving alongside the technology.

FAQs

What are the core principles of effective AI safety governance in healthcare?

Effective AI safety governance in healthcare hinges on a few key principles. To start, it demands collaboration between a broad range of experts - healthcare professionals, technology specialists, and policymakers - all working together to create a balanced and informed approach. At its core, governance should always prioritize patient safety and outcomes rather than focusing solely on technical benchmarks.

Equally important is the need for clear accountability structures within organizations. These structures, combined with scalable systems, ensure that healthcare providers can keep up with changing technologies and regulations. Transparency, fairness, and adherence to ethical standards are critical for building and maintaining trust. Additionally, ongoing monitoring and proactive oversight are vital to filling any gaps in regulatory frameworks. Ultimately, AI systems must be deployed in a way that aligns with existing laws and ethical guidelines, ensuring they contribute to safe, effective, and fair healthcare practices.

What steps can healthcare organizations take to prevent bias in AI tools?

To reduce bias in AI tools, healthcare organizations need to prioritize using diverse and inclusive training data. This means ensuring datasets cover a broad spectrum of demographics, including varied patient profiles, to better reflect the populations they serve.

In the development phase, incorporating bias detection and correction techniques is key. These methods can help spot and address disparities before they become ingrained in the system.

It's also critical to routinely test AI models across different patient groups and carefully monitor outcomes to identify any inequities. Regular performance evaluations should be conducted to maintain fairness, accuracy, and adherence to ethical guidelines. By embedding these practices into their workflows, healthcare providers can create AI systems that promote safety and fairness for all patients.

How does Censinet RiskOps™ support AI governance in healthcare?

Censinet RiskOps™ makes managing AI governance in healthcare easier by offering a comprehensive platform to handle risks, ensure compliance, and oversee the operation of AI systems. It helps healthcare organizations tackle key issues such as data privacy, cybersecurity risks, and ethical challenges, ensuring that AI technologies align with regulatory requirements and industry standards.

By simplifying risk assessments and compliance processes, Censinet RiskOps™ supports healthcare providers in deploying AI solutions securely and efficiently, building trust and confidence in essential operations.
