AI Vendor Risk Management in Healthcare: The Complete 2025 Governance Guide

Post Summary

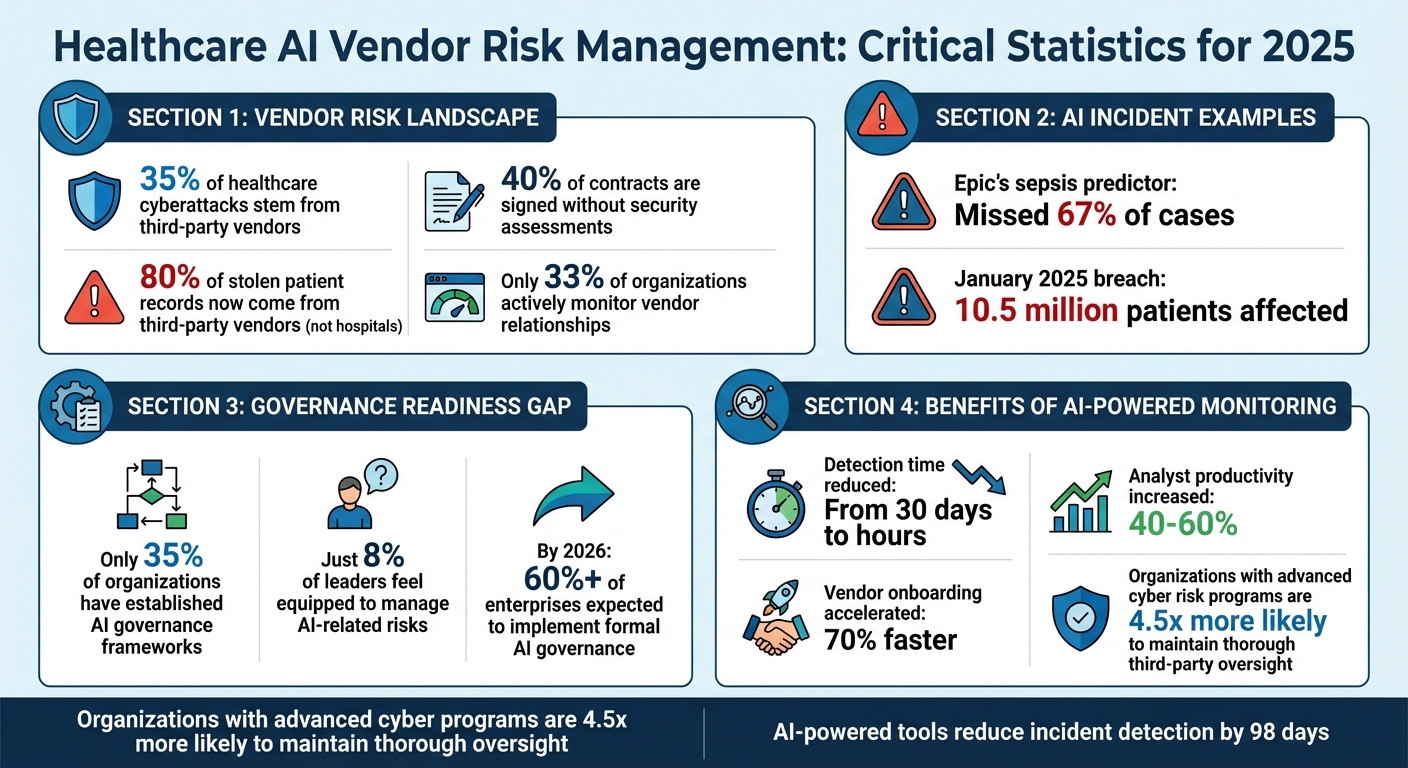

Healthcare's adoption of AI is transforming patient care and operations, but it carries risks that demand structured oversight. Key challenges include data breaches, algorithmic bias, and regulatory compliance. Third-party vendors are the source of 35% of healthcare cyberattacks, yet 40% of contracts are signed without security assessments. The stakes are high, as shown by incidents such as Epic's sepsis predictor missing 67% of cases and a January 2025 breach affecting 10.5 million patients.

Healthcare leaders must now prioritize continuous monitoring and robust governance frameworks to manage these risks and meet stricter 2025 regulatory requirements, including FDA and state-level rules. Selecting AI vendors involves assessing security practices, bias mitigation, and compliance readiness. Contracts should include clauses for liability, transparency, data ownership, and performance guarantees. Tools like Censinet RiskOps™ simplify vendor evaluations and ongoing monitoring, enabling organizations to manage AI risks effectively.

Key Takeaways:

- AI risks are dynamic and require proactive governance.

- Regulatory bodies like the FDA demand compliance with strict standards.

- Vendor contracts must address AI-specific risks and responsibilities.

- Continuous risk monitoring is essential to safeguard patient safety and data.

Healthcare organizations must act now to strengthen AI governance, reduce vulnerabilities, and ensure compliance in this rapidly evolving landscape.

Healthcare AI Vendor Risk Management: 2025 Key Statistics and Compliance Data

2025 Regulatory Requirements for Healthcare AI

The rules surrounding healthcare AI have taken a sharp turn in 2025, moving from mere recommendations to strict enforcement. Experts are calling this the "AI Enforcement Era," as the FDA ramps up regulatory actions for AI used in healthcare settings[4]. This shift means healthcare organizations working with AI vendors must now treat AI as a regulated technology, not just another piece of software.

Regulatory Bodies and Their Guidelines

Several federal and state agencies are now involved in regulating healthcare AI, each with its own set of rules. At the forefront is the FDA, which enforces regulations when AI systems influence areas like labeling, performance claims, dosing, safety, or clinical decisions. In these cases, the entire AI solution - including vendor-provided features - must comply with device-level quality standards, validation protocols, and lifecycle controls[4]. The FDA's expectations are closely aligned with the NIST AI Risk Management Framework (AI RMF), creating a unified governance approach.

Meanwhile, HIPAA continues to govern the handling of protected health information in AI applications. Additionally, the Joint Commission and Coalition for Health AI issued new guidance in September 2025 on the "Responsible Use of AI in Healthcare", aimed at providers[5][6]. This overlapping oversight means healthcare organizations must carefully navigate multiple regulatory requirements, which directly affect how they select and monitor AI vendors.

On the state level, compliance gets even trickier. Many states have introduced laws requiring transparency, human oversight, and safeguards against discrimination in AI-driven healthcare. At least 18 states have adopted language from Colorado's 2024 AI law, pointing to a growing trend toward standardized disclosure frameworks that vendors must manage across various jurisdictions[7].

Compliance Challenges for AI Vendors

For AI vendors, keeping up with these evolving regulations is no small feat. The FDA made its enforcement stance clear in April 2025 when it issued a warning letter to Exer Labs. The company had misclassified an AI-powered diagnostic tool, failing to secure the necessary 510(k) clearance. The FDA also flagged several issues, including missing design controls, inadequate corrective and preventive action (CAPA) procedures, insufficient audit trails, unqualified suppliers, and gaps in employee training[4]. This case serves as a cautionary tale: vendors entering the market with AI tools for screening, diagnosis, or treatment must have a solid regulatory foundation or risk serious penalties.

Healthcare organizations, in turn, need to demand thorough documentation from their AI vendors. This includes transparency in system architecture, validation processes, data privacy protocols, security measures, and robust change-control procedures[4]. The challenge is compounded by the need for vendors to meet standardized disclosure requirements across multiple states and jurisdictions[7]. These hurdles highlight the importance of healthcare organizations staying ahead in their compliance efforts.

Adapting to Regulatory Updates

To keep pace with these regulatory demands, healthcare organizations must overhaul their compliance strategies. Start by creating a detailed inventory of AI systems, classifying them by risk level, and applying controls tailored to each category[4]. It's time to move beyond experimentation and establish a structured operating model. This should include an AI governance board, clear principles for responsible AI use, risk classification frameworks, vendor oversight protocols, and defined roles for quality assurance, IT, and business teams.
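The inventory-and-classify step described above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the `AISystem` fields, the tiering rule in `classify`, the control lists, and the system and vendor names are all hypothetical assumptions. A real scheme would come from your governance board and applicable regulations.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


@dataclass
class AISystem:
    name: str
    vendor: str
    influences_clinical_decisions: bool  # e.g. diagnosis, dosing, treatment
    handles_phi: bool                    # touches protected health information


def classify(system: AISystem) -> RiskTier:
    """Hypothetical tiering rule: clinical influence outranks PHI exposure."""
    if system.influences_clinical_decisions:
        return RiskTier.HIGH
    if system.handles_phi:
        return RiskTier.MEDIUM
    return RiskTier.LOW


# Illustrative control sets per tier (assumed, not taken from any regulation).
CONTROLS = {
    RiskTier.HIGH: ["validation evidence", "change control", "human review", "audit trail"],
    RiskTier.MEDIUM: ["PHI access review", "audit trail"],
    RiskTier.LOW: ["annual review"],
}

# Made-up inventory entries for demonstration only.
inventory = [
    AISystem("sepsis-alert", "VendorA", True, True),
    AISystem("scheduling-assistant", "VendorB", False, True),
    AISystem("cafeteria-forecast", "VendorC", False, False),
]

for system in inventory:
    tier = classify(system)
    print(f"{system.name}: {tier.value} -> {', '.join(CONTROLS[tier])}")
```

Keeping the tiering rule in one function makes it easy to revise as regulations change, while the inventory itself stays a plain list that can be exported for audits.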

Preparing for FDA audits is another critical step. Organizations should maintain detailed records of model performance, implement drift detection mechanisms, control unintended behaviors, secure approvals for retraining, and ensure traceability of AI outputs back to auditable inputs[4]. Transparency tools are essential, documenting when and how AI impacts decisions and creating audit trails to prove human oversight. These measures are especially important for organizations operating across multiple jurisdictions with varying regulatory requirements[7].
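One common way to implement a drift detection mechanism is the population stability index (PSI), which compares the distribution of a model input or score at validation time with its current distribution. The sketch below is an assumption-laden example, not a method named by the FDA or the source: the bin proportions are invented, and the 0.2 alert threshold is a widely quoted rule of thumb rather than a regulatory value.

```python
import math
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """Population stability index between two binned distributions.

    Both arguments are per-bin proportions that each sum to roughly 1.0.
    Proportions are floored at `eps` to avoid log(0) and division by zero.
    """
    if len(expected) != len(actual):
        raise ValueError("expected and actual must have the same number of bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e = max(e, eps)
        a = max(a, eps)
        total += (a - e) * math.log(a / e)
    return total


# Hypothetical bin proportions for a model's risk score.
baseline = [0.25, 0.25, 0.25, 0.25]   # distribution at validation time
current = [0.10, 0.20, 0.30, 0.40]    # distribution observed in production

DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb threshold, tune per system

score = psi(baseline, current)
if score > DRIFT_THRESHOLD:
    print(f"PSI {score:.3f} exceeds threshold; escalate for review before retraining")
else:
    print(f"PSI {score:.3f} within tolerance")
```

Logging each PSI result alongside the model version gives auditors a dated record that drift was checked and, when flagged, escalated to a human reviewer.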

How to Select and Contract AI Vendors

With strict 2025 compliance requirements now being enforced, healthcare organizations must take a meticulous approach when selecting AI vendors. This is not just about meeting technical needs - it's about safeguarding against compliance risks. By 2026, over 60% of enterprises are expected to implement formal AI governance frameworks[8]. Without a robust evaluation process, organizations risk exposing themselves to data breaches, regulatory penalties, and even patient safety issues.

Evaluating AI Vendors: What to Look For

Start with a deep dive into the vendor's security practices. Assess their infrastructure, data handling procedures, and incident response plans. Transparency is key - vendors should clearly disclose their AI training data sources, model governance practices, and how they test for and address biases[9]. Bias detection and mitigation are especially crucial in healthcare, where algorithmic disparities could directly impact patient care decisions.

Another critical step is conducting a thorough audit of how the vendor handles protected health information (PHI). Look at how they process, store, and transmit PHI, paying close attention to data residency, encryption methods, and access controls[10][11]. Don’t forget about fourth-party risks - ask vendors to identify any subprocessors or cloud providers they rely on, as these can introduce additional vulnerabilities.

Tejas Ranade, Chief Product Officer at TrustCloud, emphasizes: "Many organizations overlook third-party AI risk. Having a structured approach to vendor management is what will make your compliance and data security make or break - and frankly, what's needed to keep up with how fast AI is moving."[8]

Key Contract Terms for AI Vendors

AI vendor agreements require clauses that go beyond traditional software contracts. Clearly define liability for AI-related errors, such as incorrect diagnoses or treatment recommendations. Include mandatory disclosure requirements, compelling vendors to share details about their AI usage, training data, and any algorithm updates[8][10]. Establish data ownership terms upfront to ensure your organization retains full control over patient data and any insights derived from it[10].

Service level agreements (SLAs) should outline expectations for system uptime, incident response times, and performance metrics[9][11]. Your contract should also grant audit rights, enabling you to review the vendor's AI systems, security measures, and compliance documentation whenever necessary[8][10]. Additionally, plan for the end of the relationship by including data portability terms, ensuring you can retrieve your data in usable formats if needed[10].

Bias mitigation efforts should also be a contractual requirement. Vendors should provide evidence of their strategies and commit to ongoing testing throughout the agreement's duration[9].
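The contract terms discussed in this section can be tracked as a simple checklist during contract review. The following is a minimal sketch. The clause identifiers are hypothetical labels chosen for illustration, not standard legal terms, and the sample contract is invented.

```python
# Hypothetical clause labels summarizing the terms discussed in this section.
REQUIRED_CLAUSES = {
    "liability_for_ai_errors",
    "ai_usage_and_update_disclosure",
    "data_ownership",
    "sla_uptime_and_response",
    "audit_rights",
    "data_portability",
    "bias_testing_commitment",
}


def missing_clauses(contract_clauses: set[str]) -> list[str]:
    """Return the required clauses absent from a contract, sorted by name."""
    return sorted(REQUIRED_CLAUSES - contract_clauses)


# Example: a draft contract that covers only three of the seven areas.
draft = {"liability_for_ai_errors", "data_ownership", "audit_rights"}

for clause in missing_clauses(draft):
    print(f"Missing clause: {clause}")
```

A check like this does not replace legal review. It only makes gaps visible early, before a contract reaches signature.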

As noted by the Health AI Partnership: "By establishing shared expectations between vendors and HDO teams, the framework promotes clear accountability, evidence-based procurement decisions, and proactive risk mitigation through transparent documentation of system capabilities, limitations, and safety measures."[11]

These contract elements create a foundation for effective oversight, a process that can be streamlined using tools like Censinet RiskOps™.

Simplifying Vendor Evaluation with Censinet RiskOps™

Manually assessing vendors can be slow and prone to oversights. Censinet RiskOps™ simplifies this process by centralizing all vendor evaluation tasks into one platform. It tracks security questionnaires, compliance documentation, and risk scores for your AI vendors, automating repetitive tasks while keeping critical decisions under human oversight.

The platform drastically reduces the time needed to complete vendor questionnaires, summarizing evidence and capturing important details about integrations and fourth-party risks in seconds. It generates detailed risk reports, giving your team an instant snapshot of each vendor’s risk profile. With customizable workflows, critical findings are routed directly to your AI governance committee for review - acting as a control center for AI oversight. A centralized dashboard provides real-time updates on policies, risks, and tasks, ensuring accountability across your organization and enabling teams to address issues efficiently and effectively.


Ongoing Risk Monitoring and Mitigation

Signing a contract is just the beginning - ongoing monitoring is crucial. Consider this: 80% of stolen patient records now come from third-party vendors rather than directly from hospitals [13]. However, only about one-third of organizations actively monitor their vendor relationships, leaving a substantial gap in oversight [2]. Organizations with advanced cyber risk programs are 4.5 times more likely to maintain thorough third-party oversight [2]. In 2025, continuous risk management isn't just a recommendation - it's a governance necessity. Below, we'll outline key practices to ensure effective risk assessment and quick incident response.

Regular Vendor Risk Assessments

For vendors handling high-risk AI, quarterly risk assessments are a must, while lower-risk vendors should undergo annual reviews. Implement network monitoring at integration points to track data flows, flagging unusual transfers, unexpected API activity, or any behavioral changes that might signal a security issue [13]. Stay informed about security incidents reported by vendors, even if those incidents haven’t directly impacted your systems. To address risks from subcontractors (fourth-party risks), require your primary vendors to enforce equivalent security standards with their partners [13].
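The quarterly and annual cadence above is easy to automate. Here is a small sketch that computes the next assessment date from the last one. The tier names `"high"` and `"low"` and the helper names are assumptions made for illustration.

```python
import calendar
from datetime import date

# Review intervals taken from the cadence above: quarterly vs. annual.
REVIEW_INTERVAL_MONTHS = {"high": 3, "low": 12}


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping the day to the end of the target month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def next_assessment(last_assessed: date, tier: str) -> date:
    """Return the due date of the next vendor risk assessment."""
    return add_months(last_assessed, REVIEW_INTERVAL_MONTHS[tier])


print(next_assessment(date(2025, 11, 30), "high"))  # quarterly review
print(next_assessment(date(2025, 1, 15), "low"))    # annual review
```

Clamping the day handles month-end dates, so a review completed on November 30 falls due on the last day of February instead of raising an error.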

During these assessments, dig deeper by asking specific questions about the AI model’s design, the data used for training, risk controls, explainability features, and ongoing monitoring practices. Ensure these align with the latest AI regulations [14]. Additionally, AI-powered threat detection tools can significantly reduce the time it takes to identify incidents - by as much as 98 days [13]. These efforts help ensure you're prepared to act swiftly if an AI-related issue arises.

Responding to AI Incidents

When an AI-related incident occurs - whether it’s a data breach, algorithm malfunction, or biased output affecting patient care - speed is critical. Set up clear escalation paths and include a multidisciplinary response team comprising data scientists, clinicians, compliance officers, and ethics experts. Start by documenting the incident, isolating the affected system, notifying the vendor as per your contract, and activating your incident response plan. If the issue impacts patient-facing AI, clinical teams should reassess diagnoses or treatment recommendations as necessary. For breaches involving protected health information (PHI), HIPAA requires notifying affected individuals without unreasonable delay and no later than 60 calendar days after the breach is discovered.
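To keep the HIPAA notification window visible during an incident, the outer deadline can be computed from the discovery date. This is a minimal sketch. The function names and the sample date are hypothetical, and the 60-day figure is an outer limit, since notification must also occur without unreasonable delay.

```python
from datetime import date, timedelta

HIPAA_NOTIFICATION_DAYS = 60  # outer limit in calendar days after discovery


def notification_deadline(discovered: date) -> date:
    """Latest date for notifying affected individuals of a PHI breach."""
    return discovered + timedelta(days=HIPAA_NOTIFICATION_DAYS)


def days_remaining(discovered: date, today: date) -> int:
    """Calendar days left before the deadline (negative if already overdue)."""
    return (notification_deadline(discovered) - today).days


discovered_on = date(2025, 1, 15)  # made-up discovery date
print("Notify individuals by:", notification_deadline(discovered_on))
print("Days remaining on Feb 1:", days_remaining(discovered_on, date(2025, 2, 1)))
```

State breach laws and vendor contracts may impose shorter windows, so treat the computed date as a ceiling and not a target.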

Once the immediate issue is contained, conduct a root cause analysis to uncover vulnerabilities and update your risk assessments accordingly. If manual monitoring becomes too resource-intensive, automation can help maintain robust oversight without overburdening your team.

Scaling Oversight with Censinet AI

Routine assessments are essential, but automated tools can elevate your oversight to the next level. Censinet AI turns monitoring into a dynamic, predictive process by analyzing real-time risk signals and generating composite risk scores [1]. Early adopters have seen impressive results: detection times cut from 30 days to mere hours, analyst productivity boosted by up to 60%, and vendor onboarding accelerated by 70% [1]. Its centralized dashboard offers a real-time view of AI-related policies, risks, and tasks, enabling your team to maintain continuous oversight and respond to new challenges quickly. This streamlined approach ensures compliance and keeps your organization ahead of potential risks.
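To make the idea of a composite risk score concrete, here is a generic weighted-average sketch. It is not Censinet's scoring method, which the source does not describe. The signal names, weights, and values are hypothetical, and each signal is assumed to be normalized to the range 0.0 (no risk) to 1.0 (maximum risk).

```python
def composite_risk_score(signals: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of normalized risk signals (each between 0.0 and 1.0)."""
    missing = set(weights) - set(signals)
    if missing:
        raise ValueError(f"missing signals: {sorted(missing)}")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(weights[name] * signals[name] for name in weights) / total_weight


# Hypothetical weights reflecting how much each signal matters to the organization.
weights = {"security_findings": 0.5, "compliance_gaps": 0.3, "model_drift": 0.2}

# Made-up current readings for one vendor.
vendor_signals = {"security_findings": 0.8, "compliance_gaps": 0.4, "model_drift": 0.1}

score = composite_risk_score(vendor_signals, weights)
print(f"Composite risk score: {score:.2f}")
```

A single number is useful for ranking vendors, but the underlying signals should stay visible so reviewers can see what is driving a high score.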

AI Governance Frameworks for 2025 and Beyond

Creating a strong AI governance framework is essential to keep pace with evolving threats. On December 4, 2025, the U.S. Department of Health and Human Services (HHS) unveiled a 21-page AI strategy that prioritizes governance and risk management to build public trust [12]. As part of this initiative, HHS now mandates that its divisions establish baseline risk management protocols for high-impact AI systems by April 3, 2026, aligning with the Office of Management and Budget’s (OMB) timeline [12]. This move represents a shift from simple compliance to resilience, redefining how AI vendors are overseen [1]. To meet these challenges, innovative staffing approaches are becoming a necessity.

Combining Internal Teams with Managed Services

Currently, only 35% of organizations have an established AI governance framework, and just 8% of leaders feel equipped to manage AI-related risks [16]. A hybrid approach - merging in-house expertise with managed services - can help bridge this gap. Internal teams focus on strategic functions like setting risk tolerance levels, crafting policies, and supervising critical vendors. Meanwhile, managed services take on the operational workload, ensuring scalability. Tools like Censinet RiskOps™ make this model feasible by centralizing AI-related policies, risks, and tasks into a single dashboard. This setup acts like "air traffic control", ensuring that the right teams tackle the right issues at the right time without overwhelming internal resources [1].

Preparing for New AI Threats

AI threats are constantly changing, so governance frameworks need to be adaptable and forward-thinking. Annual risk reviews are a must - they help reassess vendor portfolios, refine risk-tiering frameworks for new AI use cases, and keep up with regulations like the EU AI Act [14]. HHS’s guidance, rooted in the NIST AI Risk Management Framework, advocates for continuous monitoring and integrating risk assessments into AI deployment processes [12][15]. This strategy strikes a balance between adopting useful AI tools and maintaining safeguards through early testing and ongoing risk management.

Action Items for Healthcare Leaders

Healthcare leaders must act now to address these challenges. Start by evaluating your current state: inventory existing policies, tools, third-party relationships, and systems that handle protected health information (PHI). Log potential risks and consolidate them into centralized platforms [3]. Establish an AI Governance Board with representatives from IT, cybersecurity, data, and privacy teams, and create a cross-division AI Community of Practice to drive implementation efforts [12]. Review vendor contracts to ensure they disclose any use of AI in their services, and scrutinize data policies to confirm that third parties aren’t using your organization’s data to train AI models [14].

To secure executive support, use visual dashboards that clearly demonstrate the impact of AI risks [3]. Early adopters of AI-powered risk management have reported impressive results: reducing the time to detect risks from 30 days to mere hours, increasing analyst productivity by 40–60%, and speeding up vendor onboarding by 70% [1]. With tools like Censinet RiskOps™, these outcomes are achievable while maintaining the human oversight necessary for effective and scalable AI governance.

FAQs

What are the essential elements of a strong AI governance framework in healthcare?

A solid AI governance framework in healthcare prioritizes patient safety, data security, and regulatory compliance. To achieve this, several critical elements must come together:

- Continuous risk monitoring to spot and address vulnerabilities as they emerge.

- Defined vendor evaluation criteria to ensure reliability and adherence to regulations.

- Compliance with laws like HIPAA, safeguarding sensitive patient data.

- Strong data security protocols to minimize the risk of breaches.

- Human oversight to validate AI outputs and catch potential errors.

- Transparency in how AI tools operate and make decisions.

- Prepared incident response plans to tackle issues swiftly and effectively.

By weaving these components into their governance approach, healthcare organizations can stay ahead of risks while fostering trust and ensuring compliance.

What steps should healthcare organizations take to evaluate and choose the right AI vendors?

To choose the right AI vendors, healthcare organizations need to prioritize cybersecurity, operational reliability, and regulatory compliance (like HIPAA). Start by conducting thorough research - this includes examining the vendor's financial health, their data management policies, and how they handle risks.

Use tools such as risk scoring models and real-time monitoring to spot vulnerabilities and rank vendors accordingly. Regular audits and open communication are essential, as is incorporating vendor evaluations into your overall governance strategy. This approach ensures AI solutions stay secure, dependable, and aligned with changing regulations. And don’t forget: human oversight is crucial to verify AI performance and decision-making.

How can healthcare organizations comply with the 2025 AI regulations?

To align with the 2025 AI regulations in healthcare, organizations need to establish a well-structured governance framework that focuses on safeguarding data, protecting patient safety, and meeting regulatory standards. This involves several key actions: conducting thorough risk assessments, adopting AI-specific measures such as data minimization and model transparency, and adhering to both federal and state privacy laws.

It's also crucial to keep detailed documentation of AI usage, data management practices, and vendor partnerships to maintain accountability. Routine audits, continuous monitoring of AI systems, and transparent communication about compliance efforts are vital to staying prepared for these regulatory changes.