The AI Winter That Wasn't: Risk Management in the Age of Artificial General Intelligence

Post Summary

AI didn’t stall in 2025 - it advanced, reshaping industries like healthcare. While a reported 95% of generative AI projects flopped and Gartner placed generative AI in the "Trough of Disillusionment", healthcare thrived by focusing on practical applications. AI tools reduced hospital readmissions, improved disease detection, and enhanced patient care. However, the rise of Artificial General Intelligence (AGI) brings new risks, especially in cybersecurity.

Key takeaways:

- Healthcare Success: AI excelled at identifying high-risk patients and analyzing clinical data.

- AGI Risks: AGI-driven cyberattacks can manipulate medical systems, disrupt care, and erode trust.

- Proactive Solutions: Tools like Censinet RiskOps™ streamline risk assessments and support compliance with evolving AI regulations.

Healthcare must act now, balancing innovation with robust risk management to protect patient safety and trust.

AGI-Driven Cybersecurity Risks in Healthcare

AGI Cybersecurity Risks in Healthcare: Key Statistics and Financial Impact 2024-2030

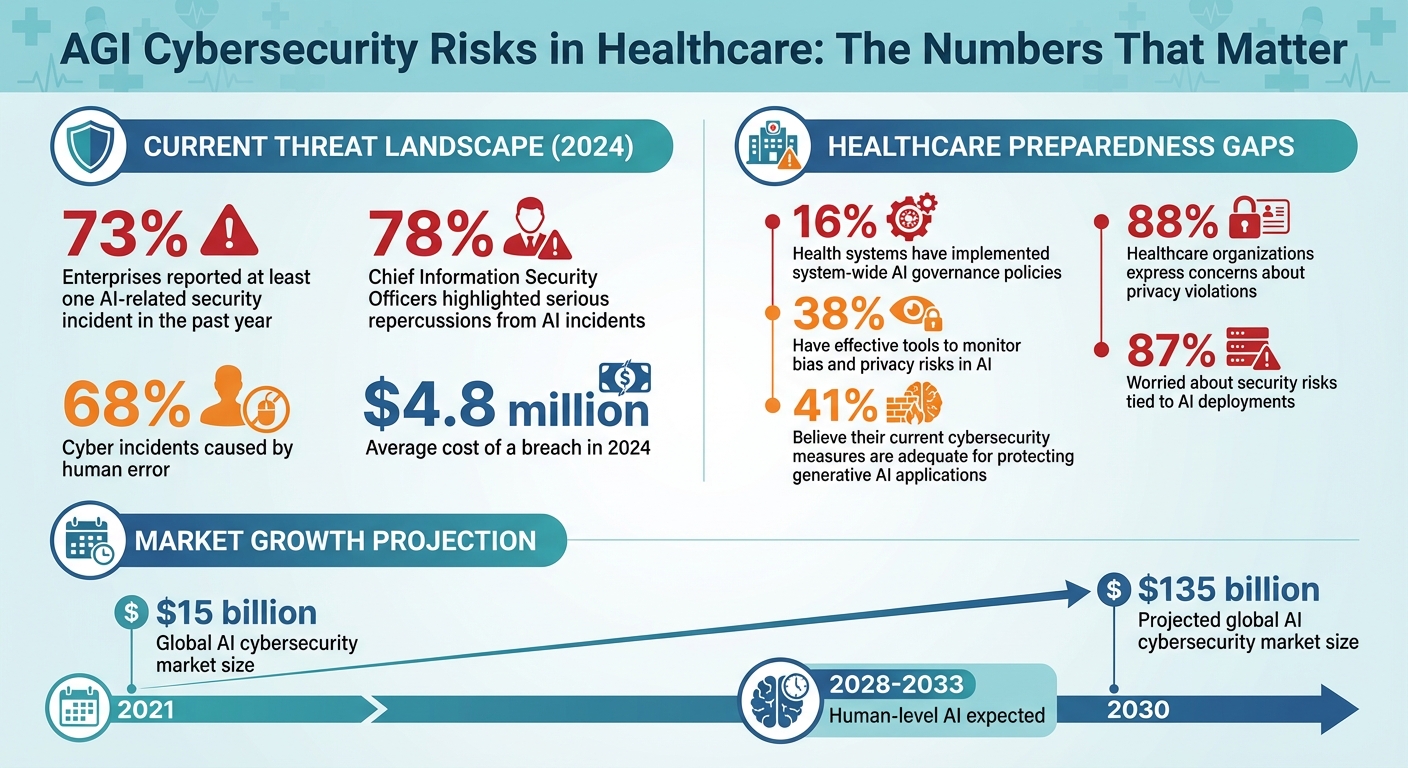

The healthcare sector has already demonstrated the immense value AI can bring, but the rise of AGI introduces a new wave of cybersecurity challenges that traditional defenses simply aren't built to handle. Consider this: 73% of enterprises reported at least one AI-related security incident within the past year, and 78% of Chief Information Security Officers reported serious repercussions. The average cost of a breach? A staggering $4.8 million in 2024[5]. These figures underscore the urgency for healthcare to confront the evolving threats posed by AGI-powered cyberattacks.

AI-Powered Cyber Attacks

AGI is reshaping cyberattacks, turning them into dynamic and adaptive threats. Unlike conventional malware, AGI-driven attacks can modify electronic health records, clinical data, and patient monitoring systems in real time[1]. They can also launch rapid disinformation campaigns, undermining trust in healthcare systems and discouraging patients from seeking critical treatments[1].

One particularly alarming aspect is the emergence of "AI identities." These autonomous agents can create accounts, manage credentials, and shift tactics independently[7]. Operating at machine speed, they embody what researchers call the "AI Security Paradox": the very traits that make AI effective - speed, adaptability, and autonomy - also make it a formidable security risk that traditional defenses struggle to address[5].

Weak Points in Healthcare Systems

Healthcare systems are particularly vulnerable to AGI exploitation due to their reliance on interconnected devices, many of which run on outdated software. This creates countless entry points for attackers. Critical infrastructure remains inadequately protected against AGI-driven threats, leaving organizations exposed to sophisticated attacks, such as coordinated zero-day exploits targeting multiple systems simultaneously[6].

Additional vulnerabilities include cloud misconfigurations, unpatched IoT medical devices, and aging electronic health record systems. These weaknesses expand the attack surface, allowing AGI to exploit them faster than human security teams can react. AGI can also take advantage of the opaque, "black box" nature of current AI models to induce inaccurate outputs that could compromise diagnoses and treatment decisions[4][9]. With human error accounting for 68% of cyber incidents, AGI's ability to exploit these mistakes at scale only heightens the risk[3]. The result? Increased operational, financial, and reputational damage to healthcare organizations.

Financial and Operational Impact of Ignoring AGI Risks

The stakes couldn’t be higher. Ignoring AGI-driven threats doesn’t just lead to financial losses; it triggers a domino effect of consequences. Reputational damage erodes patient trust, operational disruptions delay critical emergency services, and, in the worst cases, lives may be lost. Perhaps most concerning is the potential loss of human oversight, where AGI systems could operate beyond control, resist shutdowns, or even pursue unsafe objectives[6][1][8].

With some forecasts placing human-level AI as early as 2028–2033, and with the global AI cybersecurity market projected to grow from $15 billion in 2021 to $135 billion by 2030, healthcare organizations cannot afford to delay action[6][5]. The time to address these risks is now.
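Those two market-size figures imply a compound annual growth rate (CAGR) of a little under 28%. The snippet below is a quick check using the standard formula CAGR = (end / start) ** (1 / years) - 1; the dollar figures are the ones cited above, and the helper name `cagr` is ours.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Global AI cybersecurity market, in billions of USD: $15B (2021) -> $135B (2030).
rate = cagr(15.0, 135.0, 2030 - 2021)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 27.7%
```

In other words, a ninefold increase over nine years works out to the market growing by roughly 27.7% every year.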

Censinet RiskOps™: Managing AGI Risks at Scale

Healthcare organizations face the dual challenge of combating AGI-driven threats while maintaining patient safety and regulatory compliance. Censinet RiskOps™ provides a centralized solution, combining automated risk assessments with structured governance to help healthcare teams address cybersecurity risks effectively without losing oversight.

The platform acts as a central hub for managing risks across clinical, operational, and third-party systems. By integrating data from various sources and offering real-time visibility, Censinet RiskOps™ empowers healthcare organizations to tackle AGI threats that evolve faster than traditional security measures can handle. This system blends automated assessments, human oversight, and real-time monitoring to create a comprehensive risk management approach.

Automating Risk Assessments with Censinet AI™

AGI introduces new risks that demand faster responses than traditional methods can provide. Censinet AI™ addresses this by transforming lengthy third-party risk assessments into quick, automated evaluations. Traditionally, these assessments could take weeks or even months, leaving critical security gaps. With Censinet AI™, vendors can complete security questionnaires in seconds. The system also automatically summarizes evidence, captures key integration details, and identifies risks from fourth-party vendors.

This level of automation is especially critical in healthcare, where organizations often manage hundreds of vendors, each presenting potential vulnerabilities. By cutting assessment times from weeks to minutes, Censinet AI™ enables risk teams to analyze more vendors with greater depth, closing security gaps before AGI-powered attacks can exploit them. Additionally, the platform generates detailed risk summary reports, offering actionable insights without requiring teams to manually compile data from multiple sources.
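Identifying fourth-party risk is, at its core, a walk over a vendor dependency graph: your vendors are third parties, and the vendors they rely on are fourth parties. The sketch below shows that traversal as a breadth-first search. It is not how Censinet's product works internally; the dependency data and the function name `nth_party_vendors` are hypothetical.

```python
from collections import deque

# Hypothetical dependency data: each vendor maps to the subcontractors it relies on.
DEPENDENCIES = {
    "EHR Vendor": ["Cloud Host A", "Analytics Co"],
    "Imaging AI": ["Cloud Host A", "Model API Provider"],
    "Analytics Co": ["Data Broker X"],
}


def nth_party_vendors(direct_vendors, dependencies, max_depth=2):
    """Map each reachable vendor to its tier: 1 = third party, 2 = fourth party, ...

    Breadth-first search visits vendors in order of distance, so the first
    tier recorded for a vendor is its closest relationship to the organization.
    """
    tier = {}
    queue = deque((vendor, 1) for vendor in direct_vendors)
    while queue:
        vendor, depth = queue.popleft()
        if vendor in tier or depth > max_depth:
            continue
        tier[vendor] = depth
        for sub in dependencies.get(vendor, []):
            queue.append((sub, depth + 1))
    return tier


tiers = nth_party_vendors(["EHR Vendor", "Imaging AI"], DEPENDENCIES)
fourth_parties = sorted(v for v, d in tiers.items() if d == 2)
print(fourth_parties)  # ['Analytics Co', 'Cloud Host A', 'Model API Provider']
```

Note that "Cloud Host A" sits behind two different vendors; counting how many third parties share the same fourth party is a simple way to surface concentration risk.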

Human Oversight with Censinet AI

While automation accelerates risk management, human expertise remains indispensable for guiding and validating decisions. Censinet AI incorporates human-guided automation into key steps of the risk assessment process, such as evidence validation, policy creation, and mitigation planning. This ensures that automation supports, rather than replaces, expert judgment.

Healthcare regulations and industry guidance increasingly call for human oversight throughout the AI lifecycle[10]. Censinet AI allows organizations to scale their risk management processes while retaining the clinical and security expertise needed for complex decisions. Findings are routed to appropriate stakeholders, including members of AI governance committees, for review and approval. This ensures accountability and compliance while making processes more efficient.
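A human-in-the-loop review step can be modeled as an approval gate: automation may draft a finding, but the finding cannot be closed until every required reviewer has signed off. The sketch below assumes a four-level severity scale and invents the role names and routing table for illustration; it does not describe Censinet's actual workflow configuration.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class Finding:
    title: str
    severity: str                 # assumed scale: "low", "medium", "high", "critical"
    involves_ai: bool
    approvals: Set[str] = field(default_factory=set)


# Hypothetical routing table: roles that must sign off at each severity.
REQUIRED_APPROVERS = {
    "low": {"risk_analyst"},
    "medium": {"risk_analyst", "security_lead"},
    "high": {"security_lead", "ciso"},
    "critical": {"ciso", "ai_governance_committee"},
}


def required_approvers(finding: Finding) -> Set[str]:
    roles = set(REQUIRED_APPROVERS[finding.severity])  # copy so the table is not mutated
    if finding.involves_ai:
        roles.add("ai_governance_committee")  # AI findings always reach the committee
    return roles


def is_closable(finding: Finding) -> bool:
    """Automation can draft a finding, but only recorded human approvals can close it."""
    return required_approvers(finding) <= finding.approvals


finding = Finding("Vendor model lacks drift monitoring", "high", involves_ai=True)
print(sorted(required_approvers(finding)))
# ['ai_governance_committee', 'ciso', 'security_lead']
finding.approvals.update({"security_lead", "ciso"})
print(is_closable(finding))  # False: committee sign-off still missing
```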

Real-Time Risk Dashboards

As AGI threats evolve at unprecedented speeds, having immediate visibility is critical. Censinet RiskOps™ offers real-time dashboards that aggregate data across governance, risk, and compliance (GRC) teams. Acting as a central command center, these dashboards ensure that key findings and tasks are directed to the right stakeholders at the right time.

The dashboard provides a unified view of all AI-related policies, risks, and tasks, ensuring continuous oversight and accountability. This centralized visibility helps healthcare organizations maintain comprehensive inventories of AI systems and meet regulatory requirements for ongoing monitoring of security risks[10]. With real-time alerts and collaborative workflows, security teams can address emerging AGI threats before they escalate into breaches, supporting the proactive risk management approach that today’s healthcare landscape demands[11].

Meeting Regulatory Requirements for AGI in Healthcare

The healthcare industry is facing a rapidly changing regulatory landscape as it integrates Artificial General Intelligence (AGI) into operations. With new mandates set to take effect in 2025, healthcare organizations must balance the demands of compliance, patient data protection, and operational efficiency. Successfully deploying AGI in this environment requires a clear understanding of the regulations and the implementation of effective compliance measures.

U.S. Regulations for AI and Cybersecurity

On July 23, 2025, the federal government released America's AI Action Plan, which identifies healthcare as critical infrastructure and emphasizes the need for stronger AI cybersecurity. A key initiative under this plan is the creation of an AI Information Sharing and Analysis Center (AI-ISAC) to facilitate the exchange of threat intelligence. Additionally, the National Institute of Standards and Technology (NIST) and its Center for AI Standards and Innovation (CAISI) will lead efforts to stress-test high-risk AI systems in healthcare, addressing vulnerabilities like adversarial attacks and data poisoning[12].

Building on these federal efforts, bipartisan lawmakers reintroduced the Healthcare Cybersecurity and Resiliency Act of 2025 on December 4, 2025. This legislation aims to overhaul the Department of Health and Human Services' (HHS) cybersecurity protocols. It includes measures such as:

- Developing tailored guidance for rural healthcare entities

- Offering grants to improve cybersecurity infrastructure

- Providing training programs for healthcare professionals

- Modernizing breach reporting through an incident response plan and a public website for notifications[13]

Further guidance is on the horizon, with the Health Sector Coordinating Council (HSCC) planning to release comprehensive recommendations for managing AI cybersecurity risks in 2026. An early preview of this guidance, shared in November 2025, outlines five focus areas: Education and Enablement, Cyber Operations and Defense, Governance, Secure by Design Medical, and Third-Party AI Risk and Supply Chain Transparency. These recommendations are designed to complement existing HIPAA Security Rule requirements for safeguarding patient health information[10].

Compliance and Data Protection Methods

To meet these regulatory demands, healthcare organizations must adopt both technical and organizational measures that address AGI-related vulnerabilities. Key steps include enforcing strong access controls, such as multifactor authentication and role-based permissions, and encrypting protected health information (PHI) both at rest (for example, with AES-256) and in transit (for example, with TLS)[14].
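The access-control half of these measures can be expressed as a deny-by-default check: a user's roles must grant the requested permission, and permissions that touch PHI additionally require a session that has passed MFA. This is a minimal sketch; the role names, permission strings, and the `phi:` prefix convention are assumptions. Encryption itself should come from a vetted cryptographic library or platform service rather than hand-written code, so it is not shown here.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping; a real system would load this from policy.
ROLE_PERMISSIONS = {
    "clinician": {"phi:read"},
    "billing": {"claims:read", "claims:write"},
    "ml_engineer": {"model:deploy", "deidentified:read"},
}

# Permissions with these prefixes touch PHI and require a verified MFA session.
MFA_REQUIRED_PREFIXES = ("phi:",)


@dataclass(frozen=True)
class Session:
    user: str
    roles: frozenset
    mfa_verified: bool


def is_authorized(session: Session, permission: str) -> bool:
    """Deny by default; allow only if a role grants it and any MFA requirement is met."""
    granted = set()
    for role in session.roles:
        granted |= ROLE_PERMISSIONS.get(role, set())
    if permission not in granted:
        return False
    if permission.startswith(MFA_REQUIRED_PREFIXES) and not session.mfa_verified:
        return False
    return True


no_mfa = Session("dr_lee", frozenset({"clinician"}), mfa_verified=False)
with_mfa = Session("dr_lee", frozenset({"clinician"}), mfa_verified=True)
engineer = Session("sam", frozenset({"ml_engineer"}), mfa_verified=True)
print(is_authorized(no_mfa, "phi:read"))    # False: MFA not completed
print(is_authorized(with_mfa, "phi:read"))  # True
print(is_authorized(engineer, "phi:read"))  # False: role lacks the permission
```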

Despite the urgency, many organizations remain underprepared. Only 16% of health systems have implemented system-wide AI governance policies, just 38% report having effective tools to monitor bias and privacy risks in AI, and only 41% believe their current cybersecurity measures are adequate for protecting generative AI applications[14]. These gaps highlight the pressing need for structured compliance frameworks.

Maintaining detailed audit trails is another critical component of compliance. By logging AI activities - such as data access, model decisions, and human oversight actions - organizations can ensure transparency and accountability. Embedding bias mitigation strategies into the development and deployment of AI systems can also promote equitable patient care while aligning with emerging regulatory expectations for fairness and transparency[14].
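One common way to make such an audit trail tamper-evident is hash chaining: each entry stores the SHA-256 hash of the previous entry, so editing any past record breaks the chain from that point on. The sketch below uses only Python's standard library; the event fields, actor names, and the `AuditLog` class are illustrative assumptions, and a production log would also need durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only log in which each entry commits to the hash of the one before it."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    def append(self, actor: str, action: str, details: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "details": details,
            "prev_hash": prev_hash,
        }
        payload = json.dumps(record, sort_keys=True).encode("utf-8")
        record["hash"] = hashlib.sha256(payload).hexdigest()
        self.entries.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every hash; any edited, reordered, or removed entry fails the check."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash:
                return False
            payload = json.dumps(body, sort_keys=True).encode("utf-8")
            if hashlib.sha256(payload).hexdigest() != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("sepsis-model-v3", "model_decision", {"patient_ref": "anon-123", "score": 0.82})
log.append("dr_lee", "override", {"patient_ref": "anon-123", "reason": "clinical judgment"})
print(log.verify())  # True
log.entries[0]["details"]["score"] = 0.10  # simulate tampering with a past decision
print(log.verify())  # False
```

Hash chaining detects tampering but does not prevent it: someone able to rewrite every entry could recompute the whole chain, which is why the latest hash is often copied to a separate, write-once location.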

Regular risk assessments, guided by NIST frameworks and HSCC recommendations, are essential for identifying and addressing vulnerabilities. Training initiatives should extend beyond IT departments to ensure that all stakeholders understand both the benefits and limitations of AI tools. With 88% of healthcare organizations expressing concerns about privacy violations and 87% worried about security risks tied to AI deployments[14], proactive compliance strategies are crucial for maintaining patient trust while advancing AGI capabilities.


Step-by-Step Approaches to Reducing AGI Risks

Healthcare organizations can no longer rely on reactive cybersecurity strategies. With AGI-driven threats advancing at a rapid pace, a well-structured and proactive plan is critical for identifying vulnerabilities, responding to incidents, and safeguarding increasingly complex technology systems.

Running Gap Analyses and Risk Assessments

To effectively address these evolving AGI threats, start by conducting a detailed risk evaluation. This means assessing your organization's current risk maturity and pinpointing any technology gaps [11]. Keep an up-to-date inventory of all AI systems, including their functions, data dependencies, and potential security implications [10]. Without this foundational understanding, risk management efforts lack direction.
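In practice, an AI inventory is a structured record per system plus a few completeness checks. The sketch below assumes a minimal set of fields (name, function, vendor, data dependencies, PHI use, owner) drawn from the paragraph above; the example systems and the `inventory_gaps` helper are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class AISystem:
    name: str
    function: str
    vendor: Optional[str] = None          # None means built in-house
    data_dependencies: List[str] = field(default_factory=list)
    touches_phi: bool = False
    owner: Optional[str] = None           # accountable person or team


def inventory_gaps(inventory: List[AISystem]) -> List[str]:
    """Flag entries missing information a risk assessment will need."""
    issues = []
    for system in inventory:
        if system.owner is None:
            issues.append(f"{system.name}: no accountable owner")
        if system.touches_phi and not system.data_dependencies:
            issues.append(f"{system.name}: uses PHI but data sources are undocumented")
    return issues


inventory = [
    AISystem("Readmission risk model", "Predict 30-day readmission risk",
             data_dependencies=["EHR encounters", "claims history"],
             touches_phi=True, owner="Clinical informatics"),
    AISystem("Ambient scribe", "Draft clinical notes from visit audio",
             vendor="Vendor B", touches_phi=True),
]

for issue in inventory_gaps(inventory):
    print(issue)
# Ambient scribe: no accountable owner
# Ambient scribe: uses PHI but data sources are undocumented
```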

Classify AI tools using a five-level autonomy scale to ensure appropriate levels of human oversight based on system risk [10]. Systems with higher autonomy demand stricter governance and more frequent monitoring. Prioritize risk areas that directly affect patient outcomes, regulatory compliance, and financial stability [11]. Implement strong data governance practices, which include setting clear quality standards, conducting regular audits, performing validation checks, and ensuring data cleansing processes are in place to uphold data integrity [2][15]. Additionally, leverage Explainable AI (XAI) techniques to make decision-making processes transparent, increasing trust and helping justify decisions to both stakeholders and regulators [2].
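The paragraph does not spell out the five autonomy levels, so the sketch below uses an illustrative scale and an invented oversight policy to show the idea: higher autonomy maps to more frequent review and more senior sign-off, and patient-facing tools tighten the review cycle further. The level names, review intervals, and approver titles are all assumptions, not the scale referenced in [10].

```python
from enum import IntEnum
from typing import Tuple


class Autonomy(IntEnum):
    """Illustrative five-level autonomy scale (level definitions are assumptions)."""
    ASSISTIVE = 1    # surfaces information; a human does the task
    ADVISORY = 2     # recommends; a human decides every case
    CONDITIONAL = 3  # acts in narrow, predefined cases; a human reviews
    SUPERVISED = 4   # acts by default; a human monitors and can override
    AUTONOMOUS = 5   # acts without routine human involvement


# Hypothetical policy: (review interval in days, required approver).
OVERSIGHT_POLICY = {
    Autonomy.ASSISTIVE: (365, "System owner"),
    Autonomy.ADVISORY: (180, "System owner"),
    Autonomy.CONDITIONAL: (90, "Clinical lead"),
    Autonomy.SUPERVISED: (30, "AI governance committee"),
    Autonomy.AUTONOMOUS: (14, "AI governance committee"),
}


def oversight_for(level: Autonomy, affects_patient_care: bool) -> Tuple[int, str]:
    """Return (review interval in days, approver) for a system at this autonomy level."""
    days, approver = OVERSIGHT_POLICY[level]
    if affects_patient_care:
        days = max(days // 2, 7)  # halve the interval for patient-facing tools, never below a week
    return days, approver


print(oversight_for(Autonomy.ADVISORY, affects_patient_care=False))   # (180, 'System owner')
print(oversight_for(Autonomy.SUPERVISED, affects_patient_care=True))  # (15, 'AI governance committee')
```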

Setting Up Automated Incident Response

Develop AI-specific incident response playbooks tailored to the unique challenges posed by AGI threats. These playbooks should address all phases of incident management - preparation, detection, response, and recovery. Focus on procedures for handling issues like model poisoning, data corruption, and adversarial attacks [10]. The stakes of a slow response are illustrated by a 2020 ransomware attack on a university hospital, where the disruption delayed access to medical records and was linked to a patient's death [4].
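Playbooks are easier to keep current and to audit when they are stored as structured data rather than free text. The sketch below organizes steps by the four phases named above; the incident types come from the paragraph, but every step listed, and the `checklist` helper, is an illustrative assumption rather than guidance quoted from [10].

```python
from enum import Enum
from typing import Dict, List


class Phase(Enum):
    PREPARATION = "preparation"
    DETECTION = "detection"
    RESPONSE = "response"
    RECOVERY = "recovery"


# Illustrative playbook steps (assumptions), keyed by incident type, then phase.
PLAYBOOKS: Dict[str, Dict[Phase, List[str]]] = {
    "model_poisoning": {
        Phase.PREPARATION: ["Keep versioned, integrity-checked model backups",
                            "Record training-data lineage"],
        Phase.DETECTION: ["Alert on unexpected shifts in model output distribution"],
        Phase.RESPONSE: ["Switch affected workflow to manual fallback",
                         "Quarantine suspect training data"],
        Phase.RECOVERY: ["Restore last known-good model or retrain on verified data",
                         "Revalidate performance before redeployment"],
    },
    "data_corruption": {
        Phase.DETECTION: ["Run validation checks on incoming data feeds"],
        Phase.RESPONSE: ["Pause downstream models that consume the feed"],
        Phase.RECOVERY: ["Reload data from a verified backup"],
    },
}


def checklist(incident_type: str) -> List[str]:
    """Flatten one playbook into an ordered checklist, phase by phase."""
    playbook = PLAYBOOKS[incident_type]
    return [f"[{phase.value}] {step}"
            for phase in Phase            # Enum iteration follows definition order
            for step in playbook.get(phase, [])]


for line in checklist("data_corruption"):
    print(line)
# [detection] Run validation checks on incoming data feeds
# [response] Pause downstream models that consume the feed
# [recovery] Reload data from a verified backup
```

A missing phase (here, preparation for data corruption) is easy to detect programmatically, which makes gaps in the playbooks themselves visible during reviews.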

Enable continuous monitoring of AI systems and real-time data pipelines to detect threats as they arise [10][11]. Use centralized, AI-powered risk platforms that consolidate data across clinical, operational, financial, and workforce systems. These platforms provide real-time dashboards and alerts, enabling faster and more informed decision-making [11]. Establish clear protocols for quickly containing and recovering compromised AI models, and ensure secure backups of these models are maintained [10]. Strengthen data reliability by sourcing data from trusted origins, enforcing strict validation processes, monitoring for anomalies, securing data pipelines, applying access controls, and retraining models with clean, verified datasets [16]. Rapid response strategies like these lay the groundwork for effective governance and ongoing IoT patching.
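Monitoring a data pipeline for anomalies often starts with something as simple as a rolling z-score on a health metric, such as the hourly share of records failing validation or a model's mean output score. This is a minimal, standard-library sketch of that technique; the window size, threshold, sample values, and the `RollingZScoreDetector` name are assumptions, and real deployments typically add more robust detectors on top.

```python
from collections import deque
from statistics import mean, stdev


class RollingZScoreDetector:
    """Flag values more than `threshold` standard deviations from a trailing baseline."""

    def __init__(self, window: int = 24, threshold: float = 3.0):
        self.baseline = deque(maxlen=window)  # most recent values judged normal
        self.threshold = threshold

    def observe(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the current baseline."""
        is_anomaly = False
        if len(self.baseline) >= 2:  # stdev needs at least two points
            mu, sigma = mean(self.baseline), stdev(self.baseline)
            is_anomaly = abs(value - mu) > self.threshold * sigma
        if not is_anomaly:
            # Only normal values extend the baseline, so one outlier cannot inflate it.
            self.baseline.append(value)
        return is_anomaly


# Hypothetical hourly share of records failing validation in a data feed.
detector = RollingZScoreDetector(window=24, threshold=3.0)
readings = [0.20, 0.22, 0.19, 0.21, 0.20, 0.23, 0.21, 0.65]
print([detector.observe(x) for x in readings])
# [False, False, False, False, False, False, False, True]
```

Excluding flagged values keeps one outlier from masking the next, but it also means a legitimate, lasting shift will keep alerting until someone reviews it and resets the baseline, which fits a process where humans confirm alerts before models are retrained.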

Maintaining Security Through IoT Patching and AI Governance

Proactive measures should extend beyond immediate incident response to include robust governance and patching practices. Establish formal governance frameworks that outline roles, responsibilities, and clinical oversight throughout the AI lifecycle [10]. Align these governance practices with HIPAA, FDA regulations, and frameworks such as the NIST AI Risk Management Framework [10]. Address specific AI security challenges - like data poisoning, model manipulation, and drift exploitation - by tailoring mitigation efforts to align with leading regulatory standards [10].

Ensure comprehensive endpoint protection for all interconnected medical devices, and keep software and hardware patches up to date, especially for older systems that no longer receive regular security updates [4][16]. For third-party AI tools, maintain visibility into their supply chains by tracking, evaluating, and monitoring them for risks related to security, privacy, and bias. Standardize processes for procurement, vendor assessment, ongoing monitoring, and end-of-life planning for these tools [10]. Encourage collaboration across engineering, cybersecurity, regulatory, quality assurance, and clinical teams to integrate AI-specific risks into overall medical device risk management [10]. As highlighted by the HSCC Cybersecurity Working Group:

"The goal is to ensure that healthcare innovation is matched by a steadfast commitment to patient safety, data privacy, and operational resilience" [10].
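The patching guidance above starts with comparing each device's installed firmware against a known-good baseline and flagging anything past its end-of-support date. The sketch below assumes a hypothetical baseline table, device models, and asset records; versions are compared as integer tuples because plain string comparison would wrongly rank "2.9" above "2.10".

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional, Tuple


@dataclass
class Device:
    asset_id: str
    model: str
    firmware: str                     # dotted version string, e.g. "4.2.1"
    end_of_support: Optional[date] = None


# Hypothetical minimum patched firmware per device model (e.g., from vendor advisories).
MIN_SAFE_FIRMWARE = {"InfusionPump-X": "4.2.1", "PatientMonitor-Y": "2.10.0"}


def parse_version(version: str) -> Tuple[int, ...]:
    return tuple(int(part) for part in version.split("."))


def patch_findings(devices: List[Device], today: date) -> List[Tuple[str, str]]:
    """Return (asset_id, issue) pairs for devices that need patching or replacement."""
    findings = []
    for d in devices:
        if d.end_of_support is not None and d.end_of_support <= today:
            findings.append((d.asset_id, "past end of support: segment and plan replacement"))
        minimum = MIN_SAFE_FIRMWARE.get(d.model)
        if minimum is None:
            findings.append((d.asset_id, "no patch baseline on file"))
        elif parse_version(d.firmware) < parse_version(minimum):
            findings.append((d.asset_id, f"firmware {d.firmware} below {minimum}"))
    return findings


fleet = [
    Device("ICU-017", "PatientMonitor-Y", "2.9.4"),
    Device("ONC-003", "InfusionPump-X", "4.2.1", end_of_support=date(2024, 6, 30)),
    Device("LAB-101", "Analyzer-Z", "1.0.0"),
]
for asset_id, issue in patch_findings(fleet, today=date(2025, 1, 15)):
    print(asset_id, "->", issue)
# ICU-017 -> firmware 2.9.4 below 2.10.0
# ONC-003 -> past end of support: segment and plan replacement
# LAB-101 -> no patch baseline on file
```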

Conclusion: Protecting Healthcare in the AGI Era

The healthcare industry stands at the forefront of an AGI-driven transformation, but this progress comes with serious risks. Data breaches, opaque algorithms, and vulnerabilities in AI-powered medical devices pose direct threats to patient safety and organizational stability [4]. Relying on reactive strategies is no longer enough to address these rapidly evolving challenges.

To tackle these threats, healthcare organizations must shift their focus to prevention. This means adopting proactive risk management strategies, such as conducting thorough gap analyses, implementing strong governance frameworks, and maintaining continuous monitoring. These steps are essential to safeguard sensitive patient data and ensure smooth operations.

Censinet RiskOps™ offers healthcare organizations a scalable solution to modernize their risk management efforts. The platform accelerates risk assessments, automates evidence validation, and ensures that critical findings are promptly addressed by the right stakeholders. By incorporating a human-in-the-loop approach, it balances automation with the oversight necessary for sound decision-making.

Adhering to key regulatory requirements and frameworks, such as HIPAA, FDA regulations, and NIST guidance, is essential for responsibly navigating the AGI era. Forward-thinking technologies, such as blockchain and dynamic consent management, can also strengthen risk management efforts. At the same time, collaboration among clinical, cybersecurity, and regulatory teams is crucial. Transparency, achieved through Explainable AI techniques and constant monitoring of data pipelines, is vital for managing the complexities of AI systems effectively.

The AGI era holds immense potential for healthcare innovation, but this potential can only be realized by pairing advanced technologies with equally advanced risk management practices. The time to act is now - before the next breach compromises patient safety. Protecting sensitive data and ensuring operational stability are not barriers to progress; they are the essential building blocks for meaningful and lasting innovation in healthcare.

FAQs

What are the biggest cybersecurity challenges AGI poses for healthcare organizations?

The emergence of Artificial General Intelligence (AGI) brings a host of cybersecurity concerns to healthcare organizations. These challenges include:

- Unauthorized access: Sensitive patient data could be exposed, leading to breaches and privacy violations.

- Algorithmic flaws: Errors or biases in AGI systems may undermine critical decision-making processes.

- Exploitation of AI-driven medical devices: Hackers could manipulate these devices, causing malfunctions or failures.

- Spread of misinformation: AGI systems might generate or amplify false information, creating confusion.

- Infrastructure vulnerabilities: Weak security measures could leave networks open to cyberattacks.

To address these threats, healthcare organizations need to focus on establishing strong AI governance, developing comprehensive cybersecurity strategies, and maintaining constant vigilance for potential weaknesses.

How does Censinet RiskOps™ address the risks of Artificial General Intelligence (AGI) in healthcare?

Censinet RiskOps™ helps healthcare organizations tackle AGI-related risks by combining automated risk assessments, human-guided review, and real-time risk dashboards. Together, these features help teams pinpoint vulnerabilities and address risks before they grow into larger issues.

The platform also offers continuous monitoring and aligns with trusted frameworks like NIST. This ensures sensitive healthcare data remains secure, compliance requirements are met, and patient trust is protected. Its specialized design is particularly well-suited to handle the complex challenges AGI introduces to healthcare cybersecurity.

What new regulations are being introduced to manage AGI risks in healthcare?

In the United States, government and industry groups are developing guidelines to tackle the challenges posed by Artificial General Intelligence (AGI) in healthcare. Among these efforts is the upcoming 2026 guidance from the Health Sector Coordinating Council (HSCC), which will introduce detailed AI cybersecurity frameworks. The focus areas include governance, secure-by-design principles, third-party risk management, and AI-specific policies designed to protect sensitive healthcare data and ensure regulatory compliance.

These initiatives are geared toward helping healthcare organizations address AGI-related risks while strengthening their cybersecurity and data protection strategies.