Behavioral Analytics Revolution: How AI Detects Insider Threats

Post Summary

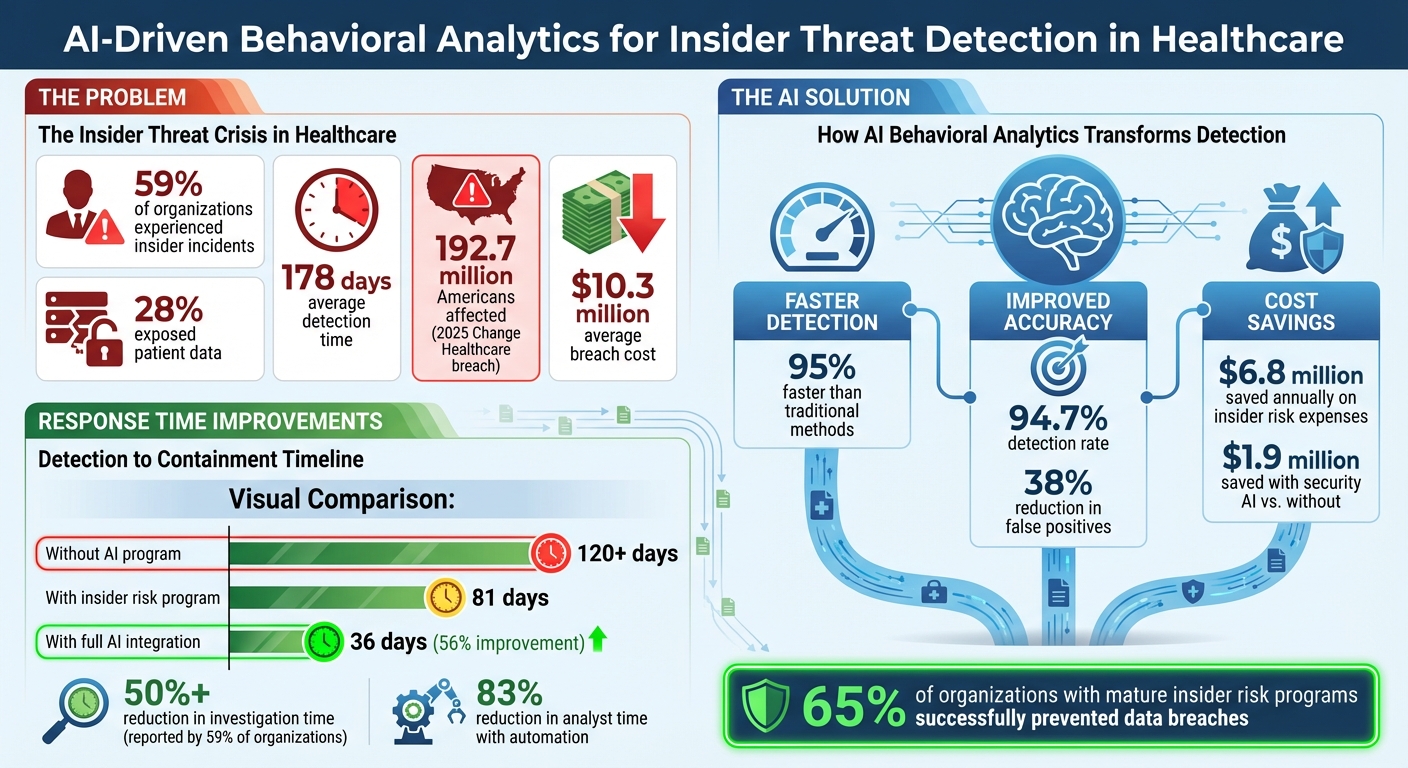

Insider threats in healthcare are a growing problem: 59% of organizations reported insider-related incidents in the past year, and 28% of those incidents exposed patient data. These threats take an average of 178 days to detect, leading to costly consequences like the 2024 Change Healthcare breach, which affected 192.7 million Americans.

AI-driven behavioral analytics offers a solution by monitoring user activity, identifying unusual patterns, and reducing detection time. By analyzing data like system logs and access patterns, AI establishes baselines for normal behavior and flags deviations. Tools like Censinet RiskOps™ and Censinet AI™ enable healthcare organizations to monitor threats in real time, automate responses, and improve detection accuracy.

Key benefits include:

- Faster detection: AI identifies threats 95% faster than older methods.

- Improved accuracy: False positives fall by 38%, and average detection accuracy reaches 94.7%.

- Cost savings: Organizations save an average of $6.8 million annually on insider risk expenses.

AI-driven tools are reshaping cybersecurity, equipping healthcare organizations to address insider threats more effectively while safeguarding sensitive patient data.

AI-Driven Behavioral Analytics Impact on Healthcare Insider Threat Detection

How Behavioral Analytics Works in Healthcare Cybersecurity

Behavioral analytics systems in healthcare cybersecurity analyze a wide range of data, including user activity logs, access patterns, system logs, network traffic, authentication events, file system changes, process executions, and cloud API calls [8]. This data is used to establish what constitutes "normal" user behavior, which is critical for identifying unusual or potentially harmful actions within the system.

"Behavioral analytics in cybersecurity refers to the process of collecting and analyzing data about how users, applications, systems, and devices behave across a network. It creates a baseline of what is considered 'normal' and continuously monitors for deviations that may indicate malicious intent or insider threats."

– Seceon [6]

Creating Baselines for Normal User Behavior

To define normal behavior, these systems analyze historical data to understand typical patterns for specific roles. For example, a nurse might routinely access patient records during shift hours, while a billing administrator typically works during standard business hours and interacts with financial systems. These patterns help the system establish baselines for expected behavior [2].

Organizations often implement a learning phase lasting two to four weeks, during which the system passively observes activity without generating alerts [8]. This period allows the AI to gather enough data to identify regular patterns before active monitoring begins. Advanced machine learning methods, such as Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks, are employed to analyze sequential data. These algorithms help the system recognize behavioral trends and changes over time, reducing false positives and enhancing monitoring accuracy [9].
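As a rough illustration of the learning phase described above, the sketch below summarizes historical access events per role. The event shape (`role`, `hour_of_day`), the function name `build_role_baselines`, and the use of mean and standard deviation as the summary are all assumptions made for this example, not details of any particular product.

```python
from collections import defaultdict
from statistics import mean, pstdev


def build_role_baselines(events):
    """Summarize typical access hours per role from historical events.

    `events` is an iterable of (role, hour_of_day) tuples. Both the data
    shape and the mean/std-dev summary are illustrative assumptions.
    """
    hours_by_role = defaultdict(list)
    for role, hour in events:
        hours_by_role[role].append(hour)

    return {
        role: {
            "mean_hour": mean(hours),
            "std_hour": pstdev(hours),  # population std-dev; 0.0 for one sample
            "n": len(hours),
        }
        for role, hours in hours_by_role.items()
    }


# Hypothetical observations collected while alerts are still switched off.
history = [
    ("nurse", 7), ("nurse", 8), ("nurse", 9), ("nurse", 8),
    ("billing_admin", 10), ("billing_admin", 11), ("billing_admin", 12),
]
baselines = build_role_baselines(history)
```

A plain average of clock hours breaks down for night shifts that wrap past midnight, so a production system would need a circular or histogram-based summary instead.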

How AI Algorithms Detect Anomalies

Once baselines are established, AI algorithms continuously monitor for deviations that could signal insider threats. By tracking activities like logins, file access, system usage, and network traffic, these systems can identify behaviors that deviate from the norm. For instance, if an employee begins downloading large volumes of data at odd hours, the system flags this as suspicious [2].
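The download example above can be sketched with a simple z-score test, one of the most basic statistical ways to measure deviation from a baseline. The threshold of 3.0, the 07:00 to 19:00 working window, and the function names are assumptions chosen for illustration; real systems typically combine many more signals.

```python
from statistics import mean, stdev


def zscore(value, history):
    """Number of sample standard deviations `value` sits from the mean.

    `history` needs at least two data points for `stdev` to be defined.
    """
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return 0.0 if value == mu else float("inf")
    return (value - mu) / sigma


def is_suspicious(download_mb, hour, history_mb,
                  usual_hours=range(7, 19), threshold=3.0):
    """Flag a download that is both unusually large and outside usual hours.

    Requiring both conditions is an assumption made to mirror the example
    in the text; a real system would likely score them separately.
    """
    off_hours = hour not in usual_hours
    return off_hours and zscore(download_mb, history_mb) > threshold


# Hypothetical daily download volumes (MB) for one employee.
history_mb = [20, 25, 22, 18, 24, 21]
night_bulk_download = is_suspicious(500, hour=2, history_mb=history_mb)
night_small_download = is_suspicious(23, hour=2, history_mb=history_mb)
```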

Unlike traditional rule-based systems, AI can detect subtle anomalies that might otherwise go unnoticed. Unsupervised algorithms analyze unlabeled data to uncover hidden patterns [8]. Techniques like the Exponentially Weighted Moving Average (EWMA) are used to update baselines dynamically, ensuring they adapt to changes within the organization [9]. This combination of anomaly detection and adaptability enhances the system's ability to identify threats.
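To make the EWMA idea concrete, the sketch below applies the standard update rule, new baseline = alpha × observation + (1 − alpha) × previous baseline. The smoothing factor `alpha` and the sample data are assumptions for illustration; the source does not say which values are used in practice.

```python
def ewma_update(baseline, observation, alpha=0.1):
    """Blend a new observation into the running baseline.

    A larger `alpha` makes the baseline adapt faster to recent behavior;
    a smaller one makes it more stable. The default is an assumption.
    """
    return alpha * observation + (1 - alpha) * baseline


def ewma_series(observations, alpha=0.1):
    """Return the baseline after each observation, seeded with the first."""
    baseline = observations[0]
    series = [baseline]
    for x in observations[1:]:
        baseline = ewma_update(baseline, x, alpha)
        series.append(baseline)
    return series


# Hypothetical daily counts of patient records viewed by one user.
daily_record_views = [40, 42, 38, 41, 120]
view_baselines = ewma_series(daily_record_views, alpha=0.2)
```

Because each update moves the baseline only part of the way toward the newest value, a single spike such as the final 120 nudges the baseline rather than replacing it, while a sustained change in workload gradually becomes the new normal.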

Context-Aware AI for Accurate Threat Detection

Context-aware AI takes threat detection a step further by incorporating additional factors into its analysis. These systems develop detailed behavioral profiles for each user, role, and department [2][5]. They consider variables such as time of day, location, device type, and specific job responsibilities. This contextual understanding minimizes false positives. For instance, a cardiologist accessing patient records during regular hours is routine, but the same action from an unknown device at an unusual time might signal a security risk. By merging behavioral patterns with contextual details, AI can more accurately assess the level of risk associated with any given activity.
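One way to picture context-aware scoring is a weighted checklist over the contextual factors listed above. The sketch below is a deliberately simple, hand-weighted version; the `AccessContext` fields, the point values, and the 07:00 to 19:00 shift window are hypothetical, and a real system would learn such weights rather than hard-code them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessContext:
    hour: int                # hour of day, 0-23
    device_known: bool       # device previously seen for this user
    location_usual: bool     # access from an expected location
    within_role_scope: bool  # data belongs to the user's job function


# Hypothetical point values; they sum to 100 so the score reads as a percentage.
POINTS = {
    "off_hours": 20,
    "unknown_device": 35,
    "unusual_location": 20,
    "out_of_scope": 25,
}


def risk_score(ctx, shift_hours=range(7, 19)):
    """Add up points for each contextual factor that looks unusual."""
    score = 0
    if ctx.hour not in shift_hours:
        score += POINTS["off_hours"]
    if not ctx.device_known:
        score += POINTS["unknown_device"]
    if not ctx.location_usual:
        score += POINTS["unusual_location"]
    if not ctx.within_role_scope:
        score += POINTS["out_of_scope"]
    return score


# A cardiologist during regular hours versus the same access at 3 a.m.
# from an unrecognized device.
routine = AccessContext(hour=10, device_known=True,
                        location_usual=True, within_role_scope=True)
unusual = AccessContext(hour=3, device_known=False,
                        location_usual=True, within_role_scope=True)
routine_score = risk_score(routine)
unusual_score = risk_score(unusual)
```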

Using Censinet RiskOps™ and Censinet AI™ for Insider Threat Detection

Censinet combines advanced behavioral analytics with practical cybersecurity tools to help healthcare organizations address insider threats effectively.

Healthcare systems require solutions that turn behavioral insights into actionable security measures. With Censinet RiskOps™ and Censinet AI™, security teams can monitor user activity, identify unusual patterns, and respond quickly to potential threats from within.

Real-Time Monitoring with Censinet RiskOps™

Censinet RiskOps™ offers continuous oversight of system usage, file access, login behaviors, and more. By creating behavior baselines for different roles and departments, it can flag anomalies - like odd access times or unexpected data downloads - that might indicate insider risks. This real-time monitoring gives security teams the ability to act as soon as issues arise.

Efficient Investigations with Censinet AI™

Censinet AI™ simplifies the investigation process by connecting the dots between anomalies and their context. By automating the analysis of unusual behaviors, it reduces the need for manual reviews, allowing security teams to evaluate potential threats more effectively and save valuable time.

Balancing AI with Human Expertise

While AI detects patterns and flags anomalies, human oversight remains essential. Censinet employs a "human-in-the-loop" approach, where professionals review AI findings before taking action. This ensures legitimate activities - like emergency responses or scheduled maintenance - aren't mistakenly flagged as threats, striking a balance between accuracy and operational efficiency.

Practical Strategies to Reduce Insider Risks

Customizing Behavior Models by Role

AI systems need to understand the unique responsibilities tied to different roles. For instance, a nurse accessing patient records operates differently from a billing administrator managing claims. By tailoring behavior models to specific roles, organizations can better monitor and flag unusual activity.

These role-specific profiles take into account factors like working hours, systems accessed, and frequency of interactions. For example, if an employee accesses records outside normal hours or views data from a different department, the system can flag it as unusual. Comparing an individual's behavior to their role-based peers further refines this process, reducing unnecessary alerts. Many modern platforms now provide behavior-based templates and tools that allow analysts to design detections suited to their organization's specific risks and job functions [3].
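Peer comparison can be sketched by measuring each user against the median of everyone else in the same role. The ratio threshold of 3.0, the function names, and the sample counts below are assumptions for illustration only.

```python
from statistics import median


def peer_deviation(user_count, peer_counts):
    """Return the user's activity as a multiple of the peer median."""
    peer_median = median(peer_counts)
    if peer_median == 0:
        return float("inf") if user_count > 0 else 1.0
    return user_count / peer_median


def flag_outliers(counts_by_user, ratio_threshold=3.0):
    """List users whose activity is far above that of their role peers."""
    flagged = []
    for user, count in counts_by_user.items():
        # Compare against everyone else in the role, excluding the user.
        peers = [c for u, c in counts_by_user.items() if u != user]
        if peers and peer_deviation(count, peers) >= ratio_threshold:
            flagged.append(user)
    return flagged


# Hypothetical daily record views for nurses on the same unit.
nurse_record_views = {"nurse_a": 35, "nurse_b": 40, "nurse_c": 38, "nurse_d": 190}
flagged_nurses = flag_outliers(nurse_record_views)
```

Using the median rather than the mean keeps one extreme user from dragging the peer reference point upward and hiding their own outlier status.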

This level of customization also makes it easier to automate responses to potential threats.

Automating Alert Workflows for Faster Response

When it comes to insider threats, speed is everything. Automated workflows ensure that critical events - like unauthorized access to sensitive health records or unexpected data downloads - trigger immediate actions. These might include locking a user’s account temporarily, notifying a security analyst, or blocking data transfers through email or cloud services.

By automating these processes, organizations can reduce response times dramatically. In fact, automated workflows can cut analysts' time by as much as 83%, thanks to instant triage, risk scoring, and enriched alerts. Platforms that integrate Security Orchestration, Automation, and Response (SOAR) tools with behavioral analytics can even create playbooks to isolate risky users and revoke their access instantly - no manual intervention required. This is particularly important in healthcare, where 43% of medical groups reported increasing their use of AI in 2023, with security automation being a top priority [2].
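A playbook of this kind can be thought of as a mapping from risk level to response actions. In the minimal sketch below, the thresholds (40, 70, 90) and the action labels are hypothetical; the strings stand in for whatever calls an organization's own SOAR tooling exposes and do not correspond to a real API.

```python
def choose_actions(risk_score, involves_patient_data):
    """Pick response actions for an alert based on a 0-100 risk score.

    Thresholds and action names are illustrative assumptions. The actions
    escalate: notify first, then restrict transfers, then lock the account.
    """
    actions = []
    if risk_score >= 40:
        actions.append("notify_security_analyst")
    if risk_score >= 70:
        actions.append("block_outbound_transfers")
    if risk_score >= 90 and involves_patient_data:
        actions.append("lock_account_temporarily")
    return actions


low = choose_actions(30, involves_patient_data=True)
medium = choose_actions(75, involves_patient_data=False)
critical = choose_actions(95, involves_patient_data=True)
```

Keeping the most disruptive step, the account lock, behind both a high score and a patient-data condition is one way to limit the impact of false positives on clinical staff.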

Such automation not only speeds up responses but also supports ongoing improvements to detection models.

Continuous Model Retraining for Better Accuracy

AI systems can only stay effective if they continuously learn and adapt. Retraining models regularly allows them to keep up with changes, such as employees moving to new roles, departments growing, or workflows evolving. Without this ongoing refinement, models risk becoming outdated, leading to more false positives and wasted time investigating routine activities.

As Zac Amos, Features Editor at ReHack, puts it:

"With machine learning, AI models become smarter after every interaction by fine-tuning their understanding of what is expected and reducing false positives."

The stakes are high, especially in healthcare, where 59% of organizations reported insider-related incidents in the past year, and 28% of those involved patient data exposure [4]. Regular retraining helps AI systems differentiate between legitimate changes - like a nurse moving from the emergency department to intensive care - and actual threats. This approach not only improves detection accuracy but also reduces alert fatigue, ensuring security teams can focus on real risks without being overwhelmed by false alarms.


Measuring Results and Scaling with Censinet AI

Key Metrics for Measuring Effectiveness

When it comes to evaluating the impact of AI-driven behavioral analytics, certain metrics stand out. Detection accuracy is a major indicator of success. By properly integrating behavioral analytics, organizations can achieve an average detection accuracy of 94.7%, while also cutting down on false positives by 38% compared to traditional approaches [9].

Another critical factor is response time. AI-powered systems can identify compromised accounts 95% faster than older methods [11]. Additionally, 59% of organizations report that automation slashes investigation time by 50% or more [10]. For organizations with structured insider risk programs, the average time from detection to containment is 81 days, a marked improvement over the 120+ days seen in organizations without such programs [10]. With full integration, this timeline can shrink even further - from 81 days down to just 36 days, representing a 56% improvement [10].
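The containment figures above can be checked with a short calculation. The helper name `percent_reduction` is introduced here purely for illustration.

```python
def percent_reduction(before, after):
    """Percentage decrease from `before` to `after`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100


# Structured program (81 days) versus full integration (36 days).
full_integration_gain = percent_reduction(81, 36)

# No program (taking 120 days as the lower bound) versus structured program.
structured_program_gain = percent_reduction(120, 81)
```

The first value rounds to the 56% quoted above. The second is about a third, and since the text gives 120 days as a minimum, that is a floor for the gain from having a structured program.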

Organizations that advance through a five-stage maturity model for behavioral risk analytics often see significant gains: a 43% improvement in time-to-resolution and an average annual cost reduction of $6.8 million in insider risk expenses. Moreover, 65% of these organizations report that their insider risk programs successfully prevented data breaches [10].

These metrics highlight the importance of scalable, effective solutions in the realm of healthcare cybersecurity.

Scaling Cybersecurity Efforts with Censinet

Given the complexity of healthcare environments, scaling cybersecurity measures is essential. Censinet RiskOps™ and Censinet AI™ are designed to expand seamlessly across departments and facilities while maintaining high performance. By utilizing real-time monitoring and automated analysis, the platform’s cloud-native architecture processes millions of events per second and petabytes of logs [8]. This is critical in an industry where the average hospital manages over 10,000 connected medical devices [1] and faces an average breach cost of $10.3 million [1].

A standout feature of the platform is its federated learning approach. This allows AI models to train across multiple hospital facilities or departments without transferring sensitive data - only model updates are shared centrally [7]. This method ensures privacy while enabling the system to continuously learn and improve across the organization. Organizations that rely heavily on security AI have saved an average of $1.9 million compared to those that don’t. Furthermore, AI-driven systems in high-risk environments have achieved a 98% threat detection rate and cut incident response time by 70% [7].
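To show how federated learning shares only model updates, the sketch below implements a sample-weighted average of per-site model weights, the core step of the widely used federated averaging scheme. The source does not say which aggregation method the platform uses, so this is an assumption, and the site data are made up.

```python
def federated_average(site_updates):
    """Combine per-site model weights into one central model.

    `site_updates` is a list of (weights, n_samples) pairs, where `weights`
    is a list of floats of equal length at every site. Only these numbers
    leave a site; the underlying patient records never do.
    """
    if not site_updates:
        raise ValueError("need at least one site update")
    total_samples = sum(n for _, n in site_updates)
    dim = len(site_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in site_updates) / total_samples
        for i in range(dim)
    ]


# Hypothetical updates from two hospital facilities; the larger site
# contributes proportionally more to the shared model.
updates = [
    ([1.0, 2.0], 100),   # facility A: weights trained on 100 events
    ([3.0, 4.0], 300),   # facility B: weights trained on 300 events
]
global_weights = federated_average(updates)
```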

Censinet AI™ also simplifies workflows by summarizing vendor evidence, documenting integration details, and generating concise risk summary reports. This allows healthcare organizations to address risks more efficiently while preserving the essential human oversight required for safe and scalable operations.

Conclusion: The Future of Insider Threat Detection in Healthcare

Insider threats remain one of the toughest challenges for healthcare organizations. Traditional security tools, which rely on predefined rules, often fall short when facing modern, sophisticated threats. This gap can lead to serious consequences, as threats frequently go undetected until significant damage has already occurred.

AI-powered analytics are changing the game. By offering real-time anomaly detection, continuous learning, and less need for manual intervention, AI is reshaping how threats are identified. In fact, 43% of medical groups reported expanding their use of AI in 2023, with security automation a top priority [2]. These advancements are not only improving detection capabilities but are also setting the stage for a more modern approach to cybersecurity in healthcare.

Solutions like Censinet RiskOps™ and Censinet AI™ provide healthcare organizations with tools for proactive monitoring and automated analysis. These systems continuously adapt to evolving threats, ensuring organizations stay ahead of potential risks.

The shift from reactive security measures to proactive, AI-driven strategies is no longer optional - it’s essential. With the high financial and operational stakes in healthcare, adopting AI-driven behavioral analytics offers the speed, precision, and scalability necessary to safeguard patient data and maintain seamless operations in an increasingly complex threat environment.

FAQs

How does AI-powered behavioral analytics help detect insider threats in healthcare?

AI-driven behavioral analytics is transforming how insider threats are detected in the healthcare sector. By constantly keeping an eye on user behavior, it identifies unusual patterns - like accessing sensitive data unexpectedly or straying from typical activity. Spotting these anomalies in real time helps stop potential security breaches before they can cause serious damage.

On top of that, AI streamlines incident analysis and prioritization. It cuts down on false alarms and ensures quicker, more precise responses. This forward-thinking method bolsters cybersecurity efforts, safeguarding sensitive healthcare information effectively.

How does context-aware AI help reduce false positives in insider threat detection?

Context-aware AI takes a smarter approach to threat detection by diving deep into the unique behavior patterns of users and systems within a given environment. Instead of treating every anomaly as a potential threat, it learns what "normal" activity looks like, making it easier to spot actual risks while ignoring harmless deviations.

This means fewer false alarms. Security teams can concentrate on addressing real threats instead of wasting time sifting through endless, irrelevant alerts. The outcome? A sharper, more efficient detection process that strengthens cybersecurity efforts where it matters most.

What financial advantages can healthcare organizations gain from using AI-powered security solutions?

Healthcare organizations stand to save millions by using AI-powered security tools to tackle insider threats. These advanced systems catch risks early, significantly lowering the chances of costly data breaches and avoiding hefty regulatory fines.

Beyond just cost savings, these tools streamline operations by automating the process of detecting and addressing threats. This means staff can focus their energy on other essential tasks. In the long run, this proactive strategy doesn't just safeguard sensitive patient information - it also boosts the organization's financial health and strengthens its reputation.