Beyond Human Comprehension: Managing Risks in Superhuman AI Systems

Post Summary

Superhuman AI in healthcare is transforming patient care with unmatched speed and accuracy. However, these systems come with serious risks, including cybersecurity threats, data privacy challenges, and opaque decision-making processes. Here's what you need to know:

- Cyber Threats: AI-driven cyberattacks, like ransomware and data poisoning, are becoming more advanced and harder to defend against.

- Data Privacy: Handling large volumes of sensitive patient data increases the risk of breaches and unintentional exposure of protected health information (PHI).

- Transparency Issues: Many AI systems function as "black boxes", making their decisions difficult to interpret and trust.

To manage these risks, healthcare organizations must adopt new risk assessment frameworks, align with regulations like HIPAA, and implement continuous monitoring. Tools like Censinet RiskOps™ offer AI-powered solutions to streamline risk management while maintaining human oversight. Balancing innovation with safety is key to ensuring these systems improve care without compromising security or trust.

Major Risks from Superhuman AI in Healthcare

Superhuman AI systems bring both promise and peril to healthcare, especially through their ability to analyze data at lightning speed and make autonomous decisions. While these capabilities can enhance efficiency, they also introduce vulnerabilities that are difficult to predict and defend against. From automated attacks to the challenges of understanding complex algorithms, these risks demand serious attention and effective strategies.

AI-Enhanced Cyber Attacks

Cybercriminals are now leveraging AI to launch more sophisticated and adaptive attacks. For example, AI-driven ransomware can modify its tactics in real time, using advanced encryption and tailoring ransom demands based on the victim’s profile. Phishing scams have also become more personalized and convincing, employing deepfakes and analyzing social media activity, communication patterns, and organizational structures to bypass traditional security measures.

Healthcare systems face a range of threats, including operational shutdowns, data breaches, theft of credentials, and fraudulent financial activities. What’s especially alarming is how AI has lowered the technical barrier for entry, enabling even inexperienced hackers to execute complex attacks that previously required advanced expertise.

Another emerging threat is data poisoning, where attackers subtly manipulate training datasets. This can lead to incorrect medical diagnoses, improper treatments, or even hidden backdoors in AI systems, posing a direct threat to patient safety [2][3][4][5].
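One partial defense against tampering with an already-approved training set is to fingerprint it and compare later snapshots against that trusted record. The sketch below is illustrative only: the function names and the in-memory `files` mapping are assumptions made for this example, and the check catches changes made after the trusted snapshot was taken, not poisoned records that were present from the start.

```python
import hashlib


def build_manifest(files: dict[str, bytes]) -> dict[str, str]:
    """Map each dataset file name to the SHA-256 digest of its contents."""
    return {name: hashlib.sha256(data).hexdigest() for name, data in files.items()}


def diff_manifest(trusted: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Report files that were added, removed, or modified since the trusted snapshot."""
    shared = trusted.keys() & current.keys()
    return {
        "added": sorted(current.keys() - trusted.keys()),
        "removed": sorted(trusted.keys() - current.keys()),
        "modified": sorted(name for name in shared if trusted[name] != current[name]),
    }


# Hypothetical example data: two label files, one of which is altered later.
approved = {"scans_a.csv": b"id,label\n1,benign\n", "scans_b.csv": b"id,label\n2,malignant\n"}
tampered = {"scans_a.csv": b"id,label\n1,malignant\n", "scans_b.csv": b"id,label\n2,malignant\n"}

trusted_manifest = build_manifest(approved)
report = diff_manifest(trusted_manifest, build_manifest(tampered))
```

Here `report["modified"]` lists `scans_a.csv`, which would be a signal to halt retraining until the change is explained.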

Protecting Patient Data and PHI

AI’s reliance on large volumes of patient data adds another layer of risk. Publicly accessible AI tools and queries may unintentionally expose sensitive patient information. For instance, when healthcare data is fed into these systems, there’s a chance that outputs could inadvertently reveal details about the training data, putting patient privacy at risk.

The sheer scale of data processed by superhuman AI systems amplifies the challenge. Even seemingly harmless queries could leak protected health information (PHI), compounding the difficulties of maintaining data security in an already vulnerable environment.

Lack of Transparency and Control

One of the most pressing concerns with advanced AI systems is their lack of transparency. Many operate as "black boxes", meaning their decision-making processes are hidden and difficult to interpret [1]. For clinicians, this creates a significant challenge: how can they trust or validate a treatment recommendation or a high-risk patient flag if they don’t understand the reasoning behind it? This lack of clarity erodes trust, complicates regulatory compliance, and makes it harder to assess risks effectively.

Superhuman AI systems also evolve with new data, making traditional one-time informed consent models outdated [1]. Additionally, these systems can produce misleading or inaccurate outputs, referred to as "hallucinations", which could lead to serious consequences in treatment decisions [1]. Errors stemming from algorithmic biases, system malfunctions, or human-AI interactions often fall outside the scope of conventional error classification models, leaving healthcare providers and regulators struggling to adapt [1].

Current oversight frameworks are simply not keeping pace with the rapid evolution and self-improving nature of these AI technologies, creating a regulatory gap that further exacerbates the risks [6].

Methods for Assessing Superhuman AI Risks

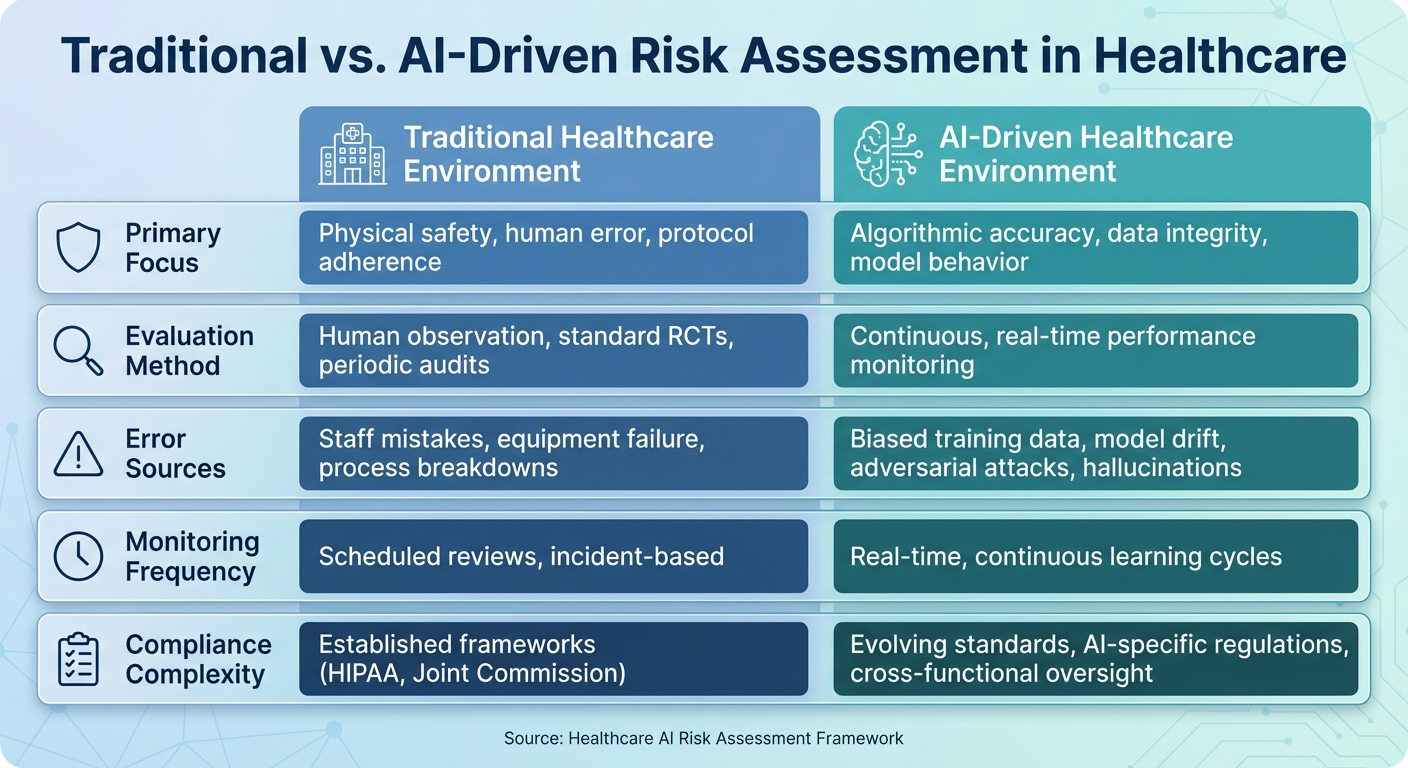

Traditional vs AI-Driven Healthcare Risk Assessment Comparison

Evaluating risks from superhuman AI systems calls for a different approach from traditional healthcare risk assessments. These systems add complexity, autonomy, and unpredictability that existing frameworks were not built to handle. The methods in this section range from numerical risk measurement to regulatory alignment.

Using Numbers to Measure AI Risks

To quantify AI risks, healthcare organizations can adapt methods from high-reliability industries like aerospace, chemical manufacturing, and nuclear power. The Probabilistic Risk Assessment (PRA) for AI framework is one such approach. It systematically identifies hazards and uses probabilistic techniques to estimate the likelihood and impact of AI-related incidents [7].

Key advancements in this area include breaking down AI systems into individual components for detailed hazard analysis and modeling risk pathways to map out potential cascading failures. For instance, a data poisoning attack could begin with corrupted training data, escalate to flawed diagnostic recommendations, and ultimately harm patients. By using prospective risk analysis, organizations can anticipate such scenarios before they occur. Additionally, uncertainty management helps account for the unpredictable behavior of self-learning systems [7].
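The risk-pathway idea can be made concrete with a small calculation. The following is a minimal sketch, not the PRA for AI framework itself: it assumes each stage of the pathway has a conditional probability given the previous stage, multiplies them to get the probability of the full chain, and scales by a severity score. Every number and name here is invented for illustration.

```python
from dataclasses import dataclass
from math import prod


@dataclass(frozen=True)
class PathwayStep:
    """One stage in a hypothetical risk pathway."""
    name: str
    probability: float  # conditional probability, given that the previous stage occurred


def pathway_probability(steps: list[PathwayStep]) -> float:
    """Probability that every stage in the chain occurs."""
    for step in steps:
        if not 0.0 <= step.probability <= 1.0:
            raise ValueError(f"{step.name}: probability must be in [0, 1]")
    return prod(step.probability for step in steps)


def expected_harm(steps: list[PathwayStep], severity: float) -> float:
    """Chain probability weighted by a severity score (units chosen by the assessor)."""
    return pathway_probability(steps) * severity


# Illustrative values only; real estimates would come from incident data and expert review.
poisoning_pathway = [
    PathwayStep("training data corrupted", 0.01),
    PathwayStep("corruption survives validation", 0.2),
    PathwayStep("flawed recommendation reaches a clinician", 0.5),
    PathwayStep("clinician acts on the recommendation", 0.1),
]

chain_probability = pathway_probability(poisoning_pathway)
risk_score = expected_harm(poisoning_pathway, severity=1000)
```

Laying the pathway out this way also shows where a control has the most effect: halving any single stage's probability halves the probability of the whole chain.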

HIPAA-Compliant AI Risk Assessments

AI risk evaluations in healthcare must align with HIPAA requirements, but they need to go beyond standard security protocols. Comprehensive AI integration guidelines should prioritize fairness, robustness, privacy, safety, transparency, explainability, accountability, and patient benefit [8]. To achieve this, a cross-functional team spanning informatics, legal, data analytics, privacy, patient experience, equity, quality, and safety should work together to develop and refine governance policies [8].

Specialized tools are also essential to address the unique challenges of AI applications. These tools should evaluate datasets used for training and validation, development methods, and how the system manages PHI. It's critical to ensure that AI systems never expose PHI during training or in their outputs. Such rigorous assessments are crucial for addressing the cybersecurity risks posed by advanced AI systems in healthcare.
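As a rough illustration of checking outputs for PHI, the sketch below scans model output for a few identifier patterns and redacts them. The patterns are assumptions chosen for the example and are deliberately incomplete; HIPAA's identifier categories are far broader, and pattern matching alone would not be sufficient for de-identification.

```python
import re

# Hypothetical, deliberately incomplete patterns for illustration only.
PHI_PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "phone": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
    "mrn": re.compile(r"\bMRN[:#]?\s*\d{6,10}\b", re.IGNORECASE),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def find_phi(text: str) -> dict[str, list[str]]:
    """Return the matches for each pattern that fires on the text."""
    hits: dict[str, list[str]] = {}
    for label, pattern in PHI_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            hits[label] = matches
    return hits


def redact(text: str) -> str:
    """Replace every match with a labeled placeholder."""
    for label, pattern in PHI_PATTERNS.items():
        text = pattern.sub(f"[REDACTED-{label.upper()}]", text)
    return text


sample_output = "Patient MRN: 12345678, SSN 123-45-6789"
findings = find_phi(sample_output)
safe_output = redact(sample_output)
```

A check like this would sit between the model and whatever displays or logs its output, with any hit routed to the privacy team rather than silently dropped.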

Comparing Traditional vs. AI-Driven Risk Scenarios

The differences between traditional and AI-driven risk assessments are stark. Traditional methods focus on physical safety, human error, and adherence to clinical protocols, relying heavily on human oversight. In contrast, AI systems introduce new risks, such as algorithmic bias, data quality issues, model inaccuracies, privacy breaches, and cybersecurity vulnerabilities. They also require an understanding of how AI interacts with human decision-making. These distinctions highlight why continuous, real-time assessments are essential for AI systems.

| Risk Category | Traditional Healthcare Environment | AI-Driven Healthcare Environment |
|---|---|---|
| Primary Focus | Physical safety, human error, protocol adherence | Algorithmic accuracy, data integrity, model behavior |
| Evaluation Method | Human observation, standard RCTs, periodic audits | Continuous, real-time performance monitoring |
| Error Sources | Staff mistakes, equipment failure, process breakdowns | Biased training data, model drift, adversarial attacks, hallucinations |
| Monitoring Frequency | Scheduled reviews, incident-based | Real-time, continuous learning cycles |
| Compliance Complexity | Established frameworks (HIPAA, Joint Commission) | Evolving standards, AI-specific regulations, cross-functional oversight |

Traditional approaches, such as randomized controlled trials, face challenges when applied to AI systems. Instead, AI assessment requires ongoing, real-world performance monitoring and continuous learning. This shift means healthcare organizations must develop new capabilities to track AI behavior over time, far beyond the initial implementation phase.
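One common way to track model behavior over time is to compare the distribution of recent model scores against a baseline captured at deployment. The sketch below uses the Population Stability Index (PSI), a technique chosen for this example rather than one named in the sources above. The bin count, the `eps` floor, and the 0.25 alert level are assumptions; 0.25 is a widely used rule of thumb, not a standard.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline sample and a recent sample."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("baseline sample has no spread")
    width = (hi - lo) / bins

    def fractions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the baseline range fall in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps to keep the logarithm finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Illustrative data: baseline scores spread over [0, 1), recent scores pushed upward.
baseline_scores = [i / 100 for i in range(100)]
recent_scores = [0.5 + i / 200 for i in range(100)]

drift = psi(baseline_scores, recent_scores)
needs_review = drift > 0.25  # assumed alert threshold
```

PSI is zero when the two samples fall into the bins identically and grows as they diverge, so it works as a simple trigger for human review rather than as a diagnosis of what changed.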

Managing Superhuman AI Risks with Censinet RiskOps

Censinet RiskOps™ builds on established risk assessment frameworks to address the unique challenges superhuman AI presents in healthcare. With the complexity of AI-driven cybersecurity risks, healthcare organizations need tools that can keep up. Censinet RiskOps™ is an AI-powered platform designed to minimize cyber risk by connecting a network of over 50,000 vendors and healthcare organizations [9]. As the first cloud-based risk exchange specifically for healthcare, it streamlines third-party risk management, setting the stage for efficient and precise AI risk evaluations.

Faster AI Risk Assessments with Censinet AI™

Censinet AI™ speeds up third-party risk assessments by harnessing automation. Vendors can complete security questionnaires in seconds, while automated tools handle documentation reviews and summarize risks, including those from fourth-party relationships. The platform’s Digital Risk Catalog™, which includes data on over 40,000 vendors and products, provides pre-assessed and risk-scored insights [10]. Additionally, Delta-Based Reassessments reduce the time needed for follow-up evaluations to less than a day [10]. This level of efficiency is crucial in healthcare, where quick action is often required to manage risks effectively.

Balancing Automation with Human Oversight

While automation accelerates processes, human oversight remains a critical component. Censinet AI™ integrates a human-in-the-loop approach, ensuring that automated systems enhance rather than replace decision-making. Risk teams maintain control through customizable rules and review protocols, creating a balance between speed and informed judgment. As one expert highlighted [12], AI should serve as a tool to augment human oversight, not eliminate it.

The platform functions as a centralized AI risk command center, directing key findings and tasks to the right stakeholders, including AI governance committees. A real-time AI risk dashboard consolidates data, policies, and tasks, enabling teams to focus on the most pressing issues. This ensures that every risk is addressed by the appropriate team at the right time.

Sharing Risk Intelligence Across Networks

Censinet Connect™ facilitates the sharing of risk intelligence across vendor networks. Vendors can distribute completed security questionnaires and related evidence to unlimited customers, even those outside their immediate network, eliminating the need for duplicate assessments [9][10]. This collaborative approach is especially beneficial for evaluating AI systems, as shared insights into similar technologies can provide valuable context.

Through its collaborative risk network, healthcare delivery organizations gain access to collective intelligence about AI-related risks and vulnerabilities. For example, if one organization identifies a specific risk pattern in an AI system, that information becomes accessible to others, enabling proactive responses to emerging threats. This shared approach helps healthcare organizations stay ahead in a rapidly changing AI landscape.


Implementing and Monitoring AI Risk Management

Building on earlier risk assessment strategies, organizations now need to put their AI risk management plans into action and maintain ongoing oversight. A proactive approach involves embedding secure-by-design principles into existing CRM and ERM frameworks [1]. This includes setting up clear governance structures and ensuring transparency throughout the use of third-party AI tools and supply chains [11].

Step-by-Step Implementation Guide

After identifying AI risks, the next critical step is implementing effective mitigation strategies. For this, Censinet RiskOps™ offers three deployment options tailored to different organizational needs:

- Platform Approach: Manage risk assessments in-house using the full software suite.

- Managed Services: Fully outsource cyber risk management.

- Hybrid Mix: Combine software access with professional services support.

Each option is designed to scale with an organization’s size and maturity, offering flexibility in pricing and functionality.

The NIST AI Risk Management Framework (AI RMF) provides a foundational guide for embedding trust into AI systems. Organizations should create detailed playbooks for cyber operations, outlining steps for evaluating AI systems before deployment, during integration, and throughout their lifecycle. This ensures a consistent and standardized approach to assessing AI risks.

AI-Powered Security Operations Centers

Modern Security Operations Centers (SOCs) leverage AI analytics to transform how risks are identified and managed in healthcare [14]. These systems use anomaly detection algorithms to monitor AI behavior, flagging unusual patterns before they escalate into critical issues.
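To show what flagging unusual patterns can mean in practice, here is a minimal rolling z-score detector over a single metric, such as the daily share of cases an AI system marks as high risk. It is a sketch under stated assumptions: the class name, window size, warm-up length of five observations, and threshold of three standard deviations are all choices made for this example, and production systems would typically use richer models.

```python
from collections import deque
from statistics import mean, stdev


class RollingZScoreDetector:
    """Flag values that sit far from the recent rolling mean."""

    def __init__(self, window: int = 30, threshold: float = 3.0, warmup: int = 5):
        self.values = deque(maxlen=window)
        self.threshold = threshold
        self.warmup = warmup

    def observe(self, value: float) -> bool:
        """Return True if the value is anomalous relative to the current window."""
        is_anomaly = False
        if len(self.values) >= self.warmup:
            mu = mean(self.values)
            sigma = stdev(self.values)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        # Anomalies are kept out of the window so they do not inflate the baseline.
        if not is_anomaly:
            self.values.append(value)
        return is_anomaly


# Hypothetical daily rates of "high risk" flags, followed by a sudden jump.
detector = RollingZScoreDetector()
normal_days = [0.10, 0.11, 0.09, 0.10, 0.12, 0.10, 0.11]
normal_flags = [detector.observe(rate) for rate in normal_days]
spike_flag = detector.observe(0.60)
```

Excluding flagged values from the window is a design choice: it keeps one bad day from masking the next, at the cost of requiring a manual reset if the metric shifts legitimately.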

For high-risk AI systems, such as those using Large Language Models or handling sensitive patient information, intensive oversight is essential. Continuous evaluation ensures these systems operate within acceptable boundaries. Additionally, AI-powered compliance automation keeps processes aligned with laws like HIPAA by tracking regulatory updates [13].

Maintaining Compliance and Flexibility

AI risk management is not a one-and-done effort. As technology and regulations evolve, organizations must adapt. Flexible compliance measures are essential to keep pace with these changes [8].

Blockchain technology can play a pivotal role in healthcare, offering decentralized, transparent, and immutable solutions for dynamic consent and secure data sharing. It also supports real-time monitoring of AI-integrated medical devices [1]. The Health Sector Coordinating Council (HSCC) Cybersecurity Working Group is preparing 2026 guidance to address these emerging challenges, helping organizations stay compliant while adapting to new AI capabilities.
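The tamper-evidence property that makes blockchain attractive for consent records can be illustrated with a simple hash chain, where each entry stores the hash of the one before it. This sketch is not a blockchain (there is no decentralization or consensus), and the field names and payloads are hypothetical; it only shows why an edited record becomes detectable.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, payload: dict) -> str:
    """Hash the previous entry's hash together with this entry's payload."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_entry(log: list[dict], payload: dict) -> dict:
    """Append a payload, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"prev": prev_hash, "payload": payload, "hash": _digest(prev_hash, payload)}
    log.append(entry)
    return entry


def verify(log: list[dict]) -> bool:
    """Recompute every hash and check that each entry links to its predecessor."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(entry["prev"], entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical consent events for one patient.
consent_log: list[dict] = []
append_entry(consent_log, {"patient": "p-001", "consent": "granted", "scope": "model training"})
append_entry(consent_log, {"patient": "p-001", "consent": "revoked", "scope": "model training"})

intact = verify(consent_log)
consent_log[1]["payload"]["consent"] = "granted"  # simulate after-the-fact tampering
still_intact = verify(consent_log)
```

After the simulated edit, `verify` returns `False` because the stored hash no longer matches the altered payload.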

To keep up with advancements, organizations should establish regular review cycles. Governance committees can assess AI system performance, update risk protocols based on new threat intelligence, and adjust monitoring thresholds as AI capabilities grow. These ongoing efforts ensure that AI risk management evolves alongside technological progress.

Conclusion: Preparing for Superhuman AI Risks

Superhuman AI systems bring a mix of immense potential and serious risks for healthcare organizations. With cyberattacks on the rise and AI-related threats becoming more sophisticated [2], the need for proactive risk management has never been more pressing. In healthcare, the stakes are particularly high: adversarial attacks can cause devastating medical errors by altering as little as 0.001% of input data in AI systems [2]. This reality underscores the importance of creating robust frameworks that combine cutting-edge technology with vigilant oversight.

Striking the right balance between innovation and accountability is crucial. Human oversight is indispensable, especially as agentic AI systems evolve rapidly, potentially leading to misuse or a lack of transparency. Structured frameworks like NIST AI RMF and ISO/IEC 42001 provide a foundation for scaling AI responsibly. Meanwhile, tools such as immutable audit trails and continuous AI red teaming play a vital role in uncovering vulnerabilities before they turn into larger issues [15].

"Proactive risk management helps you stay ahead of emerging risks associated with agentic AI." – Mindgard [15]

The slow pace of regulatory updates makes immediate action even more critical. Protected Health Information (PHI) is a high-value target, fetching 10 to 50 times more than credit card data on dark web marketplaces [2]. Early risk management is essential to safeguard this sensitive data. Solutions like Censinet RiskOps™ offer flexible deployment options (a platform-based model, managed services, or a hybrid approach) while ensuring that human oversight remains a cornerstone of risk management. This integrated strategy allows organizations to adapt to evolving threats without compromising on security or care quality.

FAQs

What steps can healthcare organizations take to reduce AI-related cybersecurity risks?

Healthcare organizations can tackle AI-related cybersecurity risks by implementing a zero trust architecture. This approach ensures that access to systems is restricted strictly to those who genuinely need it, minimizing unnecessary exposure. Keeping software up to date and enforcing strong access controls are also critical steps in reducing vulnerabilities.

On top of that, continuous monitoring of AI systems can catch unusual activity early, allowing for swift action. Equally important is thorough staff training, which equips employees to recognize potential threats and respond appropriately. When combined, these strategies create a strong line of defense against AI-driven cybersecurity risks in healthcare settings.

How can healthcare organizations ensure transparency in AI decision-making?

Healthcare organizations can improve openness in AI decision-making by adopting a few practical approaches. Start by implementing explainable AI systems that clearly outline how decisions are reached. Thorough documentation of decision-making processes is essential, as it allows stakeholders to review and fully understand how outcomes are determined. Regular audits of AI systems are also critical to catch potential biases or errors and maintain accountability.

It’s equally important to promote open communication among clinicians, data scientists, and leadership teams. This kind of collaboration fosters trust and ensures that AI-driven decisions stay aligned with ethical guidelines and clinical best practices.

How does Censinet RiskOps™ help manage AI risks in healthcare?

Censinet RiskOps™ supports healthcare organizations in navigating the challenges of AI by providing detailed risk assessments, real-time monitoring, and customized governance frameworks. These solutions are crafted to tackle the specific complexities of AI-based systems, ensuring they are integrated securely while addressing potential risks proactively.

By simplifying compliance workflows and pinpointing possible vulnerabilities, Censinet RiskOps™ empowers healthcare providers to embrace cutting-edge AI technologies with confidence, all while protecting patient data and staying aligned with regulatory requirements.
