Top Challenges in Re-Identification Risk Management

Post Summary

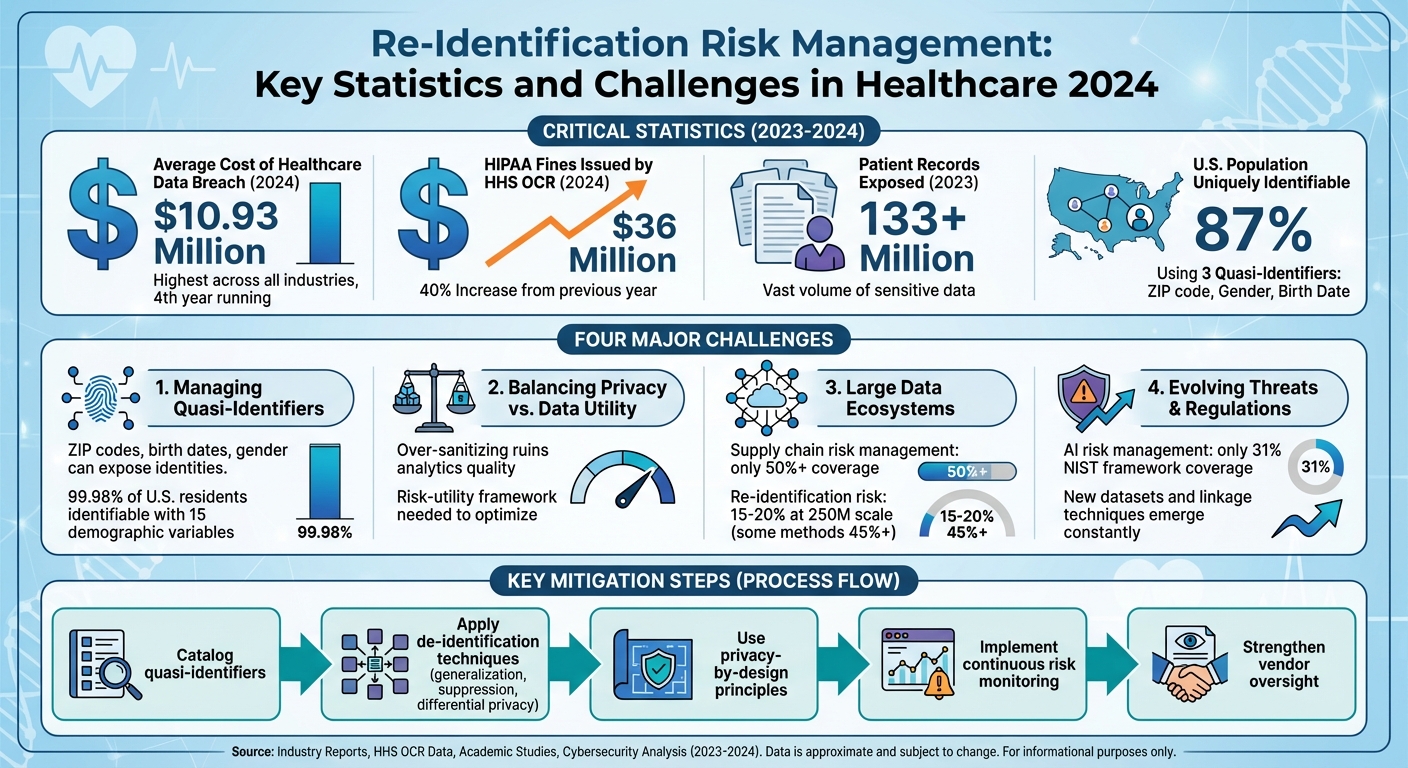

Re-identification risk is a growing concern in healthcare, where even de-identified data can be traced back to individuals when combined with external sources. This article highlights four major challenges in managing these risks and provides actionable strategies to address them:

- Quasi-Identifiers: Data points like ZIP codes, birth dates, or gender can expose identities when matched with public data.

- Privacy vs. Data Utility: Balancing the need for detailed data in research with privacy protections is complex but necessary.

- Large Data Ecosystems: Multi-organization data sharing increases risks, especially with weak vendor oversight or flawed token systems.

- Evolving Threats: New datasets, linkage techniques, and regulations demand continuous risk monitoring.

Steps to mitigate these risks include cataloging quasi-identifiers, applying de-identification techniques, using privacy-by-design principles, and adopting continuous risk monitoring. The stakes are high, with healthcare data breaches costing $10.93 million on average in 2024 and HIPAA fines surging by 40%. Addressing these challenges requires strong governance, technical safeguards, and collaboration across teams.

[Infographic: Re-Identification Risk Management: Key Statistics and Challenges in Healthcare 2024]

Challenge 1: Managing Risks from Quasi-Identifiers

What Are Quasi-Identifiers?

Quasi-identifiers are data points like ZIP codes, dates of birth, gender, or admission dates. On their own, they don’t directly reveal someone’s identity, but combined with external data they can single out an individual. Unlike direct identifiers such as names or Social Security numbers, quasi-identifiers are often left in datasets after de-identification because they’re essential for analysis. Their potential to expose identities shouldn’t be underestimated. For example, one widely cited estimate holds that 87% of the U.S. population can be uniquely identified using just three quasi-identifiers - 5-digit ZIP code, gender, and full date of birth - when matched with publicly available voter registration data [10]. Recognizing these risks is the first step in preventing re-identification.

Linkage attacks exploit quasi-identifiers by matching them from de-identified datasets to external sources like voter rolls, brokered data, or public registries. Even datasets that comply with HIPAA Safe Harbor can be at risk. For instance, retaining fields like a 3-digit ZIP code, rare disease codes, provider specialty, age range, gender, and visit quarter may still expose individuals in sparsely populated areas, especially when combined with local news or social media posts about their condition [6].

Common Mistakes in Risk Assessments

One of the most frequent missteps organizations make is assuming that HIPAA Safe Harbor guarantees anonymity. This overlooks the fact that remaining quasi-identifiers can still be matched with external data to re-identify individuals [2]. Another common oversight is failing to evaluate whether datasets overlap with other external data sources that might share similar fields [5].

Rare attribute combinations and small population groups also pose challenges. For example, uncommon medical diagnoses or procedures, or data from sparsely populated areas - sometimes called "more cows than people" ZIP codes - can create records that are easy to trace back to an individual [14]. Many organizations also focus solely on dataset-level risk assessments, ignoring the importance of calculating re-identification probabilities for individual records. Additionally, they often neglect to revisit their assessments as new data is added or when external datasets become more accessible [2].
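The difference between a dataset-level figure and record-level risk can be sketched in a few lines. This is a minimal illustration, not a full risk model: it assumes an attacker who already knows the target is in the dataset, so each record's risk is taken as one divided by the number of records sharing its quasi-identifier values. The field names and sample rows are hypothetical.

```python
from collections import Counter

def record_risks(records, quasi_identifiers):
    """Return per-record risks (1 / equivalence-class size) and the dataset's k."""
    keys = [tuple(r[q] for q in quasi_identifiers) for r in records]
    class_sizes = Counter(keys)
    risks = [1 / class_sizes[key] for key in keys]
    return risks, min(class_sizes.values())

# Hypothetical, already-generalized records (field names are illustrative).
records = [
    {"zip3": "021", "sex": "F", "birth_year": 1980},
    {"zip3": "021", "sex": "F", "birth_year": 1980},
    {"zip3": "021", "sex": "M", "birth_year": 1975},
    {"zip3": "590", "sex": "F", "birth_year": 1942},
]

risks, k = record_risks(records, ["zip3", "sex", "birth_year"])
print("k-anonymity of the dataset:", k)
print("average record risk:", sum(risks) / len(risks))
print("highest record risk:", max(risks))
```

In this toy data the average risk is 0.75, yet two of the four records sit in a class of size one, which is exactly the detail a dataset-level summary hides.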

How to Reduce Quasi-Identifier Risks

Reducing the risks associated with quasi-identifiers requires a clear strategy. Start by cataloging all quasi-identifiers in your datasets and identifying potential external sources that could match those fields [14]. Once you’ve done this, you can apply techniques to minimize risk.

- Generalization: Replace specific values with broader categories. For instance, use age ranges instead of exact ages, quarters or years instead of exact admission dates, or 3-digit ZIP codes that meet population thresholds instead of full ZIP codes.

- Suppression: Remove or mask high-risk variables altogether. For example, suppress precise location data for ultra-rare diseases, or remove entire rows when a combination of quasi-identifiers is too rare (e.g., fewer than five individuals share the same pattern) [5].

- Top/Bottom Coding: Group extreme values into broader categories, such as showing ages as "90+" to minimize uniqueness among outliers [14].

- Differential Privacy: Add mathematically calibrated noise to query results or use synthetic data to limit re-identification risks, even when external datasets are available [9].
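The four techniques above can be sketched together using only the Python standard library. Everything here is illustrative: the field names, the 10-year age bands, and the sample rows are assumptions, and the ZIP truncation does not check the population threshold that a real release would need. The noisy count uses the Laplace mechanism for a simple counting query, which is one common way to add calibrated noise, not necessarily the method any particular tool uses.

```python
import random
from collections import Counter

def generalize(record):
    """Coarsen quasi-identifiers: 10-year age bands with a 90+ top code,
    3-digit ZIP, and admission quarter instead of the exact date."""
    age = record["age"]
    if age >= 90:
        age_band = "90+"  # top coding for outliers
    else:
        low = (age // 10) * 10
        age_band = f"{low}-{low + 9}"
    year, month, _day = record["admit_date"].split("-")  # expects YYYY-MM-DD
    quarter = (int(month) - 1) // 3 + 1
    return {
        "age_band": age_band,
        # Assumption: population threshold for this prefix is checked elsewhere.
        "zip3": record["zip"][:3],
        "admit_quarter": f"{year}-Q{quarter}",
        "diagnosis": record["diagnosis"],
    }

def suppress_small_cells(records, quasi_identifiers, min_cell_size=5):
    """Drop rows whose quasi-identifier pattern is shared by too few records."""
    keys = [tuple(r[q] for q in quasi_identifiers) for r in records]
    sizes = Counter(keys)
    return [r for r, key in zip(records, keys) if sizes[key] >= min_cell_size]

def noisy_count(true_count, epsilon, rng):
    """Laplace mechanism for a counting query (sensitivity 1, scale 1/epsilon).
    The difference of two exponential draws is Laplace-distributed."""
    return true_count + rng.expovariate(epsilon) - rng.expovariate(epsilon)

# Hypothetical raw rows: five similar patients plus one outlier.
raw = [
    {"age": 31 + i, "zip": "0213" + str(i),
     "admit_date": f"2024-0{1 + i % 3}-15", "diagnosis": "J45"}
    for i in range(5)
]
raw.append({"age": 93, "zip": "59001", "admit_date": "2024-08-03", "diagnosis": "E75"})

generalized = [generalize(r) for r in raw]
released = suppress_small_cells(generalized, ["age_band", "zip3", "admit_quarter"])
print(len(released), "of", len(raw), "rows released")
print("noisy count:", noisy_count(len(released), epsilon=1.0, rng=random.Random(0)))
```

The outlier row is still unique after generalization and top coding, so suppression removes it. Smaller values of `epsilon` add more noise and give stronger protection at the cost of accuracy.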

To strengthen protections further, organizations should consider using Expert Determination under HIPAA for higher-risk data releases instead of relying solely on Safe Harbor. Continuous monitoring is also essential for reassessing risks as datasets evolve or new external sources emerge [2]. Tools like Censinet RiskOps™ can assist by simplifying risk assessments, tracking controls across vendors handling de-identified data, and enabling collaboration between healthcare organizations and their partners to manage risks effectively.

Challenge 2: Balancing Data Usefulness and Privacy

Finding the right balance between making healthcare data useful and protecting individual privacy is a critical challenge in managing re-identification risks.

The Privacy vs. Usefulness Trade-Off

Healthcare data is a goldmine for analytics, but it comes with risks. Detailed information like timestamps, geographic data, and rare diagnoses is essential for things like predictive modeling, outcomes research, and tracking population health. However, these same details - known as quasi-identifiers - can make it easier to identify individuals when combined with external data sources [5][8].

One study highlighted this risk by estimating that 15 demographic attributes would be enough to correctly re-identify 99.98% of U.S. residents in a supposedly de-identified dataset [15]. On the flip side, over-sanitizing data - for example, keeping only the year instead of full dates, or using overly broad age ranges - can degrade the quality of analyses, obscuring seasonal trends or making it impossible to study high-risk groups effectively [5][8].

The stakes are high. In 2024, the Department of Health and Human Services' Office for Civil Rights (HHS OCR) handed out $36 million in fines for HIPAA violations - a 40% increase from the previous year - with poor risk assessments being a top issue [11]. Meanwhile, over 133 million patient records were exposed in 2023 alone [15][16]. These breaches not only damage trust but also discourage patients from participating in registries and data-sharing initiatives, which could hurt the future of healthcare analytics [16]. To address this challenge, organizations are turning to structured approaches like the risk-utility framework.

Using a Risk-Utility Analysis Framework

A risk-utility framework helps teams make smarter trade-offs between data privacy and usability. The process starts with privacy and data science teams working together to identify the essential data elements - like age, time, and location - needed for specific projects such as predictive modeling or comparative studies [5]. They then evaluate re-identification risks by looking at potential external data sources, linkage keys, and how unique certain data points are. From there, they apply de-identification techniques and recalculate metrics for both risk (e.g., k-anonymity) and utility (e.g., model accuracy) to understand the impact of their adjustments [5][8].

This process is iterative. Teams begin with a de-identified dataset, measure its risk and utility, and then gradually tighten protections until they achieve an acceptable balance [15]. If risks remain too high, they might suppress high-risk records, like those involving very rare diagnoses, while carefully monitoring for any biases these changes might introduce [5][8].
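The iterative loop described above might look like the following sketch. It tightens a single protection (the width of age bins) step by step, recomputing k-anonymity as the risk metric and a simple information-loss figure as a stand-in for utility. In a real project the utility metric would more likely be model accuracy, and several quasi-identifiers would be adjusted together. The function names, candidate widths, and target k are all assumptions.

```python
from collections import Counter

def bin_age(age, width):
    """Map an exact age to an inclusive (low, high) bin of the given width."""
    low = (age // width) * width
    return (low, low + width - 1)

def k_anonymity(values):
    """Size of the smallest group of identical values."""
    return min(Counter(values).values())

def information_loss(ages, width):
    """Mean absolute gap between each true age and its bin midpoint."""
    total = 0.0
    for age in ages:
        low, high = bin_age(age, width)
        total += abs(age - (low + high) / 2)
    return total / len(ages)

def tighten_until_acceptable(ages, widths, k_target):
    """Try widths from least to most protective; stop at the first that meets k_target."""
    for width in widths:
        k = k_anonymity([bin_age(a, width) for a in ages])
        if k >= k_target:
            return width, k, information_loss(ages, width)
    return None  # no candidate met the target; consider suppression instead

ages = [31, 33, 34, 38, 41, 42, 47, 49, 52, 58]  # hypothetical cohort
result = tighten_until_acceptable(ages, widths=[1, 5, 10, 20], k_target=2)
print(result)
```

Stopping at the first acceptable width keeps as much detail as the risk threshold allows, which mirrors the idea of tightening gradually rather than jumping straight to the coarsest option.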

Another effective tool is a tiered data access model, which categorizes datasets based on their risk levels and intended uses. For example:

- Public-use data: Highly de-identified and suitable for general exploration.

- Limited-use data: More detailed but accessible only under strict agreements.

- Secure enclave data: Fully detailed data analyzed in a controlled environment, where data cannot be removed [4][5].

This approach allows advanced projects, like AI development, to move forward while still offering lower-risk data for broader research.
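A tiered model can be expressed as a small routing rule. The sketch below is hypothetical throughout: the cell-size thresholds (11 and 5) and the single agreement flag are placeholders for whatever criteria a governance committee actually sets, not values taken from any regulation.

```python
from enum import Enum

class Tier(Enum):
    PUBLIC_USE = "public-use"
    LIMITED_USE = "limited-use"
    SECURE_ENCLAVE = "secure-enclave"

def assign_tier(min_cell_size, has_data_use_agreement):
    """Route a dataset to an access tier.

    min_cell_size: smallest number of records sharing a quasi-identifier pattern.
    Thresholds are illustrative assumptions, not regulatory values.
    """
    if min_cell_size >= 11:
        return Tier.PUBLIC_USE
    if min_cell_size >= 5 and has_data_use_agreement:
        return Tier.LIMITED_USE
    return Tier.SECURE_ENCLAVE

print(assign_tier(20, has_data_use_agreement=False).value)
print(assign_tier(6, has_data_use_agreement=True).value)
print(assign_tier(1, has_data_use_agreement=True).value)
```

Defaulting to the most restrictive tier means a dataset that fails any check is never released more openly than intended.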

While risk-utility frameworks are powerful, integrating privacy considerations into analytics from the start supports compliance and makes for smoother workflows throughout the project.

Building Privacy into Analytics from the Start

The concept of "privacy-by-design" embeds privacy measures early in the process, giving teams the flexibility to align data structures and analytic strategies with privacy requirements [4][5]. This starts with joint planning sessions where privacy, compliance, and data science teams define project goals, decide on necessary data elements, and set limits on identifiability before any data is even extracted [4][5]. Together, they design schemas that separate direct identifiers from the analytic data and pre-plan which fields will be generalized or suppressed [4][5].
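As a rough illustration of a schema that separates direct identifiers from analytic data, the sketch below splits each incoming record into two rows joined only by a random study ID. The list of direct identifiers and the field names are assumptions for the example; a real schema would follow the project's own data dictionary, and the identity table would live in a separately controlled store.

```python
import secrets

# Assumed set of direct identifiers for this example only.
DIRECT_IDENTIFIERS = {"name", "ssn", "mrn"}

def split_record(record):
    """Split one record into an identity row and an analytic row.

    The two rows share only a random study_id, so the analytic table
    carries no direct identifiers.
    """
    study_id = secrets.token_hex(8)  # random, not derived from patient data
    identity_row = {"study_id": study_id}
    analytic_row = {"study_id": study_id}
    for field, value in record.items():
        target = identity_row if field in DIRECT_IDENTIFIERS else analytic_row
        target[field] = value
    return identity_row, analytic_row

record = {
    "name": "Ana Diaz", "ssn": "000-00-0000", "mrn": "A123",
    "age_band": "40-49", "zip3": "021", "diagnosis": "J45",
}
identity_row, analytic_row = split_record(record)
print(sorted(analytic_row))
```

Because the study ID is random rather than a hash of patient data, it cannot be recomputed from an external registry. The analytic row still holds quasi-identifiers, so it needs the generalization and suppression planning described above.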

During model development, data scientists should collaborate with privacy experts whenever they plan to add new quasi-identifiers, link external datasets, or scale up projects. These actions can significantly increase re-identification risks [3][5]. Regular checkpoints, such as data review boards, help ensure updated risk assessments before data is shared or models are deployed [4][5]. Tools like Censinet RiskOps™ simplify this collaboration by centralizing risk assessments across vendors and partners, keeping privacy risks in check throughout the project lifecycle.

On the technical side, automated workflows can apply tested de-identification methods that preserve clinical integrity. For instance, slightly adjusting lab values within acceptable ranges can protect privacy while keeping critical relationships intact [8]. Secure architectures that keep identifiable data in protected environments - offering analysts only de-identified or aggregated outputs - also reduce risks without sacrificing analytic capabilities [5]. For multi-institution projects, cryptographic techniques like tokenization can enable accurate record linkage while minimizing re-identification risks [3][5].
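The lab-value adjustment mentioned above could be sketched as bounded random jitter. This is a simple perturbation, not a differential privacy mechanism, and the 2% jitter and the plausible range for the example analyte are assumptions that a clinical team would need to set.

```python
import random

def perturb_lab_value(value, rng, relative_jitter=0.02, valid_range=(0.0, float("inf"))):
    """Shift a lab value by up to +/- relative_jitter of itself,
    then clamp the result to a plausible range."""
    noise = rng.uniform(-relative_jitter, relative_jitter) * value
    low, high = valid_range
    return min(max(value + noise, low), high)

rng = random.Random(42)  # seeded only so the example is repeatable
hba1c_results = [5.4, 6.1, 7.8]  # hypothetical values
perturbed = [perturb_lab_value(v, rng, valid_range=(3.0, 20.0)) for v in hba1c_results]

for original, shifted in zip(hba1c_results, perturbed):
    print(original, "->", round(shifted, 2))
```

Keeping the jitter proportional and small preserves the ordering of these sample values. Whether it preserves clinically meaningful thresholds depends on the analyte and should be verified before release.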

Challenge 3: Managing Risk Across Large Data Ecosystems

Healthcare data flows through a web of multi-state systems, cloud platforms, Health Information Exchanges (HIEs), and analytics vendors, creating heightened risks of re-identification. A single patient might appear in numerous datasets, and even if each dataset is de-identified, combining them can expose identities. This is especially problematic in the U.S., where healthcare organizations increasingly depend on shared infrastructure and third-party services for electronic health records and AI-powered diagnostics. The challenges here are twofold: technical issues, like token design flaws, and governance gaps, such as inadequate vendor oversight.

Privacy-Preserving Record Linkage (PPRL) Challenges

Privacy-preserving record linkage (PPRL) systems rely on cryptographic tokens - encrypted versions of patient identifiers like name, birthdate, and ZIP code - to link records across organizations without exposing direct identifiers [3]. However, as the scale of datasets grows, so does the risk of re-identification.

For datasets with fewer than 1 million individuals, many token designs result in re-identification risks below 1%. But when datasets expand to around 25 million people, that risk can exceed 10%. At the national scale of approximately 250 million individuals, some token strategies see risks climb to 15–20%, with certain encodings surpassing 45% [3].

This vulnerability arises from fixed encoding methods. If an attacker gains access to external registries - like voter files, obituaries, or consumer databases - and can replicate the token logic, they can link tokens back to real identities [3][18]. Adding multiple tokens per patient or including detailed demographics further increases uniqueness, making re-identification easier [3]. In multi-state networks, where patients appear across various hospitals and payers, the combination of repeated appearances and high-quality administrative records exacerbates these risks [8].
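The attack described above can be reproduced in miniature. The sketch assumes a token is a hash of normalized name, birthdate, and ZIP code. The source does not specify how any real PPRL product builds its tokens, so the two token functions, the secret, and the sample people are hypothetical. An attacker holding a registry recomputes the unkeyed tokens and matches them directly. The same attack fails against keyed (HMAC) tokens as long as the key stays secret.

```python
import hashlib
import hmac

def normalize(name, dob, zip_code):
    return f"{name.strip().upper()}|{dob}|{zip_code}"

def unkeyed_token(name, dob, zip_code):
    """Fixed encoding: anyone who knows the recipe can recompute it."""
    return hashlib.sha256(normalize(name, dob, zip_code).encode()).hexdigest()

def keyed_token(name, dob, zip_code, secret_key):
    """HMAC encoding: recomputation requires the secret key."""
    message = normalize(name, dob, zip_code).encode()
    return hmac.new(secret_key, message, hashlib.sha256).hexdigest()

def dictionary_attack(tokens, registry, token_fn):
    """Tokenize every registry entry and look for matches."""
    lookup = {token_fn(*person): person for person in registry}
    return {t: lookup[t] for t in tokens if t in lookup}

# Hypothetical patients and a hypothetical external registry.
patients = [("Ana Diaz", "1980-03-14", "02139"), ("Raj Patel", "1975-11-02", "59001")]
voter_file = patients + [("Lee Wong", "1990-07-30", "94110")]

secret = b"held-only-by-the-linkage-agent"
unkeyed_tokens = [unkeyed_token(*p) for p in patients]
keyed_tokens = [keyed_token(*p, secret) for p in patients]

# The attacker knows the recipe but not the secret key.
recovered_unkeyed = dictionary_attack(unkeyed_tokens, voter_file, unkeyed_token)
recovered_keyed = dictionary_attack(keyed_tokens, voter_file, unkeyed_token)
print(len(recovered_unkeyed), "unkeyed tokens re-identified")
print(len(recovered_keyed), "keyed tokens re-identified")
```

A secret key is not a complete answer. If the key leaks, or if an attacker can compare token frequencies against a known population, keyed tokens can still be linked, which is why the uniqueness of the underlying fields matters as well.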

Managing Third-Party and Vendor Risks

Third-party vendors often aggregate de-identified data from multiple sources, creating a more comprehensive view of patients. However, if these vendors also have access to additional data, such as consumer profiles or commercial claims, they could potentially re-identify individuals [4][17][18]. The more copies of a dataset that circulate within the supply chain, the higher the chance that at least one party may have weaker security measures or experience a breach [5].

The urgency of addressing this issue is reflected in recent findings. The 2025 Healthcare Cybersecurity Benchmarking Study by KLAS Research and Censinet revealed that supply chain risk management has the weakest coverage among cybersecurity framework categories - just over 50% - even as third-party breaches continue to rise annually [13]. Inadequate risk assessments have also been cited as a major cause of regulatory penalties [11].

"Healthcare is the most complex industry... You can't just take a tool and apply it to healthcare if it wasn't built specifically for healthcare." - Matt Christensen, Sr. Director GRC, Intermountain Health [1]

Without standardized approaches to managing third-party risks, including regular security reviews and binding privacy obligations, healthcare organizations will struggle to show that they meet the "very small" re-identification risk standard required under HIPAA Expert Determination [4][5].

Technical and Governance Solutions

To tackle the risks tied to large data ecosystems, healthcare organizations need a mix of advanced technical measures and solid governance practices.

Technical Strategies:

Organizations should adopt safer PPRL token designs that reduce uniqueness, limit the number of tokens generated per patient, and use third-party linkage agents that manage tokens without accessing underlying demographic data [3][5]. Implementing k-anonymity thresholds - which avoid using tokens linked to very small population groups - can also help mitigate risks [5][14]. Additionally, teams should regularly test PPRL encodings against external datasets, like simulated voter files, to measure linkage success rates and adjust token designs based on formal risk assessments [3][5].
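One way to compare token designs before deployment is to measure how many people in a test population end up with a unique token, and to withhold tokens that fall below a k threshold. Because a deterministic token maps one-to-one to its input fields, the sketch below counts the field combinations directly. The two designs, the population, and the threshold are hypothetical.

```python
from collections import Counter

def fine_design(person):
    """Full last name, full birthdate, 5-digit ZIP."""
    return (person["last"], person["dob"], person["zip"])

def coarse_design(person):
    """Last initial, birth year, 3-digit ZIP."""
    return (person["last"][0], person["dob"][:4], person["zip"][:3])

def unique_fraction(population, design):
    """Share of people whose token inputs are unique in the population."""
    keys = [design(p) for p in population]
    counts = Counter(keys)
    return sum(1 for key in keys if counts[key] == 1) / len(keys)

def tokens_below_k(population, design, k):
    """Token inputs shared by fewer than k people (candidates to withhold)."""
    counts = Counter(design(p) for p in population)
    return [key for key, n in counts.items() if n < k]

# Hypothetical stand-in for a simulated voter file.
population = [
    {"last": "DIAZ", "dob": "1980-03-14", "zip": "02139"},
    {"last": "DAVIS", "dob": "1980-07-22", "zip": "02141"},
    {"last": "DUNN", "dob": "1980-12-05", "zip": "02142"},
    {"last": "PATEL", "dob": "1975-11-02", "zip": "59001"},
    {"last": "PARK", "dob": "1975-01-30", "zip": "59014"},
    {"last": "NGUYEN", "dob": "1962-06-18", "zip": "94110"},
]

print("fine design unique fraction:", unique_fraction(population, fine_design))
print("coarse design unique fraction:", unique_fraction(population, coarse_design))
print("withhold under k=2:", tokens_below_k(population, coarse_design, k=2))
```

The coarser design lowers uniqueness but also produces more false matches during linkage, so the choice is itself a risk-utility trade-off that should be tested against realistic data volumes.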

Governance Practices:

Strong governance starts with creating a detailed vendor inventory and mapping data flows to identify which third parties receive de-identified datasets, their purposes, and the legal agreements in place [5]. Security and privacy due diligence should cover areas such as encryption, access controls, logging, data minimization, and subcontractor oversight before onboarding or renewing vendor contracts [5][9]. Legal agreements, including Business Associate Agreements, must explicitly prohibit unauthorized re-identification, regulate dataset combinations, ensure audit rights, and specify incident notification timelines, all while adhering to HIPAA Expert Determination standards [4][5].

To streamline these efforts, many healthcare organizations are turning to centralized risk platforms like Censinet RiskOps™. These platforms standardize risk assessments, automate scoring, flag common vendor risks, and maintain a shared repository of third-party risk data. This is particularly useful in large, multi-tenant environments where manual processes are no longer practical.

"Censinet RiskOps allowed 3 FTEs to go back to their real jobs! Now we do a lot more risk assessments with only 2 FTEs required." - Terry Grogan, CISO, Tower Health [1]

Baptist Health's VP & CISO, James Case, noted that the platform not only eliminated the need for spreadsheets but also fostered collaboration among hospitals for better risk management.


Challenge 4: Keeping Up with Changing Threats and Regulations

The risk of re-identification is constantly shifting. What might have been considered secure a year ago can become vulnerable today due to new data sources, improved linkage methods, and evolving regulatory demands. Healthcare organizations must move beyond one-time de-identification efforts and adopt ongoing risk monitoring to address these dynamic challenges and compliance needs.

How Re-Identification Risks Keep Changing

The growing availability of public and commercial datasets - like voter records, consumer profiles, social media data, commercial claims, and wearable device information - has enabled a "mosaic effect," in which attackers combine quasi-identifiers with external registries to pinpoint individuals [2][4]. Advances in computing power and more sophisticated linkage algorithms amplify the risk by making it easier to merge large external datasets [2][8]. Research has shown that large, national-level databases and privacy-preserving record linkage (PPRL) systems are particularly vulnerable to evolving external data, which can expose token weaknesses [3][18].

Potential attackers include insiders, hackers, data brokers, and even overly ambitious researchers [2][5]. Common attack methods range from linking quasi-identifiers to voter lists or obituary records, to triangulating rare patient conditions by combining lab results, diagnoses, and geographic data. Another threat involves PPRL token attacks, where encoded identifiers are reverse-engineered or linked across large datasets [3][6][8][18]. Risks can also increase when organizations introduce new data sources, modify data schemas, or expand data-sharing partnerships [5][6].

To counter these risks, healthcare organizations should establish a privacy risk lead or committee responsible for staying informed on emerging research and guidelines. They should conduct regular risk reviews, especially when significant changes occur, such as schema updates or the addition of new data sources. Monitoring public dataset releases that could be used for linkage and incorporating checkpoints into data governance workflows can help ensure timely updates to risk assessments. Subscribing to healthcare privacy alerts is another way to stay ahead of potential threats [2][5][6][8][18].

These challenges call for a regulatory approach that evolves alongside technological advancements to ensure compliance frameworks remain effective.

Meeting Regulatory Compliance Requirements

HIPAA offers two de-identification methods, and they respond to these shifts differently. The Safe Harbor method removes 18 identifiers but doesn’t account for future data sources or advanced linkage techniques. The Expert Determination method, on the other hand, requires a qualified expert to determine that the risk of re-identification is very small [4][5]. HHS OCR expects organizations using Expert Determination to document their methodologies thoroughly. This includes considering realistic external data threats, adversary profiles, proper data transformations, and periodic reassessments as conditions evolve [2][4][5].

In 2024, HHS OCR issued $36 million in HIPAA fines, marking a 40% increase from the previous year. Many of these penalties stemmed from inadequate risk assessments, delayed breach notifications, and weak access controls [11]. The average cost of a healthcare data breach also reached $10.93 million in 2024, the highest across any industry for the fourth year running [11]. Meanwhile, state laws, such as California’s, have expanded privacy regulations to cover health-adjacent datasets, often including provisions for pseudonymized or de-identified data [4][6]. Federal guidance further emphasizes the importance of accounting for reasonably available external information, maintaining detailed documentation, and ensuring that data recipients are contractually prohibited from attempting re-identification [4][5].

To meet these requirements, organizations should maintain comprehensive risk assessment reports that include details on datasets, quasi-identifiers, attacker models, external linkage resources, and both quantitative and qualitative risk estimates [2][5]. Supporting documentation should also include Expert Determination opinions, data governance records (such as data inventories and access policies), and Data Use Agreements that explicitly ban re-identification and restrict data sharing [2][4][5][6].

Setting Up Continuous Risk Monitoring

Given the ever-changing data landscape and regulatory expectations, continuous risk monitoring is essential. A robust monitoring program typically includes four key components:

- Governance: A cross-functional privacy and risk committee to oversee de-identification standards, approve high-risk projects, and review periodic risk reports.

- Process Triggers: Specific events - like onboarding a vendor, adding sensitive data, or launching a new algorithm - that require a fresh risk assessment.

- Technical Controls: Strong technical measures to prevent unauthorized linkage attempts.

- Metrics and Dashboards: Key indicators, such as the number of datasets with quasi-identifiers, frequency of re-identification assessments, and policy exceptions, are regularly reported to leadership [2][5][6][7].
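Process triggers lend themselves to a simple automated check. The sketch below is one possible shape: the event names and the one-year maximum age for an assessment are assumptions for illustration, and a real program would take both from its governance policy.

```python
from datetime import date, timedelta

# Hypothetical event names that should force a fresh risk assessment.
REASSESSMENT_TRIGGERS = {
    "vendor_onboarded",
    "sensitive_data_added",
    "new_algorithm_launched",
    "schema_changed",
}

def needs_reassessment(events, last_assessed, today, max_age_days=365):
    """Return (due, triggering_events).

    An assessment is due if any trigger event occurred or if the last
    assessment is older than max_age_days (an assumed policy value).
    """
    triggered = sorted(set(events) & REASSESSMENT_TRIGGERS)
    overdue = (today - last_assessed) > timedelta(days=max_age_days)
    return bool(triggered) or overdue, triggered

due, reasons = needs_reassessment(
    events=["vendor_onboarded", "routine_export"],
    last_assessed=date(2024, 1, 10),
    today=date(2024, 6, 1),
)
print(due, reasons)
```

Returning the triggering events alongside the decision gives the committee a record of why each reassessment was opened, which also feeds the metrics in the last bullet.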

For ongoing assessments, organizations should evaluate record uniqueness, identify overlaps with external datasets, and simulate linkage attacks to estimate re-identification probabilities [3][5][8]. Best practices include identifying potential linkage resources, specifying linking variables, and either testing linkage on sample data or modeling it when direct testing isn’t feasible [5][8]. For large-scale analytics and PPRL scenarios, research highlights the importance of token design, the number of tokens per patient, and dataset size in mitigating re-identification risks. Improved token designs or third-party token matching can significantly reduce these risks [3]. Additional measures like secure data enclaves, differential privacy techniques, and perturbation algorithms can further protect sensitive data while maintaining its analytical value [5][8].
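A simulated linkage attack can be as small as an exact-match join on the chosen linking variables. The sketch below counts a record as re-identified only when exactly one registry entry matches, which is a simplification: a real registry is incomplete, so a unique match is a candidate identity rather than a confirmed one. All names, fields, and rows are hypothetical.

```python
from collections import defaultdict

def simulate_linkage(deidentified, registry, linking_vars):
    """Return {row index: name} for rows matching exactly one registry entry."""
    index = defaultdict(list)
    for person in registry:
        index[tuple(person[v] for v in linking_vars)].append(person["name"])
    reidentified = {}
    for i, row in enumerate(deidentified):
        candidates = index.get(tuple(row[v] for v in linking_vars), [])
        if len(candidates) == 1:
            reidentified[i] = candidates[0]
    return reidentified

# Hypothetical de-identified extract and external registry.
deidentified = [
    {"zip": "59001", "sex": "F", "dob": "1942-05-09", "diagnosis": "E75"},
    {"zip": "02139", "sex": "M", "dob": "1980-03-14", "diagnosis": "J45"},
    {"zip": "94110", "sex": "F", "dob": "1990-01-01", "diagnosis": "J10"},
]
registry = [
    {"name": "A. Olson", "zip": "59001", "sex": "F", "dob": "1942-05-09"},
    {"name": "B. Chen", "zip": "02139", "sex": "M", "dob": "1980-03-14"},
    {"name": "C. Reyes", "zip": "02139", "sex": "M", "dob": "1980-03-14"},
    {"name": "D. Shah", "zip": "10001", "sex": "F", "dob": "1971-09-23"},
]

matches = simulate_linkage(deidentified, registry, ["zip", "sex", "dob"])
rate = len(matches) / len(deidentified)
print(matches, "linkage rate:", round(rate, 2))
```

Rerunning the same simulation after each generalization step, or against a newly available external dataset, turns this into a repeatable test rather than a one-time estimate.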

Organizations should also update risk assessments regularly to account for changes in data populations, token schemes, or sharing arrangements. Establishing clear risk indicators - such as the number of de-identified datasets in use, high-risk quasi-identifier combinations, and third-party data access - can help integrate re-identification risks into enterprise-wide reporting. These metrics can be consolidated into a centralized risk dashboard to inform cybersecurity and privacy reports, guiding strategic decisions on resource allocation and risk management.

A 2025 study by KLAS Research and Censinet revealed that while healthcare organizations are strong in incident response, they often lag in supply chain and asset management. AI risk management, in particular, showed significant gaps, with only 31% coverage across NIST AI Risk Management Framework functions [12][13].

Using platforms like Censinet RiskOps™ can simplify continuous monitoring by centralizing risk assessments, automating scoring, and delivering detailed reports on risks to PHI and clinical applications. Leading organizations are increasingly adopting hub-and-spoke or center-of-excellence models to integrate compliance and risk expertise into digital, data, and AI initiatives.

Conclusion: Improving Re-Identification Risk Management

Managing re-identification risks in U.S. healthcare requires a comprehensive approach that weaves together technical safeguards, contractual protections, and strong governance into a unified framework. This approach addresses four key challenges: handling quasi-identifiers, maintaining privacy while ensuring data utility, managing sprawling data ecosystems, and adapting to ever-changing threats. Addressing each of them is part of building a continuous, proactive risk management process.

The urgency is undeniable. In 2024, healthcare data breaches cost an average of $10.93 million, marking the highest cost across industries for the fourth straight year. Meanwhile, the HHS Office for Civil Rights issued $36 million in HIPAA fines, a 40% increase from the previous year, largely due to inadequate risk assessments and weak access controls [11]. According to the 2025 Healthcare Cybersecurity Benchmarking Study by KLAS Research and Censinet, many organizations are still more reactive than proactive. For example, supply chain and asset management coverage hovers just above 50%, while AI risk management coverage stands at only 31% across the NIST AI RMF functions [12][13]. These gaps leave healthcare systems vulnerable to re-identification risks as datasets grow larger, linkage methods become more sophisticated, and new public datasets emerge.

Censinet RiskOps™ offers a centralized solution to tackle these challenges. The platform simplifies risk management by consolidating vendor assessments, technical safeguards, and compliance tracking for patient data. It evaluates vendor practices around de-identification, security controls, and regulatory compliance across PHI, clinical applications, and supply chains. By streamlining data use agreements and enforcing re-identification prohibitions, it strengthens contractual protections. Continuous monitoring tools and dashboards further enable organizations to identify risks, prioritize fixes, and demonstrate accountability.

To address these risks effectively, healthcare leaders must take decisive steps now. Start by reviewing current data-sharing arrangements and de-identification practices to mitigate risks tied to quasi-identifiers and external linkages. Conduct formal risk assessments that consider realistic attack scenarios. Form a cross-functional privacy committee to set risk thresholds, evaluate high-risk projects, and update policies as threats and regulations evolve. Incorporate privacy-by-design principles into analytics and AI projects, ensuring risk–utility evaluations are part of the initial design phase. Finally, implement continuous monitoring to stay ahead of new public datasets, advanced linkage techniques, and shifts in the data landscape. These actions ensure that managing re-identification risks becomes a continuous, operational priority rather than a one-off compliance task.

FAQs

How can healthcare organizations ensure patient privacy while maximizing the value of their data?

Healthcare organizations can strike a balance between protecting patient privacy and getting the most out of their data by using advanced risk management tools tailored for the healthcare sector. These tools allow for ongoing risk evaluations, enforce strict access restrictions, and implement methods like data anonymization and de-identification to keep sensitive information secure. By adhering to industry regulations and encouraging collaboration among teams, organizations can safeguard their data while enabling valuable analytics and informed decision-making.

What are the most effective strategies for managing re-identification risks in large data ecosystems?

Effectively managing re-identification risks calls for a thoughtful and ongoing strategy. Begin with continuous risk monitoring using advanced tools tailored for healthcare, like AI-driven platforms. These tools can help you stay ahead of potential threats by identifying issues in real time. Pair this with regular risk assessments to pinpoint vulnerabilities and ensure you're meeting industry standards.

It's also essential to prioritize collaboration across teams and organizations. By working together, you can tackle risks from multiple angles and create a more unified approach to data protection. Benchmarking your efforts against industry best practices is another critical step, as it helps measure progress and identify areas for improvement. Together, these strategies provide a stronger shield for sensitive data, reducing the chances of re-identification.

What steps can healthcare organizations take to address evolving data privacy threats and regulatory changes?

Healthcare organizations can stay ahead of data privacy threats and shifting regulations by implementing forward-thinking risk management strategies. This involves routine updates to cybersecurity measures, thorough risk evaluations, and staying aligned with the latest regulatory requirements.

Leveraging tools like AI-driven platforms can simplify these processes. These platforms can automate risk assessments, deliver real-time insights, and help track progress through benchmarking. On top of that, building partnerships with industry peers and keeping up with new trends can add another layer of defense against privacy challenges.