Clinical Documentation AI Vendor Risk: Accuracy, Compliance, and Workflow Integration

Post Summary

Clinical documentation AI tools are reshaping healthcare, but their rapid adoption comes with risks. These tools aim to reduce administrative burdens, but issues like errors, compliance gaps, and workflow disruptions can lead to serious consequences, including patient harm and legal exposure.

Key risks include:

- Accuracy problems: Errors like omissions, inaccuracies, and biases in AI outputs can jeopardize patient safety.

- Compliance challenges: HIPAA violations and data security concerns arise from AI's reliance on sensitive health information.

- Workflow issues: Poor integration with existing systems can increase clinician workload instead of reducing it.

To mitigate these risks, healthcare organizations must evaluate vendors thoroughly, focusing on accuracy, regulatory adherence, and seamless workflow integration. Strong governance and continuous monitoring are critical for safe and effective AI use in clinical documentation.

Primary Risks of AI Clinical Documentation Tools

Healthcare organizations adopting AI-driven clinical documentation systems face three interconnected risks: clinical errors, compliance gaps, and workflow disruptions. Each can significantly affect patient care, regulatory standing, and day-to-day operations, so a careful evaluation of all three is essential when selecting a vendor.

Accuracy and Patient Safety Concerns

AI documentation tools are prone to producing serious documentation errors like omissions, factual inaccuracies, misattributions, and even fabricated information (often referred to as "hallucinations") [4][5][6]. These issues arise because such tools often rely exclusively on audio input, missing out on nonverbal cues and critical clinical context. Additionally, the "black box" nature of many AI algorithms makes it difficult to predict or explain these errors [5]. These aren't just minor mistakes - they can lead to misdiagnoses, inappropriate treatments, or delays in urgent care, all of which jeopardize patient safety [5][2].

Another pressing concern is performance disparity. Speech recognition technologies often struggle with accuracy when processing input from Black patients, individuals with non-standard accents, or those with limited English proficiency [1][5]. This creates a higher risk of incomplete or incorrect documentation for marginalized groups.
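One way to test for this kind of disparity during a pilot is to measure transcription quality separately for each patient group. The sketch below is illustrative only. It assumes you have human-verified reference transcripts paired with the tool's output, and the function names `word_error_rate` and `wer_by_group` and the group labels are hypothetical, not part of any vendor's product. It computes word error rate (WER), a common speech recognition metric, and averages it per group.

```python
from collections import defaultdict


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        return 0.0 if not hyp else 1.0
    # Dynamic-programming edit distance, keeping one row at a time.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # word dropped by the tool
                            curr[j - 1] + 1,      # word inserted by the tool
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


def wer_by_group(samples):
    """samples: iterable of (group_label, reference, hypothesis) tuples.

    Returns the mean per-sample WER for each group label.
    """
    rates = defaultdict(list)
    for group, reference, hypothesis in samples:
        rates[group].append(word_error_rate(reference, hypothesis))
    return {group: sum(values) / len(values) for group, values in rates.items()}


# Hypothetical pilot data: labels and sentences are made up for illustration.
pilot = [
    ("group_a", "patient denies chest pain", "patient denies chest pain"),
    ("group_b", "patient denies chest pain", "patient has chest pain"),
]
print(wer_by_group(pilot))
```

A large gap between groups on a representative sample is a signal to raise with the vendor before rollout. What counts as "large" is a clinical and governance decision, not something this sketch settles.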

Overdependence on AI tools can also lead to automation bias, where clinicians may overly trust AI-generated outputs without verifying their accuracy. This can have dangerous consequences, as undetected errors may result in harmful interventions [2].

HIPAA Compliance and Security Concerns

AI systems require access to large volumes of identifiable health information, which raises significant concerns about data privacy and compliance [8]. Regulators are paying closer attention as a result. The U.S. Department of Health and Human Services (HHS) Office of Inspector General (OIG) has increased its scrutiny of "algorithm-assisted" coding practices, and some Medicare Administrative Contractors (MACs) now require organizations to disclose their use of AI in documentation during certain audits [8].

"Patient data privacy concerns intensify with AI/AuI implementation, as these systems typically require access to vast quantities of identifiable health information. Organizations must implement robust data governance frameworks that limit information access to system-essential elements, maintain comprehensive audit trails, and establish clear data-retention policies." - Frank Cohen, MPA [8]

The risks go beyond basic HIPAA compliance. Storing Protected Health Information (PHI) in cloud environments, allowing subcontractors access to sensitive data, and weak data retention policies all increase the likelihood of breaches. Furthermore, the lack of clear regulations regarding liability for AI-generated errors adds another layer of uncertainty [7]. Without strong data governance measures, healthcare organizations risk not only regulatory penalties but also a loss of patient trust and compromised documentation reliability.

Workflow Disruption and Liability Concerns

Poor integration of AI tools with existing Electronic Health Record (EHR) systems can create additional data entry tasks and make information retrieval more cumbersome, counteracting the intended efficiency benefits [9][7]. Vendors must demonstrate that their systems can integrate seamlessly with current workflows to prevent these disruptions.

Another challenge is alert fatigue. AI systems often generate excessive alerts, which can overwhelm clinicians and lead them to ignore both critical and non-critical notifications. This undermines the very purpose of the technology, which is to enhance care quality.

Finally, the question of accountability for AI-generated content remains a legal gray area. When errors occur, unclear responsibility can expose healthcare organizations to significant legal risks. To mitigate this, organizations need to establish explicit clinician approval processes and maintain detailed audit trails [8].
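To make the idea of an approval process backed by an audit trail concrete, here is a minimal sketch of a tamper-evident log. It is an assumption-laden illustration, not a description of how any EHR or vendor implements auditing: the function names (`append_event`, `verify_chain`, `is_signed_off`), the action labels, and the in-memory list are all hypothetical. Each entry stores a hash of the note text rather than the text itself, so the log carries no PHI, and each entry includes the previous entry's hash so later edits to the log can be detected.

```python
import hashlib
import json
from datetime import datetime, timezone


def _entry_hash(entry: dict) -> str:
    """Stable SHA-256 over an entry's fields (excluding its own hash)."""
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_event(log: list, note_id: str, actor: str, action: str, note_text: str) -> dict:
    """Append one event, chained to the previous entry's hash."""
    entry = {
        "note_id": note_id,
        "actor": actor,
        "action": action,  # hypothetical labels, e.g. "draft_generated"
        "note_sha256": hashlib.sha256(note_text.encode("utf-8")).hexdigest(),
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prev_hash": log[-1]["hash"] if log else None,
    }
    entry["hash"] = _entry_hash(entry)
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Return False if any entry was altered or the chain order was broken."""
    prev = None
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev or _entry_hash(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


def is_signed_off(log: list, note_id: str, note_text: str) -> bool:
    """True only if the latest event for the note approves this exact text."""
    digest = hashlib.sha256(note_text.encode("utf-8")).hexdigest()
    events = [e for e in log if e["note_id"] == note_id]
    return (bool(events)
            and events[-1]["action"] == "clinician_approved"
            and events[-1]["note_sha256"] == digest)


audit_log = []
append_event(audit_log, "note-1", "ai_scribe", "draft_generated", "draft text")
append_event(audit_log, "note-1", "dr_example", "clinician_approved", "final text")
print(verify_chain(audit_log), is_signed_off(audit_log, "note-1", "final text"))
```

The design choice worth noting is that sign-off is tied to the exact text approved. If the note changes after approval, the hash no longer matches and the note would need a fresh review.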

How to Evaluate AI Clinical Documentation Vendors

Choosing the right AI clinical documentation vendor isn't as simple as ticking off a compliance checklist. It demands a deep dive into technical capabilities, security measures, and how seamlessly the solution integrates into your operations. With healthcare facing a high risk of third-party breaches and cyberattacks, a thorough evaluation process is non-negotiable. Organizations must assess key factors like accuracy controls, regulatory adherence, and workflow compatibility before making a decision. Here's how to approach this critical evaluation.

Reviewing Clinical Accuracy and Safety Controls

Start by requesting data that demonstrates the AI's performance across a variety of clinical scenarios. This helps address concerns about potential errors and patient safety. Vendors should provide detailed documentation on error rates and examples of how their system handles complex cases. It's also essential to understand the human oversight mechanisms built into the workflow. For instance, find out how the system flags uncertain outputs and what approval processes are in place before the documentation is finalized.

The ECRI Institute has identified AI as the top health technology hazard for 2025 [10], which highlights the importance of understanding how these models behave in real-world situations. Vendors should clearly explain how they mitigate unpredictable or harmful outputs to ensure patient safety. Tools like Censinet RiskOps™ can centralize assessment documentation, track validation evidence, and route critical findings to clinical leadership, enabling organizations to verify vendor claims against objective standards.

Verifying HIPAA, Security, and Data Practices

With healthcare organizations facing an average breach cost of $10.3 million [10], robust security measures are essential. Request detailed information on how vendors handle PHI storage, subcontractor access, and encryption. Ask about their data retention policies and deletion protocols to ensure compliance with industry standards.

A proposed update to the HIPAA Security Rule would require Business Associate Agreements (BAAs) to include specific technical controls, such as encryption, multi-factor authentication (MFA), and network segmentation, with a 240-day timeline for implementation [10]. Make sure the vendor's BAA explicitly outlines these controls instead of relying on vague compliance language. Since 97% of organizations that reported an AI-related security incident lacked proper AI access controls [11], it's critical to assess how vendors prevent unauthorized access and maintain strict control over the data used by their AI models.

Platforms like Censinet RiskOps™ can simplify the compliance review process by offering healthcare-specific security questionnaires that evaluate technical controls, incident response capabilities, and risk management for subcontractors. Paired with continuous monitoring, these reviews can identify vulnerabilities before they lead to breaches. Considering that HIPAA fines can reach up to $2 million annually [11], proactive security measures are essential for protecting both patient data and organizational finances.

Checking Workflow Integration and Liability Terms

Technical safeguards are just one part of the puzzle. You also need to evaluate how the AI solution fits into your current workflow and legal framework. Start by reviewing EHR compatibility. Request technical specifications to see how the AI system integrates with your existing electronic health record platform. Determine whether the solution adds extra steps for clinicians or requires duplicate data entry. It's helpful to speak with organizations using the same EHR setup to learn about their implementation experiences and any workflow challenges.

Training programs are another critical area to assess. Clinicians need to be well-prepared to navigate the system and critically evaluate AI outputs. This is especially important to counteract automation bias, where clinicians may overly rely on AI recommendations. Training should emphasize the importance of verification and clearly outline the technology's limitations.

Finally, clarify liability terms in the contract. Determine who is responsible if AI-generated documentation leads to errors or patient harm. Contracts should include clear approval workflows that require explicit clinician sign-off, supported by audit trails to document the review and approval process. Tools like Censinet Connect™ can help by capturing integration details during vendor assessments, including technical architecture, data flow diagrams, and liability provisions, ensuring that all aspects of the integration are well-documented for effective oversight.

Maintaining Ongoing Oversight of AI Tools

Setting Up AI Governance and Monitoring

Choosing a vendor is just the starting point - keeping a close eye on how AI performs over time is equally important. The U.S. Department of Health and Human Services Office of Inspector General has highlighted growing concerns about algorithm-assisted coding patterns. Additionally, some Medicare Administrative Contractors now require organizations to disclose AI usage during specific audits [8]. With regulatory scrutiny increasing, having a clear governance framework in place is no longer optional.

A good first step is forming a multidisciplinary AI Governance Committee. This group should include members from legal, compliance, IT, clinical operations, and risk management [2]. Their role is to oversee AI-related activities, evaluate new tools, address potential biases, and ensure compliance with regulations. As regulations become stricter, such governance structures are essential for staying ahead.

The committee should monitor key metrics like accuracy rates, safety concerns, and feedback from clinicians. Regular compliance reviews of AI-generated documentation are crucial [8], as is analyzing documentation trends to spot any unexpected changes after implementing AI tools [8]. Platforms like Censinet RiskOps can simplify this process by centralizing governance and risk management. With features like a user-friendly risk dashboard, Censinet RiskOps helps aggregate data, assign tasks to the right people, and track policies and risks all in one place.
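As a rough illustration of what "analyzing documentation trends" could look like, the sketch below compares the mix of visit billing levels before and after an AI tool goes live. It uses total variation distance, a simple measure chosen here for clarity and not one prescribed by the sources cited above. The function names, the sample data, and the `REVIEW_THRESHOLD` value are all assumptions that a governance committee would need to replace with its own.

```python
from collections import Counter

# Hypothetical cutoff: shifts above this share are sent for committee review.
REVIEW_THRESHOLD = 0.15


def level_shares(codes: list) -> dict:
    """Fraction of encounters billed at each code."""
    if not codes:
        raise ValueError("need at least one code")
    counts = Counter(codes)
    return {code: n / len(codes) for code, n in counts.items()}


def total_variation_distance(before: list, after: list) -> float:
    """Half the summed absolute difference between the two code mixes.

    0.0 means the mixes are identical; 1.0 means they share no codes.
    """
    p, q = level_shares(before), level_shares(after)
    return 0.5 * sum(abs(p.get(c, 0.0) - q.get(c, 0.0)) for c in set(p) | set(q))


# Made-up samples of office-visit codes before and after go-live.
before_go_live = ["99213"] * 6 + ["99214"] * 4
after_go_live = ["99213"] * 3 + ["99214"] * 7

shift = total_variation_distance(before_go_live, after_go_live)
if shift > REVIEW_THRESHOLD:
    print(f"Code mix shifted by {shift:.2f}; flag for compliance review")
```

A shift on its own does not prove a problem, since case mix and seasonality also move these numbers. It only tells the committee where to look first.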

Establishing governance is just the beginning. As AI tools evolve, continuous risk management is just as important.

Managing Risk Throughout the AI Lifecycle

AI tools don't remain static - they require consistent oversight to adapt to updates and shifting regulations. For instance, since 2025, California SB 1120 has required healthcare service plans and disability insurers using AI for utilization reviews to implement safeguards that ensure equitable use, regulatory compliance, and disclosure [2]. Similarly, Utah HB 452 mandates that anyone providing regulated occupational services disclose their use of generative AI [2].

To manage these risks, set clear thresholds for human intervention, especially when AI outputs could significantly affect patient care or reimbursement [8]. Employing counterbalancing algorithms to detect overstatement patterns adds another safeguard [8]. Some organizations are also forming internal ethics committees to regularly review algorithm designs and assess their outputs for bias or unintended optimizations [8].
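A threshold policy of this kind can be written down as explicit rules. The following is a minimal sketch under stated assumptions: it supposes the vendor exposes a per-note confidence score (many may not), and the `DraftNote` fields, the `Review` levels, and the 0.90 floor are hypothetical placeholders. Every note still gets clinician sign-off; the rules only decide when an enhanced review is added on top.

```python
from dataclasses import dataclass
from enum import Enum


class Review(Enum):
    STANDARD_SIGN_OFF = "standard_sign_off"  # routine clinician approval
    ENHANCED_REVIEW = "enhanced_review"      # extra scrutiny before approval


@dataclass(frozen=True)
class DraftNote:
    note_id: str
    min_confidence: float       # lowest confidence reported for the note, 0 to 1
    changes_medications: bool   # note documents a new or changed medication
    raises_billing_level: bool  # note supports a higher billing level than usual


def required_review(note: DraftNote, confidence_floor: float = 0.90):
    """Return the review level and the reasons that triggered it."""
    reasons = []
    if note.min_confidence < confidence_floor:
        reasons.append("low model confidence")
    if note.changes_medications:
        reasons.append("medication change documented")
    if note.raises_billing_level:
        reasons.append("higher billing level supported")
    level = Review.ENHANCED_REVIEW if reasons else Review.STANDARD_SIGN_OFF
    return level, reasons


level, reasons = required_review(DraftNote("note-7", 0.82, False, True))
print(level.value, reasons)
```

Returning the reasons alongside the decision keeps the policy auditable, because reviewers can see why a note was escalated and the committee can tune the rules over time.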

Censinet RiskOps can assist with ongoing evaluations and vendor performance benchmarking, all within a single platform [3]. This kind of continuous monitoring helps identify vulnerabilities early, preventing them from escalating into compliance or patient safety issues. As Maxim Topaz, PhD, Associate Professor at Columbia Nursing, puts it:

"The key question is not whether to adopt these tools but how to do so responsibly, ensuring that they enhance care without eroding trust" [1].

Conclusion and Risk Management Checklist

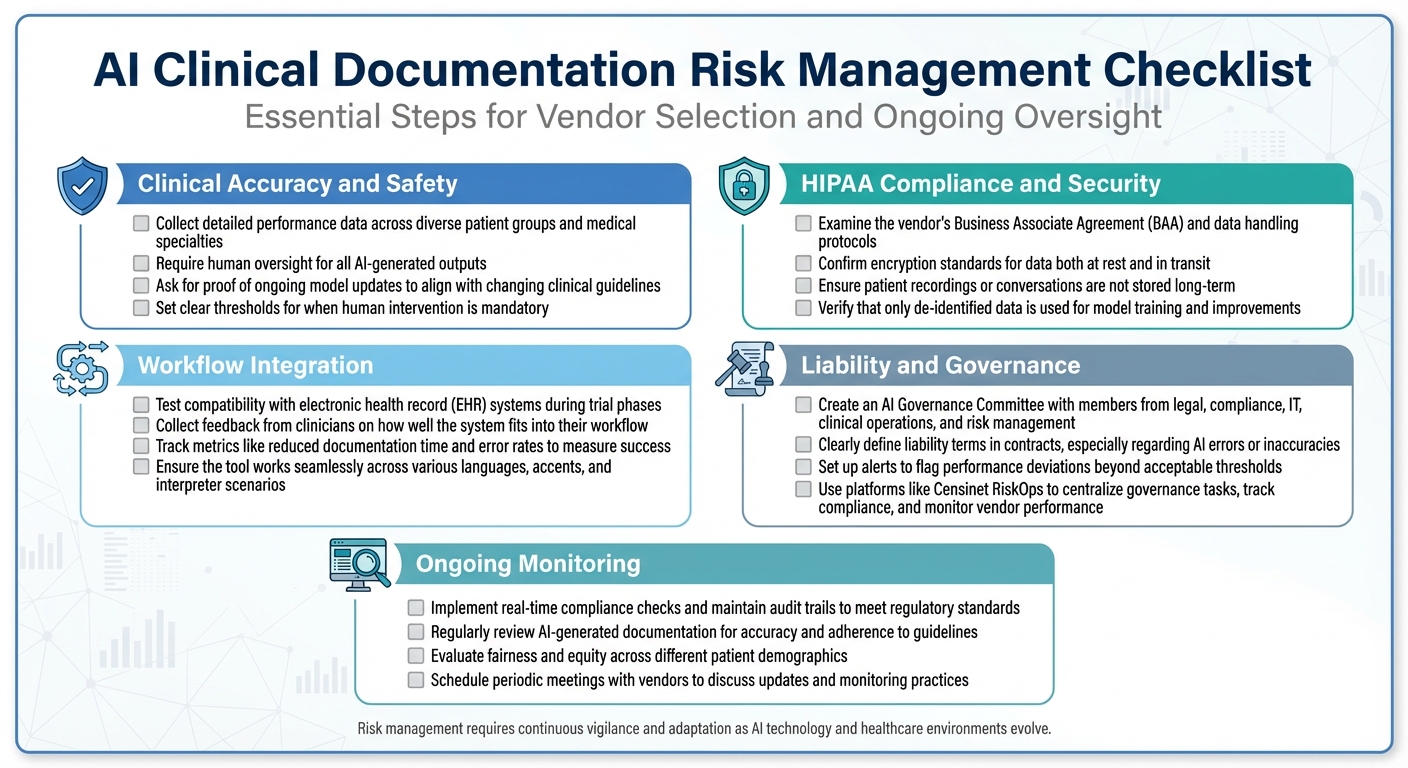

AI Clinical Documentation Risk Management Checklist for Healthcare Organizations

AI-powered clinical documentation tools can boost efficiency, but they also introduce challenges such as accuracy errors, compliance risks, and workflow integration issues. Successful implementation depends on strong governance, continuous monitoring, and being prepared for audits.

As Nature Communications highlights, "Ongoing monitoring and reassessment of AI systems are critical, given the dynamic nature of both the technology and the healthcare landscape" [12]. Regular reviews of accuracy, adherence to regulations, and clinician feedback are key to maintaining safe and effective use.

Here’s a streamlined checklist to help guide vendor selection and ensure ongoing oversight.

Risk Management Checklist

This checklist covers essential steps for managing risks, organized by specific focus areas:

Clinical Accuracy and Safety

- Collect detailed performance data across diverse patient groups and medical specialties.

- Require human oversight for all AI-generated outputs.

- Ask for proof of ongoing model updates to align with changing clinical guidelines.

- Set clear thresholds for when human intervention is mandatory.

HIPAA Compliance and Security

- Examine the vendor's Business Associate Agreement (BAA) and data handling protocols.

- Confirm encryption standards for data both at rest and in transit.

- Ensure patient recordings or conversations are not stored long-term.

- Verify that only de-identified data is used for model training and improvements.
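The retention item above lends itself to a simple automated check. The sketch below assumes the vendor can export a list of stored recording identifiers with creation timestamps, which is not guaranteed and should be confirmed during evaluation. The function name `overdue_recordings`, the record IDs, and the 14-day window are hypothetical, and the actual retention period should come from your policy and BAA.

```python
from datetime import datetime, timedelta, timezone


def overdue_recordings(recordings, max_age_days: int, now: datetime = None) -> list:
    """Return IDs of recordings older than the allowed retention window.

    recordings: iterable of (recording_id, created_at) with timezone-aware
    datetimes. `now` can be passed in to make the check reproducible.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    return [rec_id for rec_id, created_at in recordings if created_at < cutoff]


# Made-up export of stored recordings.
export = [
    ("rec-001", datetime(2025, 6, 1, tzinfo=timezone.utc)),
    ("rec-002", datetime(2025, 6, 25, tzinfo=timezone.utc)),
]
check_time = datetime(2025, 6, 30, tzinfo=timezone.utc)
print(overdue_recordings(export, max_age_days=14, now=check_time))
```

Running a check like this on a schedule turns a contractual promise into something you can verify, and any non-empty result becomes a question for the vendor.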

Workflow Integration

- Test compatibility with electronic health record (EHR) systems during trial phases.

- Collect feedback from clinicians on how well the system fits into their workflow.

- Track metrics like reduced documentation time and error rates to measure success.

- Ensure the tool works seamlessly across various languages, accents, and interpreter scenarios.

Liability and Governance

- Create an AI Governance Committee with members from legal, compliance, IT, clinical operations, and risk management.

- Clearly define liability terms in contracts, especially regarding AI errors or inaccuracies.

- Set up alerts to flag performance deviations beyond acceptable thresholds.

- Use platforms like Censinet RiskOps to centralize governance tasks, track compliance, and monitor vendor performance.

Ongoing Monitoring

- Implement real-time compliance checks and maintain audit trails to meet regulatory standards.

- Regularly review AI-generated documentation for accuracy and adherence to guidelines.

- Evaluate fairness and equity across different patient demographics.

- Schedule periodic meetings with vendors to discuss updates and monitoring practices.

This checklist offers a solid foundation, but risk management isn’t a one-and-done task. Both AI technology and healthcare environments are constantly evolving, so your oversight strategies must adapt to keep pace. Effective management requires vigilance and flexibility to ensure safety, compliance, and efficiency over time.

FAQs

How can healthcare providers ensure AI-powered clinical documentation tools are accurate, compliant, and safe to use?

To make sure AI-driven clinical documentation tools are reliable, compliant, and safe, healthcare providers need to focus on consistent human oversight and clinical reviews. These steps help ensure that the AI’s outputs align with established medical standards. It’s equally important to evaluate the data sources powering the AI, confirming they are diverse, high-quality, and tailored to the specific clinical setting.

Performing routine audits and testing the tool in practical, real-world situations can uncover errors or biases that might otherwise go unnoticed. On top of that, confirming that the tool meets regulatory requirements such as HIPAA protects patient privacy and limits legal exposure. Fostering open communication with clinicians and patients about the AI's functionality not only builds trust but also encourages its safe integration into everyday workflows.

How can healthcare organizations ensure HIPAA compliance and protect data security when using AI tools?

To maintain HIPAA compliance and ensure data security when using AI tools, healthcare organizations should focus on a few crucial steps:

- Validate AI recommendations: Have clinical reviewers thoroughly assess AI-generated codes and suggestions to confirm their accuracy and compliance with regulations.

- Verify data sources: Ensure that the AI tools rely on secure, trustworthy, and compliant data sources.

- Perform regular audits: Conduct ongoing audits to spot and address any risks or inaccuracies in the AI's outputs.

- Implement strong governance policies: Create clear policies to manage AI usage and ensure it aligns with regulatory requirements.

By following these practices, healthcare organizations can reduce risks, stay compliant, and protect sensitive patient data.

What are the best practices for integrating AI clinical documentation systems into healthcare workflows?

To successfully implement AI clinical documentation systems, start with a gradual rollout. This approach helps minimize disruptions and provides time to fine-tune the system as needed. Involve clinicians early on to ensure the technology complements their workflows rather than complicates them. It’s also crucial to ensure the system integrates smoothly with existing EHR platforms using robust API connections to maintain operational efficiency.

Offer thorough training for all users and set up clear channels for ongoing feedback and support. This allows any issues to be addressed promptly and ensures the system continues to improve over time. By focusing on these steps, healthcare organizations can streamline the adoption process and make the most of AI-powered clinical documentation tools.