Clinical Intelligence: Using AI to Improve Patient Care While Managing Risk

Post Summary

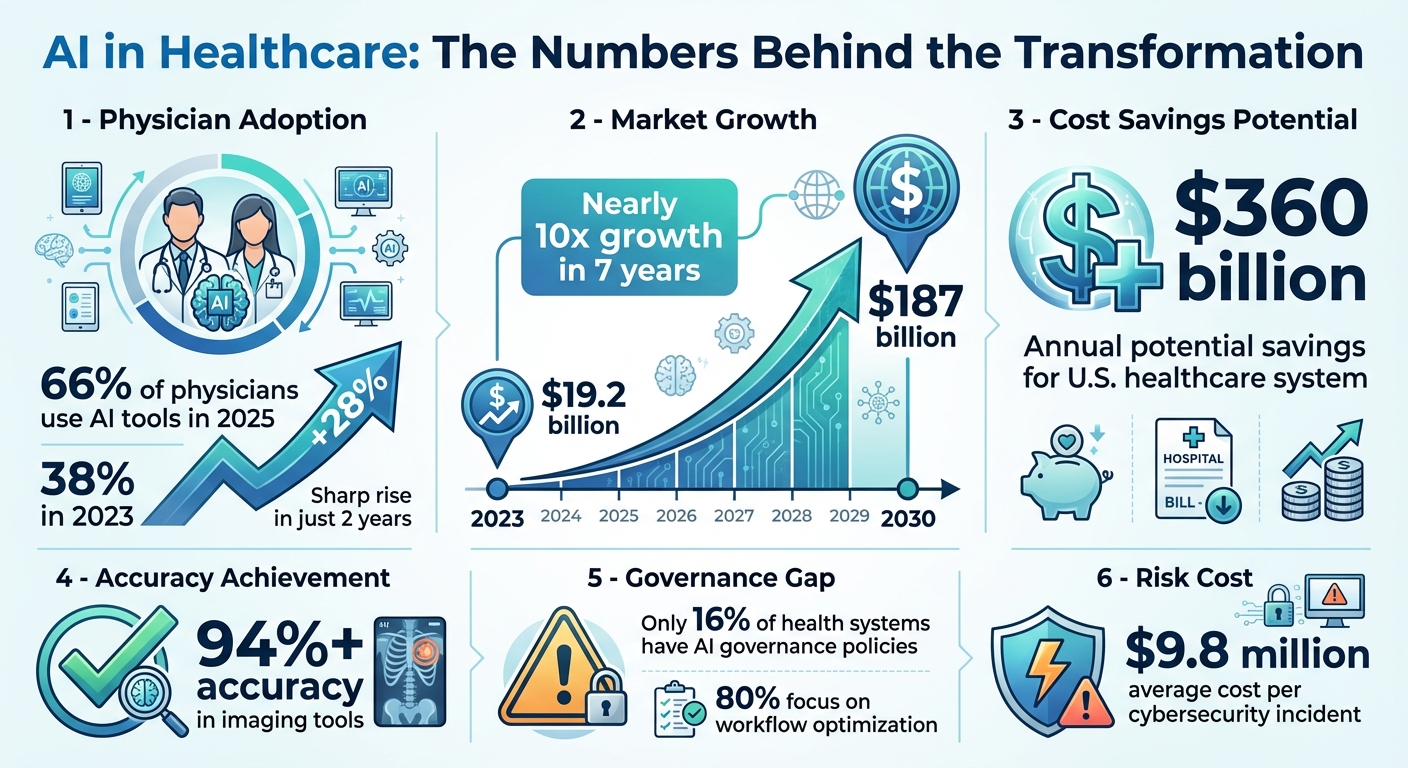

AI is transforming healthcare, offering faster diagnosis, personalized treatments, and reduced clinician workload. But it also introduces challenges like cybersecurity threats, data privacy concerns, and potential clinical errors. By 2025, 66% of physicians were already using AI tools, a sharp increase from 38% in 2023. The global AI healthcare market, valued at $19.2 billion in 2023, is projected to grow to nearly $187 billion by 2030.

Key Highlights:

- AI in Action: Diagnoses heart conditions in seconds, predicts disease progression, and reduces administrative burdens.

- Risks: Cyberattacks, data misuse, and biased algorithms pose significant challenges.

- Governance: Success requires oversight, compliance with regulations like HIPAA, and transparent vendor evaluations.

AI holds the potential to reshape patient care while cutting costs, but healthcare providers must balance innovation with safety through clear policies, robust security, and human oversight.

Figure: AI in Healthcare: Key Statistics and Market Growth 2023-2030

AI-Powered Clinical Intelligence: Key Use Cases in Patient Care

AI is reshaping the way healthcare providers diagnose, treat, and manage patients across various settings. From identifying high-risk patients to streamlining tedious documentation, these tools are driving efficiency and improving outcomes. Below, we explore how AI-powered clinical intelligence is being applied in practical scenarios, helping healthcare teams align with broader risk management goals.

Predictive and Risk-Stratified Care

AI excels at identifying patients who may be at risk, often before symptoms become apparent. By analyzing a wide range of data - such as clinical records, imaging, genetic profiles, and social factors - AI algorithms can predict disease progression, the likelihood of readmissions, and even mortality rates with remarkable accuracy.

These predictive models allow care teams to act early, potentially preventing emergency visits or unplanned hospital stays. For instance, AI can forecast acute hospital encounters, giving healthcare providers the chance to intervene and reduce costly complications. In clinical trials, AI aids in patient stratification by pinpointing individuals at higher risk of rapid disease progression, ensuring they receive the most appropriate experimental treatments.
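To make the idea of risk stratification concrete, here is a minimal sketch in Python. It assumes a simple logistic score over three inputs; the `Patient` fields, the coefficients, and the tier thresholds are all hypothetical and chosen only for illustration. They are not clinically validated and do not represent any specific vendor's model.

```python
import math
from dataclasses import dataclass


@dataclass
class Patient:
    patient_id: str
    age: int
    prior_admissions: int    # admissions in the last 12 months
    chronic_conditions: int  # number of diagnosed chronic conditions


# Hypothetical coefficients for illustration only; not clinically validated.
INTERCEPT = -4.0
WEIGHTS = {"age": 0.03, "prior_admissions": 0.6, "chronic_conditions": 0.4}


def readmission_risk(p: Patient) -> float:
    """Return a probability-like score in (0, 1) from a logistic function."""
    z = (
        INTERCEPT
        + WEIGHTS["age"] * p.age
        + WEIGHTS["prior_admissions"] * p.prior_admissions
        + WEIGHTS["chronic_conditions"] * p.chronic_conditions
    )
    return 1.0 / (1.0 + math.exp(-z))


def risk_tier(prob: float, high: float = 0.5, medium: float = 0.2) -> str:
    """Map a score to a tier using assumed cut-offs."""
    if prob >= high:
        return "high"
    if prob >= medium:
        return "medium"
    return "low"


def stratify(patients: list[Patient]) -> list[tuple[str, float, str]]:
    """Return (patient_id, risk, tier) tuples, highest risk first."""
    scored = [(p.patient_id, readmission_risk(p)) for p in patients]
    scored.sort(key=lambda item: item[1], reverse=True)
    return [(pid, risk, risk_tier(risk)) for pid, risk in scored]
```

In practice, a care team would review the highest tier first, and any real model would need validation against local patient data before it informed clinical decisions.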

In complex conditions like congenital heart disease, AI processes extensive patient data that would be overwhelming to analyze manually. This capability is especially crucial for managing chronic diseases, where early and precise interventions can significantly improve long-term health outcomes.

Diagnostics and Medical Imaging

When it comes to medical imaging, AI has brought a new level of precision and efficiency. Deep learning algorithms now analyze radiology scans, pathology slides, and cardiology images, often detecting subtle patterns and abnormalities that might go unnoticed by human eyes [6][7].

These systems not only enhance diagnostic accuracy but also streamline workflows by automating repetitive tasks like image segmentation and measurements. This allows clinicians to dedicate more time to complex cases and direct patient care, boosting overall productivity in radiology, pathology, and cardiology departments.

AI also supports clinical decision-making by cross-referencing imaging findings with vast medical databases. This helps providers make more informed choices about diagnoses and treatments. By combining advanced algorithms with image recognition, AI enables more personalized care, tailoring diagnostics and treatments to each patient’s unique needs [4][5][6][7]. These advancements reduce diagnostic errors and help address vulnerabilities within healthcare systems.

Reducing Administrative Burden for Clinicians

AI’s benefits extend beyond patient care, offering solutions to lighten the administrative load on clinicians. Tasks like incident reporting, documentation, and compliance audits can be automated, reducing errors and freeing up time for patient-focused activities.

With AI, clinicians gain quicker access to critical information and decision support, speeding up clinical processes while maintaining accuracy. Automated tools can draft clinical notes, summarize extensive medical records, and streamline communication among care teams. This not only mitigates clinician burnout but also ensures more time is spent on what truly matters - caring for patients effectively and compassionately.

Understanding and Mitigating Risks in AI Clinical Intelligence

AI has transformed patient care with its potential to improve outcomes and streamline processes. However, it also brings certain risks that healthcare organizations must address to ensure safety and maintain trust. From algorithmic errors to data security threats, these vulnerabilities can compromise both patient well-being and organizational stability. Let’s delve into how these risks manifest and ways to address them effectively.

Clinical Safety and Algorithm Performance Issues

AI algorithms, while powerful, come with limitations that can lead to serious clinical consequences. A key issue is algorithmic bias - when training data doesn’t reflect the diversity of real-world patient populations, the models can produce imbalanced results, disadvantaging certain groups and creating inequities in care. Additionally, over-reliance on AI recommendations without adequate human oversight can cause clinicians to overlook critical details that require expert judgment.
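One way to check for the kind of imbalance described above is to compare a model's sensitivity (the share of true cases it catches) across patient groups. The sketch below assumes you already have labeled outcomes and model predictions tagged with a group identifier; the function names and the record layout are illustrative, not part of any standard library or regulatory method.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Compute sensitivity (true positive rate) per group.

    records: iterable of (group, y_true, y_pred) tuples with 0/1 labels.
    Groups with no positive cases are omitted from the result.
    """
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            if y_pred == 1:
                true_pos[group] += 1
            else:
                false_neg[group] += 1

    result = {}
    for group in set(true_pos) | set(false_neg):
        positives = true_pos[group] + false_neg[group]
        result[group] = true_pos[group] / positives
    return result


def sensitivity_gap(by_group):
    """Difference between the best- and worst-served groups."""
    values = list(by_group.values())
    return max(values) - min(values)
```

A large gap does not prove bias on its own, but it is a reasonable trigger for a closer look at how each group is represented in the training data.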

Another challenge is the "black box" nature of many AI models, which makes it difficult to understand how decisions are made. This lack of transparency complicates error tracing, especially as patient demographics shift and clinical practices evolve. Without regular updates, historical data used to train these models may lose relevance over time, further compounding the risk of errors[8][9][10][11][12][13][14].

Cybersecurity and Data Privacy Risks

Healthcare AI systems are prime targets for cyberattacks, given their reliance on interconnected devices and sensitive data. Medical devices like pacemakers, insulin pumps, and imaging equipment are particularly vulnerable to ransomware or Denial of Service (DoS) attacks, which could lead to life-threatening scenarios. Attackers could even manipulate these systems to produce harmful outcomes[8].

AI's dependency on vast amounts of sensitive patient data introduces additional risks. Data breaches or misuse could expose private information, and the inherent opacity of AI systems makes it harder for patients to fully understand how their data is being used. This creates challenges for informed consent, as patients may not be aware of how AI influences their care decisions[8][9].

Regulatory and Ethical Requirements

Navigating the regulatory and ethical landscape is another critical aspect of managing AI in healthcare. The FDA treats AI and machine learning algorithms as medical devices when they are used for diagnosing, treating, or preventing diseases. Depending on the risk level of the tool, these systems must adhere to premarket pathways such as De Novo classification, 510(k) clearance, or Premarket Approval[16][17]. While the 21st Century Cures Act offers some exclusions for clinical decision support software, the FDA retains oversight in high-risk situations[15][16].

Healthcare providers must also comply with HIPAA regulations to safeguard data privacy and security. Ethical considerations add another layer of complexity, as AI systems need to be explainable enough for both clinicians and patients to understand the rationale behind their recommendations. Informed consent, therefore, becomes an ongoing dialogue rather than a one-time agreement, requiring clear communication about AI’s role in care decisions[8][9].

To address these challenges, organizations should implement robust governance frameworks. These frameworks should prioritize transparency, accountability, and continuous oversight to ensure that AI systems are used responsibly and effectively[11].

Building a Secure and Compliant AI Clinical Intelligence Program

Implementing AI in healthcare requires a careful balance between innovation and safety. A secure, risk-managed framework is essential to ensure these tools improve patient care without introducing new vulnerabilities. Success in this area depends on establishing clear governance, maintaining data integrity, and thoroughly evaluating vendors. These steps form the backbone for reducing risks and staying compliant while advancing clinical intelligence.

Setting Up AI Governance and Strategy

Start by forming a dedicated AI governance committee that includes clinical leaders, IT security experts, legal advisors, compliance officers, and data scientists. This team should outline specific goals, such as reducing diagnostic errors, enhancing care coordination, or improving patient monitoring. Their responsibilities include drafting policies for oversight, creating accountability measures, and ensuring that AI tools support - rather than replace - clinical judgment.

To maintain human oversight, document decision-making processes, assign override authority, and train clinicians to interpret AI-generated outputs. Staying ahead of regulatory changes is also critical. The committee should actively monitor updates at both federal and state levels to adapt policies and practices as compliance requirements evolve[2].
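As a rough illustration of documenting decisions and assigning override authority, the sketch below records each AI recommendation alongside the clinician's final decision. The role names in `OVERRIDE_ROLES`, the record fields, and the rule that an override needs a written rationale are assumptions made for this example; a governance committee would define its own.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical roles allowed to override an AI recommendation.
OVERRIDE_ROLES = {"attending_physician", "department_chief"}


@dataclass(frozen=True)
class DecisionRecord:
    patient_id: str
    ai_recommendation: str
    final_decision: str
    clinician_id: str
    clinician_role: str
    rationale: str
    recorded_at: datetime

    @property
    def overridden(self) -> bool:
        return self.final_decision != self.ai_recommendation


def record_decision(patient_id, ai_recommendation, final_decision,
                    clinician_id, clinician_role, rationale=""):
    """Create an immutable audit record, enforcing the override rules."""
    is_override = final_decision != ai_recommendation
    if is_override and clinician_role not in OVERRIDE_ROLES:
        raise PermissionError(f"Role '{clinician_role}' may not override AI output")
    if is_override and not rationale.strip():
        raise ValueError("An override must include a documented rationale")
    return DecisionRecord(
        patient_id=patient_id,
        ai_recommendation=ai_recommendation,
        final_decision=final_decision,
        clinician_id=clinician_id,
        clinician_role=clinician_role,
        rationale=rationale,
        recorded_at=datetime.now(timezone.utc),
    )
```

Keeping the record immutable and timestamped makes it easier to audit later how often AI output was accepted or overridden, and by whom.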

Data Governance and Technical Requirements

The reliability of AI systems hinges on the quality of their training data. Establish a centralized risk data platform alongside robust data governance policies to secure, structure, and standardize patient information. Compliance with standards like HL7 FHIR, DICOM, and HIPAA is essential[1]. This involves processes to ensure training data is well-structured, accurately labeled, and diverse enough to minimize bias, which can otherwise compromise both AI performance and patient safety.

Additionally, encryption protocols and access controls must align with HIPAA regulations. Regular audits are vital for verifying data integrity and identifying issues like data drift that could affect the accuracy of AI systems over time.
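Data drift can be checked in an audit by comparing the distribution of a model input at training time with its distribution today. The sketch below uses the Population Stability Index (PSI), one common drift measure; it is offered as an example technique, not as the method any particular platform uses. The bin count and the `eps` floor for empty bins are assumptions.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a current sample.

    Bins are equal-width over the baseline's range; current values outside
    that range are counted in the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm is defined.
        return [max(c / len(values), eps) for c in counts]

    e = fractions(expected)
    a = fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A commonly cited rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift that warrants review, though teams should set thresholds that suit their own data.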

Risk Assessment and Vendor Evaluation

Thorough vendor evaluations are a cornerstone of a secure AI program. Assess the quality of each vendor's training data, security measures, and compliance certifications. The Health Sector Coordinating Council (HSCC) is developing 2026 guidance to address AI cybersecurity risks and improve supply chain transparency, which will be a key resource for these evaluations[18].

Use frameworks like FUTURE-AI, which outlines 30 international best practices for managing AI throughout its lifecycle, to guide your assessments[2]. Key considerations include whether vendors can explain their algorithms' decision-making processes, describe the patient populations used in training, and outline how they manage model updates. Security reviews should focus on encryption methods, access controls, and incident response plans.

Document all findings during the evaluation process and include ongoing monitoring requirements in vendor agreements. Establishing a compliance program with continuous oversight and well-defined policies is critical for managing risks and addressing potential enforcement actions[2].
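The evaluation criteria above can be captured as a simple weighted checklist so that findings are recorded consistently across vendors. This is a minimal sketch: the criterion names and weights are hypothetical, and they are not drawn from FUTURE-AI or any other framework.

```python
# Hypothetical criteria and weights (summing to 100) for illustration only.
CRITERIA = {
    "explains_decision_logic": 20,
    "describes_training_population": 20,
    "documents_model_updates": 15,
    "encrypts_data": 15,
    "enforces_access_controls": 15,
    "has_incident_response_plan": 15,
}


def evaluate_vendor(answers: dict[str, bool]) -> tuple[int, list[str]]:
    """Return (score out of 100, list of unmet criteria).

    A criterion missing from `answers` is treated as unmet.
    """
    score = 0
    gaps = []
    for criterion, weight in CRITERIA.items():
        if answers.get(criterion, False):
            score += weight
        else:
            gaps.append(criterion)
    return score, gaps
```

The list of gaps is often more useful than the score itself, since each unmet criterion can become a monitoring requirement in the vendor agreement.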


Using Censinet for AI Risk Management

Healthcare organizations are under increasing pressure to adopt AI-driven clinical intelligence while meeting strict security and compliance requirements. Censinet RiskOps™ offers a centralized platform that simplifies cybersecurity and risk management for healthcare, making it easier to assess risks and maintain oversight.

Centralizing Cyber Risk with Censinet RiskOps™

Censinet RiskOps™ brings policies, risks, and mitigation efforts into one unified system. By consolidating real-time data into an easy-to-read risk dashboard, it provides governance committees and risk teams with a clear view of their organization’s risk landscape. This eliminates the inefficiency of juggling fragmented spreadsheets and disconnected tools.

The platform ensures critical findings - like data privacy issues flagged during vendor evaluations - are automatically routed to the appropriate teams, including governance, compliance, and security. This streamlined communication fosters accountability and ensures timely resolution of issues across Governance, Risk, and Compliance (GRC) teams.

Censinet AI™ takes this a step further by automating vendor evaluations, saving time and improving accuracy.

Accelerating Vendor Assessments with Censinet AI™

Censinet AI™ simplifies and speeds up vendor evaluations. Vendors can complete security questionnaires in seconds, and the system automatically summarizes their evidence and documentation. This process captures crucial details like product integrations and potential fourth-party risks. A human-guided automation framework ensures that risk teams stay in control through customizable rules and review processes.

Once vendor assessments are streamlined, continuous risk monitoring becomes the next priority.

Monitoring Risk with the Censinet Risk Dashboard

As technology evolves and new threats arise, continuous monitoring is vital. The Censinet Risk Dashboard provides real-time insights into system performance, security status, and compliance across the organization. Risk teams can track mitigation efforts, monitor adherence to policies, and compare their practices to industry standards. This centralized view helps healthcare leaders spot trends - such as recurring vulnerabilities - and establish robust policies to protect patient care effectively.

Conclusion

AI-driven clinical intelligence has the potential to save the U.S. healthcare system an impressive $360 billion annually while improving patient outcomes. Some imaging tools, for instance, are already achieving accuracy rates exceeding 94% [11]. But realizing these benefits requires more than just adopting the technology - it demands strong governance, airtight security, and compliance measures that prioritize patient safety alongside innovation.

Right now, there’s a significant gap between the excitement surrounding AI and the readiness to implement it effectively. While 80% of healthcare organizations focus on using AI to streamline workflows, just 18% report being aware of policies governing AI use within their institutions [3]. This lack of preparedness opens the door to serious risks, including misdiagnoses, data breaches, and regulatory violations. Given that the average healthcare cybersecurity incident costs $9.8 million [3], it’s clear that risk management needs to be a top priority. A structured, risk-based approach to deploying AI is no longer optional - it’s essential.

For AI to succeed in healthcare, three key elements must be in place: strong governance, human oversight to validate AI outputs, and ongoing monitoring of risks like model drift or emerging threats. This means organizations need to establish specialized AI compliance programs. These programs should include governance committees, clear policies for AI procurement and use, regular staff training, and routine system audits [2].

It’s also crucial to strike the right balance between technology and human judgment. AI should enhance - not replace - clinical decision-making. Providers must remain critical of AI outputs, address potential biases in training data, and have contingency plans ready. Transparency from vendors, particularly through audit trails and performance documentation, is equally important [11].

The urgency for better AI governance is underscored by the current state of adoption. Only 16% of health systems have systemwide policies in place to govern AI use [11]. Organizations that develop robust risk management frameworks will not only be better positioned to unlock AI’s potential but will also ensure patient safety remains a top priority. By combining strategic governance with vigilant oversight, healthcare can turn AI’s promise into tangible improvements in patient care.

FAQs

How can AI improve predictions for disease progression and patient outcomes?

AI is transforming how we predict disease progression and patient outcomes by processing enormous volumes of healthcare data. It identifies patterns, pinpoints risk factors, and detects early warning signs, allowing healthcare providers to step in earlier and develop treatment plans customized to fit each patient’s specific needs.

With AI, clinicians gain the ability to make more precise predictions about a patient’s condition, which supports better decision-making and elevates the quality of care. These tools also help reduce human error and simplify the often intricate task of managing patient health in real time.

What are the key risks of using AI in healthcare, and how can they impact patient care?

The integration of AI in healthcare offers promising advancements, but it also carries risks that could affect patient outcomes if not carefully addressed. For example, system errors or malfunctions may result in incorrect diagnoses or treatments, while privacy breaches present a serious concern due to the highly sensitive nature of patient data. Other risks, like data security vulnerabilities and algorithm bias, can undermine trust and lead to uneven or inaccurate results.

There are also practical challenges to consider. Issues like alert fatigue - caused by an overwhelming number of notifications - can desensitize healthcare providers, potentially leading to missed critical alerts. Overreliance on AI might reduce human oversight, and a lack of transparency in AI decision-making processes can make it difficult for providers to trust or fully understand the recommendations. Addressing these concerns with clear strategies, such as ensuring informed consent for data use and implementing safeguards, is key to protecting patients while maximizing the benefits AI can bring to healthcare.

How can healthcare organizations ensure their AI systems comply with regulations like HIPAA?

Healthcare organizations can stay compliant with HIPAA regulations when using AI by implementing robust governance practices. This means conducting frequent risk assessments, keeping an updated list of all AI tools in use, and actively monitoring these systems to catch vulnerabilities as they arise.

On top of that, prioritizing data transparency and privacy protections is essential. This involves tracking the origins of data, tackling potential biases, and weaving oversight mechanisms into daily operations. Incorporating human oversight and following stringent privacy standards are also key measures to reduce risks and align with regulatory demands.