Cloud SLAs vs. Reality: Why 99.99% Uptime Promises Failed Healthcare on October 20

Post Summary

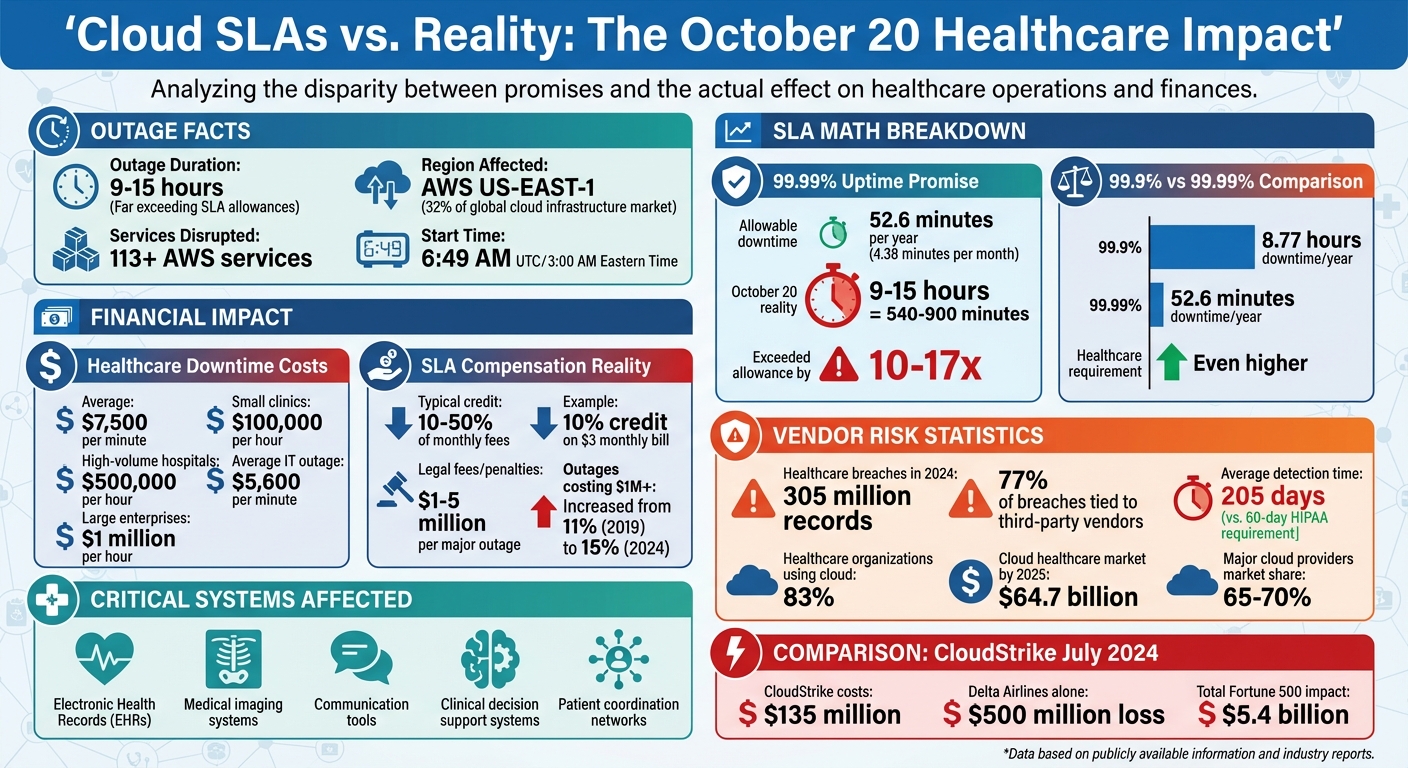

The October 20 AWS outage exposed a harsh truth for healthcare: even a 99.99% uptime guarantee can fail when it matters most. For healthcare providers, where every second counts, the downtime caused by AWS's US-EAST-1 failure disrupted critical systems like electronic health records (EHRs), communication tools, and patient care workflows.

Key Takeaways:

- Healthcare's reliance on cloud SLAs is risky: Standard SLAs prioritize uptime percentages but fail to address the unique demands of healthcare, such as patient safety and operational continuity.

- The AWS regional outage lasted hours, not minutes: This exceeded the allowable downtime under 99.99% SLAs, leaving healthcare providers scrambling with incomplete data and manual processes.

- Single-provider dependency is dangerous: Many healthcare organizations centralize systems in one cloud region, which creates a single point of failure during outages.

- SLA credits are insufficient: Financial compensation from SLA breaches barely covers the actual costs of downtime, which can reach $7,500 per minute for healthcare operations.

- Resilience requires proactive strategies: Multi-region failover, hybrid-cloud setups, and tailored healthcare SLAs are critical to mitigating risks.

Quick Overview:

| Issue | Impact |

|---|---|

| Outage Duration | 9–15 hours, far exceeding SLA allowances |

| Critical Systems Affected | EHRs, imaging, communication tools, clinical decision support |

| Single-Provider Risks | Centralized AWS US-EAST-1 dependency led to widespread failures |

| SLA Compensation Limitations | Minimal credits, often 10% of monthly fees, fail to offset actual losses |

Healthcare leaders must rethink their approach to cloud risk by implementing multi-layered resilience strategies, negotiating better SLAs, and continuously monitoring dependencies. The October 20 outage is a reminder that uptime guarantees alone are not enough to safeguard patient care.

Healthcare Cloud Outage Impact: AWS October 20 Downtime Costs and SLA Gaps

October 20: The Outage and Its Healthcare Impact

Outage Timeline and Affected Services

In the early hours of October 20, 2025, a major AWS outage disrupted services in the US-EAST-1 region, located in Northern Virginia. ThousandEyes reported the first signs of trouble at AWS edge nodes in Ashburn, Virginia, around 6:49 AM UTC [5] (about 3:00 AM Eastern Time). What started as packet loss quickly escalated into significant error rates and delays. Before long, the outage caused widespread failures across various AWS services, creating a ripple effect that severely impacted healthcare systems.

How Healthcare Systems Failed

Healthcare providers heavily dependent on AWS infrastructure faced serious challenges. Electronic health records (EHRs) became inaccessible [4], cutting off clinicians from crucial patient information. Communication networks within hospital systems also went down [6], disrupting coordination between departments and remote facilities. Without access to complete patient data, clinicians were forced to make critical decisions based on incomplete information, increasing the risk of errors in patient care.

The Single-Provider Risk

This outage highlighted a significant vulnerability: the reliance on a single cloud provider. While service-level agreements (SLAs) typically guarantee high availability, the concentrated failure in one region exposed the limits of such assurances. Many healthcare organizations had centralized their operations in AWS US-EAST-1 to save costs and streamline management. Although they implemented redundancy, it was often confined to the same region. When the regional outage overwhelmed these safeguards, the consequences were dire. The incident underscored the dangers of putting all IT infrastructure eggs in one basket - when a cloud provider’s systems fail, the ability to deliver patient care can collapse, and standard SLAs offer little recourse in such scenarios.

Why 99.99% Uptime Failed Healthcare

How Cloud SLAs Work

Building on the earlier discussion about healthcare vulnerabilities, let’s dive into how cloud SLAs (Service Level Agreements) function in reality. These agreements often limit the provider’s liability and offer little recourse when healthcare operations are disrupted [4] [3] [9] [11]. By signing a standard SLA, organizations agree to terms that narrowly define "uptime", use calculations that favor the provider, and provide minimal compensation when services fall short.

Typically, SLAs offer service credits as compensation. For example, if a 99.99% uptime guarantee is breached, you might receive a 10% credit on a $3 monthly bill. But this refund barely scratches the surface of the financial and operational losses healthcare organizations face. Beyond the monetary impact, these outages can result in lost revenue, regulatory penalties, and, most importantly, risks to patient safety.

Another critical issue is that SLAs rarely address performance degradation. Even if there’s no outright outage, spikes in error rates, increased latency, or unreliable services can severely disrupt operations. Yet, standard SLAs often provide no compensation for these scenarios, despite the fact that degraded performance can be just as harmful to patient care as a complete system failure.

SLA Math vs. Healthcare Requirements

A 99.99% uptime guarantee translates to about 52.6 minutes of allowable downtime per year - or roughly 4.38 minutes per month. While this might seem reasonable in other industries, in healthcare, even a few minutes of downtime can have serious consequences.

Take the October 20 outage as an example. It lasted between 9 and 15 hours, with some healthcare organizations reporting lingering issues for hours afterward [10] [11]. This wasn’t a minor disruption - it meant entire shifts of clinicians were left without access to critical systems like electronic health records (EHRs), patient histories, and communication tools. For healthcare providers, the fallout included lost revenue, regulatory fines, and, most alarmingly, compromised patient safety. The service credits offered in such cases are negligible compared to these far-reaching impacts.

Hidden Risks in Cloud Architecture

The October 20 outage also revealed deeper vulnerabilities that SLAs simply don’t cover. These include shared service dependencies and cascading failures across interconnected systems. In this case, the issue stemmed from a DNS resolution and gateway path failure linked to the DynamoDB API endpoint in the US-EAST-1 region. This region supports about 32% of the global cloud infrastructure market [4] [10].

AWS’s interconnected microservices architecture created a domino effect. Failures in core services like DynamoDB and EC2 rippled through dependent systems, causing widespread disruptions [7] [8] [4]. Even systems with built-in redundancies within the same region couldn’t withstand the cascading failures. Shared control planes and dependencies on services like DNS and IAM became single points of failure, undermining even the most carefully designed architectures [4] [3] [10] [8]. For healthcare providers, this meant losing access to EHRs, communication tools, and clinical decision support systems - all at once - putting patient care at immediate risk.

These events also exposed another critical issue: external dependencies that traditional business continuity plans often overlook [10]. Such plans assume you control the failure domain, but when major cloud providers like AWS, Microsoft Azure, or Google Cloud - who collectively dominate 65% to 70% of the global cloud infrastructure market - experience outages, that assumption no longer holds true [10] [11]. Instead of managing your own infrastructure failure, you’re left at the mercy of someone else’s crisis. This underscores the urgent need for healthcare IT to adopt stronger, multi-layered resilience strategies to mitigate these risks effectively.

How to Build Resilience Beyond SLAs

Multi-Region and Hybrid-Cloud Failover Design

Relying on a single cloud region is a risky strategy. Healthcare organizations need to deploy identical environments across multiple cloud regions - think AWS us-east-1 and us-west-2 or Azure centralus and westeurope - using tools like Terraform to automate infrastructure setup [12]. This way, if one region experiences issues, another can seamlessly take over.

To make this setup work, smart DNS failover mechanisms are essential. These systems can automatically detect latency or downtime and reroute traffic to a healthy region without requiring manual intervention [12]. For instance, AWS Lambda combined with Route53 can monitor a primary region and redirect users to a backup when problems arise. One healthcare SaaS platform faced over six hours of downtime when its primary region went offline, disrupting telehealth services and clinic operations [12].

For critical applications like electronic health records (EHRs) or clinical decision support systems, an active-active resilience model is worth considering. This approach allows multiple regions to handle traffic simultaneously, ensuring maximum availability. While more expensive, it’s invaluable when every second matters. If this isn’t feasible, an active-passive failover model - where a secondary cloud remains on standby with synchronized data - can be a good alternative [3]. Using Kubernetes for multi-cloud resilience, along with service meshes like Istio or Linkerd for traffic management, can also help reduce dependency on a single provider [3].

Edge computing adds another layer of resilience. By extending workloads to local edge nodes, healthcare operations can continue even if central cloud connectivity is disrupted. Once connectivity is restored, the system reconciles any changes [3]. Some organizations have gone a step further, creating emergency read-only backup servers or digital prescription systems using low-code tools like Microsoft Power Platform integrated with Microsoft Teams. These solutions help maintain care continuity during outages [13].

Ultimately, technical resilience relies on strong architecture and careful evaluation of vendor dependencies to ensure uninterrupted service.

Tracking Vendor and Fourth-Party Dependencies

The cascading failures on October 20 highlighted a harsh reality: resilience is only as robust as the weakest link in your system. When a primary cloud region goes down, the ripple effects can disrupt interconnected systems, impacting critical operations like EHRs, communication tools, and clinical decision support.

To mitigate these risks, healthcare organizations need strong architectural governance [3]. Before launching any system, an architecture board should review it to identify outage-prone regions and single points of failure. Every external dependency - whether it’s a DNS provider, authentication service, or data storage solution - should be documented and evaluated for its potential impact on patient care if it fails.

Healthcare providers must take responsibility for maintaining service continuity, even when third-party vendors or cloud infrastructure falter [3]. Traditional business continuity plans often assume full control over the failure domain, but this assumption doesn’t hold when major cloud providers, which account for 65% to 70% of global cloud infrastructure, experience outages [10][11]. To address this, organizations should establish clear governance protocols, assign ownership for monitoring dependencies, and define escalation procedures for when risks arise.

Building resilient systems is crucial, but negotiating tailored SLAs for healthcare environments is just as important to protect against financial and operational risks.

How to Negotiate Healthcare-Specific SLAs

Technical resilience alone isn’t enough; healthcare organizations must secure SLAs that reflect the critical nature of patient care.

Most standard cloud SLAs are designed to protect the provider, not the healthcare organization. For example, Google Cloud Healthcare might offer a 10–50% service credit for SLA breaches, but this compensation barely scratches the surface of the actual financial losses [3][2]. On average, healthcare organizations lose $7,500 per minute of downtime [14]. A single hour of network disruption can cost clinics around $100,000 in lost revenue, and for high-volume hospitals, that figure can climb to $500,000 due to delayed procedures and missed appointments [14].

Start by demanding higher uptime guarantees for essential systems. For critical databases and patient-facing applications, negotiate for 99.99% uptime, which limits downtime to just 52.6 minutes annually [1]. The standard 99.9% uptime, allowing up to 8.77 hours of downtime per year, is insufficient for healthcare. Aim for SLAs that exceed the provider’s baseline targets by an extra "9" [3].

Additionally, push for remedies that go beyond basic service credits [9][14][15]. Your SLA should allow for compensation covering lost revenue, diminished patient trust, and regulatory fines tied to outages - especially in cases of provider negligence or repeated failures. Legal fees and penalties from outages can range from $1 million to $5 million, and the percentage of outages costing over $1 million has risen from 11% to 15% since 2019 [15].

Make sure your SLA explicitly addresses key metrics like Recovery Point Objective (RPO), Recovery Time Objective (RTO), data durability, and geographic replication [9][16]. Insist on tiered response times based on issue severity - such as 15–30 minutes for critical problems - and clear resolution time goals, including commitments for Mean Time to Recovery (MTTR) [1]. Transparency is key: demand clarity on how SLA metrics are calculated and monitored, and ensure exclusion clauses are narrowly defined to prevent providers from avoiding accountability [1]. Lastly, include requirements for data sovereignty and compliance reporting to ensure adherence to HIPAA and other regulations [1].

sbb-itb-535baee

Managing Cloud Risk on an Ongoing Basis

Creating a Cloud Risk Governance Program

The October 20 outage serves as a stark reminder that managing cloud risk isn’t a one-and-done task - it’s a continuous process. For healthcare organizations, this means weaving cloud risk oversight into broader enterprise risk and patient safety strategies. A critical step here is evaluating technology partners with a sharp focus on their crisis response and communication protocols.

A strong governance program begins with clear accountability. Designated teams should handle key responsibilities like monitoring vendor dependencies, tracking SLA (Service Level Agreement) performance, and flagging emerging risks. Before launching any system, it's essential to conduct internal reviews to identify potential outage-prone regions and single points of failure. Documenting these dependencies and integrating them into a larger risk governance framework helps assess how each component could impact patient care if it fails.

The urgency of these efforts is backed by alarming data: healthcare breaches affected 305 million records in 2024, and 77% of these incidents were tied to third-party vendors [17]. Even worse, healthcare organizations took an average of 205 days to detect and report vendor-related breaches - far exceeding HIPAA's 60-day notification requirement [17].

Once governance structures are in place, the focus shifts to ongoing monitoring.

Monitoring and Reassessing Cloud Risk

Relying solely on traditional contract management just won’t cut it anymore. Real-time, automated tools are a must for monitoring cloud risks effectively [17]. This means tracking critical SLA clauses, vendor performance, security issues, and compliance metrics continuously - not just during an annual contract review.

Automated workflows can streamline this process. These tools can catalog vendor contracts, extract key obligations like notification requirements, and establish baseline performance metrics [17]. By connecting contract management platforms to security systems, organizations can set up alerts that trigger whenever SLA benchmarks are breached [17].

Reassessment should be a regular practice, especially after major incidents, when risk patterns emerge, or when regulations change [17][9]. Take the July 2024 CloudStrike outage as an example: while the company faced $135 million in costs and service credits, Delta Airlines alone lost $500 million. Parametrix Insurance estimated the total financial impact on U.S. Fortune 500 companies at a staggering $5.4 billion [3]. Events like this highlight the need for healthcare organizations to reevaluate vendor relationships, failover strategies, and contractual protections.

AI-powered contract lifecycle management (CLM) platforms can be game-changers, saving an estimated 12,500–20,000 hours of analysis through automation [17]. These platforms enable real-time monitoring of healthcare-specific SLA clauses - such as data breach notification timelines, system availability guarantees, and security incident response requirements - sending instant alerts when breaches occur.

Managing Cloud Risk with Censinet RiskOps™

To build on these automated practices, Censinet RiskOps™ offers a centralized solution for managing cloud vendor risks across healthcare organizations. This platform simplifies third-party risk assessments, continuously monitors vendor performance, and promotes collaboration among governance, risk, and compliance teams.

Powered by Censinet AI™, the platform can complete vendor security questionnaires in seconds, summarize vendor evidence and documentation automatically, and capture critical details on product integrations and fourth-party risks. This speed and efficiency are vital for managing the complex web of cloud dependencies that contributed to the October 20 outages.

Censinet RiskOps™ consolidates risk management activities into a single, intuitive dashboard. Key findings and tasks are routed to the appropriate stakeholders for timely review and action. The dashboard provides a real-time snapshot of vendor-related policies, risks, and tasks, ensuring that the right teams address issues quickly and effectively.

The platform also incorporates human-guided automation for critical steps like evidence validation, policy creation, and risk mitigation. While automation handles repetitive tasks, risk teams maintain control through customizable rules and review processes. This balance allows healthcare leaders to scale their risk management efforts without compromising patient safety or care delivery - an essential capability as 83% of healthcare organizations already rely on cloud-based services, a market expected to hit $64.7 billion by 2025 [18]. This approach directly addresses the vulnerabilities exposed by the October 20 outage.

Conclusion: What Healthcare Leaders Should Do Next

The AWS outage on October 20, 2025, served as a stark reminder that even promises of 99.99% uptime can't safeguard patient care when systems go down. This disruption in AWS's US-EAST-1 region - a hub with considerable global market share - impacted over 113 services. For healthcare organizations, it underscored a critical truth: resilience cannot be outsourced. The responsibility ultimately lies with them [7][19].

The incident also highlighted the financial gaps in standard SLA agreements. Minimal SLA credits offer little relief for the actual costs incurred during downtime. The average IT outage costs $5,600 per minute, and for larger enterprises, losses can climb to $1 million per hour [19]. These agreements are designed to limit the provider's liability, not to cover the substantial business losses, reputational harm, or regulatory penalties that follow.

Preventing cloud outages requires a proactive approach. Relying solely on multi-AZ redundancy within a single provider falls short when shared regional control planes, DNS dependencies, and centralized service endpoints become single points of failure [19]. The heavy reliance on a handful of major cloud providers creates systemic risks that call for diversification [7].

In light of these challenges, healthcare leaders need to rethink their risk management strategies. This means implementing diverse, automated failover mechanisms and embracing multi-cloud or hybrid-cloud architectures to distribute workloads across multiple providers [19]. They should push for healthcare-specific SLAs that prioritize patient care over generic uptime guarantees. Additionally, continuous monitoring programs should be established to keep tabs on vendor dependencies, SLA compliance, and new risks as they emerge - not just during periodic contract reviews.

Ultimately, resilience must be developed internally. The outage made it clear that healthcare organizations are responsible for their own data security, compliance, and operational continuity [9][4]. Leaders who approach cloud risk management as an ongoing governance issue, rather than a one-time decision, will be far better prepared to safeguard patient care when the next disruption inevitably arises.

FAQs

How can healthcare organizations strengthen cloud resilience to prevent disruptions beyond what SLAs guarantee?

Healthcare organizations can strengthen their cloud resilience by embracing multi-cloud or hybrid-cloud strategies. These approaches help avoid over-reliance on a single provider, ensuring critical systems stay functional even if one provider experiences issues. Adding redundant architectures further boosts reliability, keeping essential operations running smoothly during unexpected failures.

Another key step is developing robust disaster recovery plans. This includes automated failover systems, regular testing, and clear communication protocols. Together, these measures help reduce downtime and ensure uninterrupted patient care.

To stay ahead of potential issues, organizations should also keep a close eye on vendor performance and perform regular risk assessments. This proactive approach helps uncover vulnerabilities early, closing the gap between service level agreements (SLAs) and actual system reliability.

Why does depending on a single cloud provider increase risks for healthcare organizations during outages?

Relying on just one cloud provider can leave healthcare systems vulnerable during outages, creating a single point of failure. If the provider goes down, essential services - like accessing patient records, scheduling appointments, or running telehealth platforms - can grind to a halt.

The fallout from such disruptions can be severe: delayed patient care, potential safety risks, financial setbacks, and damage to an organization's reputation. To reduce these risks, healthcare providers should explore options like using multiple cloud providers, implementing strong disaster recovery plans, and continuously monitoring vendor performance to maintain reliable and resilient operations.

Why don’t SLA compensations fully cover the impact of cloud outages for healthcare providers?

SLA compensations often come up short because they usually offer small financial credits that barely cover a fraction of the actual losses incurred. These credits fail to address the real-world impacts healthcare providers experience, such as disruptions to patient care, damage to their reputation, or the added costs of managing operations during outages.

For healthcare organizations, the stakes go far beyond the dollar value of SLA credits. A system outage can halt critical operations, delay essential treatments, and put patient safety at risk - consequences that go well beyond what most SLAs account for. This highlights why proactive risk management and solid contingency plans are crucial to reducing the risks of downtime.

Related Blog Posts

- The October 2025 AWS Outage: A $62,500-Per-Hour Wake-Up Call for Healthcare Organizations

- 7 Hours Down, Millions Affected: Inside the AWS Outage That Broke Healthcare's Digital Backbone

- Why Your "Highly Available" Healthcare Cloud Architecture Failed on October 20, 2025

- The AWS Outage and Joint Commission: How Business Continuity Standards Just Got Harder