The Cyber Diagnosis: How Hackers Target Medical AI Systems

Post Summary

Hackers target medical AI systems because they store sensitive patient data, are critical to healthcare operations, and often have vulnerabilities that can be exploited.

Risks include data poisoning, ransomware, model manipulation, data breaches, and vulnerabilities in AI-enabled medical devices.

Hackers use techniques like data poisoning, model evasion, and phishing to manipulate AI systems, steal data, or disrupt healthcare operations.

Data poisoning involves injecting malicious or fake data into AI systems, distorting outcomes and potentially leading to incorrect diagnoses or treatments.

Organizations can implement encryption, conduct regular audits, adopt zero-trust architectures, and ensure compliance with cybersecurity regulations.

The future includes AI-driven security solutions, stronger regulations, and proactive risk management to protect healthcare systems from evolving threats.

Hackers are increasingly targeting medical AI systems, putting patient safety and sensitive data at risk. Here's what you need to know:

- Why Medical AI is a Target: These systems handle permanent, sensitive health records and power critical care tools, making them attractive to cybercriminals.

- Common Attack Methods: Data poisoning, adversarial attacks, and model inversion are some techniques used to manipulate or extract data from AI.

- Impact on Patient Care: Cyberattacks can lead to misdiagnoses, treatment errors, and operational shutdowns.

- Recent Breaches: The 2024 Change Healthcare breach affected 192.7 million individuals, making it the largest healthcare data breach on record.

- How to Reduce Risks: Protect training data, conduct regular risk assessments, and monitor systems for anomalies.

Medical AI systems are powerful but vulnerable. Strengthening cybersecurity measures now is critical to safeguard patient care and data integrity.

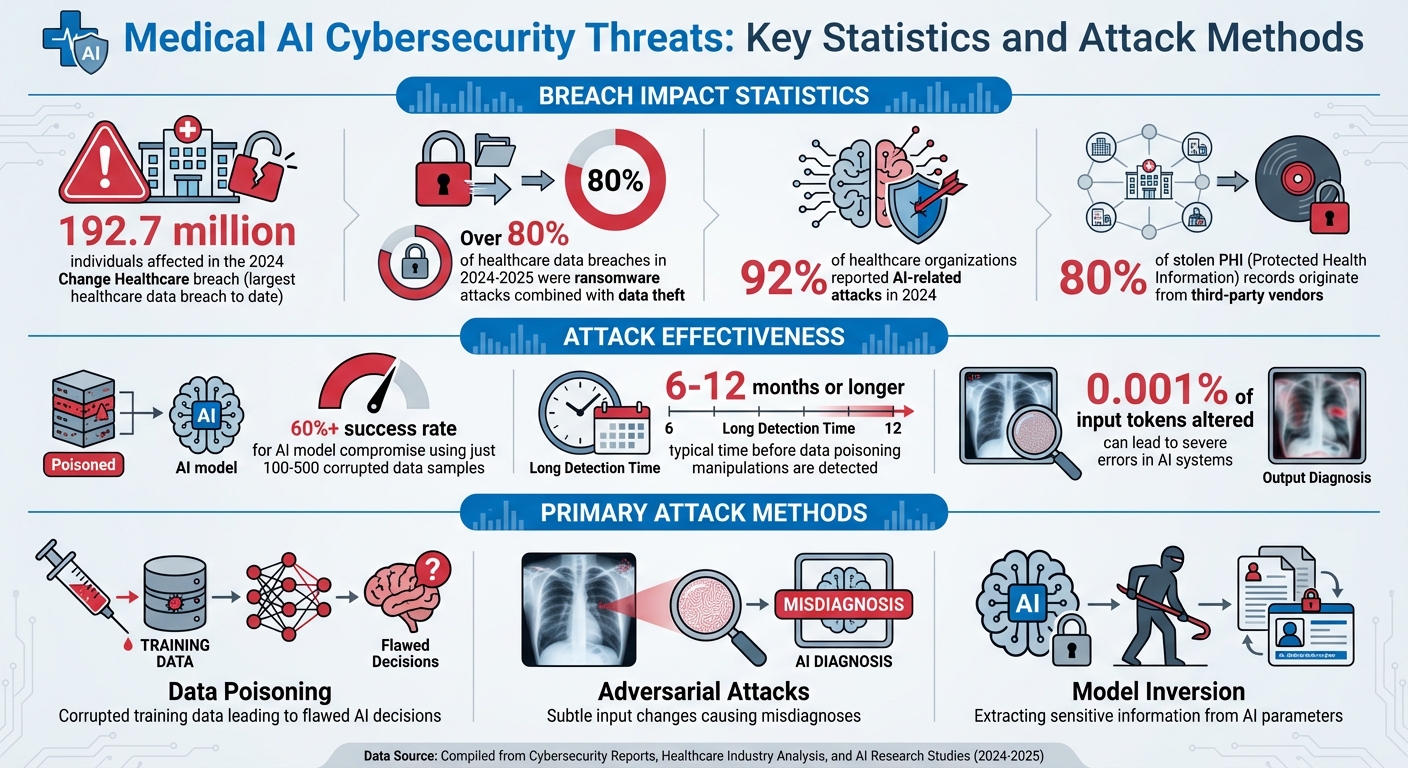

Medical AI Cybersecurity Threats: Key Statistics and Attack Methods 2024-2025

Attack Methods Used Against Medical AI

Medical AI systems are vulnerable to targeted attacks throughout their lifecycle. Understanding these methods is critical to protecting diagnostic and treatment tools. Below, we explore some of the most common attack techniques.

Data Poisoning in AI Training

Data poisoning involves introducing manipulated or corrupted data into the training sets of AI models. This can distort predictions and lead to flawed decisions. In healthcare, the consequences are alarming - misinterpreted scans or incorrect treatment recommendations are just two examples. Even a small amount of tainted data can drastically reduce a machine learning model's accuracy. This underscores the risks posed when historical patient data, often used for training, is tampered with.
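Here is a toy sketch of the idea in Python. It assumes a nearest-centroid classifier trained on a single hypothetical lab value, and all data are synthetic. The model is trained once on clean labeled samples and once after an attacker injects mislabeled "healthy" samples with high values. Both models are then scored on the clean data. The poisoned share is deliberately large so the effect is easy to see; real attacks aim for far subtler contamination.

```python
from statistics import mean


def train_centroids(samples):
    """Nearest-centroid 'training': average the feature value for each label."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def predict(centroids, value):
    """Assign the label whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(value - centroids[label]))


def accuracy(centroids, samples):
    """Fraction of samples whose predicted label matches the true label."""
    correct = sum(predict(centroids, value) == label for value, label in samples)
    return correct / len(samples)


# Synthetic data: label 0 = healthy (values 1-5), label 1 = disease (values 6-10).
clean = [(float(v), 0) for v in range(1, 6)] + [(float(v), 1) for v in range(6, 11)]
# The attacker injects high values that are mislabeled as healthy.
poison = [(10.0, 0)] * 5

clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison)
print(accuracy(clean_model, clean))     # 1.0
print(accuracy(poisoned_model, clean))  # 0.8
```

The clean model separates the classes at 5.5. The poisoned model shifts the boundary to 7.25, so patients with values 6 and 7 are now labeled healthy.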

Model Inversion and Data Extraction

Model inversion attacks aim to extract sensitive information about the data a model was trained on. Attackers do this either by repeatedly querying the model and analyzing its outputs or by analyzing its learned parameters. Few confirmed cases have been documented in medical AI so far, but the potential for such breaches calls for more focused research and vigilance.
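The sketch below illustrates the attribute-inference form of model inversion against a hypothetical model that can be queried but not inspected. It assumes the attacker knows two things about a patient: a non-sensitive input (age) and the model's output for that patient. The attacker then tries each candidate value of a sensitive attribute (here a hypothetical genotype code) and keeps the one that best explains the observed output. The model, its coefficients, and the attribute are invented for illustration. A real attack would also weigh prior probabilities rather than treating every candidate as equally likely.

```python
import math


# Hypothetical deployed model: the attacker can query it but never sees these weights.
def model_confidence(age: float, genotype: int) -> float:
    """Return the model's probability of a positive risk flag."""
    z = -3.0 + 0.04 * age + 1.5 * genotype
    return 1.0 / (1.0 + math.exp(-z))


def infer_sensitive_attribute(age: float, observed_positive: bool, candidates: list[int]) -> int:
    """Pick the candidate value that makes the observed output most likely."""
    def fit(genotype: int) -> float:
        p = model_confidence(age, genotype)
        return p if observed_positive else 1.0 - p
    return max(candidates, key=fit)


print(infer_sensitive_attribute(50, True, [0, 1, 2]))   # 2
print(infer_sensitive_attribute(50, False, [0, 1, 2]))  # 0
```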

Adversarial Attacks on Inputs

Adversarial attacks involve making subtle changes to input data - like tweaking diagnostic images or altering lab results - to cause misdiagnoses. Similarly, prompt injection can manipulate natural language processing (NLP) systems, tricking them into revealing confidential information or performing unauthorized actions. These risks are further amplified by tactics such as AI-driven social engineering, malware deployment, and automated reconnaissance, which can exploit vulnerabilities in medical AI systems.
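As an illustration of adversarial input manipulation, here is the fast gradient sign method (FGSM) applied to a hypothetical logistic-regression model over three normalized lab values. The weights, bias, and inputs are invented. The sketch shows that shifting each feature by only 0.1, in the direction that increases the model's loss, is enough to flip the prediction.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def score(weights, bias, x):
    """Linear score; a positive score means the model predicts class 1."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def predict(weights, bias, x) -> int:
    return 1 if score(weights, bias, x) > 0 else 0


def fgsm_perturb(weights, bias, x, true_label, eps):
    """Fast gradient sign method for logistic regression.

    The gradient of the cross-entropy loss with respect to the input is
    (p - y) * w, so each feature moves by eps in the direction that raises the loss.
    """
    p = sigmoid(score(weights, bias, x))
    grad = [(p - true_label) * w for w in weights]
    return [xi + eps * math.copysign(1.0, g) if g != 0 else xi
            for xi, g in zip(x, grad)]


weights = [0.8, -0.5, 1.2]   # hypothetical trained weights
bias = -0.5
x = [0.6, 0.4, 0.3]          # hypothetical normalized lab values
x_adv = fgsm_perturb(weights, bias, x, true_label=1, eps=0.1)
print(predict(weights, bias, x), predict(weights, bias, x_adv))  # 1 0
```

Attacks on imaging models work the same way across thousands of pixels. That is why the per-pixel change can be small enough to be hard to see.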

Medical AI Breaches and Lessons Learned

Recent cyberattacks have highlighted serious weaknesses in medical AI systems, raising concerns about their security and reliability.

Recent Breaches from 2024-2025

What were once theoretical threats have become real, with major breaches shaking the healthcare sector. The Change Healthcare breach, disclosed in February 2024, became the largest healthcare data breach on record, with an estimated 192.7 million individuals affected. Attackers used stolen credentials to get in through a remote-access portal that lacked multi-factor authentication. They then pursued a two-pronged strategy: deploying ransomware while simultaneously stealing sensitive data [1].

From 2024 to 2025, ransomware attacks combined with data theft accounted for over 80% of healthcare data breaches [1]. The focus of these attacks? AI-controlled IoMT devices and diagnostic systems. Their connectivity, while essential for functionality, became a critical vulnerability that attackers were quick to exploit.

These breaches didn’t just disrupt operations - they exposed deeper security flaws that demand attention.

Vulnerabilities Exposed by These Breaches

The fallout from these incidents revealed several systemic weaknesses. One major issue is algorithmic opacity - the complexity of AI decision-making makes it difficult to identify when systems have been tampered with or compromised.

Interconnected IoMT devices were another weak link. Many of these devices ran on outdated software, had weak encryption, and lacked proper access controls. This left them wide open to unauthorized access and denial-of-service (DoS) attacks. The Change Healthcare breach highlighted the urgent need for better risk assessments, disaster recovery plans, and system redundancy to prevent future incidents [5].

Research also points to a troubling trend: poisoning as few as 100 to 500 training samples can compromise AI models with attack success rates exceeding 60%. Alarmingly, these manipulations often go undetected for 6 to 12 months or even longer [4][6].

How to Reduce Medical AI Cyber Risks

The rise in breaches highlights the need for proactive strategies to safeguard AI systems from the training phase through deployment. Below are steps to protect AI training, conduct risk assessments, and ensure continuous monitoring.

Securing AI Training and Deployment

Protecting training data and pipelines is critical. Use cryptographic verification and maintain detailed audit trails to create a trackable chain of custody that can reveal tampering. Keep training and production environments separate to avoid cross-contamination. The margin for error is small: corrupting as little as 0.001% of a model's training tokens can lead to severe errors [2].
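One way to implement cryptographic verification of training data is a SHA-256 manifest. Record a digest for every file when the dataset is approved, then recompute and compare the digests before each training run. The sketch below uses only Python's standard library, and the file names are hypothetical. In practice the manifest itself would need to be signed or stored somewhere the training pipeline cannot write to; this sketch does not cover that step.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large imaging files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Map each file's path (relative to data_dir) to its SHA-256 digest."""
    return {
        str(path.relative_to(data_dir)): sha256_of(path)
        for path in sorted(data_dir.rglob("*"))
        if path.is_file()
    }


def find_tampering(data_dir: Path, manifest: dict[str, str]) -> dict[str, list[str]]:
    """Compare the current dataset against an approved manifest."""
    current = build_manifest(data_dir)
    return {
        "modified": sorted(k for k in manifest.keys() & current.keys() if manifest[k] != current[k]),
        "missing": sorted(manifest.keys() - current.keys()),
        "added": sorted(current.keys() - manifest.keys()),
    }


def demo() -> dict[str, list[str]]:
    """Approve a tiny hypothetical dataset, tamper with it, and detect the changes."""
    with tempfile.TemporaryDirectory() as tmp:
        data_dir = Path(tmp)
        (data_dir / "labels.csv").write_text("patient_id,label\n1,benign\n")
        (data_dir / "notes.txt").write_text("annotation guidelines v1\n")
        manifest = build_manifest(data_dir)  # recorded at approval time
        (data_dir / "labels.csv").write_text("patient_id,label\n1,malignant\n")  # flipped label
        (data_dir / "extra.csv").write_text("patient_id,label\n2,benign\n")      # injected file
        return find_tampering(data_dir, manifest)


if __name__ == "__main__":
    print(demo())
```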

Regularly retrain AI models using verified datasets to counteract model drift. Perform thorough data quality and bias checks, and establish validation frameworks to test models for vulnerabilities, including benign errors and adversarial manipulations [2][7].
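To decide when drift warrants retraining, one common check is the population stability index (PSI). PSI compares how a feature is distributed in the reference (training) data with how it is distributed in recent production data. The sketch below is one possible implementation, and the bin edges and the 0.25 alert threshold are assumptions. The 0.25 value is a widely used rule of thumb, not a regulatory standard.

```python
import math
from bisect import bisect_right


def bin_fractions(values, edges):
    """Share of values falling in each bin defined by sorted cut points `edges`."""
    counts = [0] * (len(edges) + 1)
    for value in values:
        counts[bisect_right(edges, value)] += 1
    total = len(values)
    # Floor empty bins at a tiny share so the log term below stays finite.
    return [max(count / total, 1e-4) for count in counts]


def population_stability_index(reference, current, edges):
    """PSI = sum over bins of (current - reference) * ln(current / reference)."""
    ref = bin_fractions(reference, edges)
    cur = bin_fractions(current, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Hypothetical example: a lab value seen at training time vs. in recent production.
reference = list(range(100))
recent = list(range(50, 150))
psi = population_stability_index(reference, recent, edges=[25, 50, 75])
needs_retraining_review = psi > 0.25  # rule-of-thumb threshold; tune per model
print(round(psi, 2), needs_retraining_review)
```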

Clearly define governance processes, assigning roles and ensuring clinical oversight throughout the AI lifecycle. Adopting a secure-by-design approach ensures security is integrated at every stage of development and deployment [3].

Conducting Risk Assessments for AI Systems

With 92% of healthcare organizations reporting AI-related attacks in 2024 [2], risk assessments are essential. Maintain a comprehensive inventory of AI systems, documenting their functions, data dependencies, and security considerations [3]. Classify AI tools on a five-level autonomy scale to determine the level of human oversight appropriate to each system's risk profile [3].
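An inventory entry can be as simple as a typed record. The sketch below assumes a hypothetical mapping from each level of a five-level autonomy scale to an oversight requirement. The source does not define the levels, so the mapping, field names, and example systems are placeholders. Replace them with your governance framework's own definitions.

```python
from dataclasses import dataclass, field

# Hypothetical oversight requirement for each level of a five-level autonomy
# scale (1 = least autonomous, 5 = most autonomous). Replace these with the
# definitions from your own governance framework.
OVERSIGHT_BY_LEVEL = {
    1: "periodic audit of outputs",
    2: "periodic audit of outputs",
    3: "clinician review of flagged outputs",
    4: "clinician sign-off before any action",
    5: "continuous monitoring with human override",
}


@dataclass
class AISystemRecord:
    """One entry in the AI system inventory."""
    name: str
    function: str
    data_dependencies: list[str]
    autonomy_level: int
    security_notes: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Reject levels outside the five-level scale so gaps are caught early.
        if self.autonomy_level not in OVERSIGHT_BY_LEVEL:
            raise ValueError(f"autonomy_level must be 1-5, got {self.autonomy_level}")

    @property
    def required_oversight(self) -> str:
        return OVERSIGHT_BY_LEVEL[self.autonomy_level]


def review_order(inventory: list[AISystemRecord]) -> list[AISystemRecord]:
    """Sort systems so the most autonomous ones are reviewed first."""
    return sorted(inventory, key=lambda record: record.autonomy_level, reverse=True)


inventory = [
    AISystemRecord("scheduling-assistant", "appointment triage", ["calendar data"], 1),
    AISystemRecord("imaging-triage", "flags urgent scans", ["PACS images", "radiology reports"], 4,
                   ["vendor-hosted inference endpoint"]),
]
for record in review_order(inventory):
    print(record.name, "->", record.required_oversight)
```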

Third-party risks also demand attention. Evaluate vendor security practices, supply chain transparency, and incident response capabilities, since third-party vendors account for 80% of stolen PHI records [2]. Tools like Censinet RiskOps™ can help healthcare organizations pinpoint vulnerabilities in third-party solutions before they become exploitable entry points for attackers.

Monitoring and Incident Response for AI Systems

Continuous monitoring is necessary to catch data poisoning and subtle adversarial manipulations [3]. Develop AI-specific incident response playbooks that guide teams through preparation, detection, response, and recovery for threats like model poisoning or data corruption [3].
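Here is a minimal sketch of one monitoring signal. It assumes a binary classifier whose positive-prediction rate is known from validation. The monitor tracks that rate over a rolling window and alerts when it drifts beyond a tolerance. The class name, window size, and tolerance are hypothetical. A shift can point to poisoning, adversarial inputs, or ordinary drift, so an alert should trigger an investigation under the incident response playbook rather than serve as a diagnosis. Subtle targeted attacks may not move this rate at all.

```python
from collections import deque


class PredictionRateMonitor:
    """Alert when the rolling positive-prediction rate drifts from a baseline."""

    def __init__(self, baseline_rate: float, window: int = 500, tolerance: float = 0.10):
        self.baseline_rate = baseline_rate  # e.g. positive rate observed during validation
        self.tolerance = tolerance          # allowed absolute deviation before alerting
        self.recent = deque(maxlen=window)  # 1 = positive prediction, 0 = negative

    def observe(self, predicted_positive: bool) -> bool:
        """Record one prediction; return True if the window now breaches tolerance."""
        self.recent.append(1 if predicted_positive else 0)
        if len(self.recent) < self.recent.maxlen:
            return False  # wait until the window is full before judging the rate
        rate = sum(self.recent) / len(self.recent)
        return abs(rate - self.baseline_rate) > self.tolerance


# Hypothetical usage with a tiny window so the behavior is easy to follow.
monitor = PredictionRateMonitor(baseline_rate=0.2, window=10, tolerance=0.1)
stable = [monitor.observe(p) for p in [True, True] + [False] * 8]
shifted = [monitor.observe(True) for _ in range(4)]
print(stable[-1], shifted[-1])
```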

Secure, verifiable backups are essential for quick recovery. They allow organizations to roll back to uncompromised AI models, minimizing disruptions and protecting data integrity [3].
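Rollback only helps if the backup itself can be trusted. The sketch below assumes each backup's SHA-256 digest was recorded separately at backup time. It walks the version history from newest to oldest and returns the first version that is neither flagged as compromised nor failing its integrity check. The version names and byte strings are hypothetical stand-ins for real model artifacts.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass
class ModelBackup:
    version: str
    artifact: bytes        # stand-in for the serialized model file
    recorded_sha256: str   # digest recorded when the backup was made, stored separately


def make_backup(version: str, artifact: bytes) -> ModelBackup:
    return ModelBackup(version, artifact, hashlib.sha256(artifact).hexdigest())


def is_intact(backup: ModelBackup) -> bool:
    """True if the artifact still matches the digest recorded at backup time."""
    return hashlib.sha256(backup.artifact).hexdigest() == backup.recorded_sha256


def rollback_target(history: list[ModelBackup], compromised_versions: set[str]) -> Optional[str]:
    """Return the newest backup that is not known-compromised and still verifies."""
    for backup in reversed(history):
        if backup.version in compromised_versions:
            continue
        if is_intact(backup):
            return backup.version
    return None  # no trustworthy backup: escalate per the incident response playbook


history = [make_backup("v1", b"weights-v1"), make_backup("v2", b"weights-v2"),
           make_backup("v3", b"weights-v3")]
print(rollback_target(history, compromised_versions={"v3"}))  # v2
```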


How Censinet Supports AI Cybersecurity in Healthcare

In the world of healthcare, ensuring the security of AI systems requires tools specifically designed for the unique challenges of medical cybersecurity. Censinet provides an integrated platform that helps healthcare organizations tackle a variety of cybersecurity risks while staying aligned with industry regulations and best practices.

Leveraging Censinet RiskOps™ for AI Risk Management

Censinet RiskOps™ is designed to simplify how healthcare providers manage risks tied to AI technologies. By centralizing risk data across the organization, it offers a clear and unified view of potential vulnerabilities in AI-powered systems. This centralized approach not only helps teams identify and address risks more efficiently but also supports smarter decision-making when navigating complex cybersecurity challenges.

Automating Risk Assessments with Censinet AI

Censinet AI enhances the risk management process by automating assessments and integrating targeted human oversight. This system ensures that critical findings are flagged and delivered to the appropriate stakeholders, streamlining the process of addressing potential threats.

Ensuring Compliance with Censinet Connect™

Censinet Connect™ takes the guesswork out of compliance by coordinating risk assessments and enforcing policies that align with regulatory standards. This enables healthcare organizations to safeguard sensitive patient information while meeting the rigorous demands of industry compliance requirements.

Conclusion

Summary of Cyber Threats to Medical AI

Medical AI systems are facing serious cybersecurity challenges that could directly impact patient safety and the integrity of sensitive data. The ECRI Institute has identified AI as the top health technology hazard for 2025, citing the severe risks posed when these systems fail [2]. Threats like data poisoning during AI training and adversarial attacks on diagnostic inputs create opportunities for hackers to manipulate these technologies. The consequences? Faulty diagnoses, incorrect treatment recommendations, and tampered medical imaging - each of which could lead to significant harm for patients.

But the vulnerabilities don’t stop with the AI algorithms. Third-party vendors, responsible for 80% of stolen patient records, represent another weak link in the chain. This highlights the critical need for stronger security measures across the supply chain [2]. The Health Sector Coordinating Council’s forthcoming guidance on AI cybersecurity, expected in 2026, stresses that conventional security protocols are no longer enough to safeguard these advanced systems [3].

The message is clear: immediate action is essential to address these risks.

Next Steps for Healthcare Organizations

To counter these threats, healthcare organizations need to act without delay. Start by aligning with the HIPAA Security Rule and putting updated technical controls in place. Conduct thorough risk assessments that cover every aspect of your AI systems - training data, deployment environments, and third-party integrations.

Collaboration is key. Build unified governance frameworks that bring clinical, IT, and security teams together. This ensures that patient safety and cybersecurity are integrated from the very beginning. Additionally, regular monitoring and a tailored incident response plan for AI-specific threats can help detect and mitigate attacks before they escalate.

Given the complexity of securing AI, healthcare organizations should consider specialized tools built for this purpose. For example, platforms like Censinet RiskOps™ provide centralized oversight and automated workflows to manage risks effectively, all while maintaining critical human input. Taking these steps now can help unlock the benefits of medical AI while ensuring the safety and trust of patient care.

FAQs

What steps can healthcare organizations take to protect AI systems from data poisoning?

To protect AI systems from data poisoning, healthcare organizations should adopt a layered security strategy. Begin with regular risk assessments to pinpoint potential weak spots in your system. Implement advanced tools, such as encryption and intrusion detection systems, to safeguard sensitive information.

Limit access by enforcing strict access controls, ensuring only authorized personnel can interact with AI systems. It's equally important to provide ongoing cybersecurity training for staff so they can identify and respond to potential threats. Furthermore, establish a solid incident response plan and run tabletop exercises to stay prepared for potential breaches. These proactive steps can help defend against data manipulation and keep patient information secure.

What are the main cybersecurity risks facing medical AI systems?

Medical AI systems are increasingly vulnerable to cybersecurity threats. Among the most pressing concerns are data breaches, which can expose sensitive patient information, and algorithmic flaws that may lead to inaccurate or even manipulated results. Another area of concern is connected medical devices - like infusion pumps or pacemakers - which often operate on outdated software or are configured with insufficient security measures.

Other risks include unpatched legacy systems, which are particularly susceptible to attacks, and supply chain vulnerabilities, where weaknesses in third-party components can be exploited. There's also the growing threat of adversarial attacks, where hackers intentionally tamper with AI models to force incorrect outputs. To address these challenges, implementing strong security protocols, conducting regular system updates, and performing rigorous testing are essential steps to safeguard patient data and maintain trust in medical AI technologies.

Why are AI-powered IoMT devices so vulnerable to cyberattacks?

AI-powered Internet of Medical Things (IoMT) devices face significant security challenges. Many of these devices run on outdated software, lack robust security measures, and sometimes transmit sensitive information without adequate encryption. These vulnerabilities make them an attractive target for hackers, posing risks to patient safety and potentially disrupting vital healthcare operations.

What makes the situation even riskier is how interconnected IoMT devices are. A single compromised device can expose an entire network, amplifying the potential damage. To safeguard these systems and the sensitive data they manage, it’s crucial to implement regular software updates, enforce strong encryption standards, and establish comprehensive security protocols.


Key Points:

Why are hackers targeting medical AI systems?

- Medical AI systems are attractive targets for hackers because they store sensitive patient data and are critical to healthcare operations.

- These systems often have vulnerabilities due to their reliance on large datasets and interconnected devices.

- Successful attacks can lead to financial gain for hackers through ransomware or the sale of stolen data.

- Disrupting AI systems can also compromise patient safety and erode trust in healthcare organizations.

What are the main cybersecurity risks for medical AI systems?

- Data poisoning: Malicious data is injected into AI systems, distorting outcomes and leading to incorrect diagnoses or treatments.

- Ransomware attacks: Hackers encrypt healthcare data and demand payment for its release.

- Model manipulation: Attackers tamper with AI models to alter predictions or steal intellectual property.

- Data breaches: Unauthorized access to sensitive patient information.

- Device vulnerabilities: AI-enabled medical devices, such as imaging systems, are susceptible to hacking.

How do hackers exploit AI systems in healthcare?

- Hackers use data poisoning to inject fake or malicious data into AI training datasets, compromising model accuracy.

- Model evasion involves crafting inputs to deceive AI systems into making incorrect predictions.

- Phishing attacks are enhanced with AI, creating more convincing emails to trick healthcare staff into granting access.

- Deepfakes are used to mimic voices or faces, eroding trust and enabling social engineering attacks.

What is data poisoning in medical AI?

- Data poisoning occurs when attackers inject malicious or fake data into AI systems during training or operation.

- This can distort model outcomes, leading to incorrect diagnoses or treatment recommendations.

- Data poisoning can also introduce bias into AI systems, further compromising their reliability.

How can healthcare organizations protect medical AI systems?

- Implement encryption: Protects data in motion and at rest.

- Adopt zero-trust architectures: Verifies every connection to prevent unauthorized access.

- Conduct regular audits: Identifies and mitigates vulnerabilities in AI systems.

- Use AI-driven threat detection: Monitors systems in real-time to identify and respond to cyber threats.

- Comply with regulations: Adhere to standards like HIPAA and FDA guidelines for AI-enabled devices.
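The sketch below makes "verifies every connection" concrete with a deny-by-default authorization check. Every request to an AI system is evaluated on identity, device posture, and a least-privilege role policy, regardless of where on the network it comes from. The roles, actions, and policy table are hypothetical. A production deployment would rely on an identity provider and a policy engine rather than an in-code dictionary.

```python
from dataclasses import dataclass

# Hypothetical least-privilege policy: role -> set of (action, resource) pairs.
POLICY: dict[str, set[tuple[str, str]]] = {
    "ml_engineer": {("read_training_data", "imaging-model"), ("deploy_model", "imaging-model")},
    "clinician": {("query_model", "imaging-model")},
}


@dataclass(frozen=True)
class AccessRequest:
    user_id: str
    role: str
    mfa_verified: bool
    device_compliant: bool
    action: str
    resource: str


def authorize(request: AccessRequest) -> tuple[bool, str]:
    """Evaluate every request; nothing is trusted because of network location."""
    if not request.mfa_verified:
        return False, "denied: MFA not verified"
    if not request.device_compliant:
        return False, "denied: device not compliant"
    allowed = POLICY.get(request.role, set())
    if (request.action, request.resource) not in allowed:
        return False, "denied: action not permitted for role"
    return True, "allowed"


print(authorize(AccessRequest("u1", "clinician", True, True, "query_model", "imaging-model")))
print(authorize(AccessRequest("u1", "clinician", True, True, "deploy_model", "imaging-model")))
```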

What is the future of cybersecurity for medical AI?

- The future includes AI-driven security solutions that proactively detect and mitigate threats.

- Stronger regulations will ensure that healthcare organizations prioritize cybersecurity.

- Proactive risk management will focus on preventing cyberattacks before they occur.

- Collaboration between healthcare providers, regulators, and technology developers will drive innovation in cybersecurity.