The Cybersecurity AI Arms Race: Staying Ahead of Automated Threats

Post Summary

The cybersecurity AI arms race refers to the competition between organizations and cybercriminals leveraging AI to outpace each other in developing advanced attack and defense mechanisms.

AI is used for threat detection, predictive analytics, automated responses, and identifying vulnerabilities in real time.

Risks include adversarial AI, where attackers use AI to bypass defenses, and the potential for AI systems to be exploited or manipulated.

Organizations can stay ahead by investing in advanced AI tools, fostering collaboration, and continuously updating their cybersecurity strategies.

Ethical AI ensures that AI systems are transparent, unbiased, and used responsibly to protect sensitive data and maintain trust.

Future trends include AI-driven automation, enhanced threat intelligence, and the integration of AI with quantum computing for stronger defenses.

The rise of AI in cybersecurity is reshaping how healthcare organizations defend against increasingly automated and sophisticated cyberattacks. Attackers now use AI to automate reconnaissance, craft convincing phishing scams, and adapt malware to bypass defenses. In 2025 alone, 33 million Americans were affected by healthcare data breaches, with ransomware attacks increasing by 30%. Legacy systems relying on outdated methods like signature-based detection are unable to keep pace with these evolving threats.

Healthcare systems face unique risks, as AI-driven attacks can disrupt critical patient care, cause medical errors, and exploit vulnerabilities in third-party vendors. To counter these challenges, solutions like Censinet RiskOps™ and Censinet AI™ provide tools for real-time risk management, vendor oversight, and AI governance. These platforms combine automation with human judgment, enabling faster risk assessments and compliance with regulations like HIPAA and FDA guidelines.

The stakes are high: unprepared systems can lead to financial losses, operational downtime, and even risks to patient safety. By integrating AI-driven defenses with structured governance, healthcare organizations can better protect sensitive data while ensuring uninterrupted care delivery.

How AI-Powered Cyber Threats Target Healthcare

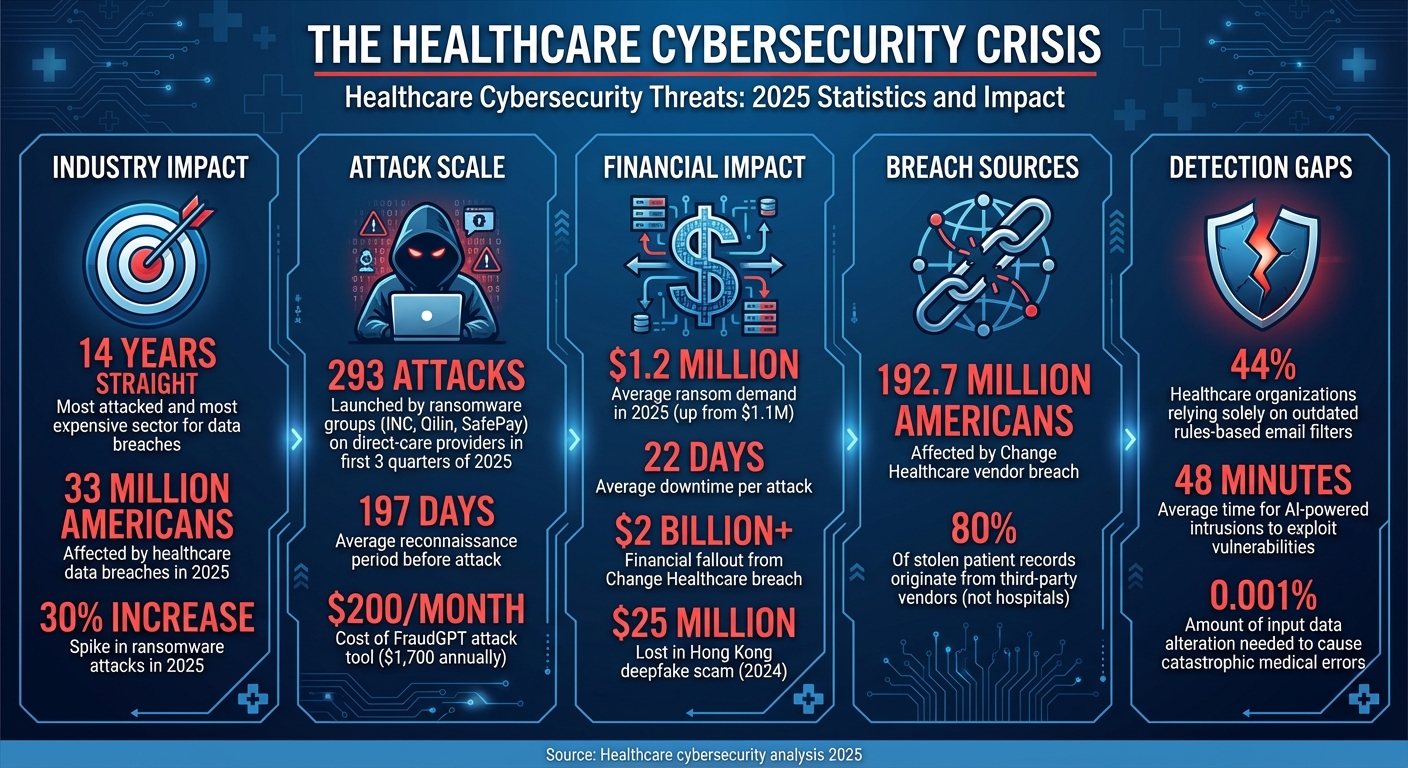

Healthcare Cybersecurity Threats: 2025 Statistics and Impact

Cybercriminals are increasingly using AI against healthcare systems, an industry that was already a prime target for cyberattacks. For 14 years straight, healthcare has been the most attacked sector and the most expensive for data breaches. In 2025 alone, 33 million Americans were affected by healthcare data breaches, while ransomware attacks on the industry spiked by 30% [3].

AI gives attackers tools to automate reconnaissance, tailor phishing scams, and adapt strategies on the fly. Tools like FraudGPT, available for $200 per month or $1,700 annually, make sophisticated attack capabilities more accessible [5]. As Security Magazine explains:

"Artificial intelligence (AI) is reshaping cybersecurity at a pace that few anticipated. It is both a weapon and a shield, creating an ongoing battle between security teams and cybercriminals" [5].

The damage caused by these attacks goes beyond stolen data. In one tragic case, a ransomware attack delayed critical care for a heart patient, forcing a transfer to another hospital 18 miles away. The patient died before receiving treatment, prompting German authorities to launch a manslaughter investigation into the cyberattack's role in the death [1]. This case underscores how devastating attacks on healthcare can be, and AI-driven automation raises the stakes further.

Automated Attack Methods Targeting Healthcare Systems

AI has revolutionized the way cyberattacks are carried out. Ransomware groups like INC, Qilin, and SafePay launched 293 attacks on direct-care providers in the first three quarters of 2025 alone [3]. These attacks often involve long reconnaissance periods averaging 197 days, allowing hackers to map out systems, locate vulnerabilities, and maximize their impact [3].

Generative AI plays a key role in phishing scams, creating emails that closely mimic legitimate communication. These AI-generated messages adopt specific writing styles and terminology, making them highly convincing. Amy Larson DeCarlo, Principal Analyst at Global Data, highlights the core issue:

"The biggest vulnerability within any organisation: humans" [5].

The sophistication of AI-driven scams was evident in early 2024, when a Hong Kong employee was duped during a video call featuring deepfake versions of his coworkers. This scam resulted in the transfer of $25 million to attackers [4].

AI-powered malware has also become more advanced, mimicking legitimate user behavior and modifying its actions to evade detection. The ECRI Institute identified AI as the top health technology hazard for 2025, emphasizing how adversarial attacks can cause catastrophic medical errors by altering as little as 0.001% of input data in medical AI systems [3]. Even chatbots are vulnerable, with prompt injection attacks leading to harmful advice, exposure of sensitive data, or the creation of false medical records [3].

Financial and Reputational Costs of AI-Driven Breaches

The financial and operational toll of these attacks on healthcare institutions is staggering. As attack methods become more advanced, the costs continue to rise. In 2025, the average ransom demand reached $1.2 million, up from $1.1 million the previous year [3]. However, ransom payments are just the tip of the iceberg. Each attack causes an average downtime of 22 days, disrupting operations and driving up costs [3].

The Change Healthcare data breach serves as a stark example of the vulnerabilities in healthcare supply chains. This single vendor compromise affected 192.7 million Americans, halting prescription processing and insurance claim submissions nationwide. Many healthcare providers had to revert to paper-based systems for weeks, with the financial fallout exceeding $2 billion due to delayed payments and operational disruptions [3]. Similarly, the Synnovis attack highlighted the long-term effects of breaches, as patient notifications continued for over six months while investigators uncovered the full extent of the compromise [3].

Third-party vendors have become a critical weak point in healthcare security. 80% of stolen patient records now originate from third-party vendors rather than hospitals themselves [3]. This trend reflects the growing industrialization of ransomware operations, where attackers systematically exploit the most vulnerable areas of healthcare's digital infrastructure [3].

Healthcare Vulnerabilities Exposed by Automated Attacks

The healthcare industry is grappling with aging infrastructure while facing a new breed of threats: machine-speed, AI-driven attacks. Unlike traditional security challenges, these attacks move at a pace far beyond human capabilities. AI-powered intrusions can identify weaknesses, adjust their tactics, and exploit vulnerabilities in less than 48 minutes on average [9]. Meanwhile, 44% of healthcare organizations still rely solely on outdated methods like rules-based email filters [5]. This reliance creates blind spots, leaving systems exposed to AI-generated threats that overwhelm static security tools. Shane Cox, Director of the Cyber Fusion Center at MorganFranklin Cyber, highlights the core issue:

"Static security measures that rely on signature-based detection... cannot keep pace with AI-powered cyberattacks, which constantly adapt and evade traditional defenses" [8].

These challenges underscore why older, reactive defenses are no match for the speed and sophistication of AI-enabled threats.

Legacy Systems and Outdated Infrastructure

Legacy systems in healthcare add another layer of vulnerability. These outdated technologies suffer from a critical limitation: they can only recognize threats they’ve encountered before. Most traditional tools depend on signature-based detection and predefined rules, which are ineffective against AI-driven attacks that constantly evolve [5] [7]. Compounding this, manual compliance processes are slow and prone to errors, leaving organizations exposed when rapid AI attacks strike.
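
To make the limitation concrete, here is a minimal sketch of exact-match signature checking. It is a deliberate simplification: real signature engines also use byte patterns and rule languages, and the payloads and the `KNOWN_BAD` set below are hypothetical. The point it illustrates is only that a detector keyed to previously seen samples misses a trivially altered variant.

```python
import hashlib

# Hypothetical signature database: SHA-256 digests of payloads seen before.
KNOWN_BAD = {hashlib.sha256(b"malicious-payload-v1").hexdigest()}

def signature_match(payload: bytes) -> bool:
    """Return True only if this exact payload has been catalogued before."""
    return hashlib.sha256(payload).hexdigest() in KNOWN_BAD

original = b"malicious-payload-v1"
variant = b"malicious-payload-v1 "  # one extra byte; assumed to behave the same

print(signature_match(original))  # True: the catalogued sample is caught
print(signature_match(variant))   # False: the altered copy slips through
```

An attacker who can regenerate variants automatically only needs to change one byte per attempt, which is why the article's sources argue for behavior-based and context-aware detection alongside signatures.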

Another glaring shortfall is the lack of context-awareness in legacy systems. They struggle to distinguish between genuine communications and AI-crafted impersonations that mimic organizational language with uncanny precision [5]. Health-ISAC, a key player in healthcare cybersecurity, warns:

"AI-driven cyberattacks outpace healthcare defenses, exposing vulnerabilities in aging systems and understaffed security teams, threatening patient safety" [6].

And the risks don’t stop at internal systems - AI also exploits weaknesses in the broader healthcare supply chain.

Third-Party and Supply Chain Risks Amplified by AI

Third-party vendors are a critical weak point in healthcare security. These vendors often operate with limited budgets and less robust defenses, yet they have extensive access to sensitive healthcare systems and patient data [10] [3]. Attackers leverage AI to pinpoint these vulnerabilities, launching data poisoning attacks through compromised foundation models or infiltrating vendor systems to breach multiple organizations at once [11] [3].

The problem doesn’t end with direct vendors. Fourth-party relationships - subcontractors and service providers used by primary vendors - create additional openings for AI-driven attacks. Traditional vendor management processes can’t keep up with the scale and speed of these risks. AI tools can map entire supply chain networks faster than security teams can audit them, leaving healthcare organizations struggling to close gaps in time.
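
As a rough illustration of what mapping a supply chain involves, the sketch below runs a breadth-first search over a small, entirely hypothetical dependency graph and groups parties by their distance from the healthcare organization. The vendor names and the `RELIES_ON` structure are assumptions for the example, not data from any real platform.

```python
from collections import deque

# Hypothetical vendor graph: each key lists the parties it directly relies on.
RELIES_ON = {
    "hospital": ["billing_vendor", "lab_vendor"],
    "billing_vendor": ["cloud_host", "payment_processor"],
    "lab_vendor": ["cloud_host"],
    "cloud_host": [],
    "payment_processor": [],
}

def parties_by_depth(graph, root):
    """Breadth-first search returning {party: shortest distance from root}."""
    depth = {root: 0}
    queue = deque([root])
    while queue:
        node = queue.popleft()
        for dep in graph.get(node, []):
            if dep not in depth:  # first visit is the shortest path in BFS
                depth[dep] = depth[node] + 1
                queue.append(dep)
    return depth

depths = parties_by_depth(RELIES_ON, "hospital")
third_parties = sorted(p for p, d in depths.items() if d == 1)
fourth_parties = sorted(p for p, d in depths.items() if d == 2)

print(third_parties)   # ['billing_vendor', 'lab_vendor']
print(fourth_parties)  # ['cloud_host', 'payment_processor']
```

In this toy graph, `cloud_host` is shared by both direct vendors, so a single fourth-party compromise would reach the hospital through two paths. That concentration is the kind of exposure a manual, vendor-by-vendor audit tends to miss.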

Using Censinet AI to Manage Cyber Threats

Healthcare organizations face a growing challenge: AI-driven cyberattacks are faster and more complex than traditional risk management methods were built to handle. The answer lies in combining automated risk intelligence with human expertise. This approach detects risks quickly while keeping human judgment central to decision-making. By integrating these tools into a unified platform, organizations can implement proactive defenses that respond to threats before they escalate. Here is how Censinet's platforms apply this approach.

Censinet RiskOps™ and Censinet AI™ are designed to streamline risk assessments across the healthcare ecosystem, including third-party vendors and supply chain partners.

Censinet RiskOps™: A Centralized Risk Management Platform

Censinet RiskOps™ tackles the complexities of healthcare cyber risk management by acting as a centralized hub for all risk-related activities. This platform automates workflows that used to take weeks or even months, providing real-time validation of evidence and continuous risk monitoring. It also offers full visibility into the healthcare supply chain, including the often-overlooked risks posed by fourth-party vendors. One standout feature is its ability to maintain a comprehensive inventory of all AI systems in use within an organization [2]. By consolidating risk data into an easy-to-navigate dashboard, RiskOps™ empowers security teams to stay ahead of emerging threats and respond effectively.

Censinet AI™: Speeding Up Risk Assessments with Artificial Intelligence

Building on the foundation of RiskOps™, Censinet AI™ enhances risk management by automating detailed assessments. This tool quickly processes vendor evidence and documentation, identifies key integration details, and highlights fourth-party risks, delivering concise and actionable risk reports. Such speed is critical in an environment where AI-powered intrusions can move from finding a weakness to exploiting it in under an hour [9].

Censinet AI™ combines automation with human oversight to handle tasks like evidence validation, policy creation, and risk mitigation [2]. Risk teams can customize rules and review processes to ensure automation complements their decision-making rather than replacing it. This approach aligns with healthcare regulations, such as HIPAA and FDA requirements, and adheres to frameworks like the NIST AI Risk Management Framework [2]. Additionally, Censinet AI™ ensures that key findings and AI-related risks are routed to the appropriate stakeholders, including AI governance committees, for review and approval. This balance of automation and human input provides both rapid insights and the regulatory compliance needed in the healthcare sector.

Balancing AI Automation with Human Oversight

AI can process data at incredible speeds, but it often operates as a "black box", making its decision-making process unclear [5]. This lack of transparency can lead to blind spots, potentially putting patient safety at risk. For example, AI might generate false positives, flagging legitimate medical communications and causing unnecessary delays in healthcare operations or compliance [5]. This is where human oversight becomes essential. By combining the speed of automation with human judgment, healthcare organizations can ensure that AI supports and enhances the expertise of risk management teams rather than causing unintended problems. This balance is crucial for addressing the complex challenges of healthcare cybersecurity.

Configurable AI with Human Control

Censinet AI™ offers risk management teams the ability to configure rules and review processes, giving them control over how automated findings are vetted. This approach directly addresses AI's limitations, such as its tendency to "hallucinate" or produce inaccurate results, and the ongoing need for supervised learning [7]. While AI takes care of time-intensive tasks like validating evidence, drafting policies, and mitigating risks, the final decision-making authority remains firmly in human hands [2]. The platform also ensures that critical findings and AI-related risks are routed to the appropriate stakeholders, such as AI governance committees, combining automation with expert oversight for a more reliable process.
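
The general pattern of configurable rules with human sign-off can be sketched as follows. This is not Censinet's implementation or API; the `Finding` fields, the `REVIEW_RULES` keys, and the queue names are all hypothetical. The sketch shows one design choice: the rules decide only which human queue sees a finding, and no branch approves anything automatically.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    summary: str
    severity: str         # "low", "medium", or "high" (hypothetical scale)
    ai_confidence: float  # 0.0 to 1.0, as reported by a hypothetical model

# Hypothetical, team-configurable review rules.
REVIEW_RULES = {
    "min_confidence_for_fast_track": 0.9,
    "always_escalate_severities": {"high"},
}

def review_queue(finding: Finding, rules=REVIEW_RULES) -> str:
    """Pick a human review queue; every return value is a queue for people."""
    if finding.severity in rules["always_escalate_severities"]:
        return "governance_committee"
    if finding.ai_confidence < rules["min_confidence_for_fast_track"]:
        return "analyst_full_review"
    return "analyst_fast_track"

print(review_queue(Finding("Vendor lacks MFA", "high", 0.99)))
print(review_queue(Finding("Policy gap", "low", 0.50)))
print(review_queue(Finding("Expired certificate", "low", 0.95)))
```

Severity is checked before confidence on purpose: a high-severity finding goes to the committee even when the model is very confident, since confident output can still be a hallucination.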

Meeting Healthcare Regulatory Requirements

Censinet AI™ is designed to align with major regulatory frameworks like HIPAA, FDA guidelines, and NIST standards [2]. It provides audit trails and explainable insights, which are essential for meeting rigorous compliance requirements. This transparency is particularly valuable for CISOs and compliance teams, as it helps them understand the reasoning behind risk-related decisions. Upcoming regulations like the EU AI Act, most of whose provisions apply from August 2026, place greater emphasis on ethical standards, auditability, and legal accountability, and tools like Censinet AI™ help healthcare organizations prepare for them [12]. By embedding compliance into its automation processes, the platform enables healthcare providers to scale their risk management efforts while keeping pace with evolving regulatory demands.

AI Governance for Continuous Risk Oversight

For healthcare organizations, AI governance isn't a "set it and forget it" task. With 92% of healthcare organizations experiencing cyberattacks in 2024 and AI listed as the #1 health technology hazard for 2025 by the ECRI Institute [3], the need for ongoing oversight has never been clearer. The challenge lies in developing governance frameworks that can keep up with fast-changing threats while managing AI systems across clinical applications, vendor networks, and supply chains. Creating resilient governance structures is the logical next step in advancing from basic threat detection to comprehensive risk management. This ongoing need for oversight is also shaping how integrated risk management solutions evolve.

In November 2025, the Health Sector Coordinating Council (HSCC) Cybersecurity Working Group unveiled its 2026 guidance for AI cybersecurity risk management. Drafted by an AI Cybersecurity Task Group that included 115 healthcare organizations, the guidance underscores the importance of formal governance processes. These processes define clear roles and responsibilities throughout the AI lifecycle [2]. Key recommendations include maintaining an up-to-date inventory of all AI systems, using a five-level autonomy scale to classify AI tools by risk, and aligning governance controls with regulations like HIPAA and FDA requirements.
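
A minimal sketch of what an AI inventory with an autonomy classification might look like is shown below. The guidance's actual level names and criteria are not given here, so the `Autonomy` levels are placeholders, and the system names, owners, and the assumption that more autonomy warrants earlier review are all hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional

class Autonomy(IntEnum):
    """Placeholder five-level scale; real definitions would come from the guidance."""
    LEVEL_1 = 1  # least autonomous
    LEVEL_2 = 2
    LEVEL_3 = 3
    LEVEL_4 = 4
    LEVEL_5 = 5  # most autonomous

@dataclass(frozen=True)
class AISystem:
    name: str
    owner: str                    # accountable team for this system
    autonomy: Autonomy
    vendor: Optional[str] = None  # None for in-house systems

# Hypothetical inventory entries.
INVENTORY = [
    AISystem("triage-chatbot", "clinical-informatics", Autonomy.LEVEL_2, "ExampleVendor"),
    AISystem("imaging-classifier", "radiology", Autonomy.LEVEL_4, "ExampleVendor"),
    AISystem("claims-summarizer", "revenue-cycle", Autonomy.LEVEL_1),
]

def review_order(inventory):
    """Sort most autonomous first (assumed here to mean highest priority)."""
    return sorted(inventory, key=lambda s: s.autonomy, reverse=True)

def at_or_above(inventory, level):
    """Names of systems whose autonomy meets or exceeds the given level."""
    return [s.name for s in inventory if s.autonomy >= level]

print([s.name for s in review_order(INVENTORY)])
print(at_or_above(INVENTORY, Autonomy.LEVEL_2))
```

Keeping the owner and vendor on each record ties the inventory back to the roles and third-party relationships that the governance process is meant to cover.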

AI Governance Committees and Task Assignment

Successful AI governance depends on seamless collaboration across multiple teams. Censinet RiskOps™ serves as a central hub, ensuring that critical findings and tasks reach the right people at the right time [2]. When AI-related risks are identified - whether tied to third-party vendors, clinical applications, or supply chain vulnerabilities - the platform automatically assigns tasks to designated reviewers, including members of AI governance committees.

This streamlined coordination allows cybersecurity teams, data scientists, clinical leaders, and compliance officers to work together using a single, unified system. Instead of juggling scattered spreadsheets or email threads, all AI-related policies, risk assessments, and remediation efforts are managed in one centralized platform. The system tracks progress and enforces accountability, which is essential given that 80% of stolen patient records originate from third-party vendors [3]. By automating task routing, the platform supports proactive strategies to address AI-driven threats.

Real-Time Dashboards for Risk Monitoring

Static reports can't keep up with dynamic threats. That’s why Censinet AI™ integrates real-time data into user-friendly dashboards, offering continuous visibility into AI risks across the healthcare ecosystem. These dashboards monitor vendor security, track fourth-party exposures, flag anomalies in clinical AI systems, and detect emerging threats before they escalate.

This real-time monitoring addresses a critical gap highlighted in the HSCC guidance: the need for "rapid containment and recovery of compromised models" and "secure and verifiable model backups" [2]. With these dashboards, organizations gain immediate insights, enabling them to act swiftly. For instance, instead of discovering vulnerabilities months later - like in the Change Healthcare breach that affected 192.7 million Americans - organizations can now detect and respond to threats in real time. This shift is crucial, especially when adversarial attacks manipulating just 0.001% of data can lead to catastrophic medical errors [3].

Conclusion

The race to outpace AI-driven cyber threats is heating up, especially as attackers target the healthcare sector with increasingly sophisticated automated attacks. Traditional methods like rules-based and signature detection simply can't keep up with the speed and complexity of these AI-enabled threats [5][8]. To address this, healthcare organizations need to rethink their cybersecurity strategies and adopt defenses that combine the speed of AI with the precision of human expertise [5].

As Great American Insurance Group aptly states, "AI can be a powerful tool, but it doesn't replace professional judgment, responsibility or oversight. Using it wisely means embedding risk management into every step of the design process" [13]. This highlights the need for AI systems in healthcare that not only detect and respond to threats at machine speed but also allow experienced professionals to make critical decisions when it matters most.

Censinet offers a compelling solution with its integrated platforms, Censinet RiskOps™ and Censinet AI™. These tools strike the right balance between automation and oversight, centralizing AI risk management across vendors, clinical applications, and supply chains. With real-time dashboards, they streamline processes like evidence validation and risk assessments, enabling security teams to focus on strategic oversight and high-priority decisions.

For healthcare organizations, adopting AI-powered defenses with structured governance and expert oversight is no longer optional - it's essential. This balanced approach of leveraging cutting-edge technology while maintaining professional judgment creates a resilient cybersecurity framework. It not only safeguards sensitive patient data but also ensures uninterrupted care delivery, keeping healthcare systems strong in the face of evolving AI threats.

FAQs

How is AI used to both strengthen and compromise cybersecurity in healthcare?

AI serves a dual purpose in the realm of healthcare cybersecurity. On one side, cybercriminals are leveraging AI to develop more sophisticated threats. These include automated phishing campaigns that adapt to targets, deepfake-driven social engineering that mimics real individuals, and AI-powered malware capable of evading traditional defenses. These tools make attacks more convincing, harder to spot, and quicker to execute.

On the flip side, AI is an essential tool for protecting healthcare systems. It empowers organizations with advanced threat detection, behavioral analysis, and real-time responses to potential breaches. Beyond that, AI can anticipate vulnerabilities, enabling proactive steps to neutralize risks before they escalate. When used strategically, AI helps healthcare providers stay one step ahead in the constantly shifting cybersecurity landscape.

What unique risks do AI-powered cyberattacks pose to healthcare systems?

AI-driven cyberattacks are creating serious hurdles for healthcare systems. Attackers are leveraging AI to quickly identify network vulnerabilities, exploit weaknesses in cloud-based tools like patient portals and telehealth platforms, and craft highly convincing phishing schemes with the help of generative AI. Some even go as far as using deepfake technology to manipulate individuals or interfere with AI-based decision-making systems.

The fallout from these attacks can be devastating: stolen patient data, altered medical records, disruptions to life-saving medical devices, and the ability to evade traditional security measures. To defend against these threats, healthcare organizations need to implement cutting-edge cybersecurity strategies designed to address the rapidly evolving risks posed by AI.

How can healthcare organizations effectively combine AI automation and human oversight in cybersecurity?

Healthcare organizations can successfully merge AI automation with human oversight by striking a thoughtful balance that utilizes the unique strengths of each. One key aspect is ongoing staff training. Teams need to not only understand how to use AI tools but also how to interpret the insights these tools provide. This ensures that employees are equipped to make informed decisions when working alongside AI systems.

Human oversight plays a critical role, especially in sensitive areas like patient data protection and incident response. By carefully reviewing AI-driven decisions in these contexts, organizations can catch potential errors or biases before they escalate into larger issues.

On top of that, having clear incident response plans in place and adopting advanced monitoring tools can help organizations detect and address threats early. When automation is paired with accountability, healthcare providers can bolster their defenses while retaining the human judgment necessary to navigate complex cybersecurity challenges.

Key Points:

What is the cybersecurity AI arms race?

The cybersecurity AI arms race is the ongoing competition between organizations and cybercriminals to leverage artificial intelligence (AI) for either defending against or launching cyberattacks. While organizations use AI to enhance threat detection and response, attackers exploit AI to create more sophisticated and evasive attacks.

How is AI transforming cybersecurity?

- Threat Detection: AI analyzes vast amounts of data to identify anomalies and potential threats in real time.

- Predictive Analytics: AI predicts potential vulnerabilities and attack vectors before they are exploited.

- Automated Responses: AI systems can autonomously respond to threats, reducing response times.

- Vulnerability Scanning: AI identifies weaknesses in systems and networks to prevent breaches.

What are the risks associated with AI in cybersecurity?

- Adversarial AI: Cybercriminals use AI to bypass security measures and create malware that evades traditional detection.

- Bias in AI Models: Poorly trained AI models can lead to false positives or negatives, compromising security.

- Exploitation of AI Systems: Attackers may manipulate AI systems to behave unpredictably or leak sensitive data.

How can organizations stay ahead in the AI arms race?

- Invest in Advanced AI Tools: Organizations should adopt cutting-edge AI solutions for proactive defense.

- Continuous Training: Regularly update AI models with the latest threat intelligence.

- Collaboration: Partner with industry leaders and governments to share insights and strategies.

- Ethical AI Practices: Ensure AI systems are transparent, unbiased, and secure.

What is the importance of ethical AI in cybersecurity?

Ethical AI ensures that AI systems are designed and used responsibly. This includes maintaining transparency, avoiding biases, and protecting sensitive data. Ethical AI fosters trust among users and stakeholders while ensuring compliance with regulations.

What are the future trends in AI and cybersecurity?

- AI-Driven Automation: Increased reliance on AI for automating threat detection and response.

- Enhanced Threat Intelligence: AI will integrate with global threat intelligence networks for better insights.

- Quantum Computing Integration: AI combined with quantum computing may enable stronger encryption and defense mechanisms.

- Adaptive AI Systems: AI systems will evolve to counteract emerging threats dynamically.