Cybersecurity's AI Transformation: From Detection to Prevention

Post Summary

Cyberattacks in healthcare are escalating rapidly, with ransomware incidents nearly doubling since 2022 and costing the industry $10.9 million in 2023 alone. The stakes are higher than ever, as breaches now threaten not just data but patient safety. Traditional methods can no longer keep up, leaving healthcare systems vulnerable to increasingly sophisticated threats.

AI is reshaping cybersecurity by shifting focus from reacting to breaches to preventing them. Here’s how AI is changing the game:

- Real-time threat detection: AI processes massive volumes of data instantly, identifying unusual activity and stopping threats before they escalate.

- Predictive analytics: By analyzing historical and real-time data, AI forecasts potential attacks, improving response times and reducing risks.

- Automated responses: AI isolates compromised devices and halts attacks within seconds, minimizing disruptions.

- Vendor risk management: AI monitors third-party systems continuously, identifying vulnerabilities across supply chains.

These tools are helping healthcare organizations protect sensitive data, ensure compliance, and maintain uninterrupted operations. However, AI's success depends on robust oversight, secure infrastructure, and collaboration between human expertise and automated systems. The future of healthcare cybersecurity lies in leveraging AI to stay ahead of evolving threats while prioritizing patient safety and trust.

AI-Driven Prevention: Core Concepts and Benefits

Reactive vs Proactive AI-Driven Cybersecurity in Healthcare

AI is reshaping healthcare cybersecurity by shifting the focus from reacting to breaches after they occur to preventing threats before they strike. This shift is built on three main capabilities: recognizing normal activity, predicting potential threats, and responding faster than human teams can. The results are impressive: AI-driven threat detection systems cut the time to identify incidents by an average of 98 days [4]. This means threats can be neutralized before they escalate, reducing the damage from breaches and strengthening overall operational resilience.

Behavioral Analytics and Anomaly Detection

AI algorithms excel at monitoring network traffic, user behavior, and device activity to spot anything out of the ordinary [2]. By learning what "normal" looks like, the system can quickly flag unusual patterns - like access at odd hours or unexpected device communications - allowing it to detect anomalies in real time [2].

What makes AI so effective is its ability to process massive amounts of data instantly, picking up on subtle warning signs that might escape human analysts. Once an anomaly is flagged, AI can take immediate action, isolating compromised accounts or devices in seconds. This kind of automated response shifts cybersecurity from a reactive scramble to a proactive defense that operates at machine speed.
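The baseline-then-flag idea can be sketched in a few lines. This is a minimal illustration rather than any vendor's implementation: it assumes a simple z-score test against historical values, and the traffic figures, the `build_baseline` and `is_anomalous` helpers, and the threshold of 3 standard deviations are all hypothetical. Production systems typically combine many signals and richer models.

```python
from statistics import mean, stdev

def build_baseline(history):
    """Summarize past observations as (mean, sample standard deviation)."""
    return mean(history), stdev(history)

def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value that sits more than `threshold` deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        # A perfectly flat history: any change at all is unusual.
        return value != mu
    return abs(value - mu) / sigma > threshold

# Hypothetical data: megabytes sent per hour by one connected device.
history = [12, 14, 11, 13, 12, 15, 13, 12, 14, 13]
baseline = build_baseline(history)

print(is_anomalous(13, baseline))   # a typical hour
print(is_anomalous(250, baseline))  # a sudden large transfer
```

A single-metric test like this is easy to explain to reviewers, which is one reason simple statistical baselines are often used alongside learned models.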

Predictive Models for Threat Anticipation

Predictive AI models are game-changers for anticipating threats like ransomware, phishing, and vendor risks. By analyzing historical data, current threat intelligence, and system vulnerabilities, these models can forecast potential attacks [5]. For instance, in May 2025, the HCAP model demonstrated 98% accuracy, reduced false positives by 25%, and saved organizations an average of $1.9 million [5][6].

These models also bolster Identity and Access Management by analyzing risks in real time for every login attempt, blocking access when something seems off. They enhance phishing detection by examining email tone, content, and structure, catching even the most sophisticated social engineering attempts. Additionally, they can immediately isolate compromised devices when ransomware is detected, stopping the spread before it starts [5].
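To make the per-login risk analysis concrete, here is a small sketch. It swaps the predictive model for a hand-written rule set, so it should be read as a stand-in for illustration only: the `LoginAttempt` fields, the weights, and the thresholds in `decide` are assumptions, not values from any cited system.

```python
from dataclasses import dataclass

@dataclass
class LoginAttempt:
    user: str
    hour: int            # 0-23, local time
    known_device: bool
    country: str

def risk_score(attempt, usual_hours=range(7, 20), usual_country="US"):
    """Add up hypothetical penalty points for each unusual signal."""
    score = 0
    if attempt.hour not in usual_hours:
        score += 30      # access at an odd hour
    if not attempt.known_device:
        score += 40      # device never seen for this user
    if attempt.country != usual_country:
        score += 30      # unexpected location
    return score

def decide(score):
    """Map a score to an action; thresholds are illustrative."""
    if score >= 70:
        return "block"
    if score >= 30:
        return "require_mfa"
    return "allow"

routine = LoginAttempt("nurse01", 10, True, "US")
odd = LoginAttempt("nurse01", 3, False, "RU")
print(decide(risk_score(routine)))
print(decide(risk_score(odd)))
```

A trained model would replace `risk_score`, but the surrounding shape (score each attempt, then map the score to allow, step-up, or block) stays the same.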

Reactive Detection vs. Proactive Prevention

Traditional cybersecurity methods focus on responding to breaches after they occur, while AI-driven systems aim to block threats before they cause harm. As Health Catalyst puts it:

"AI is not a silver bullet, but it is a powerful partner in defending healthcare organizations from an evolving threat landscape." [2]

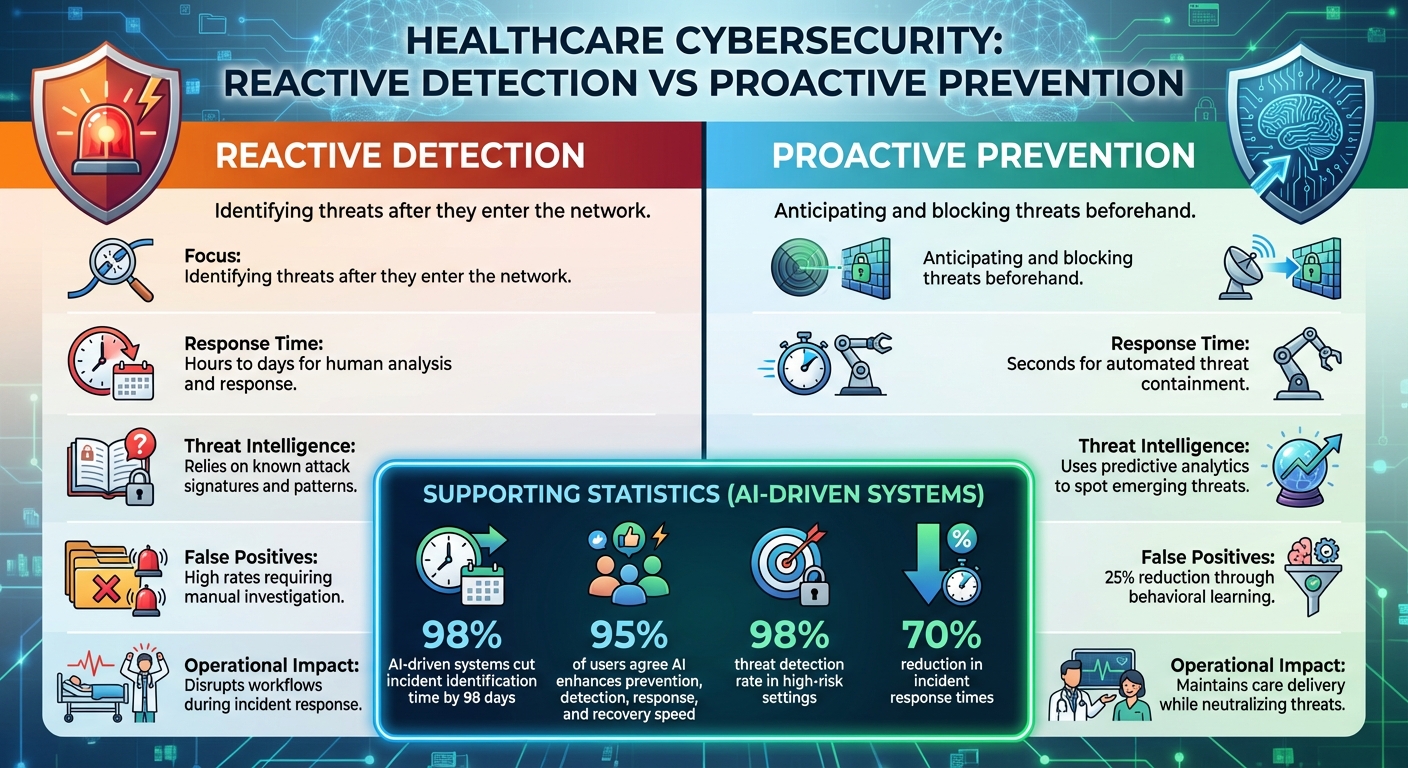

The table below outlines the key differences between these two approaches:

| Aspect | Reactive Detection | Proactive Prevention |
|---|---|---|
| Focus | Identifying threats after they enter the network | Anticipating and blocking threats beforehand |
| Response Time | Hours to days for human analysis and response | Seconds for automated threat containment |
| Threat Intelligence | Relies on known attack signatures and patterns | Uses predictive analytics to spot emerging threats |
| False Positives | High rates requiring manual investigation | 25% reduction through behavioral learning [6] |
| Operational Impact | Disrupts workflows during incident response | Maintains care delivery while neutralizing threats [2] |

The advantages of AI-driven systems are clear. They allow healthcare organizations to manage risks continuously and effectively. Supporting this shift, 95% of users agree that AI-powered cybersecurity tools enhance the speed and efficiency of prevention, detection, response, and recovery [5]. In high-risk settings, these systems have achieved a 98% threat detection rate and reduced incident response times by 70% [5]. Such advancements are transforming how healthcare organizations safeguard patient data while ensuring uninterrupted operations.

AI Applications in Healthcare Cybersecurity

AI is reshaping how healthcare organizations defend against cyber threats, offering tools that enhance speed, precision, and efficiency across the entire security lifecycle. From scanning vendor networks to isolating compromised medical devices, AI-powered solutions are becoming essential in fortifying the proactive frameworks discussed earlier.

Continuous Risk Assessment and Predictive Analytics

AI systems operate around the clock, monitoring vulnerabilities across vendors, medical devices, and cloud platforms. Unlike traditional periodic audits, these tools provide 24/7 scanning, prioritizing high-risk systems for immediate action, such as patching or enhanced monitoring [2]. They can identify unusual communication patterns among IoT and connected medical devices, flagging suspicious data transfers to external entities [2].

This continuous monitoring is crucial for compliance with regulations like HIPAA and NIST. AI automates compliance checks, reducing the need for manual intervention and ensuring organizations stay updated with changing regulatory standards [1]. It also customizes risk assessments to address each organization's specific vulnerabilities [1]. Scott Mattila from Health Catalyst highlights this shift:

"AI in healthcare cybersecurity is emerging as a game-changer, giving CISOs and IT teams the ability to detect threats earlier, respond faster, and safeguard patient data in ways previously impossible." [2]

By analyzing both historical and real-time data, AI-powered predictive analytics can anticipate potential breaches before they occur, enabling earlier risk identification and reducing the chances of disruptions in care delivery [2]. This proactive approach significantly bolsters patient safety measures [3].
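Prioritizing high-risk systems for patching, as described in this section, comes down to ranking assets by some risk score. The sketch below is one hedged way to do that: the `Asset` fields, the 1 to 5 criticality scale, and the 1.5 exposure multiplier are hypothetical choices for illustration, not a standard formula.

```python
from dataclasses import dataclass

@dataclass
class Asset:
    name: str
    cvss: float           # 0-10 severity of the worst known vulnerability
    criticality: int      # 1 (low) to 5 (life-critical), hypothetical scale
    internet_facing: bool

def priority(asset):
    """Severity times clinical criticality, weighted up if exposed."""
    exposure = 1.5 if asset.internet_facing else 1.0
    return asset.cvss * asset.criticality * exposure

def patch_queue(assets):
    """Return assets ordered from most to least urgent."""
    return sorted(assets, key=priority, reverse=True)

assets = [
    Asset("billing-portal", 7.5, 2, True),
    Asset("infusion-pump-gateway", 6.0, 5, False),
    Asset("lobby-kiosk", 9.0, 1, False),
]
for asset in patch_queue(assets):
    print(asset.name, priority(asset))
```

Note how the life-critical gateway outranks the kiosk despite a lower severity score; weighting by clinical impact is the point of tailoring assessments to each organization.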

Automated Threat Response and Incident Containment

AI-driven systems can respond to threats instantly, executing predefined playbooks without waiting for human approval. These tools can quarantine compromised devices, halt ransomware in its tracks, and disable suspicious accounts within seconds [1]. For healthcare organizations, where every second counts, AI-based threat detection works 85% faster than traditional methods [8].

This rapid response minimizes disruptions to critical healthcare operations by neutralizing attacks in real time [7]. For example, if suspicious activity is detected on a medical device, it can be isolated immediately to protect patient safety while the security team investigates. AI also reduces false positives, allowing teams to focus on credible threats and make quicker, more informed decisions [8]. Additionally, AI-driven investigations streamline triage processes, enabling organizations to allocate resources effectively and avoid wasting time on false alarms [7].

The result? Healthcare providers can continue delivering care uninterrupted, all while neutralizing cyber threats and avoiding the delays often associated with manual response processes.
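A predefined playbook can be pictured as a lookup from alert type to an ordered list of containment steps. The following sketch assumes hypothetical alert names and step names, and it only records the intended actions in an audit log; a real deployment would call network and identity tooling at that point.

```python
# Hypothetical playbooks: alert type -> ordered containment steps.
PLAYBOOKS = {
    "ransomware": ["isolate_device", "disable_account", "notify_soc"],
    "suspicious_login": ["disable_account", "notify_soc"],
    "device_anomaly": ["isolate_device", "notify_soc"],
}
DEFAULT_STEPS = ["notify_soc"]  # unknown alerts still reach a human

def run_playbook(alert_type, target, audit_log):
    """Record each containment step for the target and return the steps."""
    steps = PLAYBOOKS.get(alert_type, DEFAULT_STEPS)
    for step in steps:
        # Placeholder for the real action; this sketch only logs intent.
        audit_log.append({"step": step, "target": target})
    return list(steps)

audit_log = []
print(run_playbook("ransomware", "workstation-17", audit_log))
print(run_playbook("unknown_alert", "mri-console-2", audit_log))
print(len(audit_log))
```

Keeping an audit entry for every automated step makes it easier for the security team to review what was done while they investigate.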

Vendor and Third-Party Risk Management

AI's capabilities extend to managing external risks, an area of growing concern given that third-party vendors account for 80% of healthcare breaches [4]. AI tools automate vendor due diligence by monitoring data flow patterns for anomalies and maintaining real-time visibility into vendor-related security incidents [4].

These platforms also safeguard patient data by flagging unusual data transfers to external networks, a critical aspect of monitoring third-party interactions [2]. They assist healthcare organizations in complying with updated HIPAA Security Rule requirements, which mandate technical controls like encryption, multi-factor authentication, and network segmentation [4]. With organizations having a limited timeframe to update agreements, AI can streamline much of this compliance work [4].

Vendor-related breaches are often complex, involving multiple organizations - an average of seven per incident [4]. AI simplifies this complexity by identifying risks across the entire healthcare supply chain, including subcontractors and service providers used by primary vendors [4]. This continuous monitoring transforms vendor risk management from a periodic task into an ongoing, proactive defense strategy.
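Seeing risk across subcontractors starts with knowing who sits behind each primary vendor. As a rough sketch, the vendor relationships can be treated as a graph and walked breadth-first; the vendor names and the `supply_chain` helper below are hypothetical, and the example assumes the dependency map has already been collected.

```python
from collections import deque

def supply_chain(vendor_graph, start):
    """Return every downstream party mapped to its distance from `start`."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for sub in vendor_graph.get(current, []):
            if sub not in depth:          # skip parties already visited
                depth[sub] = depth[current] + 1
                queue.append(sub)
    del depth[start]                      # report only external parties
    return depth

# Hypothetical relationships: who relies on whom.
vendor_graph = {
    "hospital": ["ehr_vendor", "billing_vendor"],
    "ehr_vendor": ["cloud_host"],
    "billing_vendor": ["cloud_host", "print_shop"],
}
for party, level in supply_chain(vendor_graph, "hospital").items():
    print(party, level)
```

Parties at depth 2 or more are the subcontractors that a review limited to direct vendors would miss.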

Implementing AI for Cyber Defense in Healthcare

Healthcare organizations are facing a pressing need to strengthen their cybersecurity measures. With a staggering 92% of these organizations reporting cyberattacks in 2024 [4], the stakes couldn't be higher. To meet this challenge, a solid foundation of data management and system architecture is essential for successfully deploying AI-driven cybersecurity solutions.

Building the Foundation: Data and Architecture

For AI-powered cybersecurity to work effectively, healthcare systems need a centralized view of their data. This includes electronic health records, connected medical devices, cloud platforms, and third-party vendor networks [2]. Without this comprehensive visibility, AI tools struggle to detect patterns or anomalies that could indicate a threat. To protect the data feeding these AI models, organizations must implement advanced encryption, strict access controls, and reliable intrusion detection systems [9].

Legacy systems pose another significant challenge. These outdated technologies not only lack modern defenses but also complicate the AI training process and increase the attack surface [3][4]. Upgrading these systems should be a priority, alongside adopting Zero Trust architecture and microsegmentation to quickly contain potential threats [4]. Additionally, compliance with the HIPAA Security Rule demands technical measures such as multi-factor authentication and encryption, with a 240-day deadline for updating business associate agreements [4].

Securing AI training pipelines is equally critical. Even the smallest manipulation - altering just 0.001% of input data - can lead to catastrophic failures in medical AI systems [4]. To prevent this, healthcare organizations should employ cryptographic verification for training data, maintain detailed audit trails for any data changes, and separate training environments from production systems [4]. Cloud service providers also play a crucial role and must adhere to stringent security standards, with clearly defined responsibilities outlined in service agreements [9]. With these measures in place, AI can better anticipate and neutralize cyber threats.
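One simple form of cryptographic verification for training data is a hash manifest: fingerprint every file when it is approved, then recheck before each training run. The sketch below uses SHA-256 from Python's standard library; the file names and the `build_manifest` and `find_tampered` helpers are hypothetical, and it assumes the manifest itself is stored somewhere attackers cannot modify (for example, signed or kept outside the training environment).

```python
import hashlib
import hmac

def fingerprint(data: bytes) -> str:
    """SHA-256 digest of a file's bytes, as a hex string."""
    return hashlib.sha256(data).hexdigest()

def build_manifest(records):
    """Map each approved file name to its fingerprint."""
    return {name: fingerprint(blob) for name, blob in records.items()}

def find_tampered(records, manifest):
    """List files that were added, removed, or altered since approval."""
    suspect = set(records) ^ set(manifest)        # added or missing files
    for name in set(records) & set(manifest):
        current = fingerprint(records[name])
        if not hmac.compare_digest(current, manifest[name]):
            suspect.add(name)                     # contents changed
    return sorted(suspect)

# Hypothetical training files held in memory for the example.
records = {"scans.csv": b"1,2,3", "labels.csv": b"0,1,0"}
manifest = build_manifest(records)

records["labels.csv"] = b"0,1,1"                  # a one-character change
print(find_tampered(records, manifest))
```

Because any change to the bytes changes the digest, even a very small alteration is caught, and the list of flagged files can feed the audit trail.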

Human-in-the-Loop Operations

While AI excels at automating threat detection and response, human oversight is still essential in security operations [2]. Security teams must review AI-generated alerts, make nuanced decisions about complex threats, and ensure that automated actions align with clinical priorities. This collaboration between human expertise and AI speed enhances strategic responses, emphasizing proactive threat prevention.

This approach - known as human-in-the-loop - also prevents over-reliance on automation. It allows security teams to scale operations effectively while maintaining control over critical decisions. Robustness testing for AI models is another key step, ensuring they perform reliably under adversarial conditions, not just in clinical scenarios [4]. By using configurable rules and review processes, security teams can fine-tune automation to support, rather than replace, essential decision-making.
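Configurable review rules can be as simple as a gate in front of the automated actions. The sketch below is one possible shape, under stated assumptions: the `ProposedAction` fields, the 0.95 confidence cutoff, and the rule that anything touching a life-critical device waits for a person are all hypothetical settings a security team would tune.

```python
from dataclasses import dataclass, field

AUTO_APPROVE_CONFIDENCE = 0.95  # hypothetical cutoff, set by the security team

@dataclass
class ProposedAction:
    action: str
    target: str
    confidence: float     # model confidence between 0 and 1
    life_critical: bool   # does the target directly support patient care?

@dataclass
class ReviewQueue:
    pending: list = field(default_factory=list)
    executed: list = field(default_factory=list)

    def submit(self, proposal):
        """Run low-risk actions immediately; hold the rest for a human."""
        if proposal.life_critical or proposal.confidence < AUTO_APPROVE_CONFIDENCE:
            self.pending.append(proposal)
            return "needs_review"
        self.executed.append(proposal)
        return "auto_executed"

    def approve(self, proposal):
        """A reviewer releases a held action."""
        self.pending.remove(proposal)
        self.executed.append(proposal)

queue = ReviewQueue()
kiosk = ProposedAction("isolate_device", "lobby-kiosk", 0.99, False)
ventilator = ProposedAction("isolate_device", "ventilator-4", 0.99, True)
print(queue.submit(kiosk))
print(queue.submit(ventilator))
queue.approve(ventilator)
print(len(queue.executed))
```

Routing by clinical impact rather than by confidence alone keeps automation fast for routine containment while leaving decisions that could affect care with the people accountable for them.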

Integration with Censinet RiskOps™

Combining human oversight with advanced platforms like Censinet RiskOps™ takes cyber defense to the next level. This platform acts as a central hub for managing AI-driven risk, vendor assessments, and continuous monitoring in healthcare organizations. Its Censinet AI™ feature streamlines third-party risk assessments by enabling vendors to complete security questionnaires in seconds. It then summarizes evidence, documentation, and key findings into comprehensive risk reports.

Censinet RiskOps™ also ensures that critical AI risks and findings are routed to the right stakeholders for review. With an intuitive AI risk dashboard providing real-time insights, organizations gain clear visibility into all AI-related policies, risks, and tasks. This combination of human oversight and automated efficiency helps risk teams scale their operations while ensuring patient safety and uninterrupted care delivery.

Ethical and Future Considerations for AI in Cybersecurity

Managing Ethical and Legal Challenges

The use of AI in healthcare cybersecurity brings up some tough ethical questions, particularly around data privacy, algorithmic bias, and transparency. When AI systems handle sensitive health data to detect potential threats, there’s always the risk of inadvertently exposing patient information. This becomes even more complex when you factor in interconnected systems, third-party vendors, and outdated infrastructures, all of which make data governance harder to manage. Tackling these issues is essential for building effective, AI-driven prevention strategies.

One major concern is bias in AI models. If the datasets used to train these systems are skewed, the AI might fail to recognize threats impacting specific populations, leaving security gaps and creating ethical dilemmas. To address this, organizations should have governance frameworks in place that continuously monitor AI decisions. Regular audits can help identify and correct biases. Transparency is equally important - security teams need to clearly communicate how AI systems make decisions about suspicious activities or vendor risks.

AI can also streamline compliance with ever-changing regulations by automating checks and processes [1]. However, as these systems evolve, organizations must ensure that patients are kept in the loop. Updated informed consent practices are critical so individuals fully understand how their data is being used [3].

These ethical and legal challenges are shaping the future of AI in cybersecurity, setting the foundation for new approaches and strategies.

Emerging Trends in AI Cybersecurity

As ethical considerations guide the use of AI, new trends are emerging that are reshaping the healthcare cybersecurity landscape. The urgency of these changes is clear - cybersecurity breaches have skyrocketed by 97% year-over-year, with one breach at Change Healthcare alone impacting 100 million individuals [10]. This surge highlights why the role of Chief Information Security Officers (CISOs) in healthcare is expanding. It’s no longer just about managing technology; CISOs now need to oversee AI, cloud computing, and even software used in medical devices across the entire healthcare ecosystem.

The integration of cyber-physical systems, IoT, cloud computing, and AI is redefining both clinical workflows and security strategies. This shift, often referred to as Industry 4.0, means cybersecurity can’t just be about reacting to incidents anymore. Instead, organizations must focus on proactive prevention and ensuring business continuity. At the same time, adversarial AI is emerging as a major challenge. Cybercriminals are leveraging AI to design more advanced attacks, pushing defenders to create AI systems that can withstand these evolving threats.

Healthcare organizations also need to prepare for upcoming AI-specific regulations. As clinical and cybersecurity risks become increasingly linked, there’s a growing need for unified governance approaches that address both areas together. This convergence demands a broader, more strategic view of how AI can protect not just data, but also patient safety and trust.

Conclusion

The shift from reacting to threats to actively preventing them is reshaping healthcare cybersecurity. This new approach focuses on safeguarding patient data and ensuring uninterrupted care. AI-driven solutions play a key role in addressing vulnerabilities within legacy systems, connected devices, and telehealth platforms, offering a more robust defense against potential breaches [2].

By using tools like predictive analytics, behavioral anomaly detection, and automated threat responses, organizations can identify and neutralize risks before they escalate. This marks a significant evolution in cybersecurity, turning it into a proactive strategy rather than just a defensive measure.

However, technology alone isn’t enough. While AI brings technical capabilities to the table, its success hinges on human involvement. Effective implementation requires blending advanced AI tools with human expertise to ensure ethical use, proper oversight, and a multi-layered defense that adapts to new challenges. Healthcare organizations must incorporate AI into their Security Operations Centers (SOCs) while training teams to interpret and act on AI-generated insights. This approach ensures that automation enhances decision-making rather than replacing it entirely [2].

Censinet RiskOps™ exemplifies this proactive shift by streamlining risk management across vendors and devices. Its AI-powered features improve risk assessments, automate evidence validation, and enhance workflows for Governance, Risk, and Compliance (GRC) teams. By centralizing third-party risk management, enterprise vulnerabilities, and AI-related policies, Censinet RiskOps™ equips healthcare leaders to minimize risks efficiently while maintaining the human oversight needed for safe and compliant operations.

The future of healthcare cybersecurity lies in staying ahead of threats through intelligent automation, ongoing risk evaluations, and collaborative defense strategies. This balance of AI-driven efficiency and expert human oversight represents a forward-thinking approach to protecting both patient data and safety in an increasingly digital healthcare environment.

FAQs

How does artificial intelligence enhance cybersecurity in healthcare?

Artificial intelligence is transforming healthcare cybersecurity by offering faster and more precise threat detection compared to older methods. It works around the clock, scanning networks for unusual activity and spotting subtle patterns that could signal potential risks. By leveraging predictive analytics, AI can even anticipate vulnerabilities before they escalate into serious problems.

One of AI's standout features is its ability to deliver real-time threat responses, which helps block unauthorized access, fend off malware attacks, and minimize data breaches. It also plays a crucial role in evaluating third-party risks and bolstering the security of Internet of Medical Things (IoMT) devices. This ensures that healthcare organizations remain a step ahead of emerging cyber threats.

What ethical challenges arise when using AI to enhance healthcare cybersecurity?

The integration of AI into healthcare cybersecurity introduces a range of ethical challenges that demand careful consideration. One key priority is ensuring clarity in how AI systems operate, particularly when tasked with safeguarding sensitive patient information. Protecting privacy and implementing strong data security protocols are non-negotiable to prevent potential breaches or the misuse of confidential data.

Another critical concern is addressing algorithmic bias to guarantee fairness and impartiality in outcomes. To mitigate risks, human oversight should remain a constant presence, ensuring that AI-driven decisions are validated and any unintended consequences are promptly addressed. Equally important is establishing clear responsibility for the actions and decisions made by AI systems. This not only helps build trust but also reinforces ethical standards in healthcare cybersecurity.

How does AI improve vendor and third-party risk management in healthcare?

AI plays a key role in improving vendor and third-party risk management within healthcare by keeping a constant watch on external systems and devices. This ongoing monitoring helps spot vulnerabilities as they happen, offering real-time insights that can prevent potential security issues from turning into full-blown breaches.

By leveraging predictive analytics, AI can even anticipate risks before they materialize, giving organizations a much-needed edge in combating cyber threats. Beyond detection, AI simplifies the entire risk assessment process by automating evaluations of vendor security. This streamlines operations, making assessments faster and more efficient. The result? A stronger cybersecurity posture with less time and fewer resources spent managing third-party risks.