The Diagnostic Revolution: How AI is Changing Medicine (And the Risks Involved)

Post Summary

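How is AI transforming medical diagnostics across clinical specialties?

By boosting accuracy, detecting disease earlier, and speeding workflows in radiology, pathology, genomics, and ophthalmology.

What are the top benefits of diagnostic AI for healthcare providers?

More precise imaging analysis, faster detection, personalized treatments, and reduced false negatives.

What cybersecurity risks does diagnostic AI introduce?

PHI exposure, adversarial manipulation, ransomware, third‑party vendor breaches, and model poisoning.

What ethical and bias challenges affect AI-driven diagnostics?

Biased data leads to unequal accuracy across populations, misdiagnoses, and unreliable predictions.

How do regulatory frameworks address AI in diagnostics?

FDA oversight, HIPAA compliance, adaptive algorithm monitoring, and disclosure requirements for AI involvement.

How does Censinet RiskOps™ support safe diagnostic AI deployment?

It accelerates vendor assessments, centralizes AI risk data, enables human-in-the-loop governance, and monitors risks in real time.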

AI is transforming medical diagnostics by improving accuracy, speeding up processes, and reducing costs. Tools like imaging analysis, predictive models, and genetic insights are helping detect diseases earlier and more precisely. For example, AI in radiology can identify subtle abnormalities in images, while in genomics, it aids in creating personalized treatments. These advancements could save the U.S. healthcare system up to $360 billion annually. However, challenges like cybersecurity risks, biased algorithms, and regulatory hurdles must be addressed. To safely integrate AI, healthcare organizations need strong governance, human oversight, and continuous monitoring to ensure patient safety and trust.

How AI is Transforming Medical Diagnostics

AI is reshaping how diseases are detected and diagnosed. Its ability to process massive amounts of data with precision is changing the landscape of diagnostics, improving patient outcomes while also presenting new challenges. By spotting patterns that might escape human eyes, AI is making waves in three key areas: radiology, pathology and genomics, and ophthalmology.

AI in Radiology: Advancing Image Analysis

AI is making a big impact in radiology by analyzing medical images with impressive accuracy. It can pick up on subtle patterns and irregularities that might be missed by even the most experienced radiologists [2]. By automating repetitive tasks like image segmentation and measurements, AI frees up radiologists to focus on more complex cases [2]. This boost in efficiency allows radiology teams to manage the increasing demand for imaging without compromising on the quality of care [3]. With aging populations and rising diagnostic needs, this technology is becoming an essential tool for healthcare systems.

AI in Pathology and Genomics: Improving Disease Detection

In pathology, AI pairs with digital tools to transform traditional methods. Glass slides are now converted into high-resolution images, which AI algorithms can analyze to identify tissue structures, detect abnormalities, measure biomarkers, classify tissues, and even grade tumors with remarkable consistency. This level of precision helps pathologists make more accurate diagnoses and recommend better treatment options.

When it comes to genomics, AI teams up with next-generation sequencing (NGS) to provide detailed insights into the genetic, transcriptomic, and proteomic aspects of diseases. These insights empower doctors to create personalized treatment plans tailored to each patient’s unique genetic makeup, a particularly impactful approach in cancer care.

AI in Ophthalmology: Detecting Eye Diseases Early

AI is also making strides in ophthalmology, especially in the early detection of eye diseases. AI-powered retinal screening tools can analyze vast datasets of retinal scans to identify conditions like diabetic retinopathy, glaucoma, and age-related macular degeneration [4]. By catching these issues early, patients can receive timely treatment, improving outcomes and reducing healthcare costs.

But the benefits don’t stop there. AI-assisted imaging in ophthalmology is also being explored as a way to detect early signs of neurological conditions. By identifying subtle changes in retinal images, routine eye exams could become a window into broader health concerns, turning them into powerful diagnostic opportunities.

The Benefits of AI in Diagnostics

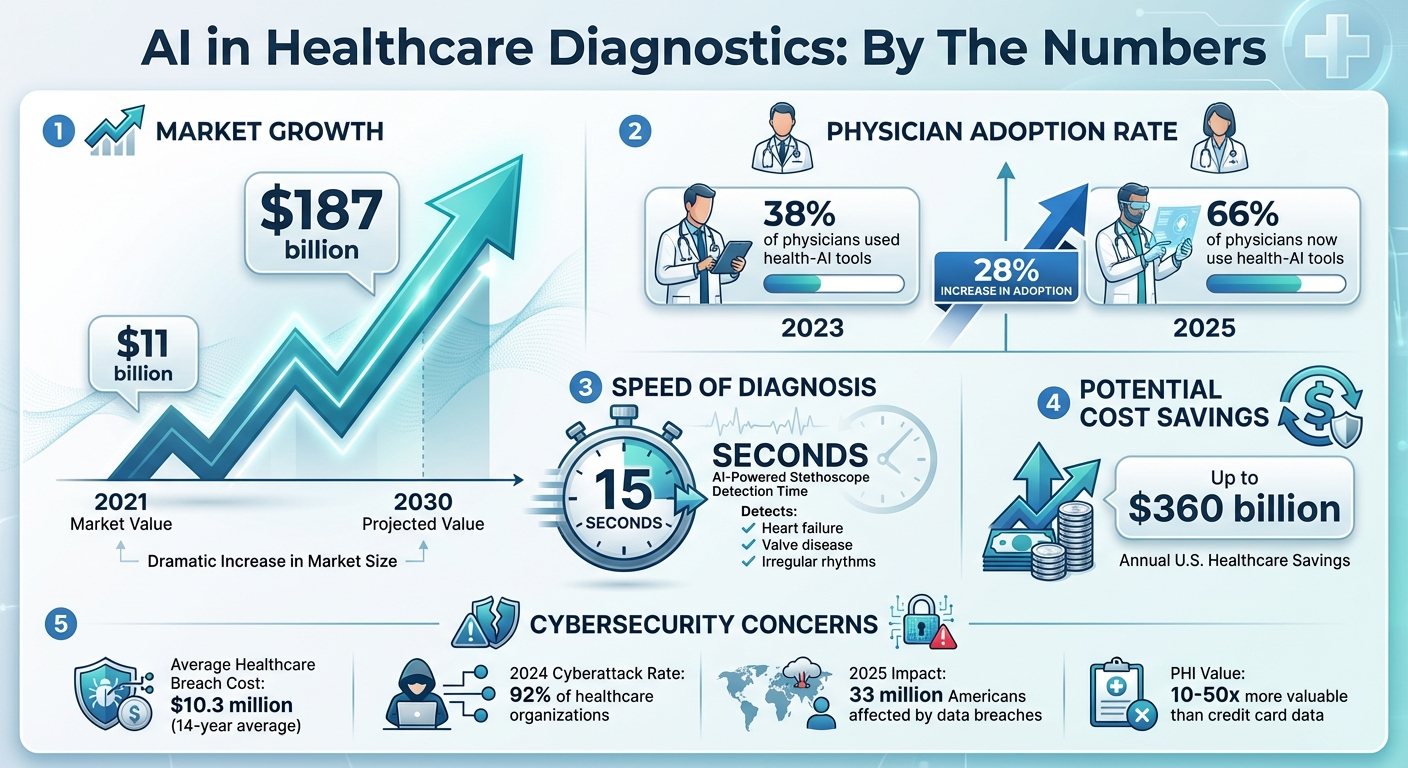

Diagnostics: Key Statistics and Market Growth 2021-2030

AI in diagnostics is more than just a technological milestone; it's reshaping patient care, streamlining operations, and delivering measurable financial benefits for healthcare systems. As its use grows, the real-world advantages are becoming increasingly evident.

Better Diagnostic Accuracy

AI brings a new level of precision to diagnostics by leveraging advanced algorithms to process vast amounts of medical data with incredible accuracy [5]. These systems can identify intricate patterns, subtle anomalies, and even the smallest irregularities in medical images and datasets - details that might escape even the most experienced human eyes [6][7]. By providing consistent, data-driven insights, AI reduces diagnostic inconsistencies and enhances reliability [6][7]. Its predictive capabilities are particularly powerful, enabling earlier detection of diseases by analyzing historical data and identifying trends or risk factors before conditions escalate [6][7]. This early intervention means patients can receive timely treatments when they are most effective, leading to better outcomes and, in many cases, saving lives. These advancements also contribute to more efficient diagnostic workflows.

Faster Diagnosis and Workflow Efficiency

In healthcare, speed is critical, and AI is making a tangible difference. By handling large volumes of imaging data in real time, AI systems can quickly identify subtle abnormalities and automate routine tasks. This not only improves productivity but also reduces patient wait times [8][2][9]. For instance, in August 2025, researchers at Imperial College London introduced an AI-powered stethoscope capable of detecting heart failure, valve disease, and irregular rhythms in as little as 15 seconds by combining ECG data with heart sound analysis [9]. The growing adoption of such tools is reflected in the AI healthcare market, which was valued at $11 billion in 2021 and is expected to reach nearly $187 billion by 2030 [9]. Additionally, a 2025 AMA survey revealed that 66% of physicians now use health-AI tools, a significant jump from 38% in 2023 [9]. This rapid adoption highlights how AI is transforming workflows across the medical field.
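To put the market figures above in perspective, the growth rate they imply can be worked out directly. The snippet below is only back-of-the-envelope arithmetic on the two quoted values; the helper name `cagr` is ours, and the calculation assumes smooth compound growth over the nine years from 2021 to 2030.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values that are `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: $11 billion (2021) and nearly $187 billion (2030).
implied_growth = cagr(11, 187, 2030 - 2021)
print(f"Implied annual growth: {implied_growth:.1%}")
```

That works out to roughly 37% growth per year, compounding for close to a decade.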

Cost Savings and Economic Impact

The economic advantages of AI in diagnostics are just as compelling as its clinical benefits. By reducing errors and enabling early disease detection, AI helps avoid costly treatments for advanced conditions [10][12][13]. Early intervention not only improves patient outcomes but also lowers healthcare expenses. Hospitals benefit from AI-driven operational efficiencies, such as optimized scheduling, staffing, and resource allocation, all of which contribute to reduced operational costs [11][12][14]. AI can also help reduce avoidable patient readmissions, which can otherwise trigger financial penalties under Medicare [12]. A notable example of expanding access is the 2025 pilot program in Telangana, India, where AI-based screenings for oral, breast, and cervical cancers are being deployed to address radiologist shortages and improve early detection [9]. This initiative shows how AI can expand diagnostic access to underserved communities while keeping costs under control. These financial benefits make the integration of AI in diagnostics not just a medical necessity but also a smart economic choice. However, as these technologies advance, ensuring robust cybersecurity and ethical governance remains essential.

Risks and Challenges in AI-Driven Diagnostics

AI has the potential to transform medical diagnostics, but it also brings a host of risks that healthcare organizations must confront. These challenges not only threaten patient safety but can also result in steep financial repercussions.

Cybersecurity Risks and Data Vulnerabilities

The healthcare sector is a prime target for cyberattacks, with breach costs averaging $10.3 million over the past 14 years. In 2024 alone, 92% of healthcare organizations reported experiencing cyberattacks, and by 2025, data breaches had impacted 33 million Americans. Why is healthcare such a lucrative target? Protected health information (PHI) is highly valuable, fetching 10 to 50 times more than credit card data on dark web marketplaces. Alarmingly, 80% of stolen patient records now come from breaches involving third-party vendors rather than hospitals directly.

AI-driven diagnostic systems add another layer of complexity. Even the tiniest adversarial attacks - altering just 0.001% of input data - can cause catastrophic errors. The ECRI Institute has flagged AI as the top health technology hazard for 2025. The situation is further complicated by a 30% surge in healthcare ransomware attacks in 2025, with 293 incidents targeting direct-care providers in just the first three quarters of the year. The interconnected nature of AI systems, spanning cloud platforms, medical devices, and third-party integrations, creates numerous vulnerabilities, including data poisoning and model inversion attacks [15].
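To make the idea of an adversarial attack concrete, here is a minimal sketch in plain Python. It uses a toy linear scorer, not a real diagnostic model, and every weight, input value, and threshold is invented for illustration. The perturbation follows the sign-of-gradient idea behind the fast gradient sign method: each input is nudged by a tiny amount in the direction that lowers the score.

```python
# Toy illustration only: a hypothetical linear "abnormality" scorer.

def score(weights, pixels, bias):
    """Linear score; a positive value is read as 'abnormal'."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias


def sign(x):
    return (x > 0) - (x < 0)


def perturb(weights, pixels, epsilon):
    """Shift each input by at most epsilon in the direction that lowers the score.

    For a linear model the gradient of the score with respect to the input
    is simply the weight vector, so the step is epsilon * sign(weight).
    """
    return [p - epsilon * sign(w) for w, p in zip(weights, pixels)]


weights = [0.8, -0.5, 0.3, 0.9]      # hypothetical model weights
pixels = [0.52, 0.40, 0.10, 0.05]    # hypothetical normalized intensities
bias = -0.28

original = score(weights, pixels, bias)
adversarial_pixels = perturb(weights, pixels, epsilon=0.01)
adversarial = score(weights, adversarial_pixels, bias)

print("original reads as abnormal:", original > 0)
print("perturbed reads as abnormal:", adversarial > 0)
```

No input moves by more than 0.01, yet the borderline case crosses the decision threshold. Real imaging models are far more complex, but this is the kind of weakness that adversarial testing is designed to expose.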

Bias and Ethical Concerns in AI Models

AI models are only as good as the data they’re trained on, and when that data is biased, the results can lead to inequities in healthcare. These biases often arise from unrepresentative demographic data, limited sampling from specific populations, or flawed assumptions during the algorithm's development. This can result in skewed outcomes that disproportionately affect certain groups.

Adding to the problem is the "black box" nature of many AI systems, which makes it difficult for clinicians and patients to understand how diagnostic conclusions are reached. This lack of transparency can erode trust. Studies have also highlighted significant gaps in the demographic representation of training data for FDA-approved AI/ML devices [16][17][18][20][22][23]. To address these issues, healthcare organizations need to validate AI systems across diverse populations, monitor for disparities in outcomes, and adopt explainable AI frameworks with strong human oversight. Tackling bias is critical to ensure AI enhances diagnostic precision rather than perpetuating existing inequities.
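Monitoring for disparities can start with something as simple as comparing sensitivity across groups. The sketch below assumes a validation set labeled with a demographic group, the confirmed diagnosis, and the model's output. The group names, the records, and the helper names are all hypothetical.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: iterable of (group, has_disease, model_flagged) tuples.

    Returns {group: sensitivity}, computed over the truly positive cases
    in each group (true positives / all positives).
    """
    true_positives = defaultdict(int)
    positives = defaultdict(int)
    for group, has_disease, model_flagged in records:
        if has_disease:
            positives[group] += 1
            if model_flagged:
                true_positives[group] += 1
    return {g: true_positives[g] / positives[g] for g in positives}


def max_gap(rates):
    """Largest difference in a metric between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical validation data: (group, has_disease, model_flagged)
records = (
    [("group_a", True, True)] * 9 + [("group_a", True, False)] * 1
    + [("group_b", True, True)] * 6 + [("group_b", True, False)] * 4
    + [("group_a", False, False)] * 5 + [("group_b", False, True)] * 2
)

rates = sensitivity_by_group(records)
gap = max_gap(rates)
print(rates, f"gap={gap:.2f}")
```

A gap of this size (the model misses four in ten positive cases in one group but only one in ten in the other) is the kind of finding a governance committee would want to see before deployment. A fuller audit would also compare specificity, calibration, and confidence intervals.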

Beyond the ethical concerns, navigating the regulatory landscape adds another layer of challenge.

Regulatory and Compliance Challenges

The rapid evolution of AI in healthcare has outpaced existing regulatory frameworks, creating hurdles for its adoption. Many AI/ML diagnostic tools are classified as medical devices under the Federal Food, Drug, and Cosmetic Act and therefore need FDA marketing authorization (510(k) clearance, De Novo classification, or premarket approval, depending on risk). However, traditional regulatory processes often struggle to accommodate adaptive AI systems that continuously learn and evolve. To address this, the FDA has introduced Predetermined Change Control Plans (PCCPs), which let a manufacturer describe planned model updates in advance so they can be reviewed as part of the original submission.

At the same time, HIPAA compliance has grown increasingly complicated as AI tools handle vast amounts of sensitive patient data. Healthcare organizations must secure cloud-based platforms, prevent the re-identification of de-identified data, and ensure that AI systems access only the PHI necessary for their function. Transparency is also a key requirement - organizations must disclose AI's role in diagnostics to maintain trust and accountability.
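One common way to enforce "only the PHI necessary" is a per-purpose allow-list applied before any record reaches an AI tool. The sketch below is illustrative: the field names, the `retinal_screening` purpose, and the `minimum_necessary` helper are assumptions, and stripping direct identifiers this way does not by itself amount to HIPAA de-identification.

```python
# Hypothetical allow-list: which fields each AI use case may receive.
ALLOWED_FIELDS = {
    "retinal_screening": {"study_id", "image_uri", "age_band", "diabetes_status"},
}


def minimum_necessary(record: dict, purpose: str) -> dict:
    """Return only the fields approved for the given purpose."""
    allowed = ALLOWED_FIELDS.get(purpose)
    if allowed is None:
        # Fail closed: an unregistered use case receives no data at all.
        raise ValueError(f"no field allow-list defined for purpose {purpose!r}")
    return {field: value for field, value in record.items() if field in allowed}


# Hypothetical patient record
patient_record = {
    "study_id": "S-1042",
    "name": "Jane Doe",
    "ssn": "000-00-0000",
    "image_uri": "pacs://retina/S-1042.png",
    "age_band": "60-69",
    "diabetes_status": "type_2",
    "home_address": "1 Example Street",
}

payload = minimum_necessary(patient_record, "retinal_screening")
print(sorted(payload))
```

Using an allow-list rather than a block-list means a newly added field stays out of the AI pipeline until someone explicitly approves it.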

Failure to meet these standards can open organizations to legal risks, such as penalties under the False Claims Act if AI inaccuracies lead to billing errors or diagnostic mistakes [18][19][20][21][23]. Robust human oversight remains essential to ensure that AI complements, rather than replaces, clinical judgment. These challenges highlight the need for comprehensive risk management strategies as AI becomes more integrated into healthcare.

Strategies for Safe AI Adoption in Healthcare

Healthcare organizations face unique challenges when integrating AI into diagnostics. Traditional cybersecurity practices often fall short in addressing the sophisticated threats posed by cybercriminals, who use AI for phishing, voice cloning, deepfakes, and data manipulation [24]. To stay ahead, healthcare providers must shift from reactive defenses to proactive governance strategies right from the start.

Here’s how organizations can effectively manage AI risks.

Collaborative Risk Management with Censinet RiskOps™

Effective AI risk management starts with a clear view of potential vulnerabilities across the entire ecosystem. Censinet RiskOps™ offers a centralized platform that consolidates real-time data on third-party and enterprise AI risks into a single, easy-to-navigate dashboard.

This system, powered by Censinet AI™, streamlines vendor risk assessments by automating tasks like security questionnaires, summarizing evidence, and capturing critical integration details. While automation speeds up the process, human oversight ensures that risk teams retain control through customizable rules and review processes. Key findings are routed to the appropriate stakeholders, such as members of the AI governance committee, ensuring that the right people address the right issues at the right time. Think of this as air traffic control for AI oversight - coordinating Governance, Risk, and Compliance (GRC) functions to maintain accountability without slowing down AI adoption.

Implementing Human-in-the-Loop Oversight

AI in healthcare should enhance clinical decision-making, not replace it. To ensure this balance, organizations should establish a multidisciplinary AI governance committee that includes experts from legal, compliance, IT, clinical, and risk management departments [18][1]. This committee plays a critical role in evaluating AI tools before deployment, addressing potential biases, and ensuring compliance with both federal and state regulations.

Clear policies are essential for documenting how AI tools are used, including capturing physicians’ reasoning when they override AI recommendations. Additionally, continuous monitoring systems should be in place to detect issues like performance degradation, model drift, or errors in real-world applications [25][18]. Before any AI tool is deployed clinically, rigorous validation and bias assessments must be conducted, particularly to test performance across diverse demographic groups. This helps prevent inequities and ensures reliable outcomes.
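The two practices above, documenting overrides and watching for degradation, can share one small record. The sketch below is a simplified illustration, not a production monitoring system: the class names, window size, and tolerance are assumptions, and agreement with the clinician's final read is used only as a rough proxy for real-world performance.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Read:
    """One case: the AI output, the clinician's final call, and any override note."""
    ai_result: str
    clinician_result: str
    override_reason: Optional[str] = None

    @property
    def agreed(self) -> bool:
        return self.ai_result == self.clinician_result


class AgreementMonitor:
    """Tracks AI/clinician agreement over a sliding window of recent cases."""

    def __init__(self, baseline_rate: float, window: int = 50, tolerance: float = 0.10):
        self.baseline_rate = baseline_rate
        self.tolerance = tolerance
        self.reads = deque(maxlen=window)  # oldest reads fall off automatically

    def record(self, read: Read) -> None:
        # Policy check: an override without documented reasoning is rejected.
        if not read.agreed and not read.override_reason:
            raise ValueError("an override must include the clinician's reasoning")
        self.reads.append(read)

    def agreement_rate(self) -> Optional[float]:
        if not self.reads:
            return None
        return sum(r.agreed for r in self.reads) / len(self.reads)

    def degraded(self) -> bool:
        # Wait for a full window so a handful of cases cannot trigger an alert.
        if len(self.reads) < self.reads.maxlen:
            return False
        return self.agreement_rate() < self.baseline_rate - self.tolerance


monitor = AgreementMonitor(baseline_rate=0.90, window=10, tolerance=0.10)
for _ in range(10):
    monitor.record(Read("abnormal", "abnormal"))
for _ in range(3):
    monitor.record(Read("abnormal", "normal", override_reason="motion artifact"))
print(monitor.agreement_rate(), monitor.degraded())
```

In practice a drop in agreement is a prompt for human review rather than proof of model drift; changes in case mix or in scanner hardware can produce the same signal.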

Building a Culture of Continuous Governance

AI systems are constantly evolving, which means governance must be an ongoing effort. Written policies should guide every stage of AI integration, from procurement to deployment and monitoring, while staying aligned with changing standards [18][1].

Recent enforcement actions under the False Claims Act highlight the risks of flawed AI tools, such as those that produce inaccurate billing codes or diagnostic results [18]. To mitigate these risks, healthcare organizations can use real-time dashboards and automated workflows to maintain transparency, track compliance metrics, and quickly identify emerging threats. By adopting a continuous governance model, organizations can ensure that risk management evolves alongside AI capabilities, avoiding bottlenecks that could stifle innovation.

Conclusion: Balancing Innovation and Responsibility

AI is reshaping diagnostics by delivering faster, more precise results that enhance patient care while cutting costs. Whether it's identifying cancers in radiology images or uncovering genetic markers in pathology, these technologies provide healthcare professionals with powerful tools to improve outcomes. However, these advancements also come with notable challenges.

Issues like cybersecurity risks, algorithmic bias, data privacy breaches, and regulatory hurdles are far from theoretical. For instance, in 2024, the US Department of Justice issued subpoenas to several pharmaceutical and digital health companies to investigate potential AI-related violations, highlighting the increasing focus on government oversight [18]. Adopting AI in healthcare isn’t just a technical endeavor - it demands collaboration across disciplines to design, test, and monitor these systems effectively [26].

Navigating these challenges depends on robust, proactive governance. Organizations must establish comprehensive compliance programs, ensure human oversight at critical decision points, and implement adaptive monitoring systems that grow with AI advancements. This includes forming multidisciplinary governance teams, rigorously validating AI systems across diverse patient groups, and prioritizing data accuracy to minimize risks like misdiagnoses or legal repercussions [18][26].

The path forward combines innovation with responsibility. By adopting centralized risk management tools, integrating automated workflows with human oversight, and using real-time compliance dashboards, healthcare providers can embrace AI while ensuring patient safety. Diagnostic AI should enhance clinical expertise, maintaining transparency and accountability every step of the way.

FAQs

How can healthcare organizations reduce cybersecurity risks when using AI in medical diagnostics?

Healthcare organizations can tackle cybersecurity risks in AI-powered diagnostics by focusing on data security and privacy safeguards. Key measures include encrypting sensitive patient data, enforcing strict access controls, and actively monitoring systems to detect vulnerabilities.

Beyond that, it's essential to routinely evaluate the performance of AI tools, keep detailed records of their usage, and ensure decision-making processes remain open and transparent. Building strong governance structures and offering continuous training to staff on AI limitations and cybersecurity protocols can strengthen both safety and confidence in these advanced technologies.

How does bias in AI algorithms affect medical diagnostics, and what steps can reduce its impact?

Bias in AI algorithms can have a serious impact on diagnostic accuracy, particularly for underrepresented groups, which could deepen existing healthcare disparities. This often stems from training data that fails to adequately represent a wide range of populations or medical conditions.

Healthcare organizations can take key steps to tackle this challenge. One approach is to ensure that training data includes a diverse mix of demographics and conditions. Another step involves using sophisticated methods to identify and correct biases during the development phase. Finally, ongoing audits and monitoring of AI systems can help catch and address new biases as they arise. By focusing on fairness and accountability, AI can provide more accurate and equitable diagnostic results for everyone.

What regulatory hurdles do AI-driven diagnostic tools face in healthcare?

AI-powered diagnostic tools face a range of hurdles within healthcare regulations. One major concern is ensuring safety and reliability, particularly as these tools often use adaptive algorithms that can change and evolve over time. Another pressing issue is tackling bias in these systems to ensure fair and equitable healthcare outcomes for all patients. On top of that, safeguarding data privacy and security is crucial, considering the sensitive nature of patient information.

There's also the need for clearly defined approval processes for these tools, along with ensuring their transparency and ease of understanding. This is vital for earning the trust of both healthcare professionals and patients. Navigating these challenges demands vigilant oversight to strike the right balance between advancing technology and adhering to ethical and legal standards.

Related Blog Posts

- The New Risk Frontier: Navigating AI Uncertainty in an Automated World

- AI Cyber Risk: When Your Smart Defense Becomes the Attack Vector

- AI in the ICU: Balancing Life-Saving Technology with Patient Safety

- The Healthcare AI Paradox: Better Outcomes, New Risks

Key Points:

How is AI transforming medical diagnostics across clinical specialties?

- Radiology: Detects subtle abnormalities with greater accuracy and automates segmentation and measurements.

- Pathology & Genomics: Analyzes high‑resolution slides, identifies biomarkers, and supports personalized therapy.

- Ophthalmology: Screens for diabetic retinopathy, glaucoma, and AMD using retinal imaging.

- Predictive Analytics: Identifies early disease signals and improves preventative care.

What are the top benefits of diagnostic AI for healthcare providers?

- Greater diagnostic precision through pattern detection beyond human vision.

- Faster turnaround times with real-time image assessment and automation.

- Earlier disease detection driving improved patient outcomes.

- Cost savings through reduced errors, fewer readmissions, and optimized workflows.

What cybersecurity risks does diagnostic AI introduce?

- PHI exposure from cloud systems, device integrations, or vendor systems.

- Adversarial attacks that subtly alter inputs and cause misdiagnoses.

- Ransomware and malware targeting imaging systems and data pipelines.

- Third‑party vulnerabilities where outdated vendor systems compromise hospitals.

- Model inversion & data poisoning that expose or corrupt training data.

What ethical and bias challenges affect AI-driven diagnostics?

- Underrepresentation in training data causes unequal accuracy for different populations.

- Black box algorithms reduce transparency and hinder clinician trust.

- Inconsistent performance across demographic groups leads to care inequity.

- Lack of explainability complicates patient consent and clinical validation.

How do regulatory frameworks address AI in diagnostics?

- FDA oversight for AI/ML medical devices, including PCCPs for adaptive models.

- HIPAA rules for PHI use, data minimization, and secure cloud storage.

- Transparency requirements for disclosing AI’s role in patient care.

- Legal risks including False Claims Act penalties tied to AI-driven errors.

How does Censinet RiskOps™ support safe diagnostic AI deployment?

- Automates third‑party AI assessments and summarizes evidence.

- Provides real-time dashboards tracking risks, policies, and tasks.

- Routes findings to appropriate reviewers for human-in-the-loop oversight.

- Aggregates risk data from across the enterprise and vendor ecosystem.

- Supports HIPAA, NIST AI RMF, and internal governance models with centralized compliance workflows.