Digital Doctors: The Promise and Peril of AI in Clinical Decision-Making

Post Summary

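How does AI improve clinical decision-making in healthcare?

By analyzing large datasets, enhancing imaging accuracy, predicting risks, and supporting treatment planning.

How does AI support, rather than replace, clinicians?

It drafts communications, summarizes histories, and helps identify effective treatments while keeping humans in the loop.

What ethical and clinical risks arise when AI is used in healthcare?

Bias, unequal care, opaque black-box models, misdiagnosis, and reduced clinician oversight.

What cybersecurity and privacy challenges does AI introduce?

Vulnerable devices, data manipulation, model theft, insecure interoperability, and purpose creep in data use.

How do governance frameworks ensure safer AI deployment?

Tools like the NIST AI RMF guide organizations in governing, mapping, measuring, and managing AI risks with transparency and accountability.

How does Censinet RiskOps™ help healthcare organizations manage AI safely?

It centralizes AI oversight, automates risk assessment, aggregates real-time data, and maintains human-in-the-loop governance.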

Artificial intelligence (AI) is reshaping healthcare. It helps doctors diagnose diseases, plan treatments, and predict patient outcomes by analyzing large datasets like medical records and imaging scans. While AI offers faster and more accurate insights, it also raises concerns about bias, transparency, and cybersecurity risks.


To use AI effectively, healthcare leaders must focus on ethical practices, robust governance, and ongoing monitoring to keep patient safety and trust at the forefront.

How AI Improves Clinical Decision-Making

What AI Can Do in Clinical Settings

AI is transforming healthcare by analyzing vast amounts of patient data - from medical records to genomics - to uncover patterns that might go unnoticed with manual methods [6]. In clinical decision support, it aids physicians in making more informed choices about patient care, often surpassing traditional tools like the Modified Early Warning Score (MEWS) in predicting clinical deterioration [6].
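To make that comparison concrete, the sketch below shows how an additive track-and-trigger score in the style of MEWS works: each vital sign falls into a band, each band carries points, and the points are summed. The band boundaries are illustrative placeholders, not validated clinical thresholds, and the names `Vitals`, `band_points`, and `early_warning_score` are hypothetical. AI-based predictors, by contrast, learn their weighting from data and can draw on many more inputs than a handful of fixed bands.

```python
from dataclasses import dataclass

# Each band is (lower bound inclusive, upper bound exclusive, points).
# Illustrative placeholders in the style of MEWS, NOT validated clinical thresholds.
HEART_RATE_BANDS = [(0, 41, 2), (41, 51, 1), (51, 101, 0), (101, 111, 1), (111, 130, 2), (130, 400, 3)]
RESP_RATE_BANDS = [(0, 9, 2), (9, 15, 0), (15, 21, 1), (21, 30, 2), (30, 100, 3)]
SYSTOLIC_BP_BANDS = [(0, 71, 3), (71, 81, 2), (81, 101, 1), (101, 200, 0), (200, 400, 2)]
AVPU_POINTS = {"alert": 0, "voice": 1, "pain": 2, "unresponsive": 3}


@dataclass
class Vitals:
    heart_rate: int    # beats per minute
    resp_rate: int     # breaths per minute
    systolic_bp: int   # mmHg
    avpu: str          # level of consciousness: alert / voice / pain / unresponsive


def band_points(value: int, bands: list) -> int:
    """Return the points for the band that contains value."""
    for low, high, points in bands:
        if low <= value < high:
            return points
    raise ValueError(f"value {value} falls outside all bands")


def early_warning_score(v: Vitals) -> int:
    """Sum the per-parameter points, as additive track-and-trigger scores do."""
    return (
        band_points(v.heart_rate, HEART_RATE_BANDS)
        + band_points(v.resp_rate, RESP_RATE_BANDS)
        + band_points(v.systolic_bp, SYSTOLIC_BP_BANDS)
        + AVPU_POINTS[v.avpu]
    )


deteriorating = Vitals(heart_rate=115, resp_rate=24, systolic_bp=95, avpu="voice")
print(early_warning_score(deteriorating))  # 2 + 2 + 1 + 1 = 6
```

Because every parameter contributes independently through fixed cutoffs, a score like this cannot capture interactions or trends between vital signs, which is one reason learned models can outperform it.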

In diagnostic imaging, AI helps clinicians interpret scans more accurately. It also supports risk prediction, flagging potential complications before they escalate. Beyond that, AI contributes to treatment planning by analyzing post-treatment data to identify which therapies work best for specific patient profiles, which can reduce complications and help lower costs [8]. Together, these capabilities make AI a powerful assistant in clinical settings.

AI as a Support Tool for Clinicians

AI isn’t here to replace doctors - it’s here to enhance their expertise. Dr. Samir Kendale, Medical Director of Anesthesia Informatics at Beth Israel Lahey Health and Assistant Professor of Anesthesia at Harvard Medical School, highlights how AI integrates into daily practice:

"AI aids in drafting patient communications, summarizing histories, and suggesting tailored medications."

This human-in-the-loop approach ensures that physicians remain at the core of decision-making, while AI handles data-heavy tasks. For instance, when evaluating treatment options, AI can sift through extensive datasets to identify similar cases, helping clinicians diagnose rare conditions more quickly and pinpoint effective treatments for comparable patients [2]. Dr. Maha Farhat, Associate Physician of Pulmonary and Critical Care at Massachusetts General Hospital, elaborates:

"When clinicians are looking at similar cases to predict which patients will best respond to treatments, AI can help them better personalize their treatment regimens."

Proven Benefits of AI in Healthcare

AI’s ability to deliver precise imaging analysis and rapid diagnostic results can improve patient outcomes [7][9].

Risks of AI in Clinical Settings

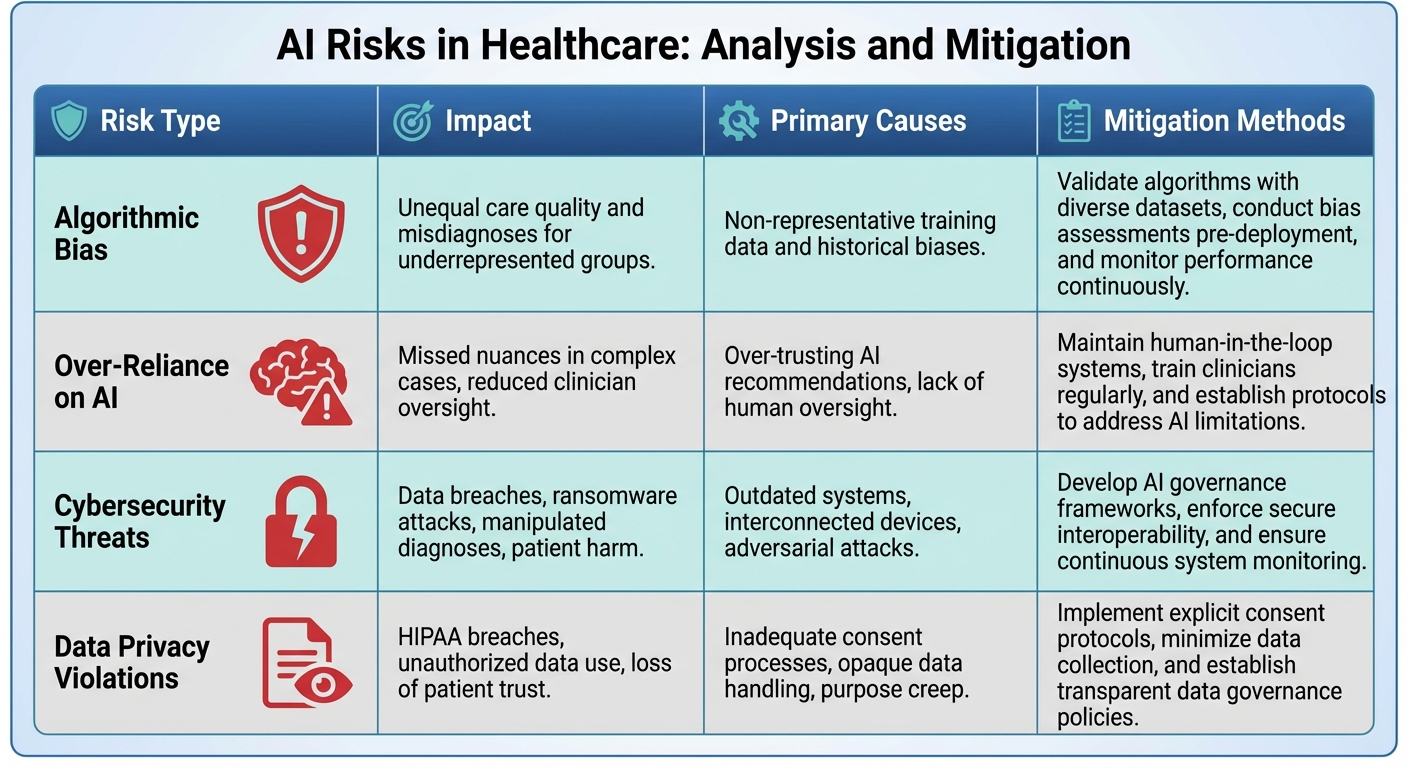

: Risk Types, Impacts, and Mitigation Strategies

Ethical and Clinical Concerns

AI systems in healthcare can unintentionally reinforce existing health disparities, particularly when trained on datasets that fail to reflect diverse patient populations. This algorithmic bias can lead to unequal care, misdiagnoses, and skewed treatment recommendations, especially for marginalized groups [3][5][10][4]. For example, an AI tool trained predominantly on data from a narrow demographic might overlook or misdiagnose conditions in underrepresented populations.
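A first, simple check for this failure mode is to compare each group's share of the training data with its share of the population the tool will serve. The sketch below flags groups whose training share falls below a chosen fraction of their population share; the group labels, counts, and the 0.5 tolerance are hypothetical choices for illustration, not a standard threshold. Adequate representation is necessary but not sufficient, so subgroup performance still has to be measured after training.

```python
def representation_gaps(training_counts: dict, population_shares: dict, tolerance: float = 0.5) -> dict:
    """Return {group: training_share} for groups underrepresented in the training data.

    A group is flagged when its share of training examples is below
    tolerance * its share of the target patient population.
    """
    total = sum(training_counts.values())
    flagged = {}
    for group, population_share in population_shares.items():
        training_share = training_counts.get(group, 0) / total
        if training_share < tolerance * population_share:
            flagged[group] = round(training_share, 3)
    return flagged


# Hypothetical example: group_C is 15% of patients but only 5% of training records.
training = {"group_A": 800, "group_B": 150, "group_C": 50}
population = {"group_A": 0.60, "group_B": 0.25, "group_C": 0.15}
print(representation_gaps(training, population))  # {'group_C': 0.05}
```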

Another major issue is the "black box" nature of many AI systems. When the decision-making process is opaque, it becomes harder for patients and clinicians to understand or challenge AI-driven recommendations. This lack of transparency undermines informed consent and can erode trust in these technologies. Additionally, over-reliance on AI might cause clinicians to miss critical nuances in unusual or complex cases, reducing their ability to provide effective oversight.

While these ethical dilemmas raise concerns about equity and transparency, they are compounded by the growing threat of cybersecurity vulnerabilities in AI-driven healthcare systems.

Cybersecurity and Data Privacy Threats

Integrating AI into healthcare opens the door to new cybersecurity risks. Connected medical devices, from pacemakers to imaging systems, create potential entry points for cyberattacks. Malicious actors could exploit these vulnerabilities to manipulate diagnostic data - such as altering MRI results - or even steal proprietary AI models and sensitive patient information [11][12].

Another pressing issue is the collection and use of vast amounts of patient data by AI systems, often without clear or explicit consent. This increases the risk of "purpose creep", where data is used for unintended purposes [13][14]. Under U.S. laws like HIPAA, healthcare organizations are required to safeguard patient health information, but outdated software, hardware vulnerabilities, and lack of secure interoperability between hospital systems can expose critical weaknesses. A single breach in such a system could compromise the safety and privacy of countless patients [11].
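Purpose creep can be countered in software by binding every data release to a declared purpose and to the patient's recorded consent, and by returning only the fields that purpose needs (data minimization). The sketch below is a hypothetical illustration: the `PatientRecord` type, the `PURPOSE_FIELDS` mapping, and the purpose names are assumptions, not requirements taken from HIPAA or any specific product.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from a use purpose to the minimum fields it requires.
PURPOSE_FIELDS = {
    "treatment": {"diagnoses", "medications", "allergies"},
    "model_training": {"diagnoses", "age_band"},
}


class PurposeNotConsented(Exception):
    """Raised when data is requested for a purpose the patient has not agreed to."""


@dataclass
class PatientRecord:
    patient_id: str
    fields: dict
    consented_purposes: set = field(default_factory=set)


def release_for_purpose(record: PatientRecord, purpose: str) -> dict:
    """Return only the fields needed for purpose, and only if consent covers it."""
    if purpose not in record.consented_purposes:
        raise PurposeNotConsented(f"{record.patient_id}: no consent for '{purpose}'")
    allowed = PURPOSE_FIELDS[purpose]
    return {name: value for name, value in record.fields.items() if name in allowed}


record = PatientRecord(
    patient_id="p-001",
    fields={"name": "Jane Doe", "diagnoses": ["asthma"], "medications": ["albuterol"]},
    consented_purposes={"treatment"},
)
print(release_for_purpose(record, "treatment"))
# {'diagnoses': ['asthma'], 'medications': ['albuterol']}
```

Requesting the same record for `"model_training"` raises `PurposeNotConsented`, so reuse for a new purpose fails loudly instead of happening silently.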

Risk Categories and Mitigation Approaches

| Risk | Impact | Root causes | Mitigation |
|---|---|---|---|
| Algorithmic bias | Unequal care quality and misdiagnoses for underrepresented groups | Non-representative training data and historical biases | Validate algorithms with diverse datasets, conduct bias assessments before deployment, and monitor performance continuously |
| Over-reliance on AI | Missed nuances in complex cases, reduced clinician oversight | Over-trusting AI recommendations, lack of human oversight | Maintain human-in-the-loop systems, train clinicians regularly, and establish protocols to address AI limitations |
| Cybersecurity threats | Manipulated diagnoses, patient harm | Outdated systems, interconnected devices, adversarial attacks | Develop AI governance frameworks, enforce secure interoperability, and ensure continuous system monitoring |
| Data privacy | Unauthorized data use, loss of patient trust | Inadequate consent processes, opaque data handling, purpose creep | Implement explicit consent protocols, minimize data collection, and establish transparent data governance policies |

These risks highlight the urgent need for well-structured governance and risk management strategies, which will be explored in the following section.

Managing AI Risks in Healthcare

Core Principles for AI Governance

Managing AI in healthcare effectively begins with a solid foundation of ethical principles. These include beneficence (ensuring AI is used to improve patient outcomes), nonmaleficence (avoiding harm), patient autonomy (respecting informed consent and individual choices), and justice and equity (promoting fair access and outcomes for all patients) [3][18][5][19][20].

In addition to these ethical principles, healthcare organizations must emphasize transparency in AI decision-making, accountability for outcomes, and robust privacy and security measures to protect patient data. Human oversight should remain a cornerstone, with AI acting as a tool to assist, not replace, clinical judgment [3][19][10].

To operationalize these principles, organizations should establish structured frameworks that define clear responsibilities across IT teams, compliance officers, clinicians, and patients. Regular communication and ongoing assessments of AI's impact help ensure these principles are upheld in practice. Together, they provide the foundation for the formal risk management frameworks described next.

Risk Management Frameworks for AI

The NIST AI Risk Management Framework (AI RMF), introduced on January 26, 2023, offers a structured approach for addressing AI risks in healthcare [21][23]. This voluntary framework is built around four key functions - Govern, Map, Measure, and Manage - and prioritizes accountability, transparency, and ethical practices [23]. On July 26, 2024, NIST released an additional profile tailored to generative AI (NIST-AI-600-1), which helps organizations tackle the unique challenges these advanced systems present [21].

To enhance AI risk management, healthcare organizations can integrate this framework with existing CRM tools and HIPAA compliance assessments. This proactive approach shifts the focus from reacting to risks to anticipating and mitigating them [11]. The framework also emphasizes the importance of assigning clear roles for AI oversight, employing diverse risk assessment methods, and crafting detailed mitigation strategies [23]. By adopting these practices, organizations can ensure AI systems remain trustworthy and effective, with patient safety as the top priority.
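As a rough illustration of assigning clear roles and prioritizing mitigation, the sketch below keeps a small risk register in which every entry is tagged with the AI RMF function it falls under and an accountable owner. The four function names come from the framework; everything else (the `RiskEntry` fields, the hypothetical system names, the 1-to-5 scales, and scoring risk as likelihood times impact) is an assumption borrowed from common risk-matrix practice, not something the AI RMF prescribes.

```python
from dataclasses import dataclass
from enum import Enum


class RmfFunction(Enum):
    """The four core functions of the NIST AI RMF."""
    GOVERN = "Govern"
    MAP = "Map"
    MEASURE = "Measure"
    MANAGE = "Manage"


@dataclass
class RiskEntry:
    system: str
    description: str
    function: RmfFunction
    owner: str        # accountable role; empty string means unassigned
    likelihood: int   # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int       # assumed scale: 1 (minor) to 5 (severe)

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize(register: list) -> list:
    """Highest-scoring risks first."""
    return sorted(register, key=lambda entry: entry.score, reverse=True)


def unowned(register: list) -> list:
    """Entries with no accountable owner, which is a governance gap in itself."""
    return [entry for entry in register if not entry.owner.strip()]


# Hypothetical AI systems and risks.
register = [
    RiskEntry("sepsis-predictor", "Lower sensitivity in a subgroup", RmfFunction.MEASURE,
              "Clinical AI lead", 3, 4),
    RiskEntry("imaging-triage", "Vendor model updated without notice", RmfFunction.GOVERN,
              "", 4, 5),
    RiskEntry("note-summarizer", "PHI sent to an external API", RmfFunction.MANAGE,
              "Privacy officer", 2, 2),
]
print([entry.system for entry in prioritize(register)])
# ['imaging-triage', 'sepsis-predictor', 'note-summarizer']
```

Surfacing `unowned(register)` alongside the priority list makes missing accountability as visible as a high score.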

"I think it'd be much better if the standard was: does it improve patient outcomes?" - Jeremy Kahn, AI editor at Fortune

Kahn's observation highlights the importance of keeping patient well-being and clinical effectiveness at the heart of AI risk management, rather than merely checking boxes for technical compliance.

Using Censinet RiskOps™ for AI Risk Management

Censinet RiskOps™ builds on these frameworks by providing a centralized platform for managing AI risks. It streamlines governance processes by routing risk findings to key stakeholders, such as members of the AI governance committee, for thorough review and approval.

With its Censinet AI™ features, the platform simplifies risk assessments by automatically summarizing vendor data, integration specifics, and external exposures. It generates comprehensive risk reports, ensuring that human oversight remains integral to the process. This human-in-the-loop approach enables automation to enhance, not replace, decision-making, with customizable rules and workflows for added flexibility.
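Rule-based routing of the kind described above can be sketched in a few lines. This is a generic illustration and does not reflect Censinet RiskOps™'s actual API, rule syntax, or data model; the `Finding` fields, the reviewer names, and the first-match-wins policy are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    vendor: str
    category: str   # e.g. "privacy", "security", "clinical_safety"
    severity: str   # "low", "medium", or "high"


# Hypothetical, customizable rules: (predicate, reviewers). The first matching rule wins.
ROUTING_RULES = [
    (lambda f: f.severity == "high", ["AI governance committee", "CISO"]),
    (lambda f: f.category == "privacy", ["Privacy officer"]),
    (lambda f: f.category == "clinical_safety", ["Clinical informatics lead"]),
]
DEFAULT_REVIEWERS = ["Risk analyst"]


def route(finding: Finding) -> list:
    """Return the reviewers who must sign off on this finding."""
    for matches, reviewers in ROUTING_RULES:
        if matches(finding):
            return reviewers
    return DEFAULT_REVIEWERS


print(route(Finding("Vendor X", "privacy", "medium")))  # ['Privacy officer']
print(route(Finding("Vendor Y", "security", "high")))   # ['AI governance committee', 'CISO']
```

Placing the severity rule first means any high-severity finding escalates to the governance committee regardless of its category; reordering or editing the list changes the workflow without touching the routing logic.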

The platform also offers real-time data aggregation through an intuitive AI risk dashboard. This tool provides healthcare organizations with a clear, continuous view of AI-related policies, risks, and tasks. By centralizing oversight, Censinet RiskOps™ helps maintain compliance with regulations like HIPAA, addresses complex AI challenges, and ensures patient safety while supporting better clinical decision-making.


Implementing AI Safely in Clinical Practice

Introducing AI into clinical practice requires more than just advanced algorithms - it demands a thoughtful approach to design, oversight, and ongoing refinement to ensure safety and trust.

Building Trust Through Design

For AI systems to gain acceptance in healthcare, trust is non-negotiable. Clinicians need to understand how these tools arrive at their conclusions, especially with concerns about "black box" algorithms and fears that machines might replace human decision-making [25]. Positioning AI as a support tool rather than a replacement can help ease these worries.

Involving stakeholders (physicians, nurses, support staff, and patients) at every step of AI development is key. By engaging them in design, testing, and refinement, developers can ensure the technology fits into clinical workflows and addresses practical needs [24]. Hands-on experience with AI tools further builds trust, as clinicians see firsthand how these systems perform in real-world patient care. Beyond technical performance, adoption hinges on user acceptance, clear and interpretable outputs, and ethical interactions between humans and machines [24].

Transparency is another cornerstone of trust. Clinicians need access to information about the data sources, diversity of training datasets, and performance metrics to determine if a tool suits their specific patient population. Ultimately, AI should enhance - not override - clinical judgment [1]. This foundation of trust paves the way for integrating safety mechanisms directly into clinical workflows.

Adding Safety Controls to Clinical Workflows

Safety in AI implementation starts with rigorous validation. Algorithms should undergo extensive clinical trials involving diverse populations. Additionally, healthcare organizations should adopt a "clinician-in-the-loop" approach, requiring that human professionals verify AI recommendations before applying them to patient care [1][26][17]. This oversight acts as a safeguard, leveraging AI's analytical strengths while minimizing the risk of errors.
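One way to enforce the clinician-in-the-loop requirement in software is to make review a hard gate: an AI recommendation starts as pending and cannot reach the care plan until a named clinician approves it. The class and function names below are hypothetical, and a real system would also persist each review decision for audit.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Recommendation:
    patient_id: str
    suggestion: str
    model_confidence: float
    status: Status = Status.PENDING
    reviewer: Optional[str] = None
    note: str = ""

    def review(self, clinician: str, approve: bool, note: str = "") -> None:
        """Record a clinician's decision; each recommendation is reviewed once."""
        if self.status is not Status.PENDING:
            raise ValueError("recommendation has already been reviewed")
        self.status = Status.APPROVED if approve else Status.REJECTED
        self.reviewer = clinician
        self.note = note


def apply_to_care_plan(rec: Recommendation) -> str:
    """Refuse to act on any recommendation a clinician has not approved."""
    if rec.status is not Status.APPROVED:
        raise PermissionError("AI recommendation requires clinician approval")
    return f"{rec.suggestion} (approved by {rec.reviewer})"


rec = Recommendation("p-001", "Start empiric antibiotics", model_confidence=0.82)
rec.review("Dr. Lee", approve=True, note="Consistent with exam findings")
print(apply_to_care_plan(rec))  # Start empiric antibiotics (approved by Dr. Lee)
```

Recording rejections and their notes, not just approvals, gives the monitoring process a record of where clinicians and the model disagree.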

Once implemented, monitoring becomes critical. Tracking near-misses, adverse events, and other safety concerns allows teams to identify and address issues early on. Regular evaluations should focus on detecting algorithmic bias to ensure the system maintains high standards of care across all demographic groups [17]. These safeguards naturally feed into ongoing improvement processes, creating a cycle of refinement and reliability.
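Bias evaluations of this kind often start by computing the same performance metric separately for each demographic group and flagging gaps. The sketch below uses sensitivity (the share of true cases the model catches); the `(group, y_true, y_pred)` record layout, the sample data, and the 0.05 gap threshold are illustrative assumptions, and in practice the metric and threshold should be chosen with clinical input.

```python
from collections import defaultdict


def sensitivity_by_group(records) -> dict:
    """Sensitivity (true positives / actual positives) per group.

    records: iterable of (group, y_true, y_pred), where 1 means the condition
    is present (y_true) or predicted (y_pred). Groups with no positive cases
    are omitted, since sensitivity is undefined for them.
    """
    true_pos = defaultdict(int)
    positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_pos[group] += 1
    return {group: true_pos[group] / positives[group] for group in positives}


def flag_gaps(sensitivity: dict, max_gap: float = 0.05) -> list:
    """Groups whose sensitivity trails the best-performing group by more than max_gap."""
    best = max(sensitivity.values())
    return sorted(g for g, s in sensitivity.items() if best - s > max_gap)


# Hypothetical evaluation results: the model misses half of group B's true cases.
results = (
    [("A", 1, 1)] * 4 + [("A", 0, 0)] * 4
    + [("B", 1, 1)] * 2 + [("B", 1, 0)] * 2 + [("B", 0, 0)] * 4
)
sens = sensitivity_by_group(results)
print(sens)             # {'A': 1.0, 'B': 0.5}
print(flag_gaps(sens))  # ['B']
```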

Ongoing Monitoring and Improvement

AI systems must evolve alongside clinical needs, and continuous monitoring is essential to this process. Feedback loops - incorporating clinician insights and real-time performance data - help detect issues like model drift. Clear incident response plans ensure swift action, including disabling the system if necessary.
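Model drift is often detected by comparing the distribution of recent model inputs or scores against a baseline captured at validation time. The sketch below uses the Population Stability Index (PSI), one widely used drift statistic, computed as the sum over bins of (actual share minus expected share) times the natural log of their ratio. The 0.1 and 0.25 cutoffs are common rules of thumb rather than clinical standards, and mapping them to "investigate" or "disable-and-review" is a hypothetical stand-in for an incident response plan.

```python
import math


def psi(expected_counts: list, actual_counts: list, eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions (same bins)."""
    expected_total = sum(expected_counts)
    actual_total = sum(actual_counts)
    total = 0.0
    for e, a in zip(expected_counts, actual_counts):
        # eps guards against log(0) when a bin is empty
        e_share = max(e / expected_total, eps)
        a_share = max(a / actual_total, eps)
        total += (a_share - e_share) * math.log(a_share / e_share)
    return total


def drift_action(value: float, warn: float = 0.1, act: float = 0.25) -> str:
    """Translate a PSI value into a hypothetical response level."""
    if value >= act:
        return "disable-and-review"
    if value >= warn:
        return "investigate"
    return "ok"


# Hypothetical binned risk scores (low / medium / high) at validation vs. last month.
baseline = [50, 30, 20]
recent = [20, 30, 50]
value = psi(baseline, recent)
print(round(value, 2))      # 0.55
print(drift_action(value))  # disable-and-review
```

PSI only says that the data has shifted, not whether accuracy has dropped, so a drift alert should trigger a performance review against fresh labeled outcomes rather than an automatic conclusion.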

Regular audits play a vital role in keeping AI tools aligned with clinical standards and regulatory requirements. These audits should also assess performance across diverse patient populations to prevent disparities in care. AI oversight committees can further strengthen this process by reviewing performance data, identifying emerging risks, and authorizing updates to address changing clinical demands. Together, these practices ensure that AI remains a safe and effective tool in dynamic healthcare environments.

Conclusion: Managing AI in Healthcare

AI has the potential to reshape clinical decision-making in profound ways. However, realizing this potential hinges on maintaining a balanced approach to oversight. By grounding AI implementation in established ethical principles and robust risk management practices, healthcare leaders can ensure that patient safety remains the top priority. The challenge isn't about choosing between AI's advantages and its risks - it's about managing both effectively through careful governance and ongoing supervision.

Key Takeaways for Healthcare Leaders

For AI to succeed in healthcare, leaders must focus on three critical areas: patient safety, clinician trust, and transparent oversight. These priorities should guide every phase of AI deployment. Comprehensive governance frameworks are essential to address ethical issues, algorithmic bias, data privacy, and cybersecurity threats - preventing these challenges from compromising patient care [27][17].

Building trust in AI depends on adhering to core principles like fairness, universality, traceability, usability, robustness, and explainability throughout the AI lifecycle [28]. These principles are the foundation for fostering trust and ensuring effective AI adoption in healthcare.

How Censinet RiskOps™ Supports Safe AI Adoption

Censinet RiskOps™ offers a practical solution for healthcare organizations aiming to safely integrate AI. Acting as a centralized platform, it enables organizations to assess, monitor, and mitigate AI-related risks across their entire ecosystem. By streamlining governance, centralizing risk data, and maintaining human oversight, Censinet RiskOps™ ensures that the appropriate teams address the right issues at the right time. This unified approach simplifies AI oversight, helping healthcare organizations confidently navigate the complexities of AI adoption.

FAQs

How can AI reduce bias in healthcare data?

AI has the potential to make healthcare data more inclusive, but only when bias is addressed deliberately. Building training datasets from a broad range of demographics helps ensure that no group is underrepresented, creating a foundation for fairer and more balanced outcomes in model development.

On top of that, algorithms designed specifically to identify and reduce bias can be applied during both data processing and decision-making. Combined with transparent practices and collaborative efforts, these approaches improve fairness, build trust in the systems, and help ensure that AI-driven healthcare solutions serve everyone equitably.

How can patient data be kept secure when using AI in healthcare?

Ensuring the security of patient data in AI-powered healthcare systems requires a multi-layered approach. First, implementing advanced encryption protocols is a must to safeguard sensitive information during storage and transmission. Alongside this, enforcing strict access controls ensures that only authorized personnel can view or handle patient data. Regularly conducting comprehensive security audits is another key step to identify and address vulnerabilities.

Adhering to HIPAA regulations is non-negotiable for maintaining compliance and protecting patient privacy. Healthcare organizations should also establish clear policies for handling sensitive information and monitor systems continuously to detect and respond to potential threats. Together, these measures build a robust framework for securing patient data and fostering trust in AI-driven healthcare solutions.
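Access controls and monitoring are often implemented together as role-based permissions plus a log of every access decision. The sketch below is a minimal, hypothetical illustration: the roles, permission names, and in-memory log are assumptions, and it does not cover encryption, which in practice should come from a vetted cryptographic library and transport security such as TLS rather than custom code.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; real deployments derive this from policy.
ROLE_PERMISSIONS = {
    "attending_physician": {"read_phi", "write_orders"},
    "nurse": {"read_phi"},
    "data_scientist": {"read_deidentified"},
}

audit_log = []  # in production this would be append-only, tamper-evident storage


def check_access(user: str, role: str, permission: str) -> bool:
    """Allow or deny a request and record the decision either way."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "permission": permission,
        "allowed": allowed,
    })
    return allowed


print(check_access("jsmith", "nurse", "read_phi"))         # True
print(check_access("akim", "data_scientist", "read_phi"))  # False
print(len(audit_log))                                       # 2
```

Logging denials as well as grants is what lets security teams spot repeated attempts to reach data outside a role's scope.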

How does AI ensure transparency in clinical decision-making?

AI can make clinical decision-making more transparent when systems are built for explainability and interpretability. These features let clinicians and patients understand the reasoning behind specific recommendations or diagnoses, which builds trust and allows healthcare providers to review and validate AI-generated outputs.

Well-governed AI systems also include accountability mechanisms that promote responsible use. These mechanisms help healthcare organizations track AI performance, spot potential biases, and uphold ethical standards within clinical settings.
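For simple models, explainability can be as direct as showing how much each input pushed a prediction up or down. The sketch below does this for a logistic regression risk score, where each feature's contribution to the log-odds is its weight times its value. The weights, intercept, and feature names are hypothetical, not drawn from any validated model; for complex models such as deep networks, attribution methods like SHAP estimate comparable per-feature contributions.

```python
import math

# Hypothetical logistic risk model: weights and intercept are illustrative only.
WEIGHTS = {"age_over_65": 0.8, "lactate_mmol_l": 0.5, "on_vasopressors": 1.2}
INTERCEPT = -3.0


def predict_with_explanation(features: dict):
    """Return (probability, contributions ranked by absolute size).

    Each contribution is weight * value, i.e. that feature's share of the log-odds.
    """
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    logit = INTERCEPT + sum(contributions.values())
    probability = 1 / (1 + math.exp(-logit))
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked


prob, ranked = predict_with_explanation(
    {"age_over_65": 1, "lactate_mmol_l": 2.0, "on_vasopressors": 0}
)
print(round(prob, 3))  # 0.231
print(ranked)  # [('lactate_mmol_l', 1.0), ('age_over_65', 0.8), ('on_vasopressors', 0.0)]
```

Showing the ranked contributions next to the score lets a clinician see that, in this made-up case, lactate drove the estimate more than age did.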

Related Blog Posts

- The New Risk Frontier: Navigating AI Uncertainty in an Automated World

- The Diagnostic Revolution: How AI is Changing Medicine (And the Risks Involved)

- The Healthcare AI Paradox: Better Outcomes, New Risks

- The Future of Medical Practice: AI-Augmented Clinicians and Risk Management

{"@context":"https://schema.org","@type":"FAQPage","mainEntity":[{"@type":"Question","name":"How can AI reduce bias in healthcare data?","acceptedAnswer":{"@type":"Answer","text":"<p>AI has the potential to make healthcare data more inclusive by addressing bias in datasets. By gathering information from a broad range of demographics, it ensures that no group is underrepresented, creating a foundation for fairer and more balanced outcomes in model development.</p> <p>On top of that, AI can leverage algorithms designed specifically to identify and reduce bias during both data processing and decision-making stages. When combined with transparent practices and collaborative efforts, these approaches not only improve fairness but also build trust in the systems, ensuring that <a href=\"https://censinet.com/resource/a-beginners-guide-to-managing-third-party-ai-risk-in-healthcare\">AI-driven healthcare solutions</a> serve everyone equitably.</p>"}},{"@type":"Question","name":"How can patient data be kept secure when using AI in healthcare?","acceptedAnswer":{"@type":"Answer","text":"<p>Ensuring the security of patient data in AI-powered healthcare systems requires a multi-layered approach. First, implementing <strong>advanced encryption protocols</strong> is a must to safeguard sensitive information during storage and transmission. Alongside this, enforcing <strong>strict access controls</strong> ensures that only authorized personnel can view or handle patient data. Regularly conducting <strong>comprehensive security audits</strong> is another key step to identify and address vulnerabilities.</p> <p>Adhering to <strong>HIPAA regulations</strong> is non-negotiable for maintaining compliance and protecting patient privacy. Healthcare organizations should also establish clear policies for handling sensitive information, restrict access exclusively to authorized staff, and keep a vigilant eye on systems to detect and respond to potential threats. 
Together, these measures build a robust framework for securing patient data and fostering trust in AI-driven healthcare solutions.</p>"}},{"@type":"Question","name":"How does AI ensure transparency in clinical decision-making?","acceptedAnswer":{"@type":"Answer","text":"<p>AI plays a crucial role in making clinical decision-making more transparent by providing <strong>explainability</strong> and <strong>interpretability</strong>. These features allow clinicians and patients to understand the reasoning behind specific recommendations or diagnoses. This not only builds trust but also gives healthcare providers the ability to review and validate AI-generated outputs.</p> <p>On top of that, AI systems are equipped with <strong>accountability mechanisms</strong> to promote responsible use. These tools help healthcare organizations track AI performance, spot potential biases, and uphold ethical standards within clinical settings.</p>"}}]}

Key Points:

How does AI improve clinical decision-making in healthcare?

- Analyzes vast datasets such as medical records, genomics, and imaging to reveal patterns humans may miss.

- Often outperforms traditional tools like MEWS in predicting deterioration.

- Enhances diagnostic imaging accuracy and speeds interpretation.

- Supports personalized treatment planning through data‑driven insights.

How does AI support — rather than replace — clinicians?

- Drafts patient communications and summarizes histories to reduce administrative burden.

- Identifies similar clinical cases to aid diagnosis and treatment selection.

- Maintains a human‑in‑the‑loop model, ensuring clinicians remain the final decision-makers.

- Accelerates rare‑disease identification by comparing patient data across large datasets.

What ethical and clinical risks arise when AI is used in healthcare?

- Algorithmic bias from non‑representative training data can worsen disparities.

- Opaque “black‑box” models undermine trust and informed consent.

- Over-reliance on AI can reduce clinician oversight and nuance detection.

- Unequal care outcomes may emerge across demographic groups.

What cybersecurity and privacy challenges does AI introduce?

- Vulnerable connected devices (e.g., pacemakers, imaging systems) expand attack surfaces.

- Manipulation of diagnostic data threatens patient safety.

- Theft of proprietary AI models and PHI is an increasing risk.

- Purpose creep emerges when data is reused without explicit consent.

- Legacy software and poor interoperability create exploitable weaknesses.

How do governance frameworks ensure safer AI deployment?

- Ethical principles (beneficence, nonmaleficence, autonomy, justice) guide decision-making.

- NIST AI RMF provides Govern–Map–Measure–Manage structure for managing AI risk.

- Generative AI profiles (NIST‑AI‑600‑1) address emerging model-specific issues.

- Defined roles and accountability ensure transparency and proper oversight.

- Integrating the framework with HIPAA compliance assessments and existing CRM tools supports proactive risk mitigation.

How does Censinet RiskOps™ help healthcare organizations manage AI safely?

- Centralizes governance and routes AI risk findings to appropriate reviewers.

- Automates AI risk assessments, summarizing vendor and exposure data.

- Maintains human‑in‑the‑loop oversight for safer, more transparent decisions.

- Provides real‑time dashboards for ongoing monitoring of policies, risks, and tasks.

- Helps maintain HIPAA compliance and strengthens cybersecurity posture.