The Future Risk Manager: Human Expertise Enhanced by AI Capabilities

Post Summary

Healthcare cybersecurity is becoming more complex, with cyberattacks on the rise. In 2024, breaches exposed over 238 million healthcare records, impacting 70% of the U.S. population. The increasing use of Electronic Health Records (EHRs) and Internet of Medical Things (IoMT) devices has expanded vulnerabilities. AI is now playing a critical role in managing these risks by detecting threats in real time, automating risk assessments, and consolidating data for better decision-making.

Key Points:

- AI in Cybersecurity: AI tools predict vulnerabilities, detect risks faster, and automate compliance tasks.

- Human Judgment is Key: AI supports data analysis, but humans handle complex decisions, like risk acceptance or patient safety dilemmas.

- Skills for Risk Managers: Understanding AI basics, data quality, and compliance frameworks like NIST AI RMF is essential.

- AI Tools in Use: Platforms like IBM QRadar and Censinet RiskOps™ are transforming threat detection, vendor risk management, and governance processes.

AI and human expertise together are reshaping healthcare risk management, balancing efficiency with thoughtful decision-making to protect patient data and ensure compliance.

Skills Healthcare Risk Managers Need for AI Integration

Required Skills for Managing AI-Driven Solutions

Healthcare risk managers must build a solid foundation in technical knowledge to effectively navigate AI tools. A basic understanding of AI and machine learning fundamentals - such as key concepts, terminology, and how these technologies operate - is essential today [3]. While no one expects risk managers to become data scientists, they must know how to ask insightful questions and recognize potential red flags.

Another critical skill is data literacy. Risk managers need to grasp how data quality impacts AI outcomes, identify possible biases in datasets, and understand the dependencies AI systems have on the data they process. Familiarity with the specific risks tied to AI systems is also necessary to mitigate vulnerabilities and ensure system integrity.

Additionally, risk managers must evaluate the limitations of AI and determine when human intervention is necessary, particularly for decisions that impact patient safety or compliance with regulations.

Working with Healthcare Leadership Teams

Managing AI risks effectively requires leadership’s commitment to ethical practices, transparency, and accountability [4]. Risk managers must work hand-in-hand with key figures like CISOs, privacy officers, clinical leaders, and compliance teams to align AI initiatives with organizational goals and regulatory standards.

Many organizations are forming cross-functional AI governance committees to oversee AI activities. These committees often include data scientists, clinicians, ethics experts, IT professionals, cybersecurity specialists, and privacy leaders [3]. They ensure proper oversight throughout the AI lifecycle by reviewing decisions, addressing risks, and maintaining compliance. Risk managers play a vital role in these discussions, translating technical risks into terms leadership can understand and guiding them through the implications of AI-driven decisions.

Clear governance processes are essential. These processes should define roles and responsibilities at every stage, from AI development to deployment and ongoing monitoring [3]. Strong communication skills are crucial for risk managers to bridge the gap between technical experts and non-technical stakeholders, ensuring everyone understands their part in creating safe and compliant AI systems.

This collaborative approach sets the stage for integrating U.S. frameworks into a broader AI competency strategy.

U.S. Frameworks for AI Competency Development

Several frameworks in the U.S. provide structured guidance for healthcare organizations aiming to build AI competency. The NIST AI Risk Management Framework (AI RMF) offers voluntary guidelines to help organizations manage AI risks while emphasizing trustworthiness throughout the AI lifecycle - spanning design, development, usage, and evaluation [5][6]. Accompanying resources like the Playbook, Roadmap, Crosswalk, and the Trustworthy and Responsible AI Resource Center support implementation and promote global alignment [5].

Another resource, NIST AI 600-1, the Artificial Intelligence Risk Management Framework: Generative Artificial Intelligence Profile, specifically addresses the unique challenges posed by generative AI systems [5]. Together, these resources provide a structured approach for skill-building and consistent AI governance, equipping organizations to navigate the complexities of AI integration effectively.

AI Tools for Healthcare Cybersecurity Risk Management

AI-Powered Threat Detection and Predictive Analytics

AI-driven Security Information and Event Management (SIEM) platforms are reshaping how healthcare organizations detect and respond to cyber threats. Tools like IBM QRadar Advisor with Watson, Splunk User Behavior Analytics, and LogRhythm's NextGen SIEM Platform analyze massive volumes of logs and network events. These platforms excel at identifying security incidents, cutting down on false positives, and uncovering advanced or previously unknown threats [7].

But it's not just about spotting threats. Predictive analytics takes things a step further by using historical and real-time data to anticipate risk areas. This proactive approach helps prevent patient harm, compliance violations, and financial losses. AI-enabled platforms also centralize risk-related data, breaking down silos and offering real-time dashboards that enable quicker and more informed decision-making [1]. These tools are paving the way for more efficient enterprise risk assessments across the healthcare sector.

Automated Third-Party and Enterprise Risk Assessments

Beyond detection, automated risk assessments are transforming how healthcare organizations manage operational risks. Handling vendor relationships and enterprise risks manually can be time-consuming and error-prone. Tools like Censinet RiskOps™, powered by Censinet AI™, streamline these processes by automating third-party risk assessments. Vendors can quickly complete security questionnaires, while the platform summarizes documentation, identifies product integration details, flags fourth-party exposures, and generates detailed risk reports. Importantly, these tools include configurable rules and review processes, ensuring human oversight for complex decisions.

Automated tools also simplify compliance audits and documentation, reducing the likelihood of human error. By automating routine tasks, they free up staff to focus on higher-priority strategic initiatives [1].

AI-Assisted Governance and Policy Management

AI is also enhancing governance and policy management, which are critical components of a robust risk management framework. Platforms like Censinet AI streamline governance workflows by routing assessment findings and risk-related tasks to the right stakeholders. These tools consolidate data in centralized dashboards, making it easier to monitor AI-related policies, risks, and tasks in one place.

When choosing AI tools for governance, healthcare organizations should prioritize platforms that operate in HIPAA-compliant cloud environments, offer strong encryption, maintain detailed audit trails, and implement role-based access controls [1]. Automated compliance reporting supports adherence to regulations like HIPAA and alignment with voluntary frameworks like the NIST AI RMF, while features like transparent consent management, continuous monitoring, and bias testing support ethical and responsible AI use [2][3].

When to Use AI vs. Human Judgment

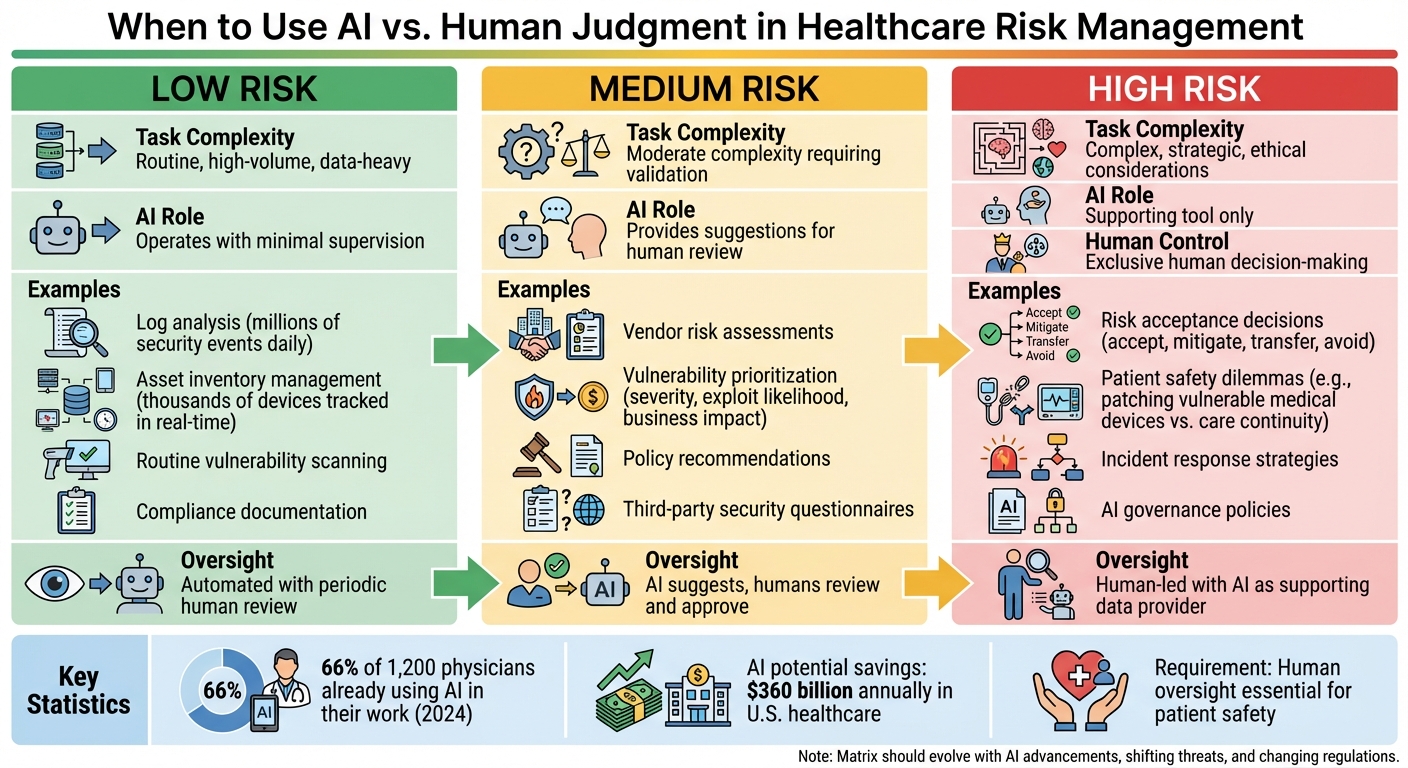

AI vs Human Judgment in Healthcare Risk Management Decision Matrix

Building on the strengths of AI and the irreplaceable value of human judgment, it's crucial to understand when to lean on each. The balance between the two plays a key role in strengthening healthcare cybersecurity.

Tasks Where AI Shines

AI thrives in handling repetitive, high-volume, and data-heavy tasks. Take log analysis, for example: AI-driven SIEM platforms can process millions of security events daily, pinpointing patterns and anomalies that would take human analysts weeks to uncover. Similarly, asset inventory management benefits from AI's capabilities, as it can automatically track and catalog thousands of healthcare devices, applications, and endpoints in real time.
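As a rough illustration of the pattern-and-anomaly idea described above, the sketch below flags hours whose event count sits far from the average. It is a deliberately simple z-score check, not how any particular SIEM product works; the function name `flag_anomalies`, the threshold, and the sample counts are all assumptions made up for this example.

```python
from statistics import mean, stdev


def flag_anomalies(counts, threshold=2.5):
    """Return the indices of counts whose z-score exceeds the threshold.

    A z-score measures how many standard deviations a value sits from
    the mean. The threshold is a hypothetical tuning knob.
    """
    mu = mean(counts)
    sigma = stdev(counts)
    if sigma == 0:
        # All values are identical, so nothing stands out.
        return []
    return [i for i, c in enumerate(counts) if abs(c - mu) / sigma > threshold]


# Hypothetical hourly failed-login counts; hour 9 contains a spike.
hourly_failed_logins = [12, 15, 11, 14, 13, 12, 16, 14, 13, 240, 12, 15]
anomalies = flag_anomalies(hourly_failed_logins)
print(anomalies)
```

A single large spike inflates the mean and standard deviation it is measured against, so production systems typically rely on more robust baselines and on learned behavioral models rather than a plain z-score.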

Another area where AI proves invaluable is vulnerability prioritization. Instead of manually sifting through hundreds of vulnerability scan results, AI algorithms can evaluate factors like threat severity, exploit likelihood, and potential business impact to rank risks. This lets security teams zero in on critical exposures. AI adoption is growing on the clinical side as well: a 2024 survey found that 66% of nearly 1,200 physicians were already using AI to assist in their work, highlighting the growing role of AI in healthcare operations [8].
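The vulnerability ranking described above can be sketched as a simple scoring function. This is a minimal example under stated assumptions: the `Finding` fields, the multiplicative formula, and the sample assets are hypothetical, and real tools weigh many more signals with learned rather than hand-set weights.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    asset: str
    severity: float            # 0-10 scale, for example a CVSS base score
    exploit_likelihood: float  # 0-1, hypothetical probability of exploitation
    business_impact: float     # 0-1, hypothetical weight for asset criticality


def risk_score(finding):
    """Combine the three factors into a single score (assumed formula)."""
    return finding.severity * finding.exploit_likelihood * finding.business_impact


def prioritize(findings):
    """Return findings ordered from highest to lowest risk score."""
    return sorted(findings, key=risk_score, reverse=True)


# Hypothetical scan results.
findings = [
    Finding("infusion-pump-gateway", 9.8, 0.10, 0.9),
    Finding("ehr-web-portal", 7.5, 0.60, 1.0),
    Finding("guest-wifi-printer", 9.0, 0.30, 0.1),
]
ranked = prioritize(findings)
for f in ranked:
    print(f"{f.asset}: {risk_score(f):.2f}")
```

Note that the finding with the highest raw severity does not come out on top: the portal ranks first because it is both likely to be exploited and critical to operations, which is the point of scoring on more than severity alone.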

Decisions That Require Human Oversight

While AI excels at routine data analysis, it falls short in areas requiring nuanced decision-making. This is where human judgment becomes indispensable. For instance, risk treatment decisions - whether to accept, mitigate, transfer, or avoid a risk - demand a deep understanding of business context, regulatory requirements, and the potential impact on patients, elements that AI cannot fully comprehend.

Patient safety is another area where human expertise is critical. Imagine a scenario where a vital medical device has a known vulnerability, but applying a patch would require downtime that could jeopardize patient care. Healthcare risk managers must weigh these competing priorities, factoring in ethical considerations, clinical expertise, and operational realities. While AI has the potential to save the U.S. healthcare system up to $360 billion annually by improving efficiency, achieving these savings requires human oversight to ensure patient safety remains the top priority [4].

Creating a Decision Matrix for AI and Human Roles

To effectively allocate tasks between AI and human oversight, organizations can use a decision matrix. This structured approach categorizes tasks by complexity, risk level, and the expertise required. For low-risk, high-volume activities like routine vulnerability scanning or compliance documentation, AI can operate with minimal supervision. Medium-risk tasks, such as vendor risk assessments or policy recommendations, should involve AI providing suggestions that humans review and approve.

High-risk decisions, however, must remain under human control. These include incident response strategies, risk acceptance for critical systems, and policies governing AI itself. In these cases, AI serves as a supporting tool rather than the final decision-maker. The matrix should remain flexible, evolving alongside advancements in AI, shifting threats, and changing regulations. Clear escalation paths should also be defined, ensuring that stakeholders review AI-generated recommendations before implementation and that accountability for final decisions lies firmly with humans [6][9].
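One way to picture such a matrix is as a small lookup table. The sketch below encodes the three tiers and the example tasks named above; the identifiers (`Oversight`, `TASK_RISK`, `oversight_for`) are illustrative, and the rule that unclassified tasks escalate to a human is an added assumption rather than something prescribed by a framework.

```python
from enum import Enum


class Oversight(Enum):
    AI_AUTONOMOUS = "AI operates with minimal supervision"
    HUMAN_REVIEW = "AI suggests, a human reviews and approves"
    HUMAN_DECIDES = "a human decides, AI only supports"


# Risk tier -> oversight mode, following the three tiers in the text.
DECISION_MATRIX = {
    "low": Oversight.AI_AUTONOMOUS,
    "medium": Oversight.HUMAN_REVIEW,
    "high": Oversight.HUMAN_DECIDES,
}

# Example task classification; each organization would maintain its own.
TASK_RISK = {
    "routine vulnerability scanning": "low",
    "compliance documentation": "low",
    "vendor risk assessment": "medium",
    "policy recommendation": "medium",
    "incident response strategy": "high",
    "risk acceptance for critical systems": "high",
    "AI governance policy": "high",
}


def oversight_for(task):
    """Look up the oversight mode for a task.

    Assumption: tasks that have not been classified are treated as
    high risk, so they escalate to a human by default.
    """
    tier = TASK_RISK.get(task, "high")
    return DECISION_MATRIX[tier]


print(oversight_for("vendor risk assessment").value)
print(oversight_for("new unclassified task").value)
```

Keeping the matrix as plain data makes it easy to revise as AI capabilities, threats, and regulations change, and the default for unknown tasks gives a built-in escalation path.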


Implementing AI-Driven Risk Management in Healthcare

AI is transforming risk management in healthcare, but turning its potential into practice requires a thoughtful and compliant approach. Here's how AI can be effectively integrated across the risk management lifecycle.

Using AI Across the Risk Management Lifecycle

To embed AI into healthcare risk management, organizations can adopt an Enterprise Risk Management (ERM) framework [10][4]. This approach allows AI to play a central role in identifying emerging risks, assessing vulnerabilities, prioritizing threats, and speeding up responses to incidents. It also supports ongoing monitoring and informed decision-making. By following this lifecycle-based strategy, healthcare providers can strengthen their risk management processes with AI-driven insights.

Creating AI Governance and Compliance Frameworks

Governance is critical when implementing AI in healthcare. It extends beyond standard IT practices to include medical ethics and clinical guidelines [12]. In the U.S., frameworks like the NIST AI Risk Management Framework (released on January 26, 2023) offer voluntary guidance for integrating AI in a secure and compliant way [10]. This framework emphasizes key principles such as accountability, transparency, fairness, and safety to ensure AI systems contribute positively while minimizing harm [11][12].

Defending Against AI-Powered Threats

AI's adoption also brings new cybersecurity challenges. Risks include data breaches, opaque algorithms, and vulnerabilities in AI-driven medical devices [2]. Generative AI introduces additional concerns like data leaks, algorithm tampering, and deepfakes. Furthermore, interconnected medical devices - such as pacemakers or insulin pumps - are increasingly targeted by ransomware and denial-of-service attacks [2].

To address these threats, healthcare organizations must adapt their cybersecurity strategies. This includes improving monitoring protocols, conducting regular security assessments of AI systems, and deploying defenses tailored to AI-specific risks. These measures are vital to safeguarding sensitive medical data and ensuring the reliability of AI-powered tools.

Conclusion: The Future of AI and Human Expertise in Risk Management

Healthcare cybersecurity is at a turning point. AI is transforming how threats are detected, data is analyzed, and operations are streamlined. But with these advancements come new risks. The future lies in the hands of risk managers who can skillfully balance AI's capabilities with the human judgment needed for thoughtful, strategic decisions.

Key Takeaways for Healthcare Risk Managers

To succeed in AI-driven risk management, healthcare organizations must strike the right balance. AI excels at processing large volumes of data and automating repetitive tasks, but human judgment remains critical for addressing complex, strategic challenges. Risk management teams need to build expertise in AI, from understanding how these systems work to recognizing their limits and knowing when human oversight is essential. Additionally, as AI tools and their vendors introduce new vulnerabilities, strengthening third-party risk management becomes crucial for protecting healthcare IT systems.

By blending advanced AI technologies with ongoing skill development and strong governance practices, healthcare organizations can create a more proactive and resilient defense against cyber threats.

What's Next for AI-Enhanced Risk Management

Looking ahead, the journey will require constant adaptation in both strategy and technology. As cyber threats evolve, so too will the ways attackers and defenders use AI. Healthcare organizations that embrace AI while embedding secure-by-design principles into their infrastructure will be better equipped to safeguard patient data and maintain operational stability.

This collaboration between AI and human expertise isn't just about efficiency - it’s about building a stronger, more resilient healthcare system. By combining AI’s ability to analyze data with the nuanced judgment of human professionals, healthcare risk managers can anticipate emerging threats, navigate shifting regulations, and protect the patients and communities they serve. This partnership ensures a forward-thinking approach to cybersecurity, grounded in both innovation and human insight.

FAQs

How does AI support healthcare risk managers in strengthening cybersecurity?

AI is transforming the way healthcare risk managers tackle cybersecurity threats. Through real-time monitoring, it can spot potential risks early, preventing them from growing into bigger problems. With the help of predictive analytics, AI identifies weak points and forecasts future threats, allowing for smarter, forward-thinking decisions.

It also takes over labor-intensive tasks like conducting risk assessments and evaluating third-party vendors. This not only saves time but also boosts accuracy. Another critical area AI supports is the monitoring of Internet of Medical Things (IoMT) devices, ensuring these connected tools stay secure from cyber threats. By blending human expertise with AI-powered insights, healthcare organizations can create more resilient defenses to face ever-changing cybersecurity risks.

What key skills do healthcare risk managers need to successfully use AI tools?

Healthcare risk managers need a mix of technical know-how and strategic insight to make the most of AI tools. Here are some key skills they should focus on:

- Understanding AI: A basic grasp of how AI works and its potential uses in risk management is essential.

- Data interpretation: Knowing how to analyze and act on insights from AI-generated data is crucial for making informed decisions.

- Cybersecurity knowledge: Staying updated on current threats and understanding how AI can help detect and prevent them is a must.

- Regulatory knowledge: Being well-versed in healthcare regulations ensures that AI tools are used ethically and responsibly.

- Team collaboration: Strong communication and teamwork skills are needed to work effectively with technical experts and integrate AI solutions smoothly.

By mastering these areas and applying their healthcare expertise, risk managers can use AI to enhance decision-making, improve efficiency, and tackle tough challenges in healthcare cybersecurity.

When is it important for human judgment to take precedence over AI in healthcare risk management?

When ethical concerns, biases, or gaps in data come into play, human judgment must take precedence over AI. This becomes even more crucial in situations involving sensitive or complex patient factors that AI might struggle to interpret or address effectively.

In high-pressure or uncertain circumstances - like ethical dilemmas or cases that demand empathy and an understanding of cultural nuances - human expertise is indispensable. It ensures decisions are not only accurate but also morally responsible. While AI serves as a powerful tool, its true value lies in supporting human insight, not replacing it.