The Governance Gap: Why Traditional Risk Management Fails with AI

Post Summary

AI is reshaping healthcare cybersecurity, offering advanced tools for detecting threats and managing AI-powered medical devices. However, it also introduces significant risks like data breaches, algorithmic failures, and cyberattacks on medical systems. Traditional risk management approaches can't keep up with AI's dynamic nature, opaque models, and evolving threats.

Key challenges include:

- Static Assessments: Conventional periodic reviews fail to address AI's changing risk profiles, such as model drift and unpredictable system errors.

- Black Box Models: AI's lack of transparency makes it hard to trace decisions, identify biases, or ensure accountability.

- Fragmented Oversight: Many healthcare organizations lack dedicated AI governance, leading to unclear accountability and increased vulnerabilities.

- Emerging Threats: AI-specific risks like data poisoning, adversarial attacks, and deepfake scams expose healthcare systems to new dangers.

To address these gaps, healthcare organizations must adopt AI-specific governance strategies, including:

- Forming cross-functional committees to oversee AI risks.

- Using frameworks like the NIST AI Risk Management Framework (AI RMF) to guide AI oversight.

- Implementing continuous monitoring systems to track AI performance and security.

Without tailored governance, healthcare systems remain vulnerable to AI-related failures and cyber risks, jeopardizing patient safety and operational stability.

AI Governance Gaps in Healthcare: Key Statistics and Challenges

Key Governance Gaps in Managing AI Risks

Static Assessments vs. AI's Changing Nature

Traditional risk management relies on periodic reviews, but AI systems are anything but static. As input data, clinical practice, and the models themselves change, risk profiles shift in ways that periodic reviews struggle to capture. One well-known form of this is model drift, in which a model's performance degrades over time as real-world data diverges from the data it was trained on, producing unexpected errors [1][2].

The issue with annual or quarterly reviews is that they provide only a limited snapshot of a system that’s always changing. Without mechanisms for continuous monitoring, validation, and retraining, organizations are left exposed to risks that may only become apparent after a failure occurs [1][2]. This gap leaves decision-makers in the dark, reacting to problems instead of preventing them.
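One way to picture continuous monitoring is a scheduled check that compares the distribution of a model's recent output scores against a baseline captured at validation time. The sketch below uses the Population Stability Index (PSI), a common drift heuristic that the sources cited here do not prescribe. The function names, bin count, and the 0.1 and 0.25 thresholds are illustrative assumptions, not requirements from any framework.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float], current: Sequence[float], bins: int = 10
) -> float:
    """Return the PSI between two samples, using equal-width bins over the baseline range."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into the edge bins
            counts[idx] += 1
        eps = 1e-6  # avoid log(0) when a bin is empty
        return [max(c / len(values), eps) for c in counts]

    p = proportions(baseline)
    q = proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def drift_status(psi: float, warn: float = 0.1, alert: float = 0.25) -> str:
    """Map a PSI value to a status label; the thresholds are rule-of-thumb assumptions."""
    if psi >= alert:
        return "alert"
    if psi >= warn:
        return "warn"
    return "stable"


if __name__ == "__main__":
    validation_scores = [i / 100 for i in range(100)]       # hypothetical baseline scores
    recent_scores = [0.9 + i / 1000 for i in range(100)]    # hypothetical shifted scores
    psi = population_stability_index(validation_scores, recent_scores)
    print(drift_status(psi))
```

Run on a schedule (daily or weekly, for instance), a check like this turns the periodic snapshot into a trend line, so a "warn" status can trigger revalidation before a failure surfaces in clinical use.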

Black Box Models and Explainability Problems

Many advanced AI systems, such as deep learning models and large language models, function as "black boxes." These systems rely on statistical correlations rather than transparent, rule-based logic [1]. The challenge? Traditional risk assessments depend on understanding how a system works, but with black box models, even their developers may not fully grasp how specific decisions are made.

"Intelligent systems can be subject to 'hallucinations', producing incorrect or inaccurate outputs, which requires careful supervision of the generated responses." - Di Palma et al. [1]

This lack of clarity makes it harder to identify biases, trace potential data poisoning, or ensure informed consent [4][5]. The opacity also complicates governance, as it becomes difficult to assign accountability when something goes wrong.

Fragmented Ownership and Accountability

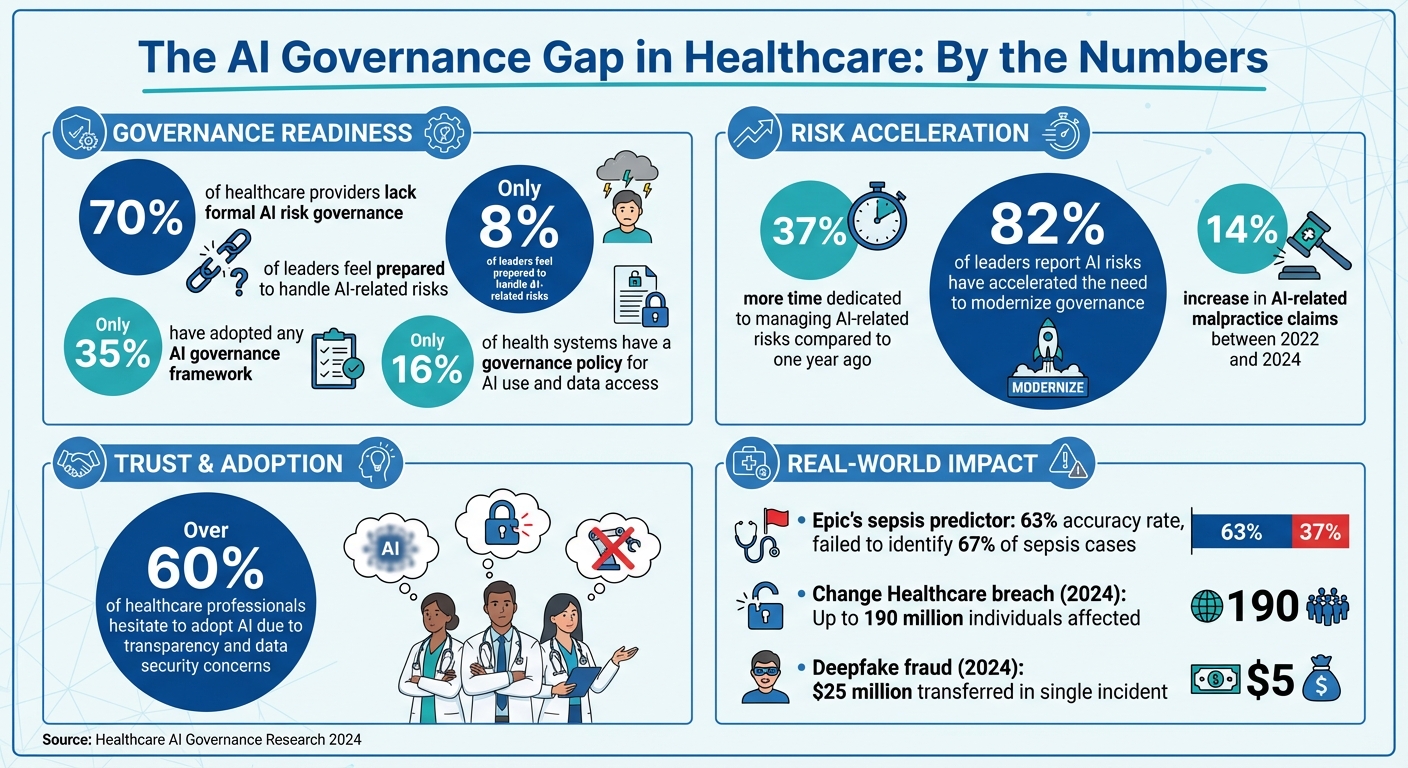

Technical challenges aside, the fragmented nature of AI oversight significantly worsens risk management. A survey found that 70% of healthcare providers lack formal AI risk governance, and only 35% have adopted any kind of framework. This leaves accountability unclear and risks unaddressed [6][8].

Experts describe this as "diffused accountability", where no single entity takes full responsibility [8]. IT teams handle infrastructure, compliance officers focus on regulations, and clinical staff use the tools, but no one oversees AI risks comprehensively. This fragmented approach often leads to oversight failures, delayed responses to risks, and persistent vulnerabilities. When issues arise, questions of legal liability between AI developers, healthcare providers, and institutions become tangled and unresolved [7].

AI-Specific Risks in Healthcare Cybersecurity

AI-Specific Threats in Healthcare

AI systems in healthcare face threats that traditional security measures just aren’t equipped to handle. For instance, data poisoning happens when attackers tamper with training data or patient records, leading AI models to make flawed diagnoses or treatment suggestions [4]. Then there are adversarial attacks, where bad actors feed the AI inputs specifically designed to mislead it, and model inversion, in which attackers query a model and use its outputs to reconstruct sensitive patient details from its training data.

Conventional attacks already show what is at stake when healthcare systems fail. In September 2020, a ransomware attack on a university hospital in Düsseldorf paralyzed IT systems, forcing the transfer of a heart patient to another facility 30 kilometers away. Tragically, the patient passed away before receiving treatment [1]. In 2024, a cyberattack on Change Healthcare disrupted claims processing nationwide and affected as many as 190 million individuals. The breach potentially exposed insurance IDs, billing information, Social Security numbers, and treatment data [10].

Generative AI has also opened up new avenues for cyberattacks. In February 2024, an Arup Group employee fell victim to a deepfake video call and transferred $25 million. The employee was the only real person on the call, highlighting just how advanced AI-powered social engineering has become [12]. Unsurprisingly, over 60% of healthcare professionals are hesitant to adopt AI, citing concerns about transparency and data security [11].

These emerging threats not only pose technical challenges but also bring significant regulatory and clinical hurdles, as explained below.

Regulatory Gaps in AI Governance

The rapid evolution of AI has outpaced the ability of regulations to keep up. While frameworks like HIPAA, NIST CSF, and HICP provide general security guidelines, they fall short when addressing the unique risks posed by AI. This leaves healthcare organizations largely responsible for validating and ensuring AI safety on their own [14][9]. With AI adoption moving faster than the development of risk, legal, and compliance structures, governance gaps are widening [6][14]. In fact, only 35% of companies have an AI governance framework in place, and just 8% of leaders feel prepared to handle AI-related risks [6].

"Organizations often look to regulation as a north star for governance, but AI legislation is a moving target. Regulations can arrive out of nowhere, prompting compliance scrambles – or move so slowly it's unclear what to prepare for and if they're coming at all." - Riskonnect [6]

This regulatory uncertainty creates blind spots that can lead to operational disruptions, reputational harm, and legal consequences [14]. It also causes what’s known as "responsibility diffusion", where accountability for AI risks becomes unclear among developers, providers, and payers [8]. Organizations are now dedicating 37% more time to managing AI-related risks than they did a year ago, and 82% of leaders report that AI risks have accelerated the need to modernize governance processes [15].

Without clear rules in place, these governance gaps don’t just create compliance headaches - they also amplify clinical risks, as we’ll explore next.

Clinical Impacts and Automation Bias

The governance challenges described above spill over into clinical settings, where they create dangerous vulnerabilities. One major issue is automation bias, where clinicians place too much trust in AI recommendations and fail to apply their own critical judgment. This can result in misdiagnoses, delayed treatments, or inappropriate care plans that jeopardize patient safety [2]. For example, Epic's sepsis predictor failed to identify 67% of sepsis cases at the University of Michigan’s hospital system. Its reported area under the ROC curve was just 0.63, and it produced so many false alerts that it contributed to alert fatigue among clinicians [13].

AI tools also struggle to account for nuanced patient factors, and biases in training data can worsen health disparities [2][16]. When AI systems learn from flawed or biased data, they risk perpetuating errors through a process called error propagation [17]. Alarmingly, only 16% of health systems have a governance policy specifically addressing AI use and data access [13], leaving the majority exposed to these risks.
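The disparity concern above can be made measurable. As a minimal sketch, assuming an organization can label a validation sample with a patient group, the true outcome, and the model's prediction, the snippet below compares sensitivity (the share of true cases the model catches) across groups. The record layout and function names are hypothetical, and a single metric like this is only a starting point for a bias review.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Each record is (group label, actual positive?, predicted positive?); layout is an assumption.
Record = Tuple[str, bool, bool]


def sensitivity_by_group(records: Iterable[Record]) -> Dict[str, float]:
    """Return the fraction of actual positives that the model flagged, per group."""
    true_positives: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, actual, predicted in records:
        if actual:
            positives[group] += 1
            if predicted:
                true_positives[group] += 1
    return {g: true_positives[g] / positives[g] for g in positives}


def sensitivity_gap(records: Iterable[Record]) -> float:
    """Return the difference between the best-served and worst-served group."""
    rates = sensitivity_by_group(list(records))
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    sample = [
        ("group_a", True, True), ("group_a", True, True),
        ("group_a", True, True), ("group_a", True, False),
        ("group_b", True, True), ("group_b", True, False),
        ("group_b", True, False), ("group_b", True, False),
    ]
    print(sensitivity_by_group(sample))
    print(sensitivity_gap(sample))
```

A governance committee could set a maximum acceptable gap as one of its thresholds and require review whenever a model exceeds it.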

"Overreliance on AI tools in patient care can lead to critical errors." - Morgan Lewis [2]

The lack of transparency in how AI makes decisions further complicates matters. When physicians can’t explain AI-driven treatment recommendations to patients, it erodes trust and makes accountability murky when errors occur [16][17]. Without continuous oversight, these risks can snowball, creating vulnerabilities that traditional risk management strategies simply aren’t equipped to handle.

Building an AI Governance Model for Healthcare

Addressing the challenges in healthcare AI requires a solid governance framework. This is essential not only to harness AI's potential but also to safeguard patients and healthcare systems [9][19]. To achieve this, organizations need to establish clear policies, define accountability, and ensure oversight throughout the AI lifecycle.

Creating a Cross-Functional AI Governance Committee

The foundation of effective AI governance lies in assembling a multidisciplinary committee. This group should include experts from fields such as medical informatics, clinical leadership, legal, compliance, safety, quality, data science, bioethics, patient advocacy, and IT [9][19][20].

"Governance isn't just a safeguard - it's the frame that holds everything together. As AI becomes a permanent fixture in health care, thoughtful governance will be the difference between tools that merely function and those that truly transform care."

– IHI Leadership Alliance [9]

This committee is responsible for setting enterprise-wide AI policies, prioritizing use cases, assessing risks, and defining what constitutes "governable AI" [9][19]. It also establishes thresholds, control measures, and reporting processes to address issues promptly. Securing support at the board level is essential, as it ensures that AI initiatives align with the organization's broader strategic goals [18].

"Risk and compliance leaders can't be brought in after the fact. An AI risk assessment isn't a rubber stamp – it's a structured process that ensures decisions about AI are aligned with ethical, legal, and operational standards from day one."

– Clivetty Martinez, PhD, Director, Compliance and Privacy Services, Granite GRC [20]

Adopting AI-Specific Risk Management Frameworks

Using AI-specific frameworks like the NIST AI Risk Management Framework can help organizations focus on key principles such as fairness, transparency, accountability, and security. These frameworks guide the identification, evaluation, and mitigation of AI-related risks, including bias and explainability concerns. Organizations should enforce these frameworks through regular audits, centralized compliance reporting, and clearly defined accountability. Appointing an AI Compliance Officer can further enhance communication between executives and the board [18][19].

Extending Governance to Third-Party Risk Management

AI governance shouldn't stop at internal processes. Third-party tools and services often lack the necessary transparency to evaluate AI-related practices like model training, bias mitigation, and data lineage [23].

To address this, organizations must apply rigorous due diligence when managing third-party vendors. This includes asking vendors about their training data, model validation processes, bias safeguards, and how they protect PHI (Protected Health Information). Explicit contractual controls should also be implemented to ensure compliance.

Platforms such as Censinet RiskOps™ help centralize AI-related policies, risks, and tasks. Tools like Censinet AI™ streamline issue identification, route findings to the appropriate teams for immediate action, and maintain continuous oversight. This helps governance extend across both internal and external operations.


How to Implement AI Governance

To put AI governance into practice, start with immediate steps to reduce risks and establish long-term strategies to oversee evolving systems.

Create an AI Inventory and Conduct Risk Assessments

The first step is to compile a detailed inventory of all AI systems in use. In November 2025, the Health Sector Coordinating Council (HSCC) previewed its 2026 AI cybersecurity guidance. This guidance, developed by its Cybersecurity Working Group and an AI Cybersecurity Task Group of 115 healthcare organizations, stressed the importance of maintaining a complete inventory. Such an inventory helps organizations understand each AI system’s function, data dependencies, and potential security risks [3].

This inventory should also classify AI tools based on a five-level autonomy scale to ensure appropriate human oversight, depending on the risk each system poses [3]. Once the inventory is complete, conduct gap analyses to pinpoint high-risk applications - those that could significantly impact patient care, compliance, or financial stability [21]. Use frameworks like the NIST AI Risk Management Framework to guide these assessments, ensuring robust security and data control measures are in place [3][22].
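An inventory like this can start as a simple structured record per system. The sketch below is one possible shape, not the HSCC's schema: the field names, the placeholder `LEVEL_1` to `LEVEL_5` autonomy labels, and the scoring weights in `risk_tier` are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List


class AutonomyLevel(IntEnum):
    """Placeholder five-level scale; the meaning of each level is assumed, not taken from HSCC."""
    LEVEL_1 = 1
    LEVEL_2 = 2
    LEVEL_3 = 3
    LEVEL_4 = 4
    LEVEL_5 = 5


@dataclass
class AISystem:
    name: str
    owner: str                  # accountable team or person
    purpose: str
    data_sources: List[str]     # data dependencies
    autonomy: AutonomyLevel
    touches_phi: bool
    affects_patient_care: bool


def risk_tier(system: AISystem) -> str:
    """Assign a coarse tier; the weights and cutoffs are hypothetical."""
    score = int(system.autonomy)
    if system.touches_phi:
        score += 2
    if system.affects_patient_care:
        score += 3
    if score >= 7:
        return "high"
    if score >= 4:
        return "medium"
    return "low"


def high_risk_systems(inventory: List[AISystem]) -> List[str]:
    """Return the names of systems that should be assessed first."""
    return [s.name for s in inventory if risk_tier(s) == "high"]


if __name__ == "__main__":
    inventory = [
        AISystem("sepsis-alert", "Clinical Informatics", "Early sepsis warning",
                 ["EHR vitals", "lab results"], AutonomyLevel.LEVEL_3, True, True),
        AISystem("faq-chatbot", "Patient Access", "Answer visiting-hours questions",
                 ["public website"], AutonomyLevel.LEVEL_2, False, False),
    ]
    print(high_risk_systems(inventory))
```

Keeping the owner and data sources on every record also addresses the accountability gap directly, since each system has a named party responsible for it.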

Armed with this understanding, you can set up safeguards to address immediate vulnerabilities.

Implement Minimum Governance Guardrails

To address risks right away, establish safeguards that can be implemented quickly. Examples include running bias checks to identify fairness issues before deploying systems, setting up logging requirements to track AI decisions and data usage, and applying human-in-the-loop policies for critical decisions - especially those affecting patient care or sensitive data.

These measures should align with regulatory standards like HIPAA and FDA guidelines [3]. Start by focusing on key risk areas such as privacy, data protection, security vulnerabilities, bias, transparency, and safety [24]. Define your organization’s risk tolerance levels and conduct interactive risk walkthroughs with stakeholders to clarify roles and responsibilities [22][24].
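Two of the guardrails mentioned above, decision logging and human-in-the-loop review, can be sketched together. This is an illustrative outline under stated assumptions: the `AIDecision` fields, the 0.9 confidence cutoff, and the status strings are hypothetical, and a real deployment would need to keep PHI out of log entries and send them to a protected audit store.

```python
import json
import logging
from dataclasses import asdict, dataclass
from datetime import datetime, timezone

logger = logging.getLogger("ai_audit")  # hypothetical audit logger name


@dataclass
class AIDecision:
    system: str
    model_version: str
    recommendation: str       # keep this free of PHI in real use
    confidence: float
    affects_patient_care: bool


def requires_human_review(decision: AIDecision, min_confidence: float = 0.9) -> bool:
    """Send patient-care decisions and low-confidence outputs to a human reviewer."""
    return decision.affects_patient_care or decision.confidence < min_confidence


def record_and_route(decision: AIDecision, min_confidence: float = 0.9) -> str:
    """Log the decision as a JSON line and return its routing status."""
    if requires_human_review(decision, min_confidence):
        status = "pending_human_review"
    else:
        status = "auto_approved"
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "status": status,
        **asdict(decision),
    }
    logger.info(json.dumps(entry))
    return status


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    triage = AIDecision("triage-assist", "1.4.2", "escalate to ICU consult", 0.97, True)
    print(record_and_route(triage))
```

Logging the model version with every decision matters for later investigation, because it ties an outcome to the specific model that produced it.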

Once these immediate safeguards are in place, ongoing monitoring is essential to manage risks as they evolve.

Use Platforms for Continuous Monitoring

AI systems don’t stay static - they evolve, making continuous oversight a must. Tools like Censinet RiskOps™ offer centralized management of AI-related policies, risks, and tasks. Meanwhile, Censinet AI™ simplifies vendor security assessments, gathers details on product integrations and fourth-party risks, and routes critical findings to the appropriate stakeholders, including members of the AI governance committee.

These platforms act as a centralized hub for AI risk management. With real-time data displayed in an intuitive dashboard, the right teams can address issues promptly and effectively. Continuous monitoring also ensures human oversight remains intact through configurable rules, enabling scalable risk management without compromising safety.

Conclusion: Closing the AI Governance Gap

Traditional risk management frameworks fall short when it comes to addressing the unique challenges of AI. Static assessments struggle to keep pace with rapidly evolving AI systems. Black box models obscure how decisions are made, and fragmented ownership creates accountability gaps. These flaws aren't just theoretical - they come with real-world consequences. For instance, AI-related malpractice claims rose by 14% between 2022 and 2024 [25], highlighting the urgent need for a more tailored approach to governance.

Addressing these risks requires a shift from reactive measures to a more strategic and proactive approach. As federal agencies and state regulations push for safer and more transparent AI usage [19], proactive governance becomes not just a best practice but a necessity. This approach safeguards patient safety, fosters trust, and reduces malpractice risks. To achieve this, healthcare organizations need cross-functional committees, AI-specific governance frameworks, and continuous oversight that spans the entire AI lifecycle - from initial design and development to deployment and ongoing monitoring.

The first steps include creating a comprehensive inventory of AI systems, implementing robust guardrails, and ensuring real-time oversight. Tools like Censinet RiskOps™ and Censinet AI™ can help centralize these efforts by providing visibility and routing critical findings to the right stakeholders, such as members of AI governance committees.

Healthcare organizations must move away from reactive compliance and embrace strategic oversight. The governance gap won't close itself - it demands deliberate action, specialized tools, and a sustained commitment. With the right frameworks and technologies, healthcare leaders can unlock AI's potential while prioritizing what matters most: patient safety and high-quality care.

FAQs

Why aren’t traditional risk management methods enough for managing AI risks in healthcare?

Traditional risk management approaches often fall short when applied to AI in healthcare. Why? They’re simply not built to tackle the distinct challenges that AI brings to the table. These challenges include algorithmic bias, opaque decision-making processes, data security risks, and the unpredictable behavior of systems that learn and evolve over time.

AI’s ability to change and adapt adds another layer of complexity. Static, outdated frameworks just can’t keep pace. To manage these risks effectively, healthcare organizations need to adopt proactive governance, implement continuous monitoring, and develop tailored oversight models. These strategies are key to addressing emerging risks and ensuring AI systems remain safe and dependable in healthcare environments.

What steps can healthcare organizations take to effectively govern AI systems?

Healthcare organizations can manage AI systems responsibly by establishing a clear governance framework that emphasizes accountability, openness, fairness, and safety. This means setting up cross-functional AI committees, embedding governance practices throughout the AI lifecycle, and performing regular audits to detect and address potential biases.

To build trust and ensure compliance, organizations should align with federal regulations, consider using frameworks like the NIST AI RMF, and involve key stakeholders - such as clinicians, patients, and operational teams - in oversight and decision-making processes. Staying ahead of emerging risks through continuous monitoring and updates to governance practices is essential for maintaining the reliability and integrity of AI systems in healthcare.

What are the key AI-related risks healthcare providers should watch for?

Healthcare providers need to stay alert to AI-specific risks that could threaten patient safety, compromise data security, and disrupt operations. Key concerns include data breaches, algorithm bias that might result in unfair or inaccurate decisions, and a lack of transparency in how AI systems make their choices.

Other pressing dangers involve adversarial attacks, where bad actors manipulate AI systems, deepfake impersonations for fraudulent purposes, and ransomware targeting AI-powered healthcare infrastructure. On top of that, weaknesses in Internet of Medical Things (IoMT) devices could expose sensitive patient data or even interfere with critical services.

Addressing these challenges requires implementing strong AI governance practices, performing regular audits, and ensuring that AI systems are built and deployed with security and ethical principles as top priorities.