The Healthcare AI Paradox: Better Outcomes, New Risks

Post Summary

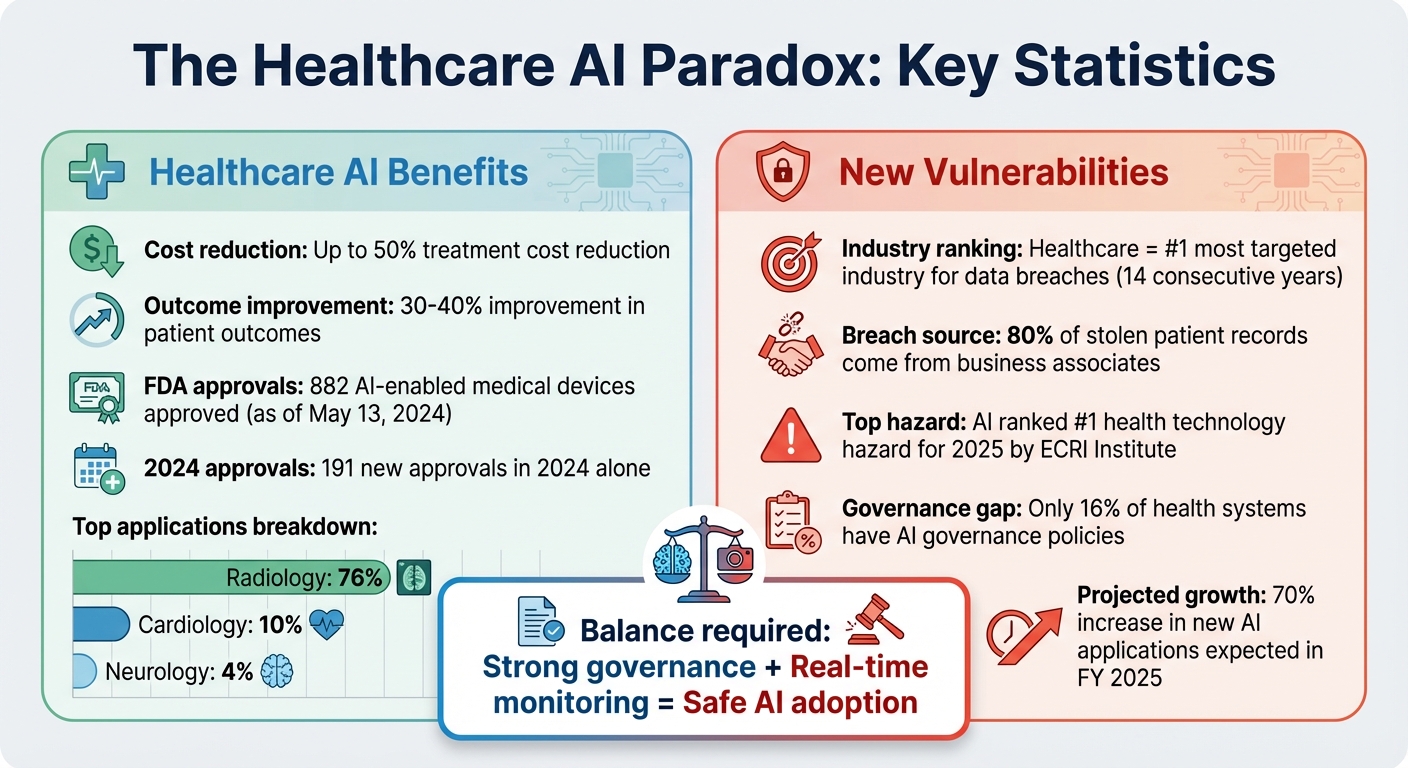

AI is transforming healthcare by improving diagnostics, tailoring treatments, and streamlining operations, with some projections suggesting it could cut treatment costs by up to 50%. It offers faster, more accurate diagnoses and lighter administrative workloads, benefiting both patients and providers. These advancements also bring risks, however, including cyberattacks, data breaches, and algorithmic errors. In 2020, for example, a ransomware attack on a hospital in Germany was linked to a patient's death, highlighting the critical need for strong security measures.

To balance progress with safety, healthcare organizations must implement robust governance, monitor risks in real time, and secure third-party systems. Tools like Censinet RiskOps™ and frameworks such as the NIST AI Risk Management Framework help manage these challenges, ensuring AI's potential is harnessed responsibly while protecting patient safety.

Healthcare AI Benefits and Risks: Key Statistics and Impact

How AI Improves Healthcare Delivery

AI is reshaping healthcare delivery in concrete ways, from improving diagnostic accuracy to streamlining administrative tasks. By enhancing precision, tailoring treatments, and cutting costs, these technologies are making care more efficient and patient-centric across both clinical and operational areas.

Better Diagnostics and Personalized Treatment

AI has transformed how patient data is analyzed. Because it can process vast amounts of information quickly, it enables faster, more precise diagnoses and treatment plans tailored to individual needs [1]. Regulatory activity reflects this: as of May 13, 2024, the FDA had authorized 882 AI-enabled medical devices, 191 of them in 2024 alone. Radiology leads, accounting for 76% of these devices, followed by cardiology (10%) and neurology (4%) [5]. These numbers highlight AI's rapidly growing presence in clinical practice.
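To put the specialty shares in concrete terms, the short sketch below converts them into approximate device counts. The percentages are rounded, so the results are estimates rather than figures taken from the FDA list itself.

```python
# Approximate device counts implied by the rounded specialty shares above.
TOTAL_DEVICES = 882  # FDA-listed AI-enabled devices as of May 13, 2024

SPECIALTY_SHARES = {
    "radiology": 0.76,
    "cardiology": 0.10,
    "neurology": 0.04,
}


def approximate_counts(total: int, shares: dict) -> dict:
    """Convert percentage shares into rounded device counts (estimates only)."""
    return {name: round(total * share) for name, share in shares.items()}


if __name__ == "__main__":
    counts = approximate_counts(TOTAL_DEVICES, SPECIALTY_SHARES)
    for name, n in counts.items():
        print(f"{name}: ~{n}")
    print(f"all other specialties: ~{TOTAL_DEVICES - sum(counts.values())}")
```

Run as a script, it reports roughly 670 radiology, 88 cardiology, and 35 neurology devices, leaving about 89 across all other specialties.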

AI tools aren't limited to diagnostics. They also monitor vital signs in real time and alert providers to critical changes that could signal a worsening condition. Beyond immediate alerts, these systems can recommend personalized lifestyle changes and therapeutic adjustments for managing chronic illnesses, based on a patient's unique health data [1]. This supports timely intervention and better long-term disease management.
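The alerting step can be sketched as a rule check over a stream of readings. This is a simplified stand-in: the AI systems described above typically learn patterns from patient data, whereas the sketch below uses fixed, hypothetical ranges (`HYPOTHETICAL_LIMITS`) purely to show where an alert would be raised. The ranges are not clinical guidance.

```python
from dataclasses import dataclass
from typing import Optional

# HYPOTHETICAL ranges for illustration only; not clinical guidance.
# Deployed systems use validated scoring models and clinician-set limits.
HYPOTHETICAL_LIMITS = {
    "heart_rate": (40, 130),   # beats per minute
    "spo2": (90, 100),         # oxygen saturation, percent
    "systolic_bp": (90, 180),  # mmHg
}


@dataclass
class Reading:
    patient_id: str
    vital: str
    value: float


def check_reading(reading: Reading) -> Optional[str]:
    """Return an alert message if a reading falls outside its configured range."""
    limits = HYPOTHETICAL_LIMITS.get(reading.vital)
    if limits is None:
        return None  # no rule configured for this vital sign
    low, high = limits
    if not low <= reading.value <= high:
        return (f"ALERT {reading.patient_id}: {reading.vital}={reading.value} "
                f"outside [{low}, {high}]")
    return None


if __name__ == "__main__":
    stream = [
        Reading("p-001", "heart_rate", 88),
        Reading("p-001", "spo2", 86),
        Reading("p-002", "systolic_bp", 192),
    ]
    for r in stream:
        alert = check_reading(r)
        if alert:
            print(alert)
```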

"AI offers significant advantages in hospitals, increasing the speed and accuracy of diagnoses." - Gianmarco Di Palma et al. [1]

By uncovering complex patterns in healthcare data, AI often outperforms traditional methods [5]. It can also reduce some biases that stem from human error, supporting more equitable delivery of quality care [4], although, as discussed below, AI can introduce biases of its own.

Streamlined Operations and Lower Costs

AI’s impact extends beyond clinical settings, revolutionizing administrative and financial operations in healthcare. Natural Language Processing (NLP) automates documentation, reducing the burden of repetitive paperwork and allowing staff to dedicate more time to patient care [2]. AI-powered virtual assistants further enhance efficiency by scheduling appointments, sending medication reminders, answering common medical questions, and managing follow-ups [2].

One of AI's standout contributions is in revenue cycle management. These systems speed up payment collection, improve patient retention, and optimize staffing and supply chain logistics [6]. They can also automate prior authorizations and detect fraud [7]. The projected financial benefits are striking: AI is expected to cut treatment costs by up to 50% while improving patient outcomes by 30-40% [2].
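Production fraud-detection systems rely on far richer models, but the core idea of flagging claims that deviate sharply from the norm can be sketched with a robust outlier test. The example below swaps in the modified z-score, which is based on the median and the median absolute deviation (MAD) so that the outliers themselves do not distort the baseline. The claim fields are hypothetical, and 3.5 is a commonly cited cutoff rather than a required one.

```python
import statistics


def flag_outlier_claims(claims, threshold=3.5):
    """Return IDs of claims whose amount is unusually far from the median
    for the same billing code, using the modified z-score:
        0.6745 * (amount - median) / MAD
    Field names ("id", "code", "amount") are hypothetical.
    """
    by_code = {}
    for claim in claims:
        by_code.setdefault(claim["code"], []).append(claim)

    flagged = []
    for group in by_code.values():
        amounts = [c["amount"] for c in group]
        median = statistics.median(amounts)
        mad = statistics.median([abs(a - median) for a in amounts])
        if mad == 0:
            continue  # no spread in this group, so no basis for comparison
        for c in group:
            score = 0.6745 * (c["amount"] - median) / mad
            if abs(score) > threshold:
                flagged.append(c["id"])
    return flagged


if __name__ == "__main__":
    claims = [
        {"id": "c1", "code": "CODE_A", "amount": 100.0},
        {"id": "c2", "code": "CODE_A", "amount": 105.0},
        {"id": "c3", "code": "CODE_A", "amount": 98.0},
        {"id": "c4", "code": "CODE_A", "amount": 102.0},
        {"id": "c5", "code": "CODE_A", "amount": 101.0},
        {"id": "c6", "code": "CODE_A", "amount": 900.0},
    ]
    print(flag_outlier_claims(claims))  # ['c6']
```

A flagged claim is a lead for human review, not a fraud finding.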

New Vulnerabilities Created by Healthcare AI

While AI is reshaping the healthcare landscape, it also introduces new security risks that organizations must address. These vulnerabilities go beyond the usual IT threats, exposing healthcare systems to a broader range of challenges, including cyberattacks, faulty clinical decisions, and weaknesses in vendor systems.

Cybersecurity Attacks and Data Breaches

One of the most pressing concerns is the rise in cyberattacks, including ransomware, against AI systems that manage protected health information (PHI). For 14 consecutive years, healthcare has been the most targeted and costliest industry for data breaches [8]. Notably, 80% of stolen patient records now come from business associates rather than from direct attacks on hospitals [8], which makes vendor security a central concern. The risks tied to AI-controlled devices are significant too: the ECRI Institute ranked AI as the top health technology hazard for 2025, citing its potential for catastrophic failures [8].

Algorithmic Bias and Clinical Decision Errors

AI systems are not immune to errors. Generative models in particular can produce plausible but incorrect outputs, often called "hallucinations", that lead to flawed clinical decisions. Such errors can worsen disparities in patient care, especially when the AI is trained on biased or incomplete datasets.

The issue is further complicated by "black box" models, where the decision-making process of the AI is opaque. This lack of transparency makes it harder for healthcare providers to explain AI-driven decisions to patients or catch errors before they cause harm. When training data is skewed, AI systems might unintentionally deprioritize certain patient groups, reinforcing existing inequities in healthcare delivery.

Vendor and Third-Party Vulnerabilities

AI integrations with external vendors introduce additional weak points. Cybercriminals often exploit these connections using a "hub-and-spoke" approach: by compromising a single vendor, they can reach every healthcare organization connected to it. One successful attack can therefore multiply into many breaches.
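The hub-and-spoke effect can be made concrete by walking a dependency map outward from a compromised vendor. The sketch below runs a breadth-first search over a small, entirely hypothetical map; it also shows how a fourth party (here, a hosting provider used by a vendor) extends exposure to organizations that never contracted with it directly.

```python
from collections import deque

# Entirely hypothetical dependency map: each organization -> services it relies on.
DEPENDS_ON = {
    "hospital_a": ["ai_scribe_vendor", "billing_vendor"],
    "hospital_b": ["ai_scribe_vendor"],
    "clinic_c": ["billing_vendor"],
    "ai_scribe_vendor": ["cloud_host_x"],  # a fourth party from the hospitals' view
    "billing_vendor": [],
}


def exposed_by(compromised: str, depends_on: dict) -> set:
    """Return every organization that directly or indirectly relies on `compromised`."""
    # Invert the edges so we can walk from a supplier to the customers that use it.
    customers = {}
    for org, suppliers in depends_on.items():
        for supplier in suppliers:
            customers.setdefault(supplier, []).append(org)

    exposed, queue = set(), deque([compromised])
    while queue:
        node = queue.popleft()
        for org in customers.get(node, []):
            if org not in exposed:  # visit each organization once
                exposed.add(org)
                queue.append(org)
    return exposed


if __name__ == "__main__":
    print(sorted(exposed_by("cloud_host_x", DEPENDS_ON)))
    # ['ai_scribe_vendor', 'hospital_a', 'hospital_b']
```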

Emerging threats like data poisoning and model manipulation add another layer of concern. In these scenarios, attackers tamper with training data or alter algorithms, causing AI systems to produce harmful or misleading results. Many third-party AI vendors lack strong cybersecurity measures, leaving healthcare organizations vulnerable to breaches that may go unnoticed until significant damage is done.
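One common safeguard against undetected tampering with training data is to record a cryptographic digest of every file when a dataset is approved and re-check it before each training run. The sketch below assumes the training data lives as files under a single directory. It detects changes made after the manifest was recorded, not poisoned records that were already present, and the manifest itself must be stored where an attacker cannot rewrite it.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in 1 MiB chunks so large files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict:
    """Map each file's path (relative to data_dir) to its SHA-256 digest."""
    return {
        str(p.relative_to(data_dir)): sha256_of(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def changed_files(data_dir: Path, manifest: dict) -> list:
    """List files that were modified, added, or removed since the manifest was built."""
    current = build_manifest(data_dir)
    names = set(current) | set(manifest)
    return sorted(n for n in names if current.get(n) != manifest.get(n))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        root = Path(d)
        (root / "labels.csv").write_text("patient,label\n1,0\n")
        approved = build_manifest(root)
        (root / "labels.csv").write_text("patient,label\n1,1\n")  # simulated tampering
        print(changed_files(root, approved))  # ['labels.csv']
```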


Managing AI Risks in Healthcare Organizations

Healthcare organizations can balance AI-driven advancements with security by employing strong governance frameworks and automated risk monitoring. This process begins with careful management at the vendor level.

Third-Party Risk Management with Censinet RiskOps™

Vendor relationships become more manageable with tools like Censinet RiskOps™, which automates risk assessments and centralizes oversight. This platform empowers healthcare organizations to benchmark cybersecurity across their vendor networks, identifying vulnerabilities as they arise.

Censinet AI™ simplifies the process by automating vendor questionnaires, summarizing evidence, documenting integration details, and addressing fourth-party risks. Automation shortens assessment timelines, while a human-in-the-loop approach keeps people in charge of the final judgment, allowing risk teams to focus on high-priority decisions.
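The general idea of turning questionnaire answers into a prioritized review queue can be sketched as a weighted score. This is not how Censinet's products work; the control names, weights, and tier cutoffs below are hypothetical, and the resulting tier is meant to route a vendor to a human reviewer rather than to make the decision.

```python
from dataclasses import dataclass

# Hypothetical controls and weights; a real program would calibrate these.
CONTROL_WEIGHTS = {
    "mfa_enforced": 3,
    "encrypts_phi_at_rest": 3,
    "incident_response_plan": 2,
    "sub_processors_disclosed": 2,  # visibility into fourth parties
    "ai_model_change_notice": 1,
}


@dataclass
class VendorAssessment:
    name: str
    answers: dict  # control name -> True/False from the questionnaire
    handles_phi: bool


def control_score(assessment: VendorAssessment) -> float:
    """Fraction of weighted controls the vendor attests to (0.0 to 1.0)."""
    total = sum(CONTROL_WEIGHTS.values())
    met = sum(w for c, w in CONTROL_WEIGHTS.items() if assessment.answers.get(c, False))
    return met / total


def risk_tier(assessment: VendorAssessment) -> str:
    """Assign a hypothetical review tier; PHI access raises the bar."""
    score = control_score(assessment)
    if score < 0.5 or (assessment.handles_phi and score < 0.8):
        return "high"
    if score < 0.8:
        return "medium"
    return "low"


if __name__ == "__main__":
    vendor = VendorAssessment(
        name="example_ai_vendor",
        answers={"mfa_enforced": True, "encrypts_phi_at_rest": True},
        handles_phi=True,
    )
    print(vendor.name, round(control_score(vendor), 2), risk_tier(vendor))
```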

Implementing AI Governance and Oversight

Managing vendor risks is just one piece of the puzzle; healthcare organizations also need a robust AI governance strategy. Only 16% of health systems have implemented systemwide AI governance policies, leaving most without consistent oversight [10]. At the federal level, the U.S. Department of Health and Human Services (HHS) introduced its AI strategy on December 4, 2025, with a first pillar focused on governance and risk management to maintain public trust [11]. With HHS projecting a 70% rise in new AI applications for FY 2025 [11], comprehensive governance is now a necessity.

Organizations can turn to established guidelines like the NIST AI Risk Management Framework to oversee AI throughout its lifecycle, from selection through deployment to eventual decommissioning. In addition, the decision support interventions criterion of the ONC HTI-1 Final Rule (45 CFR 170.315(b)(11)) requires certified health IT to disclose critical details about AI and predictive algorithms, including their purpose, development process, data sources, and any known limitations [9].
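Internally, an organization might track whether each algorithm it deploys has the disclosure categories listed above on file. The record below is a hypothetical internal checklist, not the rule's official schema: it covers only the four categories named here, while the actual HTI-1 source-attribute list is longer.

```python
from dataclasses import dataclass, fields


@dataclass
class AlgorithmDisclosure:
    """Hypothetical internal record of the disclosure categories named above."""
    name: str
    purpose: str = ""
    development_process: str = ""
    data_sources: str = ""
    known_limitations: str = ""


def missing_fields(disclosure: AlgorithmDisclosure) -> list:
    """Return the names of disclosure fields that are still blank."""
    return [
        f.name
        for f in fields(disclosure)
        if not getattr(disclosure, f.name).strip()
    ]


if __name__ == "__main__":
    record = AlgorithmDisclosure(
        name="readmission-risk-model",  # hypothetical algorithm
        purpose="Estimate 30-day readmission risk at discharge",
        data_sources="Internal EHR encounters",
    )
    print(missing_fields(record))  # ['development_process', 'known_limitations']
```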

Censinet AI™ acts as a central hub for AI governance, routing assessment findings and tasks to the appropriate teams. This helps ensure that issues are addressed promptly by the right stakeholders, maintaining continuous oversight and accountability.

Real-Time Risk Monitoring and Response

To complement vendor management and governance, continuous monitoring is critical for addressing threats as they emerge. Each AI tool introduces potential cybersecurity risks [12]. Censinet One™ provides real-time risk assessments through an intuitive dashboard that aggregates data from all AI systems and vendor interfaces. This centralized platform helps organizations quickly identify and respond to new threats.
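The aggregation step behind such a dashboard can be sketched as collapsing raw findings into one row per system, ordered by worst severity. The field names and the four-level severity scale below are assumptions for illustration and do not reflect Censinet One™'s actual data model.

```python
from collections import defaultdict

# Assumed four-level severity scale.
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def summarize(findings):
    """Return (system, worst_severity, finding_count) rows, most urgent first."""
    worst = {}
    counts = defaultdict(int)
    for f in findings:
        system, severity = f["system"], f["severity"]
        counts[system] += 1
        # worst.get() is None for a new system, which ranks as 0.
        if SEVERITY_RANK[severity] > SEVERITY_RANK.get(worst.get(system), 0):
            worst[system] = severity
    rows = [(system, worst[system], counts[system]) for system in worst]
    # Sort by worst severity, then by number of findings, both descending.
    rows.sort(key=lambda row: (SEVERITY_RANK[row[1]], row[2]), reverse=True)
    return rows


if __name__ == "__main__":
    findings = [
        {"system": "triage-model", "severity": "medium"},
        {"system": "vendor-api", "severity": "critical"},
        {"system": "triage-model", "severity": "high"},
        {"system": "scribe", "severity": "low"},
    ]
    for row in summarize(findings):
        print(row)
```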

Effective risk management requires a proactive approach. Integrate security measures like endpoint protection, keep systems updated with the latest patches, and enforce stringent data risk management practices to minimize the likelihood of breaches [12]. The aim is to weave risk management seamlessly into the AI deployment process rather than treating it as an afterthought.

Conclusion: Managing AI Benefits and Risks Together

As explored earlier, healthcare stands at a crossroads with AI, offering groundbreaking advancements in diagnostics, personalized treatments, and operational efficiency. However, these benefits come with challenges, including cybersecurity threats, algorithmic biases, and compliance hurdles. The rapid pace of AI adoption has outstripped regulatory frameworks, leaving healthcare organizations to navigate these complexities with their own risk management strategies [3][13].

To effectively integrate AI into healthcare, proactive risk management must be woven into deployment processes. This involves continuous monitoring, well-defined governance structures, and tools tailored specifically for healthcare needs.

A solution like Censinet RiskOps™ plays a pivotal role by centralizing oversight of AI risks across vendor networks, clinical applications, and operational systems. Complementing this, Censinet AI™ simplifies vendor assessments and ensures critical findings are swiftly routed to governance teams. This "human-in-the-loop" approach maintains the balance between automation and the essential human oversight required to safeguard patient safety.

As AI algorithms evolve, organizations must prioritize ongoing monitoring and adapt their risk management practices to keep pace. This ensures transparency for patients and maintains effective oversight [1]. Those who establish comprehensive governance frameworks today will be better equipped to maximize AI's potential while protecting both operational efficiency and patient safety.

Ultimately, success with AI in healthcare isn't about eliminating risks entirely - it's about managing them intelligently through the right blend of governance, automated tools, and healthcare-focused solutions.

FAQs

What are the key cybersecurity risks of using AI in healthcare?

AI's growing role in healthcare brings along some serious cybersecurity challenges that need attention. For instance, data breaches can expose sensitive patient information, creating privacy concerns and potential legal issues. There's also the risk of algorithm manipulation, where attackers tweak AI models to generate incorrect or even harmful results.

Other threats include adversarial attacks, where malicious inputs are designed to trick AI systems into making errors, and data poisoning, which involves tampering with training data to degrade the system's performance. On top of that, model drift - when AI systems lose accuracy over time due to evolving data - and weaknesses in AI-powered medical devices add to the list of potential vulnerabilities.
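Model drift is often tracked by comparing the distribution of a model's inputs today against a baseline captured at validation time. The sketch below uses the Population Stability Index (PSI), one common choice among several. The bin edges, the tiny floor for empty bins, and the thresholds in the comment are conventions rather than requirements, and a real monitor would watch many inputs as well as the model's accuracy.

```python
import bisect
import math


def population_stability_index(expected, actual, cut_points):
    """PSI between a baseline sample and a recent sample of one model input.
    `cut_points` are sorted interior bin edges."""

    def proportions(values):
        counts = [0] * (len(cut_points) + 1)
        for v in values:
            counts[bisect.bisect_right(cut_points, v)] += 1
        # Floor empty bins at a tiny value so the log term stays defined.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    baseline = [1, 2, 3, 4, 5, 6, 7, 8]  # e.g. a lab value at validation time
    recent = [7, 8, 9, 9, 9, 9, 9, 9]    # the same input months later
    psi = population_stability_index(baseline, recent, cut_points=[3, 6])
    # Common rule of thumb: < 0.1 stable, 0.1-0.25 moderate, > 0.25 significant shift.
    print(f"PSI = {psi:.2f}")
```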

To tackle these risks, healthcare organizations must implement strong security protocols and establish clear governance frameworks. These steps are crucial for protecting patient safety and preserving trust in AI-driven healthcare solutions.

What steps can healthcare organizations take to prevent bias in AI systems?

Healthcare organizations can take meaningful steps to reduce bias in AI systems and ensure fairer outcomes. A key approach is using diverse and representative training data. This helps algorithms consider a broad spectrum of patient demographics and medical conditions, making them more inclusive.

Another important measure is conducting regular bias audits throughout the AI's lifecycle. These audits help identify and address disparities that could impact patient care. Organizations can also adopt fairness-focused algorithms and involve stakeholders from underrepresented or vulnerable groups during the development process. This collaboration ensures that the systems reflect a wider range of perspectives.
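A bias audit can start as simply as comparing a model's error rates across patient groups. The sketch below compares sensitivity (true-positive rate), an approach sometimes described as an equal-opportunity check. It is only one of several fairness metrics, and the record fields and the 0.1 tolerance are hypothetical choices that a real audit would set with clinical and equity input.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """True-positive rate per group: among patients who actually had the
    condition, the fraction the model flagged."""
    positives, caught = defaultdict(int), defaultdict(int)
    for r in records:
        if r["actual"]:
            positives[r["group"]] += 1
            if r["predicted"]:
                caught[r["group"]] += 1
    return {g: caught[g] / positives[g] for g in positives}


def audit(records, max_gap=0.1):
    """Return per-group rates, the largest gap between groups, and whether
    that gap exceeds the (hypothetical) tolerance `max_gap`."""
    rates = sensitivity_by_group(records)
    if not rates:
        raise ValueError("no positive cases to audit")
    gap = max(rates.values()) - min(rates.values())
    return rates, gap, gap > max_gap


if __name__ == "__main__":
    records = (
        [{"group": "A", "actual": True, "predicted": True}] * 4
        + [{"group": "B", "actual": True, "predicted": True}] * 2
        + [{"group": "B", "actual": True, "predicted": False}] * 2
    )
    print(audit(records))
```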

Finally, ongoing monitoring and evaluation of AI performance are crucial. By consistently reviewing how the system operates, healthcare providers can catch and correct any new biases that arise. Together, these steps can lead to more equitable and effective healthcare for everyone.

What are the best strategies for managing AI risks in healthcare?

To address AI risks in healthcare effectively, start by establishing clear governance frameworks that define roles, responsibilities, and oversight measures for every stage of the AI lifecycle. It's also essential to comply with federal requirements, such as HIPAA and applicable FDA regulations and guidance, to safeguard patient data and meet legal standards.

Regularly performing risk assessments is another critical step. These assessments help pinpoint vulnerabilities, enforce robust data security measures, and promote transparency and accountability. Collaboration among clinical, legal, and cybersecurity teams is vital to tackle potential risks and biases in AI systems. Lastly, make ongoing monitoring and auditing of AI tools a priority to ensure they stay secure, fair, and effective as they evolve.