Healthcare AI Vendor Contracts: Essential Risk Management Terms and Conditions

Post Summary

Managing AI vendor contracts in healthcare requires careful attention to risks like patient safety, data privacy, and regulatory compliance. AI systems handle sensitive patient data and influence critical outcomes, making well-defined contracts a necessity to protect organizations from legal, financial, and reputational fallout. Here's what you need to know:

- Data Protection: Specify data ownership, usage limits, and retention policies. Vendors must comply with HIPAA and other laws.

- Performance Guarantees: Require accuracy benchmarks, bias audits, and compliance with evolving regulations.

- Indemnification: Cover risks like algorithm errors, regulatory violations, and data misuse. Ensure vendors carry adequate insurance.

- Ongoing Oversight: Regularly monitor system updates, performance metrics, and adherence to contract terms.

The stakes are high, but tools like Censinet RiskOps™ can help streamline assessments, governance, and monitoring, ensuring contracts align with your organization's objectives and safeguard patient outcomes.

Pre-Contract Risk Assessment and Governance

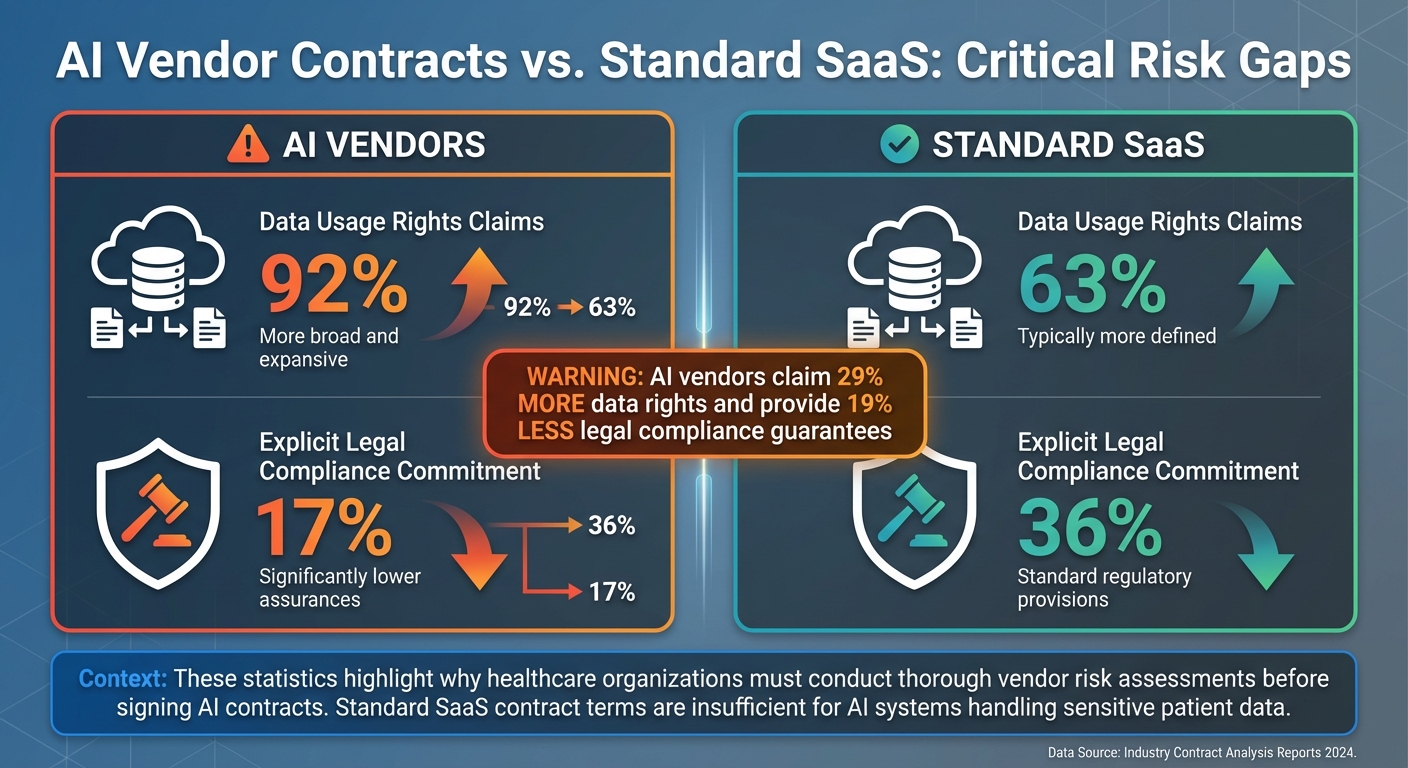

AI Vendor vs SaaS Contract Terms: Key Risk Statistics for Healthcare

Conducting Vendor Risk Assessments

Before committing to an AI vendor contract, it's crucial to carry out a thorough risk assessment that addresses key areas like patient safety, data privacy, regulatory compliance, and operational reliability.

Examine the AI product across its entire lifecycle - this includes aspects such as model architecture, training methods, data types, access protocols, and the context in which it will be used. Remember, the level of risk varies; clinical applications often carry more significant patient safety concerns than administrative uses [6]. To streamline this process, consider using the NIST AI Risk Management Framework (AI RMF 1.0) as a guide for crafting internal risk policies and procedures [1][11]. Additionally, enhance your due diligence questionnaires with AI-specific inquiries, such as whether the vendor complies with ISO/IEC standards, how they address bias, the explainability of their models, and their data governance strategies.

Statistics highlight the risks tied to AI vendors. For instance, 92% of AI vendors claim broad data usage rights - far surpassing the 63% average for broader SaaS contracts. Moreover, only 17% of AI vendors explicitly commit to adhering to all applicable laws, compared to 36% in SaaS agreements [3].

When evaluating data security and privacy, dig into the vendor's practices around data governance, security protocols, quality assurance, secondary data use, and retention policies. Ask for evidence that the vendor adheres to recognized standards such as HITRUST, ISO 27001, or SOC 2.

Financial stability is another critical factor. Some vendors may lack the resources to sustain long-term operations or could shift their product focus, potentially disrupting your organization or putting patient data at risk.

A well-structured assessment framework not only identifies risks but also lays the groundwork for engaging internal stakeholders and aligning organizational policies.
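One way to make the assessment repeatable is to score each vendor across the four areas named above and map the result to a review tier. The sketch below is a minimal illustration, not a method prescribed by NIST AI RMF or any vendor tool: the weights, the 1-to-5 scale, the tier cutoffs, and the names `VendorAssessment`, `risk_score`, and `risk_tier` are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical weights per risk area; each organization would set its own.
WEIGHTS = {
    "patient_safety": 0.35,
    "data_privacy": 0.25,
    "regulatory_compliance": 0.25,
    "operational_reliability": 0.15,
}


@dataclass
class VendorAssessment:
    name: str
    clinical_use: bool       # clinical tools tend to carry more patient-safety risk
    scores: dict[str, int]   # 1 (low risk) to 5 (high risk) for each area


def risk_score(assessment: VendorAssessment) -> float:
    """Weighted average of the area scores, on the same 1-5 scale."""
    return sum(WEIGHTS[area] * assessment.scores[area] for area in WEIGHTS)


def risk_tier(assessment: VendorAssessment) -> str:
    """Map a score to a review tier (illustrative cutoffs)."""
    score = risk_score(assessment)
    if score >= 3.5:
        return "high"
    # Assumed rule: a clinical tool is never reviewed below "medium".
    if score >= 2.5 or assessment.clinical_use:
        return "medium"
    return "low"


scheduler = VendorAssessment(
    name="Example scheduling assistant",
    clinical_use=False,
    scores={area: 1 for area in WEIGHTS},
)
print(risk_tier(scheduler))
```

The tier can then drive how much scrutiny a contract receives, for example which stakeholders must sign off before negotiation starts.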

Stakeholder Engagement and Policy Alignment

Effective risk assessment requires input from various departments to ensure no critical aspect is overlooked. By incorporating insights from across your organization, you can create a more comprehensive evaluation. Aligning internal policies with the findings from your vendor risk assessment is essential to protect patient data and maintain compliance with regulations.

Form a cross-functional team that includes representatives from legal, privacy, IT, compliance, clinical, administrative, and data science departments. Each group brings a unique perspective to the table:

| Stakeholder Group | Key Responsibilities in Risk Assessment |
|---|---|
| Privacy Officers | Assess data privacy compliance with laws like HIPAA, GDPR, and CCPA. |
| IT Leaders | Evaluate technical integration with existing systems (e.g., EHRs), data security, and overall cybersecurity. |
| Compliance Officers | Ensure adherence to regulatory frameworks such as FDA, HIPAA, and HITECH, and keep track of legal changes. |
| Clinical Leadership | Examine risks to patient safety and efficacy, including potential diagnostic errors and workflow disruptions. |
| Legal Counsel | Review legal aspects, including intellectual property, liability, and data usage terms. |

Leadership involvement is crucial. As Performance Health Partners explains: "AI risk management begins with leadership. Boards and executives must embed AI oversight into the organization's culture, ensuring that ethical use, transparency, and accountability are prioritized" [8].

Before reaching out to vendors, clearly define your clinical or operational objectives and ensure they align with your organization's broader strategic goals. This clarity will help you identify which risks are most critical and determine the level of scrutiny each vendor requires. Ultimately, this approach ensures that contract terms are designed to manage AI risks effectively while supporting your organization's goals.

Required Contract Terms and Conditions for Risk Management

When it comes to managing AI-related risks, well-crafted contract terms are essential. These agreements are your organization's first line of defense against challenges like data breaches, regulatory penalties, and algorithmic failures. In healthcare, where the stakes are particularly high, these terms must address the unique risks AI systems pose. Below, we break down the critical clauses that can help translate risk assessments into enforceable safeguards.

Data Protection and Privacy Clauses

To protect sensitive information, contracts must clearly define how vendors can access, use, and manage data - especially patient information. This includes specifying ownership of derivative data like logs, embeddings, and fine-tuning outputs [12]. It's crucial to restrict vendors from using patient data to train their general-purpose or commercial AI models without explicit consent [12]. Contracts should also outline data retention periods and require secure destruction or de-identification of Protected Health Information (PHI) once the agreement ends. Compliance with HIPAA and other privacy laws should be mandatory, with provisions for regular audits to ensure adherence.

Secondary data use is another area that requires careful attention. If a vendor plans to use anonymized or aggregated data for research or product development, the contract must spell out the exact scope and purpose. Any changes to how data is handled - especially those affecting PHI - should require prior written approval. These safeguards not only protect sensitive information but also lay the groundwork for performance warranties that further secure your organization.
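Data-use and retention terms are easier to monitor when they are also recorded in a structured form alongside the contract text. The following sketch assumes a hypothetical `DataTerms` record; the purpose labels and the 30-day retention window are invented for illustration and would come from the actual agreement.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class DataTerms:
    permitted_purposes: frozenset[str]       # purposes named in the contract
    retention_days_after_termination: int    # window before PHI must be destroyed


def use_permitted(terms: DataTerms, purpose: str) -> bool:
    """A purpose is allowed only if the contract names it explicitly."""
    return purpose in terms.permitted_purposes


def destruction_deadline(terms: DataTerms, termination_date: date) -> date:
    """Date by which PHI must be destroyed or de-identified."""
    return termination_date + timedelta(days=terms.retention_days_after_termination)


terms = DataTerms(
    permitted_purposes=frozenset({"service_delivery", "quality_improvement"}),
    retention_days_after_termination=30,
)
print(use_permitted(terms, "general_model_training"))
print(destruction_deadline(terms, date(2025, 6, 30)))
```

Treating any purpose not listed as forbidden mirrors the contract approach described above: new uses require prior written approval rather than being allowed by default.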

Performance and Compliance Warranties

Vendors should provide guarantees, or warranties, that their AI outputs will meet industry-standard accuracy benchmarks. These benchmarks should be clearly defined in the contract to avoid ambiguity.

Douglas A. Grimm, Partner and Health Care Practice Leader at ArentFox Schiff, emphasizes: "Vendors may be required to warrant ongoing compliance with all current and future regulatory requirements, with indemnity for any breach" [2].

A strong warranty clause might include language such as: "Vendor warrants AI outputs will meet industry-standard accuracy per Exhibit A and remains liable for failures or material errors."

Additionally, contracts should require warranties that address bias and discrimination. Vendors must validate their AI models using diverse datasets and commit to regular bias audits. Compliance with regulations like FDA oversight, HIPAA, and emerging state laws should also be guaranteed [2]. Intellectual property protection is another critical area - vendors must ensure their AI models and training data do not infringe on third-party rights. Transparency is key, so contracts should demand access to documentation detailing model limitations, intended use cases, and training methodologies. Vendors should also notify you of any significant updates to their models or training data that might impact performance or risk profiles [12].

Gouchev Law advises: "You should negotiate meaningful warranties about model behavior, updates, and mitigation of bias or discrimination" [5].

One healthcare staffing firm learned this lesson the hard way. It implemented an AI-powered screening tool under an "as-is" warranty with no guarantees for bias mitigation. The result was a discrimination complaint, regulatory fines, and the loss of a hospital contract worth $3 million annually, plus $380,000 in legal and compliance costs. An investigation revealed that the vendor had used screening data, including candidate health information, to train its model without proper safeguards or bias audits [5]. The case underscores the importance of defining performance standards in the contract and allocating the associated financial and legal risk explicitly.
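A bias audit clause is easier to enforce when the contract names a concrete measurement. As one possible example for a screening tool, the sketch below compares selection rates across groups. The 0.8 cutoff mirrors the "four-fifths" rule of thumb from US employment-selection guidance; whether that is the right test for a given tool is a legal and statistical question this sketch does not settle. The function names and sample data are hypothetical.

```python
from collections import defaultdict

FOUR_FIFTHS = 0.8  # rule-of-thumb threshold, assumed here for illustration


def selection_rates(records):
    """records: iterable of (group, was_selected) pairs -> selection rate per group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so no disparity to measure
    return min(rates.values()) / highest


# Invented sample: group A selected 5 of 10, group B selected 3 of 10.
sample = (
    [("A", True)] * 5 + [("A", False)] * 5
    + [("B", True)] * 3 + [("B", False)] * 7
)
ratio = impact_ratio(selection_rates(sample))
needs_review = ratio < FOUR_FIFTHS
print(needs_review)
```

A ratio below the agreed threshold would not prove discrimination, but it could serve as the contractual trigger for a deeper audit that the vendor is obligated to support.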

Indemnification and Liability Limitations

Indemnification clauses in AI contracts must go beyond the scope of traditional IT agreements. They should cover risks like algorithmic errors, bias-related claims, regulatory violations, and improper use of PHI [2]. These provisions help shield your organization from financial and legal fallout due to vendor failures, such as intellectual property disputes, data misuse, or regulatory penalties.

Make sure the indemnification terms are comprehensive, addressing risks tied to training data, customer prompts, and deployment decisions [12]. Liability caps and damage exclusions should reflect your organization’s actual risk exposure - not just the vendor’s preferences [12]. Additionally, vendors should carry robust insurance policies, including cyber liability, technology errors and omissions, professional liability, and commercial general liability coverage. Your healthcare organization should be named as an additional insured in these policies [2].

Contracts should also include trigger provisions that require renegotiation or review if the AI system undergoes significant updates, is repurposed, or if relevant laws change [2]. Vendors should be obligated to cooperate with bias audits, impact assessments, and regulatory investigations to ensure ongoing compliance [2].

Ongoing Monitoring and Contract Management

Keeping a close eye on your vendor's AI system is crucial to ensure it stays compliant, performs as expected, and adjusts to emerging risks. In healthcare, this requires collaboration between users, IT teams, and compliance departments [6]. This kind of teamwork helps identify problems early, preventing them from escalating into regulatory breaches or patient safety issues. By building on earlier risk assessments, you can safeguard both compliance and system performance over time.

Monitoring AI System Updates and Performance

Oversight doesn’t end after the initial risk evaluation - it’s an ongoing process throughout your partnership with the vendor. Regularly audit AI system updates to ensure they align with the metrics, service level agreements (SLAs), and documentation outlined in your contract [1][10][9]. Keep tabs on whether the AI outputs continue to meet accuracy standards and watch for potential new risks, like bias or performance drops, introduced by model updates. Additionally, monitor data usage to confirm the vendor sticks to the agreed boundaries, particularly when it comes to using data for training commercial models or other purposes outside the original agreement [3][7][9].
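The audit described above can be partly automated by comparing reported metrics with the thresholds written into the contract and by checking for drops across model updates. This is a minimal sketch under stated assumptions: the metric names, thresholds, and the 0.02 regression tolerance are hypothetical, and real values would come from the contract's SLA exhibit.

```python
from dataclasses import dataclass


@dataclass
class MetricTerm:
    name: str
    threshold: float
    higher_is_better: bool = True


def breaches(terms, observed):
    """Return names of contract metrics whose observed value misses the threshold.

    A metric missing from `observed` counts as a breach, because the vendor
    did not report it.
    """
    failed = []
    for term in terms:
        value = observed.get(term.name)
        if value is None:
            failed.append(term.name)
        elif term.higher_is_better and value < term.threshold:
            failed.append(term.name)
        elif not term.higher_is_better and value > term.threshold:
            failed.append(term.name)
    return failed


def regressed(before, after, tolerance=0.02):
    """True if a higher-is-better metric dropped by more than `tolerance` after an update."""
    return (before - after) > tolerance


contract_terms = [
    MetricTerm("sensitivity", 0.90),
    MetricTerm("uptime", 0.999),
    MetricTerm("false_positive_rate", 0.05, higher_is_better=False),
]
reported = {"sensitivity": 0.92, "false_positive_rate": 0.07}
print(breaches(contract_terms, reported))
```

Counting unreported metrics as breaches is a deliberate choice here: it keeps a gap in vendor reporting from passing silently.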

Transparency is key. Model logic, outputs, and safeguards against bias should be documented on a regular basis [9]. This becomes even more important when third-party AI tools are integrated into the vendor’s solution. As noted earlier, only 17% of AI vendors explicitly commit to adhering to all applicable laws, compared to 36% in standard SaaS agreements [3], which makes proactive oversight a non-negotiable part of managing AI systems.

Contract Renegotiation Triggers

Your contract should be flexible enough to adapt to changing circumstances, and continuous monitoring can help identify when renegotiation is necessary. Include clauses that allow for updates in cases like significant system changes, failure to meet performance metrics, regulatory shifts, or the discovery of compliance issues [1][9]. With the rapid development of generative AI and evolving regulatory frameworks, your contract should outline clear processes for tracking legal changes, communicating them to all parties, and making adjustments to ensure compliance with updated state or federal laws.

To stay ahead, build performance milestones and metrics directly into your contract. Automated contract management tools can help track renewal dates across vendors, allowing you to renegotiate terms proactively rather than waiting until the last minute [7]. When AI-related problems arise - like algorithm errors, data inconsistencies, or bias issues - use incident reports to detect patterns that might indicate larger issues. These insights can guide necessary technical updates or prompt contract renegotiations [8].
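The renewal tracking and renegotiation triggers described in this section can be sketched in a few lines. Everything named here is hypothetical: the trigger labels are shorthand for the clause categories above, the 120-day notice window is an arbitrary example, and the vendor names and dates are invented.

```python
from datetime import date, timedelta

# Shorthand labels for the renegotiation clauses discussed above (assumed names).
TRIGGERS = {
    "significant_system_change",
    "missed_performance_metric",
    "regulatory_change",
    "compliance_issue",
}


def renewals_due(contracts, today, notice_days=120):
    """contracts: dict of vendor name -> renewal date.

    Returns vendors whose renewal falls within the notice window, soonest first.
    """
    cutoff = today + timedelta(days=notice_days)
    due = [(renewal, name) for name, renewal in contracts.items()
           if today <= renewal <= cutoff]
    return [name for _, name in sorted(due)]


def triggered(events):
    """Return the logged events that match a renegotiation trigger, sorted by name."""
    return sorted(set(events) & TRIGGERS)


contracts = {
    "Vendor A": date(2025, 3, 1),
    "Vendor B": date(2025, 9, 1),
    "Vendor C": date(2025, 1, 15),
}
print(renewals_due(contracts, today=date(2025, 1, 1)))
print(triggered(["model_retrained", "regulatory_change", "compliance_issue"]))
```

Running a check like this on a schedule gives the contract owner lead time to open renegotiation before a renewal date or as soon as a trigger event is logged.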


Using Censinet RiskOps for AI Vendor Contract Management

Healthcare organizations face the challenge of managing risk assessments, compliance checks, and ongoing monitoring for AI vendor contracts. To address this, Censinet RiskOps™ provides a centralized platform tailored specifically for the healthcare sector. It simplifies the entire process, from initial vendor evaluations to continuous oversight and governance. Let’s explore how this platform uses advanced AI to automate risk assessments and streamline governance.

Automating Risk Assessments with Censinet AI™

Censinet AI™ builds upon established vendor risk frameworks to make risk assessments more efficient. One of its standout features is the automation of security questionnaires, which saves time by summarizing vendor-provided evidence and documentation while also capturing essential product integration details.

The platform operates on a balanced model that combines automation with human oversight. While the AI handles tasks like validating evidence, drafting policies, and offering preliminary risk mitigation suggestions, your risk teams stay in control. Configurable rules and review processes allow experts to step in when needed, ensuring that critical healthcare decisions are never left solely to automation. This balance enables organizations to scale their risk management efforts without sacrificing the expertise required for complex situations.

Centralized AI Governance and Risk Monitoring

Beyond automated assessments, Censinet RiskOps serves as a hub for centralized AI governance. It consolidates key findings and routes tasks to the right stakeholders, ensuring nothing falls through the cracks. When the system identifies significant AI-related risks - such as concerns about data usage, performance, or compliance - it flags these issues and alerts designated reviewers, including members of your AI governance committee. This streamlined approach keeps oversight organized and ensures that potential risks are addressed promptly and effectively.

Conclusion

Healthcare AI vendor contracts play a crucial role in protecting against patient safety risks, data breaches, regulatory violations, and operational challenges. With nearly 1,000 AI-powered medical devices authorized by the FDA [6] and projections showing AI could save the U.S. healthcare system up to $360 billion annually [8], the potential of AI in healthcare is undeniable. However, only 16% of health systems have governance policies in place for AI usage and data access [4], leaving many organizations exposed to risks that are often underestimated.

These challenges highlight the need for contracts that go beyond basic terms. Contracts must address key areas like data protection, performance guarantees, and liability limits. They also need to be flexible enough to adapt to changes in technology and regulations, incorporating provisions for ongoing monitoring, updates, and renegotiation as needed. Traditional contract management approaches often fail to keep pace with the complexities of AI vendor relationships.

Censinet RiskOps™ offers a solution by centralizing AI vendor contract management. Using Censinet AI™, it automates risk assessments and provides continuous oversight within a unified governance framework. The platform’s human-in-the-loop model ensures that automated tools complement expert judgment, enabling risk teams to scale their efforts without losing control. This combination of automation and expert oversight empowers healthcare organizations to manage AI risks effectively while prioritizing patient safety and outcomes.

FAQs

What key data protection terms should healthcare organizations include in AI vendor contracts?

Contracts should require the vendor to apply a combination of safeguards to sensitive healthcare data. Start with data encryption, which keeps information secure both in transit and at rest. Add access controls that restrict who can view or handle the data, and require data anonymization to reduce the chance of identifying individual patients.

Contracts should also include provisions for regular security audits to uncover any vulnerabilities, HIPAA compliance clauses to meet U.S. regulatory standards, and breach notification protocols to ensure swift action in case of security incidents. Lastly, it's important to clearly outline data ownership rights to prevent disputes over how the data is used or shared.

What steps can healthcare organizations take to ensure AI vendors meet performance and compliance requirements?

Healthcare organizations can take important steps to ensure that AI vendors meet both performance expectations and compliance standards. Here’s how they can approach this:

- Thorough Vendor Evaluation: Carefully assess the vendor’s security measures, certifications (like SOC 2 or HITRUST), and past audit records to verify their reliability and compliance readiness.

- Set Clear Expectations: Establish well-defined performance metrics and negotiate service-level agreements (SLAs) to ensure vendors remain accountable for their performance.

- Strong Contractual Protections: Include contract provisions that cover critical areas such as incident response, data protection, and compliance with regulations like HIPAA, along with a way to absorb new requirements from pending legislation such as the proposed Healthcare Cybersecurity Act of 2025.

- Continuous Oversight: Develop a system for ongoing monitoring, including regular evaluations, to confirm the vendor maintains compliance and operational dependability over time.

By addressing these areas proactively, healthcare organizations can minimize risks while fostering secure, dependable partnerships with AI vendors.

What key protections should indemnification clauses include in AI vendor contracts for healthcare?

Indemnification clauses in healthcare AI vendor agreements need to tackle specific risks tied to AI technology. These risks can include liabilities stemming from algorithmic mistakes, data breaches, misuse of protected health information (PHI), regulatory non-compliance, and claims over third-party intellectual property connected to AI outputs or training data.

To ensure clarity and fairness, it’s essential to outline each party’s responsibilities when it comes to regulatory penalties, cybersecurity breaches, and other AI-related challenges. For balanced protection, consider adding exceptions for willful misconduct and gross negligence. Additionally, liability limits should be carefully set - not overly restrictive, but reflective of the unique complexities and risks that AI introduces to the healthcare sector.
