The Healthcare Cybersecurity Gap: Medical AI's Vulnerability Problem

Post Summary

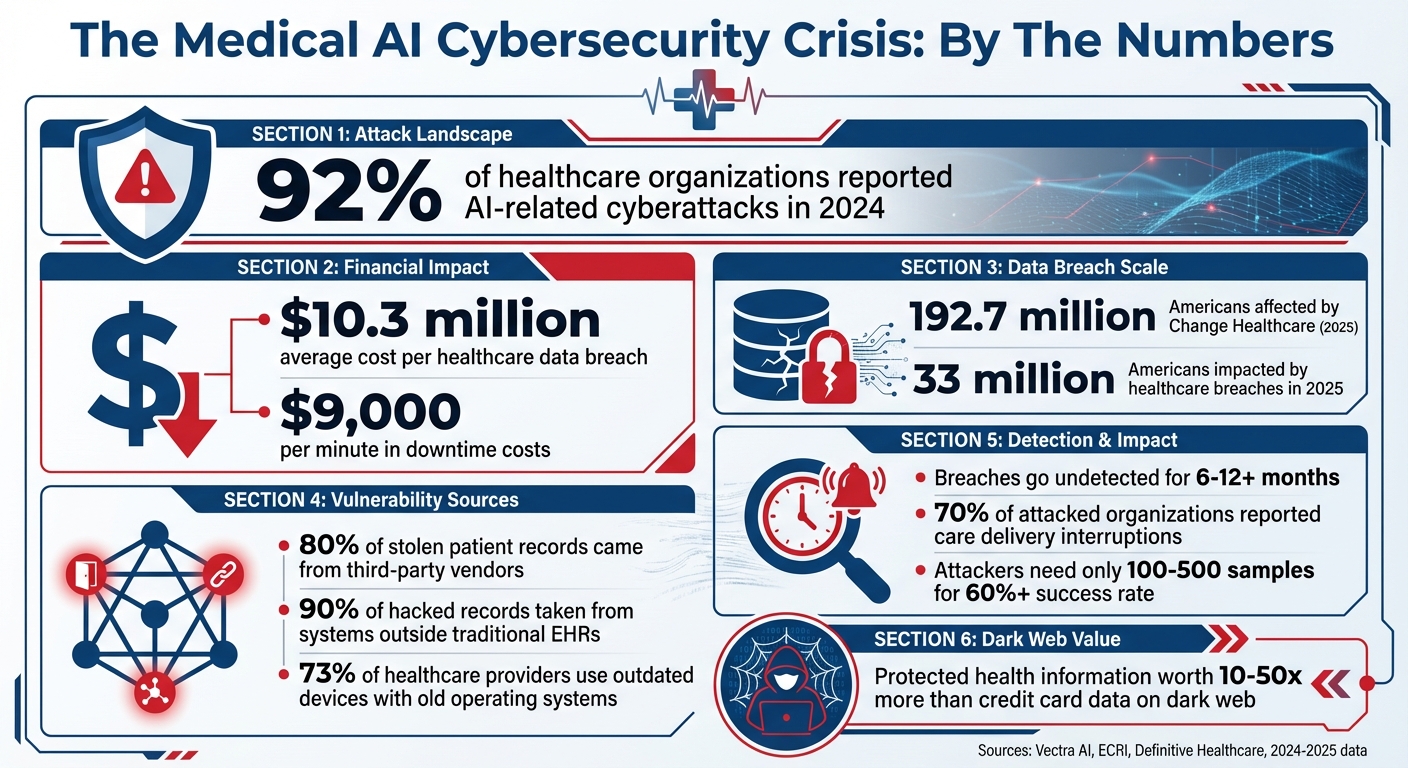

The rise of medical AI is transforming healthcare, but it comes with serious cybersecurity risks. These systems, used for diagnosing diseases, managing patient care, and operating AI-powered devices, have become prime targets for cyberattacks. In 2024, 92% of healthcare organizations reported AI-related cyberattacks, exposing vulnerabilities in data pipelines, AI models, and interconnected devices. With breaches costing an average of $10.3 million per incident and compromising sensitive patient data, the stakes are higher than ever.

Key issues include:

- Data pipeline risks: Complex systems with multiple entry points make protected health information (PHI) a lucrative target.

- AI model threats: Attacks like data poisoning and adversarial manipulation can lead to misdiagnoses and treatment errors.

- Device vulnerabilities: Outdated software in medical devices opens the door to ransomware and denial-of-service attacks.

To address these risks, healthcare organizations must focus on:

- Establishing strong AI governance frameworks for oversight and accountability.

- Securing data, models, and devices with cryptographic verification, regular updates, and security testing.

- Implementing continuous monitoring and AI-specific incident response plans.

Medical AI has immense potential, but ensuring its safety requires proactive measures to protect patient care and privacy.

Medical AI Cybersecurity Statistics: Breach Costs, Attack Rates, and Healthcare Impact 2024-2025

Main Vulnerabilities in Medical AI Systems

Data Pipeline Risks and PHI Concentration

Medical AI systems rely on vast amounts of patient data moving through intricate pipelines. These pipelines connect collection points, storage systems, analysis tools, APIs, cloud platforms, and third-party services. Unfortunately, this complexity creates numerous entry points for attackers. Without robust security measures, sensitive information can easily fall into the wrong hands.

In 2025, healthcare organizations faced staggering costs from data breaches, averaging $10.3 million per incident, with 33 million Americans impacted[1]. Even more concerning, 80% of stolen patient records originated from third-party vendors, and 90% of hacked records were taken from systems outside traditional electronic health records (EHRs)[1]. The Change Healthcare breach, which began in February 2024, alone affected 192.7 million Americans, disrupted nationwide prescription processing, and caused operational losses exceeding $2 billion[1].

"Protected health information commands 10 to 50 times the value of credit card data on dark web marketplaces, containing comprehensive personal, financial, and medical details that enable identity theft, insurance fraud, and targeted social engineering." – Vectra AI[1]

The high concentration of protected health information (PHI) in AI systems makes them prime targets. A single breach can reveal full medical histories, insurance details, and personal identifiers. Compounding this issue, the lack of secure interoperability between healthcare systems means vulnerabilities in one area can spread across the entire network[5]. Beyond these data pipeline concerns, the integrity of the AI models themselves faces significant threats.

Model Integrity Threats: Poisoning and Evasion

Medical AI models are uniquely vulnerable to attacks that can undermine their decision-making while leaving little trace. One such threat is data poisoning, where attackers inject malicious data to skew outcomes. This could lead to misdiagnoses, incorrect medication recommendations, or flawed treatment plans - all while the system appears to operate normally.

Adversarial manipulations are another major concern. Subtle alterations to medical images, lab results, or patient data can cause AI systems to produce inaccurate conclusions, potentially misleading clinicians.

An emerging issue is model drift exploitation. Attackers can feed edge cases into the system, gradually degrading its reliability for specific patient groups or conditions. This slow erosion of accuracy often goes undetected until patient safety is compromised, widening the gap in medical AI cybersecurity[1][6].

AI-Enabled Device and Interconnected System Vulnerabilities

AI-powered medical devices add another layer of risk by bridging digital systems with physical healthcare operations. Devices like pacemakers, insulin pumps, imaging equipment, and patient monitors are increasingly networked, creating new attack surfaces. Many of these devices still run on outdated operating systems, leaving them exposed to modern threats like ransomware and denial-of-service attacks[5].

The interconnected nature of healthcare systems amplifies these risks. AI-controlled devices often communicate with hospital networks, EHRs, and cloud platforms. A breach in one area can ripple across the entire infrastructure. Attackers could potentially alter device settings remotely - adjusting insulin dosages, modifying pacemaker rhythms, or tampering with diagnostic imaging results. The June 2024 Synnovis ransomware attack in London highlighted this danger, disrupting laboratory and diagnostic services across multiple hospitals, including Guy's, St Thomas', King's College, Evelina Children's Hospital, and others[4].

Denial-of-service attacks are particularly dangerous for AI-enabled medical devices. Overloading these systems with traffic or malicious requests can render critical equipment unusable during emergencies. Unlike traditional IT systems, even brief downtime for medical devices can directly endanger lives, making these vulnerabilities especially urgent in acute care situations. Together, these risks underscore the growing cybersecurity challenges in medical AI.

How Medical AI Vulnerabilities Affect Healthcare

Security flaws in medical AI systems don't just threaten the technology itself - they directly impact patient safety, data privacy, and the overall functioning of healthcare systems. When these systems are compromised, the consequences are real and far-reaching, affecting lives, trust, and operational stability.

Patient Safety and Clinical Decision-Making Risks

When medical AI systems are compromised, the risks to patient safety are immense. Hackers can manipulate AI by subtly altering medical images, lab reports, or patient data. These seemingly minor changes can trick the system into making errors that human eyes might not detect.

Take medical imaging, for example. Attackers could add or erase tumor indicators in scans, alter ECG readings, or tweak pathology images. Such alterations can lead diagnostic AI to provide dangerously incorrect results. Even AI-controlled medical devices aren't immune - unauthorized changes to insulin pump dosages or pacemaker signals could have life-threatening consequences [1][5].

A chilling real-world example occurred in September 2020, when a ransomware attack hit a university hospital in Düsseldorf, Germany. The attack blocked access to critical system data, forcing the transfer of a critically ill patient to a hospital 19 miles away. Tragically, the patient died before receiving care, prompting an investigation into manslaughter [5].

The problem is growing fast. ECRI has already flagged AI as the top health technology hazard for 2025 [1][7]. Alarmingly, attackers with access to just 100–500 samples can compromise healthcare AI systems with over a 60% success rate. Worse still, breaches often go undetected for 6 to 12 months - or longer [8]. The result? Misdiagnoses, inappropriate treatments, and delays in care, all of which directly harm patients.

Data Breaches and Privacy Violations

Beyond patient safety, data breaches in medical AI systems pose serious privacy risks and financial repercussions. These breaches can expose sensitive information such as Social Security numbers, insurance details, financial records, and even biometric data. Once stolen, this data can be sold on the dark web, used to alter prescriptions, or even manipulate medical procedures [5].

The fallout isn’t just personal - it’s institutional. Healthcare organizations face steep penalties for violating HIPAA and state privacy laws, not to mention the damage to their reputation. Class-action lawsuits often follow, and rebuilding patient trust can take years. The financial costs? Substantial. The reputational damage? Long-lasting.

Operational and Resilience Challenges

Cyberattacks targeting AI systems can throw entire healthcare operations into chaos. When AI-powered tools like diagnostic platforms or imaging systems are disabled, hospitals are forced to revert to slower, error-prone manual processes, such as paper records and phone-based coordination [9]. This disruption has a direct impact on patient care - 70% of healthcare organizations that experienced a cyberattack reported interruptions in care delivery [9].

"Cyberattacks don't just take systems offline - they fracture the clinical workflows those systems support." – Definitive Healthcare [9]

The financial toll of downtime is staggering, costing up to $9,000 per minute [9]. Healthcare organizations are also more likely than organizations in other sectors to pay ransoms, since incidents can mean diverted ambulances, postponed surgeries, and critical care decisions made without access to essential diagnostic tools [1].

Outdated medical devices only add to the problem. A shocking 73% of healthcare providers still rely on older devices with outdated operating systems. As of January 2022, more than half of connected hospital devices had known critical vulnerabilities [10]. When these devices are compromised, the consequences can be immediate and severe.

"The bad guys, once they're in the network, may deploy ransomware, which encrypts the pathways to medical devices - potentially the medical devices themselves - denying the availability of the device for clinicians and patients. That's where the real potential risk and harm is." – John Riggi, National Advisor for Cybersecurity and Risk, American Hospital Association [10]

How to Reduce Medical AI Cybersecurity Risks

Tackling cybersecurity challenges in medical AI calls for a well-thought-out, multi-faceted strategy. Healthcare organizations need to shift from reactive measures to proactive defenses that safeguard data, models, and devices throughout the AI lifecycle. When applied effectively, practical solutions can dramatically lower these risks.

Building Strong AI Governance Frameworks

Developing a solid AI governance framework is crucial. This framework should clearly outline roles, responsibilities, and oversight for every stage of the AI lifecycle [6]. To achieve this, healthcare organizations can establish multidisciplinary committees that bring together clinicians, IT security specialists, data scientists, legal advisors, and risk managers.

Start by creating a comprehensive inventory of all AI systems, documenting each system’s purpose, data requirements, and potential security risks [3]. Then, incorporate AI-specific risks into your broader enterprise risk management strategies. For instance, the NIST AI Risk Management Framework provides a useful blueprint for identifying relevant standards and implementing robust security and data controls [6].
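As a rough illustration of what such an inventory could look like in practice, here is a minimal Python sketch. The `AISystemRecord` structure, its field names, and the two example systems are hypothetical and are not taken from NIST or any cited source; a real inventory would likely live in a governance or asset-management tool.

```python
from dataclasses import dataclass, field


@dataclass
class AISystemRecord:
    """One inventory entry (hypothetical schema for illustration)."""
    name: str
    purpose: str
    data_requirements: list[str]
    security_risks: list[str] = field(default_factory=list)
    handles_phi: bool = False


def systems_needing_review(inventory):
    """Return names of systems that handle PHI but have no documented risks."""
    return [s.name for s in inventory if s.handles_phi and not s.security_risks]


# Illustrative entries only; these are not real products.
inventory = [
    AISystemRecord(
        name="chest-xray-triage",
        purpose="Flag urgent radiology studies",
        data_requirements=["DICOM images"],
        security_risks=["adversarial image manipulation"],
        handles_phi=True,
    ),
    AISystemRecord(
        name="sepsis-early-warning",
        purpose="Predict sepsis risk from vitals",
        data_requirements=["EHR vitals", "lab results"],
        handles_phi=True,
    ),
]

print(systems_needing_review(inventory))
```

Even a simple structure like this lets a governance committee ask basic questions, such as which PHI-handling systems still lack a documented risk assessment.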

This structured governance lays the groundwork for stronger technical protections in the next steps.

Securing AI Systems: Data, Models, and Devices

To protect training data from poisoning attacks, use cryptographic verification and maintain detailed audit trails [1]. Keep training and production environments separate, and regularly retrain models using verified datasets to maintain their integrity [1]. Additionally, monitor systems for unusual behavior that could signal tampering [2].
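One way to approach cryptographic verification of a training set is to record a hash of every file in a manifest and authenticate that manifest with a secret key. The sketch below uses only Python's standard library (`hashlib`, `hmac`); the function names and the choice of HMAC-SHA-256 are assumptions made for illustration, not a method described by the cited sources.

```python
import hashlib
import hmac
import json
from pathlib import Path


def sha256_file(path: Path) -> str:
    """Hash a file in chunks so large datasets do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def hash_tree(data_dir: Path) -> dict:
    """Map each file's path (relative to data_dir) to its SHA-256 digest."""
    return {
        p.relative_to(data_dir).as_posix(): sha256_file(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def _mac(files: dict, key: bytes) -> str:
    """Authenticate the file-to-hash mapping with HMAC-SHA-256."""
    payload = json.dumps(files, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def build_manifest(data_dir: Path, key: bytes) -> dict:
    """Record the verified state of the dataset."""
    files = hash_tree(data_dir)
    return {"files": files, "mac": _mac(files, key)}


def changed_files(data_dir: Path, manifest: dict, key: bytes) -> list:
    """Return files added, removed, or modified since the manifest was built."""
    if not hmac.compare_digest(_mac(manifest["files"], key), manifest["mac"]):
        raise ValueError("manifest failed authentication")
    current = hash_tree(data_dir)
    names = set(current) | set(manifest["files"])
    return sorted(n for n in names if current.get(n) != manifest["files"].get(n))
```

In this sketch the manifest should be stored outside the dataset directory, and the key should come from a secrets manager rather than source code. A retraining job could call `changed_files` first and refuse to proceed if the list is not empty.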

Model security requires rigorous validation processes to test for both accidental errors and deliberate adversarial manipulation [1]. Techniques like adversarial example generation and boundary testing are critical for assessing how models perform under attack scenarios. These steps are especially vital since even minor manipulations - poisoning as little as 0.001% of training tokens - can lead to major medical errors [1].
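To make adversarial example generation more concrete, the sketch below applies the fast gradient sign method (FGSM), a well-known technique that the article does not name specifically, to a toy logistic-regression classifier. The weights, bias, and sample are invented for illustration; a real test would target the production model through a dedicated robustness-testing toolkit.

```python
import math


def predict_proba(weights, bias, x):
    """Probability of the positive class under a logistic-regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def fgsm_perturb(weights, bias, x, label, epsilon):
    """Shift each feature by epsilon in the direction that increases the loss."""
    p = predict_proba(weights, bias, x)
    # For cross-entropy loss, d(loss)/d(x_i) = (p - label) * w_i.
    grad = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


def prediction_flips(weights, bias, x, label, epsilon, threshold=0.5):
    """True if the perturbed input changes the model's thresholded decision."""
    clean = predict_proba(weights, bias, x) >= threshold
    x_adv = fgsm_perturb(weights, bias, x, label, epsilon)
    adversarial = predict_proba(weights, bias, x_adv) >= threshold
    return clean != adversarial


# Toy model and sample (hypothetical values, not from any clinical system).
WEIGHTS, BIAS = [2.0, -1.0, 0.5], -0.2
SAMPLE, LABEL = [0.3, 0.1, 0.2], 1

for eps in (0.05, 0.2):
    print(eps, prediction_flips(WEIGHTS, BIAS, SAMPLE, LABEL, eps))
```

Sweeping `epsilon` in this way is a simple form of boundary testing: the smallest perturbation that flips a decision gives a rough measure of how close a given input sits to the model's decision boundary.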

For AI-enabled medical devices, adopt secure-by-design principles from the start [6][4]. Stay vigilant about vendor patching processes and apply updates promptly to eliminate known vulnerabilities.

Continuous Monitoring and Incident Response for AI

Controls alone aren’t enough - ongoing vigilance is essential. Real-time AI-powered threat detection can drastically cut down the time it takes to identify incidents [1]. Regularly monitor AI systems for signs of model drift, data corruption, or adversarial interference [6].
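One common way to watch for drift is to compare the distribution of a model input or output score in production against a reference window. The sketch below computes the Population Stability Index (PSI) in plain Python. The choice of PSI, the bin count, and the 0.25 alert threshold (a widely used rule of thumb) are assumptions for illustration and do not come from the cited sources.

```python
import math


def psi(reference, current, bins=10):
    """Population Stability Index between two samples of one numeric feature."""
    if not reference or not current:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


# Synthetic example: reference scores are spread evenly, current scores shift upward.
reference_scores = [i / 100 for i in range(100)]
shifted_scores = [0.5 + i / 200 for i in range(100)]

drift = psi(reference_scores, shifted_scores)
print("alert" if drift > 0.25 else "ok")
```

A check like this flags distribution shift but cannot tell benign change from deliberate manipulation, so an alert is best treated as a trigger for human investigation rather than a verdict.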

Prepare for potential threats by developing AI-specific incident response playbooks. These should include detailed procedures for addressing issues like model poisoning, data corruption, and adversarial attacks. Quick containment and recovery depend on secure, verifiable backups [6].

Collaboration between cybersecurity and data science teams is key for effective incident response [6]. Establish AI-driven threat intelligence systems that support both clinical operations and broader healthcare workflows. Focus on resilience testing for AI systems and continuously learn from past incidents to improve future defenses [6].

With 92% of healthcare organizations reporting AI-related attacks in 2024 [1], the real question isn’t if threats will occur - it’s whether you’ll be ready when they do.

Conclusion: Improving Medical AI Cybersecurity for Safer Healthcare

AI is transforming patient care, but it also brings serious security challenges. Recent breaches have shown how costly and time-consuming it can be to address these vulnerabilities [11].

Tackling these risks requires more than just reacting to problems as they arise. Healthcare organizations must adopt secure-by-design principles, establish strong governance frameworks, and implement continuous monitoring to keep AI security a top priority. For instance, AI-powered threat detection can shorten incident identification by 98 days [1], which suggests that technology itself can be part of the solution.

One of the most effective proactive strategies is using a unified management platform. Censinet RiskOps™ offers a centralized approach to AI risk management, enabling quicker risk assessments, automated evidence validation, and smarter task routing. Its AI risk dashboard acts as a command center, organizing policies, risks, and tasks so the right teams can address the right issues without delay.

FAQs

What steps can healthcare organizations take to protect AI systems from security threats like data poisoning and adversarial attacks?

To keep AI systems in healthcare secure, organizations need a layered security strategy. Begin with regular risk assessments to spot vulnerabilities and address them before they become problems. Incorporate a zero trust architecture to restrict access to sensitive systems and data, ensuring only authorized individuals can interact with critical information. On top of that, leverage AI-powered threat detection tools to identify unusual activity and react to potential threats in real time.

Take security a step further by implementing data encryption and tokenization to safeguard patient information. Conduct frequent penetration tests to find and fix weaknesses before malicious actors can exploit them. Don’t overlook the human element - training employees to recognize and avoid cyber threats can make a big difference. Lastly, establish clear governance and compliance protocols to stay aligned with cybersecurity standards and regulatory requirements.
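To show what tokenization means in this context, here is a minimal in-memory sketch: a sensitive value is swapped for a random token, and only the vault can map the token back. The `TokenVault` class is hypothetical; a production vault would need encrypted storage, access control, and audit logging, none of which appear here.

```python
import secrets


class TokenVault:
    """Toy token vault (illustration only; not suitable for real PHI)."""

    def __init__(self):
        self._value_by_token = {}
        self._token_by_value = {}

    def tokenize(self, value: str) -> str:
        """Return a random token for value, reusing the token on repeat calls."""
        existing = self._token_by_value.get(value)
        if existing is not None:
            return existing
        token = "tok_" + secrets.token_hex(16)  # 128 bits of randomness
        self._value_by_token[token] = value
        self._token_by_value[value] = token
        return token

    def detokenize(self, token: str) -> str:
        """Recover the original value; raises KeyError for unknown tokens."""
        return self._value_by_token[token]


vault = TokenVault()
token = vault.tokenize("000-00-0000")  # placeholder identifier, not a real SSN
print(token.startswith("tok_"), vault.detokenize(token))
```

Because the token is random rather than derived from the value, a dataset that holds only tokens reveals nothing about the underlying identifiers if it is stolen without the vault.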

How do AI-related cyberattacks impact healthcare systems financially and operationally?

AI-driven cyberattacks are proving to be a costly and disruptive challenge for healthcare systems. On average, these breaches cost $10.3 million per incident, factoring in expenses like data recovery, system repairs, and regulatory penalties.

Beyond the financial toll, the operational impact can be devastating. Attacks often cause system outages, delay treatments, and disrupt patient care. In extreme situations, these delays can jeopardize patient safety, underscoring the critical importance of implementing strong cybersecurity measures in medical AI systems.

How can we protect AI-powered medical devices from cybersecurity threats?

To keep AI-powered medical devices secure, a layered security strategy is key. Start with strict input validation to block harmful data that could disrupt systems. Restrict access by applying the principle of least privilege, so users and devices only get the permissions they absolutely need.
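The two ideas above, input validation and least privilege, can be combined in a single gate that every device command must pass. The sketch below is a hedged illustration: the role names, the permission table, and the `MAX_BOLUS_UNITS` limit are invented for the example and are not clinical guidance or any vendor's API.

```python
import math
from dataclasses import dataclass

# Hypothetical limit and role table, for illustration only.
MAX_BOLUS_UNITS = 10.0
ROLE_PERMISSIONS = {
    "clinician": frozenset({"read_status", "set_bolus"}),
    "monitoring_service": frozenset({"read_status"}),
}


@dataclass(frozen=True)
class BolusCommand:
    requester_role: str
    units: object  # untrusted input, so its type is checked at validation time


def authorize_and_validate(cmd: BolusCommand) -> BolusCommand:
    """Reject the command unless the role is permitted and the dose is sane."""
    allowed = ROLE_PERMISSIONS.get(cmd.requester_role, frozenset())
    if "set_bolus" not in allowed:
        raise PermissionError(f"role {cmd.requester_role!r} may not set a bolus")
    units = cmd.units
    if isinstance(units, bool) or not isinstance(units, (int, float)):
        raise ValueError("dose must be a number")
    if not math.isfinite(units) or not 0 < units <= MAX_BOLUS_UNITS:
        raise ValueError("dose is outside the permitted range")
    return cmd


accepted = authorize_and_validate(BolusCommand("clinician", 2.5))
print(accepted.units)
```

Unknown roles get an empty permission set by default, so anything not explicitly granted is denied.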

Ensure data exchanges are protected using secure API protocols, and include human-in-the-loop verification to spot irregularities that automated tools might overlook. Conduct regular security testing to uncover and fix vulnerabilities, and maintain detailed audit logs to track activity and identify potential security threats. Together, these measures can reduce risks and strengthen the safety of medical AI systems.
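Audit logs are most useful when tampering with them is detectable. One simple approach, sketched below with Python's standard library, is to chain entries together by hash so that editing or removing an earlier entry invalidates everything after it. The entry format and function names are assumptions for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(event: dict, prev_hash: str) -> str:
    """Hash an event together with the hash of the entry before it."""
    body = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_entry(log: list, event: dict) -> None:
    """Append an event, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash,
                "hash": _entry_hash(event, prev_hash)})


def verify_chain(log: list) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, {"actor": "clinician-17", "action": "set_bolus", "units": 2.5})
append_entry(audit_log, {"actor": "monitoring_service", "action": "read_status"})
print(verify_chain(audit_log))
```

A hash chain alone does not stop an attacker from rewriting the whole log, so in practice the latest hash would also need to be anchored somewhere the attacker cannot reach, such as a separate write-once store.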