Beyond the Hype: 7 Hidden AI Risks Every Executive Must Address in 2025

Post Summary

Prompt injection attacks that expose PHI, manipulate clinical AI outputs, and compromise compliance.

Employees use unauthorized generative tools, risking data leaks, PHI exposure, and HIPAA violations without IT visibility.

Corrupted or biased training data produces inaccurate, unsafe, or discriminatory clinical outputs.

Attackers use AI to automate targeting, accelerate reconnaissance, bypass defenses, and disrupt clinical systems.

Vendors and their subcontractors often lack healthcare-grade safeguards, creating cascading PHI, compliance, and operational vulnerabilities.

Autonomous AI can make opaque, biased, or unsafe decisions that directly impact patient health and regulatory compliance.

Artificial intelligence is transforming healthcare, but it’s also introducing risks that many leaders are unprepared for. From data breaches to regulatory challenges, the rapid adoption of AI is reshaping the landscape - often faster than security measures can keep up. Here’s what you need to know about the top risks and how to address them:

Key Takeaway: Strong governance, clear policies, and proactive risk management are essential for ensuring AI improves healthcare without compromising safety, compliance, or trust.

1. Prompt Injection and Data Theft in Clinical Workflows

As healthcare organizations continue to adopt AI technologies, they face hidden risks that threaten both data security and operational stability. One such risk is prompt injection attacks, a serious vulnerability that targets AI systems - especially large language models (LLMs) used in clinical environments. These attacks work by embedding malicious instructions into prompts, tricking the AI into exposing sensitive patient information or performing unauthorized tasks. For instance, when a clinician relies on an AI tool to summarize patient records or suggest treatment plans, a cleverly constructed prompt could bypass security measures and extract protected health information (PHI). This not only puts patient safety at risk but also creates significant challenges for regulatory compliance.

Impact on Patient Safety and Compliance

Generative AI models are unpredictable, and existing security measures often fall short of managing their risks [3]. These models can generate inaccurate medical advice, unintentionally reveal training data that includes patient details, or be manipulated to disclose confidential records through sophisticated queries. When AI tools handle patient data during real-time clinical tasks, every interaction becomes a potential vulnerability. Adding to this complexity, the lack of transparency in how these models are trained makes it difficult to anticipate when or how sensitive information might be exposed.

Regulatory and Legal Risks

The integration of AI into healthcare has made HIPAA compliance more complicated than ever. Even if a breach originates from a third-party AI vendor, healthcare organizations remain fully accountable for HIPAA violations [2]. This heightened responsibility amplifies legal and financial risks. In fact, HIPAA fines doubled in 2023, reaching $4.2 million, with 22 investigations leading to penalties or settlements in 2024 [2]. Lawmakers are also proposing to eliminate the current $2 million cap on HIPAA fines, further increasing the stakes [2]. Each AI system that processes patient data creates a new business associate relationship, which must adhere to strict HIPAA standards. Meanwhile, federal agencies like the FTC and HHS are closely monitoring compliance, adding another layer of scrutiny.

Recommended Safeguards and Governance Solutions

Concerns about data privacy are slowing down AI adoption in healthcare, and many executives lack a clear roadmap for integration [7]. To address these challenges, organizations need to act quickly and decisively. Establishing an AI governance committee with representation from clinical, administrative, and legal teams can help ensure accountability for AI-related decisions [2]. Policies should be developed to cover data usage, clinical applications, staff training, and incident response, aligning these with current compliance frameworks [2].

Maintaining a detailed inventory of all AI algorithms and models is equally important. This inventory should be overseen by a designated C-suite leader and a diverse committee [4]. Additionally, systems must be implemented to document AI usage in patient care and to maintain audit trails that support OCR compliance [2]. Rather than adopting a one-size-fits-all approach, governance frameworks should be flexible, tailored to specific AI applications and use cases [3][6]. This kind of targeted strategy can help mitigate risks while enabling the safe and effective integration of AI into healthcare workflows.

2. Shadow AI and Unmanaged Generative Tools

Shadow AI presents a serious and often underestimated risk in modern enterprises. Unlike traditional shadow IT, generative tools operate anonymously, making them difficult to trace or govern. For example, employees might use open-source large language models within enterprise cloud environments, rely on AI code assistants without oversight, or even upload sensitive patient information to public generative AI platforms like ChatGPT. These actions can bypass security measures and lead to data leaks or compliance violations [8].

Impact on Patient Safety and Compliance

The absence of visibility into shadow AI activities leaves security teams unable to evaluate risks, enforce policies, or hold users accountable. Imagine a scenario where a public chatbot is used to create clinical summaries - this could inadvertently expose protected health information (PHI). Similarly, deploying an unauthorized AI model trained on biased or incomplete data could produce inaccurate outputs, endangering patient safety and creating compliance challenges [8].

Regulatory and Legal Risks

Unregulated shadow AI tools can breach business associate agreements and violate regulations like HIPAA and HITECH, putting organizations at significant legal risk [8]. The lack of transparency regarding how these tools are trained, the data they store, or where that data is kept complicates compliance efforts. During audits or investigations, organizations may struggle to demonstrate adherence to regulations, leaving them fully accountable for any violations or security lapses.

These legal and regulatory gaps highlight the urgent need for proactive governance.

Recommended Safeguards and Governance Solutions

To address these risks, organizations should implement strategies that provide full visibility into all AI tools being used - whether authorized or not [2]. This includes deploying monitoring systems to track AI usage, creating clear policies that outline acceptable tools, and establishing formal approval processes for new applications. Additionally, educating employees about the dangers of using unauthorized AI platforms with sensitive data is critical. These measures can help mitigate vulnerabilities before they escalate into serious compliance or patient safety issues.

3. Model Integrity Attacks and Training Data Poisoning

Model integrity attacks and training data poisoning represent serious, often hidden threats to healthcare AI systems. Much like prompt injection vulnerabilities, these issues arise when training data is compromised - either through flaws or intentional tampering. This can lead to lasting biases and inaccuracies being embedded within the AI system. When such flawed data reflects historical inequities, the resulting models perpetuate and even amplify those disparities, creating a "bias in, bias out" problem[4]. This sets the stage for a range of downstream challenges.

Impact on Patient Safety and Compliance

AI models trained on biased data can produce unfair, inaccurate, or discriminatory outcomes in clinical settings. These errors can lead to misdiagnoses, delayed treatments, or substandard care, directly putting patient safety at risk[4].

Regulatory and Legal Risks

Using biased AI systems can open organizations to lawsuits, including anti-discrimination claims, and hefty fines[4]. The lack of transparency in AI training data, combined with the "black box" nature of many algorithms, makes compliance audits and investigations even more challenging. This lack of clarity can increase the legal and regulatory liabilities faced by healthcare providers.

Operational Disruption and Financial Implications

The consequences of compromised AI models extend beyond patient care. Organizations may face significant financial costs to fix or replace flawed systems. Clinical workflows can also be disrupted, eroding trust among providers and leading to expensive regulatory penalties.

Recommended Safeguards and Governance Solutions

To counter these risks, proactive governance is essential. Leaders should prioritize thorough audits of training data to identify and address representation gaps. This includes ensuring that key metadata - such as race, ethnicity, and socioeconomic factors - is accurately captured and balanced[4]. Diverse development teams and multiple rounds of validation testing with varied patient populations can further reduce bias. Regularly monitoring AI outputs for unusual patterns is another critical step. Maintaining detailed records of data sources, model training processes, and decision-making logic supports compliance efforts and accountability. These actions not only reduce bias but also enhance the security and reliability of healthcare AI systems.

4. AI as an Attack Multiplier Against Healthcare

As healthcare increasingly integrates AI into its systems, cybercriminals are finding ways to exploit it for more advanced attacks. AI enables attackers to automate reconnaissance, craft highly targeted phishing campaigns, and uncover system vulnerabilities at an alarming speed. Ron Southwick, writing for Chief Healthcare Executive, put it succinctly: "AI is improving cybersecurity in health care, but attackers are using it, too"[9]. This isn't just a hypothetical risk - cybersecurity has already become the second most pressing issue for healthcare leaders[9]. The growing sophistication of these AI-driven threats highlights the urgent need for stronger and more adaptive defense strategies.

Impact on Patient Safety and Compliance

AI-powered cyberattacks bring serious risks to patient safety by targeting critical clinical systems, disrupting workflows, and even tampering with medical data. Unlike traditional attacks that might simply lock files for ransom, AI-enhanced threats can zero in on specific clinical applications, medical devices, or patient records to cause maximum disruption. For instance, if attackers exploit vulnerabilities in connected medical devices or electronic health record systems, the fallout could include care delays, treatment errors, or worse - life-threatening situations. These risks don't stop at patient safety; they ripple out to create operational chaos and financial strain.

Operational Disruption and Financial Implications

The financial damage from AI-driven attacks goes far beyond the immediate costs of ransom payments or system recovery. Healthcare organizations often face hefty regulatory fines, legal fees, and wasted investments in compromised technology. Entire care networks can grind to a halt, with delayed procedures, patient diversions, and broken workflows becoming the norm. Worse still, the reputational harm can lead to patient loss and make it harder to recruit skilled staff. Adding to the financial burden, many insurance policies - such as Directors and Officers (D&O), Errors and Omissions (E&O), and cyber liability coverage - now include broad exclusions for AI-related incidents. This leaves healthcare providers vulnerable to bearing the full cost of these attacks.

Recommended Safeguards and Governance Solutions

To counter AI-driven threats, healthcare leaders must adopt security measures that address both traditional and AI-specific risks. A key step is creating robust AI governance frameworks that involve clinical, administrative, and legal oversight before implementing any AI tools. Jonathan B. Perlin, President and CEO of The Joint Commission Enterprise, stressed the importance of proactive measures: "The fear that as a society we won't have sufficient guardrails in place to protect us from the unintended consequences of AI in healthcare, which could cause real harm. These range from user error, hallucinations and algorithmic biases that amplify care disparities to novel data security threats and inappropriate use"[6].

Healthcare organizations should routinely assess AI systems for vulnerabilities before they can be exploited. Real-time security monitoring, combined with AI system logs, can help detect anomalies quickly. Strict access controls and transparent, explainable AI operations are also essential for enabling fast and effective responses to emerging threats. By prioritizing these strategies, healthcare providers can better protect their systems, patients, and reputations in an increasingly AI-driven landscape.

5. Third-Party AI Tools and Fourth-Party Exposure

Using third-party AI tools doesn't just bring the vendor's risks into play - it also introduces the risks tied to their entire supply chain. Unlike internal AI challenges, these tools expand your exposure to external threats. This creates a serious weak spot: over 80% of stolen PHI (Protected Health Information) records are linked to breaches involving third-party vendors, business associates, and non-hospital providers[1]. Cybercriminals have adjusted their strategies to exploit this vulnerability, employing a "hub-and-spoke" model. By targeting one third-party vendor (the "hub"), they gain access to multiple healthcare organizations connected to it[1]. This chain reaction of vendor risks increases regulatory scrutiny and legal responsibilities for healthcare organizations.

Regulatory and Legal Risks (e.g., HIPAA, HITECH)

When AI systems handle patient data, they often create new business associate relationships. However, many AI vendors, especially those outside the healthcare sector, may not fully understand the intricacies of HIPAA compliance. This leaves healthcare organizations on the hook for any violations[2]. Federal agencies like the FTC and HHS are ramping up oversight, and new state-level laws are being introduced across the country, further complicating the regulatory environment[2][4].

Recommended Safeguards and Governance Solutions

To tackle these vendor-related risks, organizations need a structured governance strategy. Start by forming an AI governance committee that includes representatives from clinical, administrative, and legal departments. This ensures accountability for AI-related decisions across the organization[2]. When evaluating AI vendors, prioritize those with robust compliance practices. Require documentation of their compliance measures, proof of insurance, indemnification clauses, and transparent data handling protocols[2].

Additionally, implement AI-specific policies that address data usage, clinical integration, staff training, and incident response. These policies should align with your existing compliance and risk management systems[2]. Tools like Censinet RiskOps™ can simplify the process by serving as a centralized platform for managing third-party AI risks. For example, Censinet AI™ helps streamline vendor assessments by summarizing key vendor evidence, identifying fourth-party risks, and directing critical findings to your AI governance committee. This ensures that the right teams address the most pressing issues efficiently and effectively.

sbb-itb-535baee

6. Governance Gaps in AI Oversight and Accountability

Weak governance structures in healthcare leave AI systems largely unchecked, creating risks that can jeopardize both patient safety and regulatory compliance. It’s not just external threats that pose a problem - internal oversight failures can lead to vulnerabilities that directly impact patient care. Alarmingly, over 60% of organizations across industries admit they lack governance policies for managing AI or detecting shadow AI [8]. In healthcare, this oversight gap becomes even more critical since AI decisions can have life-altering consequences and regulatory implications.

Internal governance issues amplify the risks posed by external vulnerabilities, making the need for robust oversight even more urgent.

Impact on Patient Safety and Compliance

Without proper oversight, AI tools can be deployed without adequate validation, putting clinical safety at risk. These unvalidated systems may harbor biases that go unchecked, potentially leading to unsafe patient outcomes and violations of compliance standards. Nearly half of healthcare executives rank the appropriate use of AI among their top three challenges [7]. When accountability is unclear, essential questions remain unanswered: Who verifies the accuracy of AI algorithms? Who checks for bias in clinical recommendations? Who ensures that patient safety standards are upheld?

Regulatory and Legal Risks (e.g., HIPAA, HITECH)

Many AI vendors lack the specialized knowledge required to navigate healthcare’s complex regulatory landscape, creating a disconnect between what the technology can do and what compliance demands [2]. AI systems handling patient data often trigger HIPAA requirements, yet many organizations are unprepared to manage these obligations. Weak vendor oversight exacerbates this issue, leaving healthcare providers liable for breaches - even when the fault lies with an external vendor [2].

Recommended Safeguards and Governance Solutions

To address these risks, healthcare organizations need to implement targeted governance reforms without delay. AI decisions should have clear legal accountability, not just technical oversight. Establishing an AI governance committee that includes clinical, administrative, and legal experts can ensure direct accountability [2]. Each AI application should undergo a specific risk assessment tailored to its use case, rather than relying on generic oversight practices [6].

When selecting AI vendors, organizations should go beyond evaluating functionality and cost. Key criteria should include documented compliance measures, proof of insurance, indemnification clauses, and transparent data-handling practices [2]. Tools like Censinet AI™ can strengthen oversight by delivering critical risk findings directly to the appropriate stakeholders, including governance committee members. With real-time data presented in an intuitive dashboard, Censinet RiskOps™ offers a centralized platform for managing AI-related policies, risks, and tasks - ensuring that the right teams address the right issues promptly and effectively.

7. Overreliance on Autonomous AI Without Human Oversight

When healthcare organizations rely too heavily on autonomous AI systems, they risk severe consequences for patient outcomes. While these systems can improve efficiency, healthcare decisions often carry life-or-death implications. Removing human oversight in such scenarios is a gamble no one can afford to take.

Impact on Patient Safety and Compliance

One of the biggest dangers of autonomous AI is algorithmic bias - a systemic flaw that can lead to unfair, inaccurate, or discriminatory results. These biases not only worsen existing health disparities but can also deeply impact patient safety [4][10][11]. Adding to the complexity, many deep learning models function like black boxes, making it nearly impossible to trace how or why a specific clinical recommendation was made [4]. Without human checks at critical stages, these biases could perpetuate harmful practices, putting patient care and regulatory compliance in jeopardy.

Regulatory and Legal Risks (e.g., HIPAA, HITECH)

Even when autonomous AI systems cause a breach, healthcare organizations remain legally accountable for adhering to HIPAA regulations [2]. A common issue is that many AI vendors lack the necessary expertise in healthcare regulations, yet their tools are often deployed in workflows without adequate human oversight [2]. With enforcement of AI-related HIPAA violations on the rise, the risks are growing. Generative AI and large language models, in particular, pose unique compliance challenges due to their unpredictable nature and wide-ranging capabilities. Without human involvement, organizations cannot ensure patient data is handled in line with regulatory standards. This highlights the urgent need for human oversight to navigate these compliance risks effectively.

Recommended Safeguards and Governance Solutions

To address these risks, healthcare organizations need to maintain direct control over the AI lifecycle instead of blindly relying on autonomous systems [4]. This includes integrating human-in-the-loop checkpoints at crucial decision points, especially where AI outputs directly influence patient care or data management. The goal should be to enhance AI efficiency without compromising human oversight.

An example of this balanced approach is Censinet AI™, a platform that blends autonomy with human guidance. It streamlines risk assessments and evidence validation while keeping humans in charge through configurable rules and review processes. By ensuring that stakeholders review AI-generated findings before any action is taken, this method promotes accountability while maintaining efficiency.

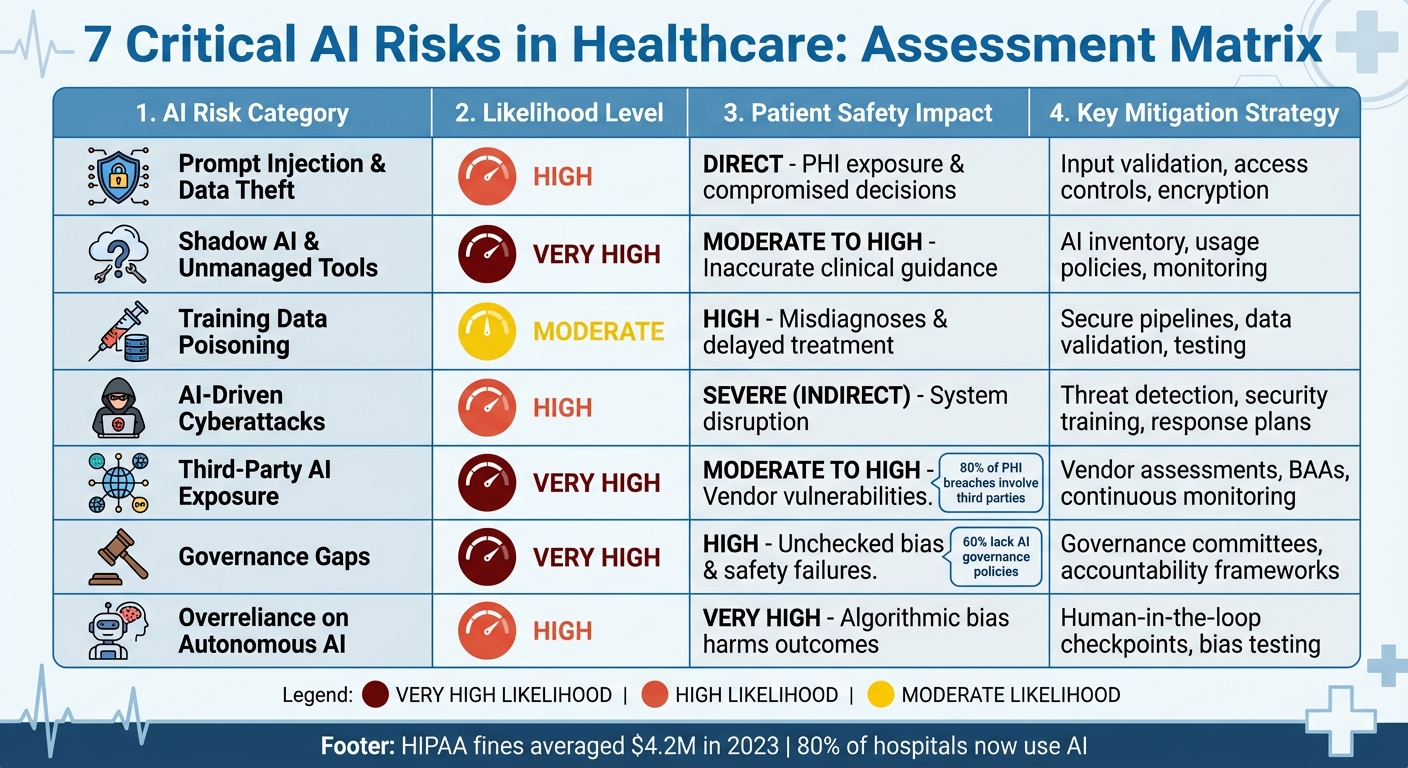

Risk Comparison Table

7 Critical AI Risks in Healthcare: Likelihood, Impact, and Mitigation Strategies

The table below highlights various AI-related risks in healthcare, breaking them down across four key dimensions: likelihood, impact on patient safety, regulatory implications, and mitigation strategies. This structured approach offers a practical guide for prioritizing risk mitigation efforts.

High

Direct - potential exposure of PHI and compromised clinical decisions

HIPAA violations, breach notification requirements, potential fines

Input validation, access controls, data encryption, prompt sanitization

Very High

Moderate to High - unvetted tools may generate inaccurate clinical guidance

Non-compliance with HIPAA, lack of BAAs, unauthorized PHI processing

Comprehensive AI inventory, usage policies, employee training, monitoring tools

Moderate

High - corrupted models could lead to misdiagnoses, delayed treatment, or patient harm

Liability exposure, potential regulatory scrutiny if patient outcomes are affected

Secure training pipelines, data validation, model testing, version control

High

Indirect but severe - accelerated attacks disrupt systems supporting patient care

HIPAA enforcement if breaches occur, incident reporting obligations

Enhanced threat detection, security awareness training, incident response plans

Very High

Moderate to High - vendor vulnerabilities may cascade into patient data exposure

HIPAA business associate liability, supply chain compliance gaps

, BAA requirements, fourth-party mapping, continuous monitoring

Very High

High - lack of oversight can lead to unchecked bias and safety failures

Evolving state and federal AI regulations, enforcement of existing HIPAA rules

AI governance committees, clear accountability frameworks, policy documentation

High

Very High - algorithmic bias and opaque decisions directly harm patient outcomes

HIPAA violations, legal liability for adverse events, discrimination claims

Human-in-the-loop checkpoints, bias testing, transparent decision-making processes

Key Takeaways for Risk Mitigation

Conclusion

The risks associated with AI in healthcare are not hypothetical - they're here, and they directly impact patient safety, regulatory compliance, and the stability of organizations. With HIPAA fines now averaging $4.2 million, the financial stakes are higher than ever. Meanwhile, 80% of hospitals are using AI to improve patient care and streamline workflows, underscoring its growing presence in the industry [2].

Strong governance and oversight are no longer optional. Nearly 60% of C-suite leaders believe having a dedicated AI officer is critical, highlighting a governance gap that must be addressed [5]. This gap, combined with rapidly changing regulations and growing security threats, makes it essential to monitor AI systems for biases and ensure their performance is continuously validated [4][7].

The regulatory environment is evolving quickly. The FDA is creating new frameworks for AI oversight, the Office for Civil Rights is ramping up enforcement, and state medical boards are introducing updated standards [2][3][4]. Healthcare organizations that establish strong defenses can innovate responsibly while avoiding costly compliance missteps. However, nearly 70% of healthcare executives point to data privacy and security concerns as major obstacles to AI adoption, making it clear that addressing these challenges is key to building patient trust [7].

In this complex regulatory landscape, practical tools are indispensable. Censinet RiskOps offers centralized AI risk management, simplifying critical tasks like vendor assessments, mapping of fourth-party risks, and automating workflows. With features like real-time risk visualization and automated evidence summarization, Censinet AI enhances these processes while maintaining human oversight to ensure expert decision-making. These tools work hand-in-hand with strategic governance to safeguard AI operations.

From managing risks like prompt injection to addressing shadow AI, proactive measures are essential. Now is the time to implement strong governance, effective safeguards, and continuous monitoring to ensure AI in healthcare remains safe, secure, and compliant.

FAQs

How can healthcare organizations protect themselves from AI-driven cyberattacks?

Healthcare organizations can strengthen their defenses against AI-driven cyberattacks by leveraging AI-powered threat detection systems to spot risks as they arise. Regular vulnerability assessments are essential for identifying and addressing weak points in security. Keeping systems up to date, enforcing strict access controls, and maintaining continuous oversight of third-party vendors are also key to reducing risks.

Using established frameworks like the NIST Cybersecurity Framework 2.0 and following AI risk management guidelines can further enhance security. These tools not only support responsible AI use but also help ensure compliance and reduce exposure to new threats. By taking these steps, organizations can maintain a strong position in the ever-changing world of cybersecurity.

What steps can healthcare organizations take to ensure HIPAA compliance when using third-party AI tools?

When using third-party AI tools, healthcare organizations must go beyond simply securing a Business Associate Agreement (BAA) to ensure HIPAA compliance. It's crucial to thoroughly evaluate vendors to confirm they implement strong measures to protect data privacy and security. This includes maintaining detailed audit trails that track how patient data is used and ensuring vendors fully understand their responsibilities under HIPAA.

Additionally, organizations should verify that AI tools handle data in ways that comply with HIPAA, particularly when operating across multiple jurisdictions. Regularly reviewing vendor practices, conducting comprehensive risk assessments, and establishing clear lines of accountability are key steps to reducing compliance risks and safeguarding patient information.

What steps can healthcare executives take to avoid overreliance on autonomous AI systems?

To prevent becoming overly dependent on autonomous AI systems, it's important to put well-defined governance policies in place. These policies should clearly outline how AI will be used within your organization. Conducting regular audits and performance reviews is another key step to ensure your systems are operating correctly and staying aligned with your business objectives.

Equally important is maintaining human oversight. Assign experienced team members to monitor AI-driven decisions and step in when needed. Also, enforce strict access controls to protect sensitive information and prevent any misuse of AI tools. Together, these steps help strike a balance between leveraging AI's advantages and ensuring accountability while managing potential risks.

Related Blog Posts

- The Invisible Threat: How AI Amplifies Risk in Ways We Never Imagined

- The New Risk Frontier: Navigating AI Uncertainty in an Automated World

- AI Cyber Risk: When Your Smart Defense Becomes the Attack Vector

- The Healthcare AI Paradox: Better Outcomes, New Risks

{"@context":"https://schema.org","@type":"FAQPage","mainEntity":[{"@type":"Question","name":"How can healthcare organizations protect themselves from AI-driven cyberattacks?","acceptedAnswer":{"@type":"Answer","text":"<p>Healthcare organizations can strengthen their defenses against AI-driven cyberattacks by leveraging <strong>AI-powered threat detection systems</strong> to spot risks as they arise. Regular <strong>vulnerability assessments</strong> are essential for identifying and addressing weak points in security. Keeping systems up to date, enforcing <strong>strict access controls</strong>, and maintaining continuous oversight of third-party vendors are also key to reducing risks.</p> <p>Using established frameworks like the <strong><a href=\"https://www.nist.gov/cyberframework\" target=\"_blank\" rel=\"nofollow noopener noreferrer\">NIST Cybersecurity Framework</a> 2.0</strong> and following AI risk management guidelines can further enhance security. These tools not only support responsible AI use but also help ensure compliance and reduce exposure to new threats. By taking these steps, organizations can maintain a strong position in the ever-changing world of cybersecurity.</p>"}},{"@type":"Question","name":"What steps can healthcare organizations take to ensure HIPAA compliance when using third-party AI tools?","acceptedAnswer":{"@type":"Answer","text":"<p>When using third-party AI tools, healthcare organizations must go beyond simply securing a <strong>Business Associate Agreement (BAA)</strong> to ensure HIPAA compliance. It's crucial to thoroughly evaluate vendors to confirm they implement strong measures to protect data privacy and security. This includes maintaining detailed audit trails that track how patient data is used and ensuring vendors fully understand their responsibilities under HIPAA.</p> <p>Additionally, organizations should verify that AI tools handle data in ways that comply with HIPAA, particularly when operating across multiple jurisdictions. Regularly reviewing vendor practices, conducting comprehensive risk assessments, and establishing clear lines of accountability are key steps to reducing compliance risks and safeguarding patient information.</p>"}},{"@type":"Question","name":"What steps can healthcare executives take to avoid overreliance on autonomous AI systems?","acceptedAnswer":{"@type":"Answer","text":"<p>To prevent becoming overly dependent on autonomous AI systems, it's important to put <strong>well-defined governance policies</strong> in place. These policies should clearly outline how AI will be used within your organization. Conducting regular <strong>audits and performance reviews</strong> is another key step to ensure your systems are operating correctly and staying aligned with your business objectives.</p> <p>Equally important is maintaining <strong>human oversight</strong>. Assign experienced team members to monitor AI-driven decisions and step in when needed. Also, enforce <strong>strict access controls</strong> to protect sensitive information and prevent any misuse of AI tools. Together, these steps help strike a balance between leveraging AI's advantages and ensuring accountability while managing potential risks.</p>"}}]}

Key Points:

Why are prompt injection attacks a major threat to clinical workflows?

- Expose PHI by manipulating LLM prompts used in care delivery

- Bypass AI controls and trigger unauthorized actions or disclosures

- Generate unsafe clinical advice due to unpredictable model behavior

- Compromise HIPAA compliance, even when breaches occur through vendors

- Increase legal and regulatory scrutiny as enforcement intensifies

What makes shadow AI one of the most underestimated risks in healthcare?

- Unapproved generative tools handle PHI without safeguards

- No visibility into model training data, storage, or retention

- High chance of biased or inaccurate outputs influencing clinical decisions

- Violates BAAs and HIPAA, creating legal exposure

- Creates gaps in accountability, making audits difficult

How do model integrity attacks and data poisoning impact patient safety?

- Introduce hidden biases that degrade diagnostic accuracy

- Lead to misdiagnoses or delayed treatments

- Corrupt clinical recommendations via manipulated data

- Increase legal and regulatory liability for discriminatory outputs

- Require costly remediation to rebuild, retrain, or replace corrupted models

How does AI help attackers scale healthcare cyber threats?

- Automates reconnaissance and vulnerability scanning

- Crafts highly targeted phishing and social engineering attacks

- Identifies weaknesses faster than traditional security tools

- Disrupts clinical systems and medical devices

- Creates uninsured financial exposure due to AI exclusions in D&O and cyber policies

Why are third‑party AI tools and fourth‑party dependencies so risky?

- Over 80% of PHI incidents originate from vendors, not the provider

- Vendors may lack HIPAA expertise, exposing organizations to liability

- Fourth‑party sub‑processors often go unmonitored

- Supply‑chain breaches cascade across multiple hospitals

- Regulatory pressure rising, with state and federal scrutiny intensifying

Why is overreliance on autonomous AI dangerous?

- Algorithmic bias directly affects clinical outcomes

- Opaque models (“black boxes”) lack explainability

- No human audit trail for unsafe or incorrect outputs

- Regulators hold healthcare organizations accountable even when vendors err

- Human‑in‑the‑loop controls reduce risk while preserving AI efficiency