HIPAA Compliance in Anonymization Protocols

Post Summary

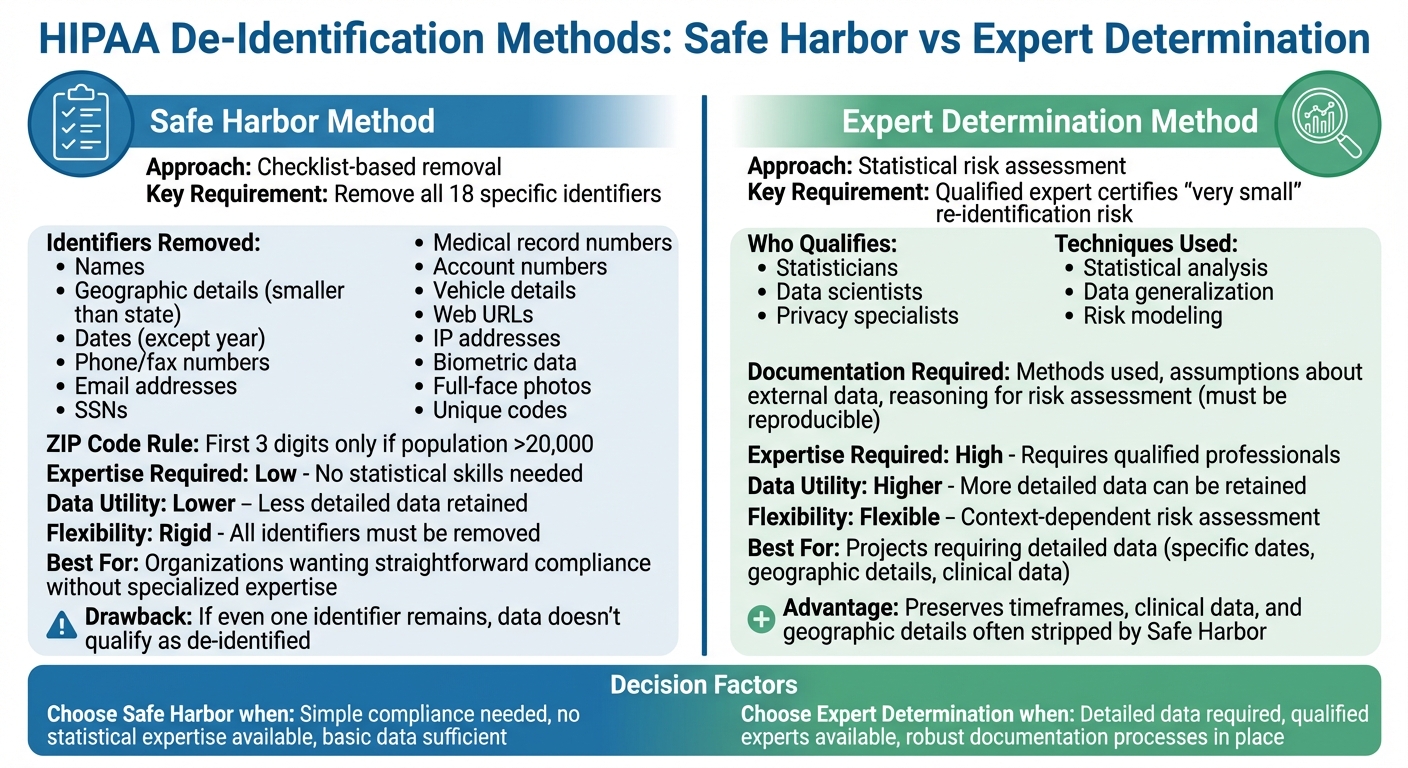

HIPAA compliance ensures patient data privacy while enabling its use for research and innovation. The key lies in transforming identifiable health information (PHI) into de-identified data through two methods:

- Safe Harbor Method: Removes 18 specific identifiers (e.g., names, addresses, dates) to ensure data cannot identify individuals.

- Expert Determination Method: A qualified expert assesses and minimizes re-identification risks, allowing more detailed data to remain usable.

Healthcare organizations use techniques like suppression, generalization, and AI-driven tools to anonymize data effectively. These methods balance privacy with usability, supporting research, AI development, and public health efforts. However, risks like re-identification require ongoing governance, data-sharing agreements, and regular audits to maintain compliance. Tools like Censinet RiskOps™ streamline risk management, ensuring HIPAA standards are met while advancing data-driven projects.

HIPAA's Two De-Identification Methods: Safe Harbor and Expert Determination

HIPAA Safe Harbor vs Expert Determination De-Identification Methods Comparison

HIPAA outlines two main methods for de-identifying protected health information (PHI): Safe Harbor and Expert Determination. Each approach has unique requirements and offers different levels of flexibility when it comes to balancing privacy and data usability. Organizations can select the method that aligns best with their goals, resources, and how they plan to use the data.

Safe Harbor Method

The Safe Harbor method relies on a clear-cut checklist to strip away 18 specific identifiers from health records. If all these identifiers are removed and there's no actual knowledge that the remaining data could still identify an individual, the information is no longer considered PHI under HIPAA [1].

These identifiers include names, geographic details smaller than a state, most date elements (except the year), phone and fax numbers, email addresses, Social Security numbers, medical record numbers, account numbers, vehicle details, web URLs, IP addresses, biometric data, full-face photos, and any other unique identifying codes [1].

For example, the first three digits of a ZIP code can only be retained if the population in that area exceeds 20,000 people [1].

The main draw of the Safe Harbor method is its straightforwardness. Compliance teams can follow its checklist without needing advanced statistical skills or specialized tools. However, it has a major drawback: if even one identifier remains, the data doesn't qualify as de-identified. This limitation can make it less useful for research that depends on detailed dates or precise geographic data [1][2].

Expert Determination Method

The Expert Determination method allows for more flexibility by enabling certain details to remain in the dataset - provided a qualified expert determines that the risk of re-identification is minimal [1][2]. These experts are typically statisticians, data scientists, or privacy specialists experienced in reducing re-identification risks through accepted scientific and statistical methods.

The expert evaluates the dataset using techniques like statistical analysis and data generalization. This approach preserves more detailed information, such as specific timeframes, clinical data, or geographic details, which are often stripped away under the Safe Harbor method [1][2].

HIPAA requires the expert to document their process thoroughly. This includes detailing the methods used, assumptions about external data, and the reasoning behind their conclusion that the risk of re-identification is negligible. This documentation must be clear enough for other qualified professionals to understand and reproduce, ensuring accountability during audits [1][2].

The "very small" risk standard is context-dependent. For instance, an expert might allow three-digit ZIP codes and broader age categories for internal projects, while recommending stricter data transformations for datasets shared externally.

Organizations tend to choose the Expert Determination method when their projects demand detailed data - like specific admission dates for analyzing treatment patterns or finer geographic data for public health studies. This method is ideal when the organization has access to skilled experts and can maintain robust documentation processes. Both Safe Harbor and Expert Determination play a crucial role in ensuring privacy while enabling valuable data use.

Anonymization Techniques That Meet HIPAA Standards

Common Anonymization Techniques

To comply with HIPAA standards, organizations use various methods to strip identifiable information from datasets. One common approach is suppression, which removes outliers such as unusual occupations or rare ages that could make individuals identifiable. Other techniques like generalization, aggregation, masking, and pseudonymization replace specific details - such as exact birth dates or ZIP codes - with broader categories or surrogate values. These methods ensure data retains its internal consistency while safeguarding patient identities.

More advanced techniques include perturbation, which introduces statistical noise into numeric fields, and privacy models like k-anonymity, l-diversity, and t-closeness. With k-anonymity, quasi-identifiers (e.g., age, gender, ZIP code) are generalized until every unique combination appears in at least k records, reducing the risk of singling out individuals. L-diversity takes this further by ensuring each group contains at least l distinct sensitive values, minimizing the chances of inferring private details. T-closeness refines this by making sure the distribution of sensitive attributes in each group closely reflects that of the overall dataset, lowering the risk of attribute disclosure. These foundational methods set the stage for more advanced, AI-driven solutions.

AI-Based Anonymization Methods

AI tools have brought a new level of sophistication to anonymization, especially when dealing with unstructured data like clinical notes, discharge summaries, and medical images. Using natural language processing (NLP) and machine learning, these tools can identify patterns of Protected Health Information (PHI) embedded in complex narratives, such as names, addresses, dates, facility names, and contact details. For example, entity recognition models trained on annotated medical data can tag sensitive identifiers and replace them with placeholders (e.g., replacing names with "[NAME]" or generalizing dates to "[MONTH YEAR]"), all while maintaining the clinical context of diagnoses, symptoms, and treatments.

When it comes to medical images, AI technologies address PHI in two key areas: DICOM headers (which may contain patient names, IDs, or birth dates) and visible text within the images themselves. Computer vision models can detect and blur or mask text regions, while advanced algorithms can identify facial features in scans like head CTs or MRIs and apply defacing or blurring to prevent biometric identification. To ensure accuracy, many organizations use human-in-the-loop workflows, where health information management staff review samples of AI-processed data to confirm that all 18 HIPAA identifiers have been properly removed.

Balancing Privacy Protection with Data Usability

While protecting privacy is paramount, organizations must also ensure that anonymized data remains useful for research and clinical purposes. To achieve this balance, teams evaluate data utility using quantitative metrics such as model accuracy, AUC scores, and error rates. They also compare distributions of key variables (e.g., lab values or diagnosis frequencies) before and after anonymization to ensure the data's integrity is preserved.

Privacy risks are assessed through methods like re-identification estimates, which measure the likelihood of identifying individuals based on quasi-identifiers. This might involve simulating linkage attacks or applying models like k-anonymity and l-diversity. Governance committees or data access boards then review these assessments to determine whether the anonymization level is appropriate for the dataset's intended use. For datasets shared more broadly, stricter transformations are often applied, while internal projects may use more nuanced approaches with tighter controls. Under the Expert Determination standard, organizations can retain specific details - such as timeframes or geographic information - if a qualified expert certifies that the risk of re-identification remains minimal [1].

Managing Re-Identification Risks and Data Governance

Understanding Re-Identification Risks

Even after health data is de-identified, the risk of re-identification remains significant, primarily due to linkage attacks. These attacks occur when anonymized data is combined with external datasets - like voter rolls, commercial databases, social media profiles, or public records - that share overlapping quasi-identifiers. According to an analysis cited in HIPAA guidance, 87% of the U.S. population could be uniquely identified using just three pieces of information: a 5-digit ZIP code, gender, and full date of birth [1].

Certain factors can make re-identification even easier. Small cohort sizes, especially those with fewer than 10 or 20 individuals, coupled with rare conditions or demographics, increase the likelihood of identification. Additionally, detailed quasi-identifiers like full ZIP codes, specific dates, or uncommon diagnoses amplify the risk. Longitudinal data, which includes detailed timestamps or sequences of events, can create unique "fingerprint-like" patterns, further complicating anonymity. To manage these risks, organizations must evaluate the context in which data is shared - considering who will access it, what external datasets might be available, and which identifiers remain in the dataset.

The Expert Determination method offers a structured approach to assess re-identification risks. A qualified expert uses established statistical and scientific principles to determine if the risk of re-identification is "very small." This process includes documenting all assumptions, thresholds, and validation tests in a formal risk assessment report. Techniques like k-anonymity, l-diversity, or t-closeness are often used to evaluate how many individuals share the same quasi-identifier profile. Importantly, these assessments should be revisited whenever new variables are added, data granularity changes, or sharing practices expand. Managing these risks effectively requires strong data governance and well-defined data-sharing protocols.

Using Data Sharing Agreements for Compliance

To mitigate re-identification risks, healthcare organizations must implement strict data sharing agreements. For HIPAA Limited Data Sets - which can retain identifiers like city, state, ZIP code, and full date elements - a Data Use Agreement (DUA) is essential. A compliant DUA outlines clear rules for data use, ensuring compliance and reducing the risk of misuse.

| Essential DUA Element | Purpose |

|---|---|

| Permitted uses and disclosures | Defines the scope of use (e.g., research, public health) and prohibits attempts to identify individuals. |

| Administrative, physical, and technical safeguards | Requires measures like access controls, encryption, and secure environments for data analysis. |

| Incident reporting | Mandates prompt reporting of unauthorized use or re-identification attempts. |

| Downstream sharing restrictions | Limits data access for subcontractors or collaborators, ensuring adherence to DUA terms. |

| Return or destruction | Specifies that data must be securely disposed of after use unless retention is legally required. |

A robust governance framework integrates policies, processes, and technology. Organizations should establish a data governance committee, including members from compliance, privacy, security, legal, clinical, and analytics teams. This committee can review data-sharing proposals, approve DUAs, and set de-identification standards across the organization. Role-based access controls ensure that only authorized personnel can access Limited Data Sets or de-identified data. Meanwhile, technical safeguards - like secure research environments, network segmentation, data loss prevention tools, and activity logging - help monitor and prevent unauthorized data linkage. Regular audits of queries and outputs further ensure adherence to DUA terms.

Platforms like Censinet RiskOps™ can simplify the integration of re-identification risk management into routine processes. These tools streamline third-party risk assessments for vendors and research partners handling de-identified data, PHI, or Limited Data Sets. They also help organizations monitor de-identification practices, enforce DUA compliance, and maintain a detailed inventory of externally shared datasets. This inventory includes critical details like the de-identification method used, the recipient, legal basis for sharing, and expiration dates. By tying these measures into the broader framework for protecting patient data under HIPAA, healthcare organizations can adapt to evolving data environments and emerging risks of re-identification.

sbb-itb-535baee

Implementing Anonymization Protocols in Healthcare Organizations

Building an Anonymization Framework

Creating a structured anonymization framework is crucial for healthcare organizations to handle sensitive data responsibly. This framework should clearly define roles such as:

- Privacy officers: Responsible for interpreting HIPAA policies, deciding between Safe Harbor and Expert Determination methods, and approving de-identification processes.

- Data governance committees: Tasked with cataloging data sources, classifying data elements, and prioritizing use cases like research, quality improvement, or AI model development.

- Compliance teams: Oversee adherence to HIPAA and internal policies, conduct audits, manage documentation, and align agreements with anonymization protocols.

Here’s a step-by-step approach to implementing anonymization:

- Define the use case and legal basis: Assess whether the project requires fully de-identified data or if a limited data set suffices.

- Map and classify data: Identify data sources such as EHRs, claims, imaging, and clinical notes, while flagging direct and indirect identifiers.

- Select a de-identification method: Use Safe Harbor (removing all 18 identifiers) or Expert Determination (conducting a statistical risk assessment).

- Design the anonymization pipeline: Employ techniques like generalizing quasi-identifiers (e.g., age ranges, three-digit ZIP codes), suppressing rare data combinations, and applying masking or pseudonymization.

- Execute and validate: Process the data and conduct manual reviews to ensure personal health information (PHI) has been removed while maintaining data utility.

- Document everything: Record risk assessments, expert evaluations, and validation results.

- Control access: Store anonymized data securely, with strict access controls, data use agreements, and continuous monitoring.

To ensure ongoing effectiveness, organizations should maintain updated policies, use version-controlled standard operating procedures (SOPs), and provide regular staff training on identifying PHI and applying anonymization methods. Periodic audits using test datasets can verify that no identifiers remain, creating a strong foundation for compliance and data security.

Using Censinet RiskOps™ for Compliance Management

Incorporating advanced platforms like Censinet RiskOps™ can elevate the efficiency of an anonymization framework. Censinet RiskOps™ is an AI-powered platform designed for healthcare organizations, simplifying risk management for patient data, medical records, and third-party vendors. The platform operates as a cloud-based risk exchange, enabling secure collaboration among healthcare providers and over 50,000 vendors.

Censinet RiskOps™ enhances compliance by automating third-party risk assessments and monitoring de-identification practices. It tracks critical details like de-identification methods, recipients, legal bases, and data expiration dates. Additionally, its cybersecurity benchmarking tools help organizations spot vulnerabilities, secure necessary resources, and ensure anonymization practices align with industry standards.

"Censinet RiskOps allowed 3 FTEs to go back to their real jobs! Now we do a lot more risk assessments with only 2 FTEs required."

- Terry Grogan, CISO, Tower Health

"Benchmarking against industry standards helps us advocate for the right resources and ensures we are leading where it matters."

- Brian Sterud, CIO, Faith Regional Health

The platform's Censinet AI™ feature further streamlines the process by automating vendor security questionnaires, summarizing documentation, and identifying fourth-party risks. It generates comprehensive risk reports while maintaining human oversight for critical tasks like evidence validation and risk mitigation. This balance of automation and human input boosts operational efficiency without compromising compliance.

Maintaining and Improving Anonymization Practices

Anonymization protocols aren’t a one-and-done effort - they require constant monitoring and refinement. Organizations should regularly reassess the risk of re-identification, especially for datasets that are widely shared or stored for extended periods. This involves keeping an eye on emerging re-identification techniques, new external data sources, and updates to regulations.

To stay compliant, healthcare organizations should:

- Review policies regularly to align with HHS guidance.

- Train staff on recognizing PHI and applying anonymization techniques.

- Establish incident response procedures for suspected re-identification, including notification, containment, and remediation steps.

Even with AI tools that speed up processes, HIPAA-compliant workflows must include oversight from privacy officers or other qualified personnel. For high-risk datasets, documented reviews are essential. Regularly benchmarking AI tools - such as measuring their precision and recall in detecting PHI - and using feedback loops to refine models can ensure anonymization practices remain effective and up-to-date over time.

Conclusion

Meeting HIPAA standards for anonymization is about more than just compliance - it's a commitment to safeguarding patient privacy while enabling advancements in research and care. The two de-identification methods approved by HIPAA, when carefully implemented, effectively protect sensitive information while retaining its utility for analysis and clinical insights[1][2].

Striking the right balance between privacy and data usability is key. Techniques such as generalization and k-anonymity ensure data is de-identified without losing its value for research or operational purposes. However, anonymization isn't a one-and-done effort. As new technologies and data sources emerge, the risks of re-identification evolve. This makes ongoing governance, regular audits, and comprehensive workforce training indispensable. These measures work hand-in-hand with broader risk management efforts to keep data secure.

Healthcare organizations that embed anonymization into their risk management processes are better equipped to thrive in a complex landscape. Tools like Censinet RiskOps™ simplify this by centralizing third-party risk assessments, benchmarking cybersecurity practices, and maintaining strong de-identification controls across systems and vendors. This ensures that anonymization protocols remain effective and audit-ready over time. As Matt Christensen, Sr. Director GRC at Intermountain Health, aptly puts it:

"Healthcare is the most complex industry... You can't just take a tool and apply it to healthcare if it wasn't built specifically for healthcare."

Looking ahead, organizations should build on their existing anonymization frameworks by evaluating how data is used, consulting experts for challenging datasets, leveraging tools designed specifically for healthcare, and updating governance policies regularly. By weaving these practices into their operations, healthcare providers can protect patient trust while fostering the research and innovation needed to advance care for all.

FAQs

What’s the difference between the Safe Harbor and Expert Determination methods for de-identifying health data under HIPAA?

The Safe Harbor method protects privacy by either removing or generalizing specific details like names, dates, or geographic information. This makes it extremely difficult to link the data back to an individual. While this method is simple to implement, it can reduce the data's usefulness for certain applications.

On the other hand, the Expert Determination method relies on a qualified expert to apply statistical or scientific techniques to evaluate and lower the risk of re-identification. Though more complex, this approach offers greater flexibility and preserves more of the data's value, all while adhering to HIPAA's privacy standards.

Both methods safeguard patient information, and the choice between them depends on your organization’s specific priorities and objectives.

How do AI tools improve the anonymization of sensitive health data like clinical notes and medical images?

AI tools bring a new level of efficiency to anonymizing sensitive health data. By leveraging advanced algorithms, these tools can pinpoint and remove protected health information (PHI) with impressive accuracy. For instance, when it comes to clinical notes, AI can sift through free text, identifying and redacting sensitive details. This not only cuts down on the need for manual review but also reduces the chances of human error. Similarly, in medical imaging, AI can blur identifiable features, like facial details, while ensuring the image remains useful for diagnostic purposes.

By offering a faster and more precise way to anonymize data, these tools make it simpler for healthcare organizations to securely share information, all while staying compliant with HIPAA regulations.

How can healthcare organizations reduce the risk of re-identification in de-identified data?

Healthcare organizations can reduce the risk of re-identification by implementing thorough anonymization practices in line with HIPAA regulations. This involves using techniques like masking, encryption, and data aggregation to keep sensitive patient information secure.

Conducting regular risk assessments is another critical step to uncover potential weaknesses and maintain compliance. On top of that, AI-powered tools can play a key role by monitoring and addressing risks in real time, bolstering patient data security while ensuring adherence to HIPAA standards.