Human-AI Collaboration: Building Teams That Leverage Both Intelligence Types

Post Summary

AI tools automate tasks such as risk assessments and threat detection while keeping humans responsible for critical decisions.

Oversight prevents overreliance on AI and is required for high-impact AI systems under federal guidance.

Trust in AI is built through transparency, explainable AI, reliable performance, and public reporting of AI use cases.

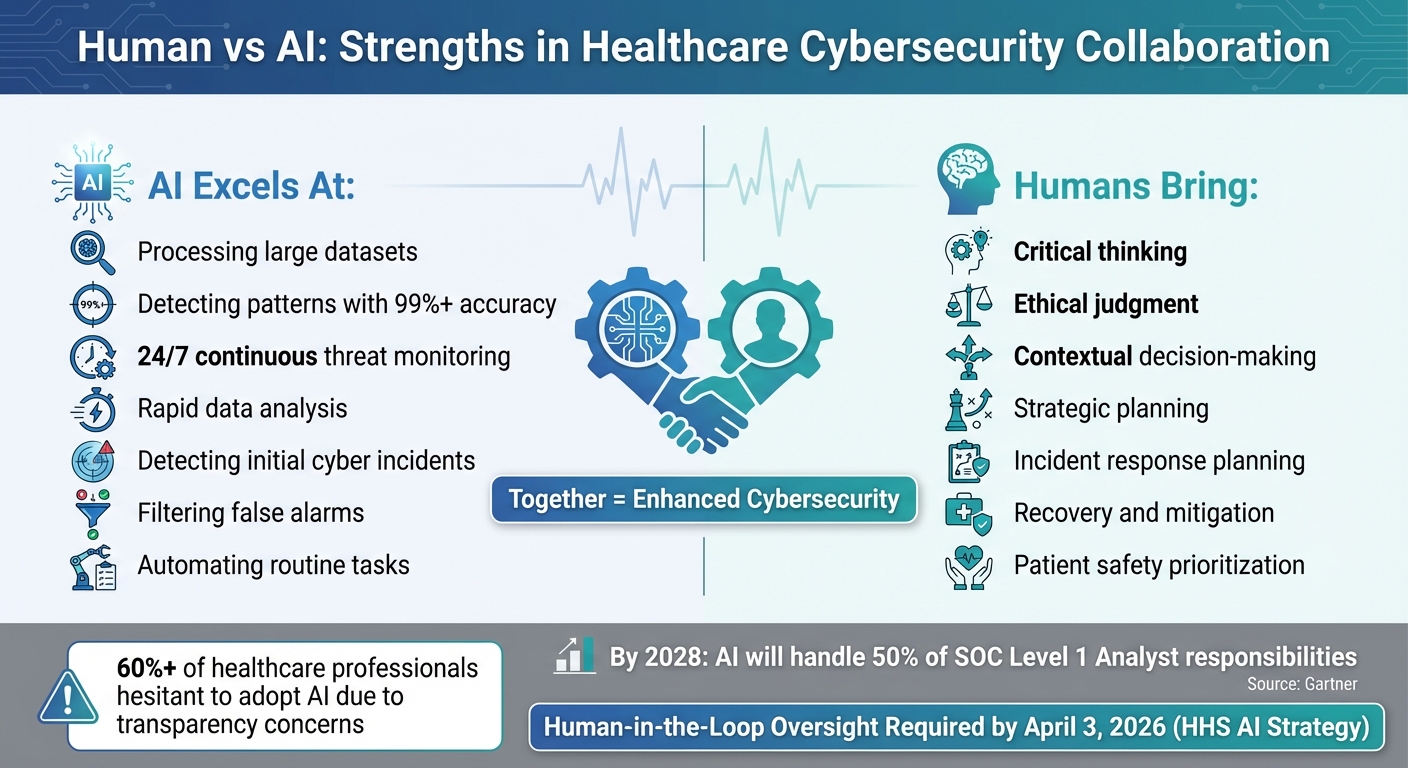

AI handles monitoring, data analysis, and incident detection; humans provide contextual judgment, ethics, and strategic decision-making.

Diverse expertise ensures AI risks are evaluated across governance, clinical, technical, and security domains.

Key Takeaways:

AI tools like Censinet AI streamline tasks such as risk assessments and threat detection while keeping humans in charge of critical decisions. This balance ensures faster responses to threats without sacrificing judgment or ethical considerations. By integrating AI thoughtfully, healthcare organizations can better protect sensitive data and maintain trust in patient care.

[Figure: Human vs AI Strengths in Collaboration]

Core Principles for Human-AI Collaboration

Effective collaboration in healthcare cybersecurity requires deliberate design that balances automation with human expertise. Three key principles help organizations ensure that AI systems support human judgment rather than replace it while meeting the ethical demands of healthcare, and together they form the foundation for integrating AI into cybersecurity risk management.

Human-in-the-Loop Oversight

Human oversight is crucial to avoid overreliance on automation. The U.S. Department of Health and Human Services (HHS) highlighted this in its December 4, 2025, AI Strategy. This strategy requires that all high-impact AI systems implement minimum risk management practices - such as human oversight - by April 3, 2026. If these safeguards aren’t in place, the AI tool must be discontinued or phased out [9].
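
The deadline rule above can be expressed as a simple inventory check. This is a minimal sketch, assuming a hypothetical inventory in which each AI system records whether it is high-impact and which practices it has in place. The text names only human oversight as a required practice, so `REQUIRED_PRACTICES` is a placeholder to be replaced with the full HHS list; `AISystem` and `compliance_status` are illustrative names, not part of any HHS tooling.

```python
from dataclasses import dataclass, field
from datetime import date

# Deadline from the HHS AI Strategy described above.
DEADLINE = date(2026, 4, 3)

# Assumption: the text names only human oversight as a required practice;
# replace this placeholder set with the full HHS list of minimum practices.
REQUIRED_PRACTICES = frozenset({"human_oversight"})


@dataclass
class AISystem:
    """Hypothetical inventory record for one AI tool."""
    name: str
    high_impact: bool
    practices: set[str] = field(default_factory=set)


def compliance_status(system: AISystem, today: date) -> str:
    """Return 'out_of_scope', 'compliant', 'at_risk', or 'discontinue'."""
    if not system.high_impact:
        return "out_of_scope"  # the minimum practices target high-impact systems
    if REQUIRED_PRACTICES <= system.practices:
        return "compliant"
    # A gap is a remediation item up to and including the deadline; after it,
    # the tool must be discontinued or phased out.
    return "at_risk" if today <= DEADLINE else "discontinue"


triage_bot = AISystem("triage-bot", high_impact=True)
print(compliance_status(triage_bot, date(2026, 5, 1)))  # discontinue
```

Treating the deadline day itself as still "at risk" reflects the "by April 3, 2026" wording; an organization may prefer a stricter cutoff.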

The Health Sector Coordinating Council (HSCC) developed a five-level autonomy scale to classify AI tools so that the level of human oversight matches the system's risk [1]. The FDA's December 2025 rollout of an "agentic AI" platform shows this principle in practice: designed to assist employees with complex tasks like regulatory meeting management and pre-market product reviews, the platform incorporates human oversight and remains optional for users [9].

Equally important is the establishment of governance structures. AI Governance Boards, which include leaders from IT, cybersecurity, data, and privacy, should oversee significant AI-related decisions and policies [9].

Building Trust in AI Systems

Once robust oversight is in place, the next step is earning trust in AI systems. Transparency and reliability are key to achieving this. Currently, over 60% of healthcare professionals are hesitant to adopt AI due to concerns about transparency and data security [5]. Their caution is understandable: AI systems can produce errors that go unnoticed without human review.

Explainable AI (XAI) helps address these concerns by making algorithms more understandable and clearly outlining their limitations [5][7]. When cybersecurity teams can grasp how an AI system reaches its conclusions, they’re better equipped to evaluate its recommendations and spot potential mistakes. To promote transparency and public trust, the HHS strategy mandates annual public reporting on AI use cases and risk assessments [9].
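
One simple way to make a score explainable is to use a model whose output breaks down into per-feature contributions, so an analyst can see which inputs drove a result. The sketch below assumes a hypothetical linear alert-risk model; the feature names, weights, and bias are illustrative only and do not come from any real product.

```python
# Hypothetical weights for an illustrative linear alert-risk model.
WEIGHTS = {
    "failed_logins": 0.4,
    "off_hours_access": 1.5,
    "new_device": 1.0,
    "phi_records_accessed": 0.01,
}
BIAS = -2.0


def explain_score(features: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the risk score plus each feature's contribution, largest first.

    Because the model is linear, score == BIAS + sum of contributions,
    so the explanation accounts for the whole score.
    """
    contributions = {name: w * features.get(name, 0.0) for name, w in WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


score, reasons = explain_score(
    {"failed_logins": 5, "off_hours_access": 1, "phi_records_accessed": 300}
)
print(f"risk score: {score:.2f}")
for name, contribution in reasons:
    print(f"  {name}: {contribution:+.2f}")
```

Complex models need separate explanation techniques, but the goal is the same: show reviewers why a score is high, not just that it is.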

It’s also important to manage expectations. While AI excels at processing large datasets and identifying patterns, it lacks the nuanced understanding that humans bring to complex scenarios. Organizations should adjust AI autonomy levels based on the complexity and criticality of tasks [8]. For instance, routine threat monitoring might need minimal human input, but incident response planning requires significant human judgment.
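
Adjusting autonomy to task complexity and criticality can be written as a small policy function. This is a sketch under stated assumptions: tasks are rated 1 to 5 on each dimension, and the result maps onto a five-step ladder loosely inspired by the idea of the HSCC's five-level scale. The level names are placeholders, not the HSCC's definitions.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    """Hypothetical five-step ladder; labels are placeholders, not HSCC definitions."""
    HUMAN_ONLY = 1        # humans make the decision unaided
    AI_SUGGESTS = 2       # AI drafts, a human decides
    HUMAN_APPROVES = 3    # AI acts only after explicit human approval
    HUMAN_MONITORS = 4    # AI acts, a human watches and can intervene
    FULLY_AUTOMATED = 5   # AI acts, humans review periodically


def allowed_autonomy(complexity: int, criticality: int) -> Autonomy:
    """Map 1-5 complexity and criticality ratings to the maximum autonomy allowed.

    The riskier rating drives the result, so a simple but critical task
    still keeps a human closely involved.
    """
    for rating in (complexity, criticality):
        if not 1 <= rating <= 5:
            raise ValueError("ratings must be between 1 and 5")
    return Autonomy(6 - max(complexity, criticality))


print(allowed_autonomy(complexity=1, criticality=2).name)  # HUMAN_MONITORS
print(allowed_autonomy(complexity=5, criticality=5).name)  # HUMAN_ONLY
```

Routine threat monitoring lands near the automated end of the ladder, while incident response planning stays with humans, matching the example in the text.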

Defining Complementary Roles

To maximize efficiency, human-AI teams should focus on their respective strengths. AI is well-suited for continuous threat monitoring, rapid data analysis, and detecting initial cyber incidents [9][3]. Meanwhile, humans are better at tasks requiring contextual judgment, ethical decision-making, and strategic planning [3].

The HSCC’s AI Cybersecurity Task Group, which includes 115 healthcare organizations, is actively preparing the sector for this complementary approach [1]. Their upcoming 2026 guidance will help organizations incorporate AI-specific risk assessments into existing cybersecurity frameworks while ensuring humans retain responsibility for critical decisions. As AI adoption grows - HHS anticipates a 70% increase in new AI use cases for fiscal year 2025 [9] - clearly defining these roles will be essential to managing cybersecurity risks effectively.

Designing Multidisciplinary Teams for Risk Management

Building diverse, multidisciplinary teams is key to effective human-AI collaboration in healthcare. A notable example is the Health Sector Coordinating Council (HSCC) and its AI Cybersecurity Task Group. Their structured approach highlights the importance of expertise spanning areas like education, operations, governance, secure design, and supply chain transparency [1]. This diversity ensures that AI's analytical capabilities are paired with human judgment to address complex challenges.

The rise of AI automation is reshaping team roles across industries. Gartner projects that by 2028, AI will take over 50% of the responsibilities currently handled by SOC Level 1 Analysts [10]. This shift isn't about reducing staff but about evolving skill sets. As AI takes on tasks like threat detection and log analysis, the demand grows for professionals who can oversee AI systems, interpret intricate data patterns, and make strategic decisions [10]. With ransomware attacks in healthcare surging by 40% over the last 90 days [12], the need for such specialized teams has never been more urgent.

Key Roles in Human-AI Teams

As AI adoption changes team dynamics, clearly defining roles becomes vital. A well-rounded cybersecurity team combines technical know-how, clinical expertise, and strategic oversight.

The HSCC emphasizes the importance of collaboration between cybersecurity and data science teams for effective AI-driven cyber operations [1]. Formal governance structures should outline roles and responsibilities, ensuring clinical oversight throughout the AI lifecycle [1]. This framework helps align technical decisions with real-world clinical needs, turning AI insights into actionable security measures. Additionally, including experts in ethical AI deployment and regulatory compliance ensures that healthcare organizations meet growing demands for transparency and data privacy.

Integrating AI into Collaborative Workflows

Once multidisciplinary teams are in place, the next challenge is embedding AI into their workflows without disrupting efficiency. The goal is to have AI insights complement human decision-making, not create bottlenecks. One example comes from a leading surgical robotics company. By integrating a cloud-based video processing pipeline with secure, AI-driven systems, the company used machine learning models to monitor system activity in real time. This setup flagged unauthorized access attempts and automated security alerts, cutting incident response times by 70% [12]. This showcases how well-integrated human-AI collaboration can drive meaningful results.

Tools like Censinet RiskOps™ further streamline collaboration by offering a unified platform where team members can access AI-generated insights alongside their own analyses. To ensure AI systems work seamlessly with human efforts, it's crucial to provide context for AI insights so security teams can act quickly [11]. Regular vulnerability assessments, system audits, and continuous monitoring also help maintain alignment between human and AI components [7].
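
Providing context for an AI insight usually means joining the raw alert with what the organization already knows about the affected asset. A minimal sketch, assuming a hypothetical in-memory asset inventory (in practice this might come from an asset or medical-device inventory); the `Asset` fields, hostnames, and `enrich` function are illustrative, not a Censinet RiskOps™ interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    owner: str
    clinical_impact: str  # e.g. "patient-facing" or "back-office"
    stores_phi: bool


# Hypothetical inventory keyed by hostname.
INVENTORY = {
    "infusion-pump-12": Asset("Biomed Engineering", "patient-facing", False),
    "ehr-db-01": Asset("Clinical Applications", "patient-facing", True),
}


def enrich(alert: dict) -> dict:
    """Return a copy of an AI alert with asset context attached for human review."""
    asset = INVENTORY.get(alert["host"])
    context = {
        "owner": asset.owner if asset else "unknown",
        "clinical_impact": asset.clinical_impact if asset else "unknown",
        "stores_phi": asset.stores_phi if asset else None,
        # Alerts on unknown assets are flagged, not silently dropped.
        "needs_inventory_review": asset is None,
    }
    return {**alert, "context": context}


print(enrich({"host": "ehr-db-01", "signal": "unusual query volume"}))
```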

Using Censinet AI for Human-Guided Automation

Censinet AI simplifies decision-making by automating key steps in risk assessment - like evidence validation, policy creation, and risk mitigation - while keeping human oversight front and center. This approach helps healthcare organizations expand their cybersecurity efforts without compromising on patient safety or regulatory requirements.

What sets Censinet AI apart is its role as a support tool rather than a replacement for human expertise. Risk teams stay in control by using customizable rules and review processes, ensuring that AI insights enhance, rather than override, human decision-making. This thoughtful balance tackles a major challenge in healthcare: achieving efficiency without losing the depth of analysis needed for complex security decisions. By doing so, Censinet AI accelerates the pace of risk assessments without cutting corners.
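
The general pattern of keeping risk teams in control through customizable rules and review steps can be illustrated generically. This is not Censinet's API; it is a hypothetical sketch in which every AI-drafted finding is checked against team-defined rules, and any rule that fires sends the finding to a human reviewer instead of accepting it automatically. The `Finding` fields and rule thresholds are assumptions.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Finding:
    vendor: str
    severity: str      # "low", "medium", "high", or "critical"
    confidence: float  # model-reported confidence, 0.0 to 1.0
    touches_phi: bool


# A rule returns a reason when a human must review the finding, else None.
Rule = Callable[[Finding], Optional[str]]

# Hypothetical team-defined rules; a risk team would tune these.
DEFAULT_RULES: list[Rule] = [
    lambda f: "high severity" if f.severity in {"high", "critical"} else None,
    lambda f: "low model confidence" if f.confidence < 0.8 else None,
    lambda f: "involves PHI" if f.touches_phi else None,
]


def review_decision(finding: Finding, rules: list[Rule] = DEFAULT_RULES) -> tuple[str, list[str]]:
    """Return ('human_review' or 'auto_accept', reasons that triggered review)."""
    reasons = [reason for rule in rules if (reason := rule(finding)) is not None]
    return ("human_review" if reasons else "auto_accept"), reasons


decision, why = review_decision(Finding("acme-imaging", "critical", 0.95, touches_phi=True))
print(decision, why)  # human_review ['high severity', 'involves PHI']
```

Returning the reasons alongside the decision tells the reviewer why a finding was held back, which supports the transparency goals discussed earlier.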

Accelerating Risk Assessments

Traditionally, third-party risk assessments have been slow and labor-intensive. Censinet AI changes that by turning this cumbersome process into a more efficient workflow. The platform automates tasks like vendor questionnaires, evidence summaries, integration tracking, and fourth-party risk evaluations. It even generates comprehensive risk summary reports, allowing experts to dedicate their energy to high-level strategic decisions.
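
Fourth-party risk evaluation means a vendor can inherit risk from the suppliers it depends on. The sketch below is a hypothetical scoring model, not Censinet's method: a vendor's effective risk is the worse of its own score and a discounted score inherited from its dependencies. The 0 to 10 scale, the 0.8 discount factor, and the vendor names are arbitrary assumptions.

```python
def effective_risk(vendor: str, own_risk: dict[str, float],
                   depends_on: dict[str, list[str]], discount: float = 0.8) -> float:
    """Vendor risk including inherited fourth-party (and deeper) risk.

    Effective risk = max(own risk, discount * worst dependency's effective risk).
    Dependencies already on the current path are skipped so cycles terminate.
    """
    def visit(name: str, path: frozenset[str]) -> float:
        path = path | {name}
        score = own_risk.get(name, 0.0)
        for dep in depends_on.get(name, []):
            if dep not in path:
                score = max(score, discount * visit(dep, path))
        return score

    return visit(vendor, frozenset())


# Hypothetical scores on a 0-10 scale.
own = {"ehr-vendor": 3.0, "cloud-host": 9.0, "backup-svc": 5.0}
deps = {"ehr-vendor": ["cloud-host", "backup-svc"]}
print(round(effective_risk("ehr-vendor", own, deps), 2))  # 7.2
```

Taking the maximum rather than an average keeps a single high-risk fourth party from being diluted by many low-risk ones.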

The benefits go beyond just saving time. By automating repetitive tasks and initial data analysis, healthcare organizations can address more risks in less time. This is especially critical given the growing number of third-party relationships and the increasing demands on already stretched security teams. While the platform handles routine tasks, human experts can focus on interpreting the findings that require deeper judgment. This combination of speed and human insight ensures that operational efficiency doesn’t come at the cost of effective governance.

Balancing Automation and Human Insight

Censinet AI fosters collaboration by streamlining task routing and coordination across Governance, Risk, and Compliance (GRC) teams. The platform ensures that key findings are sent to the right stakeholders, including AI governance committees, for timely review. This structured workflow strengthens teamwork across disciplines, combining the strengths of human expertise with AI-driven capabilities.
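
Routing findings to the right stakeholders can be sketched as a lookup table plus escalation rules. The team names, categories, and escalation criteria below are hypothetical; in this sketch, high-severity findings and any finding involving an AI system are also sent to the AI governance committee.

```python
# Hypothetical mapping from finding category to owning GRC team.
ROUTES = {
    "access_control": "Security Operations",
    "data_privacy": "Privacy Office",
    "contract": "Vendor Management",
    "ai_model": "AI Governance Committee",
}
DEFAULT_TEAM = "GRC Intake"
ESCALATION_TEAM = "AI Governance Committee"


def route(finding: dict) -> list[str]:
    """Return the ordered, de-duplicated list of teams that should review a finding."""
    teams = [ROUTES.get(finding["category"], DEFAULT_TEAM)]
    if finding["severity"] in {"high", "critical"} or finding.get("involves_ai", False):
        teams.append(ESCALATION_TEAM)
    # dict.fromkeys keeps first-seen order while dropping duplicates.
    return list(dict.fromkeys(teams))


print(route({"category": "data_privacy", "severity": "critical"}))
```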

The platform also provides a centralized AI risk dashboard, offering real-time data on policies, risks, and tasks. This hub ensures that the right teams address the right issues at the right time, creating a unified and efficient approach to AI risk management. By maintaining continuous oversight and accountability, the system avoids workflow bottlenecks while keeping operations aligned with industry standards. For healthcare leaders, this means scaling risk management efforts without sacrificing control, ultimately safeguarding both organizational integrity and patient care.


Strategies for Threat Detection and Response

The healthcare sector is a prime target for ransomware attacks and data breaches. Defending against these threats requires a mix of AI's speed and human judgment. AI systems excel at monitoring networks nonstop, analyzing vast amounts of data, and flagging unusual activity that could indicate an attack. However, humans are indispensable for interpreting these findings, understanding the bigger picture, and making key decisions about how to respond effectively.

This partnership addresses a critical issue in healthcare cybersecurity: security teams are often overworked as cyber threats become more frequent and complex. By delegating tasks like continuous monitoring and initial analysis to AI, human experts can concentrate on the nuanced decisions that demand experience, intuition, and insight into how security incidents affect patient care and business operations. This collaboration creates specialized roles in monitoring and recovery, ensuring a well-rounded defense.

AI-Driven Threat Monitoring

AI-powered tools are designed to monitor networks, devices, and user behaviors around the clock, identifying suspicious activity that could signal a breach [13]. Using machine learning, these systems can detect malicious files or behaviors that traditional antivirus software might overlook, such as zero-day attacks or polymorphic malware [13]. AI’s true strength lies in its ability to operate 24/7 without fatigue [2][7].
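
Machine-learning detectors are far more sophisticated, but the core idea of flagging behavior that departs from a learned baseline can be shown with a per-account z-score. This is a simple statistical stand-in, not a machine-learning model; the three-standard-deviation threshold and the sample data are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], current: float, threshold: float = 3.0) -> bool:
    """Flag `current` if it is more than `threshold` standard deviations from the baseline."""
    if len(history) < 2:
        return False  # too little data to form a baseline
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return current != mu  # any change from a perfectly flat baseline stands out
    return abs(current - mu) / sigma > threshold


# Hypothetical daily counts of patient records opened by one account.
baseline = [40, 38, 45, 42, 39, 41, 44]
print(is_anomalous(baseline, 43))   # False
print(is_anomalous(baseline, 400))  # True
```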

Security Operations Center (SOC) teams reap significant benefits from AI automation. These tools can filter out false alarms, prioritize real threats, and cross-check data from multiple sources. In some cases, AI can even execute predefined response actions autonomously, reducing the need for constant human oversight [13]. This alleviates a common challenge for cybersecurity teams: alert fatigue. By managing and ranking alerts by risk level, AI allows human analysts to focus on addressing genuine threats rather than wasting time on false positives.
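
The triage steps described above (collapsing duplicate alerts, cross-checking sources, and ranking by risk) can be sketched as follows. Assumptions: each alert carries hypothetical `entity`, `rule`, `source`, and `score` fields; the same rule firing on the same entity counts as a duplicate; and an alert reported by two or more independent sources gets an arbitrary 1.5x corroboration boost.

```python
from collections import defaultdict


def triage(alerts: list[dict], boost: float = 1.5) -> list[dict]:
    """Collapse duplicate alerts, boost corroborated ones, and rank by risk."""
    best: dict[tuple, dict] = {}
    sources: dict[tuple, set] = defaultdict(set)
    for alert in alerts:
        key = (alert["entity"], alert["rule"])  # same rule on same entity = duplicate
        sources[key].add(alert["source"])
        if key not in best or alert["score"] > best[key]["score"]:
            best[key] = alert  # keep the highest-scoring copy
    ranked = []
    for key, alert in best.items():
        corroborated = len(sources[key]) > 1
        risk = alert["score"] * (boost if corroborated else 1.0)
        ranked.append({**alert, "sources": sorted(sources[key]), "risk": risk})
    return sorted(ranked, key=lambda a: a["risk"], reverse=True)


alerts = [
    {"entity": "ws-7", "rule": "beaconing", "source": "edr", "score": 0.6},
    {"entity": "ws-7", "rule": "beaconing", "source": "firewall", "score": 0.5},
    {"entity": "ws-7", "rule": "beaconing", "source": "edr", "score": 0.6},
    {"entity": "srv-2", "rule": "port_scan", "source": "ids", "score": 0.8},
]
for item in triage(alerts):
    print(item["entity"], item["sources"], round(item["risk"], 2))
```

Here the corroborated beaconing alert outranks a single-source alert with a higher raw score, which is the kind of prioritization that reduces alert fatigue.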

Human-Led Recovery and Mitigation

While AI can play a major role in isolating compromised systems and containing threats, human expertise is essential for recovery and mitigation. Security professionals bring critical thinking and contextual awareness that AI cannot replicate. They validate AI findings, assess recommendations, and make informed decisions about remediation. This is especially important when dealing with AI models that may lack transparency in their decision-making processes [3][4][5].

Recovery efforts go beyond technical fixes. Human teams ensure that systems are restored in a way that prioritizes patient safety, maintains business operations, and adheres to regulatory requirements. They also coordinate with different departments, communicate with stakeholders, and implement long-term strategies to minimize the risk of future breaches. Continuous learning and collaboration across teams strengthen recovery efforts [1]. This human-centered approach ensures that recovery plans align with organizational goals, safeguarding what matters most: patient care and the integrity of sensitive data.

Overcoming Challenges in Human-AI Collaboration

To make the most of human-AI collaboration, organizations must tackle some tough challenges. In healthcare cybersecurity, these challenges are particularly pronounced. From technical vulnerabilities to navigating complex regulations and ensuring both AI systems and human teams stay ahead of evolving threats, there’s a lot to manage. Addressing these issues directly helps ensure collaboration stays secure, effective, and focused on protecting patient safety.

Mitigating AI Vulnerabilities

AI systems bring their own set of security risks that can impact their reliability. For example, opaque algorithms can make it hard to understand how an AI reaches its conclusions, while "hallucinations" - or incorrect outputs - can lead to errors in patient data or clinical decisions [3][14][16]. On top of that, AI models are susceptible to threats like data poisoning or adversarial attacks, where bad actors manipulate training data or inputs to disrupt the system or produce faulty results [3][14][16].

The interconnected nature of medical devices, outdated IT systems, and the constant evolution of AI models only add to the complexity. Issues like accidental data exposure and poorly aligned security measures further increase risks in environments driven by AI [3][7][15][14][6]. To counter these vulnerabilities, organizations must prioritize robust security protocols and remain vigilant as technology advances.

Ethical and Regulatory Considerations

The use of AI in healthcare doesn’t just raise technical concerns - it also opens up ethical and legal questions. For instance, when AI makes a mistake, who’s responsible? Without clear accountability, clinicians might end up unfairly blamed for errors caused by AI systems [6][17][18][19].

To address this, healthcare organizations need well-defined governance frameworks. These frameworks should clarify who is responsible for decisions made with AI, ensure human oversight for critical cybersecurity actions, and document how AI systems operate. Following regulations like HIPAA and maintaining transparency about AI processes can build trust among staff and demonstrate regulatory compliance. Staying informed about changes in legal requirements and adjusting AI practices accordingly can help avoid compliance gaps. Together, these steps strengthen the foundation for safe and ethical AI use.
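
Documenting who is accountable for an AI-assisted decision can be as simple as an append-only log in which every entry names a human decision-maker. The sketch below adds a SHA-256 hash chain so later edits to an entry are detectable. The record fields, system names, and people are hypothetical, and a real deployment would also need durable storage and access controls.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body: dict) -> str:
    """Hash a record deterministically (sorted keys) with SHA-256."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class DecisionLog:
    """Append-only, hash-chained log of AI-assisted decisions."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, ai_system: str, recommendation: str,
               decision: str, accountable_person: str) -> dict:
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "ai_system": ai_system,
            "recommendation": recommendation,
            "decision": decision,
            "accountable_person": accountable_person,  # a named human owns the outcome
            "prev_hash": self.entries[-1]["hash"] if self.entries else "0" * 64,
        }
        body["hash"] = _digest(body)
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        """Recompute every hash; an edited or reordered entry breaks the chain."""
        prev = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True


log = DecisionLog()
log.record("threat-model-v2", "isolate ws-7", "approved", "J. Rivera")
log.record("vendor-risk-ai", "flag vendor as high risk", "overridden", "A. Chen")
print(log.verify())  # True
```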

Continuous Training and Adaptation

The fast-changing nature of cybersecurity threats means that both AI systems and human teams need to evolve constantly. Ongoing learning and adaptation are key to making human-AI collaboration work, as both sides improve through regular interaction [18]. Feedback loops between human teams and AI systems allow the technology to refine its responses over time, aligning better with team needs and objectives [18].
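
A feedback loop can be as simple as nudging a detection threshold according to analyst verdicts. This is a minimal sketch, assuming analysts label each alert as a confirmed threat or a false positive and the team sets a hypothetical target false-positive rate; the step size, window, and bounds are arbitrary. Production systems would more likely retrain or recalibrate the model, but the principle of learning from human review is the same.

```python
class FeedbackThreshold:
    """Adjust an alert threshold from analyst feedback on recent alerts."""

    def __init__(self, threshold: float = 0.5, target_fp_rate: float = 0.3,
                 step: float = 0.05, window: int = 20) -> None:
        self.threshold = threshold
        self.target_fp_rate = target_fp_rate
        self.step = step
        self.window = window
        self.verdicts: list[bool] = []  # True = analyst confirmed a real threat

    def record_verdict(self, confirmed: bool) -> None:
        self.verdicts.append(confirmed)
        if len(self.verdicts) < self.window:
            return
        fp_rate = self.verdicts.count(False) / len(self.verdicts)
        if fp_rate > self.target_fp_rate:
            # Too many false alarms: raise the bar so fewer alerts fire.
            self.threshold = min(0.95, self.threshold + self.step)
        else:
            # Alerts are mostly real: lower the bar to catch more activity.
            self.threshold = max(0.05, self.threshold - self.step)
        self.verdicts.clear()  # start a fresh feedback window

    def should_alert(self, score: float) -> bool:
        return score >= self.threshold


tuner = FeedbackThreshold()
for verdict in [False] * 15 + [True] * 5:  # 75% false positives in this window
    tuner.record_verdict(verdict)
print(f"new threshold: {tuner.threshold:.2f}")
```

Because the threshold moves in small, bounded steps, a single noisy week cannot swing detection sensitivity too far before humans notice.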

Training plays a critical role in this process. Educating staff about AI’s capabilities, limitations, and ethical considerations not only boosts adoption but also leads to better outcomes [18][4]. Training programs should go beyond technical skills to include lessons on when to trust AI recommendations and when to question them. This balanced approach helps human-AI teams stay agile and effective as the cybersecurity landscape continues to shift. By investing in continuous education, organizations can ensure their teams are well-prepared to navigate the challenges ahead.

Conclusion

The key takeaway is that human-AI collaboration must be woven into everyday risk management, especially in healthcare cybersecurity. This field thrives on a partnership where humans contribute critical thinking, ethical judgment, and the ability to navigate complex problems, while AI excels at sifting through massive datasets and spotting patterns that might escape human detection.

Success in this space hinges on assembling multidisciplinary teams with clearly defined roles, maintaining continuous oversight, and establishing effective feedback systems. The forthcoming 2026 guidance from HSCC emphasizes the need for responsible AI adoption, highlighting its growing role in healthcare risk management [1].

Censinet RiskOps™ offers a practical example of how human-guided automation can transform risk management. It speeds up vendor assessments, validates evidence, and directs significant findings - all while ensuring human oversight remains a priority. Meanwhile, Censinet AI™ efficiently processes data and handles initial analyses, but the ultimate decisions stay in the hands of risk teams, thanks to customizable rules and approval workflows that keep human judgment at the forefront.

By blending human expertise with AI capabilities, healthcare leaders can achieve greater operational efficiency, meet regulatory requirements, and, most importantly, enhance patient safety.

The future of healthcare cybersecurity lies in this balanced integration of human skill and AI technology. Investing in the right tools, training, and governance today is essential to safeguarding patient data and ensuring secure, reliable care.

FAQs

How does human oversight improve AI's effectiveness in healthcare cybersecurity?

Human oversight is essential to AI's effectiveness in healthcare cybersecurity. By bringing ethical, legal, and contextual judgment into automated processes, humans improve transparency and accountability and reduce the likelihood of system errors or biases.

When professionals review AI-generated alerts, validate threat detections, and make final decisions, they play a key role in minimizing false positives and ensuring regulatory compliance. This partnership between humans and AI not only builds trust but also reinforces the security framework, making it more dependable and better equipped to handle cybersecurity challenges.

How can organizations build trust in AI systems for healthcare collaboration?

To build trust in AI systems, organizations should focus on transparency and making AI outputs straightforward and understandable. Incorporating clinical guidelines into AI decision-making ensures that recommendations align with established medical standards.

Equally important is maintaining human oversight - AI should complement human expertise, not replace it. Having clear protocols for intervention keeps critical decisions firmly in human hands. Lastly, fostering collaboration between AI systems and healthcare professionals strikes a balance where technology supports human judgment rather than overshadowing it.

Why is it essential to clearly define roles in human-AI healthcare teams?

Defining roles clearly within human-AI healthcare teams is crucial to ensuring tasks are distributed effectively. This approach leverages the strengths of both human professionals and AI systems, minimizes confusion, improves collaboration, and fosters trust among team members.

When responsibilities are well-defined, each contributor can focus on their strengths. AI systems shine when it comes to processing and analyzing large datasets at incredible speed, while humans provide critical thinking, empathy, and the nuanced judgment required for context-specific situations. This balance is particularly vital in healthcare, where trust and clear communication directly influence patient safety and outcomes.


Key Points:

Why is human oversight critical in AI-driven healthcare cybersecurity?

- Prevents overreliance on automation and ensures high-risk systems follow required oversight practices.

- Aligns with federal guidelines, which mandate human oversight for high-impact AI.

- Protects decision integrity by ensuring humans validate recommendations and catch errors.

- Supports governance boards that approve policies and high-stakes AI decisions.

How can organizations build trust in AI systems?

- Use explainable AI (XAI) to clarify how outputs are generated.

- Improve transparency through public reporting on risks and AI use cases.

- Set realistic expectations by emphasizing AI’s limitations and appropriate autonomy levels.

- Ensure reliability so teams can confidently validate results.

What strengths do humans and AI each bring to cybersecurity?

- AI excels at: continuous monitoring, rapid pattern detection, log analysis, early breach alerts.

- Humans excel at: contextual interpretation, ethical judgment, prioritization, long-term strategy.

- Together, they reduce risk by combining speed with nuance.

- Complementary roles prevent errors caused by either humans or automation alone.

How does Censinet AI support human-guided risk management?

- Automates repetitive tasks like vendor questionnaires and evidence summaries.

- Documents integrations and evaluates fourth‑party risks.

- Routes findings to governance committees for human approval.

- Provides a real-time dashboard with enterprise-wide visibility into AI-related risks.

How should AI be embedded into cybersecurity workflows?

- Integrate AI insights without disrupting clinical or security operations.

- Provide context around automated recommendations.

- Use continuous monitoring and system audits to catch drift or vulnerabilities.

- Keep humans accountable for final actions and escalation decisions.