Human-Centered AI Safety: Keeping People at the Heart of Intelligent Systems

Post Summary

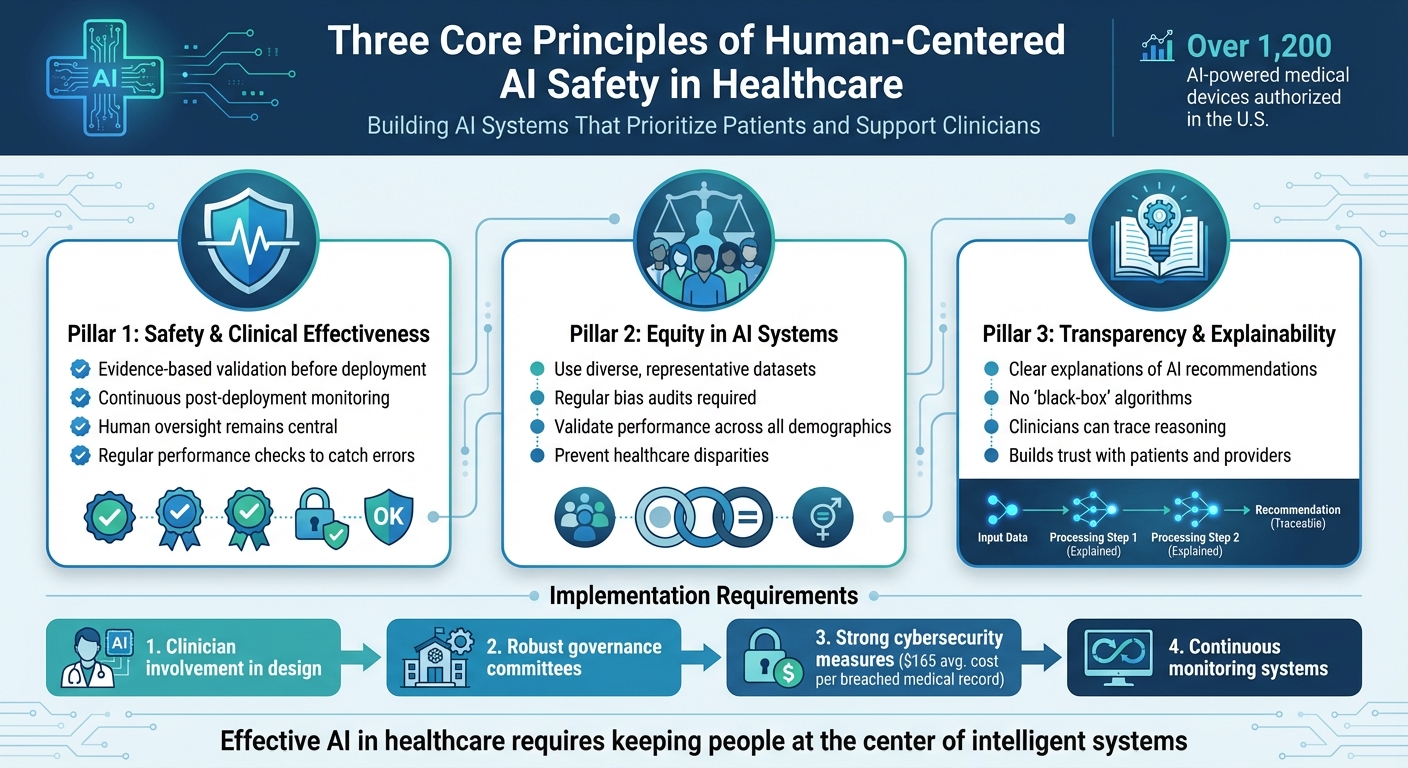

AI in healthcare is advancing quickly, but are we prioritizing patient safety and clinician needs? This article explores how human-centered AI design ensures technology supports - rather than replaces - human judgment in healthcare settings. Key takeaways include:

- Patient safety: AI systems must be rigorously tested and monitored to avoid errors and harm.

- Equity: Bias in datasets can lead to disparities in care; diverse and representative data is critical.

- Transparency: Clinicians and patients need clear explanations of AI recommendations to build trust.

With over 1,200 AI-powered medical devices authorized in the U.S., the stakes are high. Effective solutions require clinician involvement, robust governance, and strong cybersecurity measures to protect patient data and ensure ethical AI use. By keeping people at the center, healthcare organizations can improve outcomes while minimizing risks.

Three Core Principles of Human-Centered AI Safety in Healthcare

To address these challenges, healthcare organizations must ground artificial intelligence in principles that prioritize patient safety and support clinicians. Building AI systems that genuinely benefit both patients and providers takes more than good intentions - it demands a firm commitment to mitigating risk and ensuring that technology enhances care quality rather than undermining it. Let’s explore three principles essential to achieving this balance.

Safety and Clinical Effectiveness

Patient safety must always come first. In healthcare, AI systems need thorough, evidence-based validation before they’re ever put to use. But the work doesn’t stop there - continuous monitoring after deployment is critical to ensure these tools consistently improve health outcomes without introducing harm [3][1][6]. This means testing AI in real-world environments and keeping a close eye on its performance as medical practices and patient needs evolve.

Accuracy and reliability are non-negotiable when AI is used to support crucial decisions. Oversight systems must be in place to catch errors before they affect patients, and post-deployment monitoring should identify any performance drift that could compromise care. Together, these safeguards keep human oversight central to the process. And beyond being accurate, AI must work for everyone - fairly and equitably.

Equity in AI Systems

AI systems that rely on limited or biased datasets risk perpetuating existing disparities in healthcare - or even making them worse. Fairness isn’t just a goal; it’s a necessity. To address this, healthcare organizations must use datasets that reflect the full diversity of patient populations. Regular audits and proactive steps to correct biases are essential [3][1][6].

For example, an AI diagnostic tool that performs well for one demographic but poorly for another can have devastating consequences. Preventing such outcomes requires deliberate effort at every stage - from data collection to algorithm design to clinical implementation. Bias must be identified and corrected before it has a chance to harm patients. And as we’ll discuss next, trust in AI systems also hinges on their ability to clearly explain their reasoning.

Transparency and Explainability

Both clinicians and patients need to understand how AI systems arrive at their conclusions. Black-box algorithms that can’t explain their logic undermine trust and make validation impossible. To be effective, AI systems must clearly communicate their purpose, data sources, and limitations. This allows healthcare professionals to interpret recommendations accurately and maintain the skepticism necessary for sound clinical judgment [3][1][2][4][5][6].

When an AI tool suggests a course of action, clinicians should be able to trace its reasoning, cross-check it against their own expertise, and explain it to patients in plain terms. This isn’t just about building confidence in the technology - it’s about ensuring that human oversight remains at the heart of patient care. Ultimately, the responsibility for patient outcomes must stay where it belongs: with the healthcare professionals who use these tools.

How to Design Human-Centered AI Systems

Creating AI systems that genuinely work in healthcare requires more than just technical expertise - it demands collaboration with the people who will use them. This section highlights how involving stakeholders, ensuring data integrity, and prioritizing user-friendly design come together to build AI systems centered around human needs. The key is to bring the right voices to the table from the very beginning.

Co-Design with Clinicians and Patients

Involving clinicians, patients, and support staff in the design process isn't just helpful - it's essential. Healthcare organizations need to engage these groups at every stage of AI development, from the initial brainstorming phase to pilot testing and beyond. Clinicians, for example, can define tasks, shape user interface requirements, and provide feedback on how AI communicates its recommendations. They also play a critical role in setting boundaries for when AI tools should defer to human decision-making.

Take HCA Healthcare as an example. They actively include frontline staff in creating AI-driven nurse staffing models, ensuring the tools address real-world problems and are practical in clinical settings [8]. Even federal agencies are now recognizing the importance of this approach, linking funding to clear plans for clinician involvement through design teams, advisory panels, and structured feedback mechanisms [1].

Ensuring Data Quality and Representation

Good design starts with good data. Without high-quality, representative datasets, AI systems risk reinforcing biases or even introducing new disparities in healthcare. To address this, organizations must ensure their datasets reflect the diversity of the populations they serve. Performance should be validated across demographic groups to catch any disparities early on [1]. Regular bias audits are essential, and any identified issues should prompt immediate updates or retraining of the AI systems [1].
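To make the idea of validating performance across demographic groups concrete, here is a minimal Python sketch. It assumes the audit metric is sensitivity (the share of true cases the model catches) and that a gap of more than 0.05 from the best-performing group should be flagged; the function names, the record layout, and the threshold are all illustrative assumptions rather than anything prescribed by the cited sources.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Compute sensitivity per group from (group, y_true, y_pred) tuples.

    Only records with y_true == 1 (actual positive cases) contribute.
    """
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            if y_pred == 1:
                true_pos[group] += 1
            else:
                false_neg[group] += 1
    groups = set(true_pos) | set(false_neg)
    return {g: true_pos[g] / (true_pos[g] + false_neg[g]) for g in groups}


def flag_disparities(metric_by_group, max_gap=0.05):
    """Return groups whose metric trails the best group by more than max_gap."""
    best = max(metric_by_group.values())
    return sorted(g for g, v in metric_by_group.items() if best - v > max_gap)


# Hypothetical audit data: (demographic group, actual label, model prediction)
records = (
    [("A", 1, 1)] * 9 + [("A", 1, 0)] * 1
    + [("B", 1, 1)] * 7 + [("B", 1, 0)] * 3
)
rates = sensitivity_by_group(records)
print(flag_disparities(rates))  # groups that may need review or retraining
```

In practice an audit would likely track several metrics (specificity, calibration, and so on) and use thresholds agreed with clinical and ethics stakeholders; this sketch only shows the basic shape of a per-group comparison.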

Managing data quality isn't a one-time task - it requires constant oversight and input from interdisciplinary teams. A strong commitment to fairness and accuracy is crucial to ensure AI tools remain reliable and equitable over time [7].

Human Factors and Usability Engineering

AI systems often fail when they don’t align with how clinicians actually work. That’s where human factors engineering comes in. This approach focuses on creating interfaces that reduce errors, fit naturally into clinical workflows, and remain easy to use - even in high-pressure situations. Simplicity is especially important in critical moments. For example, emergency response systems should be intuitive and efficient, like a one-button alert system designed to match natural reactions during stress [9].

Another crucial element is interoperability. AI tools must integrate seamlessly with existing systems like Electronic Health Records (EHRs) to avoid inefficiencies, redundant testing, or potential errors [1]. The goal is to enhance, not replace, human decision-making - AI should empower clinicians to make better, faster decisions without losing the human touch [9].

"Only by involving patients, SPs and physicians in AI development can these technologies unfold their full potential to deliver equitable, interpretable and patient-centered healthcare." - Zeineb Sassi et al. [7]

Trust is built when clinicians have a meaningful role in validating and guiding AI systems. This not only grounds the technology in clinical expertise but also reduces skepticism about opaque or unreliable algorithms [1]. By aligning AI tools with real-world workflows, they can seamlessly support and enhance clinical decision-making.

Governance and Continuous Oversight of AI Safety

Creating AI systems is just the beginning; ensuring their safety requires ongoing governance and vigilance. Healthcare organizations must implement structured frameworks that provide clear oversight, address risks throughout the AI lifecycle, and respond swiftly when problems arise. Even the most carefully designed AI tools can deviate from their original purpose or introduce unexpected risks over time. A well-thought-out framework establishes the groundwork for effective committees and continuous monitoring.

Establishing AI Governance Committees

An AI governance committee plays a critical role in overseeing how AI systems are chosen, validated, and monitored. These committees should bring together a diverse group of experts, including clinical leaders, IT security professionals, data scientists, ethicists, legal advisors, and risk management specialists. Their responsibilities go beyond the initial approval phase, encompassing ongoing oversight of AI applications, vendor partnerships, clinical rollouts, and post-deployment performance tracking [11].

The committee must develop policies to ensure AI systems remain secure, ethical, compliant, and aligned with clinical standards. This involves merging IT governance with healthcare ethics and clinical best practices [10]. By fostering accountability, these governance structures promote the safe integration of AI while prioritizing patient safety [13]. Embedding these committees into the broader healthcare ecosystem ensures that both domain-specific knowledge and AI expertise are consistently applied [11].

Managing Risks Throughout the AI Lifecycle

AI risk management is an ongoing process that spans the entire lifecycle - from the initial concept to deployment and eventual retirement. A robust framework should address three essential stages: Concept Review and Approval, Design & Deployment, and Continuous Monitoring & Validation [15]. At each stage, organizations should document the model's purpose, data sources, outputs, and decision-making processes to maintain transparency and accountability [15].
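One way to picture this documentation requirement is as a structured record that travels with the model through each stage. The Python sketch below is illustrative only: the `ModelRecord` class, its field names, and the example model are hypothetical, and the stage names are simply taken from the three stages listed above.

```python
from dataclasses import dataclass, field
from enum import Enum


class Stage(Enum):
    CONCEPT_REVIEW = "Concept Review and Approval"
    DESIGN_DEPLOYMENT = "Design & Deployment"
    MONITORING = "Continuous Monitoring & Validation"


@dataclass
class ModelRecord:
    """Hypothetical documentation record for one AI model."""
    name: str
    stage: Stage
    purpose: str = ""
    data_sources: list = field(default_factory=list)
    outputs: str = ""
    decision_process: str = ""

    def missing_fields(self):
        """List the documentation fields that are still empty."""
        required = {
            "purpose": self.purpose,
            "data_sources": self.data_sources,
            "outputs": self.outputs,
            "decision_process": self.decision_process,
        }
        return [name for name, value in required.items() if not value]


# Example: a record that is not yet ready to advance to the next stage
record = ModelRecord(
    name="sepsis-risk-score",  # hypothetical model name
    stage=Stage.CONCEPT_REVIEW,
    purpose="Flag inpatients at elevated risk of sepsis for clinician review",
    outputs="Risk score between 0 and 1",
)
print(record.missing_fields())  # fields to complete before sign-off
```

A governance committee could use a check like `missing_fields()` as a simple gate before a model moves from one stage to the next, though real review processes would involve far more than field completeness.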

This framework must remain adaptable, keeping pace with advancements in AI capabilities, emerging technologies like generative AI, and unexpected risks [12]. Integrating AI governance into existing clinical governance, digital health, risk management, and quality improvement processes ensures consistency and alignment across the organization [12][15]. Such integration helps healthcare leaders navigate complex regulations and ethical challenges effectively.

Continuous Monitoring and Incident Response

Ongoing monitoring is essential because AI models can deteriorate over time, potentially compromising their accuracy, responsiveness, and fairness [1][14]. Regular performance checks, near-miss reporting, and root-cause analyses are critical for identifying and addressing issues before they impact patients. Evaluating performance across different demographic groups also helps ensure AI systems remain fair and effective.
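A regular performance check can be as simple as comparing recent accuracy against the level measured at validation. The following Python sketch assumes a rolling window of the most recent cases and a fixed tolerance; the `DriftMonitor` class, the window size, and the 0.05 tolerance are illustrative assumptions, and production systems would typically use more robust statistical tests.

```python
from collections import deque


class DriftMonitor:
    """Flag when rolling accuracy falls below a validated baseline."""

    def __init__(self, baseline_accuracy, window=100, tolerance=0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        # Keep only the most recent `window` outcomes
        self.outcomes = deque(maxlen=window)

    def record(self, prediction, actual):
        """Store whether the model's prediction matched the confirmed outcome."""
        self.outcomes.append(prediction == actual)

    def rolling_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def drifted(self):
        """Report drift only once the window is full, to avoid noisy early alerts."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.baseline - self.rolling_accuracy() > self.tolerance


# Example: accuracy validated at 0.90, checked over the last 10 cases
monitor = DriftMonitor(baseline_accuracy=0.90, window=10)
for prediction, actual in [(1, 1)] * 6 + [(1, 0)] * 4:
    monitor.record(prediction, actual)
print(monitor.rolling_accuracy(), monitor.drifted())
```

The same pattern could be run separately for each demographic group so that a decline affecting only one population is not hidden by the overall average.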

Post-deployment evaluations should be continuous, with clear protocols for addressing problems as they arise. Organizations should define escalation procedures that direct critical findings to relevant stakeholders, including members of the AI governance committee, for prompt action. Centralized dashboards can provide real-time insights into system performance [15]. Through diligent oversight, healthcare leaders can maintain a proactive approach to AI risk management, keeping patient safety at the core of every decision.
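An escalation procedure can be written down as an explicit routing table so there is no ambiguity about who hears about which findings. The Python sketch below is a hypothetical example: the severity levels, role names, and `route_finding` function are assumptions made for illustration, and each organization would define its own tiers and recipients.

```python
from enum import IntEnum


class Severity(IntEnum):
    LOW = 1
    MODERATE = 2
    CRITICAL = 3


# Hypothetical routing table: who is notified at each severity level
ESCALATION_ROUTES = {
    Severity.LOW: ["model_owner"],
    Severity.MODERATE: ["model_owner", "clinical_lead"],
    Severity.CRITICAL: ["model_owner", "clinical_lead", "ai_governance_committee"],
}


def route_finding(summary, severity):
    """Build a notification record for a monitoring finding."""
    return {
        "summary": summary,
        "severity": severity.name,
        "notify": list(ESCALATION_ROUTES[severity]),
    }


finding = route_finding("Sensitivity dropped for one patient group", Severity.CRITICAL)
print(finding["notify"])
```

Keeping the table in one place also makes it easy for the governance committee to review and update it as roles change.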

Integrating Cybersecurity into AI Risk Management

In healthcare, ensuring AI safety and cybersecurity work together is essential. Federal agencies have approved over 1,200 AI-powered medical devices, mainly in radiology, cardiology, and pathology, making the protection of patient data a critical priority [1]. Personal medical data is especially valuable, fetching over 10 times the price of credit card data on the black market, with each breached record costing around $165 on average [18]. This underscores the need for a thorough risk evaluation that connects clinical outcomes to robust cybersecurity measures.

Risk Assessment for AI in Healthcare

Assessing AI risks in healthcare requires a focus on clinical impact, data sensitivity, and system criticality, paired with continuous monitoring. High-stakes decisions that affect mortality or morbidity demand stringent cybersecurity measures alongside clinical validation to guard against data manipulation and unauthorized access during post-market surveillance [1]. Automated monitoring systems can proactively identify near misses and key risk indicators [8]. Tracking metrics such as threat detection rates, access control efficiency, and incident response times provides a clearer picture of how cybersecurity enhances system resilience [1]. A well-executed risk assessment also sets the stage for managing third-party responsibilities effectively.
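Metrics like threat detection rate and incident response time are straightforward to compute once incidents are logged consistently. The Python sketch below assumes a very simple incident record with a detection flag and a response time in minutes; the `Incident` class and both function names are hypothetical, and real security tooling would capture much richer data.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Incident:
    """Hypothetical log entry for one security event."""
    detected: bool
    response_minutes: Optional[float] = None  # None if never responded to


def detection_rate(incidents):
    """Share of logged incidents that monitoring detected."""
    if not incidents:
        return None
    return sum(i.detected for i in incidents) / len(incidents)


def mean_response_minutes(incidents):
    """Average response time across detected incidents with a recorded time."""
    times = [
        i.response_minutes
        for i in incidents
        if i.detected and i.response_minutes is not None
    ]
    return mean(times) if times else None


# Example log: three detected incidents and one found only in a later review
log = [
    Incident(True, 30),
    Incident(True, 90),
    Incident(False),
    Incident(True, 60),
]
print(detection_rate(log), mean_response_minutes(log))
```

Tracked over time, these two numbers give a rough view of whether security controls around an AI system are improving or slipping.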

Vendor and Third-Party Risk Management

Holding vendors accountable is an integral part of a broader governance strategy. Third-party AI vendors must meet strict safety, privacy, and security standards. Developers should involve clinicians at every stage - from defining clinical tasks and validating system interpretability to piloting AI tools in real-world workflows - to address safety and bias issues [1]. Outcomes-based contracts, which tie funding to measurable improvements in patient outcomes, can incentivize the development of safer and more effective AI tools [1]. Additionally, requiring representative training data helps produce fair and accurate results across diverse populations. Transparency is essential, especially as over 60% of healthcare professionals have expressed concerns about adopting AI due to issues like data security and lack of clarity [17].

Technical Safeguards and Training

Beyond vendor management, technical controls play a key role in securing AI systems. Network monitoring and access controls help detect and limit unauthorized AI applications, while multi-factor authentication for platforms handling Protected Health Information (PHI) and detailed audit trails enhance accountability [18]. Following interoperability standards, such as those inspired by TEFCA, allows AI systems to integrate smoothly with various electronic health records while safeguarding patient privacy [1].

Equally important is training staff to work effectively with AI systems. Educational programs covering basic AI concepts, machine learning principles, potential risks, and mitigation techniques can improve clinician confidence and reduce issues like algorithm aversion [1][7][16]. Playbooks outlining how to detect, respond to, and recover from AI cyber incidents - such as model poisoning, data corruption, and adversarial attacks - are critical. With healthcare data breaches averaging $9.8 million in costs, these investments are essential for protecting both patients and organizations [18].

Conclusion: Key Takeaways for Human-Centered AI Safety

To wrap up, a human-centered approach to AI safety in healthcare hinges on a few essential principles and actionable strategies that keep both patients and clinicians at the forefront.

Core Principles and Practical Strategies

At its heart, human-centered AI safety revolves around safety, equity, and transparency. These guiding principles shape strategies that address real-world needs. AI systems should be developed with humans as collaborators, not just end-users. This involves weaving human values into every design choice: working directly with clinicians and patients during the design process, ensuring datasets represent diverse populations, and applying human factors engineering to make tools both effective and user-friendly. Together, these efforts aim to create AI systems that enhance health outcomes while adhering to ethical standards [1][3][5]. However, this approach also demands constant oversight to adapt to new challenges as they arise.

Continuous Governance and Cybersecurity: A Must-Have

AI systems aren't static - they evolve due to shifts in patient demographics, clinical practices, or software updates. Without ongoing governance, these changes can lead to safety risks [1]. Regular monitoring, such as bias audits and gathering feedback from stakeholders, plays a critical role in addressing these vulnerabilities [1].

As one expert puts it:

"Technology alone cannot create a culture of safety. That responsibility rests with leaders who model accountability, allocate resources, and communicate the importance of safety as a shared organizational value" [9].

In tandem with governance, robust cybersecurity measures are vital. They protect patient data while ensuring systems remain compatible and secure.

Actionable Steps for Healthcare Leaders

For healthcare leaders, turning these strategies into action is key. Start by setting up AI governance committees that bring together clinicians, patients, and cybersecurity specialists. Establish systems for post-market surveillance to track AI performance in real-world clinical environments [1]. Negotiate outcomes-based contracts with vendors to ensure accountability, and provide staff with training on AI basics, potential risks, and mitigation strategies [1].

Ultimately, the success of AI in healthcare depends on how well it is governed, monitored, and integrated. By keeping human-centered design principles at the core and maintaining vigilant oversight, healthcare organizations can unlock AI's potential while safeguarding what matters most: patient safety and high-quality care [19].

FAQs

How can healthcare organizations create AI systems that are fair and free from bias?

Healthcare organizations can work toward creating fair and unbiased AI systems by starting with diverse and representative datasets. These datasets should mirror the populations the technology is intended to serve, ensuring that no group is left out or misrepresented. Including clinicians and other key stakeholders in the design process is equally important. Their involvement helps ensure the technology addresses practical, real-world challenges and avoids unintended negative effects.

Regular monitoring and evaluation play a vital role in maintaining fairness. By performing routine performance assessments and addressing any disparities that emerge, organizations can refine their AI tools to better support equitable healthcare outcomes. Additionally, emphasizing transparency and accountability builds trust, making it easier for patients and providers to embrace these technologies.

How do clinicians and patients contribute to the development of AI systems in healthcare?

Clinicians and patients play a crucial role in developing AI systems for healthcare. Their input ensures these technologies focus on safety, ethical considerations, and practical usability. Clinicians contribute their medical expertise to verify that AI recommendations align with established standards and meet the needs of patient care.

Patients, meanwhile, provide essential feedback on how user-friendly and accessible these systems are, helping to create tools that are easy to navigate and responsive to their requirements. By working closely with both groups, AI systems can be designed to meet human-centered objectives, ultimately leading to safer and more effective healthcare solutions.

Why is it important to continuously monitor AI systems in healthcare?

Continuous monitoring plays a key role in keeping AI systems in healthcare safe, accurate, and dependable. It ensures that these systems remain aligned with ethical principles and prioritize patient well-being, even as circumstances or data change.

With consistent oversight, healthcare organizations can promptly detect and address risks such as bias, errors, or unexpected outcomes. Taking this proactive stance not only safeguards patient safety but also strengthens trust and allows systems to evolve alongside the demands of real-world clinical environments.