The Human Factor: Why People Remain Critical in AI-Driven Organizations

Post Summary

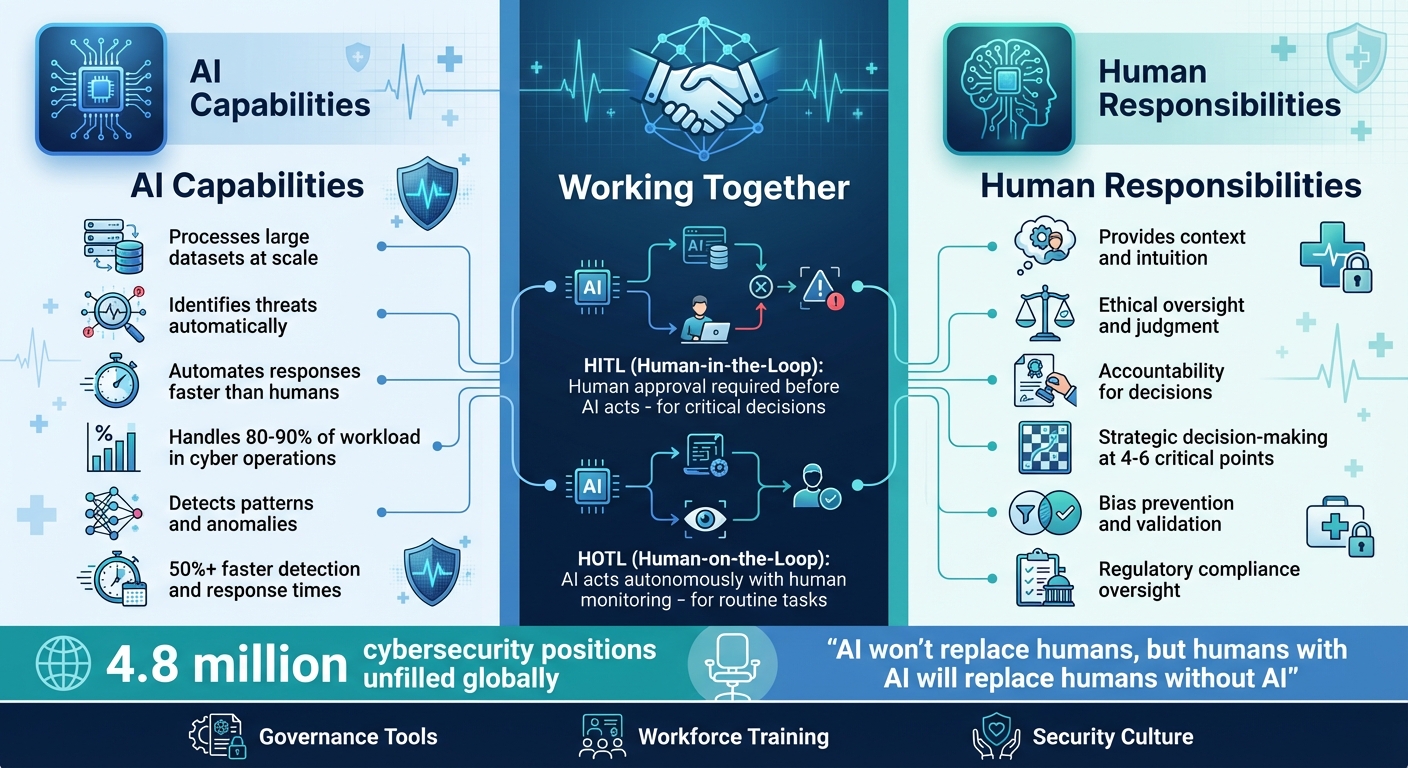

AI is transforming industries, but it can't replace human judgment. While AI excels at analyzing data and automating tasks, people are essential for ethical decision-making, risk assessment, and accountability. In fields like healthcare cybersecurity, where patient safety is paramount, collaboration between humans and AI is key.

Here’s what you need to know:

- AI Strengths: Processes large datasets, identifies threats, and automates responses faster than humans.

- Human Expertise: Provides context, intuition, and ethical oversight that AI lacks.

- Collaboration Models: Human-in-the-Loop (HITL) for critical decisions and Human-on-the-Loop (HOTL) for monitoring automated tasks.

- Governance Tools: Platforms like Censinet RiskOps™ ensure humans remain in control, with clear oversight and accountability.

- Workforce Skills: Teams need training to operate AI systems while addressing emerging risks and compliance challenges.

AI and humans work best together. Automation improves efficiency, but human oversight ensures ethical, safe, and responsible outcomes.

Human vs AI Capabilities in Healthcare Cybersecurity

What AI Cannot Replace: Core Human Responsibilities

AI is undeniably powerful at crunching data and identifying patterns, but in the realm of healthcare cybersecurity, it can't replace the human touch. Judgment, accountability, and ethics remain firmly in the hands of people. Below, we’ll explore the key roles humans play in complementing AI’s capabilities.

Leadership Accountability and Regulatory Compliance

Chief Information Officers (CIOs), Chief Information Security Officers (CISOs), and AI governance committees bear the ultimate responsibility for patient safety and for compliance with regulations such as HIPAA, frameworks such as those published by NIST, and other federal standards. These leaders must carefully weigh AI’s potential against the need for human-centered care by establishing strong governance, risk management, and compliance frameworks. Their job is to prevent discrimination, protect patients, and make tough calls about AI deployment.

For example, human leaders decide when and how to implement AI, assess acceptable risks, and determine the appropriate response to system failures. These decisions can't be left to algorithms - they require human accountability at every step.

On top of regulatory responsibilities, leaders must stay vigilant against ethical pitfalls, particularly AI’s tendency to reflect and amplify biases.

Ethical Oversight and Bias Prevention

AI systems, especially in high-stakes environments like healthcare, are not immune to bias. That’s where human oversight becomes critical. Skilled reviewers are essential for spotting when AI systems make inappropriate recommendations, misuse sensitive data, or produce biased outcomes that could harm patients.

Humans must have the authority to step in and override automated decisions when necessary. This ensures that technology serves people, not the other way around, and that health, safety, and individual rights are protected.

Creating a Security-Aware Culture

A strong security culture starts with people. Human errors - often exploited by attackers - are a major cause of data breaches [4]. Leaders play a crucial role in addressing these vulnerabilities by tackling biases like familiarity bias (trusting what feels familiar) and optimism bias (underestimating risks).

Building this culture involves more than just policies. Leaders need to clearly communicate what AI can and cannot do, guide organizations through the changes that come with adopting new technologies, and foster trust by being transparent about AI-related risks.

Ongoing training and open conversations are key to this process. By educating teams and encouraging dialogue, organizations can create an environment where AI complements human judgment, rather than replacing it.

How to Balance Automation with Human Control

Striking the right balance between AI automation and human oversight is crucial in healthcare, where patient safety and regulatory compliance are non-negotiable. Despite the growing presence of AI, human expertise remains irreplaceable. The challenge lies in determining when AI should operate independently and when human intervention is necessary.

Human-in-the-Loop vs. Human-on-the-Loop Models

To maintain control over AI systems, two main oversight models are commonly used: Human-in-the-Loop (HITL) and Human-on-the-Loop (HOTL).

- HITL requires human approval before AI decisions are implemented.

- HOTL allows AI to act on its own but keeps humans in a monitoring role, ready to step in if needed [5][6][7].

In healthcare cybersecurity, HITL is ideal for high-stakes decisions. For instance, when AI detects a vulnerability in a medical device, such as an infusion pump, a security analyst reviews the flagged issue, confirms the risk, and approves the appropriate actions. On the other hand, HOTL is better suited for lower-risk tasks, like routine log monitoring or automated patch updates for non-critical systems. In these cases, AI handles the day-to-day work while human teams oversee performance and intervene if problems arise.
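The routing decision described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a Censinet feature or a standard algorithm. It assumes the AI tool emits a numeric risk score between 0 and 1. It also assumes a hypothetical policy: any finding that touches patient-care devices, or that scores at or above a hypothetical 0.7 threshold, requires human approval (HITL). Everything else runs automatically under human monitoring (HOTL). The names `Finding`, `choose_mode`, `handle`, and `HITL_THRESHOLD` are all illustrative.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class OversightMode(Enum):
    HITL = "human-in-the-loop"  # a person must approve before any action is taken
    HOTL = "human-on-the-loop"  # AI acts on its own; people monitor and can intervene


@dataclass
class Finding:
    description: str
    risk_score: float           # hypothetical 0.0-1.0 score produced by an AI tool
    affects_patient_care: bool  # e.g., an infusion pump vs. a non-critical server


# Hypothetical cut-off; a real value would come from the organization's risk appetite.
HITL_THRESHOLD = 0.7


def choose_mode(finding: Finding) -> OversightMode:
    """Send high-stakes findings to human approval and routine ones to monitored automation."""
    if finding.affects_patient_care or finding.risk_score >= HITL_THRESHOLD:
        return OversightMode.HITL
    return OversightMode.HOTL


def handle(finding: Finding, human_approves: Callable[[Finding], bool]) -> str:
    if choose_mode(finding) is OversightMode.HITL:
        # HITL: nothing changes until an analyst explicitly approves the action.
        if human_approves(finding):
            return "remediated after human approval"
        return "held for further human review"
    # HOTL: act automatically, but leave a record for the monitoring team.
    return "remediated automatically; flagged for human monitoring"


pump = Finding("Known vulnerability in infusion pump firmware", 0.55, True)
logs = Finding("Routine log anomaly on a test server", 0.20, False)
print(choose_mode(pump).name, choose_mode(logs).name)  # prints "HITL HOTL"
```

The patient-care flag overrides the score on purpose. A moderate-scoring issue on an infusion pump still goes to a human, which matches the HITL example in the text.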

Effective oversight depends on understanding how the AI system operates. This includes knowing the data it uses, its decision-making process, and its limitations. Transparency is key, as over 60% of healthcare professionals report hesitancy in adopting AI due to concerns about data security and lack of visibility [8]. This highlights the importance of clear, accessible insights into AI systems.

Governance tools like Censinet RiskOps™ and Censinet AI are designed to support both HITL and HOTL models, which helps organizations maintain this balance.

Implementing Governance with Censinet RiskOps™ and Censinet AI

Censinet platforms streamline risk management without sidelining human judgment. For example, Censinet AI speeds up third-party risk assessments by helping vendors complete security questionnaires quickly and by automatically generating summaries of the evidence they submit. Human oversight, however, remains integral.

Risk teams stay in control through customizable rules and review processes. When Censinet AI produces a risk summary, stakeholders review and validate the findings before moving forward. The system acts as "air traffic control" for AI governance, ensuring critical tasks and assessments are routed to the right experts - be it cybersecurity specialists, legal advisors, or members of an AI governance committee.

Meanwhile, Censinet RiskOps serves as a centralized hub, providing real-time data through an AI risk dashboard. This dashboard creates detailed audit trails, documenting who reviewed what, when decisions were made, and why specific actions were taken. Such transparency ensures accountability and compliance while allowing organizations to scale their operations safely and efficiently.
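An audit trail that captures who reviewed what, when, and why is, at its core, an append-only log. The sketch below is hypothetical. It does not show how Censinet RiskOps stores its records. `AuditEntry` and `AuditTrail` are illustrative names. The code makes two assumptions: every decision must carry a written rationale, and entries cannot be edited once recorded.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEntry:
    reviewer: str        # who reviewed
    item: str            # what was reviewed, e.g. an AI-generated risk summary
    decision: str        # e.g. "approved", "rejected", "escalated"
    rationale: str       # why the action was taken
    timestamp: datetime  # when the decision was made (UTC)


class AuditTrail:
    """Append-only log: entries can be added and read, but not edited or deleted."""

    def __init__(self) -> None:
        self._entries: list[AuditEntry] = []

    def record(self, reviewer: str, item: str, decision: str, rationale: str) -> AuditEntry:
        if not rationale.strip():
            # Requiring a reason keeps the "why" from being silently skipped.
            raise ValueError("A rationale is required for every decision")
        entry = AuditEntry(reviewer, item, decision, rationale, datetime.now(timezone.utc))
        self._entries.append(entry)
        return entry

    def history(self, item: str) -> tuple[AuditEntry, ...]:
        # Return an immutable tuple so callers cannot rewrite the trail.
        return tuple(e for e in self._entries if e.item == item)


trail = AuditTrail()
trail.record("security.analyst", "Vendor X AI risk summary", "approved",
             "Evidence matches the vendor's submitted security documentation")
```

Freezing each entry and returning tuples are simple ways to keep reviewers from quietly rewriting history. A production system would also need durable, tamper-evident storage.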

Clearly defined roles are another critical piece of this puzzle, ensuring every AI decision is reviewed by the right person.

Assigning Clear Roles and Responsibilities

Unclear responsibilities around AI workflows can lead to serious vulnerabilities. A RACI matrix (Responsible, Accountable, Consulted, Informed) helps clarify who handles each aspect of AI-related decisions.

For example, in vendor risk management:

- Security analysts are responsible for conducting assessments.

- The CISO is accountable for final approvals.

- IT teams are consulted on technical requirements.

- Executive leadership is informed about high-risk vendors.

In incident response workflows:

- Teams monitoring AI alerts are responsible.

- Those validating and escalating threats are accountable.

- Technical experts are consulted.

- Stakeholders receiving updates are informed.
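The two RACI examples above can be written down as data and checked automatically, which helps catch the unclear responsibilities the section warns about. This is a minimal sketch with hypothetical role labels paraphrased from the lists above. It assumes the common RACI convention that each activity has exactly one Accountable party. For that reason it models incident-response accountability as a single "lead", although the text describes that group in the plural.

```python
RACI = {
    "Vendor risk assessment": {
        "Security analysts": "R",
        "CISO": "A",
        "IT teams": "C",
        "Executive leadership": "I",
    },
    "AI alert incident response": {
        "Alert-monitoring team": "R",
        "Threat validation and escalation lead": "A",
        "Technical experts": "C",
        "Stakeholders": "I",
    },
}


def validate(matrix: dict[str, dict[str, str]]) -> list[str]:
    """Return a list of problems; an empty list means every activity is well-defined."""
    problems = []
    for activity, assignments in matrix.items():
        codes = list(assignments.values())
        unknown = set(codes) - set("RACI")
        if unknown:
            problems.append(f"{activity}: unknown codes {sorted(unknown)}")
        if codes.count("A") != 1:
            problems.append(f"{activity}: needs exactly one Accountable role")
        if "R" not in codes:
            problems.append(f"{activity}: no Responsible role")
    return problems


def roles_with(matrix: dict[str, dict[str, str]], activity: str, code: str) -> list[str]:
    """List the roles holding a given RACI code for one activity."""
    return [role for role, c in matrix[activity].items() if c == code]


print(validate(RACI))                                     # prints []
print(roles_with(RACI, "Vendor risk assessment", "A"))    # prints ['CISO']
```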

Human teams play a vital role in monitoring AI outputs, interpreting complex patterns, and addressing issues that automated tools might miss. They validate AI recommendations, manage escalated incidents, and adapt configurations to evolving threats [9][10][11]. Regular audits of AI systems help catch and resolve errors or biases promptly [12]. Cross-functional teams - including cybersecurity experts, ethicists, legal advisors, and leadership - oversee areas like AI ethics, algorithmic risks, and data governance [11][13][14].

Without proper accountability and escalation processes, organizations risk repeating failures like the 2024 WotNot data breach. This incident exposed critical weaknesses in AI systems and underscored the importance of robust cybersecurity measures [8].

Managing Security Incidents in AI-Enhanced Environments

Traditional incident response plans often fall short when addressing AI-specific threats like compromised AI agents, AI-generated phishing scams, data poisoning, adversarial attacks, and AI-induced data leaks. While AI operates on a massive scale, the ultimate responsibility for strategic decisions still rests with human experts.

The data is striking. In one AI-driven cyber espionage campaign, AI handled 80-90% of the workload, yet human operators still had to intervene at 4-6 critical decision points [15]. This underscores a key point: AI can perform tasks at scale, but humans remain indispensable for guiding strategy. Organizations that effectively integrate AI and automation into their cybersecurity functions report detection and response times that are over 50% faster than their peers [17].

Updating Incident Response Procedures for AI Threats

Standard incident response protocols weren't built with AI-specific challenges in mind. For example, healthcare organizations now need to develop specialized AI incident response playbooks to tackle scenarios such as model drift, compromised AI pipelines, prompt injection attacks, and unauthorized data exposure via AI systems [19]. These playbooks must include clear human checkpoints, especially during critical stages like containment, where decisions could directly impact patient care or regulatory compliance.

When an AI system flags suspicious activity, human analysts must quickly verify the threat and evaluate its implications for patient safety and compliance. Take, for instance, a scenario where AI detects a compromised device. While the system can automatically isolate the threat, human experts must assess whether this action might disrupt patient care or create greater risks than the threat itself.

Organizations should also implement fail-closed kill switches that halt AI outputs until human authorization is provided to reactivate them [19]. Quarterly red team exercises are crucial for uncovering weaknesses in AI response protocols [19]. These measures not only help contain threats but also improve systems over time.
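A fail-closed kill switch has a simple core behavior. When the switch is off, or when anything goes wrong, no AI output is released, and only a named human can turn it back on. The sketch below is a hypothetical, single-process illustration of that idea. `KillSwitch`, `trip`, `reactivate`, and `release` are illustrative names, not an API from the cited source. The sketch also assumes the switch starts in the closed state.

```python
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class KillSwitch:
    """Fail-closed gate: AI output is released only while a human has explicitly enabled it."""

    def __init__(self) -> None:
        self._enabled = False  # starts closed: nothing flows until a person enables it
        self.approved_by: Optional[str] = None
        self.last_trip_reason: Optional[str] = None

    @property
    def enabled(self) -> bool:
        return self._enabled

    def trip(self, reason: str) -> None:
        # Halt all AI output immediately; only a human can turn it back on.
        self._enabled = False
        self.last_trip_reason = reason

    def reactivate(self, approver: str) -> None:
        if not approver.strip():
            raise PermissionError("Reactivation requires a named human approver")
        self._enabled = True
        self.approved_by = approver

    def release(self, produce_output: Callable[[], T]) -> Optional[T]:
        if not self._enabled:
            return None  # blocked: fail closed
        try:
            return produce_output()
        except Exception as exc:
            # Any unexpected failure trips the switch instead of letting output through.
            self.trip(f"error while producing output: {exc!r}")
            return None
```

The key design choice is that errors close the gate rather than open it. A crash in the AI pipeline therefore produces silence, not unreviewed output, until someone investigates and reactivates the switch.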

Learning from Incidents Through Human Analysis

After an incident has been handled under these updated protocols, post-incident analysis is the next critical step in strengthening defenses. While AI can generate detailed reports almost instantly, it cannot replace the strategic insights gained from human-led after-action reviews. These reviews evaluate how well AI tools performed, identify areas for algorithm improvement, and refine policies based on the vulnerabilities the incident exposed [16].

Human analysts offer a level of contextual understanding that AI lacks. They consider broader factors such as business impact, geopolitical dynamics, and attacker motivations [16][17]. Analysts ask questions that AI simply cannot: Why was this specific vulnerability targeted? What does this reveal about emerging threat trends? How can defenses be adjusted to prevent similar attacks in the future? This type of creative problem-solving is essential for addressing novel threats that fall outside AI’s programmed responses [16][17].

The process doesn’t end with the initial response. A robust feedback loop is vital for long-term success. Security teams must identify AI mistakes, flag inaccuracies, and refine decision-making through structured validation processes [18]. For example, when AI misclassifies a threat or misses a critical indicator, human analysts document the error and update the system’s training data. This continuous improvement ensures that AI systems evolve alongside the ever-changing threat landscape. With 93% of cybersecurity professionals anticipating AI-enabled threats to impact their organizations [17], this human-led refinement process is not just helpful - it’s essential for staying ahead of increasingly complex attacks.
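The feedback loop described above means logging every case where an analyst overrules the AI and then reusing those corrections. The sketch below is a hypothetical illustration under stated assumptions; it is not a specific product's workflow. `FeedbackLog` and its methods are illustrative names. The code assumes alerts carry simple string labels such as "benign" or "malicious". It also assumes the corrected labels are exported for a later retraining step, which the sketch does not implement.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Correction:
    alert_id: str
    ai_label: str       # what the AI concluded
    analyst_label: str  # what the human analyst determined
    note: str           # documented reason for the correction


class FeedbackLog:
    def __init__(self) -> None:
        self._corrections: list[Correction] = []

    def review(self, alert_id: str, ai_label: str, analyst_label: str, note: str = "") -> bool:
        """Record the analyst's verdict; return True if it disagrees with the AI."""
        if ai_label == analyst_label:
            return False
        self._corrections.append(Correction(alert_id, ai_label, analyst_label, note))
        return True

    def training_examples(self) -> list[tuple[str, str]]:
        # Analyst-corrected labels, ready to feed into the next retraining cycle.
        return [(c.alert_id, c.analyst_label) for c in self._corrections]

    def error_patterns(self) -> Counter:
        # Counts of (AI label, correct label) pairs, e.g. how often real threats were missed.
        return Counter((c.ai_label, c.analyst_label) for c in self._corrections)
```

The `error_patterns` counts give reviewers a quick view of whether the AI mostly misses threats or mostly raises false alarms. That distinction shapes what the next round of tuning should target.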


Developing Workforce Skills for AI-Integrated Security

The cybersecurity field is grappling with a massive workforce gap - 4.8 million positions remain unfilled globally, and nearly half of all jobs (47%) are vacant [1]. For healthcare organizations incorporating AI into their security operations, this shortage highlights an urgent need: upskill existing teams while building new capabilities. The challenge isn't just about hiring; it's also about equipping current staff to work effectively with AI while maintaining critical oversight. Here's a closer look at the skills and strategies needed to meet this demand.

Skills Needed for AI-Era Cybersecurity

Healthcare cybersecurity professionals now need a blend of traditional expertise and advanced AI knowledge, along with a firm grasp of healthcare regulations. Teams must understand how to operate AI systems, pinpoint potential weaknesses, and interpret AI-generated outputs within the context of patient care and compliance.

New roles are also emerging to address AI-specific risks. These positions demand strong cybersecurity fundamentals alongside an understanding of AI architectures and the threats unique to them, such as data poisoning. People in these roles must also ensure that AI-driven decisions do not inadvertently expose sensitive health data or create compliance issues.

Crafting Targeted Training Programs

Generic cybersecurity training simply doesn’t cut it in an AI-driven environment. Healthcare organizations must design role-specific training programs tailored to the unique challenges posed by AI. For instance, IT practitioners need hands-on experience in identifying AI anomalies, while security analysts must develop techniques to mitigate risks tied to AI system manipulation. These programs should emphasize not only technical skills but also ethical decision-making.

Regular updates to training are essential to keep pace with advancements in AI and emerging threats. The objective is twofold: to ensure technical competence and to nurture critical thinking skills. Staff must be prepared to question AI outputs and take action when system recommendations could jeopardize patient safety.

Building AI Governance Committees

Structured oversight is key to maintaining human control over AI systems, and cross-functional AI governance committees are an effective solution. These committees bring together representatives from IT, security, clinical leadership, compliance, legal, and ethics to evaluate AI deployments, ensuring they align with organizational goals and regulatory standards [20]. By incorporating diverse perspectives, these groups can identify potential blind spots that might otherwise go unnoticed.

These committees also extend existing risk assessment frameworks to cover AI-specific considerations and review those considerations rigorously [20]. Tools like Censinet RiskOps can centralize this oversight process, routing critical AI risk findings to the appropriate stakeholders for review and action. This ensures accountability by making sure the right experts address the right risks at the right time.

Conclusion

Balancing the strengths of AI with human expertise is essential, as highlighted earlier. Here's why human oversight remains irreplaceable.

While AI can process massive amounts of data and identify patterns far faster than humans, it lacks the intuition, ethical judgment, and nuanced decision-making that experienced professionals bring to the table [3]. The real power lies in combining the two: AI handles repetitive tasks efficiently, while humans step in for strategic decisions, ethical considerations, and complex problem-solving [3][1]. As Karim Lakhani from Harvard Business School aptly puts it:

"AI won't replace humans, but humans with AI will replace humans without AI" [2].

A great example of this synergy is how Censinet RiskOps operates. It uses AI to flag critical risks and forward them to the appropriate stakeholders for review. However, it’s the human oversight that validates these findings and ensures decisions align with compliance requirements and organizational values [21][18]. This "trust but verify" approach not only enhances accountability but also ensures that AI's efficiency is used responsibly.

For organizations, especially in sectors like healthcare grappling with workforce shortages and the growing threat of cybercrime [22], the answer isn’t choosing between human expertise and AI. Instead, investing in workforce training and establishing strong governance ensures AI can be deployed safely and effectively.

FAQs

Why is human oversight essential in AI-powered healthcare systems?

Human involvement is crucial in AI-driven healthcare to ensure outcomes are accurate, biases in algorithms are addressed, and the subtle contexts of patient care are properly understood. While AI is exceptional at analyzing large datasets, it falls short when it comes to grasping human emotions, ethical dilemmas, and the complexities of individual cases.

Healthcare professionals are essential for verifying AI-generated recommendations, ensuring adherence to regulations like HIPAA, and preserving trust in medical decisions. By blending AI's precision with human expertise, organizations can enhance patient care while reducing risks and potential errors.

How do humans help prevent AI bias and ensure ethical decision-making?

Humans are indispensable in steering AI systems to function responsibly and fairly. They establish ethical guidelines, carefully review AI outputs to spot and address biases, and set firm boundaries for how these systems can and should be used. Through transparency and accountability, people help foster trust in AI while ensuring it aligns with both organizational goals and broader societal values.

Moreover, human oversight plays a key role in handling complex decisions that AI might struggle to grasp, particularly in areas like managing risks or navigating ethical challenges. By combining human judgment with AI's capabilities, we can achieve outcomes that are both thoughtful and equitable.

How can organizations maintain the right balance between AI automation and human oversight?

To strike a healthy balance between AI automation and human oversight, organizations should focus on creating systems that encourage collaboration between people and technology. This involves incorporating clear decision checkpoints, such as confirmation prompts or override options, and utilizing transparent dashboards to keep human operators well-informed.

Human involvement plays a critical role in tasks like training AI systems, monitoring their performance, and making decisions that require context or ethical judgment. This approach helps uphold ethical standards, minimizes the risk of automation bias, and fosters trust in the system. By blending the strengths of AI with human expertise, organizations can build systems that are both reliable and flexible.