Medical AI Under Siege: Protecting Healthcare Systems from Cyber Threats

Post Summary

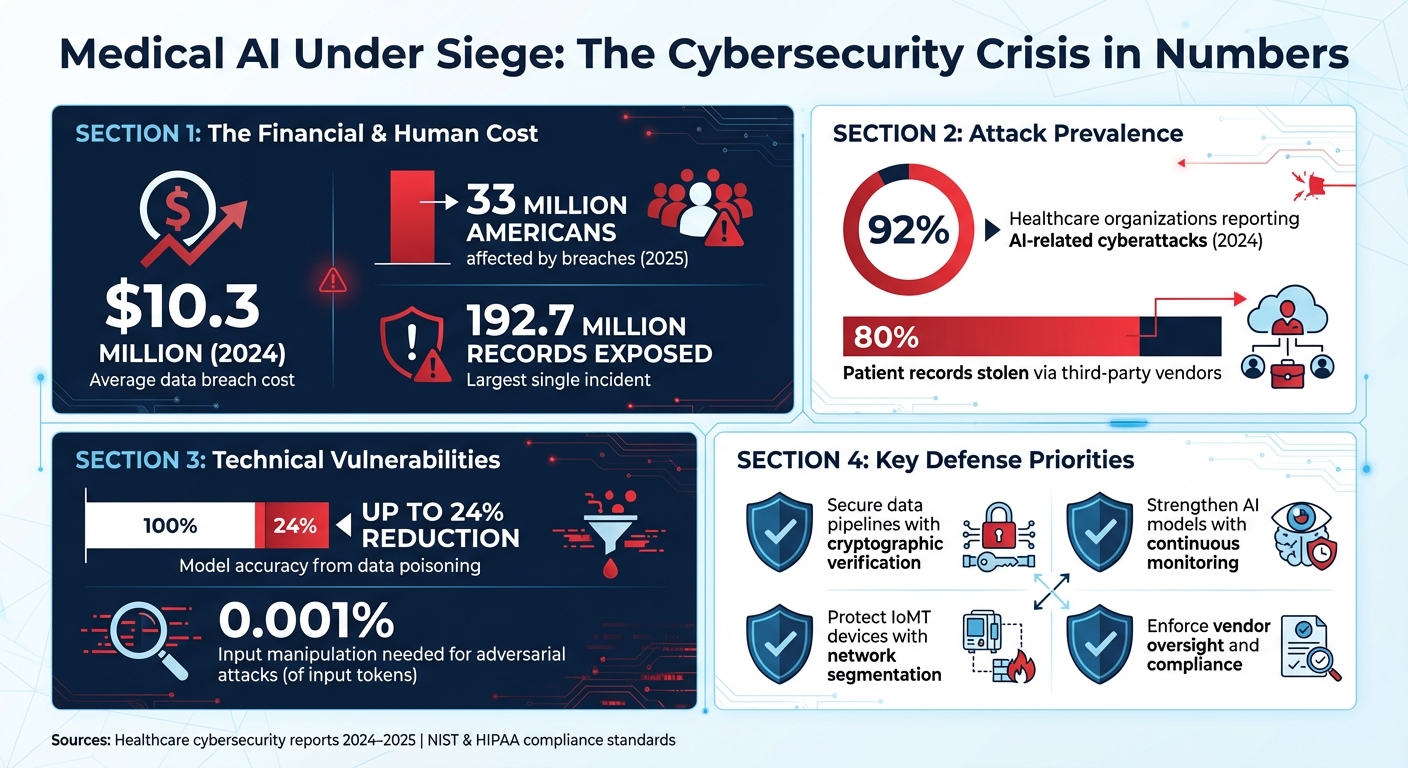

AI is transforming healthcare but also exposing it to dangerous cyber risks. From data breaches costing $10.3 million on average in 2024 to fatal ransomware attacks, the stakes are higher than ever. Cybercriminals target AI systems through methods like data poisoning and adversarial attacks, which can lead to diagnostic errors or compromised medical devices. In fact, 92% of healthcare organizations reported AI-related cyberattacks last year, and vulnerabilities in third-party vendors accounted for 80% of stolen patient records.

Key Takeaways:

- Cyber Threats: AI systems face risks like data manipulation, adversarial attacks, and model theft.

- Impact: Breaches affected 33 million Americans in 2025, with one incident exposing 192.7 million records.

- Defense Strategies: Secure data pipelines, strengthen AI models, and protect IoMT devices with encryption, segmentation, and continuous monitoring.

- Compliance: Align with NIST and HIPAA standards while addressing gaps in AI-specific regulations.

To safeguard medical AI, healthcare organizations must adopt layered defenses, monitor systems continuously, and tighten vendor oversight. The future of patient care depends on robust cybersecurity measures.

Medical AI Cybersecurity Threats: Key Statistics and Impact on Healthcare 2024-2025

Cyber Risks Targeting Medical AI Systems

Medical AI systems are increasingly vulnerable to cyber threats, spanning every stage from data collection to real-time decision-making. These risks not only jeopardize patient safety but also erode trust in the technology. Key concerns include data integrity issues, manipulation during operations, and vulnerabilities in connected devices and third-party services.

Data and Training Pipeline Vulnerabilities

Sensitive patient data is a prime target for breaches, and compromised datasets can have significant consequences. For instance, data poisoning - where attackers intentionally corrupt training data - can reduce model accuracy by as much as 24%[3]. In clinical settings, even slight inaccuracies can have life-threatening outcomes. Another pressing issue is model theft. In model theft, attackers copy a proprietary model, either by exfiltrating its files or by reconstructing it from repeated queries. They can then probe the copy offline for further weaknesses.

Model Manipulation and Inference-Time Attacks

Once deployed, AI systems remain open to manipulation. Adversarial attacks introduce tiny, almost imperceptible changes (as small as 0.001% of input tokens) that can trick systems into making diagnostic or treatment errors[2]. Prompt injection attacks, meanwhile, hide malicious instructions inside content the model processes, such as a clinical note or an uploaded document. These hidden instructions can override the system's own instructions and bypass its safety protocols. Such manipulations highlight the need for defenses that operate at inference time, not just during training.

AI-Enabled Medical Device Threats

AI-powered medical devices, which play a direct role in patient care, are particularly attractive targets for attackers. Manipulating these devices can lead to catastrophic outcomes. As cybersecurity expert Tapan Mehta emphasizes, tampering with a medical device could literally be a matter of life or death[4].

Identity and Third-Party Ecosystem Risks

Medical AI systems often depend on user credentials, vendor platforms, and third-party services. Weak links in any of these areas can open the door to attackers, enabling them to access sensitive data or disrupt entire healthcare networks. These interconnected systems amplify the potential impact of a single breach.

Adversarial Use of AI by Attackers

Cybercriminals are also leveraging AI to refine their tactics. They use it to create highly convincing phishing campaigns, automate the discovery of system vulnerabilities, and develop tailored social engineering strategies based on staff behavior. This escalating arms race between attackers and defenders underscores the complexity of securing medical AI systems. Recognizing these diverse threats is a critical step toward developing a comprehensive risk assessment framework.

AI-Specific Cybersecurity Risk Assessment Framework

Understanding the risks specific to AI is the foundation of effective protection, and that calls for a structured risk assessment framework tailored to medical AI systems. The Health Sector Coordinating Council (HSCC) Cybersecurity Working Group is developing such guidance, expected in 2026. It covers cyber operations, governance, secure-by-design principles, and third-party risk management [5]. The urgency is clear: in 2024, 92% of healthcare organizations faced AI-related attacks [2]. The aim is to give healthcare providers tools that keep pace with a constantly changing threat landscape.

Mapping AI Assets and Data Flows

Securing medical AI starts with understanding your systems. Healthcare organizations need to create a comprehensive inventory of all AI tools, from diagnostic imaging algorithms to predictive analytics platforms. Tracking how patient data flows - especially when third-party tools and supply chains are involved - is equally important [5]. Formal governance processes should define roles, responsibilities, and oversight throughout the AI lifecycle to ensure accountability.
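An inventory and data-flow map can start as plain structured records. The sketch below is a minimal illustration built on three assumptions. It uses hypothetical `AIAsset` and `DataFlow` record types. It flags any AI tool with no accountable owner. It also flags any flow that carries patient data outside the organization unless that flow has both encryption in transit and a business associate agreement (BAA). The field names and rules are illustrative, not a standard schema.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AIAsset:
    """One entry in a hypothetical AI inventory."""
    name: str
    owner: Optional[str]   # accountable team or role; None means unassigned
    vendor: Optional[str]  # None for tools built in-house
    handles_phi: bool


@dataclass
class DataFlow:
    """A hypothetical record of patient data moving between systems."""
    source: str
    destination: str
    carries_phi: bool
    external: bool              # True if the flow leaves the organization
    encrypted_in_transit: bool
    baa_in_place: bool = False  # business associate agreement with the recipient


def unowned_assets(inventory: List[AIAsset]) -> List[str]:
    """Names of AI tools with no accountable owner (a governance gap)."""
    return [a.name for a in inventory if a.owner is None]


def risky_flows(flows: List[DataFlow]) -> List[DataFlow]:
    """PHI flows leaving the organization without both encryption and a BAA."""
    return [
        f for f in flows
        if f.carries_phi and f.external
        and not (f.encrypted_in_transit and f.baa_in_place)
    ]


if __name__ == "__main__":
    inventory = [
        AIAsset("sepsis-predictor", "clinical-informatics", None, True),
        AIAsset("imaging-triage", None, "ExampleVendor", True),
    ]
    flows = [
        DataFlow("ehr", "sepsis-predictor", True, False, True),
        DataFlow("pacs", "imaging-vendor-cloud", True, True, True, baa_in_place=False),
    ]
    print(unowned_assets(inventory))
    for f in risky_flows(flows):
        print(f"{f.source} -> {f.destination}")
```

Keeping the map as data means the same records can feed later vendor reviews and incident investigations.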

A detailed map of AI assets and data flows is essential for identifying and mitigating AI-specific risks.

Key Risk Dimensions for AI in Healthcare

AI in healthcare brings unique challenges that go beyond those of traditional IT systems. To manage these, organizations should categorize AI tools by their level of autonomy using a five-level scale. This approach aligns the required degree of human oversight with the tool’s risk profile. For instance, a fully autonomous diagnostic system would need stricter controls compared to an AI assistant that only flags issues for human review.

Other crucial considerations include the sensitivity of patient data the AI system accesses, the reliability of its performance, the security of the infrastructure it operates on, and potential integration risks with medical devices. Adopting standards like the NIST AI Risk Management Framework can help organizations establish robust security measures and align governance practices with regulations such as HIPAA and FDA guidelines [5].

Using Censinet RiskOps™ for AI Risk Assessments

Managing AI risks on a large scale requires specialized tools, and Censinet RiskOps™ is designed specifically for healthcare. This platform acts as a centralized hub, consolidating all AI-related policies, risks, and tasks. It simplifies third-party risk assessments by organizing vendor questionnaires, summarizing key documentation, and capturing details about product integration and fourth-party risks.

Censinet RiskOps™ also enhances governance by routing critical findings and tasks to relevant stakeholders, such as members of the AI governance committee, for timely review and action. The platform’s intuitive AI risk dashboard provides real-time insights, ensuring that issues are addressed promptly and by the right teams. This approach combines automation with human decision-making, helping organizations standardize processes like vendor vetting, procurement, continuous monitoring, and lifecycle management for AI systems.

Additionally, the platform supports organizations by offering model contract and business associate agreement clauses tailored for AI vendors. These clauses address critical areas such as data use, handling of protected health information (PHI), and breach reporting. By unifying these elements, organizations can establish a continuous risk management and compliance framework that evolves alongside their AI systems.

Defense Strategies for Securing Medical AI Systems

Securing medical AI systems requires a layered approach that addresses vulnerabilities at every step, from the data fed into training pipelines to the moment AI-powered devices interact with patients. Adversarial attacks show that even minor, deliberate changes to inputs can trigger severe errors. To counter these threats, organizations need strategies that go beyond standard IT security measures. Below, we outline key methods to secure data pipelines, strengthen AI models, and protect AI-enabled devices.

Securing Data Pipelines and Model Development

AI training pipelines are especially vulnerable to data poisoning attacks, where malicious data is introduced to skew AI decision-making, potentially leading to harmful treatment recommendations[2]. To combat this, cryptographic verification of training data sources should be implemented, alongside maintaining detailed audit trails for any data modifications. Keeping training environments separate from production systems is another critical step to prevent contamination.
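One way to implement cryptographic verification and an audit trail is a signed hash manifest, which works in three steps:

1. When a dataset is approved, record a SHA-256 digest of every training file.
2. Sign the manifest with a key held outside the training environment.
3. Re-verify the dataset against the manifest before each training run.

The sketch below uses only Python's standard library (`hashlib`, `hmac`). Two choices are simplifying assumptions: the function names, and the use of an HMAC key rather than public-key signatures. Note that this detects changes made after approval. It does not detect poisoned records that were already present when the manifest was recorded.

```python
import hashlib
import hmac
import json
from pathlib import Path
from typing import Dict, List


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large imaging datasets need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> Dict[str, str]:
    """Map each file's relative path to its SHA-256 digest."""
    return {
        p.relative_to(data_dir).as_posix(): sha256_file(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def sign_manifest(manifest: Dict[str, str], key: bytes) -> str:
    """HMAC the canonical JSON form so the manifest itself cannot be silently edited."""
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_dataset(data_dir: Path, manifest: Dict[str, str],
                   signature: str, key: bytes) -> List[str]:
    """Return a sorted list of problems; an empty list means the dataset matches."""
    if not hmac.compare_digest(sign_manifest(manifest, key), signature):
        return ["manifest signature invalid"]
    current = build_manifest(data_dir)
    problems = [f"missing: {name}" for name in manifest if name not in current]
    problems += [
        f"modified: {name}"
        for name, digest in manifest.items()
        if name in current and current[name] != digest
    ]
    problems += [f"unexpected: {name}" for name in current if name not in manifest]
    return sorted(problems)
```

Storing each manifest and signature alongside a timestamp gives a simple audit trail of every approved dataset version.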

It's also essential to conduct rigorous robustness testing. This involves using adversarial examples and boundary testing to uncover potential weaknesses within the model[2]. Collaboration between cybersecurity and data science teams can enhance both preparedness and response to threats[5]. Additionally, data provenance controls should be in place to track the complete lifecycle of every dataset used in model development.
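Adversarial robustness testing can be as simple as perturbing held-out inputs and counting how often correct predictions flip. The sketch below uses the Fast Gradient Sign Method (FGSM), a standard way to generate adversarial examples. It targets a logistic-regression model because that model's input gradient has a closed form. The choice of model is an illustrative assumption. Production imaging or language models would compute gradients through their ML framework, and a full test suite would add stronger attacks and boundary cases.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Probability of the positive class for a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def _sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w: Sequence[float], b: float, x: Sequence[float], y: int, eps: float) -> List[float]:
    """FGSM for logistic regression.

    For binary cross-entropy the input gradient is (p - y) * w, so each
    feature is nudged by eps in the direction that increases the loss.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * _sign(g) for xi, g in zip(x, grad)]


def flip_rate(w: Sequence[float], b: float,
              samples: Sequence[Tuple[Sequence[float], int]],
              eps: float, threshold: float = 0.5) -> float:
    """Share of correctly classified samples whose prediction flips under FGSM."""
    correct = flipped = 0
    for x, y in samples:
        if (predict_proba(w, b, x) >= threshold) != bool(y):
            continue  # measure robustness only on samples the model gets right
        correct += 1
        x_adv = fgsm(w, b, x, y, eps)
        if (predict_proba(w, b, x_adv) >= threshold) != bool(y):
            flipped += 1
    return flipped / correct if correct else 0.0
```

Sweeping `eps` upward and recording the flip rate gives a simple robustness curve. A model whose predictions flip at perturbations too small for a clinician to notice warrants closer review before deployment.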

Hardening AI Models and Inference Environments

Once models are deployed, they face new challenges, such as inference-time attacks. These attacks aim to extract proprietary algorithms or manipulate real-time outputs[3]. To prevent such threats, continuous monitoring should be employed to detect anomalies, unauthorized access, or suspicious queries[5][6]. Inputs must also be screened for adversarial perturbations to ensure integrity.
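A basic form of input screening compares each incoming feature vector with statistics from the training data and flags values far outside that range. The sketch below uses a hypothetical `InputScreen` class with an assumed z-score limit of 4. It is only a first line of defense. It catches malformed or out-of-distribution inputs, but carefully crafted adversarial perturbations are designed to stay within normal ranges. It therefore belongs alongside robustness testing and continuous monitoring, not in place of them.

```python
import statistics
from typing import Sequence


class InputScreen:
    """Flags inputs that fall far outside the training distribution."""

    def __init__(self, training_rows: Sequence[Sequence[float]], z_limit: float = 4.0):
        columns = list(zip(*training_rows))
        self.means = [statistics.fmean(c) for c in columns]
        # Guard against zero spread in constant features.
        self.stdevs = [statistics.pstdev(c) or 1e-9 for c in columns]
        self.z_limit = z_limit

    def max_z(self, row: Sequence[float]) -> float:
        """Largest absolute z-score across the row's features."""
        return max(abs(v - m) / s for v, m, s in zip(row, self.means, self.stdevs))

    def is_suspicious(self, row: Sequence[float]) -> bool:
        """True for wrong-length rows or any feature beyond the z-score limit."""
        return len(row) != len(self.means) or self.max_z(row) > self.z_limit
```

Logging every flagged input, rather than silently dropping it, also feeds the anomaly monitoring described above.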

API security plays a significant role in protecting deployed models. Techniques like rate limiting, authentication controls, and encrypted communications can help guard against model extraction attacks[3]. Regular resilience testing and maintaining secure, verifiable model backups are also essential for ensuring quick recovery in case of a breach[5].
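Rate limiting matters because model extraction typically requires a large volume of queries. A common way to enforce it is a token bucket per API key, sketched below with hypothetical class names. The rate and burst capacity are placeholder assumptions to tune per endpoint, and in practice this logic often lives in an API gateway rather than in application code. Rate limiting slows extraction but does not prevent it, so it works best combined with authentication and query monitoring.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allows bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class PerKeyLimiter:
    """One bucket per API key, so a single client cannot exhaust the endpoint."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self._buckets: Dict[str, TokenBucket] = {}

    def allow(self, api_key: str) -> bool:
        bucket = self._buckets.get(api_key)
        if bucket is None:
            bucket = TokenBucket(self.rate, self.capacity, self.clock)
            self._buckets[api_key] = bucket
        return bucket.allow()
```

The injectable `clock` parameter keeps the limiter deterministic under test.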

Protecting AI-Enabled Devices and IoMT

AI-enabled devices, especially those connected to the Internet of Medical Things (IoMT), present a unique set of vulnerabilities. Each connected device broadens the attack surface, and the surrounding vendor ecosystem adds to the exposure: 80% of stolen patient records now originate from third-party vendors rather than hospitals themselves[2]. Network segmentation can help isolate medical devices and IoMT systems, preventing attackers from moving laterally across healthcare networks[2]. Adopting a Secure Product Development Framework (SPDF), such as JSP2 or IEC 81001-5-1, embeds cybersecurity throughout the device lifecycle, from design to decommissioning[7].
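Segmentation policies are easier to review and audit when they are expressed as data. The sketch below encodes a default-deny allowlist between network zones. It then checks observed connections against that allowlist; the connections might come, for example, from firewall or flow logs. The zone names, ports, and structure are hypothetical. Actual enforcement happens in firewalls, VLANs, or software-defined networking, and this sketch only audits for drift from the intended policy.

```python
from typing import Dict, FrozenSet, Iterable, List, NamedTuple, Tuple


class Connection(NamedTuple):
    src_zone: str
    dst_zone: str
    port: int


# Hypothetical allowlist: (source zone, destination zone) -> permitted ports.
ALLOWED: Dict[Tuple[str, str], FrozenSet[int]] = {
    ("iomt-devices", "device-gateway"): frozenset({443}),
    ("device-gateway", "ai-inference"): frozenset({443}),
    ("clinical-workstations", "ai-inference"): frozenset({443}),
}


def is_permitted(conn: Connection, allowed=ALLOWED) -> bool:
    """Default deny: only allowlisted zone pairs on allowlisted ports pass."""
    return conn.port in allowed.get((conn.src_zone, conn.dst_zone), frozenset())


def violations(observed: Iterable[Connection], allowed=ALLOWED) -> List[Connection]:
    """Connections seen on the network that the segmentation policy does not permit."""
    return [c for c in observed if not is_permitted(c, allowed)]
```

In this sketch, an IoMT device reaching a database directly would surface as a violation even if no firewall rule explicitly blocks it.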

Minimum security standards for hardware, IT networks, and protection against unauthorized access should be established[3][7]. Timely patch management processes are critical to providing security updates without disrupting clinical operations. Secure configurations ensure devices work reliably with other systems while reducing risks from software conflicts[3][7]. Combining these measures with secure-by-design principles creates a strong defense for AI-enabled medical devices[5][7].


Continuous AI Cyber Risk Management and Compliance

Protecting medical AI systems requires constant vigilance and the ability to quickly adapt to new threats. In 2024, a staggering 92% of healthcare organizations reported experiencing AI-related attacks, highlighting the urgency of continuous monitoring and maintaining compliance within healthcare cybersecurity[2].

Continuous Monitoring and Incident Response

Real-time monitoring of AI systems is crucial for detecting and containing threats before they disrupt patient care. A recent incident underscored the devastating impact of delayed detection, demonstrating the life-or-death stakes involved in healthcare cybersecurity[1].

"Additional priorities include enabling continuous monitoring of AI systems, ensuring rapid containment and recovery of compromised models, and maintaining secure and verifiable model backups." - HSCC Cybersecurity Working Group[5]

Healthcare organizations must develop and implement playbooks tailored to AI-specific threats like model poisoning, data corruption, and adversarial attacks[5]. These playbooks should outline processes for gathering AI-driven threat intelligence and include safeguards that integrate seamlessly with clinical workflows and broader health operations. Risk-based guardrails are essential for managing the unique vulnerabilities of different AI technologies, from predictive machine learning systems to AI embedded in medical devices[5].
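Parts of a playbook can be codified so responders are not improvising under pressure. The sketch below pairs two elements. The first is a hypothetical mapping of AI-specific incident types to response steps. The second is one concrete recovery task drawn from the HSCC priorities: choosing the newest model backup that predates the suspected compromise and still matches the SHA-256 digest recorded when it was made. Three elements are illustrative assumptions, not taken from HSCC guidance: the step wording, the data structures, and the premise that digests are stored separately from the backups.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical playbook steps; real playbooks would name owners and clinical contacts.
PLAYBOOKS: Dict[str, List[str]] = {
    "model_poisoning": [
        "Suspend automated use of model outputs in clinical workflows",
        "Quarantine recent training data and verify the dataset manifest",
        "Roll back to the last verified model backup",
    ],
    "adversarial_input": [
        "Preserve the suspicious inputs and model responses as evidence",
        "Tighten input screening and rate limits on the affected endpoint",
    ],
}


@dataclass
class ModelBackup:
    version: str
    created_at: float     # Unix timestamp
    blob: bytes           # serialized model weights
    recorded_sha256: str  # digest captured at backup time, stored separately


def is_intact(backup: ModelBackup) -> bool:
    """True if the backup's bytes still match the digest recorded at backup time."""
    return hashlib.sha256(backup.blob).hexdigest() == backup.recorded_sha256


def rollback_target(backups: List[ModelBackup],
                    compromised_since: float) -> Optional[ModelBackup]:
    """Newest backup made before the suspected compromise whose digest still verifies."""
    candidates = [b for b in backups
                  if b.created_at < compromised_since and is_intact(b)]
    return max(candidates, key=lambda b: b.created_at, default=None)
```

Returning `None` when no backup qualifies forces an explicit decision, such as retraining from a verified dataset, instead of silently restoring a tampered model.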

Centralized tools, such as Censinet RiskOps™, provide real-time dashboards that monitor AI-related risks across clinical, operational, and financial settings. These platforms issue alerts that enable healthcare teams to respond swiftly and effectively to emerging threats[8].

In addition to proactive system monitoring, robust oversight of third-party vendors is a key component of a comprehensive risk management strategy.

Vendor Oversight and Lifecycle Management

Third-party vendors remain one of the most significant weak points in healthcare cybersecurity. Many breaches originate from business associates, which serve as major conduits for attackers[2]. The healthcare sector relies on thousands of external connections - ranging from EHR vendors to telehealth platforms - each of which could be exploited as an entry point for cyberattacks[2].

To mitigate these risks, healthcare organizations should routinely update their AI system inventories and reassess vendor security measures. This includes tracking which vendors have access to AI training data, understanding where models are hosted, and reviewing each vendor's security posture on a regular schedule. The proposed update to the HIPAA Security Rule would require multi-factor authentication and encryption[2]. It would also eliminate "addressable" implementation specifications and set a 240-day implementation deadline[2]. Organizations must also revise their business associate agreements to reflect these stricter compliance requirements.
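This kind of vendor tracking can be turned into a recurring check. The sketch below flags a vendor for reassessment when its review is overdue or when MFA or encryption is missing. Vendors with access to AI training data are reviewed more often. The record fields and the 90-day and 365-day intervals are hypothetical placeholders, not requirements from HIPAA or any other rule.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass
class VendorRecord:
    name: str
    accesses_training_data: bool
    model_hosting: str  # e.g. "vendor cloud" or "on-premises"
    last_review: date
    mfa_enforced: bool
    encrypts_phi: bool


def reassessment_reasons(
    vendor: VendorRecord,
    today: date,
    standard_interval: timedelta = timedelta(days=365),
    elevated_interval: timedelta = timedelta(days=90),
) -> List[str]:
    """Reasons this vendor needs attention; an empty list means none were found."""
    interval = elevated_interval if vendor.accesses_training_data else standard_interval
    reasons = []
    if today - vendor.last_review > interval:
        reasons.append("review overdue")
    if not vendor.mfa_enforced:
        reasons.append("MFA not enforced")
    if not vendor.encrypts_phi:
        reasons.append("PHI not encrypted")
    return reasons
```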

Aligning with U.S. Regulatory Standards

Beyond internal monitoring and vendor oversight, aligning with regulatory standards is essential to maintaining robust AI cybersecurity. Current FDA and HIPAA regulations still lack specific guidelines for AI security assessments, ongoing monitoring, and incident response, leaving critical gaps[2]. To help close them, the Health Sector Coordinating Council (HSCC) plans to release new guidance by 2026. The guidance will cover governance, secure-by-design principles, and third-party risk management, aligned with NIST frameworks, FDA guidance, and HIPAA requirements[5].

Healthcare providers should incorporate secure-by-design principles into AI-enabled medical devices and align their risk mitigation efforts with the U.S. FDA's recommendations, the NIST AI Risk Management Framework, and CISA guidelines[5]. Tools like the AI Bill of Materials (AIBOM) and Trusted AI BOM (TAIBOM) can improve visibility and traceability across the AI supply chain. Platforms such as Censinet RiskOps™ further streamline compliance by automating audits, reporting, and documentation[8]. This reduces human error and frees staff for higher-priority tasks[8]. These platforms also route critical findings to the appropriate teams so that issues are addressed efficiently.
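A small sketch shows what BOM-style traceability enables. It records a model's components (datasets, base models, libraries) and answers a common incident question: which deployed models depend on a component that has just been found compromised? The structure is a simplified, hypothetical illustration. It does not follow the AIBOM, TAIBOM, CycloneDX, or SPDX schemas.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class Component:
    name: str
    version: str
    kind: str       # e.g. "dataset", "base-model", "library"
    supplier: str
    sha256: str = ""  # digest of the artifact, if recorded


@dataclass
class AIBOM:
    model: str
    version: str
    components: List[Component] = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize the record, including nested components, as stable JSON."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


def models_affected_by(boms: List[AIBOM], component_name: str) -> List[str]:
    """Deployed models (name@version) that list the given component."""
    return sorted({
        f"{b.model}@{b.version}"
        for b in boms
        if any(c.name == component_name for c in b.components)
    })
```

If a training dataset is later found to be poisoned, a query like `models_affected_by` turns a manual investigation into a lookup.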

Conclusion

Medical AI has become a cornerstone of modern healthcare, but its growing role also makes it a prime target for advanced cyberattacks. Recent incidents highlight the stakes: the average cost of a data breach has climbed to $10.3 million, and adversarial attacks - where altering just 0.001% of input data can derail diagnostic accuracy - pose a direct threat to patient safety[2].

To counter these risks, healthcare organizations need to shift from reactive security measures to a proactive, continuous approach to risk management. This means adopting comprehensive frameworks that secure every stage of the AI lifecycle, from protecting data pipelines and enhancing model development to ensuring secure deployment and ongoing monitoring. Third-party vendors also demand special attention, as they account for 80% of stolen patient records in breaches[2].

Equally important is staying aligned with regulatory requirements. Adhering to evolving standards like NIST and FDA guidelines not only ensures compliance but also helps maintain the trust of patients who rely on these systems for their care.

FAQs

What are the biggest cybersecurity risks facing medical AI systems?

Medical AI systems are increasingly at risk from a variety of cybersecurity threats. Among these, data poisoning stands out, where attackers tamper with training data to disrupt the AI's ability to deliver accurate results. Another challenge is adversarial attacks, which involve feeding the AI misleading data designed to exploit its weaknesses. Additionally, threats like model manipulation and prompt injection can skew the AI's decision-making process. On top of that, system breaches and vulnerabilities in outdated systems or interconnected devices put sensitive patient information and crucial healthcare operations in jeopardy.

To address these risks, healthcare organizations need to implement strong cybersecurity protocols. Regularly testing AI systems for vulnerabilities and adhering to healthcare security standards are essential steps in keeping both patient data and system integrity secure.

What steps can healthcare organizations take to protect AI systems from cyberattacks?

Healthcare organizations can shield AI systems from cyberattacks by embracing a thorough cybersecurity strategy. Key steps include performing regular risk assessments to uncover potential weaknesses, deploying security tools tailored for AI, and maintaining constant system monitoring to detect any unusual activity.

Equally important are measures like enforcing strict data access controls, keeping all software up-to-date with timely patches, and adopting advanced techniques such as zero trust architecture and microsegmentation. These approaches not only protect sensitive patient data but also ensure the reliability of systems and bolster the security of AI-driven healthcare technologies.

How do third-party vendors contribute to cybersecurity risks in medical AI systems?

Third-party vendors play a big role in the cybersecurity challenges faced by medical AI systems. In fact, they’re often tied to a large number of healthcare data breaches. These risks usually arise from flaws in their systems, weak security practices, or gaps in their supply chains.

When vendors don’t secure their systems effectively, it can result in exposed sensitive data, compromised AI tools, and larger attack surfaces that cybercriminals can exploit. That’s why holding vendors to strict cybersecurity standards is critical - not just to safeguard medical AI systems, but also to ensure patient safety.