NIST Cybersecurity Framework for AI Risk in Healthcare

Post Summary

AI is transforming healthcare, but it comes with risks. Misdiagnoses, biased algorithms, and data breaches are just a few of the challenges facing hospitals and health systems. To address these issues, two frameworks from the National Institute of Standards and Technology (NIST) provide guidance:

- NIST Cybersecurity Framework (CSF): Focuses on protecting healthcare IT systems, including those powered by AI, from cyber threats.

- NIST AI Risk Management Framework (AI RMF): Targets risks unique to AI, such as algorithmic bias, safety, and privacy concerns.

Key Takeaways:

- The CSF strengthens cybersecurity and supports compliance with regulations like HIPAA.

- The AI RMF addresses AI-specific risks, including fairness, transparency, and clinical safety.

- Together, these frameworks offer a dual approach to managing AI risks in healthcare, protecting patient data, and ensuring reliable AI performance.

1. NIST Cybersecurity Framework (CSF)

The NIST Cybersecurity Framework offers a structured method for managing cybersecurity risks, including those tied to AI-enabled systems, through a set of core functions: Identify, Protect, Detect, Respond, and Recover [8][3]. CSF 2.0 adds a sixth function, Govern, alongside these five. While originally developed for critical infrastructure, the framework has been tailored for the Healthcare and Public Health (HPH) Sector. To assist healthcare organizations, entities like HHS, ASPR, and NIST have created an HPH Implementation Guide that helps apply the framework to areas such as electronic health records (EHRs), medical devices, and clinical workflows [9]. The updated NIST CSF 2.0 has become a go-to resource for tackling ransomware and vendor breaches [2]. This framework provides a roadmap for addressing AI-specific risks within each core function.

Starting with Identify, healthcare organizations should document where AI systems interact with sensitive data. This includes cataloging AI dependencies like EHR integrations, medical devices, APIs, and external data sources. Such an inventory clarifies how AI systems handle protected health information (PHI). For the Protect function, organizations should implement robust identity and access controls, such as multi-factor authentication, role-based access, and least-privilege principles for AI tools used by clinicians. They should also encrypt PHI used in training and inference, both in transit and at rest, and apply secure software development practices like code reviews, dependency management, and security testing to protect AI model integrity.
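As a rough illustration of the Identify and Protect steps above, the sketch below models an AI asset inventory and flags missing Protect controls for systems that handle PHI. The record fields, system names, and the `protect_gaps` helper are hypothetical and not part of the NIST CSF; a real inventory would track far more detail.

```python
from dataclasses import dataclass, field


@dataclass
class AISystemRecord:
    """One entry in a hypothetical AI asset inventory (CSF Identify)."""
    name: str
    handles_phi: bool
    dependencies: list[str] = field(default_factory=list)  # e.g. EHR, APIs, devices
    mfa_required: bool = False
    encrypts_in_transit: bool = False
    encrypts_at_rest: bool = False


def protect_gaps(record: AISystemRecord) -> list[str]:
    """List the Protect controls a PHI-handling system is missing."""
    if not record.handles_phi:
        return []  # this sketch only checks systems that touch PHI
    checks = {
        "multi-factor authentication": record.mfa_required,
        "encryption in transit": record.encrypts_in_transit,
        "encryption at rest": record.encrypts_at_rest,
    }
    return [control for control, in_place in checks.items() if not in_place]


# Illustrative entries only; the system names are made up.
inventory = [
    AISystemRecord(
        name="sepsis-alert-model",
        handles_phi=True,
        dependencies=["EHR integration", "lab results API"],
        mfa_required=True,
        encrypts_in_transit=True,
        encrypts_at_rest=False,
    ),
    AISystemRecord(
        name="scheduling-optimizer",
        handles_phi=False,
        dependencies=["staff calendar API"],
    ),
]

for rec in inventory:
    print(rec.name, "->", protect_gaps(rec) or "no gaps flagged")
```

Keeping the inventory as structured data rather than free text makes it easier to re-run the same gap check whenever a system or dependency changes.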

The Detect function focuses on identifying anomalies in AI usage. Organizations should establish baselines for normal AI behavior - such as typical API call volumes, timing, and prediction patterns - and set up alerts for deviations that could signal misuse, data breaches, or adversarial attacks [2]. Monitoring for model drift, such as declining performance or skewed error rates among patient groups, is also critical to detecting unsafe or biased AI outputs. Additionally, tamper detection methods like integrity checksums and signed model packages can help protect training data and model artifacts [4][6][7].
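The two Detect ideas above, a behavioral baseline and an integrity checksum, can be sketched in a few lines. This is a minimal example under stated assumptions: the three-standard-deviation threshold, the sample call volumes, and the function names are illustrative choices, not values prescribed by NIST. A plain SHA-256 digest only detects changes; a signed model package would additionally need a key-based signature scheme, which is not shown here.

```python
import hashlib
import hmac
from statistics import mean, stdev


def is_anomalous(history: list[float], observed: float, threshold: float = 3.0) -> bool:
    """Flag a value more than `threshold` standard deviations from the baseline mean."""
    if len(history) < 2:
        raise ValueError("need at least two baseline observations")
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return observed != mu
    return abs(observed - mu) / sigma > threshold


def sha256_digest(data: bytes) -> str:
    """Hex SHA-256 digest of a model artifact or training data file."""
    return hashlib.sha256(data).hexdigest()


def artifact_unchanged(data: bytes, expected_digest: str) -> bool:
    """Compare the current digest with the one recorded at release time."""
    return hmac.compare_digest(sha256_digest(data), expected_digest)


# Hypothetical hourly API call counts for one AI service.
baseline_calls = [100, 104, 98, 101, 97, 103, 99, 102]
print(is_anomalous(baseline_calls, 250))  # far outside the baseline
print(is_anomalous(baseline_calls, 103))  # within normal variation

# Stand-in bytes for a model file; in practice, read the artifact from disk.
model_bytes = b"model-weights-v1"
recorded_digest = sha256_digest(model_bytes)
print(artifact_unchanged(model_bytes, recorded_digest))
print(artifact_unchanged(model_bytes + b"tampered", recorded_digest))
```

A z-score rule like this is deliberately simple. Seasonal traffic or bursty clinical workloads would likely need a more robust baseline, and the recorded digest itself must be stored somewhere an attacker cannot rewrite it.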

For Respond, clear communication across clinicians, IT teams, compliance officers, and leadership is essential to quickly address compromised AI outputs. When it comes to Recover, organizations should have retraining plans ready to exclude contaminated data and ensure models meet clinical validation standards. Updating CSF profiles, policies, and training after incidents can help integrate lessons learned about AI vulnerabilities, vendor issues, or governance gaps.

Healthcare organizations can align AI-related controls within each CSF function to meet U.S. regulatory requirements, including HIPAA, HHS Cybersecurity Performance Goals, and FDA guidelines for AI-enabled medical devices. For FDA-regulated devices, particularly those under Section 524B cybersecurity requirements, organizations should focus on CSF Protect and Respond measures. This includes maintaining software bills of materials (SBOMs), managing patches and updates, monitoring AI models, and conducting post-market surveillance throughout the device lifecycle [2][9]. Tools like Censinet RiskOps can streamline this process by mapping AI-related risks to CSF categories, automating vendor risk assessments, and benchmarking cybersecurity maturity against industry standards. These steps not only help organizations meet regulatory demands but also establish a strong foundation for managing AI risks effectively.

2. NIST AI Risk Management Framework (AI RMF)

The NIST AI Risk Management Framework (AI RMF) is designed to address the unique challenges of managing risks associated with AI systems, focusing on qualities such as validity, reliability, safety, security, accountability, transparency, explainability, privacy, and fairness [4][7]. While the NIST Cybersecurity Framework (CSF) tackles cybersecurity risks broadly, the AI RMF homes in on the socio-technical risks that arise from how AI is designed, implemented, and integrated into clinical workflows [4]. For healthcare organizations, this means addressing not just data breaches but also issues like biased diagnoses, unsafe clinical recommendations, privacy concerns from training data, and the erosion of patient trust [4][7]. This targeted approach complements the broader cybersecurity strategies outlined earlier.

The AI RMF is structured around four key functions - Govern, Map, Measure, and Manage - that span the entire AI lifecycle [4][6][7].

- Govern: This step lays the foundation for managing AI risks by establishing AI governance committees. These committees, which include clinical leaders, compliance officers, privacy experts, and patient safety representatives, define approval criteria for clinical AI, document accountability for AI-driven decisions, and set risk tolerance levels [4][6].

- Map: This function involves cataloging AI systems that process protected health information (PHI) or influence care decisions. It also requires identifying potential harm scenarios, such as misdiagnoses, biased triage decisions, or privacy violations [4][7].

- Measure: Here, organizations define clinical performance metrics like sensitivity, specificity, false-negative rates, and demographic fairness. Continuous monitoring is essential to detect issues like model drift, performance degradation, or adversarial attacks [4][7].

- Manage: This step focuses on implementing controls to mitigate risks. Examples include requiring human oversight for high-risk AI decisions, restricting access to training data, enforcing formal model change management with rollback options, and conducting regular AI incident response drills. For instance, if a data poisoning attack causes an AI model to misclassify images, teams need clear protocols to disable the model, revert to manual processes, and inform affected patients when necessary [4][6][7].
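The Measure metrics listed above (sensitivity, specificity, false-negative rate, and a demographic fairness check) can be computed from labeled predictions. The sketch below is one simple way to do it, assuming binary labels where 1 means the condition is present. The group labels and sample data are invented for illustration, and comparing per-group rates is only one of several possible fairness measures.

```python
from collections import defaultdict


def rates(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Sensitivity, specificity, and false-negative rate for binary labels."""
    tp = fn = tn = fp = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == 1 and pred == 1:
            tp += 1
        elif truth == 1:
            fn += 1
        elif pred == 0:
            tn += 1
        else:
            fp += 1
    positives, negatives = tp + fn, tn + fp
    nan = float("nan")  # returned when a rate is undefined for this sample
    return {
        "sensitivity": tp / positives if positives else nan,
        "specificity": tn / negatives if negatives else nan,
        "false_negative_rate": fn / positives if positives else nan,
    }


def rates_by_group(y_true, y_pred, groups) -> dict[str, dict[str, float]]:
    """Compute the same rates separately for each patient group."""
    buckets = defaultdict(lambda: ([], []))
    for truth, pred, group in zip(y_true, y_pred, groups):
        buckets[group][0].append(truth)
        buckets[group][1].append(pred)
    return {g: rates(ts, ps) for g, (ts, ps) in buckets.items()}


# Invented example: the model misses every positive case in group B.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 0, 1]
groups = ["A", "A", "B", "B", "A", "A", "B", "B"]

by_group = rates_by_group(y_true, y_pred, groups)
for group, metrics in sorted(by_group.items()):
    print(group, metrics)

sensitivity_gap = abs(by_group["A"]["sensitivity"] - by_group["B"]["sensitivity"])
print("sensitivity gap between groups:", sensitivity_gap)
```

Tracking these per-group rates over time, rather than once at validation, is what lets the same calculation double as a drift check: a widening gap or a falling sensitivity is a signal for the Manage controls described above.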

The AI RMF is a voluntary framework, but it maps well onto U.S. regulatory expectations, such as FDA and HIPAA requirements, and supports both technical and ethical practices. For FDA-regulated AI/ML-based Software as a Medical Device (SaMD), organizations can align their safety and change-management protocols with AI RMF functions, particularly for systems that rely on continuous learning and post-market monitoring [5][7]. Regarding HIPAA compliance, the framework's emphasis on privacy helps organizations assess risks tied to training data containing PHI and implement necessary safeguards [4][7]. Healthcare organizations can also combine the AI RMF with the NIST CSF by extending Detect and Respond activities to address AI-specific issues. Post-incident learning under the CSF's Recover function can then be coordinated with the AI RMF's Govern function to refine policies and risk thresholds [2][4][8].

Tools like Censinet RiskOps simplify AI RMF adoption by cataloging AI systems, mapping risks across both CSF and AI RMF, automating assessments of AI tools, and tracking compliance with FDA and HIPAA regulations. By combining the CSF's focus on cybersecurity with the AI RMF's emphasis on trustworthiness, healthcare organizations can develop a comprehensive strategy for managing AI risks, ensuring patient safety, data protection, and clinical reliability.

Advantages and Disadvantages

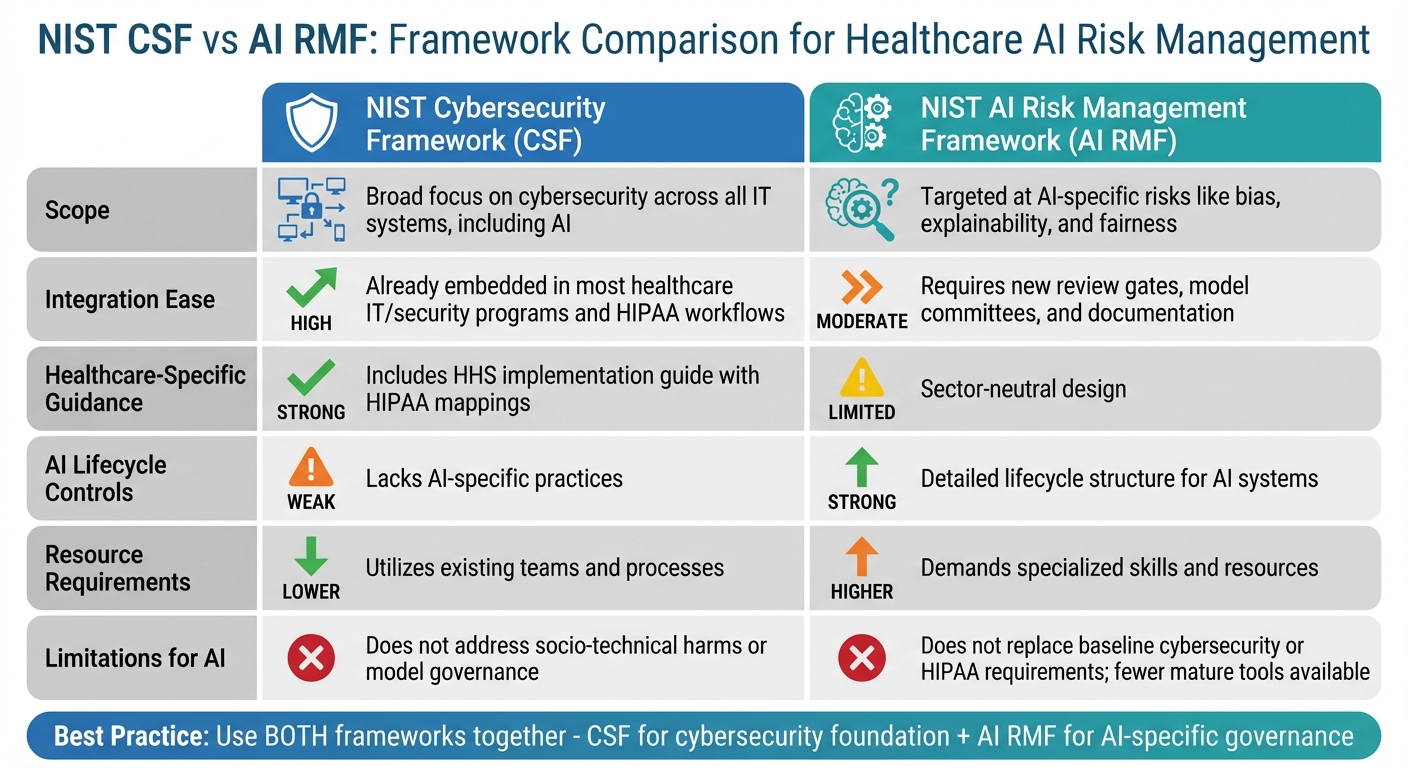

NIST CSF vs AI RMF Framework Comparison for Healthcare AI Risk Management

This section takes a closer look at the strengths and limitations of the NIST Cybersecurity Framework (CSF) and the NIST AI Risk Management Framework (AI RMF) when applied to managing AI-related risks in U.S. healthcare organizations.

The NIST Cybersecurity Framework (CSF) is widely recognized for its ability to integrate seamlessly into existing healthcare IT and security infrastructures. Since most healthcare organizations already use CSF for baseline cybersecurity and HIPAA compliance, it’s a logical choice for adding AI security measures without requiring a complete overhaul of risk management systems. However, CSF falls short when it comes to addressing AI-specific challenges like algorithmic bias, model transparency, data drift, and lifecycle governance [4][7].

On the other hand, the NIST AI Risk Management Framework (AI RMF) is specifically designed to tackle these AI-focused issues. It emphasizes core principles such as fairness, explainability, and robustness - qualities that are critical when AI directly impacts patient outcomes. The framework’s lifecycle approach (Govern, Map, Measure, Manage) aligns with how AI systems are developed and deployed in clinical environments while prioritizing stakeholder impacts. However, implementing AI RMF involves creating new processes for documenting datasets, models, and use cases. It also demands expertise in machine learning, statistics, and ethics, which many healthcare organizations may not have readily available [4][6][7].

Matt Christensen, Sr. Director of GRC at Intermountain Health, explains, "Healthcare is the most complex industry... You can't just take a tool and apply it to healthcare if it wasn't built specifically for healthcare" [1].

Here’s a side-by-side comparison of the two frameworks:

| Dimension | NIST Cybersecurity Framework (CSF) | NIST AI Risk Management Framework (AI RMF) |
|---|---|---|
| Scope | Broad focus on cybersecurity across all IT systems, including AI [4][7] | Targeted at AI-specific risks like bias, explainability, and fairness [4][7] |
| Integration Ease | High - already embedded in most healthcare IT/security programs and HIPAA workflows [9] | Moderate - requires new review gates, model committees, and documentation [4][6] |
| Healthcare-Specific Guidance | Strong - includes HHS implementation guide with HIPAA mappings [9] | Limited - sector-neutral design [4][6][7] |
| AI Lifecycle Controls | Weak - lacks AI-specific practices [4][7] | Strong - detailed lifecycle structure for AI systems [4][6][7] |
| Resource Requirements | Lower - utilizes existing teams and processes | Higher - demands specialized skills and resources [4][6][7] |
| Limitations for AI | Does not address socio-technical harms or model governance [4][7] | Does not replace baseline cybersecurity or HIPAA requirements; fewer mature tools available [4][6][7] |

For healthcare organizations, the best strategy often involves using both frameworks together. CSF provides a solid cybersecurity foundation and integrates well with existing risk programs, while AI RMF adds the AI-specific governance and lifecycle controls necessary for managing clinical AI systems effectively. Platforms like Censinet RiskOps make this dual approach more efficient by combining the benefits of both frameworks. They help catalog AI systems, map risks across CSF and AI RMF, and ensure compliance with FDA and HIPAA requirements - all within a single, healthcare-focused solution.

Conclusion

For healthcare organizations in the U.S., relying on either the NIST Cybersecurity Framework (CSF) or the NIST AI Risk Management Framework (AI RMF) alone isn’t enough. When used together, these frameworks create a balanced strategy: the CSF provides the essential foundation for cybersecurity and HIPAA compliance, while the AI RMF introduces controls for managing AI-specific challenges like bias, explainability, model drift, and clinical safety.

To address identified risks, take a dual approach: leverage the CSF to catalog AI assets, safeguard patient information, detect anomalies, and manage incidents. Pair this with the AI RMF to oversee use cases, evaluate clinical impacts, ensure fairness, and manage the AI lifecycle. This integrated strategy ensures that robust security measures align with governance tailored to AI - covering everything from network protection and access control to algorithm transparency and human oversight.

Focus on high-stakes clinical AI systems and conduct combined CSF/AI RMF evaluations both before and after these systems are deployed. Establish an AI governance team that brings together experts from clinical, legal, compliance, security, and data science fields to ensure AI isn’t treated as just another IT project. Additionally, define AI-specific performance metrics - such as clinical outcomes, fairness indicators, and clinician override rates - to enhance ongoing risk monitoring.

To implement this strategy effectively, consider using a centralized risk management platform. Tools like Censinet RiskOps™ can simplify this process by cataloging AI systems, aligning risks with both frameworks, directing findings to governance committees, and ensuring compliance with FDA and HIPAA regulations. This approach helps healthcare organizations scale their AI risk management efforts while maintaining critical human oversight to safeguard patient care.

FAQs

How do the NIST Cybersecurity Framework (CSF) and AI Risk Management Framework (AI RMF) work together to address AI risks in healthcare?

The NIST Cybersecurity Framework (CSF) provides healthcare organizations with a structured method to tackle cybersecurity risks. It is organized around the core functions Identify, Protect, Detect, Respond, and Recover, with Govern added as a sixth function in CSF 2.0. These functions are crucial for protecting sensitive patient information, safeguarding medical devices, and ensuring the security of critical systems.

On the other hand, the AI Risk Management Framework (AI RMF) focuses specifically on managing risks tied to AI technologies. It offers guidance on the development, deployment, and oversight of AI systems, aiming to ensure these technologies remain secure, ethical, and dependable.

By integrating these frameworks, healthcare organizations can adopt a comprehensive approach. The combination of general cybersecurity principles with AI-specific risk management supports safer patient care and enhances the protection of sensitive data.

What AI-related risks does the NIST AI Risk Management Framework address in healthcare?

The NIST AI Risk Management Framework (AI RMF) zeroes in on handling the major risks tied to AI systems in healthcare. Key areas of focus include bias, explainability, robustness, privacy, security, and safety. By addressing these critical factors, the framework supports healthcare organizations in building AI technologies that are dependable, trustworthy, and in line with patient care standards.

This is especially crucial for safeguarding sensitive patient information, protecting the integrity of clinical tools, and ensuring the secure and effective use of AI-powered medical devices and healthcare solutions.

How do the NIST CSF and AI RMF work together to manage AI risks in healthcare?

The NIST Cybersecurity Framework (CSF) and AI Risk Management Framework (AI RMF) work hand in hand to tackle the specific challenges AI presents in healthcare. These frameworks offer a clear roadmap for identifying, evaluating, and addressing risks associated with AI systems, from cybersecurity threats to ethical concerns.

By applying both frameworks, healthcare organizations can better protect patient data, stay aligned with regulatory requirements, and minimize risks across clinical tools, medical devices, and supply chains. This combined strategy promotes the safer and more dependable use of AI technologies in the ever-changing landscape of healthcare.