OCR Healthcare Data Breach Rules: Vendor Risk Management and Reporting Requirements

Post Summary

Healthcare data breaches are on the rise, with 168 million individuals affected in 2024 alone. The Office for Civil Rights (OCR) has intensified its HIPAA enforcement, focusing on how healthcare organizations and their vendors handle protected health information (PHI). Here’s what you need to know:

- HIPAA Breach Notification Rule: Covered entities must notify affected individuals and OCR of breaches within strict timelines. Vendors (business associates) must alert covered entities without unreasonable delay, and no later than 60 days after discovering a breach.

- Risk Assessments: Organizations must evaluate incidents to determine if PHI was compromised, considering factors like the type of data involved and mitigation efforts.

- Vendor Accountability: Business Associate Agreements (BAAs) define vendor responsibilities for PHI protection and breach reporting.

- Reporting Deadlines: Breaches affecting 500+ individuals require notifications within 60 days. Smaller breaches must be reported annually to OCR but still need individual notifications within 60 days.

- Cybersecurity Risks: Third-party vendors are a major concern, with business associates involved in 37% of reported healthcare breaches in the first half of 2025. Regular assessments and monitoring are essential.

Tools like Censinet RiskOps™ streamline vendor risk management and compliance, helping healthcare organizations mitigate risks and meet HIPAA requirements effectively.

The HIPAA Breach Notification Rule Explained

The HIPAA Breach Notification Rule, outlined in 45 CFR §§ 164.400-414, sets clear guidelines for notifying affected individuals, the Office for Civil Rights (OCR), and, in some cases, the media when unsecured protected health information (PHI) is compromised.

Both covered entities and business associates have responsibilities under this rule, but the covered entity holds the ultimate accountability for notifying patients and the OCR. The specific obligations and timelines for these notifications differ between the two groups.

The rule assumes that any unauthorized use or disclosure of unsecured PHI is a breach unless a thorough risk assessment demonstrates a low probability of compromise. This shifts the burden of proof onto healthcare organizations and their vendors, emphasizing the importance of proactive security measures and detailed documentation. This framework brings us to the key question: what exactly qualifies as a reportable breach?

What Qualifies as a Breach of Unsecured PHI

"A breach is, generally, an impermissible use or disclosure under the Privacy Rule that compromises the security or privacy of the protected health information." - HHS.gov [1]

"Unsecured" PHI refers to information that has not been made unusable, unreadable, or indecipherable to unauthorized individuals through methods like encryption or destruction, as outlined in HHS guidance.

However, not every incident involving PHI is considered a reportable breach. The rule identifies three exceptions:

- A workforce member, acting in good faith and within the scope of their authority, unintentionally acquires, accesses, or uses PHI, and no further impermissible use or disclosure occurs.

- A person authorized to access PHI inadvertently discloses it to another authorized person within the same organization, and the information is not further used or disclosed impermissibly.

- The organization has a good-faith belief that the unauthorized recipient would not reasonably have been able to retain the PHI.

For incidents outside these exceptions, organizations must conduct a risk assessment. This assessment evaluates factors such as the nature and extent of the PHI involved (including identifiers and the potential for re-identification), who accessed or received the information, whether the PHI was actually viewed or acquired, and the effectiveness of any mitigation efforts.

The findings of these risk assessments directly impact the notification timelines described below.

Reporting Deadlines and Notification Requirements

The Breach Notification Rule enforces strict timelines based on the size of the breach. For breaches involving 500 or more individuals, covered entities must notify the OCR and affected individuals without unreasonable delay, and no later than 60 calendar days after discovering the breach. If more than 500 residents of a single state or jurisdiction are affected, prominent media outlets serving that area must also be notified within the same 60-day period [2].

For smaller breaches - those affecting fewer than 500 individuals - the timeline is slightly different. These incidents must be documented and reported to the Department of Health and Human Services (HHS) no later than 60 days after the end of the calendar year in which they were discovered. However, individual notifications must still be sent within 60 days of discovery [2].
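The deadline arithmetic above can be sketched in a few lines of Python. This is a minimal illustration rather than legal guidance: the function name `notification_deadlines` and its return keys are hypothetical, and it assumes the day of discovery counts as day zero. The dates it returns are outer limits only.

```python
from datetime import date, timedelta

LARGE_BREACH_THRESHOLD = 500  # individuals, per the rule as described above
OUTER_LIMIT_DAYS = 60         # calendar days


def notification_deadlines(discovered: date, affected: int) -> dict[str, date]:
    """Return the latest permissible dates for individual and OCR notices.

    Hypothetical helper: assumes the discovery date is day zero.
    """
    # Individual notices share the same outer limit regardless of breach size.
    individuals = discovered + timedelta(days=OUTER_LIMIT_DAYS)
    if affected >= LARGE_BREACH_THRESHOLD:
        # Large breaches: OCR notice is due within the same 60-day window.
        ocr = individuals
    else:
        # Small breaches: 60 days after the end of the calendar year of discovery.
        ocr = date(discovered.year, 12, 31) + timedelta(days=OUTER_LIMIT_DAYS)
    return {"individuals": individuals, "ocr": ocr}
```

For a breach discovered on March 10, 2025 that affects 40 people, this sketch gives May 9, 2025 for individual notices and March 1, 2026 for the annual OCR report.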

Notifications to affected individuals must be clear and in plain language, including:

- A detailed description of the breach,

- The types of information involved,

- Steps individuals can take to protect themselves,

- A summary of the organization’s response, including containment and corrective actions, and

- Contact information for further questions.

These notifications are typically sent via first-class mail or email if electronic delivery has been agreed upon.
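One way to keep draft letters consistent with the content list above is a simple completeness check. The sketch below is illustrative only; the field names in `REQUIRED_ELEMENTS` and the function `missing_elements` are assumptions made for this example, not terms defined by the rule.

```python
# Hypothetical field names mirroring the five content elements listed above.
REQUIRED_ELEMENTS = (
    "breach_description",
    "information_types",
    "protective_steps",
    "organization_response",
    "contact_information",
)


def missing_elements(notice: dict[str, str]) -> list[str]:
    """Return the required elements that are absent or blank in a draft notice."""
    return [key for key in REQUIRED_ELEMENTS if not notice.get(key, "").strip()]


draft = {
    "breach_description": "An email containing lab results was sent to the wrong recipient.",
    "information_types": "Names, dates of birth, lab results",
    "protective_steps": "Review statements from your health plan for unfamiliar activity.",
    "organization_response": "The mailbox was secured and staff were retrained.",
}
gaps = missing_elements(draft)  # the draft above lacks contact information
```

A check like this only confirms that each element is present. Whether the wording is clear and in plain language still needs human review.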

Organizations are required to maintain thorough documentation of the breach, including the risk assessment, decision-making process, and all notifications. This documentation is essential for demonstrating compliance during OCR investigations. Additionally, when appropriate, organizations may offer mitigation options, such as credit monitoring, to help affected individuals [2].

Vendor Obligations Under HIPAA

Under HIPAA, vendors handling protected health information (PHI) for healthcare organizations are classified as business associates, as outlined in 45 CFR 160.103 [3]. This designation applies to any entity managing PHI on behalf of a covered entity [4].

Once a vendor is identified as a business associate, they must adhere to the HIPAA Breach Notification Rule. This federal regulation ensures that business associates are held accountable for notifying relevant parties in the event of a PHI breach. The Department of Health and Human Services (HHS) oversees compliance with these breach notification requirements [3][4].

Below, we explore the critical contractual and accountability obligations for business associates.

Business Associate Agreements (BAAs)

A Business Associate Agreement (BAA) is a key document that outlines a vendor's duties related to PHI protection and breach response. Every relationship between a covered entity and a business associate requires a BAA. This agreement must clearly define responsibilities such as safeguarding PHI, permissible uses and disclosures, and breach notification protocols.

The BAA should also specify which party is responsible for notifying affected individuals in the event of a breach. This decision is often based on which organization has a closer relationship with the patients or better access to their contact information. In some cases, the covered entity may delegate the notification responsibility to the business associate, particularly if the associate is better positioned to handle communication with affected individuals.

Vendor Accountability for PHI Breaches

"If you are a business associate of a HIPAA-covered entity and you experience a security breach, you must notify the HIPAA-covered entity you're working with. Then they must notify the people affected by the breach." - FTC.gov [4]

When a breach occurs, the business associate must notify the covered entity no later than 60 days after discovering the incident. This notification must include a list of affected individuals and any additional information the covered entity needs to fulfill its notification duties [1].

Business associates are also required to demonstrate that they have met all notification requirements or show evidence that the incident does not qualify as a reportable breach [1]. For vendors operating in dual roles - acting as a HIPAA business associate while also providing personal health record services directly to consumers - additional obligations may apply under both HHS and FTC breach notification rules [4].

These responsibilities form the foundation for effective vendor risk management, which will be discussed in the next section.

OCR Reporting Requirements for Vendor Breaches

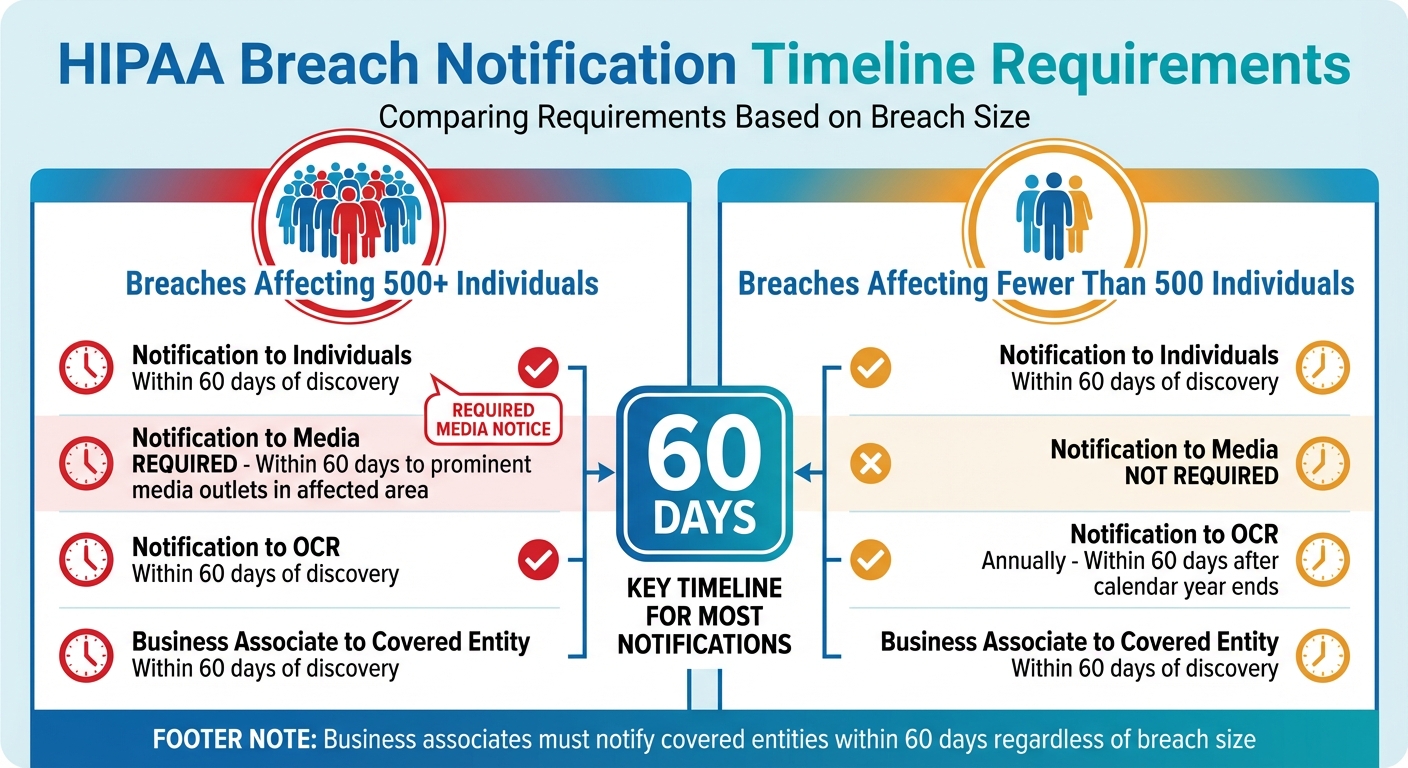

HIPAA Breach Notification Timeline Requirements by Breach Size

When a breach occurs, covered entities must adhere to specific reporting procedures to comply with HIPAA mandates. The Office for Civil Rights (OCR) is responsible for overseeing these requirements and maintains a public record of breaches affecting 500 or more individuals [1][6]. Accurate reporting, along with meeting the required criteria and timelines, is crucial.

How to Report Breaches to OCR

To report a breach, covered entities must use the OCR's electronic breach notification form, accessible via its Web portal [6]. The form requires detailed information, including the nature of the breach, the types of protected health information (PHI) involved, and the number of individuals affected. If the exact number is unknown, an estimate should be provided, with updates submitted later as necessary [6].

Many organizations initially submit placeholder figures when the exact count isn't available, then revise the number after reviewing their files [5]. The form also includes a free-text section for additional details about the incident [6]. If needed, entities can submit addendums to update or clarify earlier reports, using the transaction number from the original submission [6].

Conducting Risk Assessments to Determine Breach Impact

Not all incidents involving PHI qualify as reportable breaches. Organizations must perform a documented risk assessment to decide if there is a low probability that the PHI has been compromised. This assessment evaluates four critical factors:

- The nature and extent of the PHI involved

- The identity of the person who accessed or received the information

- Whether the PHI was actually acquired or viewed

- The extent to which the risk has been mitigated [2]

Covered entities and business associates are responsible for proving that either all required notifications have been made or that the incident does not meet the criteria of a breach [1]. The assessment must be thorough and clearly documented. It should outline what occurred, the types of data involved, recommended steps for individuals to protect themselves, and the measures the entity is taking to investigate, address, and prevent similar incidents in the future [2]. These findings directly influence the reporting timelines outlined below.
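The four factors and the presumption of breach can be captured in a simple record for documentation purposes. The following is a rough sketch under stated assumptions: the class `BreachRiskAssessment` and its field names are hypothetical, and the low-probability conclusion is a human judgment recorded in the structure, not something the code decides.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class BreachRiskAssessment:
    """Hypothetical record of the four-factor assessment described above."""

    incident_id: str
    assessed_on: date
    phi_nature_and_extent: str   # factor 1
    unauthorized_recipient: str  # factor 2
    acquired_or_viewed: str      # factor 3
    mitigation: str              # factor 4
    # Reviewer's conclusion; defaults to the presumption that a breach occurred.
    low_probability_of_compromise: bool = False

    def is_complete(self) -> bool:
        """True only when every one of the four factors has been documented."""
        factors = (
            self.phi_nature_and_extent,
            self.unauthorized_recipient,
            self.acquired_or_viewed,
            self.mitigation,
        )
        return all(text.strip() for text in factors)

    def notification_required(self) -> bool:
        """Notification stays required unless a complete assessment concludes low probability."""
        return not (self.is_complete() and self.low_probability_of_compromise)
```

Keeping notification as the default outcome mirrors the burden of proof: an incomplete or undocumented assessment never removes the duty to notify.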

Reporting Timelines by Breach Size

The size of the breach determines the reporting timeline and notification requirements. Breaches impacting 500 or more individuals require prompt action across multiple channels, while smaller breaches follow a different schedule.

| Breach Size | Notification to Individuals | Notification to Media | Notification to OCR | Business Associate to Covered Entity |
|---|---|---|---|---|
| 500 or more individuals | Within 60 days of discovery [1] | Required: Within 60 days to prominent media outlets in the affected area [1] | Within 60 days of discovery [1][6] | Within 60 days of discovery [1] |
| Fewer than 500 individuals | Within 60 days of discovery [1] | Not required [1] | Annually: Within 60 days after the calendar year ends [1][6] | Within 60 days of discovery [1] |

Business associates are required to notify the covered entity within 60 days of discovering a breach. This notification must include the names of affected individuals and any other details the covered entity needs to meet its own notification obligations [1]. These deadlines underscore the importance of timely communication from vendors.


Vendor Risk Management Practices for Healthcare Organizations

Healthcare organizations heavily depend on third-party vendors for essential services, but this reliance also introduces significant cybersecurity risks. In 2023, 74% of cybersecurity issues or unauthorized access incidents in healthcare were linked to third-party vendors [9]. Additionally, business associates were involved in 37% of reported healthcare breaches in the first half of 2025 [11]. The financial toll is staggering - healthcare breaches cost an average of $7.42 million, the highest across all industries for the 14th year in a row. On top of that, it takes an average of 279 days to identify and contain a breach, which is over five weeks longer than the global average [11]. These stats underscore the need for thorough risk assessments and consistent monitoring to mitigate vendor-related risks.

How to Conduct Vendor Risk Assessments

Before entering into any agreements, healthcare organizations must perform detailed risk assessments of third-party vendors. These assessments should focus on:

- The critical services the vendor provides and the potential fallout from a breach or service failure.

- The vendor's cybersecurity measures and whether they meet required standards.

- Existing safeguards and how well they’re configured to protect data.

- The likelihood and potential impact of identified threats.

- Acceptable risk levels and any corrective actions needed.

The risk analysis must cover all electronic protected health information (e-PHI) that the organization creates, receives, maintains, or transmits - regardless of where or how it’s stored [8]. This includes identifying and documenting potential threats and vulnerabilities, paying close attention to risks tied to external vendors [8].

It’s essential to document every step of the risk analysis process and update it regularly - whether annually, bi-annually, or after major changes like security breaches, ownership shifts, or adopting new technology [8].
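Weighing likelihood against impact and comparing the result with an acceptable risk level is often done with a simple scoring matrix. The sketch below assumes a 1 to 5 scale for each dimension and an acceptable score of 9. Both are illustrative choices, not values prescribed by HIPAA or OCR, and the names `VendorThreat` and `threats_needing_action` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VendorThreat:
    """One identified threat for a vendor, rated on an illustrative 1-5 scale."""

    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        # Simple likelihood-times-impact rating.
        return self.likelihood * self.impact


def threats_needing_action(
    threats: list[VendorThreat], acceptable_score: int = 9
) -> list[VendorThreat]:
    """Return threats above the acceptable score, highest score first."""
    flagged = [t for t in threats if t.score > acceptable_score]
    return sorted(flagged, key=lambda t: t.score, reverse=True)


threats = [
    VendorThreat("Ransomware on vendor-hosted server", likelihood=4, impact=5),
    VendorThreat("Misdirected fax", likelihood=2, impact=3),
    VendorThreat("Unpatched remote access tool", likelihood=3, impact=4),
]
flagged = threats_needing_action(threats)
```

Anything returned by `threats_needing_action` would then be documented with a corrective action and an owner, and re-scored at the next review.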

Another critical step is identifying single points of failure in systems reliant on third-party vendors. Prepare for potential disruptions by vetting alternative vendors in advance and ensuring they meet the necessary requirements for quick implementation and favorable contract terms [7]. Incident response plans should also be tested frequently, especially for systems that depend on external vendors. Simulating real-world scenarios and involving IT, security, and clinical care teams can make these plans more effective [9]. This proactive approach not only minimizes risks but also strengthens HIPAA compliance efforts.

Using OCR Enforcement Trends to Improve Compliance

Once thorough assessments are in place, healthcare organizations should also pay attention to enforcement trends from the Office for Civil Rights (OCR) to refine their compliance strategies. For example, in May 2025, 59 healthcare data breaches were reported, affecting over 1.8 million individuals. Of these, 77% were caused by hacking or IT incidents [10]. Business associates and medium-to-large healthcare providers were the most affected, with network servers being the top target for cyberattacks [10]. In 2024, 81.3% of large healthcare data breaches were linked to hacking and IT incidents, and OCR closed 22 HIPAA investigations that resulted in financial penalties [12].

OCR’s findings highlight key compliance gaps: the lack of a documented risk analysis, insufficient safeguards for data, and poor oversight of business associates [10]. To address these issues, ensure that all vendors handling PHI have comprehensive Business Associate Agreements (BAAs) and undergo regular evaluations [10].

Hacking incidents involving network servers and business associates are expected to dominate breach reports through 2025, potentially leading to stricter regulations and more lawsuits [11]. To stay ahead, implement strong security measures like data encryption, access controls, and contingency plans. Regularly train staff on security protocols and response strategies, as human error often plays a role in ransomware attacks [10].

How Censinet RiskOps™ Supports Vendor Compliance

Censinet RiskOps™ tackles the challenges of vendor risk management by simplifying workflows and improving oversight. It’s designed to make managing third-party risks and ensuring PHI compliance more efficient and less time-consuming.

Let’s explore how it enhances risk assessments and vendor oversight.

Automating Risk Assessments with Censinet RiskOps™

Censinet RiskOps™ takes the hassle out of vendor risk assessments by automating key steps. Thanks to Censinet AI™, the platform speeds up the process with features like:

- Automating security questionnaires for vendors

- Summarizing evidence quickly and efficiently

- Capturing integration details with ease

- Evaluating fourth-party risks

- Generating risk reports at the click of a button

Even with automation, human oversight remains critical. The system uses a human-in-the-loop approach, allowing risk teams to customize rules and review processes. This ensures that while the platform scales operations, it doesn’t compromise on the thoroughness needed for compliance.

To complement these assessments, Censinet Connect™ brings ongoing vendor oversight into focus.

Managing Vendor Risks with Censinet Connect™

Censinet Connect™ acts as a one-stop hub for overseeing vendor risks. It provides a clear, centralized view of vendor-related issues, Business Associate Agreements, and compliance status across your healthcare network. The platform’s dashboard delivers real-time insights, helping you spot potential breach risks before they become serious problems.

Collaboration is another key feature. Censinet Connect™ allows Governance, Risk, and Compliance (GRC) teams to work together more effectively by routing critical findings and tasks to the right people. This ensures timely action and keeps everyone aligned. Plus, it maintains detailed documentation, which is essential for proving compliance during audits or investigations.

Conclusion

To wrap up the discussion on vendor obligations and risk assessments, it’s clear that solid compliance strategies are essential for protecting protected health information (PHI) and avoiding hefty penalties. The HIPAA Breach Notification Rule makes one thing very clear: covered entities remain ultimately accountable for notifying patients and OCR, even when breaches occur through their business associates. With vendor-related incidents accounting for a large portion of major healthcare data breaches, keeping a close eye on third-party relationships is not just a good idea - it’s a necessity.

Covered entities must ensure vendors notify them of breaches within 60 days and keep thorough records of every decision to prove compliance. Timely and efficient risk assessments, breach detection, and reporting are crucial to meeting these strict deadlines. Additionally, any unauthorized use or disclosure of unsecured PHI is presumed to be a breach unless a documented risk assessment shows a low probability that the PHI was compromised. This makes detailed documentation and careful risk analysis absolutely vital.

Strong vendor risk management depends on having well-drafted Business Associate Agreements (BAAs), conducting regular assessments, and maintaining ongoing monitoring. Vendor-related breaches remain a major challenge in healthcare, highlighting the importance of securing the supply chain and maintaining vigilant oversight of all third-party partners. However, manual processes often fall short when it comes to keeping up with these demands.

This is where tools like Censinet RiskOps™ come into play. They simplify vendor risk assessments and monitoring, cutting down on administrative work while bolstering security. Similarly, Censinet Connect™ helps organizations maintain audit-ready documentation and spot potential breach risks before they spiral out of control.

FAQs

What responsibilities do business associates have under HIPAA when a data breach occurs?

Under HIPAA, business associates must notify the covered entity without unreasonable delay, and no later than 60 days after discovering a breach of unsecured protected health information (PHI). The covered entity is then responsible for notifying affected individuals and, for breaches involving 500 or more individuals, reporting to the Department of Health and Human Services (HHS) within 60 days of discovery. The Business Associate Agreement may delegate those notifications to the business associate.

Business associates are also tasked with performing a risk assessment to determine the severity of the breach. This step ensures that all reporting obligations are met and appropriate measures are taken to address the issue. Clear and timely communication plays a key role in staying compliant and safeguarding patient information.

How does the size of a healthcare data breach impact reporting timelines and requirements?

When it comes to healthcare data breaches, the size of the breach plays a crucial role in determining how and when it must be reported.

Breaches impacting 500 or more individuals come with stricter rules. These must be reported to the Department of Health and Human Services (HHS) within 60 days of discovering the breach, alongside the individual notifications. Media notice is also required within the same period when more than 500 residents of a single state or jurisdiction are affected.

On the other hand, breaches involving fewer than 500 individuals follow a different timeline. Reporting to HHS is required annually, with a deadline of 60 days after the end of the calendar year in which the breach was discovered. Affected individuals must still be notified within 60 days of discovery. Knowing and adhering to these timelines is essential to ensure compliance and steer clear of penalties.

How can healthcare organizations effectively manage cybersecurity risks with their vendors?

To tackle cybersecurity risks linked to vendors, begin with thorough risk assessments to pinpoint weaknesses in third-party systems. Make sure sensitive data is encrypted during transmission and while stored, and have well-defined incident response plans in place to handle breaches swiftly.

Conducting regular security audits is key to ensuring vendors stick to your cybersecurity standards. Also, include contractual obligations that require vendors to uphold robust security measures. In the event of a breach, notify affected individuals without unreasonable delay, and no later than 60 days after discovery, to stay aligned with regulatory requirements.