The Precautionary Principle in AI: When to Slow Down to Speed Up Safely

Post Summary

The precautionary principle in AI is about preventing harm before it happens. In healthcare, where patient safety and data security are critical, this means implementing safeguards before deploying AI systems. Key risks include data breaches, biased algorithms, and vulnerabilities in AI-powered medical devices. Ignoring these risks can lead to severe consequences, such as compromised patient care and loss of trust.

To address these challenges, healthcare organizations should:

- Conduct thorough risk assessments before deployment.

- Establish strong governance and oversight involving cross-functional teams.

- Use controlled pilots to test AI in low-risk environments.

- Implement continuous monitoring to track performance and security over time.

Tools like Censinet RiskOps™ help streamline risk management by automating assessments, monitoring threats, and ensuring compliance. By applying the precautionary principle, healthcare organizations can responsibly integrate AI while protecting patients and sensitive data.

Why Precaution Matters in Healthcare AI and Cybersecurity

Healthcare operates in an environment where even a small mistake can have life-altering consequences. When artificial intelligence (AI) is added to the mix, the stakes get even higher. Unlike industries where errors might cause financial loss or delays, failures in healthcare AI can directly jeopardize patient safety and compromise sensitive data.

Applied to healthcare, the precautionary principle means treating patient data with the utmost care. Medical records are among the most sensitive types of information, and AI systems that lack proper security measures become targets for exploitation.

Major AI Cybersecurity Risks in Healthcare

The integration of AI into healthcare systems brings a host of cybersecurity challenges that demand careful attention. These risks highlight how even small oversights can quickly escalate into serious problems.

- Data breaches: Patient data is a prime target for cyberattacks. Healthcare systems handle massive volumes of sensitive information, and without robust security measures, they are at risk of unauthorized access. Such breaches can lead to violations of regulations like HIPAA and cause psychological and reputational harm to patients [9][10][11][12].

- Algorithmic opacity: Many AI systems function as "black boxes", meaning their decision-making processes are difficult to understand. This lack of transparency complicates security audits and makes it harder to identify when a system has been compromised.

- AI-controlled device vulnerabilities: Weaknesses in AI-powered medical devices can pose immediate risks to patient safety. If exploited, these vulnerabilities could lead to life-threatening situations.

- Model bias: AI systems trained on biased data can produce discriminatory outcomes in diagnoses and treatment recommendations, further deepening healthcare inequities [1].

What Happens When Precaution Is Ignored

Failing to prioritize precaution can have severe consequences. Beyond regulatory fines, the damage to an organization’s reputation can be long-lasting. When patient data is exposed, trust erodes, and rebuilding it can take years. Patients may lose confidence in their providers, potentially seeking care elsewhere, which hurts both revenue and community health.

The risks to patient safety are even more alarming. Without proper safeguards, AI systems can misdiagnose conditions, suggest inappropriate treatments, or disrupt access to critical care services. These risks underscore the need for proactive measures to prevent harm before it happens.

How Precaution Fits Into Enterprise Risk Management

Addressing these risks requires embedding precaution into every layer of enterprise risk management. Healthcare organizations already conduct risk assessments for clinical operations, data security, and compliance. These practices must now expand to include the risks posed by AI technologies.

AI deployments should be treated with the same scrutiny as new pharmaceutical products. Just as clinical risk assessments are thorough and methodical, AI applications need comprehensive reviews to ensure safety and reliability [8]. Regular security evaluations, combined with board-level oversight of AI initiatives, can create clear accountability and reduce the likelihood of errors.

When to Slow Down: Identifying High-Risk AI Scenarios

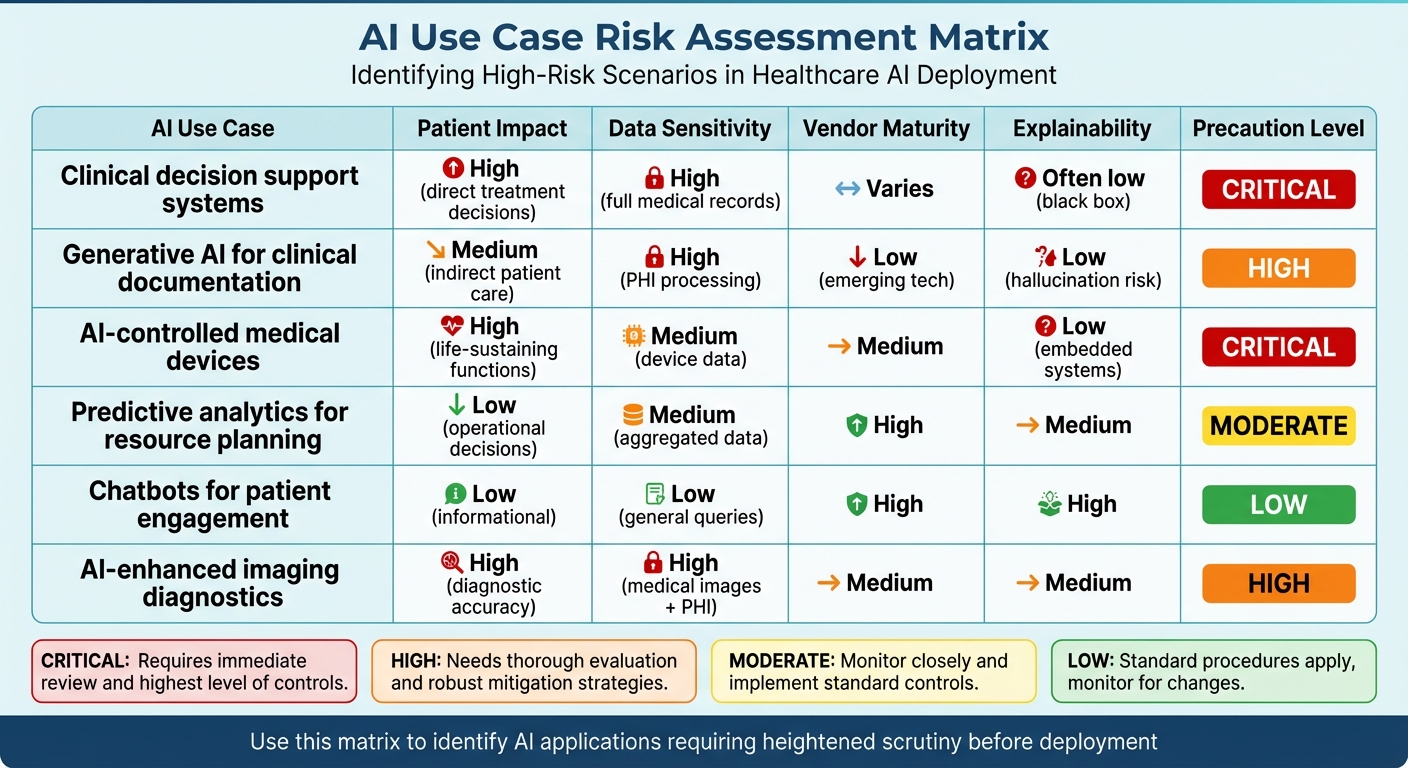

AI Use Case Risk Assessment Matrix for Healthcare

Not all AI applications carry the same level of risk. Applying the precautionary principle means pinpointing the healthcare scenarios in which AI deployment should proceed with caution. Healthcare organizations need a structured way to recognize high-risk situations, and the warning signs that an AI system may exceed acceptable risk levels, so they know when restraint is essential.

AI Use Cases That Demand Extra Vigilance

Some AI applications in healthcare require heightened scrutiny due to their potential effects on patient safety and data security. For instance, clinical decision support systems, which recommend diagnoses or treatment plans, must undergo thorough evaluation. Errors in these systems can directly influence patient outcomes, making rigorous vetting non-negotiable.

Generative AI tools handling protected health information (PHI) also present significant risks. These tools can generate inaccurate outputs or "hallucinations", potentially misrepresenting patient data. Moreover, they are susceptible to misuse, such as creating deepfakes [1][14]. Cybersecurity is another pressing concern: in the first quarter of 2023, healthcare organizations faced over 1,000 cyberattacks weekly - a 22% increase from the previous year. This underscores the importance of carefully assessing systems that process sensitive information.

AI-controlled medical devices, including pacemakers, insulin pumps, and imaging equipment, also require heightened caution. These devices are often networked, which exposes them to ransomware and Denial of Service (DoS) attacks. Because many of them support or monitor vital functions, a successful attack can translate directly into patient harm.

Warning Signs That Signal a Need to Pause

Certain red flags should immediately prompt a reevaluation of AI deployment. A major concern is a lack of explainability: when an AI system operates as a "black box", conducting security audits or identifying vulnerabilities becomes far more difficult [1][13].

Another critical warning sign is working with inadequately evaluated AI vendors. If a vendor cannot provide clear documentation of their security protocols or demonstrate compliance with regulatory standards, such as HIPAA, it’s wise to reconsider moving forward [2][14].

Outdated infrastructure poses another significant risk. AI systems running on obsolete software or hardware that no longer receives security updates expose exploitable vulnerabilities. Similarly, poorly secured interoperability between hospital systems can allow weaknesses to spread across an entire network [1].
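The red flags above can be turned into a simple go/no-go checklist that a review team fills in before deployment. The sketch below is illustrative only: the `DeploymentReview` fields and the `reasons_to_pause` helper are hypothetical names, and a real review would gather this evidence from vendor documentation and internal testing rather than from hand-set booleans.

```python
from dataclasses import dataclass


@dataclass
class DeploymentReview:
    """Answers gathered during a pre-deployment review (hypothetical fields)."""
    system_name: str
    outputs_explainable: bool    # can reviewers trace how outputs are produced?
    vendor_security_docs: bool   # vendor documented its security protocols
    vendor_hipaa_evidence: bool  # vendor demonstrated HIPAA compliance
    software_supported: bool     # every component still receives security updates
    interfaces_secured: bool     # interoperability links are secured


def reasons_to_pause(review: DeploymentReview) -> list[str]:
    """Return every red flag present; an empty list means this checklist found none."""
    checks = [
        (review.outputs_explainable, "lack of explainability"),
        (review.vendor_security_docs, "vendor cannot document security protocols"),
        (review.vendor_hipaa_evidence, "vendor cannot demonstrate HIPAA compliance"),
        (review.software_supported, "relies on software or hardware without security updates"),
        (review.interfaces_secured, "poorly secured interoperability with other systems"),
    ]
    # Keep the reason for each check that did not pass.
    return [reason for passed, reason in checks if not passed]


review = DeploymentReview(
    system_name="triage-model",
    outputs_explainable=False,
    vendor_security_docs=True,
    vendor_hipaa_evidence=True,
    software_supported=False,
    interfaces_secured=True,
)
flags = reasons_to_pause(review)
if flags:
    print(f"Pause {review.system_name}: " + "; ".join(flags))
```

Returning the list of reasons, rather than a single yes/no, gives the governance team a record of why a deployment was paused.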

Matching AI Use Cases to Risk Triggers

To clarify how these risks apply, the following table maps specific AI use cases to potential risk triggers. This framework highlights where extra caution is warranted, aligning use cases with key concerns discussed earlier.

| AI Use Case | Patient Impact | Data Sensitivity | Vendor Maturity | Explainability | Precaution Level |
|---|---|---|---|---|---|
| Clinical decision support systems | High (direct treatment decisions) | High (full medical records) | Varies | Often low (black box) | Critical |
| Generative AI for clinical documentation | Medium (indirect patient care) | High (PHI processing) | Low (emerging tech) | Low (hallucination risk) | High |
| AI-controlled medical devices | High (life-sustaining functions) | Medium (device data) | Medium | Low (embedded systems) | Critical |
| Predictive analytics for resource planning | Low (operational decisions) | Medium (aggregated data) | High | Medium | Moderate |
| Chatbots for patient engagement | Low (informational) | Low (general queries) | High | High | Low |
| AI-enhanced imaging diagnostics | High (diagnostic accuracy) | High (medical images + PHI) | Medium | Medium | High |

This framework serves as a guide for identifying where the precautionary principle is most crucial. AI systems with high patient impact, those processing sensitive PHI, and those offering limited explainability demand the strictest oversight before deployment [13]. Additionally, dynamic AI algorithms that evolve over time require continuous monitoring and updates to maintain safety standards [1].
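The risk table gives no explicit formula, but its precaution levels can be reproduced by a short rule, which makes the reasoning easier to apply to new use cases. The sketch below is one hypothetical rule that matches every row, not the author's scoring method: Critical when patient impact is high and explainability is low, High when patient impact or data sensitivity is high, Moderate when any factor carries some risk, and Low otherwise. It treats a "Varies" vendor maturity as the least mature, in keeping with the precautionary default.

```python
# Ordinal scale for the table's ratings.
LEVELS = {"Low": 0, "Medium": 1, "High": 2}


def precaution_level(patient_impact: str, data_sensitivity: str,
                     vendor_maturity: str, explainability: str) -> str:
    """Hypothetical rule that reproduces the precaution levels in the risk table."""
    impact = LEVELS[patient_impact]
    data = LEVELS[data_sensitivity]
    # Higher vendor maturity and explainability mean LOWER risk, so invert them.
    # Unknown ratings such as "Varies" default to the least mature (riskiest) value.
    vendor_risk = 2 - LEVELS.get(vendor_maturity, 0)
    opacity = 2 - LEVELS[explainability]

    if impact == 2 and opacity == 2:
        return "Critical"
    if impact == 2 or data == 2:
        return "High"
    if max(impact, data, vendor_risk, opacity) >= 1:
        return "Moderate"
    return "Low"


# Rows from the table: use case, impact, sensitivity, vendor maturity, explainability.
ROWS = [
    ("Clinical decision support systems", "High", "High", "Varies", "Low"),
    ("Generative AI for clinical documentation", "Medium", "High", "Low", "Low"),
    ("AI-controlled medical devices", "High", "Medium", "Medium", "Low"),
    ("Predictive analytics for resource planning", "Low", "Medium", "High", "Medium"),
    ("Chatbots for patient engagement", "Low", "Low", "High", "High"),
    ("AI-enhanced imaging diagnostics", "High", "High", "Medium", "Medium"),
]
for name, *factors in ROWS:
    print(f"{name}: {precaution_level(*factors)}")
```

Because vendor maturity only affects the Moderate/Low boundary in this rule, an organization that weighs vendor risk more heavily would need to adjust the thresholds accordingly.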

How to Apply the Precautionary Principle in AI Deployments

To ensure patient safety and data security, healthcare organizations must embed safeguards at every stage of AI deployment. From initial risk assessment to ongoing monitoring, a structured approach transforms precaution into actionable steps that protect both patients and sensitive information throughout the AI lifecycle.

Pre-Deployment Risk Assessment and Threat Modeling

Start with a detailed risk assessment to pinpoint vulnerabilities, validate model performance through testing, and address potential biases. Establish clear governance structures that outline clinical oversight responsibilities across every phase of the AI lifecycle [2][5].

For high-risk applications - like autonomous surgical tools - use a risk-based framework. This means incorporating secure-by-design principles from the start, rather than relying on retrofitted cybersecurity measures [2][7].

Keep an updated inventory of all AI systems and classify each by its level of autonomy [2]. Tools like Censinet RiskOps™ can streamline this process by automating risk assessments and centralizing essential documentation.

Once risks are clearly identified and classified, the focus shifts to governance and piloting to ensure safe and effective AI adoption.

Governance and Oversight for Safe AI Adoption

Create cross-functional AI governance committees that include representatives from executive leadership, regulatory affairs, IT, cybersecurity, clinical teams, and safety reporting, so that every stakeholder perspective is represented [5].

For high-risk AI applications, implement human-in-the-loop processes. These safeguards allow experts to verify AI outputs and minimize the risks of automation bias [5][6][3][7].
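A human-in-the-loop safeguard can be as simple as a release gate: outputs in high-risk tiers are held until a named reviewer approves them. The sketch below assumes that each AI output already carries a risk tier (here borrowing the precaution levels from the risk table earlier in this post) and that the governance committee decides which tiers require sign-off. `AIOutput`, `Decision`, `REVIEW_REQUIRED`, and `release` are hypothetical names, not part of any specific product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AIOutput:
    case_id: str
    recommendation: str
    risk_tier: str  # e.g. "Critical", "High", "Moderate", "Low"


@dataclass
class Decision:
    case_id: str
    released: bool
    recommendation: Optional[str]  # None while the output is held
    reviewer: Optional[str]        # who approved it, kept for the audit trail


# Assumed policy: tiers that always require clinician sign-off.
REVIEW_REQUIRED = {"Critical", "High"}


def release(output: AIOutput, reviewer: Optional[str] = None,
            approved: bool = False) -> Decision:
    """Release an AI output only if its tier allows it or a named reviewer approved it."""
    if output.risk_tier not in REVIEW_REQUIRED:
        return Decision(output.case_id, True, output.recommendation, None)
    if reviewer and approved:
        return Decision(output.case_id, True, output.recommendation, reviewer)
    # Held: the recommendation is withheld until a human reviews it.
    return Decision(output.case_id, False, None, reviewer)


out = AIOutput("case-17", "order follow-up imaging", "Critical")
print(release(out))                                   # held for review
print(release(out, reviewer="dr_lee", approved=True))  # released with sign-off
```

Recording the reviewer on every released high-risk output also gives auditors a way to check that the safeguard is actually being used rather than rubber-stamped.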

Align governance practices with existing frameworks such as HIPAA, FDA regulations, and the NIST AI Risk Management Framework [2][5]. Additionally, keep an eye on forthcoming resources like the Health Sector Coordinating Council's AI Governance Maturity Model and AI Cyber Resilience Playbooks, expected in early 2026 [2].

Strong governance sets the stage for controlled piloting and continuous monitoring, which are essential for adaptive risk management.

Controlled Piloting and Continuous Monitoring

Begin with controlled pilot programs to test AI systems in limited, low-risk environments. This approach helps identify and address issues early, minimizing potential impacts.

Adopt continuous monitoring to track security, data quality, and performance metrics over time [2][5][6]. Notably, only 41% of organizations strongly believe their current cybersecurity measures adequately protect Generative AI applications, underscoring the importance of robust monitoring [6].
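One common way to monitor performance over time is to compare a rolling metric against the baseline measured during validation and raise an alert when it drifts below an agreed tolerance. The sketch below tracks the fraction of correct predictions over the most recent cases. It assumes that ground-truth outcomes (for example, confirmed diagnoses) eventually become available, and the `baseline`, `tolerance`, and `window` values are hypothetical; security and data-quality metrics could be tracked the same way.

```python
from collections import deque


class RollingMonitor:
    """Track the fraction of correct predictions over a sliding window and flag degradation."""

    def __init__(self, baseline: float, tolerance: float, window: int = 200):
        self.baseline = baseline    # accuracy measured during validation (assumed)
        self.tolerance = tolerance  # acceptable drop before alerting (assumed)
        self.results = deque(maxlen=window)  # keeps only the most recent outcomes

    def record(self, correct: bool) -> None:
        self.results.append(1 if correct else 0)

    def current(self) -> float:
        return sum(self.results) / len(self.results) if self.results else float("nan")

    def degraded(self) -> bool:
        # Only judge once the window is full, to avoid alarms on tiny samples.
        if len(self.results) < self.results.maxlen:
            return False
        return self.current() < self.baseline - self.tolerance


monitor = RollingMonitor(baseline=0.90, tolerance=0.05, window=100)
for i in range(100):
    monitor.record(i % 5 != 0)  # simulate 80% correct predictions
print(monitor.current(), monitor.degraded())  # 0.8 True
```

A flagged degradation would then feed the escalation and incident response steps described below, rather than triggering automatic changes to the model.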

Develop AI-specific incident response playbooks. These should include protocols for threat intelligence, rapid containment, and secure model backups [2]. Regular audits are critical to flagging new vulnerabilities as AI systems evolve. Tools like Censinet RiskOps™ can serve as a centralized hub for real-time data aggregation and quick escalation of critical findings for review.


Tools and Frameworks for AI Cybersecurity Precaution

Key Standards and Frameworks

Healthcare organizations rely on structured frameworks to tackle AI cybersecurity risks effectively. The NIST AI Risk Management Framework and HIPAA guidelines provide essential tools for identifying, evaluating, and addressing risks tied to AI systems.

In November 2025, the Health Sector Coordinating Council (HSCC) unveiled early previews of its 2026 guidance for managing AI cybersecurity risks. This guidance, developed by an AI Cybersecurity Task Group comprising 115 healthcare organizations, revolves around five critical areas: Education and Enablement, Cyber Operations and Defense, Governance, Secure by Design Medical, and Third-Party AI Risk and Supply Chain Transparency. These resources will be released in stages starting in January 2026 [2].

Additionally, in 2024, the Joint Commission and the Coalition for Health AI (CHAI) released guidance titled The Responsible Use of AI in Healthcare. This document outlines best practices for building governance frameworks, bolstering data security, monitoring quality, and assessing risks and biases [15].

With these frameworks in mind, platforms like Censinet demonstrate how to translate these principles into actionable controls.

Using Censinet for AI Risk Management

Censinet RiskOps™ streamlines AI risk management throughout the system's lifecycle. It automates risk evaluations with built-in evidence validation, maintains detailed inventories of AI systems, and offers real-time insights into emerging threats.

The Censinet AI™ tool accelerates third-party risk assessments by automating vendor questionnaires, summarizing evidence, and analyzing product integrations along with fourth-party risks. It also generates comprehensive risk reports. This approach blends automation with human oversight, ensuring critical decisions are handled appropriately while significantly cutting down assessment time.

Think of it as air traffic control for AI risk: the platform directs findings to the right stakeholders - like AI governance committees - so that the most relevant teams address specific issues promptly and effectively.

Connecting Precautionary Principles to Platform Controls

Censinet integrates precautionary principles into its platform controls, ensuring risks are managed proactively and transparently. The table below illustrates how these principles are applied through specific features:

| Precautionary Principle | Platform Control | How It Works |
|---|---|---|
| Early Risk Identification | Automated Risk Assessments | Uses standardized questionnaires and evidence validation to detect vulnerabilities pre-deployment. |
| Proportional Response | Risk-Based Routing | Escalates critical findings to the appropriate governance teams for swift action. |
| Continuous Monitoring | AI Risk Dashboard | Tracks security, performance, and compliance metrics in real time across AI systems. |
| Transparency and Documentation | Centralized Policy Hub | Stores all AI-related policies, vendor documents, and assessment outcomes in one location. |
| Human Oversight | Human-in-the-Loop Workflows | Ensures human review and approval at key decision points through configurable rules. |
| Third-Party Accountability | Vendor Risk Management | Automates vendor assessments, capturing integration details, fourth-party risks, and supply chain vulnerabilities. |

Conclusion: Balancing Innovation and Safety in AI Adoption

Healthcare organizations are walking a tightrope: embracing AI's transformative potential while safeguarding patient safety and data security. The precautionary principle provides a thoughtful way forward - not by stalling progress, but by ensuring that advancements rest on a foundation of responsibility and care.

To address the unique challenges AI brings, healthcare organizations need to rethink how they manage risks. Traditional models for clinical errors often fall short when it comes to AI-specific issues like algorithmic bias and lack of transparency [1]. This means moving from a reactive stance to a proactive one. Leaders in the field must pinpoint high-risk situations early, establish clear governance structures, and maintain consistent oversight. This proactive strategy aligns with the HHS AI Strategy, which emphasizes governance, workforce training, and the ethical integration of AI into healthcare systems [4][16].

"AI is a tool to catalyze progress. This Strategy is about harnessing AI to empower our workforce and drive innovation across the Department."

– Clark Minor, Acting Chief Artificial Intelligence Officer, HHS [4]

Tools like Censinet RiskOps™ are stepping up to meet these needs, automating risk assessments, flagging critical issues, and offering real-time insights into vulnerabilities. With HHS reporting a surge in active AI use cases [16], scalable solutions like these are becoming essential for healthcare organizations striving to manage risks effectively while adapting to rapid advancements in AI.

FAQs

How does the precautionary principle reduce risks in AI healthcare applications?

The precautionary principle plays a key role in managing risks within AI healthcare by promoting a cautious and thoughtful implementation process. It focuses on conducting detailed risk assessments, maintaining transparency, and establishing protective measures before AI systems are put into use.

This method helps healthcare providers address challenges such as privacy violations, safety risks, and algorithmic bias. By placing patient safety and data security at the forefront, the precautionary principle ensures that AI advancements are introduced responsibly and effectively into healthcare practices.

What are the key signs that AI implementation in healthcare should be put on hold?

If you encounter inconsistent, inaccurate, or biased results while using AI in healthcare, it's a clear signal to hit pause. Other warning signs include a lack of transparency in how decisions are made or any unexpected system behavior. These issues can compromise the integrity of the system.

Even more critical are errors that could threaten patient safety or any indications of discriminatory practices in decision-making. These are serious concerns that demand immediate attention.

Taking the time to thoroughly evaluate these problems is essential. It helps avoid potential harm, preserves trust, and ensures that AI systems meet ethical and safety standards before proceeding.

Why is it important to continuously monitor AI systems in healthcare?

Keeping a constant eye on AI systems in healthcare is crucial to ensure they function safely and efficiently. This ongoing oversight helps catch potential problems - like errors, declining model performance, or security gaps - before they can pose risks to patient safety or compromise data integrity.

By closely monitoring these systems, healthcare providers can stay aligned with regulatory requirements, safeguard sensitive patient information, and respond to new threats or shifts in system behavior. This proactive vigilance not only protects patients but also strengthens confidence in AI-powered healthcare solutions.
