Protecting Digital Health: Cybersecurity Strategies for Medical AI Platforms

Post Summary

Cybersecurity is essential to protect sensitive patient data, ensure the safe operation of medical AI systems, and maintain trust in healthcare systems.

Risks include data breaches, ransomware, algorithm manipulation, and vulnerabilities in AI-powered medical devices.

Medical AI relies on large datasets and interconnected systems, making it a prime target for cyberattacks and increasing the complexity of securing healthcare systems.

Organizations can implement encryption, zero-trust architectures, regular audits, and AI-driven threat detection to mitigate risks.

Regulations like HIPAA and emerging AI-specific guidelines set requirements for protecting patient data and establish accountability for cybersecurity practices.

The future includes AI-driven security solutions, stronger regulations, and a focus on proactive risk management to protect healthcare systems.

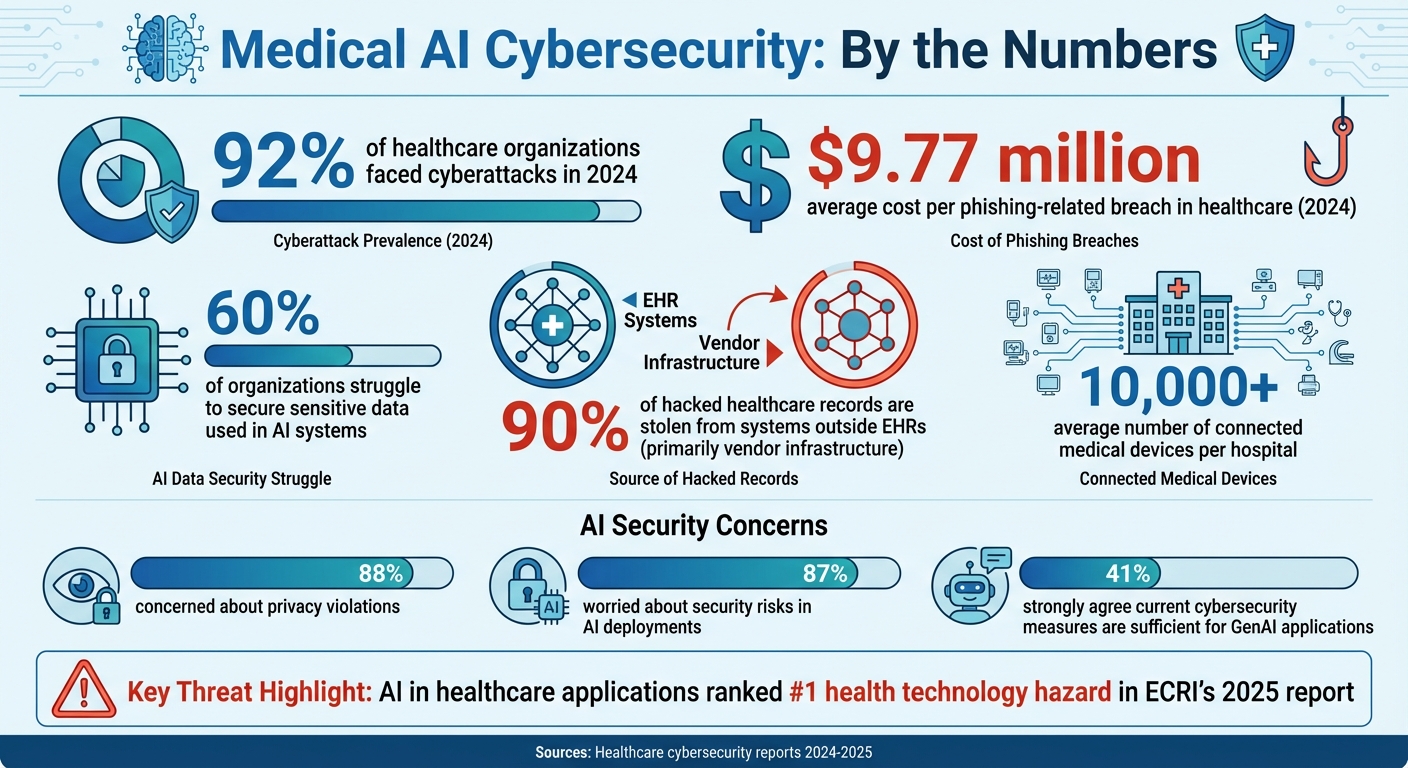

Medical AI platforms are reshaping healthcare by improving diagnostics, streamlining workflows, and reducing costs. But these advancements come with growing cybersecurity risks. In 2024, 92% of healthcare organizations faced cyberattacks, exposing millions of patient records. AI systems are particularly vulnerable to data poisoning, adversarial attacks, and supply chain breaches, which can lead to harmful medical errors.

To secure these platforms, organizations should:

- Implement secure-by-design architectures (e.g., encryption, zero-trust models).

- Strengthen governance and accountability with clear policies and compliance with regulations like HIPAA and FDA guidelines.

- Address third-party and supply chain risks through vendor assessments and continuous monitoring.

Tools like Censinet RiskOps™ simplify risk management by automating assessments and providing real-time oversight. As AI transforms healthcare, robust cybersecurity measures are essential to protect patients and systems from evolving threats.

Cybersecurity Threats Facing Medical AI

Figure: Medical AI Cybersecurity Threats and Costs in Healthcare, 2024-2025

Medical AI systems face a range of cybersecurity challenges throughout their lifecycle - from the early stages of gathering data and training models to their active use in clinical environments. Recognizing these threats is a critical step in creating safeguards that protect healthcare providers and the patients who rely on their care. Let’s break down the vulnerabilities in the AI lifecycle and examine the increasingly sophisticated methods attackers use.

Vulnerabilities Across the AI Lifecycle

The journey of an AI system from development to deployment is riddled with potential weak points. During the data acquisition and training phase, attackers can introduce corrupted or misleading data, a tactic known as data poisoning. This can skew the system's learning process, leading to errors in clinical decisions that could have serious consequences. Once the AI system is operational, poor deployment practices and unsecured interfaces can open the door to further attacks.

Adding to the complexity, the opaque nature of many AI models makes it difficult to assess their security. This lack of transparency can allow vulnerabilities to persist unnoticed as the systems evolve.

Cyber Threats Targeting AI Systems

In addition to built-in vulnerabilities, cybercriminals are actively exploiting these flaws. Adversarial attacks, for instance, manipulate input data to mislead AI systems. Imagine an imaging system that overlooks a tumor because of subtle pixel modifications - such attacks can have life-altering impacts. Similarly, natural language AI systems are vulnerable to prompt injection attacks, where attackers trick the system into divulging sensitive information or making harmful recommendations.
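To make the idea of "subtle pixel modifications" concrete, here is a minimal sketch of the fast gradient sign method (FGSM) against a toy linear classifier rather than a real imaging model. The weights, bias, and 1,000-feature "image" are invented for illustration. For a linear score the gradient with respect to the input is simply the weight vector, so nudging every feature by a small epsilon against the sign of its weight lowers the score by epsilon times the sum of the absolute weights.

```python
def score(weights, bias, x):
    """Linear score; in this toy setup a positive value means 'tumor flagged'."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_linear(weights, x, epsilon):
    """Move each feature by at most epsilon in the direction that lowers the score.

    The gradient of a linear score with respect to x is the weight vector,
    so the FGSM step is x - epsilon * sign(w), clipped to the [0, 1] pixel range.
    """
    return [min(1.0, max(0.0, xi - epsilon * sign(w))) for w, xi in zip(weights, x)]


# Hypothetical model and input, chosen only to illustrate the effect.
weights = [0.01 if i % 2 == 0 else -0.005 for i in range(1000)]
bias = -1.2
x = [0.5] * 1000

x_adv = fgsm_linear(weights, x, epsilon=0.02)
print(score(weights, bias, x) > 0)      # True: flagged
print(score(weights, bias, x_adv) > 0)  # False: missed
```

No single feature moves by more than 2% of its range, yet the accumulated shift flips the decision. FGSM was originally described for neural networks, where the input gradient is computed by backpropagation instead of being read directly off the weights.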

These issues are compounded by the fact that 60% of organizations struggle to secure the sensitive data used in AI systems [5][7]. The financial toll is staggering too. In 2024, phishing-related breaches in the healthcare sector cost an average of $9.77 million per incident [7].

AI-Powered Cyberattacks

The rise of AI has also armed cybercriminals with new tools to launch advanced attacks. Techniques like deepfake-powered phishing, data poisoning, and zero-day exploits are becoming more common [6]. For example, AI-generated deepfakes can convincingly mimic physicians or executives, tricking employees into granting unauthorized access to critical systems. Machine learning algorithms can also identify vulnerabilities across networks at speeds far beyond human capabilities.

Concern about AI risk is now widespread: ECRI's 2025 report ranks risks from AI-enabled health technologies as the top health technology hazard [8]. AI-powered attacks are also highly scalable - a single malicious algorithm can churn out thousands of personalized phishing emails in minutes. Addressing these threats requires more than traditional cybersecurity measures; it demands defenses tailored to the unique risks posed by AI-enhanced attacks.

Building a Cybersecurity Framework for Medical AI

To safeguard medical AI systems, organizations must adopt a structured cybersecurity framework that emphasizes strong governance, adherence to regulations, and ongoing risk management.

Governance and Accountability Structures

Securing AI in healthcare begins with defining clear roles and responsibilities. Establishing cross-functional AI governance committees is a crucial first step. These committees should bring together clinical leaders, IT security experts, compliance officers, and data scientists to oversee the entire AI lifecycle - from development and deployment to continuous monitoring.

Policies must outline how AI models are approved, updated, and retired. For instance, organizations should document model management procedures, set performance benchmarks, and establish protocols for addressing unexpected outcomes. Aligning these governance practices with legal requirements and established frameworks like the NIST AI Risk Management Framework helps close accountability gaps and reduce vulnerabilities. This foundation strengthens security and supports compliance with regulatory standards.
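Approval, update, and retirement policies like these can be encoded so that tooling enforces them rather than relying on memory. The sketch below is one hypothetical way to do it: a model record whose lifecycle state can only move along permitted transitions, with certain transitions requiring sign-off from named committee roles. The state names, roles, and the `ModelRecord` class are assumptions for illustration, not part of the NIST AI RMF or any specific product.

```python
from dataclasses import dataclass, field

# Hypothetical lifecycle states and the transitions a governance policy permits.
ALLOWED_TRANSITIONS = {
    "proposed": {"validated", "retired"},
    "validated": {"approved", "proposed", "retired"},
    "approved": {"deployed", "retired"},
    "deployed": {"suspended", "retired"},
    "suspended": {"deployed", "retired"},
    "retired": set(),
}

# Transitions that need sign-off from specific (assumed) committee roles.
REQUIRED_SIGNOFFS = {
    ("validated", "approved"): {"clinical_lead", "security", "compliance"},
    ("suspended", "deployed"): {"clinical_lead", "security"},
}


@dataclass
class ModelRecord:
    name: str
    version: str
    state: str = "proposed"
    history: list = field(default_factory=list)

    def transition(self, new_state, signoffs=frozenset(), reason=""):
        """Move to new_state if the policy allows it, recording who approved and why."""
        if new_state not in ALLOWED_TRANSITIONS[self.state]:
            raise ValueError(f"{self.state} -> {new_state} is not permitted")
        missing = REQUIRED_SIGNOFFS.get((self.state, new_state), set()) - set(signoffs)
        if missing:
            raise PermissionError(f"missing sign-off from: {sorted(missing)}")
        self.history.append((self.state, new_state, sorted(signoffs), reason))
        self.state = new_state


# Usage with a hypothetical model.
model = ModelRecord("sepsis-risk", "2.1.0")
model.transition("validated", reason="passed clinical and adversarial test suites")
model.transition("approved", signoffs={"clinical_lead", "security", "compliance"})
model.transition("deployed")
model.transition("suspended", reason="unexpected alert rate")
print(model.state)  # suspended
```

The `history` list doubles as an accountability record: every state change carries the approvers and the stated reason.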

Meeting U.S. Regulatory and Privacy Standards

Medical AI platforms must adhere to regulations designed to protect patient health information. For example, HIPAA's Security Rule calls for safeguards such as encryption, access controls, and audit controls to protect the confidentiality and integrity of Protected Health Information (PHI) [9]. AI systems that support clinical decision-making may also be regulated by the FDA as medical devices, in which case they must meet FDA requirements, including cybersecurity expectations [9]. Building these requirements into day-to-day risk management, rather than treating them as a separate checklist, helps organizations stay ahead of potential threats.
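Audit trails are only useful if they cannot be quietly edited. A minimal sketch, assuming a simple in-memory log: each entry stores the SHA-256 hash of the previous entry, so altering any past record breaks the chain on verification. The `AuditLog` class and its field names are hypothetical; HIPAA requires audit controls but does not prescribe this design.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only log in which each entry commits to the hash of the one before it."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(entry):
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def append(self, user, action, resource):
        entry = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "resource": resource,
            "prev": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash and check each link back to the genesis value."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append("dr.lee", "read", "patient/123/imaging")
log.append("svc-inference", "write", "patient/123/risk-score")
print(log.verify())  # True
log.entries[0]["user"] = "someone-else"  # tamper with history
print(log.verify())  # False
```

A hash chain detects tampering only if the most recent hash is also stored somewhere an attacker cannot reach, such as a separate write-once system; otherwise the whole chain could simply be recomputed.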

Using Censinet RiskOps™ for AI Security

Managing AI security in a complex ecosystem of vendors and regulatory requirements can be challenging. Censinet RiskOps™ simplifies this process by providing a centralized platform for AI risk management. It consolidates policies, assessments, and compliance documentation into one system, enabling real-time coordination. The platform ensures that critical findings are routed to the right stakeholders and supports governance committees with timely reviews and decision-making.

Censinet AI™ enhances efficiency by automating vendor assessments, summarizing key evidence, and capturing integration details. Its configurable rules and oversight processes maintain human involvement while helping teams benchmark AI security against industry standards. With an intuitive risk dashboard, organizations can streamline compliance reporting and gain a complete view of their AI security landscape.

Secure Technical Architectures for Medical AI

In the realm of medical AI, cybersecurity isn't just an add-on; it's a fundamental requirement. Modern healthcare systems face increasingly sophisticated cyber threats, and with hospitals managing an average of over 10,000 connected medical devices, the potential attack surface is massive [4]. To protect sensitive patient data and ensure reliable outcomes, secure technical architectures must be built with security woven into every layer. This "secure-by-design" approach integrates protection mechanisms throughout the AI lifecycle, addressing vulnerabilities proactively rather than reacting after an issue arises. It complements the risk management strategies discussed earlier, providing a solid foundation for resilient AI systems.

Secure-by-Design Architecture

One key strategy for secure architectures is network segmentation, which places critical AI systems and sensitive data in separate zones. This limits attackers' lateral movement if they breach initial defenses [4]. Microsegmentation goes a step further, drawing finer security boundaries around individual AI workloads and data repositories. This granularity matters because even small, unauthorized changes to training data or model files can lead to serious clinical errors.
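Segmentation ultimately reduces to a default-deny rule: traffic between zones is blocked unless a flow is explicitly allowed. The sketch below expresses that rule as data, assuming hypothetical zone names and a single HTTPS port; in practice the same policy would live in firewall rules or service mesh configuration rather than application code.

```python
# Hypothetical zones; every permitted (source, destination, port) flow is listed explicitly.
ALLOWED_FLOWS = {
    ("clinical_workstations", "inference_api", 443),
    ("inference_api", "model_store", 443),
    ("training_cluster", "deidentified_data_lake", 443),
    ("training_cluster", "model_store", 443),
}


def is_flow_allowed(src_zone, dst_zone, port):
    """Default deny: anything not on the allowlist is blocked."""
    return (src_zone, dst_zone, port) in ALLOWED_FLOWS


def find_violations(observed_flows):
    """Return observed flows that the segmentation policy does not permit."""
    return [flow for flow in observed_flows if not is_flow_allowed(*flow)]


observed = [
    ("clinical_workstations", "inference_api", 443),
    ("clinical_workstations", "model_store", 443),     # workstation pulling model files directly
    ("inference_api", "deidentified_data_lake", 443),  # inference tier reaching training data
]
for flow in find_violations(observed):
    print("blocked:", flow)
```

Keeping the policy as explicit data also makes it reviewable: the allowlist documents which AI components are supposed to talk to each other.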

Encryption plays a central role in protecting electronic protected health information (ePHI), both at rest and in transit. This includes encrypting AI model parameters, training datasets, and inference results as they move between systems. HIPAA compliance programs pair encryption with multi-factor authentication (MFA) and role-based access controls so that only authorized personnel can reach sensitive information [10].
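The role-based access and MFA controls described above can be sketched as a single authorization check. This assumes hypothetical roles and permission strings, and treats any permission that exposes ePHI as requiring a completed MFA challenge; a real deployment would delegate this to an identity provider rather than hand-rolled code.

```python
# Hypothetical role-to-permission mapping: each role gets only what it needs.
ROLE_PERMISSIONS = {
    "radiologist": {"imaging:read", "ai_result:read"},
    "data_scientist": {"deidentified_data:read", "model:train"},
    "ml_engineer": {"model:read", "model:deploy"},
}

# Permissions that expose ePHI and therefore require a completed MFA challenge.
EPHI_PERMISSIONS = {"imaging:read", "ai_result:read"}


def authorize(roles, permission, mfa_verified):
    """Grant access only if a role allows it and, for ePHI, MFA has been completed."""
    granted = set().union(*(ROLE_PERMISSIONS.get(r, set()) for r in roles))
    if permission not in granted:
        return False
    if permission in EPHI_PERMISSIONS and not mfa_verified:
        return False
    return True


print(authorize({"radiologist"}, "imaging:read", mfa_verified=True))     # True
print(authorize({"radiologist"}, "imaging:read", mfa_verified=False))    # False
print(authorize({"data_scientist"}, "imaging:read", mfa_verified=True))  # False
```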

Adopting a zero-trust architecture means no user or device is automatically trusted. Every access attempt is verified, and permissions are limited strictly to what is necessary. This aligns with CISA's "Secure by Design" initiative, which emphasizes embedding cybersecurity into the core of technology from the very beginning [12].
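Zero trust means every request is evaluated on its own merits rather than trusted because it comes from inside the network. A minimal sketch using only Python's standard library: each request carries a short-lived token signed with HMAC-SHA256, and the server checks the signature, the expiry, the device's compliance status, and whether the requested scope was granted. The token format, `SECRET`, and device IDs are assumptions; production systems would normally use a standard such as OAuth 2.0 with a vetted token library and managed keys.

```python
import base64
import hashlib
import hmac
import json
import time

SECRET = b"demo-only-secret"  # assumption: a real system would use a managed, rotated key


def issue_token(subject, scopes, device_id, ttl_s=300, now=None):
    """Issue a short-lived token binding a user, a device, and specific scopes."""
    now = time.time() if now is None else now
    claims = {"sub": subject, "scopes": sorted(scopes), "dev": device_id, "exp": now + ttl_s}
    body = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode()).decode()
    sig = hmac.new(SECRET, body.encode(), hashlib.sha256).hexdigest()
    return f"{body}.{sig}"


def verify_request(token, required_scope, compliant_devices, now=None):
    """Check every request: signature, expiry, device posture, and least-privilege scope."""
    now = time.time() if now is None else now
    body, _, sig = token.rpartition(".")
    expected = hmac.new(SECRET, body.encode(), hashlib.sha256).hexdigest()
    if not body or not hmac.compare_digest(sig.encode(), expected.encode()):
        return False
    claims = json.loads(base64.urlsafe_b64decode(body))
    return (
        claims["exp"] > now
        and claims["dev"] in compliant_devices
        and required_scope in claims["scopes"]
    )


token = issue_token("dr.lee", {"ai_result:read"}, "ws-042", now=1000)
print(verify_request(token, "ai_result:read", {"ws-042"}, now=1100))  # True
print(verify_request(token, "model:deploy", {"ws-042"}, now=1100))    # False: scope not granted
print(verify_request(token, "ai_result:read", set(), now=1100))       # False: device not compliant
print(verify_request(token, "ai_result:read", {"ws-042"}, now=1400))  # False: token expired
```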

Protecting Data and AI Models

To safeguard AI systems, data provenance and integrity controls are essential. These measures verify the authenticity of training data with cryptographic methods such as hashes and signatures, maintain detailed audit trails for any data changes, and keep training environments separate from production systems. Retraining on verified datasets, combined with validation before release, helps identify and replace poisoned models before they are deployed in clinical settings [4].
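Provenance checks can start with something as simple as a hash manifest. A minimal sketch, assuming the training data lives in a directory of files: record a SHA-256 digest for every file when the dataset is approved, then re-check before each training run. The function names and the label-flip scenario are illustrative.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large datasets do not need to fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(data_dir):
    """Map each file's relative path to its SHA-256 digest."""
    root = Path(data_dir)
    return {
        p.relative_to(root).as_posix(): sha256_file(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify_manifest(data_dir, manifest):
    """Report files added, removed, or modified since the manifest was recorded."""
    current = build_manifest(data_dir)
    return {
        "added": sorted(set(current) - set(manifest)),
        "removed": sorted(set(manifest) - set(current)),
        "modified": sorted(k for k in set(current) & set(manifest) if current[k] != manifest[k]),
    }


with tempfile.TemporaryDirectory() as d:
    Path(d, "labels.csv").write_text("id,label\n1,benign\n")
    manifest = build_manifest(d)  # recorded when the dataset was approved
    Path(d, "labels.csv").write_text("id,label\n1,malignant\n")  # simulated label flip
    changes = verify_manifest(d, manifest)

print(changes)  # {'added': [], 'removed': [], 'modified': ['labels.csv']}
```

A manifest only helps if it is itself protected, for example by signing it or storing it outside the training environment.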

Robust validation frameworks are equally important. They test AI models not just for clinical accuracy but also for resilience to adversarial attacks and errors, and simulating such attacks during development can reveal vulnerabilities early. Training on properly de-identified data supports HIPAA compliance, while techniques like differential privacy add calibrated statistical noise, either to query results or during training, so that little can be learned about any individual patient. This protection comes at some cost in model accuracy that must be balanced against the privacy gained.
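Differential privacy is easiest to see on a single aggregate query. The sketch below applies the Laplace mechanism to a patient count: because adding or removing one patient changes a count by at most 1, Laplace noise with scale 1/epsilon makes the released count epsilon-differentially private. The records and predicate are invented. Training a model with differential privacy uses related but more involved techniques such as DP-SGD, and production code should use a vetted DP library, since naive floating-point noise sampling like this has known weaknesses.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace(0, scale) distribution.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def dp_count(records, predicate, epsilon, rng=None):
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    The sensitivity of a count is 1, so the noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)


# Hypothetical cohort: 60 patients, every third one flagged as diabetic (20 in total).
records = [{"age": age, "diabetic": age % 3 == 0} for age in range(30, 90)]
noisy = dp_count(records, lambda r: r["diabetic"], epsilon=1.0, rng=random.Random(7))
print(round(noisy, 2))
```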

When working with third-party AI vendors, Business Associate Agreements (BAAs) must include strict guidelines for data use, protection, and breach notifications. These agreements ensure that vendors handling ePHI adhere to the same high security standards [11].

MLOps and Monitoring Practices

Operational security doesn’t end once an AI system is deployed. MLOps practices must go beyond traditional workflows to address unique challenges like adversarial attacks, data drift, and fault injections. This includes implementing resilience measures, monitoring uncertainty, and ensuring systems degrade gracefully under stress [13]. These steps are critical for AI systems that directly impact patient care [4].
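Monitoring uncertainty and degrading gracefully can be as simple as refusing to auto-report low-confidence outputs. A minimal sketch, assuming a classifier that returns class probabilities: predictions whose entropy exceeds a threshold are routed to a clinician instead of being reported automatically. Entropy is only one of several uncertainty measures, and softmax probabilities from deep models are often poorly calibrated, so this is a starting point rather than a safety guarantee.

```python
import math


def predictive_entropy(probs):
    """Shannon entropy, in nats, of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def triage(probs, labels, max_entropy=0.5):
    """Return the model's label only when it is confident; otherwise defer.

    The 0.5-nat threshold is an illustrative assumption; a real threshold
    would be calibrated on validation data for the specific model.
    """
    h = predictive_entropy(probs)
    if h > max_entropy:
        return {"decision": "defer_to_clinician", "entropy": h}
    best = max(range(len(probs)), key=probs.__getitem__)
    return {"decision": labels[best], "entropy": h}


labels = ["no_finding", "finding"]
print(triage([0.97, 0.03], labels)["decision"])  # no_finding
print(triage([0.55, 0.45], labels)["decision"])  # defer_to_clinician
```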

Continuous monitoring is a must after deployment. Tracking metrics like system accuracy, latency, and data drift helps identify potential issues early [13]. AI telemetry provides round-the-clock monitoring for suspicious activity, while regular audits, vulnerability assessments, and penetration testing expose weaknesses before attackers can exploit them [1]. Real-time remote backups also bolster disaster recovery and business continuity plans [2].
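Data drift monitoring needs a concrete metric. One common choice, shown here as a sketch, is the Population Stability Index (PSI), which compares how a feature is distributed in live traffic against the training baseline. The data and cut points are invented; the often-quoted rule of thumb (below 0.1 stable, 0.1 to 0.25 moderate shift, above 0.25 significant shift) comes from credit scoring and should be validated before it drives clinical alerts.

```python
import bisect
import math


def psi(expected, actual, bins):
    """Population Stability Index between a baseline sample and a live sample.

    `bins` holds sorted cut points; values fall into len(bins) + 1 buckets.
    """
    def proportions(values):
        counts = [0] * (len(bins) + 1)
        for v in values:
            counts[bisect.bisect_left(bins, v)] += 1
        # Floor empty buckets so the log term stays finite.
        return [max(c / len(values), 1e-4) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature scaled to 0-99: training baseline vs. a shifted live feed.
baseline = [i % 100 for i in range(1000)]
live = [min(99, v + 30) for v in baseline]
cuts = [25, 50, 75]

print(psi(baseline, baseline, cuts))     # 0.0
print(psi(baseline, live, cuts) > 0.25)  # True
```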

Finally, updating outdated systems is essential. Ensuring that the latest hardware and software with advanced security features are in place helps organizations stay ahead of threats [10]. As the Health Sector Coordinating Council works toward releasing its 2026 AI cybersecurity guidance, organizations that prioritize secure-by-design principles in their architectures will be better prepared to meet evolving regulatory demands [1].


Managing Third-Party and Supply Chain Risks

Once AI-specific vulnerabilities are identified, managing third-party and supply chain risks becomes a crucial step in protecting medical AI platforms. The healthcare ecosystem is intricate, and with thousands of third-party connections, every vendor is a potential entry point for cyber threats. Here's a staggering statistic: 90% of hacked healthcare records are stolen from systems outside electronic health records, primarily through vendor-controlled infrastructure [4]. This pattern underscores the need for healthcare organizations to rethink their approach to AI security, starting with robust vendor risk assessments and secure supply chain protocols.

Assessing AI Vendor Risks

Vendors working within healthcare systems often have extensive network access. However, many of these business associates operate with less robust security measures and smaller budgets compared to the hospitals they serve [4]. When evaluating AI vendors, organizations need to go beyond basic compliance checklists. For example, secure training environments are vital to prevent data poisoning during the development phase. Data residency is another key consideration, as it determines which regulatory standards apply and where sensitive patient data is stored. Additionally, assessing a vendor’s incident response capabilities is critical - can they quickly contain a threat, or will they become a liability in the event of a breach? Since vendor incidents often require coordinated efforts across multiple parties, understanding these controls is essential for managing broader supply chain risks.
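The vendor questions above (training isolation, data residency, incident response) lend themselves to a simple weighted checklist. The sketch assumes hypothetical fields, weights, and a 24-hour containment threshold, and it also checks for the BAA breach-notification terms discussed earlier; it is a triage aid for reviewers, not a substitute for examining the vendor's evidence.

```python
from dataclasses import dataclass


@dataclass
class VendorAssessment:
    name: str
    isolated_training_env: bool    # training separated from production and untrusted data
    expected_data_residency: bool  # PHI stored only where the contract allows
    containment_hours: float       # vendor-attested time to contain an incident
    baa_breach_notice: bool        # BAA includes breach-notification terms


# Hypothetical weights (they sum to 10); a governance committee would set real ones.
WEIGHTS = {"training": 3, "residency": 2, "incident_response": 3, "baa": 2}


def risk_score(v, max_containment_hours=24):
    """0 = no failed controls, 10 = every control failed."""
    score = 0
    score += WEIGHTS["training"] * (not v.isolated_training_env)
    score += WEIGHTS["residency"] * (not v.expected_data_residency)
    score += WEIGHTS["incident_response"] * (v.containment_hours > max_containment_hours)
    score += WEIGHTS["baa"] * (not v.baa_breach_notice)
    return score


vendors = [
    VendorAssessment("imaging-ai-a", True, True, 4, True),
    VendorAssessment("scribe-ai-b", False, True, 72, True),
]
for v in sorted(vendors, key=risk_score, reverse=True):
    print(v.name, risk_score(v))  # scribe-ai-b 6, then imaging-ai-a 0
```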

Securing AI Supply Chains

AI supply chains bring their own set of challenges, often introducing vulnerabilities that traditional security assessments might overlook. These include risks from open-source libraries, cloud provider misconfigurations, and API services. Organizations also need to evaluate fourth-party risks - the vendors that their vendors rely on. A single weak link in this extended chain can trigger a ripple effect, compromising the entire healthcare ecosystem [4]. The numbers paint a clear picture: 88% of organizations are concerned about privacy violations, and 87% worry about security risks tied to AI deployments. Yet, only 41% strongly agree that their current cybersecurity measures are sufficient to protect GenAI applications [9]. This gap between concern and preparedness highlights the urgency of addressing these risks.
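Fourth-party risk is a graph problem: a hospital's exposure extends to whatever its vendors depend on. A minimal sketch, assuming a hypothetical dependency map: a breadth-first walk assigns each reachable party a tier, and a second helper lists which direct vendors would be affected if a given upstream party were compromised.

```python
from collections import deque

# Hypothetical map of who each party depends on.
DEPENDENCIES = {
    "hospital": ["imaging_ai_vendor", "scribe_ai_vendor"],
    "imaging_ai_vendor": ["cloud_provider_a", "annotation_contractor"],
    "scribe_ai_vendor": ["llm_api_provider", "cloud_provider_a"],
    "llm_api_provider": ["cloud_provider_b"],
}


def supply_chain_tiers(root, deps):
    """Breadth-first walk: tier 1 = direct vendors, tier 2 = fourth parties, and so on."""
    tiers, seen = {}, {root}
    queue = deque([(root, 0)])
    while queue:
        node, depth = queue.popleft()
        for dep in deps.get(node, []):
            if dep not in seen:
                seen.add(dep)
                tiers[dep] = depth + 1
                queue.append((dep, depth + 1))
    return tiers


def exposed_vendors(compromised, root, deps):
    """Direct vendors whose own supply chain includes the compromised party."""
    return sorted(
        v for v in deps.get(root, [])
        if v == compromised or compromised in supply_chain_tiers(v, deps)
    )


print(supply_chain_tiers("hospital", DEPENDENCIES))
print(exposed_vendors("cloud_provider_a", "hospital", DEPENDENCIES))
# ['imaging_ai_vendor', 'scribe_ai_vendor']
```

The shared `cloud_provider_a` illustrates the ripple effect described above: one incident at a fourth party reaches both AI vendors at once.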

How Censinet Improves AI Vendor Risk Management

Censinet RiskOps™ offers a scalable solution for healthcare organizations managing hundreds or even thousands of vendor relationships. The platform shifts from periodic assessments to continuous monitoring of risk indicators [4]. Using Censinet AI™, vendors can complete security questionnaires in seconds. The platform also automates the summarization of vendor evidence, captures critical integration details, identifies fourth-party risks, and consolidates findings into actionable reports. Its AI risk dashboard acts as a centralized hub for managing AI-related policies, risks, and tasks, directing key issues to the appropriate stakeholders, including members of AI governance committees. This "air traffic control" model ensures that the right teams address the right issues at the right time, providing comprehensive oversight across the organization's AI vendor ecosystem.

Conclusion

Protecting medical AI platforms requires a comprehensive approach, because compromised AI systems can contribute to misdiagnoses, incorrect prescriptions, and flawed surgical decisions. While AI has the potential to revolutionize healthcare, it also introduces new vulnerabilities to cyberattacks. These threats aren't just digital - they can cause real-world harm to patients, making robust cybersecurity measures absolutely critical [2][3].

To tackle these risks, three core strategies are essential:

- Strong governance: Clearly defined roles, responsibilities, and policies aligned with HIPAA and FDA requirements.

- Secure-by-design architectures: Cybersecurity measures should be embedded from the start to prevent issues like data poisoning and model manipulation.

- Supply chain risk management: Addressing vulnerabilities from third-party vendors is key to maintaining overall security.

Despite the growing awareness of these challenges, many organizations still lag in preparedness. This gap underscores the urgency of implementing tailored cybersecurity measures that reflect the complexities of the healthcare environment.

Tools like Censinet RiskOps™ provide solutions by offering continuous monitoring, automated vendor assessments, and centralized oversight. These features help healthcare organizations manage risks effectively while maintaining patient safety. By combining these capabilities with human oversight, healthcare leaders can quickly address vulnerabilities across their AI vendor networks.

As cyber threats continue to evolve, the strategies for securing medical AI must adapt as well. Healthcare leaders must balance the adoption of AI with a steadfast commitment to cybersecurity. With so much at stake, only a well-rounded, continuously monitored approach can ensure that the promise of AI in healthcare is realized safely and securely.

FAQs

What are the biggest cybersecurity risks for medical AI platforms?

Medical AI platforms face a range of cybersecurity challenges that could jeopardize both sensitive healthcare data and patient safety. Among the most concerning are data breaches, where unauthorized parties gain access to confidential information, and algorithm manipulation, which can alter AI results, potentially leading to misdiagnoses or improper treatments.

Other risks include adversarial attacks, in which attackers feed subtly altered inputs to an AI system to mislead it, and ransomware or phishing campaigns that exploit technical and human weaknesses. There's also the threat of data poisoning, where training data is deliberately tampered with to corrupt AI models, and deepfake misuse, which can erode trust in these technologies. Strong cybersecurity strategies are crucial to protect both the integrity and safety of medical AI platforms.

What steps can healthcare organizations take to manage third-party and supply chain cybersecurity risks?

Healthcare organizations can tackle third-party and supply chain cybersecurity risks by carefully evaluating vendors' security measures before bringing them on board. Once vendors are onboarded, rigorous access controls should limit them to the systems and data they actually need. Regular audits and thorough risk assessments help verify compliance with cybersecurity protocols and uncover weak points.

Keeping a close watch on vendor activities allows organizations to spot and respond to threats as they arise. Partnering with cybersecurity specialists can also help craft strategies to protect the supply chain and secure patient data. Taking these steps helps create a stronger, safer digital health environment.

Why is it important to design medical AI systems with built-in security measures?

Building security into the design of medical AI systems is a crucial step in minimizing vulnerabilities and protecting sensitive healthcare data from the outset. A secure-by-design approach not only helps these systems align with regulations like HIPAA but also plays a key role in safeguarding patient safety and preserving the trust between healthcare providers and their patients.

Moreover, this strategy enhances the system's capacity to identify and address cyber threats, ensuring that medical AI platforms remain dependable and resilient in critical healthcare settings.


Key Points:

Why is cybersecurity critical in digital health?

- Cybersecurity is vital to protect sensitive patient data and ensure the safe operation of healthcare systems.

- Patient trust depends on the security of their personal and medical information.

- Cyberattacks, such as ransomware or data breaches, can disrupt healthcare services and compromise patient safety.

- Robust cybersecurity measures are essential to maintain compliance with regulations like HIPAA and GDPR.

What are the main cybersecurity risks in medical AI?

- Data breaches: Unauthorized access to sensitive patient information.

- Ransomware attacks: Cybercriminals encrypt healthcare data and demand payment for its release.

- Algorithm manipulation: Attackers can tamper with AI models, leading to incorrect diagnoses or treatment plans.

- Device vulnerabilities: Connected and AI-powered medical devices, such as insulin pumps, can be hacked, endangering patient safety.

How does medical AI increase cybersecurity challenges?

- Medical AI relies on large datasets and interconnected systems, making it a prime target for cyberattacks.

- The integration of AI with IoT devices amplifies risks, as many connected devices have known vulnerabilities.

- Algorithmic opacity in AI systems can make it difficult to detect and address security flaws.

- The complexity of securing AI systems increases as healthcare organizations adopt more advanced technologies.

What strategies can healthcare organizations use to secure medical AI?

- Implement encryption: Protects data in motion and at rest.

- Adopt zero-trust architectures: Verifies every connection to prevent unauthorized access.

- Conduct regular audits: Identifies and mitigates vulnerabilities in AI systems.

- Use AI-driven threat detection: Monitors systems in real-time to identify and respond to cyber threats.

- Segment networks: Isolates IoT devices to prevent lateral attacks.

What role does regulation play in securing medical AI?

- Regulations like HIPAA and GDPR establish standards for protecting patient data and ensuring accountability.

- Emerging rules, such as the EU AI Act (AI-specific) and the EU NIS2 Directive (a broader cybersecurity law that covers sectors including healthcare), address risks posed by AI systems and connected infrastructure.

- Complying with these regulations pushes healthcare organizations to adopt cybersecurity best practices.

- Regulatory frameworks also promote transparency and trust in AI-driven healthcare systems.

What is the future of cybersecurity in digital health?

- The future includes AI-driven security solutions that proactively detect and mitigate threats.

- Stronger regulations will ensure that healthcare organizations prioritize cybersecurity.

- Proactive risk management will become a standard practice, focusing on preventing cyberattacks before they occur.

- Collaboration between healthcare providers, regulators, and technology developers will drive innovation in cybersecurity.