Risk Revolution: How AI is Rewriting the Rules of Enterprise Risk Management

Post Summary

AI is transforming enterprise risk management by shifting organizations from reactive risk responses to predictive, real‑time risk mitigation.

Key AI risks include data poisoning, model drift, algorithmic bias, PHI exposure, device manipulation, and third‑party vulnerabilities.

Guiding frameworks include the NIST AI RMF, the HHS AI Strategy, and HSCC guidelines.

The core AI capabilities are predictive analytics, cybersecurity threat monitoring, and automated vendor risk assessments.

AI governance is needed to ensure transparency, bias testing, safety oversight, and compliance with evolving regulations.

Censinet RiskOps™ automates assessments, identifies vulnerabilities, manages AI risks, and provides real‑time risk dashboards.

AI is transforming how healthcare organizations manage risks by moving from reactive to predictive strategies. This shift helps them address challenges like patient safety, cybersecurity, and regulatory compliance more effectively. The figure below summarizes the main benefits and challenges.

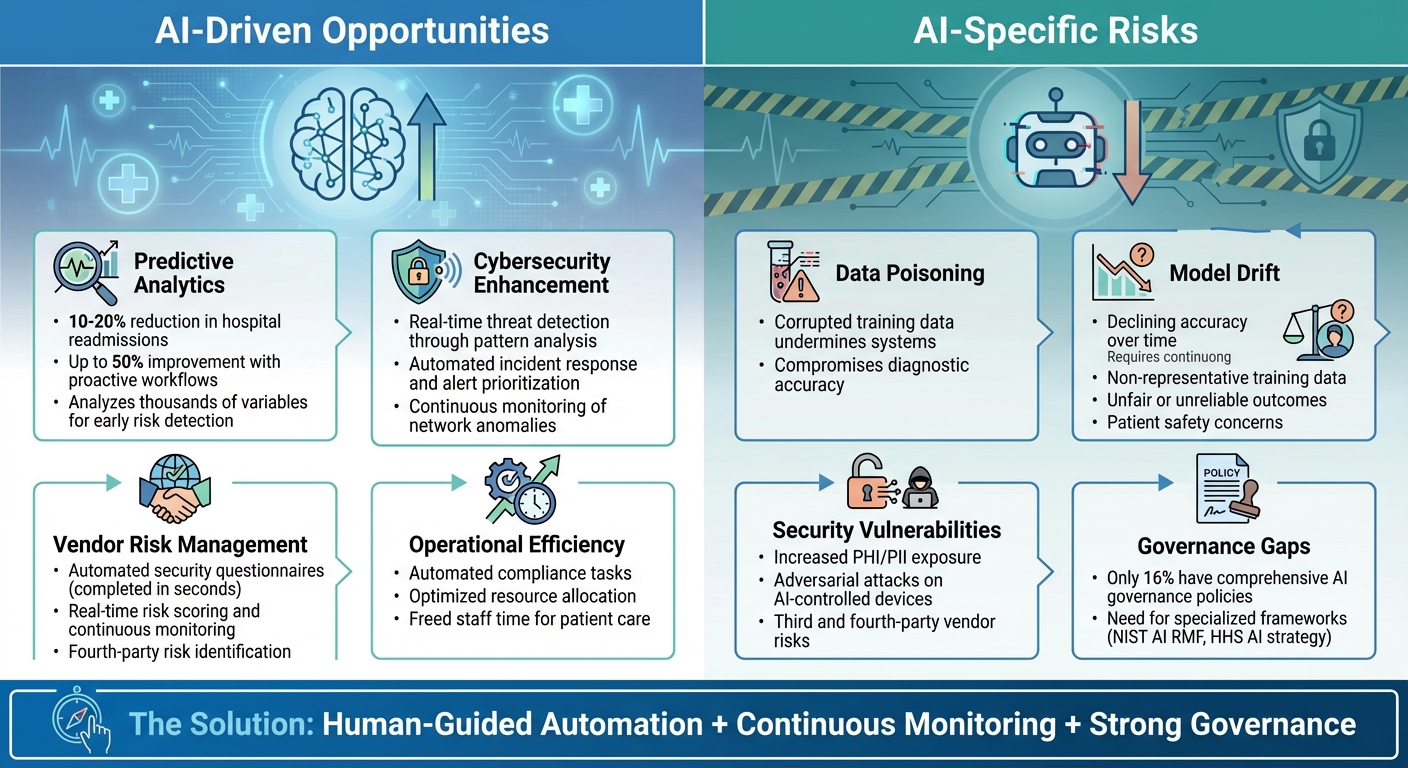

AI Benefits vs Challenges in Healthcare Risk Management

The Changing Risk Environment in Healthcare

AI is reshaping risk management in healthcare, offering advancements in care and operational efficiency while introducing vulnerabilities that traditional enterprise risk management (ERM) frameworks weren’t built to handle. Healthcare organizations now face a double-edged challenge: leveraging AI’s potential while addressing the unique risks it brings. This evolution highlights the need for specialized strategies tailored to AI-driven environments.

AI-Driven Opportunities and Challenges

AI-powered tools are transforming healthcare. Diagnostic algorithms can identify patterns in medical imaging, while predictive models help anticipate patient deterioration, allowing for timely interventions. Administrative tasks are being automated, freeing up healthcare staff to focus more on patient care.

But these advancements don’t come without hurdles. Problems like data poisoning, where training data is intentionally corrupted, can undermine AI systems. Model drift occurs when an AI system’s accuracy declines due to evolving conditions. Then there’s algorithmic bias, where non-representative training data results in unfair or unreliable outcomes. These challenges can jeopardize diagnostic accuracy and, ultimately, patient safety [5] [7] [8].
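Model drift often starts as data drift: the inputs a model sees in production stop resembling its training data. One widely used check is the Population Stability Index (PSI), which compares a feature's binned distribution at training time against its live distribution. The sketch below is a minimal, dependency-free version; the bin count and the common rule of thumb that a PSI above roughly 0.25 signals a significant shift are conventions, not regulatory thresholds, and input drift is only a warning sign, not proof that accuracy has fallen.

```python
import math
from typing import Sequence


def population_stability_index(expected: Sequence[float], actual: Sequence[float],
                               bins: int = 10) -> float:
    """Compare a feature's training-time distribution (expected) with its
    production distribution (actual). Larger values mean more shift."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # avoid zero width when every value is identical

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)  # clamp the maximum into the last bin
            counts[idx] += 1
        # a small floor keeps empty bins from producing log(0)
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training_ages = [float(x) for x in range(100)]
production_ages = [x + 50.0 for x in training_ages]  # hypothetical shifted population
print(population_stability_index(training_ages, production_ages) > 0.25)  # True
```

Identical distributions score exactly zero, so the index can be tracked per feature over time and alerted on when it crosses whatever threshold the model owner has validated.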

As AI opens new doors, it also introduces risks that healthcare systems must address with care.

AI-Specific Risks in Healthcare ERM

AI doesn’t just expand opportunities - it also broadens the spectrum of risks. For example, AI increases the exposure of protected health information (PHI) and personally identifiable information (PII), complicating compliance with regulations like HIPAA [6]. AI-controlled medical devices could be targets for adversarial attacks, where malicious actors manipulate inputs to cause system failures [2].

The reliance on AI-enabled vendors and cloud-based services adds another layer of complexity. Vulnerabilities in third-party and even fourth-party systems - those further down the supply chain - can ripple back to healthcare organizations, creating new security and operational risks [2].
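To see how a fourth-party problem ripples back, it helps to treat the vendor supply chain as a dependency graph. This minimal sketch, assuming a hypothetical `dependencies` map that an organization would populate from its own vendor inventory, uses breadth-first search to list every vendor reachable from the organization along with its tier (1 for third parties, 2 for fourth parties, and so on).

```python
from collections import deque


def downstream_vendors(dependencies: dict[str, list[str]], org: str) -> dict[str, int]:
    """Return every vendor reachable from `org`, mapped to its tier
    (1 = third party, 2 = fourth party, ...), via breadth-first search."""
    tiers: dict[str, int] = {}
    seen = {org}
    queue = deque([(org, 0)])
    while queue:
        node, depth = queue.popleft()
        for vendor in dependencies.get(node, []):
            if vendor not in seen:  # BFS reaches each vendor first at its shortest tier
                seen.add(vendor)
                tiers[vendor] = depth + 1
                queue.append((vendor, depth + 1))
    return tiers


# Hypothetical supply chain for illustration only.
supply_chain = {
    "hospital": ["ehr_vendor", "imaging_ai"],
    "ehr_vendor": ["cloud_host"],
    "imaging_ai": ["cloud_host", "labeling_service"],
}
print(downstream_vendors(supply_chain, "hospital"))
```

Here `cloud_host` has no contract with the hospital, yet a breach there could reach it through two different vendors, an exposure that a review limited to direct vendors would not surface.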

Frameworks for Managing AI Risks

To navigate these challenges, healthcare organizations are turning to frameworks like the NIST AI RMF and the HHS AI strategy. These frameworks provide guidelines on governance, mitigating bias, ensuring transparency, and strengthening cybersecurity [9] [10] [11] [12]. They emphasize the need for risk management approaches that go beyond standard IT security measures, striking a balance between innovation and patient safety.

AI Capabilities That Transform Enterprise Risk Management

AI is changing the game for healthcare organizations by enabling them to spot, monitor, and tackle risks head-on. Three major AI applications are reshaping how Enterprise Risk Management (ERM) operates: predictive analytics, cybersecurity threat monitoring, and automated vendor risk management. Let’s dive into how AI is making a difference in each of these areas.

Predictive Analytics for Risk Identification

AI-powered predictive models sift through thousands of variables, such as demographics, lab results, medications, comorbidities, and social factors, to forecast patient safety issues and operational bottlenecks. By 2023, most U.S. hospitals had integrated AI into their EHR systems to forecast risks and enable timely interventions [15].

This isn’t just theoretical: hospitals using AI for predictive analytics have seen readmission rates drop by 10% to 20%, and in some cases proactive workflows have pushed the reduction as high as 50% [14]. By continuously analyzing EHR and wearable data, AI systems can flag early warning signs like declining vital signs or missed medications. These alerts can then prompt quick actions, such as scheduling follow-up visits or reviewing prescriptions.
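The alerting step described above can be illustrated with a simple rule-based check. This is a hypothetical sketch, not a trained predictive model and not clinical guidance: it estimates the trend in recent systolic blood pressure readings with a least-squares slope and counts missed medication doses, and the thresholds (`bp_drop_per_reading`, `max_missed_doses`) are placeholders that clinicians would need to set and validate.

```python
from dataclasses import dataclass


@dataclass
class PatientSnapshot:
    patient_id: str
    systolic_bp: list[float]  # recent readings, oldest first (hypothetical field)
    missed_doses: int         # missed medication doses in the review window


def slope(values: list[float]) -> float:
    """Least-squares slope of evenly spaced readings (units per reading)."""
    n = len(values)
    if n < 2:
        return 0.0
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    num = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(values))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


def early_warning_flags(p: PatientSnapshot, bp_drop_per_reading: float = -5.0,
                        max_missed_doses: int = 2) -> list[str]:
    """Return follow-up prompts; placeholder thresholds, not clinical guidance."""
    flags = []
    if slope(p.systolic_bp) <= bp_drop_per_reading:
        flags.append("declining blood pressure trend: schedule follow-up")
    if p.missed_doses >= max_missed_doses:
        flags.append("missed medications: review prescriptions")
    return flags


print(early_warning_flags(PatientSnapshot("p1", [130, 122, 115, 108], missed_doses=3)))
```

A real predictive model would weigh many more variables, but the output has the same shape: a short list of actionable prompts tied to specific signals.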

One U.S. hospital network went a step further, using machine learning to predict admissions, ICU transfers, and discharge timings. This helped optimize resource allocation and revealed risk factors that even seasoned professionals might miss [13].

AI-Driven Cybersecurity and Threat Monitoring

AI isn’t just about predicting risks - it’s also a powerful guardian of digital infrastructure. By processing massive amounts of data from various sources, AI can detect patterns and anomalies that might signal a cyberthreat [16]. Unlike traditional systems that rely on set rules, AI learns what "normal" looks like in network traffic, device usage, and user behavior. When something seems off - like unauthorized access attempts or unusual data transfers - it raises the alarm.
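Learning what "normal" looks like can be as simple as a per-user statistical baseline. The sketch below assumes a hypothetical feed of daily data-transfer volumes per account; it records each user's mean and standard deviation and raises an alert when today's volume sits more than `z_threshold` standard deviations above that user's norm. Production tools use far richer features and models; this only shows the baseline-then-deviation idea.

```python
import statistics


def build_baseline(history: dict[str, list[float]]) -> dict[str, tuple[float, float]]:
    """Learn each user's 'normal': mean and population std dev of daily MB transferred."""
    return {user: (statistics.mean(v), statistics.pstdev(v))
            for user, v in history.items() if len(v) >= 2}


def flag_anomalies(baseline: dict[str, tuple[float, float]], today: dict[str, float],
                   z_threshold: float = 3.0) -> list[str]:
    """One-sided check: only unusually LARGE transfers raise an alert."""
    alerts = []
    for user, volume in today.items():
        if user not in baseline:
            alerts.append(f"{user}: no baseline yet, review manually")
            continue
        mean, std = baseline[user]
        if std > 0:
            z = (volume - mean) / std
        else:
            z = 0.0 if volume == mean else float("inf")
        if z > z_threshold:
            alerts.append(f"{user}: {volume:.0f} MB is {z:.1f} std devs above normal")
    return alerts


history = {"nurse_a": [100, 110, 90, 105, 95], "billing_b": [50, 55, 45, 52, 48]}
print(flag_anomalies(build_baseline(history), {"nurse_a": 104, "billing_b": 400}))
```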

AI doesn’t stop at detection. It can also assist with incident response by correlating events and prioritizing alerts based on the actual level of risk. This ensures that cybersecurity teams can focus their efforts where they’re needed most.
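Risk-based prioritization can be sketched as scoring each alert by likelihood multiplied by the criticality of the affected asset, dropping low-scoring noise, and sorting the rest. The 1 to 5 criticality scale and the `min_risk` cutoff are hypothetical; the point is that a moderately confident alert on a clinical system can outrank a high-confidence alert on a guest network.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    name: str
    likelihood: float       # 0..1, e.g. detector confidence (assumed input)
    asset_criticality: int  # 1 (low) .. 5 (life-critical clinical system), hypothetical scale

    @property
    def risk(self) -> float:
        return self.likelihood * self.asset_criticality


def triage(alerts: list[Alert], min_risk: float = 0.5) -> list[Alert]:
    """Drop low-risk noise, then order the rest from highest to lowest risk."""
    kept = [a for a in alerts if a.risk >= min_risk]
    return sorted(kept, key=lambda a: a.risk, reverse=True)


queue = triage([
    Alert("failed logins on guest Wi-Fi", 0.9, 1),
    Alert("unusual export from EHR database", 0.6, 5),
    Alert("port scan on infusion pump VLAN", 0.4, 5),
    Alert("printer firmware warning", 0.2, 1),
])
for alert in queue:
    print(f"{alert.risk:.1f}  {alert.name}")
```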

Automated Third-Party and Vendor Risk Management

Healthcare organizations rely on a web of vendors, each with its own security protocols and vulnerabilities. AI simplifies the daunting task of vendor risk assessment by automating the review of vendor documentation against regulatory standards. Platforms like Censinet RiskOps™ make this process lightning-fast, allowing vendors to complete security questionnaires in seconds. These tools also summarize evidence, capture integration details, and even identify fourth-party risks.

But vendor risk management isn’t a one-and-done job. AI enables continuous monitoring, which is crucial for spotting new vulnerabilities and active threats as they emerge [17]. Dynamic risk scores, powered by AI, provide real-time insights by factoring in elements like data sensitivity, system importance, and access levels [17]. This kind of ongoing oversight strengthens the overall ERM approach. By combining human oversight with automated processes, healthcare organizations can scale their vendor risk management efforts without losing control over critical decisions.
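A dynamic risk score of the kind described above can be sketched as an inherent score built from the factors the text names (data sensitivity, system importance, and access level) plus a penalty that rises as continuous monitoring surfaces open critical findings. The weights, the 1 to 5 scales, and the penalty size are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical weights (they sum to 1.0); a real program would calibrate its own.
WEIGHTS = {"data_sensitivity": 0.4, "system_importance": 0.35, "access_level": 0.25}


@dataclass
class VendorProfile:
    name: str
    data_sensitivity: int   # 1 = no PHI .. 5 = bulk PHI
    system_importance: int  # 1 = back office .. 5 = supports direct patient care
    access_level: int       # 1 = none .. 5 = privileged network access
    open_critical_findings: int = 0  # updated by continuous monitoring


def inherent_risk(v: VendorProfile) -> float:
    """Weighted average of the 1-5 factors, rescaled to 0-100."""
    raw = sum(w * getattr(v, factor) for factor, w in WEIGHTS.items())  # 1..5
    return (raw - 1) / 4 * 100


def dynamic_risk(v: VendorProfile, penalty_per_finding: float = 10.0) -> float:
    """Inherent risk plus a penalty per open critical finding, capped at 100."""
    return min(100.0, inherent_risk(v) + penalty_per_finding * v.open_critical_findings)


vendor = VendorProfile("imaging_ai", data_sensitivity=5, system_importance=5, access_level=3)
print(round(dynamic_risk(vendor), 1))  # 87.5 with no open findings
vendor.open_critical_findings = 2      # monitoring detects two new critical issues
print(dynamic_risk(vendor))            # 100.0 (capped)
```

Because the score is recomputed whenever monitoring data changes, a vendor's rating moves between reviews instead of staying frozen until the next annual assessment.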

AI in Healthcare Risk Management: Practical Applications

Building on earlier discussions about AI's role in identifying and managing risks, this section dives into how AI is applied in cybersecurity, vendor management, and in blending automation with human oversight. These applications highlight how AI is actively improving outcomes in healthcare environments.

AI for Cybersecurity Risk Monitoring

AI-powered platforms are reshaping how healthcare organizations handle cybersecurity threats. By analyzing massive amounts of data from networks, devices, and user behavior, these systems establish patterns of normal activity and flag anything unusual that might indicate a breach [4]. On top of that, AI can rank alerts by their severity, helping security teams focus on the most pressing threats and minimizing the time systems remain exposed. This enhanced monitoring naturally extends to managing the complexities of vendor-related risks.

Managing Vendor Ecosystems with AI

Healthcare organizations often work with a wide range of vendors, each potentially introducing security vulnerabilities. AI simplifies this challenge by automating risk assessments and continuously monitoring vendors throughout their lifecycle [18]. Tools like Censinet RiskOps™ showcase how AI can revolutionize vendor risk management by identifying risks tied to fourth-party relationships and generating detailed risk summaries based on comprehensive assessment data. These platforms provide real-time risk scores that adjust as new vulnerabilities arise or as vendors change their security measures, replacing outdated yearly reviews with a constant, up-to-date view of risks.

Human-Guided Automation in AI-Driven Risk Management

While automation is a powerful tool, combining it with human oversight ensures a balanced approach to risk management. AI can streamline processes like validating evidence, drafting policies, and mitigating risks, but human input remains critical in reviewing and approving key findings. Censinet AI exemplifies this approach by routing important decisions and AI-generated insights to the appropriate stakeholders. This "air traffic control" model ensures that urgent issues are addressed promptly while maintaining the oversight needed to protect patient care and organizational security. By blending speed with careful review, this approach supports both efficiency and safety.
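The human-in-the-loop pattern can be sketched as a routing function: every finding gets an owner, only low-severity findings that were not AI-generated may close automatically, and anything the AI produced waits for a person. The categories, reviewer titles, and severity cutoffs below are hypothetical and do not describe how Censinet AI is implemented.

```python
from dataclasses import dataclass

# Hypothetical routing table: which stakeholder reviews which kind of finding.
REVIEWERS = {
    "clinical": "clinical safety officer",
    "privacy": "privacy officer",
    "security": "CISO",
}


@dataclass
class Finding:
    summary: str
    category: str      # "clinical", "privacy", or "security"
    severity: int      # 1 (informational) .. 5 (critical)
    ai_generated: bool


def route(f: Finding, auto_close_max_severity: int = 2) -> tuple[str, str]:
    """Return (action, owner). Unknown categories fall back to the governance committee."""
    owner = REVIEWERS.get(f.category, "AI governance committee")
    if f.severity <= auto_close_max_severity and not f.ai_generated:
        return ("auto-close with audit log entry", owner)
    if f.severity >= 4:
        return ("urgent human review", owner)
    return ("queued for human approval", owner)


print(route(Finding("Vendor lacks MFA on admin portal", "security", 5, ai_generated=True)))
```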


Building an AI-Centric ERM Program

Incorporating AI into enterprise risk management (ERM) requires careful planning and strong governance. Yet, many healthcare organizations lag behind - only 16% currently have a comprehensive governance policy addressing AI usage and data access across their systems [20]. Successfully creating an AI-focused ERM program involves setting clear oversight mechanisms, aligning with regulatory standards, and building systems that can adapt to advancements in AI. This groundwork is essential for developing the governance frameworks needed to effectively integrate AI.

Establishing AI Governance in Healthcare ERM

Effective governance is the backbone of any AI-driven risk management program. Boards must take the lead by setting ethical AI usage standards, defining risk tolerances, and fostering cross-functional collaboration through dedicated governance committees [20]. These committees should bring together a diverse group of professionals - data protection officers, AI specialists, ethicists, clinicians, legal advisors, and regulatory experts - to manage risks and promote a culture of safety [20].

In addition to organizational structures, practical safeguards are critical. Clear policies must define how AI tools are used, ensuring access is limited to trained personnel and that sensitive information is well-protected [1]. When working with third-party AI vendors, organizations should demand transparency, detailed audit trails, rigorous performance testing, and thorough documentation of algorithm updates and maintenance. Ongoing staff training is equally important - clinicians and administrative teams need to understand how to safely interact with AI, recognize its limitations, and report any anomalies they encounter [20].

Integrating Regulatory Frameworks into ERM

AI regulation in healthcare builds on existing digital safety standards, with frameworks like the NIST AI RMF and FDA SaMD pathways providing guidance [21].

To stay compliant, organizations should designate a compliance team or leader to monitor changes in laws and regulations related to AI in healthcare. Embedding these compliance checks into the AI system's development lifecycle ensures that regulatory requirements are seamlessly woven into risk management processes. This proactive approach helps organizations stay prepared for both current and future standards, reinforcing leadership’s commitment to ethical and transparent AI practices [20].
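One way to embed compliance checks into the development lifecycle is a release gate that refuses to deploy a model until the required evidence exists. The checklist items below are hypothetical, loosely drawn from the vendor and governance practices described in this section (bias testing, performance validation, audit trails, documented updates); they are not an official NIST AI RMF or FDA list.

```python
# Hypothetical pre-deployment checklist for illustration; not an official regulatory list.
REQUIRED_ARTIFACTS = (
    "bias_test_report",
    "performance_validation",
    "audit_trail_enabled",
    "update_documentation",
    "privacy_review",
)


def compliance_gate(evidence: dict[str, bool]) -> tuple[bool, list[str]]:
    """Pass only if every required artifact is present and approved.
    Returns (passed, missing_items)."""
    missing = [item for item in REQUIRED_ARTIFACTS if not evidence.get(item, False)]
    return (not missing, missing)


passed, missing = compliance_gate({
    "bias_test_report": True,
    "performance_validation": True,
    "audit_trail_enabled": True,
    "update_documentation": False,
})
print(passed, missing)  # False ['update_documentation', 'privacy_review']
```

In practice a gate like this would run as a step in a CI/CD pipeline, with the `missing` list routed to the compliance lead so that regulatory requirements block a release rather than being checked after it.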

Continuous Monitoring and Improvement

Regulatory compliance is just the beginning - AI systems require ongoing monitoring to identify shifts in performance, bias, or vulnerabilities [19]. Post-deployment audits should focus on key performance indicators (KPIs) that track model degradation, while regular ethical reviews assess the broader impact of AI in real-world scenarios [22].
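A basic model-degradation KPI is accuracy over a rolling window of recent predictions whose true outcomes have been confirmed. This sketch assumes such confirmed labels are available, which in clinical settings can lag by days or weeks; the window size and alert threshold are hypothetical, and metrics such as sensitivity or calibration may matter more than raw accuracy for imbalanced outcomes.

```python
from collections import deque
from typing import Optional


class RollingAccuracyMonitor:
    """Track accuracy over the most recent `window` labeled predictions and
    flag degradation when it falls below `alert_below`."""

    def __init__(self, window: int = 200, alert_below: float = 0.85):
        self.outcomes: deque = deque(maxlen=window)  # True = prediction matched outcome
        self.alert_below = alert_below

    def record(self, predicted: int, actual: int) -> None:
        self.outcomes.append(predicted == actual)

    def accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def degraded(self) -> bool:
        acc = self.accuracy()
        # only alert once the window is full, so a few early cases cannot trigger it
        return (acc is not None and len(self.outcomes) == self.outcomes.maxlen
                and acc < self.alert_below)


monitor = RollingAccuracyMonitor(window=10, alert_below=0.8)
for _ in range(10):
    monitor.record(predicted=1, actual=1)
for _ in range(4):
    monitor.record(predicted=1, actual=0)  # recent misses push older hits out of the window
print(monitor.accuracy(), monitor.degraded())
```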

Incident reporting systems are vital for documenting and addressing AI-related issues. Specialized software can log and categorize problems like algorithm errors, unexpected outcomes, or patient complaints, helping organizations identify patterns and underlying weaknesses [20]. By analyzing this data, teams can implement technical updates and refine processes. Integrating AI-related risks into centralized dashboards allows frontline staff to report anomalies easily, while providing leadership with actionable insights for decision-making and regulatory adherence [20].
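Spotting patterns in an incident log can start with counting how often the same kind of problem recurs for the same model. This sketch assumes a hypothetical `Incident` record with a model name and one of the categories mentioned above, and returns the model and category pairs that cross a recurrence threshold.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Incident:
    model: str        # hypothetical model identifier
    category: str     # e.g. "algorithm error", "unexpected outcome", "patient complaint"
    description: str


def recurring_patterns(incidents: list[Incident],
                       min_count: int = 3) -> list[tuple[tuple[str, str], int]]:
    """Count incidents per (model, category); return pairs seen at least
    `min_count` times, most frequent first."""
    counts = Counter((i.model, i.category) for i in incidents)
    return [(key, n) for key, n in counts.most_common() if n >= min_count]


log = (
    [Incident("sepsis_model", "unexpected outcome", "alert fired after discharge")] * 3
    + [Incident("sepsis_model", "patient complaint", "alert fatigue reported")]
    + [Incident("triage_nlp", "algorithm error", "note misclassified")] * 2
)
print(recurring_patterns(log))  # [(('sepsis_model', 'unexpected outcome'), 3)]
```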

Risk dashboards can act as a hub for managing AI-related policies, risks, and tasks. Additionally, manual red-teaming exercises - conducted by specialized professionals - can evaluate model fairness and simulate adversarial attacks to uncover vulnerabilities and biases [22]. Striking the right balance between continuous algorithm updates and human oversight is crucial for mitigating risks, such as errors in diagnoses caused by outdated models, and ensuring AI systems remain effective over time [19].
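Fairness evaluation during red-teaming typically compares how a model performs across patient groups. One common metric is the equal opportunity gap: the difference in true positive rates between groups, meaning how often the model catches real cases in each group. The sketch below computes it from plain lists; the group labels are hypothetical, groups with no actual positives are left out, and a single metric is not a complete fairness review.

```python
from collections import defaultdict
from typing import Sequence


def true_positive_rates(y_true: Sequence[int], y_pred: Sequence[int],
                        groups: Sequence[str]) -> dict[str, float]:
    """Share of actual positives the model caught, computed separately per group."""
    tp: dict[str, int] = defaultdict(int)
    pos: dict[str, int] = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:
            pos[g] += 1
            tp[g] += int(p == 1)
    return {g: tp[g] / pos[g] for g in pos}


def equal_opportunity_gap(y_true: Sequence[int], y_pred: Sequence[int],
                          groups: Sequence[str]) -> float:
    """Largest difference in true positive rate between any two groups."""
    rates = true_positive_rates(y_true, y_pred, groups)
    return max(rates.values()) - min(rates.values())


y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 1, 1]
groups = ["A"] * 5 + ["B"] * 5
print(true_positive_rates(y_true, y_pred, groups))    # {'A': 0.75, 'B': 0.5}
print(equal_opportunity_gap(y_true, y_pred, groups))  # 0.25
```

A gap like this would prompt investigation of the training data and thresholds for group B rather than serve as an automatic pass or fail.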

Conclusion: The Future of Risk Management in Healthcare

AI is reshaping healthcare risk management, shifting the focus from reacting to problems to actively preventing them. This shift not only improves patient safety but also strengthens data governance and introduces new accountability measures to handle both long-standing and emerging challenges [2].

Deputy Secretary Jim O'Neill stated, "AI has the potential to revolutionize health care and human services, and HHS is leading that paradigm shift. By guiding innovation toward patient-focused outcomes, this Administration has the potential to deliver historic wins for the public - wins that lead to longer, healthier lives."

This forward-looking viewpoint highlights the importance of creating comprehensive risk management frameworks. Achieving success in this evolving field requires a well-structured approach that integrates strong governance, ongoing monitoring, and collaboration among diverse teams. Experts like data protection officers, AI specialists, clinicians, and regulatory professionals must work together to address challenges like algorithmic bias, system failures, and data privacy concerns [19][4].

As we look ahead, blending human oversight with automation will be crucial for scaling risk management effectively. Tools like Censinet RiskOps™ use AI to simplify vendor assessments by automating security questionnaires while maintaining the essential involvement of human experts. This balanced approach enables risk teams to tackle more threats efficiently, without compromising safety or accuracy.

FAQs

How is AI transforming predictive risk management in healthcare?

AI is transforming the way predictive risk management is handled in healthcare. By processing enormous volumes of patient data quickly and accurately, it pinpoints potential risks much earlier. This early detection allows for timely interventions, helping to minimize preventable complications and enhancing overall patient care.

On top of that, AI plays a critical role in cybersecurity by spotting vulnerabilities and unusual activity in real time. This added layer of protection helps secure sensitive healthcare data against potential breaches. Together, these advancements are making risk management in healthcare more proactive and efficient, especially in such a complex and high-pressure field.

What challenges do healthcare organizations face when using AI for risk management?

Healthcare organizations encounter numerous obstacles when trying to incorporate AI into their risk management processes. A key challenge is dealing with fragmented and siloed data, which makes it tough to piece together a comprehensive view of potential risks. On top of that, navigating complex regulatory and legal frameworks can be daunting, especially as compliance standards continue to evolve.

Organizations also need to address other pressing concerns like maintaining patient safety, supporting workforce safety and retention, and guarding against cybersecurity threats. These threats include risks like data breaches or the misuse of AI by cybercriminals. To meet these challenges, healthcare providers must invest in thoughtful planning, resilient systems, and a forward-thinking strategy for managing risks.

How does the NIST AI Risk Management Framework (AI RMF) help address AI-related risks in healthcare?

The NIST AI Risk Management Framework (AI RMF) provides healthcare organizations with practical, adaptable guidelines to address AI-related risks. It focuses on helping these organizations identify, assess, and manage challenges such as ensuring transparency, fairness, and compliance - key priorities in the sensitive healthcare industry.

By emphasizing trust and accountability, the framework guides organizations in deploying safer and more dependable AI systems while upholding ethical principles and meeting regulatory requirements. Its flexibility makes it an essential resource for managing the intricate demands of AI in healthcare settings.



Key Points:

How is AI transforming enterprise risk management in healthcare?

- Predictive analytics identify patient‑safety risks before they escalate

- AI‑driven threat detection monitors cyber activity in real time

- Automated vendor assessments reduce manual workload and speed evaluations

- Continuous monitoring replaces outdated annual reviews

- Centralized insights strengthen decision‑making across departments

What risks does AI introduce into healthcare ERM?

- Data poisoning that corrupts training datasets

- Model drift reducing accuracy over time

- Algorithmic bias leading to unsafe or inequitable outcomes

- Adversarial attacks targeting AI models or connected devices

- Expanded PHI exposure through cloud‑based AI vendors

- Fourth‑party dependencies that complicate oversight

How does AI enhance predictive risk identification?

- Analyzes thousands of variables across EHRs, labs, and wearables

- Predicts deterioration and operational bottlenecks in advance

- Reduces readmissions by 10–20% or more through early intervention

- Optimizes staffing and workflows using real‑time signals

- Reveals risk patterns that humans may miss

How does AI improve cybersecurity threat monitoring?

- Learns normal behavior and flags anomalies instantly

- Automates alert triage, reducing noise

- Correlates events across systems for faster detection

- Supports incident response by mapping attack paths

- Prioritizes vulnerabilities based on clinical impact

Why must AI governance be part of ERM?

- Prevents unsafe clinical decisions from opaque models

- Mitigates bias through pre‑deployment testing and validation

- Ensures transparency for clinicians and patients

- Aligns AI tools with HIPAA, FDA, and emerging AI regulations

- Coordinates risk decisions via AI oversight committees

How does Censinet RiskOps™ modernize AI‑enabled ERM?

- Automates third‑party AI assessments and evidence review

- Tracks fourth‑party dependencies and data flows

- Maintains AI risk registers tied to clinical and cybersecurity risk

- Routes findings to AI governance committees for human oversight

- Provides real‑time dashboards covering PHI, vendors, devices, and AI models