Safety by Design: Building AI Systems That Protect Rather Than Endanger

Post Summary

Healthcare AI must prioritize safety from the start. Flawed algorithms can lead to misdiagnoses, biased treatments, or privacy breaches, putting lives at risk. To avoid these outcomes, AI systems should be designed with safeguards, accountability, and compliance baked in from day one.

Key Takeaways:

- Early Threat Modeling: Identify risks like data manipulation or model failures before deployment.

- Regulatory Alignment: Follow frameworks like HIPAA and FDA guidelines to ensure compliance.

- Human Oversight: Keep clinicians involved to maintain accountability and prevent errors.

- Continuous Monitoring: Track AI performance in real time to detect and resolve issues quickly.

- Risk Mitigation Strategies: Use secure systems, diverse datasets, and clear governance to reduce vulnerabilities.

By focusing on safety-first principles, healthcare organizations can build AI systems that not only meet regulations but also protect patients and earn trust.

Core Principles for Building Safe and Secure AI Systems

Creating healthcare AI that prioritizes patient safety requires embedding protective measures at every stage of development. This approach goes beyond quick fixes, focusing instead on designing systems with built-in security, transparency, and accountability. Three key principles guide this process: identifying threats early, adhering to regulatory frameworks, and ensuring human oversight throughout the AI lifecycle.

Early Threat Modeling in AI Development

Spotting security risks before deployment is critical. Early threat modeling allows you to identify vulnerabilities unique to AI, such as data poisoning (where attackers tamper with training data), model manipulation (where adversaries change how AI makes decisions), and drift exploitation (where shifts in real-world data lead to failures). These weaknesses can cause serious issues, like misdiagnoses, incorrect treatments, or breaches of patient data.

The consequences can be severe. For example, a 2020 ransomware attack at a hospital disrupted key IT systems, delaying treatment and tragically resulting in a fatality [9].

To implement effective threat modeling, start by categorizing AI systems based on their potential impact on patient outcomes and data security [8]. Identify high-risk systems - such as those influencing clinical decisions or managing sensitive information - and establish risk management strategies that address bias, monitor outcomes, and ensure strong security measures and human oversight [8]. Collaboration is key: bring together experts from engineering, cybersecurity, regulatory affairs, quality assurance, and clinical teams to incorporate AI-specific risks into your broader risk management processes [7].
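The categorization step above can be expressed as a simple rule-based classifier over an inventory of AI systems. The sketch below is illustrative only. The record fields (`influences_clinical_decisions`, `handles_sensitive_data`, `patient_facing`), the three tiers, and the per-tier control lists are hypothetical assumptions chosen to mirror the criteria named in the paragraph. They are not a published standard.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    HIGH = "high"
    MODERATE = "moderate"
    LOW = "low"


@dataclass
class AISystemProfile:
    """Hypothetical inventory record describing one AI system."""
    name: str
    influences_clinical_decisions: bool
    handles_sensitive_data: bool  # e.g. protected health information
    patient_facing: bool


# Hypothetical minimum controls per tier, mirroring the strategies named above.
REQUIRED_CONTROLS = {
    RiskTier.HIGH: ["bias testing", "outcome monitoring", "security review", "human oversight"],
    RiskTier.MODERATE: ["outcome monitoring", "security review"],
    RiskTier.LOW: ["security review"],
}


def classify(system: AISystemProfile) -> RiskTier:
    # Clinical influence or sensitive data alone is enough for the high tier.
    if system.influences_clinical_decisions or system.handles_sensitive_data:
        return RiskTier.HIGH
    # Patient-facing tools with no clinical or sensitive-data exposure.
    if system.patient_facing:
        return RiskTier.MODERATE
    return RiskTier.LOW
```

For example, a sepsis alert model lands in the high tier and inherits every control in `REQUIRED_CONTROLS[RiskTier.HIGH]`. Keeping the rules explicit in code makes the tiering reviewable by the cross-functional team rather than buried in individual judgment.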

Once threats are identified, the next step is aligning with established regulatory standards.

Following Regulatory Frameworks

Regulatory frameworks provide clear guidelines for developing AI responsibly. Tools like the NIST AI Risk Management Framework and FDA guidance for AI/ML-enabled medical devices offer structured methods for managing risks, ensuring systems meet compliance standards and earn trust.

Start by forming an AI governance committee that includes members from legal, compliance, IT, clinical operations, and risk management teams [6]. This cross-functional group should oversee all AI activities, ensuring compliance with federal and state regulations such as HIPAA and FDA guidelines. Develop detailed policies and procedures for every stage of AI use, from procurement through implementation and monitoring, and incorporate security measures such as encryption, access controls, and audit trails. Conduct formal AI impact and risk assessments to address ethical, legal, operational, and privacy concerns [6].

Kaiser Permanente serves as a great example. When rolling out its assisted clinical documentation tool (Abridge) in March 2025, the organization ensured compliance with privacy laws, encrypted patient data, secured patient consent, and required doctors to review and edit AI-generated notes. Before full deployment, they conducted extensive quality assurance by gathering input from thousands of doctors to ensure the tool served all patients effectively, including non-English speakers. Their thorough approach was later published in a peer-reviewed journal [3].

Maintaining Human Oversight for Ethical AI

Ethical oversight goes beyond compliance, ensuring that human judgment remains central to AI use. AI should support, not replace, human decision-making. Keeping humans in the loop ensures accountability and helps catch errors that algorithms might miss - especially in healthcare, where clinical judgment and patient context are irreplaceable.

Design AI tools with transparency in mind. Clinicians need to understand why an AI system suggests a particular diagnosis or treatment plan; without this clarity, trust in the system may falter. As Ibrahim Habli from the University of York’s Department of Computer Science explains, "The prospect of patient harm caused by the decisions made by an artificial intelligence-based clinical tool is something to which current practices of accountability and safety worldwide have not yet adjusted" [2].

Establish clear governance structures with defined roles and responsibilities. Inform patients when AI is involved in their care and obtain their consent when needed. Additionally, provide comprehensive training for healthcare providers on how to use AI tools effectively, understand their limitations, and follow related policies. Continuous monitoring of AI systems - tracking data quality, clinical outcomes, and adverse events - is essential to quickly identify and address any issues, ensuring patient safety remains the top priority.
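One way to make "humans in the loop" enforceable rather than aspirational is a hard gate in software: AI output cannot reach the patient record until a named clinician has reviewed it, as in the Kaiser Permanente example where doctors review and edit AI-generated notes. The sketch below is a hypothetical illustration. `AIDraft`, `sign_off`, and `release_to_record` are invented names, not part of any vendor's product.

```python
from dataclasses import dataclass
from typing import Optional


class UnreviewedOutputError(Exception):
    """Raised when an AI output is used before a clinician has signed off on it."""


@dataclass
class AIDraft:
    """Hypothetical AI-generated clinical note awaiting clinician review."""
    patient_id: str
    text: str
    reviewed_by: Optional[str] = None
    final_text: Optional[str] = None


def sign_off(draft: AIDraft, clinician_id: str, edited_text: Optional[str] = None) -> AIDraft:
    # The clinician either accepts the AI text as-is or supplies an edited version.
    draft.reviewed_by = clinician_id
    draft.final_text = edited_text if edited_text is not None else draft.text
    return draft


def release_to_record(draft: AIDraft) -> str:
    # Hard gate: nothing reaches the patient record without a named reviewer.
    if draft.reviewed_by is None or draft.final_text is None:
        raise UnreviewedOutputError(f"draft for patient {draft.patient_id} has not been reviewed")
    return draft.final_text
```

Recording `reviewed_by` alongside the final text also supports accountability: every released note carries the identity of the clinician who approved it.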

Risk Assessment and Mitigation Strategies for Healthcare AI

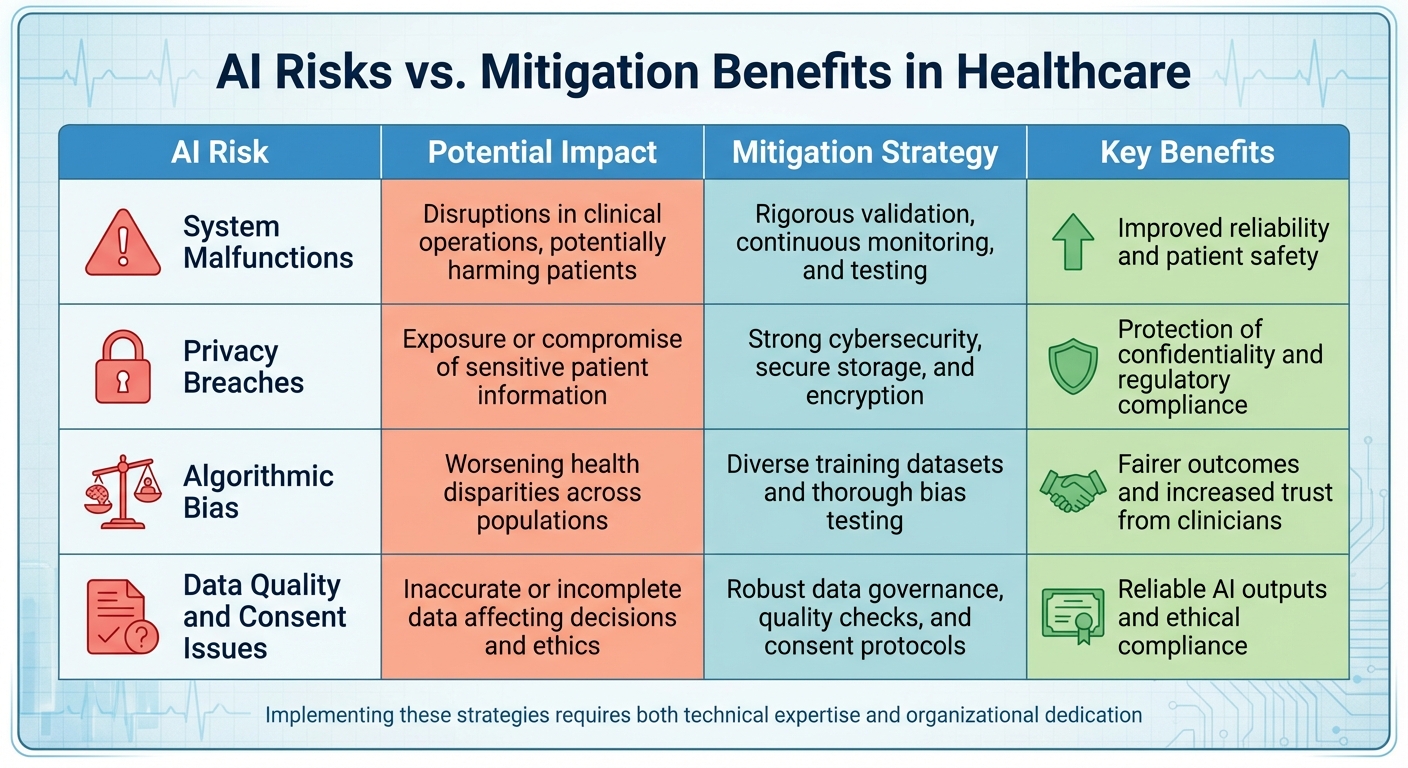

Healthcare AI Risks and Mitigation Strategies Comparison Table

Tackling the risks associated with AI in healthcare calls for a structured approach to address vulnerabilities. Patient safety incidents occur at an alarming rate of one in three hundred [10], and integrating AI into medical devices, IoT systems, and third-party platforms amplifies these risks. By prioritizing safety from the design stage, organizations can help ensure that AI systems not only comply with regulations but actively safeguard patient well-being.

How to Conduct AI Risk Assessments

Effective risk assessment begins with a comprehensive understanding of potential vulnerabilities across your entire tech ecosystem. This means evaluating more than just the AI algorithms - look at medical devices, IoT connections, and third-party supply chains that interact with AI systems. For example, in 2020, insecure storage systems in hundreds of hospitals, medical offices, and imaging centers exposed over 1 billion patient images [11].

To manage these risks, categorize AI systems based on their impact on patient outcomes and data security. Bring together experts from fields like cybersecurity, data privacy, computer science, and clinical operations. Systems with high-risk implications - such as those influencing clinical decisions or handling sensitive patient data - demand the most thorough evaluations. Pay special attention to AI-specific challenges like algorithmic transparency, model drift, and vulnerabilities to adversarial attacks.

When partnering with third-party AI solution providers, conduct detailed risk assessments to confirm their security practices align with your organizational standards. Evaluate their AI capabilities, data handling protocols, security measures, and compliance history. Tools like the NIST AI Risk Management Framework (AI RMF) can guide organizations in embedding trustworthiness into AI design, development, and deployment [12].

By anchoring these assessments in a safety-first mindset, organizations can better safeguard how AI is used in healthcare.

Using Technology to Reduce AI-Specific Risks

Once risks are identified, technology becomes a critical ally in managing them. A unified, data-driven platform is essential for addressing AI-specific challenges [13]. Tools like Censinet RiskOps™ provide healthcare organizations with a centralized view of AI-related risks, covering everything from third-party vendors to internal AI systems. This approach tackles issues like opaque decision-making processes and emerging cybersecurity threats targeting AI-dependent systems.

Censinet AI™ accelerates third-party risk assessments by automating key steps. It simplifies security questionnaires for vendors, summarizes evidence and documentation, and highlights integration details and risk exposures. The platform also generates detailed risk reports, blending automation with expert oversight. This balance allows risk teams to maintain control through customizable rules while efficiently managing cyber risks.

Additionally, the platform serves as a command center for AI governance. It routes critical findings to stakeholders - like AI governance committees - for timely review and approval. With real-time data presented in an intuitive AI risk dashboard, organizations can centralize their policies, risks, and tasks, ensuring issues are addressed by the right teams without delay.

AI Risks vs. Mitigation Benefits

Understanding the connection between specific AI risks and their mitigation strategies is key to prioritizing investments and allocating resources effectively. The table below highlights major risk areas in healthcare AI, along with strategies to address them and their benefits:

| AI Risk | Potential Impact | Mitigation Strategy | Key Benefits |
|---|---|---|---|
| System Malfunctions | Disruptions in clinical operations, potentially harming patients | Rigorous validation, continuous monitoring, and testing | Improved reliability and patient safety |
| Privacy Breaches | Exposure or compromise of sensitive patient information | Strong cybersecurity, secure storage, and encryption | Protection of confidentiality and regulatory compliance |
| Algorithmic Bias | Worsening health disparities across populations | Diverse training datasets and thorough bias testing | Fairer outcomes and increased trust from clinicians |
| Data Quality and Consent Issues | Inaccurate or incomplete data affecting decisions and ethics | Robust data governance, quality checks, and consent protocols | Reliable AI outputs and ethical compliance |

Implementing these strategies takes both technical expertise and organizational dedication. Beyond reducing risks, these measures foster trust among clinicians, protect patients, and prepare organizations to adapt as AI technologies and regulations evolve.
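The "thorough bias testing" strategy in the table above can start with something as simple as comparing a model's sensitivity (true-positive rate) across patient subgroups on a labeled validation set. The sketch below assumes binary labels and a hypothetical tolerance of 5 percentage points. Both the metric and the threshold are illustrative choices, and a real fairness review would look at several metrics and at clinically meaningful subgroups.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: iterable of (group, y_true, y_pred) with binary 0/1 labels.
    Returns the true-positive rate per group; groups with no positives are omitted."""
    true_pos = defaultdict(int)
    positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_pos[group] += 1
    return {g: true_pos[g] / positives[g] for g in positives}


def flag_disparities(records, max_gap=0.05):
    """Groups whose sensitivity trails the best-served group by more than max_gap."""
    rates = sensitivity_by_group(records)
    if not rates:
        return {}
    best = max(rates.values())
    return {g: r for g, r in rates.items() if best - r > max_gap}
```

A model that catches 75% of true cases in one group but only 50% in another would be flagged here, even if its overall accuracy looks acceptable.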

Continuous Monitoring and Incident Response for AI Systems

AI systems in healthcare demand constant attention to remain accurate, reliable, and safe. Algorithms, data inputs, and models can shift over time, so continuous evaluation is necessary to keep outcomes dependable. Without this vigilance, even the most robust systems can develop vulnerabilities [1].
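A common way to detect shifting data inputs is to compare the distribution of a model input in production against the distribution seen during validation. The sketch below uses the population stability index (PSI), a drift metric we have chosen for illustration; the source does not prescribe it. The bin edges and the 0.1 / 0.25 thresholds are widely quoted rules of thumb, not clinical standards, and should be treated as assumptions.

```python
import bisect
import math


def bin_proportions(values, edges):
    """Share of values falling in each bin defined by sorted cut points `edges`."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    total = len(values)
    return [c / total for c in counts]


def population_stability_index(expected, actual, eps=1e-6):
    """PSI = sum((a - e) * ln(a / e)) over bins; eps avoids log(0) on empty bins."""
    if len(expected) != len(actual):
        raise ValueError("expected and actual must have the same number of bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


def drift_status(psi):
    # Commonly quoted rule-of-thumb thresholds, not a clinical standard.
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "monitor"
    return "investigate"
```

In practice, `expected` would come from the validation data the model was approved on (for example, binned patient ages) and `actual` from a recent window of production inputs, with the check repeated on a schedule.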

Real-Time Monitoring for AI Vulnerabilities

Effective AI monitoring starts upstream. Unlike traditional reporting methods that focus on past incidents like infections or falls, AI can generate digital markers that highlight potential risks or near misses before harm occurs [15].

"Unlike traditional hospital reporting that focuses on downstream events like infections or falls, AI enables the creation of digital markers that can be used for upstream monitoring - identifying near misses and key risk indicators before harm occurs." – Randy Fagin, M.D., Chief Quality Officer at HCA Healthcare [15]

HCA Healthcare showcased this proactive approach in October 2025 by using AI to identify risks early through digital markers. They also collaborated with GE Healthcare to develop an AI algorithm for fetal heart rate monitoring, addressing critical workforce shortages. As Chris DeRienzo, M.D., from the American Hospital Association, noted:

"There could never be enough human workforce to be able to watch every single one of those strips… That is where AI is making a difference today." – Chris DeRienzo, M.D., Chief Physician Executive, American Hospital Association [15]

Effective real-time monitoring must cover multiple dimensions simultaneously. This includes addressing data quality issues like bias, misclassification, and outliers, which can undermine AI accuracy [14]. Automated tools such as Amazon CloudWatch can trigger alerts for real-time risk detection [16]. Additionally, systems must safeguard privacy by identifying personally identifiable information (PII) and defending against adversarial attacks, such as prompt injections [16]. Transparent documentation of data sources, design decisions, and system limitations is equally critical to ensure AI outputs remain understandable and trustworthy [16].
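To illustrate the PII-detection step, the sketch below redacts a few obvious identifier formats from text before it is logged or sent to a model. The patterns are hypothetical and deliberately narrow. HIPAA recognizes many more identifiers (names, dates, medical record numbers, addresses), so production systems typically rely on a dedicated detection service or model rather than a handful of regular expressions.

```python
import re

# Hypothetical, deliberately narrow patterns; real PHI detection needs far more coverage.
PII_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    # US-style phone numbers such as (555) 123-4567 or 555-123-4567.
    "PHONE": re.compile(r"(?<!\d)(?:\(\d{3}\)\s?|\d{3}[-.])\d{3}[-.]\d{4}(?!\d)"),
}


def redact_pii(text):
    """Replace each match with a [LABEL] placeholder; return redacted text and labels found."""
    findings = []
    for label, pattern in PII_PATTERNS.items():
        findings.extend([label] * len(pattern.findall(text)))
        text = pattern.sub(f"[{label}]", text)
    return text, findings
```

The returned `findings` list can feed an alerting system, so that repeated PII in prompts or logs becomes a monitored signal rather than a silent leak.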

The frequency of monitoring should align with the level of risk associated with the AI tool. For instance, systems used in clinical decision-making require more frequent checks than those handling administrative tasks [1]. Establishing post-implementation surveillance programs across various real-world settings can help share best practices and detect issues early [17]. This proactive approach enables rapid, automated responses when incidents arise.
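Risk-aligned monitoring frequency can be tracked with a small schedule keyed by tool category. The categories and intervals below are hypothetical placeholders. Actual cadences should come from the governance committee and each tool's intended use.

```python
from datetime import date, timedelta

# Hypothetical review intervals: clinical decision tools are checked most often.
REVIEW_INTERVALS = {
    "clinical_decision_support": timedelta(days=7),
    "patient_communication": timedelta(days=30),
    "administrative": timedelta(days=90),
}


def next_review(category, last_review):
    """Date the next review is due for a tool in the given category."""
    return last_review + REVIEW_INTERVALS[category]


def overdue(systems, today):
    """systems: iterable of (name, category, last_review_date); returns overdue names."""
    return [name for name, category, last in systems if next_review(category, last) < today]
```

For example, a clinical decision support model last reviewed on 1 January is overdue by mid-January, while an administrative tool reviewed the same day is not due until April.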

Automated Response Playbooks for AI Incidents

When AI systems encounter problems, swift action is essential. Automated response playbooks allow healthcare organizations to address issues promptly while staying compliant with regulations. These playbooks are most effective when integrated into a learning healthcare framework, which supports ongoing evaluation and adaptation [18].

"A major asset of AI is that continuous learning is necessary to ensure optimization and resilience over time from known challenges such as dataset shifts and noise." – Badal et al. [18]

For example, Censinet RiskOps™ assigns incident tasks to the appropriate stakeholders, consolidating AI-related policies, risks, and responsibilities. This ensures that the right teams address problems quickly and efficiently.
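Independent of any particular platform, the core of an automated playbook is a mapping from incident type to an ordered list of actions and owners. The sketch below is a generic, hypothetical illustration and does not describe how Censinet RiskOps™ works internally. Incident types, actions, and role names are all assumptions; real playbooks come from the governance committee and the organization's regulatory obligations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlaybookStep:
    action: str
    owner: str  # hypothetical role name


# Hypothetical playbooks keyed by incident type.
PLAYBOOKS = {
    "performance_drift": [
        PlaybookStep("switch affected outputs to mandatory clinician review", "clinical lead"),
        PlaybookStep("compare live inputs against validation baseline", "data science"),
        PlaybookStep("report findings to AI governance committee", "risk management"),
    ],
    "suspected_phi_exposure": [
        PlaybookStep("revoke affected credentials and isolate the service", "security"),
        PlaybookStep("start breach-notification assessment", "privacy officer"),
        PlaybookStep("report findings to AI governance committee", "risk management"),
    ],
}

# Unrecognized incidents still get an owner instead of falling through the cracks.
DEFAULT_PLAYBOOK = [PlaybookStep("triage and classify the incident", "risk management")]


def tasks_for(incident_type):
    """Return (owner, action) tasks in the order they should be executed."""
    steps = PLAYBOOKS.get(incident_type, DEFAULT_PLAYBOOK)
    return [(s.owner, s.action) for s in steps]
```

Note that the drift playbook's first step falls back to human review, so clinicians stay in control while the technical investigation runs.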

Several key elements support effective automated responses. Explainability and interpretability are critical, as they provide clarity on how AI decisions are made - essential for diagnosing issues and crafting solutions [18]. Accountability measures, such as audit trails, document every action in the AI lifecycle, aiding thorough post-incident analysis. Adaptive change control enables quick adjustments during deployment, while designing AI tools to be easily retrainable for local populations enhances flexibility. Prioritizing straightforward, user-friendly algorithms also promotes shared decision-making and greater transparency [18]. Together, these strategies integrate seamlessly with continuous monitoring, ensuring compliance and swift resolution of emerging challenges.
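Audit trails are most useful for post-incident analysis when later tampering can be detected. A common technique, which we assume here rather than take from the source, is hash chaining: each entry stores the SHA-256 hash of the previous one, so editing or deleting an earlier record breaks verification. This makes the log tamper-evident, not tamper-proof; it should still be written to protected, write-once storage.

```python
import hashlib
import json
import time


class AuditTrail:
    """Append-only log in which each entry commits to the hash of the previous entry."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def append(self, actor, action, details, timestamp=None):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "ts": time.time() if timestamp is None else timestamp,
            "actor": actor,
            "action": action,
            "details": details,
            "prev": prev_hash,
        }
        body["hash"] = self._digest(body)
        self.entries.append(body)
        return body["hash"]

    @staticmethod
    def _digest(body):
        # Hash every field except the stored hash itself, with stable key order.
        payload = {k: v for k, v in body.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode("utf-8")).hexdigest()

    def verify(self):
        """True only if every entry is unmodified and correctly linked to its predecessor."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True
```

Logging both the model's output and any clinician override, as separate entries, gives investigators the full sequence of events behind an incident.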

Maintaining HIPAA and Regulatory Compliance

Continuous monitoring plays a vital role in maintaining adherence to regulatory standards like HIPAA. Patient privacy and data security are cornerstones of federal and state laws [3]. Without ongoing oversight, organizations risk missing data breaches, security threats, or performance issues that could compromise both patient information and institutional integrity [1].

Kaiser Permanente’s rollout of Abridge in March 2025 highlighted the importance of rigorous compliance measures, including data encryption, patient consent processes, and ongoing quality checks [3].

"AI tools require a vast amount of data. Ongoing monitoring, quality control, and safeguarding are necessary to protect the safety and privacy of our members and patients." – Daniel Yang, M.D., Vice President, Artificial Intelligence and Emerging Technologies, Kaiser Permanente [3]

To ensure compliance, organizations must establish robust policies and governance frameworks for regularly evaluating AI tools. This includes conducting regular security assessments, vulnerability scans, and keeping data up-to-date. Monitoring also helps uncover and address biases in AI algorithms, a crucial step toward achieving equitable and high-quality outcomes. Ethical review processes, supported by dedicated committees to evaluate AI projects, further enhance responsible and compliant use of these technologies.


Building Resilient and Scalable AI Systems for the Future

Healthcare AI systems constantly grapple with evolving threats, changing regulations, and advancing technology. To ensure these systems remain reliable and protect patient safety, they must be designed to handle disruptions effectively. This calls for moving away from static safety checks and embracing dynamic assurance models - systems that adapt to shifting conditions and risks [2][16]. These models build on the principles of continuous monitoring and incident response, discussed earlier.

Resilience Testing and Learning from Incidents

Resilience testing plays a critical role in identifying and addressing potential vulnerabilities in AI systems. To maintain consistent performance during disruptions, healthcare organizations should incorporate redundancy, fault tolerance, and anomaly detection into their systems [4]. These measures expand on the "safety-by-design" principles and ensure systems are prepared for unexpected challenges.

A "learning healthcare system" approach enables continuous improvement by treating incidents as opportunities to enhance AI tools. Systems designed for ongoing evaluation can adapt to dataset shifts and noise, focusing on retraining for specific local populations rather than chasing broad generalizability, which is often impractical [5]. Post-incident analysis, supported by audit trails, helps uncover root causes and implement fixes to prevent similar issues in the future.

Adapting to New Standards and Best Practices

As regulatory frameworks and industry standards evolve, healthcare AI systems must keep pace. Organizations are expected to follow established guidelines like the NIST cybersecurity standards and the Federal Information Security Modernization Act, while staying prepared for new requirements [8]. To manage this, robust governance frameworks should oversee the entire AI lifecycle, covering areas such as risk management, compliance, and incident response [8][16].

With the constant shifts in technology, care delivery methods, and patient preferences, regular assessments of system reliability are essential. Proactive governance ensures systems are ready for both current risks and future regulatory shifts [3]. For example, tools like Censinet RiskOps™ provide centralized management of AI-related policies and risks, routing critical findings to appropriate stakeholders, such as AI governance committees, for review and action.

In addition to adapting to standards, secure backups and redundancy planning are vital for ensuring system resilience.

Secure Model Backups and Redundancy Planning

System continuity during disruptions hinges on having secure model backups and redundancy measures in place. These measures help systems resist, and recover from, adversarial attacks or manipulations that exploit system vulnerabilities [4]. Key safeguards include input/output guardrails, PII detection mechanisms, and validator models, all of which protect sensitive data and prevent inaccurate outputs [16][19].

A "security by design" philosophy integrates cybersecurity measures from the very beginning of system development, rather than as an afterthought [16][19]. Secure cloud and data platforms form the foundation of resilient AI systems [8]. Regular backups, combined with proactive threat modeling for AI-specific risks - such as prompt injection attacks - help prevent data breaches and unauthorized access [8][16].

Finally, maintaining human oversight and clear accountability mechanisms is crucial. This ensures AI systems operate ethically, especially when dealing with complex or assistive functions [2][8][16].

Conclusion: Building AI That Protects Patient Safety

For healthcare AI systems to truly make a difference, safety must be at their core. Generative AI introduces challenges like confabulation and bias, which need to be addressed early to comply with regulations and earn trust [16][15].

A proactive "safety by design and testing" strategy is key. This involves preventing errors, maintaining continuous monitoring, and ensuring human oversight at every stage [19]. As federal agencies refine frameworks for safe AI use, healthcare organizations must implement strong governance structures that integrate operations, finance, and clinical teams [20][15]. This approach aligns with growing expert agreement on the importance of safety-focused AI systems.

Interestingly, experts caution that ignoring AI could carry even greater risks. Organizations that fail to embrace AI may miss critical chances to prevent harm and enhance care [20]. This reinforces the idea that creating resilient and scalable AI systems isn't just a technical task - it's a necessity for protecting patient safety. These principles come to life through advanced tools designed for this purpose.

For example, Censinet RiskOps™ simplifies managing AI policies, risks, and tasks by offering automated workflows and real-time dashboards. This ensures that human oversight works hand-in-hand with automation.

Balancing the potential of AI with patient safety requires constant vigilance, a solid infrastructure, and a commitment to adaptability. This safety-first mindset reflects the principles emphasized throughout this guide.

FAQs

How does early threat modeling help mitigate AI risks in healthcare?

Early threat modeling is a key step in tackling AI-related risks within healthcare. By spotting vulnerabilities and evaluating potential threats before deploying systems, developers can address critical issues like data breaches, algorithmic bias, and safety concerns right from the start.

This forward-thinking approach enables healthcare organizations to put strong safeguards in place, ensuring they meet regulations such as HIPAA while building AI systems that are secure and dependable. The end result? Safer clinical environments that reduce errors and protect both patients and healthcare providers.

Why is human oversight important for ethical AI in healthcare?

Human involvement is essential to keep AI systems in healthcare operating ethically. It ensures that decisions made by AI are carefully reviewed, biases are spotted and corrected, and accountability is upheld. This approach minimizes risks and supports the responsible, patient-focused application of AI tools.

By closely supervising and directing AI systems, people ensure these technologies meet ethical guidelines, comply with regulations, and adapt to the specific demands of healthcare settings.

Why is ongoing monitoring essential for AI systems in healthcare?

Continuous monitoring plays a key role in keeping healthcare AI systems safe, reliable, and effective. It ensures patient safety by catching potential issues, such as errors or biases, early on. By regularly evaluating how these systems perform, healthcare providers can address problems swiftly, preventing them from affecting patient care.

This ongoing oversight also protects sensitive data, ensures compliance with regulations, and allows systems to adjust to the ever-changing demands of healthcare. These efforts are crucial for establishing trust in AI-powered solutions.