The Safety-Performance Trade-off: Balancing AI Capability with Risk Control

Post Summary

AI in healthcare IT can process vast amounts of data, predict threats, and automate tasks, but it also introduces serious risks: data breaches, black-box decisions, and AI-assisted ransomware attacks. The key challenge is to balance AI's benefits with strict oversight to ensure safety and compliance.

Key Takeaways:

- Benefits of AI in Healthcare IT:

  - Real-time threat detection and prevention.

  - Simplified compliance and reduced human error.

  - Predictive analytics to anticipate risks early.

- Risks of AI:

  - Opaque decision-making ("black box") complicates compliance.

  - Vulnerabilities to adversarial attacks and data breaches.

  - AI-powered ransomware targets critical healthcare systems.

- Risk Management Solutions:

  - Use frameworks like the NIST AI Risk Management Framework for structured oversight.

  - Implement tools like Censinet AI for centralized risk governance.

  - Combine AI automation with human oversight for high-risk scenarios.

Healthcare organizations must adopt a hybrid governance approach, combining automation with human judgment, to safeguard patient data and ensure AI systems operate responsibly. By focusing on proactive monitoring, incident recovery, and secure development practices, they can manage risks effectively while leveraging AI's capabilities.

AI Risks in Healthcare IT Environments

Healthcare IT systems face unique challenges when it comes to managing the risks associated with AI. Understanding these threats is essential for creating strong defenses. Below, we explore how the lack of transparency, targeted attacks, and ransomware tactics pose serious dangers to healthcare IT.

Black Box AI and Regulatory Compliance Challenges

Many AI models operate as "black boxes", meaning their decision-making processes are hidden from view. This lack of clarity creates major hurdles for healthcare organizations that must adhere to strict regulations like HIPAA and FDA standards. For example, when an AI system determines access to patient records, calculates risk scores, or enforces security protocols, administrators often can’t explain the reasoning behind those decisions. This lack of transparency complicates audits and makes it harder to meet compliance requirements.

But these challenges don’t stop at regulatory issues. AI systems are also vulnerable to deliberate attacks.

Adversarial Attacks and Model Poisoning

Attackers can exploit AI systems through adversarial attacks and model poisoning. These tactics manipulate a model's inputs or algorithms, or corrupt its training data, to compromise accuracy, potentially putting patient safety at risk [1] [3]. Data poisoning, algorithm tampering, and exploitation of model drift can all degrade performance. Because AI systems are opaque, such attacks are hard to detect quickly, which makes continuous monitoring and rigorous validation essential.
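One way to make "continuous monitoring" concrete is to compare the distribution of a model's recent output scores against a trusted baseline. The sketch below uses the Population Stability Index (PSI) for that comparison. It is a minimal illustration, not a method taken from the cited sources: the function name, the equal-width binning, and the 0.25 alert threshold (a commonly quoted rule of thumb) are all assumptions, and both samples are assumed to be non-empty.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float], current: Sequence[float], bins: int = 10
) -> float:
    """PSI between two score samples, using equal-width bins over the baseline range."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all baseline values are equal

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = max(0, min(bins - 1, idx))  # clamp out-of-range values into edge bins
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    p = proportions(baseline)
    q = proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Hypothetical data: validation-time scores versus scores that have shifted upward.
baseline_scores = [i / 100 for i in range(100)]
drifted_scores = [0.9 + i / 1000 for i in range(100)]

ALERT_THRESHOLD = 0.25  # assumed rule-of-thumb cutoff; tune per system
psi = population_stability_index(baseline_scores, drifted_scores)
if psi > ALERT_THRESHOLD:
    print("Score distribution has shifted; route the model for human validation.")
```

A high PSI does not prove that an attack occurred. It only signals that the model's behavior has moved away from what was validated, which is the trigger for the human review described above.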

AI-Powered Ransomware and Supply Chain Vulnerabilities

Cybercriminals are increasingly using AI to make ransomware attacks more effective. AI-driven ransomware can pinpoint critical targets, such as electronic health record (EHR) systems or imaging servers, timing attacks to cause maximum disruption while adapting to avoid detection [4]. On top of that, generative AI tools are being used to create highly convincing phishing emails, making it easier to deceive even well-trained staff. Weaknesses in third-party systems further amplify these risks, exposing entire healthcare networks to potential breaches.

AI Risk Management Frameworks and Tools

Managing AI risks in healthcare requires a careful balance between advancing innovation and ensuring safety.

Using the NIST AI Risk Management Framework

The NIST AI Risk Management Framework (AI RMF), introduced on January 26, 2023 [5], offers a voluntary, consensus-driven guide to addressing AI-related risks. It revolves around four core functions that help organizations navigate the risk management process [6]:

- Govern: Define clear responsibilities for AI safety and establish oversight mechanisms.

- Map: Identify the functions of AI systems and assess potential vulnerabilities.

- Measure: Use metrics to test AI systems, converting risks into actionable insights.

- Manage: Apply controls based on assessed risks and maintain ongoing performance monitoring.

To support practical implementation, NIST provides customizable "profiles" tailored to specific needs. For instance, a generative AI profile released in 2024 includes over 200 actions designed to address its unique challenges. These profiles are particularly useful for applications like diagnostic AI tools, helping organizations align their risk management efforts with real-world scenarios.

Adopting the NIST framework also helps healthcare organizations align with emerging sector guidance. For example, the Health Sector Coordinating Council (HSCC) Cybersecurity Working Group has incorporated the NIST AI RMF into its forthcoming 2026 guidance on managing AI cybersecurity risks [3].

Turning these guidelines into actionable practices requires robust risk governance tools.

Censinet AI and Censinet AITM for Risk Governance

To operationalize structured frameworks like NIST, tools such as Censinet RiskOps™, Censinet AITM, and Censinet AI offer comprehensive solutions for managing AI risks.

- Censinet RiskOps™ serves as a centralized platform that simplifies the management of AI policies, risks, and tasks. It provides real-time dashboards to keep organizations informed and organized.

- Censinet AITM focuses on third-party risk assessments. It streamlines vendor evaluations by enabling quick completion of questionnaires, summarizing vendor-provided evidence, and identifying fourth-party risk exposures. The tool generates concise risk summary reports, making it easier for healthcare organizations to address risks effectively.

- Censinet AI introduces automation with human oversight. It supports evidence validation, policy creation, and risk mitigation through configurable rules and integrated review processes. Key insights are routed to the appropriate stakeholders for timely review, supporting coordination across Governance, Risk, and Compliance teams.

These tools not only simplify the risk management process but also ensure that automation complements human decision-making, keeping safety and oversight at the forefront.


Hybrid Governance: Combining AI Automation with Human Oversight

AI Governance Models in Healthcare: Comparing Autonomous, Human-Guided, and Manual Approaches

Hybrid governance combines the efficiency of AI automation with the vigilance of human oversight. By building on established risk management frameworks, this approach helps mitigate risks like data breaches and algorithmic opacity. For healthcare organizations, adopting hybrid governance can strengthen cybersecurity while keeping patient safety and data privacy as priorities.

Maintaining Control with Configurable AI Rules

Hybrid governance creates clear guidelines that outline roles, responsibilities, and oversight throughout an AI system's lifecycle [3]. This setup allows organizations to automate routine tasks while reserving human intervention for critical decisions.

By using configurable rules and a five-level autonomy scale, organizations can align the level of human oversight with the risk profile of their AI tools. High-risk applications, such as those impacting clinical decisions or handling sensitive patient data, demand more rigorous human review [3].
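The idea of matching oversight to risk can be sketched as a small rule table. The example below is illustrative only: the source says the autonomy scale has five levels but does not define them, so the level names, the `AITool` fields, and the specific caps are hypothetical placeholders that an organization would replace with its own policy.

```python
from dataclasses import dataclass
from enum import IntEnum


class Autonomy(IntEnum):
    """Five levels, 1 = least autonomous. Names are placeholders, not from the source."""
    LEVEL_1 = 1
    LEVEL_2 = 2
    LEVEL_3 = 3
    LEVEL_4 = 4
    LEVEL_5 = 5


@dataclass(frozen=True)
class AITool:
    name: str
    affects_clinical_decisions: bool
    handles_patient_data: bool


def autonomy_cap(tool: AITool) -> Autonomy:
    """Return the highest autonomy level the tool may run at (assumed policy)."""
    if tool.affects_clinical_decisions:
        return Autonomy.LEVEL_2
    if tool.handles_patient_data:
        return Autonomy.LEVEL_3
    return Autonomy.LEVEL_5


def needs_human_review(tool: AITool, requested: Autonomy) -> bool:
    """A request above the tool's cap is routed to a human reviewer."""
    return requested > autonomy_cap(tool)


# Hypothetical tools used only to exercise the rules.
triage_model = AITool("triage-scoring", affects_clinical_decisions=True, handles_patient_data=True)
log_summarizer = AITool("log-summarizer", affects_clinical_decisions=False, handles_patient_data=False)

print(needs_human_review(triage_model, Autonomy.LEVEL_4))
print(needs_human_review(log_summarizer, Autonomy.LEVEL_4))
```

Keeping the caps in one function makes the policy easy to audit and change: tightening oversight for a class of tools is a one-line edit rather than a change scattered across workflows.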

"The 'no-blame culture' of Clinical Risk Management, with its focus on seeing mistakes as opportunities for learning and improvement and not as opportunities to attribute individual blame, aligns perfectly with the challenges posed by AI-related incidents and cybersecurity risks, because it allows for the adoption of systems-based analysis, by holistically assessing the interactions between people, processes and technologies, identifying vulnerabilities and critical points and promoting solutions that strengthen the entire system, ensuring safety, reliability and sustainability of clinical practices even in the presence of complex technological tools." - Di Palma et al. [1]

This balance between automation and oversight becomes even clearer when comparing various governance models.

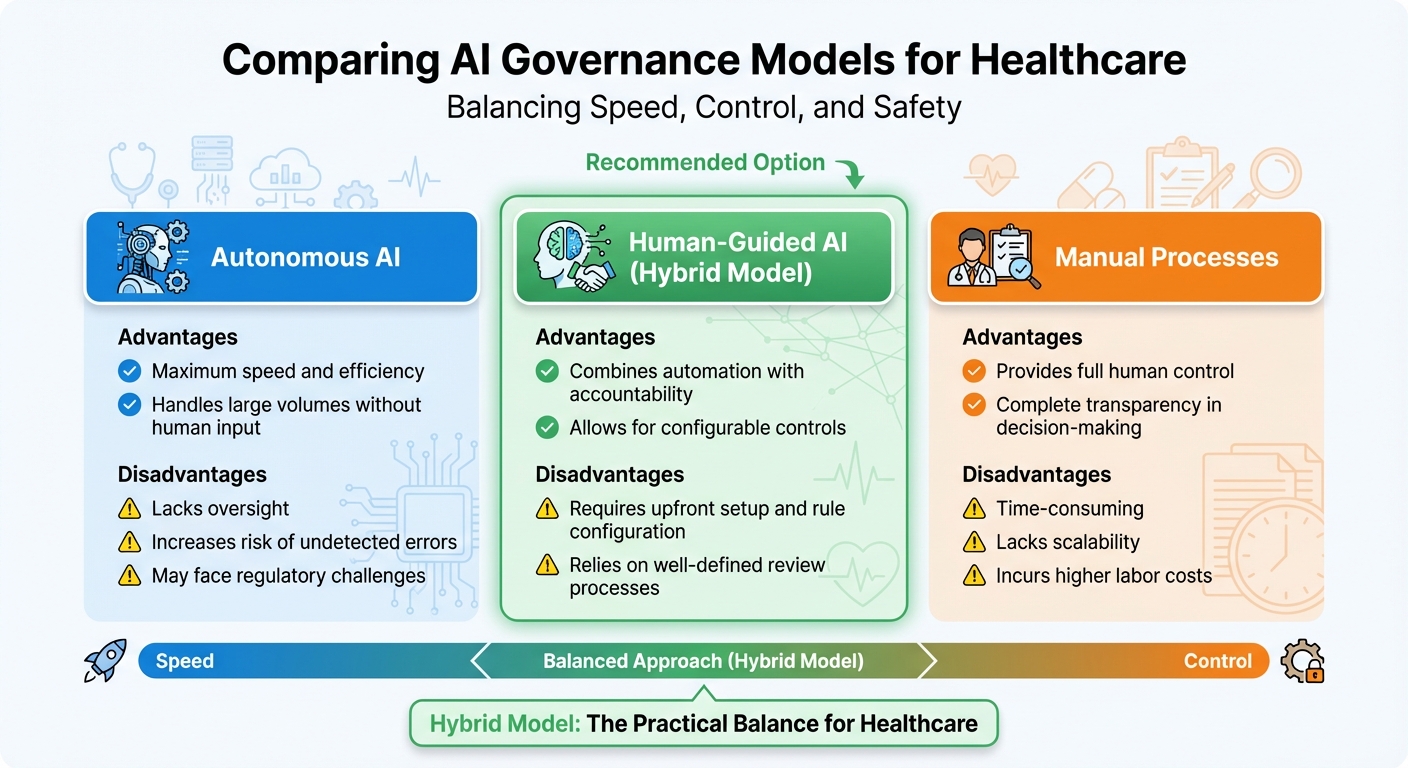

Comparing AI Governance Models

Different governance models come with their own pros and cons, particularly when it comes to balancing speed and control. Understanding these trade-offs helps organizations select the best fit for their needs:

| Governance Model | Advantages | Disadvantages |
|---|---|---|
| Autonomous AI | Offers maximum speed and efficiency; handles large volumes without human input | Lacks oversight; increases the risk of undetected errors; may face regulatory challenges |
| Human-Guided AI | Combines automation with accountability; allows for configurable controls | Requires upfront setup and rule configuration; relies on well-defined review processes |
| Manual Processes | Provides full human control and complete transparency in decision-making | Time-consuming; lacks scalability; incurs higher labor costs |

The hybrid model, also known as human-guided AI, strikes a practical balance. It enables organizations to scale their risk management efforts while maintaining the human oversight necessary to safeguard patient care [3].

Conclusion: Measuring Success in AI Risk Management

Managing AI risks effectively requires a careful balance between encouraging innovation and ensuring safety, all while focusing on measurable outcomes. For healthcare organizations, this means moving beyond outdated compliance checklists and adopting strategic Key Performance Indicators (KPIs) that connect risk management efforts directly to business objectives. Instead of depending solely on retrospective scores, organizations should prioritize predictive indicators and use AI-powered tools to automate data collection and provide real-time insights from complex risk-related data [7].

A proactive approach involves continuous evaluation throughout the AI lifecycle. By fostering a no-blame culture in Clinical Risk Management, organizations can create an environment where mistakes are viewed as opportunities to learn, ultimately fortifying systems against future risks [1]. With tools like real-time monitoring, predictive analytics, and resilience testing, reactive measures can be replaced with proactive safeguards.

Success also hinges on thoroughly cataloging each AI system's roles, data dependencies, and security considerations. This classification enables organizations to determine the level of autonomy for each system and assign the right amount of human oversight [3]. High-risk applications can then undergo more rigorous reviews, while routine processes benefit from automation. By maintaining a detailed AI inventory, healthcare organizations can tailor oversight in line with hybrid governance principles.
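An AI inventory of this kind can start as a simple structured record per system. The sketch below is one possible shape, under stated assumptions: the field names, the two-tier `RiskTier`, and the example entries are hypothetical and not drawn from the cited guidance.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class RiskTier(Enum):
    ROUTINE = "routine"
    HIGH = "high"


@dataclass
class InventoryEntry:
    name: str
    role: str                                                 # what the system does
    data_dependencies: List[str] = field(default_factory=list)
    security_notes: List[str] = field(default_factory=list)
    risk_tier: RiskTier = RiskTier.ROUTINE


def rigorous_review_queue(inventory: List[InventoryEntry]) -> List[str]:
    """Names of high-risk systems, sorted, for the more rigorous review track."""
    return sorted(e.name for e in inventory if e.risk_tier is RiskTier.HIGH)


def incomplete_entries(inventory: List[InventoryEntry]) -> List[str]:
    """Entries that cannot yet be classified because dependencies are undocumented."""
    return [e.name for e in inventory if not e.data_dependencies]


# Hypothetical inventory used only for illustration.
inventory = [
    InventoryEntry("sepsis-alerting", "clinical decision support",
                   ["EHR vitals feed"], ["PHI in prompts"], RiskTier.HIGH),
    InventoryEntry("vendor-questionnaire-summarizer", "third-party risk triage",
                   ["vendor evidence uploads"]),
    InventoryEntry("imaging-prioritizer", "worklist ordering", [], [], RiskTier.HIGH),
]

print(rigorous_review_queue(inventory))
print(incomplete_entries(inventory))
```

The second helper reflects a practical point: a system whose data dependencies are unknown cannot be assigned an autonomy level with any confidence, so gaps in the inventory are worth surfacing on their own.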

Next Steps for Healthcare Organizations

Armed with these measurement strategies, healthcare organizations can take actionable steps to strengthen AI risk management.

- Real-time monitoring: Establish systems to identify and address risks before they affect care or compliance [2].

- Incident recovery plans: Develop AI-specific playbooks to guide detection, response, and recovery from incidents [3].

- Governance maturity models: Use these models to evaluate current capabilities, pinpoint gaps, and prioritize improvements in AI cybersecurity risk management [3].

- Secure-by-design principles: Incorporate security considerations into AI development from the earliest stages, aligning with frameworks like the NIST AI Risk Management Framework [3].

- Ongoing education: Invest in training programs to ensure teams understand AI fundamentals and can apply appropriate control measures [3].

FAQs

How can healthcare organizations ensure AI systems comply with HIPAA while using black-box models?

Healthcare organizations can support HIPAA compliance, even when working with complex black-box AI models, by putting solid governance frameworks in place and using Explainable AI (XAI) techniques. These techniques shed light on how an AI system reaches its decisions, promoting both transparency and accountability.

In addition to this, conducting regular risk assessments is essential for spotting potential weaknesses. Maintaining human oversight is equally important to review and validate decisions made by AI systems. Together, these measures help healthcare providers meet regulatory standards while safeguarding sensitive patient information.

How can healthcare organizations protect AI systems from cyber threats like ransomware and adversarial attacks?

To protect AI systems in healthcare, it's essential to focus on continuous network monitoring. This helps identify unusual activities early, allowing for quicker responses to potential threats. Pairing this with AI-driven threat detection tools adds an extra layer of defense, offering advanced protection against cyber risks.

Organizations should also enforce strict access controls to ensure that only authorized personnel can access sensitive systems. At the same time, keeping legacy systems updated is key to addressing known vulnerabilities and preventing exploitation.

Conducting regular AI system audits plays a vital role in spotting and mitigating potential risks. On top of that, adopting secure data-sharing solutions, like blockchain technology, can help maintain data integrity and guard against tampering. Together, these strategies enable healthcare providers to embrace technological advancements while maintaining strong cybersecurity practices.
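The tamper-evidence property mentioned above comes from hash chaining, where each record's hash depends on the record before it. The sketch below shows only that core idea using Python's standard `hashlib`. It is not a blockchain (there is no distribution or consensus), and the function names and record fields are assumptions made for illustration.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder hash that precedes the first record


def _digest(prev_hash: str, record: Dict[str, Any]) -> str:
    """SHA-256 over the previous hash plus a canonical JSON form of the record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("utf-8") + payload).hexdigest()


def append(chain: List[Dict[str, Any]], record: Dict[str, Any]) -> None:
    """Add a record whose hash is linked to the previous entry."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"record": record, "hash": _digest(prev_hash, record)})


def verify(chain: List[Dict[str, Any]]) -> bool:
    """Recompute every link; any edited record breaks the chain from that point on."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical audit events.
audit_log: List[Dict[str, Any]] = []
append(audit_log, {"event": "model_deployed", "model": "risk-scorer-v2"})
append(audit_log, {"event": "threshold_changed", "value": 0.7})
intact_before = verify(audit_log)

audit_log[0]["record"]["model"] = "risk-scorer-v3"  # simulate tampering
intact_after = verify(audit_log)
```

In this sketch, `intact_before` is true and `intact_after` is false, because changing an earlier record invalidates its stored hash. A production system would also need to protect the stored hashes themselves, for example by anchoring them in a separate system.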

How does hybrid governance help manage AI risks in healthcare?

Hybrid governance strengthens AI risk management in healthcare by pairing AI automation with human oversight, so that technical safeguards are backed by human and ethical review. This method brings together experts from various fields, ensuring that AI policies and practices are shaped by a wide range of perspectives.

By combining clear regulatory frameworks with strong risk controls, hybrid governance fosters trust, enhances accountability, and ensures AI systems are designed to be both safe and effective. This approach is especially important in healthcare, where protecting patient safety and securing sensitive data are paramount.