Secure by Design: Building Cyber-Resilient Medical AI Systems

Post Summary

"Secure by design" ensures that cybersecurity is embedded into medical AI systems from the start, prioritizing resilience against evolving threats.

Cybersecurity protects sensitive patient data, ensures the safe operation of AI-enabled devices, and prevents disruptions caused by cyberattacks.

Risks include data poisoning, ransomware, model manipulation, and vulnerabilities in AI-powered medical devices.

Secure-by-design principles proactively address vulnerabilities, integrate real-time threat detection, and ensure compliance with regulations like HIPAA and FDA guidelines.

Organizations can implement encryption, adopt zero-trust architectures, conduct regular audits, and use AI-driven threat detection tools.

The future includes AI-driven security solutions, stronger regulations, and proactive risk management to ensure resilience against emerging threats.

Medical AI systems are transforming healthcare but face growing risks from cyberattacks. These systems, handling sensitive patient data, are targeted for their vulnerabilities, with healthcare breaches increasing by 23% annually. A single breach can cost millions and jeopardize patient safety. Integrating security measures from the start - known as "Secure by Design" - is essential to protect these systems against threats like data poisoning, model manipulation, and ransomware.

Key takeaways:

This article outlines how to build secure medical AI systems, mitigate risks, and comply with evolving regulations to safeguard patient data and healthcare operations.

Core Principles of Secure by Design

When it comes to medical AI, security can't be an afterthought - it needs to be built into every stage of development. The Healthcare and Public Health Sector Coordinating Council (HSCC) has emphasized this through its guidance, which highlights secure-by-design principles as a crucial focus area. Their Secure by Design Medical subgroup is working to integrate cybersecurity into AI-enabled medical devices, addressing threats like data poisoning and model manipulation while adhering to both FDA guidance and NIST frameworks [1].

At the heart of this approach are three key elements: thorough risk assessments, robust data protection, and strict access controls. In February 2025, the FUTURE-AI Consortium - comprising 117 experts - outlined six principles to ensure healthcare AI is trustworthy: Fairness, Universality, Traceability, Usability, Robustness, and Explainability. Their framework is designed to make AI tools "technically robust, clinically safe, ethically sound, and legally compliant" throughout their lifecycle [8]. These principles serve as the foundation for strengthening AI security.

Risk Assessment Frameworks

Traditional IT checklists won’t cut it for medical AI. This field comes with unique vulnerabilities, like adversarial attacks that can cause diagnostic errors by altering a mere 0.001% of input data [7]. To address these risks, the HSCC is developing an AI Security Risk Taxonomy to identify and evaluate threats specific to AI [1]. Instead of relying solely on periodic audits, continuous monitoring is essential. Healthcare organizations need tools to assess both their real-time security posture and ongoing risks, including robustness testing to analyze how models perform under attack conditions. Collaboration across engineering, cybersecurity, regulatory, quality assurance, and clinical teams ensures AI-specific risks are accounted for from the very beginning [1].

But risk assessment is only part of the equation - protecting the integrity of patient data is just as critical.

Data Encryption and Privacy Controls

Securing patient data requires a multi-layered defense strategy. The updated HIPAA Security Rule now mandates encryption for electronic personal health information (ePHI) while it’s stored or transmitted, eliminating the previous "addressable" standard [2]. Healthcare organizations have 240 days to revise their business associate agreements and implement technical safeguards like encryption and multi-factor authentication. Cryptographic verification, audit trails, and segregated environments can further protect AI training pipelines [7]. Additionally, using deidentification methods such as the Safe Harbor or Statistical Method ensures compliance with HIPAA when dealing with AI training data [6].

Access Control Mechanisms

Access controls serve as the final piece of the puzzle, ensuring data security through strict verification processes. Strong authentication measures help prevent unauthorized access. Under the revised HIPAA Security Rule, multi-factor authentication (MFA) is now mandatory. This means healthcare organizations must use at least two independent credential types, like passwords, security tokens, or biometrics, to verify access [6]. Role-based access control ensures users only see the data they need for their specific roles. For medical AI systems, implementing Zero Trust architecture and microsegmentation adds another layer of protection by continuously verifying every user and system [7]. Updating business associate agreements to include these technical controls, along with network segmentation and regular security training, further reduces risks [6].

"The convergence of AI and cybersecurity, therefore, becomes a critical focus area in ensuring the security and privacy of patient information. Strategies to safeguard against cyber threats must be carefully designed and integrated to preserve the trust patients place in healthcare systems." – IHF

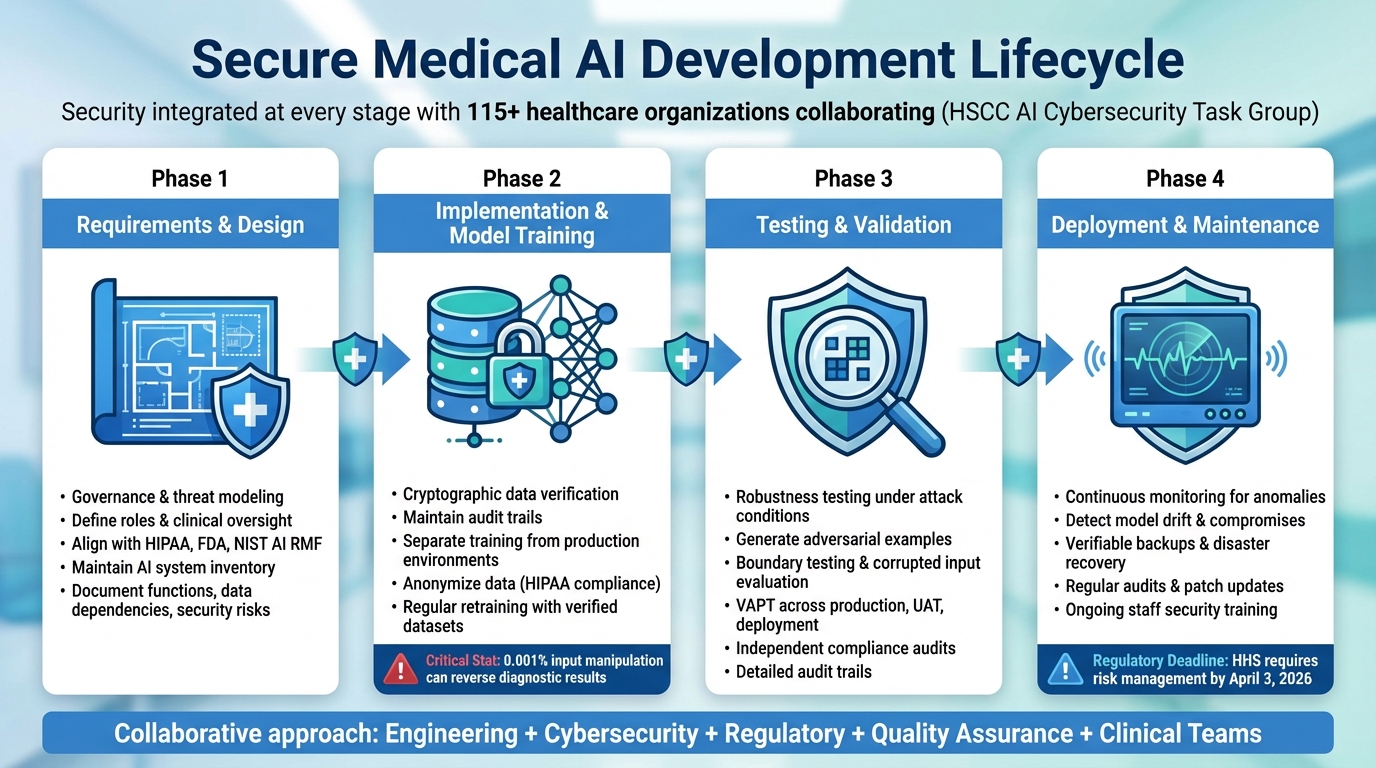

Building Security into the Medical AI Development Lifecycle

Secure by Design Medical AI Development Lifecycle: 4 Critical Phases

Security shouldn't be an afterthought - it needs to be part of every step in the development process. The Health Sector Coordinating Council (HSCC) tackled this challenge by forming an AI Cybersecurity Task Group, bringing together 115 healthcare organizations to create operational guidance for managing AI risks throughout the product lifecycle [1]. This collaborative effort ensures that cybersecurity experts, engineers, regulatory teams, quality assurance professionals, and clinicians work together from the very beginning to address vulnerabilities specific to AI [1]. Let’s break down how to secure each phase of the medical AI lifecycle.

Requirements and Design Phase

A secure foundation for medical AI begins with governance and threat modeling. Organizations need clear processes that define roles, responsibilities, and clinical oversight for the entire AI lifecycle [1]. From the outset, governance controls should align with standards like HIPAA, FDA regulations, and the NIST AI Risk Management Framework [1][10]. Keeping a detailed inventory of all AI systems - documenting their functions, data dependencies, and potential security risks - helps teams identify what needs protection and where vulnerabilities could arise [1]. This phase sets the stage for integrating cybersecurity seamlessly from design to deployment.

Implementation and Model Training Phase

During training, protecting the integrity of data is critical. This means using cryptographic methods to verify data sources, maintaining audit trails, separating training from production environments, and anonymizing data to comply with HIPAA standards [6][7]. Even a tiny manipulation - like altering 0.001% of input tokens - can reverse diagnostic results [7], underscoring the importance of strong data provenance controls. Regular retraining with verified datasets helps identify and fix vulnerabilities caused by data poisoning [7]. This phase highlights the importance of safeguarding data at every step.

Testing and Validation Phase

Medical AI testing goes beyond standard accuracy checks - it demands robustness testing to see how models perform under attack. Techniques like generating adversarial examples, boundary testing, and evaluating responses to corrupted inputs are essential [7]. Conduct vulnerability assessments and penetration testing (VAPT) across production, user acceptance testing (UAT), and deployment environments [4]. Independent audits should confirm compliance with security and privacy policies, with detailed audit trails showing who accessed data and when [6].

"Effective defense against AI threats requires comprehensive validation frameworks that test models against both benign errors and adversarial manipulation. Healthcare organizations must implement robustness testing that specifically evaluates model behavior under attack conditions, not just clinical accuracy metrics." – Vectra AI

Deployment and Maintenance Phase

Once a medical AI system is live, continuous monitoring becomes critical to catch anomalies, detect model drift, and identify potential compromises [1][4][12]. Building on existing security measures, this ongoing vigilance is essential. For example, the U.S. Department of Health and Human Services (HHS) released a 21-page AI strategy on December 4, 2025, requiring all divisions to identify high-impact AI systems and implement minimum risk management practices by April 3, 2026. HHS also committed to monitoring AI projects for compliance even after deployment [11].

To bolster security, maintain verifiable backups and disaster recovery plans using separate data centers [4][12]. Regular audits, timely patch updates, and continuous training for healthcare professionals help minimize human error and keep systems resilient against evolving threats [4][12]. This phase ensures that security remains a priority long after deployment.

Common Threats and How to Mitigate Them

Medical AI systems face a mix of traditional cyberattacks and AI-specific threats, largely due to the unique vulnerabilities within healthcare environments. The high value of Protected Health Information (PHI), reliance on outdated systems, and the critical nature of healthcare operations make these systems prime targets. These challenges highlight the importance of designing systems with security in mind from the start. For example, the Trump Administration's America's AI Action Plan [14] emphasizes the need for stronger AI-related cybersecurity measures, reflecting industry-wide concerns [15]. Below, we'll explore key threats and strategies to address them.

Prompt Injection and Adversarial Attacks

Adversarial attacks manipulate AI inputs to produce harmful or misleading outputs, potentially leading to flawed clinical decisions. To address this, teams should conduct red teaming and stress testing on high-risk AI systems [14]. Simulating attack scenarios using generative AI can help identify weaknesses and improve defenses [15]. Building systems with explainability and resilience from the beginning also makes it harder for adversaries to exploit vulnerabilities [14].

Data Poisoning and Model Manipulation

When training data is compromised, it can lead to diagnostic errors or unreliable outputs. To reduce the risk of data poisoning, implement safeguards like multifactor authentication, role-based access controls, and AES-256 encryption. Training models on anonymized and aggregated data, while using techniques like data masking, tokenization, and differential privacy, further protects sensitive information. Regular audits, thorough risk assessments, and continuous monitoring with automated compliance checks and real-time alerts are essential for spotting and addressing vulnerabilities [15].

Ransomware and Device Manipulation Risks

Healthcare organizations are frequent ransomware targets because of the value of patient data and their reliance on uninterrupted system operations. AI-integrated medical devices expand the attack surface, giving adversaries more opportunities to disrupt services. To mitigate these risks, invest in strong endpoint protection, network segmentation, and regular vulnerability assessments. Advanced threat detection tools paired with human oversight can also help identify and neutralize threats. Collaboration with experts is key to ensuring robust defenses [15].

"AI's ability to analyze massive volumes of data, identify anomalies and respond instantly doesn't just shorten response times - it protects lives and builds trust in healthcare systems." – Greg Surla, Senior Vice President and Chief Information Security Officer at FinThrive

Censinet RiskOps for AI Risk Management

Tackling cybersecurity risks in medical AI requires a platform tailored to the intricate and ever-changing nature of healthcare. Censinet RiskOps™ acts as a central hub, enabling healthcare organizations to assess, monitor, and address risks across vendors, systems, and AI applications. It’s specifically designed to handle the unique challenges of safeguarding patient data, PHI, clinical tools, and medical devices. By combining automated and manual controls, the platform aligns with secure-by-design principles, ensuring a robust approach to AI risk management. Here’s how Censinet RiskOps™ blends automation with human expertise to strengthen AI systems.

Censinet AI™ for Automated Assessments

Censinet AI™ streamlines the vendor risk assessment process, cutting down turnaround times from weeks to mere seconds. It automatically compiles evidence, pinpoints potential vulnerabilities, and generates comprehensive risk summary reports based on the gathered data. This level of automation allows healthcare organizations to handle the sheer volume and speed of managing multiple AI vendors and systems, significantly reducing risks in far less time.

Human-in-the-Loop AI Governance

While automation is crucial for efficiency, human oversight ensures critical decisions are grounded in expert judgment. With configurable rules, Censinet RiskOps™ enables tasks like evidence validation, policy creation, and risk mitigation to proceed quickly and accurately. This hybrid approach - combining automation with human expertise - helps organizations scale their risk management efforts without compromising on safety, compliance, or accountability.

Collaborative GRC Routing and Dashboards

Censinet RiskOps™ simplifies collaboration by routing key findings to the right stakeholders through real-time dashboards. These dashboards provide AI governance committees with timely, actionable insights, offering a clear, unified view of all AI-related policies, risks, and tasks across the organization. By centralizing information and ensuring seamless communication, the platform helps Governance, Risk, and Compliance (GRC) teams work together efficiently, avoiding duplicated efforts and ensuring no vulnerabilities are overlooked.

sbb-itb-535baee

Meeting Healthcare Regulatory Requirements

Once development processes are secure, the next step is ensuring medical AI systems comply with healthcare regulations. This involves navigating a maze of frameworks, many of which naturally align with secure-by-design principles, making compliance a seamless part of the development journey.

NIST AI Risk Management Framework Alignment

The NIST AI Risk Management Framework provides a structured way to identify and address AI-specific security risks throughout a system's lifecycle. In this vein, the Health Sector Coordinating Council (HSCC) is working on 2026 guidance to tackle emerging threats like data poisoning, model manipulation, and drift exploitation. This guidance will align with the NIST AI RMF, allowing healthcare organizations to address vulnerabilities while staying consistent with broader cybersecurity practices.

"The Secure by Design Medical subgroup... addresses unique AI security risks, including emerging threats such as data poisoning, model manipulation, and drift exploitation, aligning mitigation strategies with leading regulatory frameworks such as U.S. FDA guidance, the NIST AI Risk Management Framework, and CISA recommendations." - HSCC Cybersecurity Working Group

To strengthen security, organizations should maintain a complete inventory of AI systems and classify them using a five-level autonomy scale to determine necessary controls. Aligning with FDA standards further enhances this approach.

FDA Guidance and HSCC Recommendations

The FDA has expanded its guidance to include AI-enabled systems, emphasizing secure-by-design fundamentals. The HSCC's Secure by Design Medical subgroup is developing 2026 AI cybersecurity guidance with practical strategies to embed security throughout the development process [1]. Effective integration of AI-specific risks into medical device development requires collaboration across engineering, cybersecurity, regulatory affairs, quality assurance, and clinical teams. Tools like the AI Bill of Materials (AIBOM) and Trusted AI BOM (TAIBOM) can improve visibility, traceability, and trust across the AI supply chain.

HIPAA and PHI Protection Integration

For any AI system handling Protected Health Information (PHI), HIPAA’s Privacy, Security, and Breach Notification Rules are critical [17]. These systems must be actively managed to prevent inadvertent exposure of identifiers, especially in multi-tenant environments [16][18][19]. Best practices include encrypting PHI both at rest and in transit, implementing Zero Trust strategies, enforcing strict access controls, and conducting regular risk assessments to ensure compliance with HIPAA and other relevant regulations.

Incident Response and Continuous Monitoring

Medical AI systems demand constant oversight to identify and address emerging threats effectively. The Health Sector Coordinating Council (HSCC) is working on practical playbooks for 2026, aimed at tackling AI-related cyber incidents in healthcare. These playbooks focus on preparation, detection, response, and recovery, with an emphasis on resilience testing, collaboration between cybersecurity and data science teams, and learning from past incidents to improve readiness. This approach builds on the foundational resilience established during earlier development phases, making structured incident response practices an essential component.

Red-Teaming and Evaluation Practices

Red-teaming exercises - such as resilience testing, penetration testing, and tabletop simulations - are critical for identifying vulnerabilities before they can be exploited by attackers [1][2][20]. In October 2025, HealthTech Magazine underlined the importance of penetration testing as part of a strong healthcare security program, noting that simply having an incident response plan is not enough [2].

"Having an incident response plan in place is a good first step, but the plan needs to be tested to ensure that it works and everyone is familiar with their role." -

Regular testing ensures that teams are prepared to address challenges like data poisoning, model manipulation, or exploitation of model drift. The HSCC’s November 2025 preview of its 2026 AI cybersecurity guidance emphasized that its Cyber Operations and Defense subgroup is prioritizing resilience testing in its forthcoming "AI Cyber Resilience and Incident Recovery Playbook" [1].

Model Tuning and Audit Trails

After conducting red-teaming exercises, ongoing adjustments and detailed audit trails are essential for maintaining and improving security. These measures create a feedback loop that strengthens AI systems over time. Comprehensive audit trails should track access logs, data usage, and system changes to pinpoint vulnerabilities and ensure compliance [20][3][5]. Don Silva Sr., CISO at Raintree Systems, highlighted this point:

"You can only protect health data if you can track it - where is it, who is using it, and why are they using the data?"

In July 2025, Microminder Cyber Security recommended frequent monitoring of privileged accounts and regular reviews of access logs to enforce role-based access controls [20]. To support incident response, healthcare organizations should implement tools like Security Information and Event Management (SIEM) systems, Intrusion Detection Systems (IDS), and endpoint detection solutions. These technologies provide real-time visibility into suspicious activities. AI-powered incident response tools can further enhance security by automatically identifying and responding to threats, such as unusual login attempts, and applying immediate restrictions [2].

"Continuously monitoring healthcare networks helps detect anomalous behavior and prevent threats before they escalate." - Sanjiv Cherian, Cyber Security Director, Microminder Cyber Security

Conclusion

Creating cyber-resilient medical AI systems is no longer optional for healthcare organizations navigating an increasingly aggressive threat environment. By embedding security into the design process, these systems can leverage AI's potential while addressing vulnerabilities that traditional security measures might overlook[2][9][7][4]. Security must be an integral part of every development stage to tackle the diverse risks effectively.

One key to achieving this is fostering collaboration between SecOps, DevOps, and GRC teams. This teamwork ensures that strong security measures are balanced with operational efficiency[21]. Through this approach, organizations can integrate risk assessments and access controls throughout the AI lifecycle - from initial design to deployment and ongoing monitoring. Comprehensive governance frameworks aligned with regulations like HIPAA, FDA guidelines, and the NIST AI Risk Management Framework help healthcare leaders stay compliant while addressing security challenges[1][2][4][5].

Proactive evaluations are another cornerstone of this strategy, allowing vulnerabilities to be identified and mitigated before they can be exploited. This is especially vital in healthcare, where patient data is a prime target, and AI systems often support life-critical functions[2][9][7][4].

Healthcare and cybersecurity leaders who adopt secure-by-design principles are better equipped to defend against evolving threats while maximizing the benefits of AI. As regulatory guidelines continue to evolve, it’s clear that security isn’t a one-time task but an ongoing effort. Resilience depends on embedding security into every phase of AI development and fostering a culture of continuous improvement.

FAQs

What are the most important security measures to protect medical AI systems from cyberattacks?

To protect medical AI systems from cyber threats, implementing robust security measures is essential. Here are some key practices that can help strengthen defenses:

Integrating these strategies into the design and upkeep of medical AI systems can greatly lower the chances of cyberattacks and help safeguard patient data.

What is the 'Secure by Design' approach, and how does it improve the cybersecurity of medical AI systems?

The 'Secure by Design' approach bolsters the cybersecurity of medical AI systems by integrating security measures throughout the development process. This method actively seeks out and resolves vulnerabilities early, tackling risks before they escalate into significant threats.

By conducting risk assessments, adhering to healthcare regulations, and designing secure system architectures, this approach establishes multiple layers of protection. These defenses help minimize exploitable weaknesses, strengthen the system's ability to withstand cyberattacks, protect sensitive medical data, and uphold trust in AI-powered healthcare solutions.

How do regulations like HIPAA and NIST help protect medical AI systems?

Regulations like HIPAA and frameworks such as NIST are key in protecting medical AI systems. They establish clear standards for data privacy, security, and risk management, ensuring sensitive patient information stays secure while helping developers create systems with built-in safety measures.

These rules require strong security controls, regular risk assessments, and adherence to healthcare-specific guidelines. By following them, developers can address vulnerabilities and lower the chances of data breaches, building medical AI systems that focus on patient safety and maintain trust.

Related Blog Posts

- AI Cyber Risk: When Your Smart Defense Becomes the Attack Vector

- The Cyber Diagnosis: How Hackers Target Medical AI Systems

- Protecting Digital Health: Cybersecurity Strategies for Medical AI Platforms

- The Healthcare Cybersecurity Gap: Medical AI's Vulnerability Problem

{"@context":"https://schema.org","@type":"FAQPage","mainEntity":[{"@type":"Question","name":"What are the most important security measures to protect medical AI systems from cyberattacks?","acceptedAnswer":{"@type":"Answer","text":"<p>To protect medical AI systems from cyber threats, implementing robust security measures is essential. Here are some key practices that can help strengthen defenses:</p> <ul> <li><strong>Multifactor authentication</strong> adds an extra layer of security by requiring multiple forms of verification, ensuring that only authorized users can access sensitive systems.</li> <li><strong>Zero-trust architecture</strong> continuously verifies and monitors all users, devices, and data interactions, assuming that no entity is inherently trustworthy.</li> <li><strong>Network segmentation</strong> isolates different parts of the system, minimizing the potential spread of threats if a breach occurs.</li> <li><strong>Regular penetration testing</strong> helps identify vulnerabilities before attackers can exploit them, allowing for timely fixes.</li> <li><strong>Vendor security assessments</strong> ensure that third-party providers adhere to strict cybersecurity protocols, reducing risks from external partnerships.</li> </ul> <p>Integrating these strategies into the design and upkeep of medical AI systems can greatly lower the chances of cyberattacks and help safeguard patient data.</p>"}},{"@type":"Question","name":"What is the 'Secure by Design' approach, and how does it improve the cybersecurity of medical AI systems?","acceptedAnswer":{"@type":"Answer","text":"<p>The 'Secure by Design' approach bolsters the cybersecurity of medical AI systems by integrating security measures throughout the development process. This method actively seeks out and resolves vulnerabilities early, tackling risks before they escalate into significant threats.</p> <p>By conducting <strong>risk assessments</strong>, adhering to healthcare regulations, and designing secure system architectures, this approach establishes multiple layers of protection. These defenses help minimize exploitable weaknesses, strengthen the system's ability to withstand cyberattacks, protect sensitive medical data, and uphold <a href=\"https://censinet.com/resource/promise-and-peril-of-ai-in-healthcare\">trust in AI-powered healthcare</a> solutions.</p>"}},{"@type":"Question","name":"How do regulations like HIPAA and NIST help protect medical AI systems?","acceptedAnswer":{"@type":"Answer","text":"<p>Regulations like <strong>HIPAA</strong> and frameworks such as <strong>NIST</strong> are key in protecting medical AI systems. They establish clear standards for data privacy, security, and risk management, ensuring sensitive patient information stays secure while helping developers create systems with built-in safety measures.</p> <p>These rules require strong security controls, regular risk assessments, and adherence to healthcare-specific guidelines. By following them, developers can address vulnerabilities and lower the chances of <a href=\"https://www.censinet.com/blog/taking-the-risk-out-of-healthcare-june-2023\">data breaches</a>, building medical AI systems that focus on patient safety and maintain trust.</p>"}}]}

Key Points:

What does "secure by design" mean for medical AI systems?

- "Secure by design" refers to embedding cybersecurity principles into the development of medical AI systems from the outset.

- This approach ensures that systems are resilient against evolving threats and can adapt to new challenges.

- It prioritizes proactive risk management, addressing vulnerabilities before they can be exploited.

- Secure-by-design systems integrate real-time threat detection, automated responses, and compliance with regulatory standards.

Why is cybersecurity critical for medical AI systems?

- Medical AI systems handle sensitive patient data, making them prime targets for cyberattacks.

- Cyberattacks, such as ransomware or data breaches, can disrupt healthcare operations and compromise patient safety.

- Ensuring cybersecurity protects the integrity of AI models, preventing manipulation or misuse.

- Robust cybersecurity measures build trust among patients and healthcare providers.

What are the main risks to medical AI systems?

- Data poisoning: Malicious data injected into AI training sets can distort outcomes and lead to incorrect diagnoses or treatments.

- Ransomware attacks: Hackers encrypt healthcare data and demand payment for its release.

- Model manipulation: Attackers tamper with AI models to alter predictions or steal intellectual property.

- Device vulnerabilities: AI-enabled medical devices, such as imaging systems, are susceptible to hacking.

- Inference attacks: Hackers query AI systems to reconstruct sensitive patient data.

How does secure-by-design improve cyber resilience?

- Secure-by-design principles proactively address vulnerabilities by embedding security measures into every stage of development.

- Real-time threat detection: AI systems monitor for anomalies and respond to potential threats immediately.

- Regulatory compliance: Ensures adherence to standards like HIPAA and FDA guidelines for AI-enabled devices.

- Automated risk management: AI tools prioritize and mitigate risks, reducing the burden on security teams.

- This approach shifts cybersecurity from being reactive to proactive, minimizing the impact of cyberattacks.

What strategies can healthcare organizations use to secure medical AI systems?

- Implement encryption: Protects data in motion and at rest.

- Adopt zero-trust architectures: Verifies every connection to prevent unauthorized access.

- Conduct regular audits: Identifies and mitigates vulnerabilities in AI systems.

- Use AI-driven threat detection: Monitors systems in real-time to identify and respond to cyber threats.

- Comply with regulations: Adhere to standards like HIPAA and FDA guidelines for AI-enabled devices.

What is the future of secure-by-design medical AI systems?

- The future includes AI-driven security solutions that proactively detect and mitigate threats.

- Stronger regulations will ensure that healthcare organizations prioritize cybersecurity.

- Proactive risk management will focus on preventing cyberattacks before they occur.

- Collaboration between healthcare providers, regulators, and technology developers will drive innovation in cybersecurity.