Transforming AI Governance: From Checklist Compliance to Strategic Advantage

Post Summary

AI governance in healthcare is no longer about ticking boxes - it’s about creating a framework that balances patient safety, data security, and innovation. Traditional checklist compliance falls short in addressing the rapid evolution of AI technologies. Instead, healthcare organizations are shifting toward governance frameworks that integrate ethical principles, accountability, and continuous monitoring into the AI lifecycle.

Key takeaways:

- Checklist compliance vs. governance frameworks: Checklists are static and reactive, while frameworks are dynamic, embedding governance into AI systems from the start.

- Governance in action: Hospitals are forming cross-functional AI councils and using tools like the AMA's "Governance for Augmented Intelligence" toolkit to oversee AI use.

- Building blocks: Effective governance includes clear accountability, technical safeguards (e.g., data privacy controls), and vendor risk management.

- Benefits: Strong governance improves cybersecurity, accelerates AI adoption, and builds trust in healthcare systems.

This shift ensures AI is not just safe and compliant but also a driver of better outcomes and operational efficiency.

Moving Beyond Checklists to Governance Frameworks

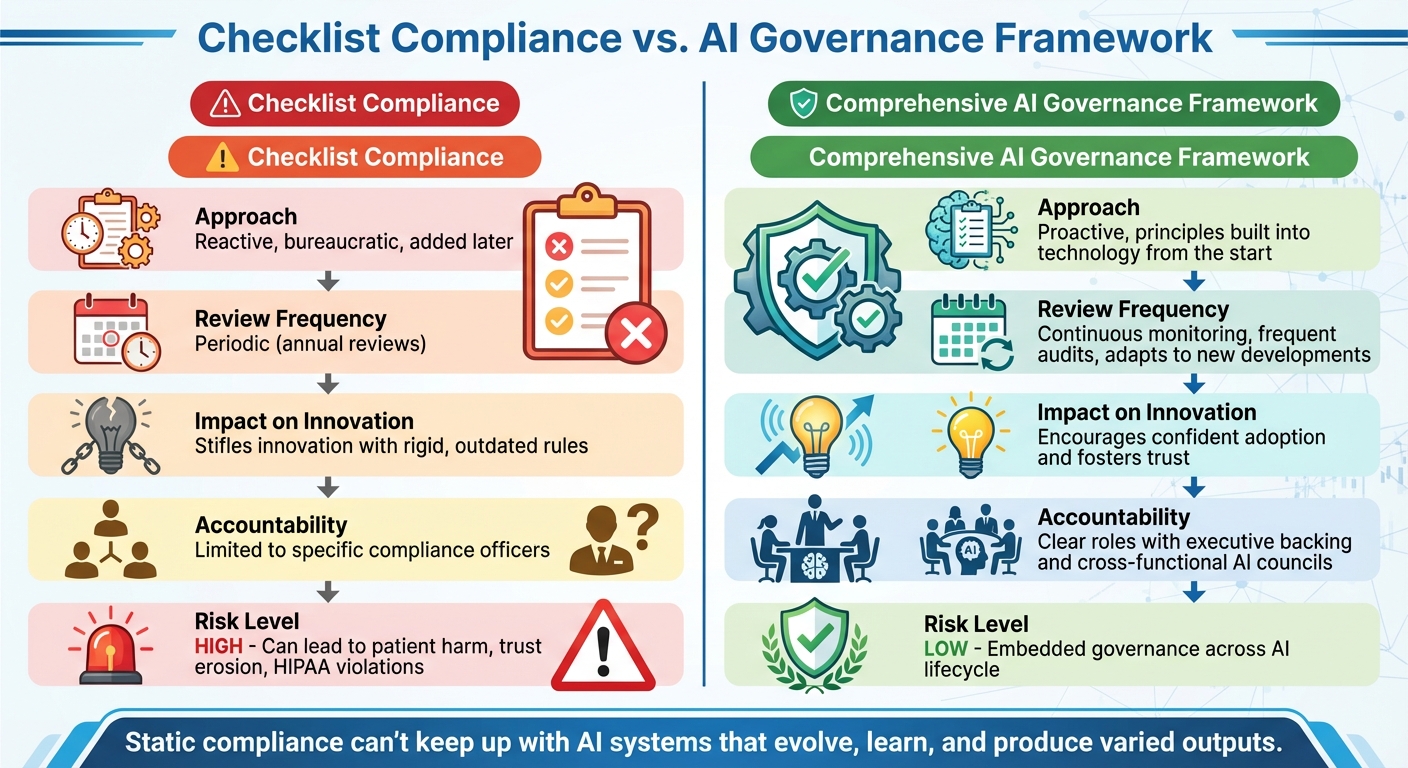

Checklist Compliance vs AI Governance Framework Comparison

Checklist Compliance vs. Governance Frameworks

When it comes to AI governance, there’s a world of difference between simply checking boxes and creating a robust framework. As Kognitos puts it, “AI Governance is not a checklist. It’s an architectural choice” [4]. A governance framework goes beyond surface-level compliance by embedding ethical principles and accountability into the very foundation of AI systems. This ensures that responsible AI practices aren’t just an afterthought but a core feature of the technology itself [4].

| Dimension | Checklist Compliance | Comprehensive AI Governance Framework |
|---|---|---|
| Approach | Reactive, bureaucratic, and often added later [4] | Proactive, with principles built into the technology from the start [4] |
| Review Frequency | Periodic, such as annual reviews [4] | Continuous monitoring, frequent audits, and adaptation to new developments [6][7][8] |
| Impact on Innovation | Can stifle innovation with rigid, outdated rules [6] | Encourages confident adoption and fosters trust [4] |
| Accountability | Limited to specific compliance officers [5] | Clear roles with executive backing and cross-functional AI councils [5][7][8] |

Relying solely on checklists for AI oversight can lead to serious risks, including patient harm, erosion of trust, and violations of regulations like HIPAA [5]. Static compliance measures can’t keep up with AI systems that evolve, learn, and produce varied outputs. This is why continuous, integrated governance is critical across every stage of the AI lifecycle [4][5][7].

By embedding governance into the design of AI systems, organizations can better prepare to address the challenges driving the need for governance in U.S. healthcare.

What's Driving AI Governance in U.S. Healthcare

The rapid adoption of AI in healthcare has created an urgent need for comprehensive governance frameworks. Margaret Lozovatsky, MD, Vice President of Digital Health Innovations at the AMA, highlights this urgency:

"AI is becoming integrated into the way that we deliver care. The technology is moving very, very quickly. It's moving much faster than we are able to actually implement these tools, so setting up an appropriate governance structure now is more important than it's ever been because we have never seen such quick rates of adoption." [8]

With federal regulations still catching up, the responsibility for safe AI deployment often falls on clinicians, IT teams, and healthcare leaders [9]. This makes governance frameworks not just a best practice but a necessity to safeguard both patients and institutions.

To understand what this looks like in action, let’s explore how governance frameworks are being implemented today.

What a Governance Framework Looks Like

In September 2025, Superblocks documented how hospitals are using cross-functional AI councils to oversee governance. These councils combine clinical, technical, and compliance expertise to ensure AI systems are reviewed thoroughly. They also log AI interactions for accountability and conduct regular assessments to identify disparities in performance across different demographic groups [5].

The American Medical Association (AMA) has also taken steps to guide healthcare organizations. In May 2025, they released the "Governance for Augmented Intelligence" toolkit, which outlines an eight-step approach. This includes establishing executive accountability, forming working groups to set priorities, crafting AI policies, defining project intake and vendor evaluation processes, and implementing oversight mechanisms [8].

Similarly, in October 2025, Paul Hastings proposed a three-stage governance model for life sciences companies. This approach includes:

- Concept Review and Approval: Evaluating the cost, benefits, and risks of AI projects.

- Design and Deploy: Setting risk management standards during implementation.

- Continuous Monitoring: Regularly improving and validating AI performance [7].

These examples show how governance frameworks are evolving to meet the challenges of AI in healthcare, ensuring that innovation doesn’t come at the expense of safety or trust.
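The demographic performance assessments mentioned above can be made concrete with a small calculation. The sketch below is illustrative only: the record layout, the function names, and the 5-point accuracy gap threshold are assumptions made for this example, not part of any cited framework, and a real assessment would use clinically appropriate metrics and statistically meaningful sample sizes.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for (group, prediction, actual) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, prediction, actual in records:
        total[group] += 1
        if prediction == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def flag_disparities(records, max_gap=0.05):
    """Return groups whose accuracy trails the best group by more than max_gap.

    max_gap is a hypothetical tolerance chosen for illustration.
    """
    scores = accuracy_by_group(records)
    if not scores:
        return []
    best = max(scores.values())
    return sorted(g for g, score in scores.items() if best - score > max_gap)


# Toy data: (demographic group, model prediction, observed outcome)
sample = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]

print(accuracy_by_group(sample))
print(flag_disparities(sample))
```

A council could run a check like this on a recurring schedule and send any flagged groups to clinical reviewers, rather than treating the number as a verdict on its own.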

Building Blocks of AI Governance

Effective AI governance relies on a combination of structured frameworks, technical safeguards, and vendor management strategies. Together, these elements ensure that AI systems are safe, compliant, and aligned with the goals of patient care.

Governance Structures and Accountability

AI governance begins with clear accountability mechanisms. Between 2022 and 2024, malpractice claims involving AI in healthcare rose by 14%. Meanwhile, AI adoption among physicians jumped from 38% in 2023 to nearly 70% in 2024. Notably, 47% of physicians identified enhanced oversight as the top regulatory action needed to build trust in AI tools [12][13].

For governance to be effective, it must involve a diverse group of stakeholders, including experts in medical informatics, clinical leadership, legal and compliance teams, safety and quality officers, data scientists, bioethicists, and patient advocates. These groups should establish structures that clarify control, reporting processes, and escalation pathways for addressing AI-related performance issues [10]. This involves creating both a high-level AI Governance Board to provide strategic direction and cross-functional working groups to oversee day-to-day operations.

The scale of this task is immense. In fiscal year 2024, the U.S. Department of Health and Human Services (HHS) reported 271 active or planned AI use cases, with a forecasted 70% increase in new use cases for fiscal year 2025 [11]. Without robust accountability frameworks, organizations risk losing track of AI deployments and the challenges they bring.

Next, let’s dive into the technical safeguards that support these governance structures.

Risk, Security, and Privacy Controls

Technical safeguards are a critical pillar of AI governance. In healthcare, this means implementing measures to protect patient data, monitor AI behavior, and ensure compliance with regulations like HIPAA. Secure data handling, access controls, and encryption are essential throughout the AI lifecycle.

A key element of these safeguards is thorough documentation. Amy Winecoff and Miranda Bogen from the Center for Democracy & Technology emphasize its importance:

"Effective risk management and oversight of AI hinge on a critical, yet underappreciated tool: comprehensive documentation" [7].

Organizations must maintain detailed records of AI model development, training data sources, validation results, and performance metrics. This documentation not only supports internal audits but also ensures regulatory compliance.

Privacy controls must address how AI systems access, process, and store protected health information (PHI). Secure vendor access to data, confidentiality agreements, and data de-identification protocols are critical [1]. Regular audits and reassessments of AI models help identify vulnerabilities or privacy risks before they escalate.

These technical controls are complemented by robust vendor management practices, which are vital for addressing the risks associated with third-party AI solutions.

Managing AI Risk in Vendors and Supply Chains

Third-party AI tools present unique governance challenges. Charles Binkley, Director of AI Ethics and Quality at Hackensack Meridian Health, explains:

"The flipped responsibility - where the health system is accountable for AI validation rather than the vendor or regulator - requires a deliberate, self-governed approach that is cautious but not paralyzing" [10].

A case from August 2025 highlights these risks. The U.S. Department of Justice (DOJ) announced a criminal resolution with a healthcare insurance company that misused AI, leading to improper payments to pharmacies for patient referrals submitted through the company’s AI platform. This example underscores the potential consequences of poor vendor oversight [7].

To manage these risks, organizations should maintain an up-to-date inventory of all vendor AI tools and enforce structured evaluations throughout their lifecycle [10]. This includes setting clear standards for data sharing and vendor relationships to protect patient information [10]. A "Concept Review and Approval" stage for all AI use cases - vendor solutions included - can help balance cost, benefit, and risk [7].
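As a rough illustration of what an up-to-date vendor AI inventory might look like in practice, the sketch below models each tool as a record and flags those overdue for re-evaluation. The field names, the example tools, and the 90-day review interval are all hypothetical; an organization would substitute its own attributes and cadence.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class VendorAITool:
    name: str
    vendor: str
    handles_phi: bool        # does the tool touch protected health information?
    last_evaluated: date     # date of the most recent structured evaluation


def overdue_for_review(inventory, today, interval_days=90):
    """Return names of tools last evaluated more than interval_days before today."""
    cutoff = today - timedelta(days=interval_days)
    return [tool.name for tool in inventory if tool.last_evaluated < cutoff]


# Hypothetical inventory entries for illustration only.
inventory = [
    VendorAITool("triage-assistant", "ExampleVendor A", True, date(2025, 3, 15)),
    VendorAITool("scheduling-optimizer", "ExampleVendor B", False, date(2025, 9, 1)),
]

print(overdue_for_review(inventory, today=date(2025, 10, 1)))
```

Keeping the review date on the record itself means the overdue list can be regenerated at any time instead of depending on someone remembering a calendar reminder.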

Censinet RiskOps™ offers tools to address these challenges, enabling AI-specific assessments and continuous monitoring. Its Censinet AI™ capabilities streamline third-party risk assessments by automating security questionnaires, summarizing vendor evidence, and capturing key integration details. The platform also generates risk summary reports, routes findings to governance teams, and provides a centralized dashboard for tracking AI policies, risks, and tasks.

Strong vendor management reinforces broader governance efforts by ensuring external AI solutions align with patient care goals and organizational risk objectives. When evaluating vendor tools, healthcare organizations should prioritize patient outcomes over isolated model performance. This means asking whether the tool improves outcomes for their specific patient population and defining acceptable levels of benefit and risk [10]. Continuous monitoring and validation plans ensure vendor-provided AI models remain aligned with their intended purpose [7].


Putting AI Governance into Practice

Turning AI governance from theory into action means weaving it into the processes healthcare organizations already rely on. It’s about linking AI oversight to existing risk management systems, setting up checkpoints throughout the AI lifecycle, and ensuring collaboration across departments that typically operate independently.

Aligning with Existing Risk Frameworks

Rather than building entirely new systems, it’s smarter to integrate AI oversight into existing risk management frameworks. This could include aligning AI governance with established structures like the NIST Cybersecurity Framework or HIPAA risk analysis protocols.

Healthcare organizations should design AI policies that align with both internal guidelines and external regulations, reviewing them on a regular basis [15]. For instance, if your organization already conducts quarterly risk assessments for third-party vendors, you can enhance these processes by adding AI-specific evaluation criteria. A multidisciplinary AI governance structure is essential - one that includes IT, cybersecurity, compliance, privacy, clinical departments, and legal teams [10][14][15]. Clear executive accountability and oversight should also be part of this setup. Ideally, this governance model should resemble existing committees, complete with defined escalation procedures and reporting systems that fit seamlessly into current workflows.

Once this integration is in place, the focus shifts to embedding governance at every stage of the AI lifecycle.

Governance Across the AI Lifecycle

AI governance isn’t a one-and-done effort - it requires checkpoints at every stage of the AI lifecycle, from initial risk assessments to ongoing monitoring after deployment. Surprisingly, only 61% of hospitals validate predictive AI using local data, and fewer than half test for bias [16]. This highlights the pressing need for structured oversight at every phase.

Organizations should implement thorough, end-to-end processes, including concept reviews, pre-deployment validations, and ongoing performance checks, to uphold consistent standards [15][16]. By integrating AI governance into existing quality assurance programs, healthcare organizations can ensure that AI systems are held to the same high standards as other clinical tools [16].

Automated checkpoints can help streamline these processes, reducing risks more efficiently while still maintaining human oversight through configurable rules and review mechanisms.
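One way to picture automated checkpoints that still preserve human oversight is a simple stage gate: a stage counts as passed only when every automated rule succeeds and a reviewer has signed off. The following is a minimal sketch under that assumption. The stage names are taken from the processes described above, while the check names and the `Checkpoint` structure are invented for illustration.

```python
from dataclasses import dataclass

# Lifecycle stages, in order, based on the processes named in the text.
STAGES = ["concept_review", "pre_deployment_validation", "ongoing_monitoring"]


@dataclass
class Checkpoint:
    stage: str
    automated_checks: dict          # check name -> True/False result
    human_approved: bool = False    # explicit reviewer sign-off

    def passed(self):
        # Both conditions are required: automation never approves on its own.
        return all(self.automated_checks.values()) and self.human_approved


def first_blocked_stage(checkpoints):
    """Return the earliest stage that is missing or not passed, or None."""
    by_stage = {cp.stage: cp for cp in checkpoints}
    for stage in STAGES:
        cp = by_stage.get(stage)
        if cp is None or not cp.passed():
            return stage
    return None


# Hypothetical project state: concept approved, bias test still failing.
project = [
    Checkpoint("concept_review", {"risk_benefit_documented": True}, human_approved=True),
    Checkpoint(
        "pre_deployment_validation",
        {"validated_on_local_data": True, "bias_test_passed": False},
        human_approved=True,
    ),
]

print(first_blocked_stage(project))
```

In this arrangement a reviewer's approval cannot override a failing automated check, and a clean automated run cannot substitute for the reviewer; which of those constraints to relax, if any, is a policy decision for the governance body.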

With proper lifecycle governance, effective collaboration across teams becomes much easier.

Coordinating Across Teams for AI Oversight

AI governance thrives on teamwork, but it often requires collaboration between groups that don’t naturally work together. High-level AI Governance Boards and cross-division Communities of Practice can help bridge these gaps, balancing top-down directives with bottom-up innovation [11][1].

It’s important to define clear roles and responsibilities. A central AI governance committee should handle overarching concerns like safety, ethics, and regulatory compliance, while individual departments focus on day-to-day implementation within their specific areas [1]. This division of labor ensures smooth operations without creating bottlenecks, while maintaining consistent standards across the organization.

Tools like Censinet RiskOps™ make cross-team coordination easier. By routing key assessment findings and tasks to the right stakeholders for review and approval, and providing an intuitive AI risk dashboard, the platform offers a real-time, unified view of all AI-related policies, risks, and tasks. This ensures ongoing oversight and accountability without piling on extra administrative work.

How AI Governance Creates Business Value

AI governance offers tangible benefits for healthcare organizations, going beyond just managing risks. When implemented effectively, it not only bolsters cybersecurity but also speeds up the adoption of transformative technologies and builds trust in an increasingly regulated industry.

Improving Cyber Resilience and Incident Response

Effective AI governance strengthens an organization's ability to prevent, detect, and recover from cyber incidents. By integrating AI-specific risk assessments into existing cybersecurity frameworks, organizations can handle unique threats like model poisoning, data corruption, and adversarial attacks. Governance frameworks also define clear protocols for responding to these risks, ensuring continuous monitoring of AI systems to quickly contain and recover from breaches. Additionally, resilience testing and collaboration between cybersecurity and data science teams enhance preparedness for future challenges. Keeping a detailed inventory of AI systems, including their functions, data dependencies, and security concerns, enables better risk classification and oversight [17].
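To show how an inventory entry could feed risk classification, here is a deliberately simple tiering sketch. The attributes and the scoring rule (counting how many of three risk factors apply) are assumptions made for this example, not a recognized standard; real classification schemes are typically more granular.

```python
from dataclasses import dataclass


@dataclass
class AISystem:
    name: str
    function: str
    uses_phi: bool                        # data dependency on protected health information
    influences_clinical_decisions: bool   # output feeds into patient care decisions
    externally_hosted: bool               # runs outside the organization's own environment


def risk_tier(system):
    """Assign a coarse tier from the number of risk factors present (illustrative rule)."""
    factors = sum([
        system.uses_phi,
        system.influences_clinical_decisions,
        system.externally_hosted,
    ])
    if factors >= 2:
        return "high"
    if factors == 1:
        return "medium"
    return "low"


# Hypothetical systems for illustration only.
systems = [
    AISystem("sepsis-alert", "early warning", True, True, False),
    AISystem("note-summarizer", "documentation", True, False, False),
    AISystem("cafeteria-forecast", "demand planning", False, False, False),
]

for s in systems:
    print(s.name, risk_tier(s))
```

Even a coarse tier like this gives security and data science teams a shared starting point for deciding which systems get resilience testing first.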

The importance of these measures cannot be overstated. A ransomware attack at a university hospital in Germany tragically delayed emergency care for a critically ill patient, leading to a preventable death. This underscores the life-and-death stakes tied to inadequate incident response [19]. Strong governance ensures organizations are better equipped to handle such crises.

Accelerating Innovation and Clinical Transformation

Contrary to the belief that governance slows progress, a well-structured AI governance framework can actually drive innovation. By systematically evaluating AI use cases and balancing costs, benefits, and risks, governance sets the stage for safe and effective implementation [7]. This structured approach ensures that AI technologies are adopted responsibly, maximizing their impact on patient care and operational efficiency [2][3].

Clear approval processes allow clinical teams to confidently deploy AI-powered tools. For example, the U.S. Department of Health and Human Services (HHS) has introduced a "OneHHS" strategy, bringing together agencies like the CDC, CMS, FDA, and NIH to create a unified AI infrastructure. This initiative aims to streamline workflows and enhance cybersecurity [18][11]. As Clark Minor, Acting Chief Artificial Intelligence Officer at HHS, explained:

"AI is a tool to catalyze progress. This Strategy is about harnessing AI to empower our workforce and drive innovation across the Department" [18].

By accelerating innovation while maintaining ethical and safe practices, AI governance builds the trust needed for long-term success.

Building Trust and Meeting Regulatory Requirements

Beyond technical safeguards, strong governance fosters trust through practices like model documentation, version control, and transparent reporting. Regular checks on data quality, the use of diverse training datasets, and fairness audits help reduce bias and ensure accountability [21].

As regulations and guidance such as the EU AI Act, U.S. FDA guidelines, HIPAA, and GDPR evolve, and as federal directives shift (Executive Order 14110, for example, was rescinded in January 2025), governance frameworks play a critical role in ensuring compliance. Automated policy enforcement, audit trails, and risk assessments embedded throughout the AI lifecycle help organizations stay ahead of regulatory demands [21][22]. The pressure is measurable: AI-related malpractice claims rose by 14% between 2022 and 2024, and only 22% of hospitals reported confidence in providing a complete AI audit within 30 days [12][20]. Proactive governance - through clear ownership structures, vendor agreements with audit rights, and regular AI audit drills - positions organizations to meet these challenges head-on [20].

Censinet RiskOps™ supports these efforts by offering a real-time AI risk dashboard. This tool consolidates policies, risks, and tasks into one platform, providing continuous oversight and accountability across the organization.

FAQs

How does checklist compliance differ from a strategic AI governance framework?

Meeting checklist compliance means sticking to specific regulatory requirements by completing a set of predefined tasks. It ensures that basic standards are met, often focusing on short-term goals and mitigating immediate risks.

In contrast, a strategic AI governance framework goes far beyond ticking boxes. It’s a comprehensive approach that weaves together policies, transparency, auditability, and human oversight into a proactive system. This framework is designed not just to manage risks but to encourage innovation and align AI efforts with an organization’s long-term objectives. By adopting this broader strategy, organizations can achieve more than just compliance - they can strengthen security, improve operational efficiency, and gain a competitive edge.

How do cross-functional AI councils improve AI governance in healthcare?

Cross-functional AI councils are essential for improving AI governance in healthcare. By uniting experts from different departments, they create a comprehensive framework for oversight, ensuring AI initiatives align with organizational objectives while tackling potential risks.

These councils take on key responsibilities, such as regularly monitoring AI systems, managing risks, and staying updated with changing regulations. By promoting accountability and keeping patient safety at the forefront, they pave the way for AI implementations that are safer, more compliant, and beneficial for both healthcare providers and patients.

Why is continuous monitoring essential for effective AI governance in healthcare?

Continuous monitoring plays a key role in upholding a robust AI governance framework, particularly in healthcare. It helps organizations spot and tackle risks as they arise, safeguarding patient safety, meeting regulatory requirements, and maintaining operational security.

By consistently overseeing AI systems, healthcare providers can respond effectively to emerging challenges, technological advancements, and potential weak points. This forward-thinking approach not only strengthens trust but also reduces risks such as data breaches and vulnerabilities from third-party systems, aligning AI governance with overall risk management strategies.
