The AI Advantage in Risk Management: Faster, Smarter, More Accurate

Post Summary

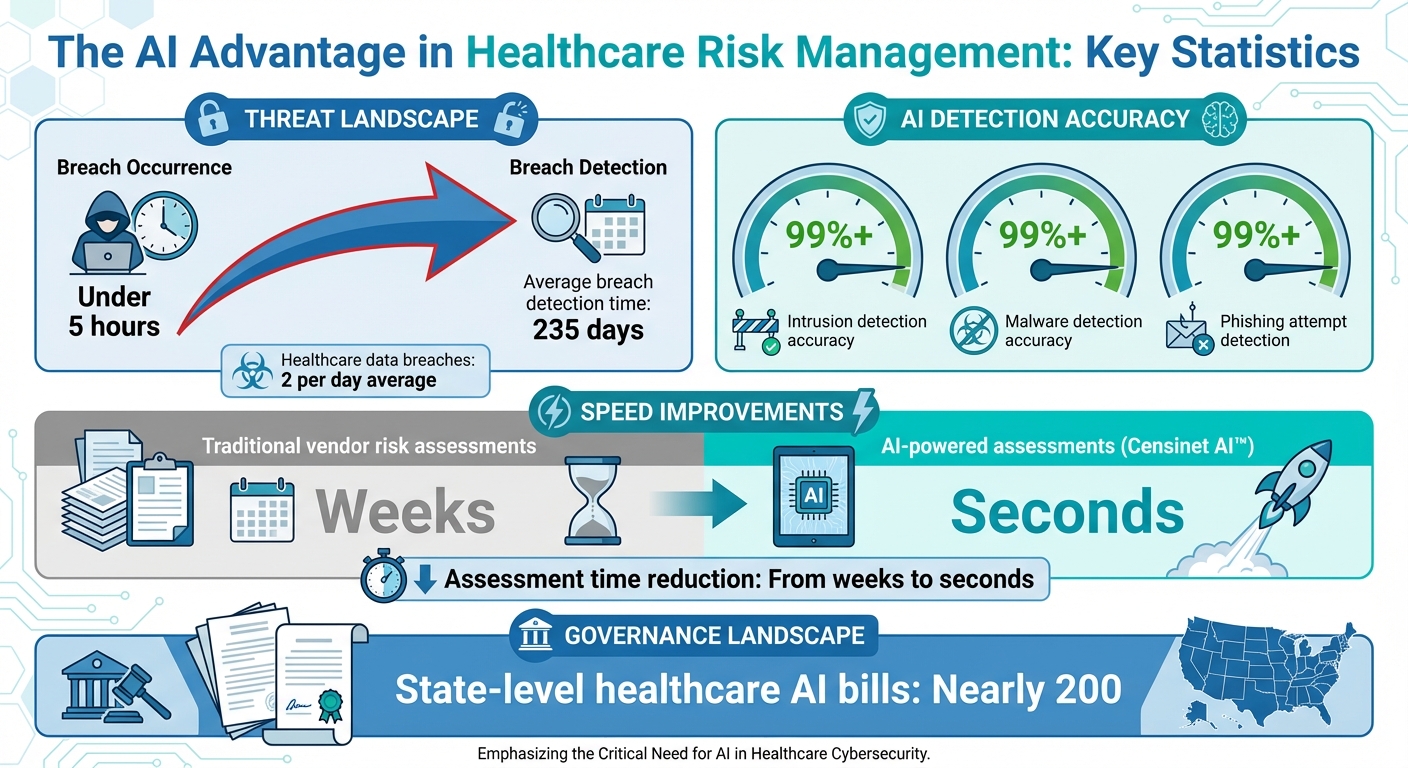

Healthcare organizations are under constant threat from cyberattacks: hackers can infiltrate systems in under five hours, while breaches go undetected for an average of 235 days. Traditional security tools can't keep up, but AI helps close that gap by enabling real-time threat detection, improving decision-making with consolidated risk data, and predicting vulnerabilities using machine learning. For example, AI systems can identify intrusions and phishing attempts with over 99% accuracy, helping IT teams act before damage occurs. Platforms like Censinet AI™ further accelerate vendor risk assessments, completing tasks in seconds that previously took weeks. While AI enhances efficiency, human oversight remains critical to validate results, address errors, and ensure compliance with frameworks like HIPAA and the NIST AI Risk Management Framework. By combining AI with human expertise, healthcare organizations can better protect patient data and strengthen supply chain security.

AI in Healthcare Risk Management: Key Statistics and Detection Accuracy

How AI Automation Speeds Up Threat Detection

AI automation is changing the way healthcare organizations tackle cybersecurity threats by constantly monitoring risk factors across their systems. Unlike older security tools that rely on periodic scans or manual reviews, AI-driven solutions continuously sift through massive amounts of structured and unstructured data, far faster than manual review allows [5][6].

With machine learning, these systems establish benchmarks for normal traffic and user behavior. Once those benchmarks are set, AI can instantly detect anomalies like unauthorized access attempts or unusual data transfers. This means healthcare IT teams can intervene early, addressing risks before they escalate into full-blown incidents [5][6].
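The baseline-then-detect idea described above can be illustrated with a very small sketch. It is not how any particular product works: it assumes a single numeric signal (hourly outbound data volume), a simple mean and standard deviation as the "benchmark", and a hypothetical threshold of three standard deviations. Production systems typically use far richer models.

```python
from statistics import mean, stdev


def build_baseline(samples):
    """Summarize normal behavior as a (mean, standard deviation) pair."""
    return mean(samples), stdev(samples)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value that sits more than `threshold` deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        # No variation was observed, so any different value is unusual.
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical hourly outbound-transfer volumes (MB) for one workstation.
normal_hours = [120, 135, 110, 128, 140, 125, 118, 132]
baseline = build_baseline(normal_hours)

print(is_anomalous(130, baseline))  # a typical hour
print(is_anomalous(900, baseline))  # a sudden, very large transfer
```

The threshold is the tuning knob here: lowering it catches subtler deviations at the cost of more false alarms for analysts to review.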

Real-Time Vulnerability Monitoring

AI doesn't just wait for something to go wrong - it actively scans networks, devices, and supply chain connections in real time to uncover new vulnerabilities. By processing data continuously, these systems deliver instant updates when threats emerge. This enables security teams to focus on the most pressing risks and act quickly to prevent attackers from exploiting weaknesses [5][6].

This constant monitoring is especially critical in healthcare, where connected medical devices and third-party integrations create numerous cyberattack entry points. AI keeps an eye on all these systems at once, spotting patterns and anomalies that human analysts might overlook. This ensures that potential threats are flagged while security teams concentrate on resolving them.

Faster Security Assessments

In February 2025, Censinet introduced Censinet AI™ in partnership with AWS to speed up third-party risk assessments. This platform allows vendors to complete security questionnaires in seconds, summarizing evidence, integration details, and even fourth-party exposures into concise risk reports [7].

"With ransomware growing more pervasive every day, and AI adoption outpacing our ability to manage it, healthcare organizations need faster and more effective solutions than ever before to protect care delivery from disruption."

– Ed Gaudet, CEO and founder of Censinet [7]

This speed is a game-changer because traditional security assessments often slow down vendor onboarding, leaving organizations vulnerable. By automating the completion of questionnaires and evidence reviews, Censinet AI™ helps healthcare organizations minimize risks much faster without compromising the thoroughness needed for compliance [7]. These quick assessments not only streamline the risk identification process but also support better decision-making in AI-powered cybersecurity strategies.

Better Decision-Making with Human-Guided AI

AI systems, while powerful, can sometimes produce inaccurate results - commonly referred to as "hallucinations." These errors highlight the importance of human oversight to verify and validate the insights AI generates. By combining human expertise with AI capabilities, organizations can address challenges like the "black box" problem, where the reasoning behind AI decisions can be unclear [1].

This collaboration between AI and human professionals fosters stronger risk management strategies. AI excels at processing vast amounts of data continuously, while experts analyze alerts, confirm findings, and make the final calls. Benoit Desjardins, Professor of Radiology at the University of Montreal and CMIO at the Centre Hospitalier de l'Université de Montréal (CHUM), emphasizes this balance:

"AI will not replace either doctors or cybersecurity experts... Cybersecurity specialists will need to learn AI software, instead of sifting through datasets. A human is still needed to analyze alerts. It's a collaboration between AI and humans." [3]

By automating routine tasks like monitoring and reporting, AI allows human teams to focus on more strategic and complex problem-solving efforts [2].

Configurable Rules and Evidence Validation

To ensure reliable decision-making, Censinet AI™ incorporates configurable rules that keep humans in the loop. Risk teams can define specific criteria for validating evidence, drafting policies, and mitigating risks, ensuring oversight is maintained at every step.
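As a rough illustration of what a configurable human-in-the-loop rule could look like, the sketch below decides whether an AI-generated finding may proceed automatically or must go to a reviewer. The `ReviewRule` and `Finding` types, the field names, and the 0.9 confidence cutoff are all hypothetical; they are not taken from Censinet AI™ or any other product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewRule:
    """Hypothetical rule set defined by a risk team."""
    min_confidence: float     # findings below this always go to a person
    always_review: frozenset  # finding kinds that are never auto-approved


@dataclass(frozen=True)
class Finding:
    kind: str          # e.g. "evidence_summary" or "policy_draft"
    confidence: float  # model-reported confidence between 0 and 1


def needs_human_review(finding, rule):
    """Return True when the rule requires a person to sign off."""
    return (
        finding.kind in rule.always_review
        or finding.confidence < rule.min_confidence
    )


rule = ReviewRule(min_confidence=0.9, always_review=frozenset({"policy_draft"}))

print(needs_human_review(Finding("evidence_summary", 0.95), rule))
print(needs_human_review(Finding("policy_draft", 0.99), rule))
```

Keeping the rule as data rather than hard-coded logic is what makes it configurable: a risk team can tighten the cutoff or add finding kinds without touching the decision function.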

This human involvement helps healthcare organizations respond to new and unexpected AI-related errors that traditional risk management models may overlook. By analyzing how people, processes, and technology interact, this approach strengthens the overall risk management system [1].

AI-Powered GRC Collaboration

Censinet AI™ takes the human-AI partnership further by streamlining governance, risk, and compliance (GRC) collaboration. Acting as "air traffic control" for AI governance, the platform automatically routes critical insights and risks to the right experts, including members of the AI governance committee, for review and approval.

With advanced routing and orchestration, the platform ensures that the right teams address the right issues at the right time. A real-time AI risk dashboard within Censinet RiskOps™ serves as a centralized hub for managing AI-related policies, risks, and tasks. This unified system supports continuous oversight and accountability while fostering a "no-blame culture" in clinical risk management. This approach frames mistakes as opportunities to learn, rather than moments to assign blame [1].
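The "air traffic control" routing described above can be pictured as a lookup from finding category to reviewer queue. The sketch below is an assumption-laden illustration: the category names, the reviewer roles, and the fallback to an AI governance committee are invented for the example and do not describe the platform's actual routing logic.

```python
from collections import defaultdict

# Hypothetical mapping from finding category to the responsible reviewer.
ROUTES = {
    "privacy": "privacy_officer",
    "clinical_safety": "cmio",
    "vendor": "third_party_risk_team",
}
# Anything without an explicit route goes to the committee (an assumption).
DEFAULT_REVIEWER = "ai_governance_committee"


def route(findings):
    """Group finding IDs into one queue per reviewer."""
    queues = defaultdict(list)
    for finding in findings:
        reviewer = ROUTES.get(finding["category"], DEFAULT_REVIEWER)
        queues[reviewer].append(finding["id"])
    return dict(queues)


findings = [
    {"id": "F-1", "category": "privacy"},
    {"id": "F-2", "category": "vendor"},
    {"id": "F-3", "category": "model_drift"},  # no explicit route
    {"id": "F-4", "category": "privacy"},
]
print(route(findings))
```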

More Accurate Risk Assessments with AI

AI has reshaped risk assessments, turning them from occasional reviews into ongoing evaluations that align with healthcare standards and compliance frameworks. This evolution addresses a major limitation of traditional methods: the inability to catch emerging threats between scheduled reviews and the challenge of maintaining consistency across complex vendor networks.

With AI, organizations can verify data integrity, support HIPAA compliance, and evaluate vendor risks throughout the AI lifecycle [8][9]. By analyzing risk data across various frameworks, AI uncovers vulnerabilities that manual processes might miss - especially within layered vendor relationships, where fourth-party risks often go unnoticed.

In November 2025, the Health Sector Coordinating Council (HSCC) Cybersecurity Working Group previewed its 2026 guidance on managing AI-related cybersecurity risks. The "Third-Party AI Risk and Supply Chain Transparency" subgroup was created to bolster security and resilience in healthcare supply chains. Their efforts focus on identifying and monitoring third-party AI tools while evaluating systems for risks related to security, privacy, and bias. This guidance aims to reduce systemic vulnerabilities hidden within layered vendor networks [4]. These continuous evaluations not only enhance compliance but also improve how risks are visualized and addressed.

Benchmarking and Compliance Alignment

AI-driven platforms streamline risk assessments by automatically benchmarking them against multiple compliance frameworks, including the NIST AI Risk Management Framework (AI RMF), introduced on January 26, 2023 [11]. This voluntary framework helps organizations integrate trustworthiness into the design, development, and evaluation of AI systems.

Because the framework is voluntary, it does not mandate specific steps, but it calls for formal AI impact assessments to address ethical, legal, and operational risks [10]. Lance Mehaffey, Senior Director and Healthcare Vertical Leader at NAVEX, highlights the urgency of involving compliance from the start:

"We're seeing healthcare organizations racing to adopt AI without fully understanding the implications. When compliance isn't at the table from day one, you're not managing innovation – you're managing fallout." [10]

To navigate these challenges, healthcare organizations need customized governance programs that address HIPAA compliance, medical device and vendor risks, care delivery standards, reimbursement protocols, and clinical outcomes [10]. Jeffrey B. Miller, Esq., Director-in-Charge at Granite GRC, underscores this need:

"Governance models built for other sectors won't cut it in healthcare. We need risk and compliance strategies that reflect the unique demands of this environment – and that means tailoring our approach from the ground up." [10]

By leveraging strong compliance benchmarks, organizations can shift risk management from a reactive process to a forward-looking strategy.

Risk Visualization Through Command Centers

AI doesn't just align with compliance - it also transforms how risks are visualized. Centralized command centers consolidate risk data, turning raw information into actionable insights. These platforms reveal patterns, prioritize threats, and track remediation efforts across vendor ecosystems.

With predictive analytics, organizations can proactively identify risks, automate monitoring and reporting, and strengthen financial resilience [2]. By aggregating data from multiple sources into a unified view, risk teams can quickly spot trends and address issues across their entire vendor network.

This centralized visibility is especially crucial for managing third-party and fourth-party AI tools embedded in healthcare supply chains. These platforms enable organizations to maintain detailed AI registries, conduct cross-functional risk audits, and map data lineage to uncover potential bias sources and validate model inputs [12]. By continuously assessing risks and presenting insights in a clear, actionable format, AI equips healthcare organizations to respond decisively in an ever-changing threat landscape.


Using AI for Vendor and Supply Chain Security

Healthcare organizations face the challenge of securing increasingly intricate vendor networks. With the rise of third-party and fourth-party relationships across supply chains, traditional manual evaluations often fall short. AI steps in to address this complexity, automating vendor assessments and identifying vulnerabilities before they turn into breaches.

The Health Sector Coordinating Council (HSCC) highlighted this growing concern by establishing its AI Cybersecurity Task Group in October 2025. By November of the same year, the group's "Third-Party AI Risk and Supply Chain Transparency" subgroup began crafting 2026 guidance aimed at fortifying healthcare supply chain security [4]. While AI strengthens internal risk evaluations, applying it to vendor and supply chain security helps close significant security gaps, starting with how vendors are assessed.

AI-Powered Vendor Risk Assessments

AI simplifies vendor risk assessments by aggregating real-time data from various vendor relationships [2]. This centralized method allows risk teams to evaluate third-party AI systems across multiple frameworks, such as the NIST AI Risk Management Framework, HICP, and HIPAA, all at once - eliminating the need for redundant efforts [4].
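One way to picture evaluating a vendor against several frameworks "all at once" is a crosswalk: each piece of evidence is tagged with a topic, and each topic maps to the relevant control in every framework. The sketch below assumes such a crosswalk exists. The topic names and control labels (`RMF-EXAMPLE-1` and so on) are placeholders, not real identifiers from the NIST AI RMF, HICP, or HIPAA.

```python
# Hypothetical crosswalk: evidence topic -> control label per framework.
# The labels are placeholders, not real control identifiers.
CROSSWALK = {
    "access_control": {
        "NIST AI RMF": "RMF-EXAMPLE-1",
        "HICP": "HICP-EXAMPLE-1",
        "HIPAA": "HIPAA-EXAMPLE-1",
    },
    "breach_reporting": {
        "HICP": "HICP-EXAMPLE-2",
        "HIPAA": "HIPAA-EXAMPLE-2",
    },
}


def coverage(evidence_topics):
    """Map one set of vendor evidence onto every framework in a single pass."""
    covered = {}
    for topic in evidence_topics:
        for framework, control in CROSSWALK.get(topic, {}).items():
            covered.setdefault(framework, []).append(control)
    return covered


print(coverage(["access_control", "breach_reporting"]))
```

The point of the design is that a vendor answers each question once, and the mapping, rather than the vendor, does the work of restating that answer for each framework.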

By proactively identifying compliance risks and potential financial liabilities, organizations can address issues before they surface during routine audits [2]. The HSCC's 2026 guidance emphasizes standardizing processes like procurement, vendor evaluation, ongoing monitoring, and end-of-life planning for AI tools [4]. This uniformity ensures consistent vendor assessments while reducing the time spent on each review.

AI also improves transparency through tools like AI Bill of Materials (AIBOM) and Trusted AI BOM (TAIBOM), which offer detailed insights into AI supply chain components [4]. These tools help organizations trace data origins, verify model inputs, and evaluate risks hidden within layered vendor relationships. This level of visibility is especially crucial for addressing fourth-party risks that traditional methods often overlook. Such insights enable stronger defenses against potential supply chain breaches.
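To make the idea of tracing layered relationships concrete, the sketch below walks a nested bill-of-materials structure and lists every supplier other than the vendor itself, which is one simple way to surface fourth parties. The dictionary layout and all names are invented for illustration; real AIBOM and TAIBOM formats define their own schemas, which are not reproduced here.

```python
def fourth_parties(bom):
    """List suppliers nested inside a vendor's AI bill of materials."""
    vendor = bom["supplier"]
    found = set()
    stack = list(bom.get("components", []))
    while stack:
        component = stack.pop()
        if component["supplier"] != vendor:
            found.add(component["supplier"])
        # Sub-components may hide further suppliers, so keep walking.
        stack.extend(component.get("components", []))
    return sorted(found)


# Hypothetical bill of materials for a vendor's triage tool.
bom = {
    "supplier": "VendorA",
    "components": [
        {
            "name": "triage-model",
            "supplier": "VendorA",
            "components": [
                {"name": "base-llm", "supplier": "ModelCo"},
                {"name": "training-set", "supplier": "DataBroker"},
            ],
        },
        {"name": "hosting", "supplier": "CloudHost"},
    ],
}
print(fourth_parties(bom))  # ['CloudHost', 'DataBroker', 'ModelCo']
```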

Preventing Supply Chain Data Breaches

Healthcare organizations experience an alarming average of two data breaches per day, exposing sensitive personal health information [3]. While hackers can infiltrate systems in under five hours, it takes organizations an average of 235 days to detect these breaches [3]. This gap leaves a dangerous window for attackers to exploit unnoticed vulnerabilities in the supply chain.
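The size of that gap is easier to appreciate with the two figures on a common scale. The short calculation below uses only the numbers cited above; because infiltration takes "under" five hours, the resulting ratio is a lower bound.

```python
HOURS_PER_DAY = 24

infiltration_hours = 5  # upper bound on time to infiltrate, per the text
detection_days = 235    # average time to detect a breach, per the text

detection_hours = detection_days * HOURS_PER_DAY
ratio = detection_hours / infiltration_hours

print(detection_hours)  # 5640
print(ratio)            # 1128.0
```

In other words, detection takes on average more than a thousand times longer than the initial intrusion.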

AI-driven cybersecurity tools step in by identifying intrusions, malware, and phishing attempts with over 99% accuracy [3]. These systems continuously monitor vendor networks, flagging unusual activity in real time instead of waiting for scheduled audits. By analyzing patterns across the entire supply chain, AI pinpoints vulnerabilities before they can escalate into full-blown breaches, shifting security from a reactive stance to a proactive defense.

The HSCC's guidance also underscores the importance of contractual safeguards with AI vendors. These include model contract clauses and Business Associate Agreements (BAAs) that address data use, handling of Protected Health Information (PHI), and breach reporting requirements [4]. Coupled with technical monitoring, these safeguards form a multi-layered defense. Organizations are also encouraged to establish governance policies, oversight boards, and formal approval processes for implementing third-party AI systems [4]. This ensures security measures are integrated from procurement to system decommissioning, with continuous oversight throughout the lifecycle.

AI Governance and Compliance in Healthcare

As healthcare increasingly relies on AI, maintaining strong governance and compliance frameworks has become essential for ensuring cybersecurity and ethical deployment. While advancements in threat detection and vendor risk management have bolstered security, structured oversight is crucial to address the unique risks AI introduces. Without proper governance, AI systems can lead to data breaches, obscure decision-making processes, and vulnerabilities that conventional risk management strategies may overlook [1]. With nearly 200 state-level bills focusing on healthcare AI [3], organizations must adopt frameworks that align with HIPAA and HICP standards while ensuring ethical use of AI technologies. This creates an urgent need for governance mechanisms that seamlessly integrate AI into healthcare's risk management landscape.

The Health Sector Coordinating Council (HSCC) is preparing 2026 guidance to align AI governance with HIPAA, FDA regulations, and frameworks like the NIST AI Risk Management Framework [4]. These efforts aim to create a foundation for responsible AI use in healthcare.

Platforms like Censinet RiskOps are tackling these challenges head-on. Acting as a centralized hub for AI-related policies, risks, and tasks, Censinet routes critical findings to designated stakeholders, including members of AI governance committees. This ensures timely reviews and approvals, keeping oversight and accountability consistent across the organization.

AI Lifecycle Governance and Bias Testing

AI systems in healthcare often pose challenges due to their complex and opaque decision-making processes, particularly in "black box" models like Large Language Models (LLMs) and deep learning algorithms [1]. These systems can make it difficult to understand how decisions are reached, raising ethical and legal questions about data use and patient autonomy. As Gianmarco Di Palma and colleagues highlight:

"The phenomenon of the so-called 'hallucinations' in LLMs is an example, with potentially significant repercussions on the therapeutic choices that follow" [1].

Bias testing plays a critical role in mitigating risks, as biased algorithms can compromise traditional clinical risk management [1]. Given the evolving nature of AI, informed consent and ongoing monitoring must be continuous efforts, with regular updates to reflect changes in data use and emerging risks [1]. Additionally, organizations must carefully evaluate third-party AI systems for risks related to security, privacy, and bias.

Censinet RiskOps supports this continuous oversight by combining automation with human decision-making. Risk teams maintain control through configurable rules and review processes, ensuring that automation complements rather than replaces critical judgment. This "human-in-the-loop" approach allows healthcare organizations to scale operations efficiently while addressing AI's complex risks, prioritizing patient safety at every step.

Secure AI Procurement

Securing the procurement process is another key aspect of managing AI risks. From vendor selection to end-of-life planning, standardizing procurement processes helps mitigate risks associated with third-party AI tools [4]. Clear governance policies should outline roles, responsibilities, and clinical oversight throughout the AI lifecycle [4]. This includes improving visibility into third-party tools and evaluating vendors for risks tied to security, privacy, and bias.

Censinet AI™ simplifies the third-party risk assessment process by enabling vendors to complete security questionnaires almost instantly. The platform automatically summarizes evidence and integration details, generating risk summary reports that help healthcare organizations address vendor risks much faster.

Conclusion

AI is reshaping healthcare risk management by turning it into a more dynamic, data-focused process. With its ability to provide real-time monitoring, accurate assessments, and centralized insights, AI empowers healthcare organizations to make quicker, more informed decisions. For example, machine learning models tailored to healthcare can analyze both historical and live data to predict potential risk areas, streamlining decision-making at every level [1][2].

That said, AI doesn't come without its challenges. Issues like data breaches, lack of transparency in algorithms, weaknesses in AI-powered devices, and biases in machine learning systems mean that human oversight remains critical. Ensuring patient awareness and securing informed consent are ongoing priorities in this tech-driven landscape [1][3].

The key lies in blending AI's capabilities with human judgment. Take platforms like Censinet RiskOps, for instance - they embody this balance by incorporating customizable rules and review processes. This approach ensures that automation supports, rather than replaces, essential decision-making, all while enhancing risk management and prioritizing patient safety.

FAQs

How does AI enhance real-time threat detection in healthcare cybersecurity?

AI plays a crucial role in improving real-time threat detection within healthcare cybersecurity. By monitoring systems around the clock, it can quickly spot unusual activities or anomalies and initiate automated responses to address potential risks immediately.

What makes AI even more effective is its ability to adapt to new and evolving threats. This ensures healthcare organizations remain one step ahead of potential vulnerabilities, significantly cutting down detection times, boosting accuracy, and reinforcing security measures across the board.

Why is human oversight important when using AI in risk management?

Human involvement plays a key role in keeping AI systems transparent, accountable, and aligned with ethical principles. While AI is incredibly efficient at analyzing massive datasets in record time, it can sometimes introduce biases, miss important context, or act unpredictably in specific situations.

By integrating human expertise, organizations can tackle these challenges, protect sensitive data, and adhere to regulatory requirements. This partnership between humans and AI helps build trust and ensures that AI-powered tools are applied responsibly, especially in fields like healthcare cybersecurity.

How does AI improve vendor and supply chain security in healthcare?

AI is transforming how healthcare organizations manage vendor and supply chain security. It helps pinpoint third-party risks, keeps an eye on connected medical devices, and evaluates vulnerabilities to minimize breaches and maintain compliance.

By automating intricate risk assessments and delivering real-time insights, AI empowers healthcare providers to make quicker, more informed decisions. The result? Better defenses against cyber threats and a supply chain that's more resilient than ever.