AI in the ICU: Balancing Life-Saving Technology with Patient Safety

Post Summary

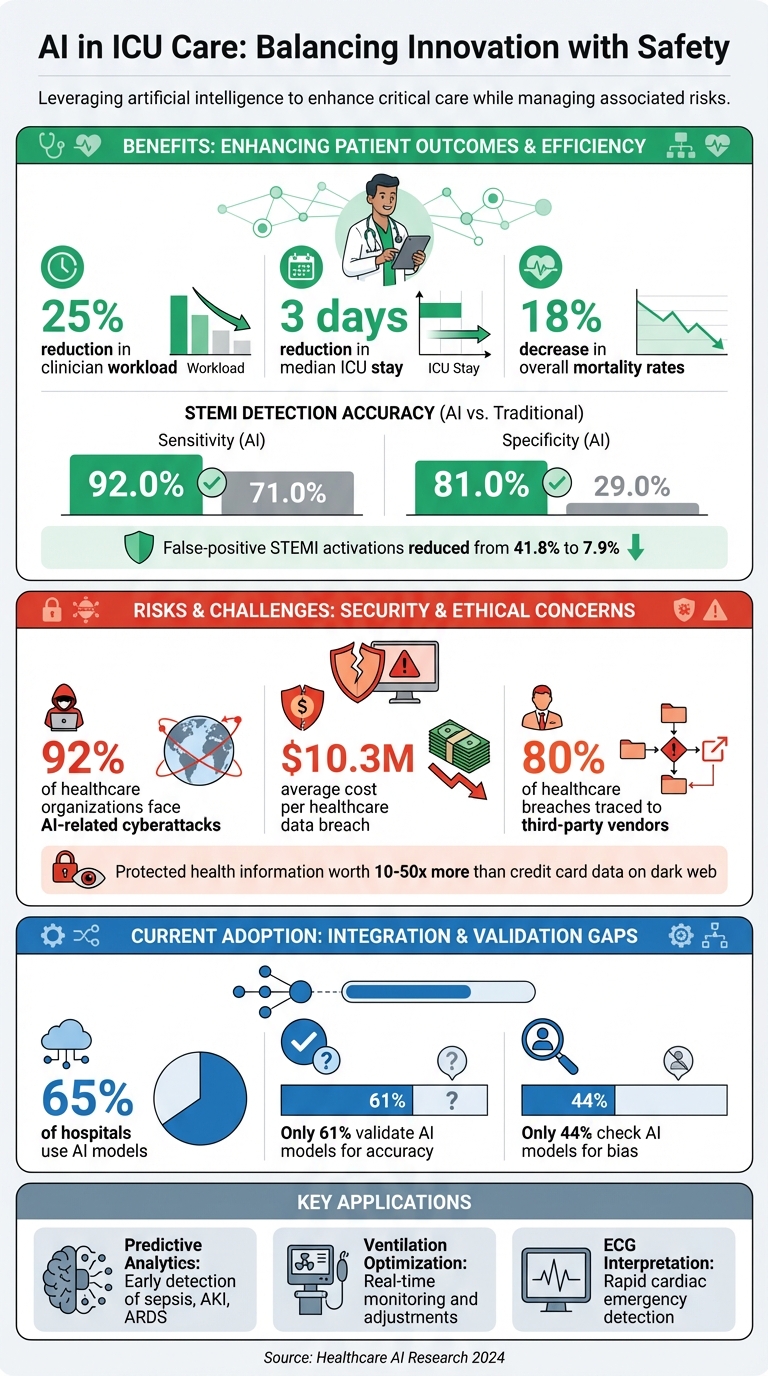

AI is reshaping ICU care, offering tools to predict patient deterioration, optimize treatments, and improve outcomes. But it comes with risks. Cybersecurity threats, algorithm failures, and bias in AI systems could jeopardize patient safety. Here's what you need to know:

- Benefits: AI reduces readmission rates, shortens ICU stays, and improves accuracy in diagnosing critical conditions like sepsis and heart attacks.

- Risks: 92% of healthcare organizations report experiencing cyberattacks, and breaches cost an average of $10.3M each. Vulnerabilities in AI systems can lead to errors in medication or diagnostics.

- Current Use: 65% of hospitals use AI models, but only 61% of those hospitals validate them for accuracy, and just 44% check for bias.

- Solutions: Strengthening cybersecurity, implementing human oversight, and establishing AI governance are key to safe adoption.

AI's potential in ICUs is immense, but only with rigorous safety measures and multidisciplinary collaboration can we ensure patient care remains effective and secure.

AI in ICU Care: Key Statistics on Benefits, Risks, and Adoption Rates

AI Applications in ICU Settings

AI technologies are already making a difference in ICUs across the United States, tackling some of the toughest challenges in critical care. These systems process enormous amounts of patient data in real time, identifying subtle patterns that might otherwise go unnoticed. The result? Faster, more accurate interventions. Let’s look at how AI is transforming diagnosis, ventilation management, and cardiac care.

Predictive Analytics for Early Diagnosis

Machine learning and deep learning models are combing through electronic health records - both structured data and clinical notes - to catch early warning signs of patient deterioration [3][4][5][6][7]. These AI systems are particularly effective at predicting critical conditions like sepsis, acute kidney injury (AKI), and acute respiratory distress syndrome (ARDS) before they escalate. Compared to traditional scoring systems like SOFA and MEWS, AI can spot these issues earlier, giving clinicians more time to act.

Dr. Archana, Professor at Rama Medical College Mandhana Kanpur, India, explains: "AI-driven solutions can predict patient deterioration, streamline workflows, and reduce diagnostic errors" [6].

AI-Driven Ventilation Optimization

Managing mechanical ventilation is a core part of ICU care, but it demands constant monitoring and fine-tuning. AI systems step in by continuously analyzing vital signs, lab results, and respiratory parameters. They track changes in factors like airway resistance and lung compliance, helping clinicians make adjustments in real time. The reported impact is striking: studies of AI integration in ICUs describe clinician workloads cut by 25% [8], median ICU stays reduced by three days [9], and overall mortality lowered by 18% [9].

Cobianchi et al. highlight this efficiency: "AI significantly improves workflow efficiency by automating repetitive tasks, such as documentation and data analysis, and optimizing resource allocation" [8].

By customizing alarms to individual patient baselines, these systems also help reduce alarm fatigue among ICU staff.
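To make the idea of patient-specific alarms concrete, here is a minimal sketch in Python. It assumes a very simple rule (alarm when a reading falls more than `k` standard deviations from the patient's own recent baseline). The function names, the sample readings, and the choice of `k=3.0` are illustrative assumptions, not a description of any particular ICU product or a clinically validated threshold.

```python
from statistics import mean, stdev


def personalized_limits(baseline, k=3.0):
    """Return (low, high) alarm limits as mean +/- k sample standard deviations."""
    center = mean(baseline)
    spread = stdev(baseline)  # needs at least two baseline readings
    return center - k * spread, center + k * spread


def should_alarm(reading, baseline, k=3.0):
    """True when a new reading falls outside the patient's own limits."""
    low, high = personalized_limits(baseline, k)
    return reading < low or reading > high


# Hypothetical heart-rate readings (beats per minute) for one patient.
baseline_hr = [88, 90, 92, 89, 91]
print(should_alarm(93, baseline_hr))   # close to this patient's usual range
print(should_alarm(110, baseline_hr))  # far outside it
```

A fixed unit-wide threshold would treat every patient the same. Deriving limits from each patient's own readings is one plausible way to cut alarms that are routine for that individual, though real systems would also need hard safety limits that no baseline can override.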

AI-Assisted ECG Interpretation

Speed is everything in cardiac emergencies, and AI-powered ECG tools are stepping up to the challenge. These systems analyze heart rhythms to identify conditions like ST-segment elevation myocardial infarction (STEMI) and arrhythmias with impressive accuracy. In one study, AI ECG models achieved 92.0% sensitivity and 81.0% specificity for STEMI detection - far better than standard triage methods, which managed just 71.0% sensitivity and 29.0% specificity [10]. Even more importantly, AI reduced false-positive STEMI activations from 41.8% to 7.9% [10]. This means fewer unnecessary catheterization lab activations, faster treatment for those who truly need it, and better use of resources.
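For readers less familiar with these metrics, the short Python sketch below shows how sensitivity and specificity are computed from confusion-matrix counts. The counts are hypothetical, chosen only to reproduce the 92.0% and 81.0% figures; they are not the study's data. The `false_activation_share` helper reflects one possible reading of "false-positive activations" (false alarms as a share of all activations), which is an assumption about how the study defines that figure.

```python
def sensitivity(tp: int, fn: int) -> float:
    """Share of true STEMI cases the model flags: TP / (TP + FN)."""
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """Share of non-STEMI cases the model correctly clears: TN / (TN + FP)."""
    return tn / (tn + fp)


def false_activation_share(tp: int, fp: int) -> float:
    """Share of all activations that were false alarms: FP / (TP + FP)."""
    return fp / (tp + fp)


# Hypothetical counts: 100 true STEMI cases and 100 non-STEMI cases.
tp, fn = 92, 8
tn, fp = 81, 19

print(f"sensitivity = {sensitivity(tp, fn):.3f}")
print(f"specificity = {specificity(tn, fp):.3f}")
print(f"false activation share = {false_activation_share(tp, fp):.3f}")
```

Note that the share of false activations depends on how common STEMI is among the patients screened, so it cannot be derived from sensitivity and specificity alone.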

Cybersecurity Risks in AI-Enabled ICU Systems

AI gives ICU teams advanced tools for patient monitoring and decision-making. However, this integration also introduces new vulnerabilities that could put patient safety at risk. From data breaches to flaws in algorithms and weaknesses in connected medical devices, AI systems expand the attack surface in ICUs [1]. Cybersecurity threats in healthcare are not only increasing but are also becoming more sophisticated and expensive to manage each year [11]. Below, we explore specific vulnerabilities in AI-enabled ICU systems and discuss strategies to address these challenges.

Data Integrity and Patient Health Information (PHI)

The electronic health records (EHRs) that power AI systems are a prime target for cyberattacks. If hackers manipulate patient data, it can disrupt every AI-driven decision in the ICU. Such interference can corrupt AI training pipelines, leading to inaccurate clinical predictions and compromised patient care. To safeguard data integrity, healthcare systems must implement cryptographic verification, maintain detailed audit trails, and enforce strict separation between development and production environments [2]. These measures ensure that AI-based clinical interventions remain reliable and trustworthy.
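As a rough illustration of what cryptographic verification and audit trails can look like, the Python sketch below signs a record with an HMAC and keeps a hash-chained audit log using only the standard library. The key, record fields, and function names are hypothetical. A real deployment would keep keys in a managed secret store and would likely rely on the EHR vendor's own integrity features.

```python
import hashlib
import hmac
import json

SECRET_KEY = b"demo-key-not-for-production"  # hypothetical key for illustration
GENESIS = "0" * 64  # placeholder hash for the first audit entry


def sign_record(record: dict, key: bytes = SECRET_KEY) -> str:
    """Return an HMAC-SHA256 tag over a canonical JSON encoding of the record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_record(record: dict, signature: str, key: bytes = SECRET_KEY) -> bool:
    """True only if the record still matches the tag issued when it was signed."""
    return hmac.compare_digest(sign_record(record, key), signature)


def append_audit_entry(log: list, event: str) -> None:
    """Append an event whose hash also covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry_hash = hashlib.sha256((prev_hash + event).encode("utf-8")).hexdigest()
    log.append({"event": event, "prev": prev_hash, "hash": entry_hash})


def audit_log_intact(log: list) -> bool:
    """Recompute the chain; any edited or removed entry breaks it."""
    prev_hash = GENESIS
    for entry in log:
        expected = hashlib.sha256((prev_hash + entry["event"]).encode("utf-8")).hexdigest()
        if entry["prev"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


record = {"patient_id": "demo-001", "lactate_mmol_l": 2.1}
tag = sign_record(record)
tampered = dict(record, lactate_mmol_l=0.9)
print(verify_record(record, tag), verify_record(tampered, tag))
```

The design point is that an AI pipeline can refuse to train or predict on any record whose tag fails verification, and that altering an earlier audit entry invalidates every later hash in the chain.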

Third-Party Vendor and Medical Device Risks

Third-party vendors represent a significant weak point in healthcare cybersecurity - around 80% of breaches in the sector can be traced back to these external sources [2]. Each vendor or connected device adds another potential entry point for attackers. Medical devices like ventilators, infusion pumps, and cardiac monitors, which rely on network connectivity, are particularly vulnerable to ransomware and denial-of-service attacks [1]. With the complex supply chains involved in AI-enabled ICUs, a single compromised vendor can create a domino effect, jeopardizing multiple critical care devices.

Mitigating Threats to Patient Safety

Protecting ICU patients from cyber threats requires a comprehensive, layered strategy. Implementing Zero Trust Architecture and microsegmentation limits how far an attacker can move by treating every device and user as untrusted until verified [2]. Proposed updates to the HIPAA Security Rule would mandate multi-factor authentication and encryption, removing earlier leniencies. Regularly retraining AI models with verified datasets is also crucial to identify and fix compromised algorithms before they impact patient care [2]. Additionally, ongoing risk assessments of third-party vendors help ensure robust security and compliance, minimizing potential vulnerabilities in the system.

Using Censinet RiskOps for AI Risk Management

Managing AI risks in ICUs demands tools specifically designed to prioritize patient safety. Censinet RiskOps™ offers a centralized platform to handle cybersecurity challenges in AI-powered systems, complementing the security measures previously discussed. As an AHA Preferred Cybersecurity Provider, Censinet has established its expertise in cyber risk assessment, privacy, and HIPAA compliance [12].

Censinet RiskOps operates with two core strategies: integrating human oversight and implementing centralized governance.

Human-in-the-Loop Oversight

Automation can improve efficiency, but in high-stakes ICU settings, human oversight remains critical. Censinet AI employs a human-guided approach, ensuring healthcare professionals maintain control over decision-making. Risk teams can establish tailored rules and conduct thorough reviews, allowing automation to enhance workflows without replacing careful human judgment. This approach enables organizations to expand their risk management capabilities while preserving the meticulous attention to detail required in ICU environments.

Centralized AI Risk Governance

Censinet RiskOps™ acts as a centralized hub, consolidating real-time data into an intuitive dashboard that highlights AI policies, risks, and tasks. The platform directs key findings and critical risks to the appropriate stakeholders, ensuring timely responses to potential issues. In ICUs where multiple AI systems are in use simultaneously, this centralized governance helps close oversight gaps, reducing the risk of errors that could jeopardize patient safety.

Best Practices for AI Governance in ICUs

Creating a solid framework for AI governance in ICU settings demands a focus on accountability, transparency, fairness, and safety. These principles build on traditional IT governance practices while integrating the medical ethics and clinical standards critical to intensive care units [14].

Healthcare organizations need a multi-faceted governance approach that spans management, technology, finance, compliance, and personnel [15]. This strategy goes beyond technical safeguards, addressing the human elements that influence whether AI systems genuinely improve patient outcomes. For hospital leaders, AI governance plays a key role in managing risks, ensuring data security, building trust, and reducing malpractice exposure [15]. These foundational elements set the stage for actionable best practices.

Strong AI governance doesn't just reduce cybersecurity risks - it also strengthens patient safety and supports better clinical decisions in ICUs.

Real-Time AI Risk Monitoring

In ICUs, where patient conditions can shift in minutes, continuous monitoring of AI systems is critical. AI technologies are dynamic - they evolve, adapt, and experience changes like data drift - making it essential to regularly evaluate their accuracy, reliability, and safety [1]. Real-time dashboards are a valuable tool, helping teams quickly identify anomalies before they impact patient care.
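One common way to put a number on data drift is the Population Stability Index (PSI), which compares the distribution of a model input at training time with its distribution in current use. The Python sketch below is a minimal version under stated assumptions: equal-width bins taken from the reference data, a small floor to avoid dividing by zero, and the widely used rule of thumb that a PSI above roughly 0.25 signals a substantial shift. None of these choices comes from the sources cited in this article.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample (expected) and a current sample (actual)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all reference values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the reference range
            counts[idx] += 1
        floor = 1e-6  # avoids log(0) and division by zero for empty bins
        return [max(c / len(values), floor) for c in counts]

    p = proportions(expected)
    q = proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Hypothetical feature values: a training-time sample and a shifted current sample.
reference = list(range(100))
shifted = [v + 50 for v in reference]

print(population_stability_index(reference, reference))
print(population_stability_index(reference, shifted) > 0.25)
```

A dashboard could compute a score like this per input feature on a schedule and route anything above the chosen threshold to the governance team for review.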

These dashboards act like an air traffic control system for AI governance. They consolidate vital information and route findings to the appropriate stakeholders for immediate review and action. This centralized visibility allows risk management teams to oversee AI performance across multiple ICU applications simultaneously, ensuring issues are caught early.

Collaborative Governance and Compliance

Managing AI in healthcare requires input from various teams. No single department can oversee the complex mix of clinical effectiveness, cybersecurity, regulatory compliance, and patient safety. To address this, healthcare organizations should form AI governance committees. These committees should include clinicians, IT security experts, compliance officers, and risk managers.

The Health Sector Coordinating Council (HSCC) is working on 2026 guidelines for managing AI cybersecurity risks, focusing on governance, secure-by-design principles, and third-party risk management. Preparing for these evolving standards now by fostering collaboration can help organizations stay ahead. Cross-functional committees ensure that findings and recommendations are addressed quickly and effectively.

Collaborative practices lay the groundwork for measurable improvements in AI governance and patient care.

Benchmarking Against Industry Standards

Aligning AI governance with established healthcare and cybersecurity standards provides a clear framework for ongoing improvement. Key elements of responsible AI use include policies and governance structures, patient privacy and transparency, data security measures, continuous quality monitoring, voluntary reporting of safety-related events, risk and bias assessments, and staff education [13].

Healthcare organizations should routinely compare their AI governance practices to industry benchmarks like HIPAA and NIST frameworks. This process highlights areas needing improvement and provides a roadmap for refining practices. Strong governance not only mitigates risks but also ensures AI is integrated responsibly, protecting patient safety while maximizing its potential in ICUs. By setting clear metrics and accountability standards, organizations can show their dedication to safe and effective AI use in critical care environments.

Human-AI Teaming Frameworks for ICU Safety

To tackle the dual challenges of clinical and cybersecurity risks in ICUs, effective human-AI collaboration is essential. The best ICU care combines AI's ability to process vast amounts of data quickly with the nuanced judgment of clinicians. While AI systems excel at identifying patterns and trends, they lack the context and intuition that healthcare professionals bring to the table. When thoughtfully integrated, these partnerships can improve safety and streamline ICU workflows, creating a clear and accountable dynamic between humans and machines.

Collaboration Between Clinicians and AI

AI works best as a tool to support - not replace - clinical decision-making. In ICUs, AI systems can monitor patient vitals in real time, flag potential complications, and even predict patterns of deterioration. These are tasks that would be nearly impossible to manage manually given the constant influx of data. However, clinicians must remain actively involved, reviewing and interpreting AI outputs to ensure accuracy and relevance.

This oversight is critical because AI systems are not infallible. Errors can occur, including the fabricated outputs of generative models often referred to as "hallucinations", and biases can emerge from limited or unrepresentative training datasets. For example, an AI model trained predominantly on data from one demographic group might struggle to provide accurate insights for patients from other populations. In high-pressure ICU environments, where decisions carry immediate consequences, clinicians must be aware of these limitations and apply their expertise to validate AI recommendations.

Another challenge is avoiding over-reliance on AI tools. Even systems designed to assist decision-making can sometimes be perceived as definitive authorities, particularly when clinicians are under significant workload pressures. To prevent this, AI outputs should be treated as one piece of a larger puzzle, complementing rather than replacing clinical judgment. Such balanced collaboration is key to minimizing errors and ensuring patient safety.

Preventing Errors Through Human-AI Synergy

Human oversight plays a crucial role in preventing AI-related errors in ICUs. Devices like insulin pumps, pacemakers, and ventilators, which may be partially controlled by AI, require constant monitoring by clinicians to ensure their proper functioning and to guard against potential cyber threats.

Cybersecurity remains a pressing concern. In 2024, a staggering 92% of healthcare organizations reported experiencing cyberattacks [2]. This highlights the need for vigilance from healthcare providers, who must stay alert to these risks while safeguarding patient data.

Clinicians also play a central role in promoting ethical AI use. From securely entering data to obtaining informed consent and participating in cybersecurity training, their involvement ensures that AI systems are used responsibly. By prioritizing a human-first approach, ICUs can leverage AI's strengths without compromising the oversight and ethical standards needed to protect patients.

Conclusion

AI is reshaping the way Intensive Care Units (ICUs) operate, bringing advancements like predicting patient deterioration, improving life-support systems, and reducing medication errors. These capabilities have the potential to enhance clinical decision-making and improve patient outcomes in ways that were once unimaginable. But achieving these benefits requires more than just deploying advanced technology - it calls for a well-rounded approach that includes governance, cybersecurity, and consistent human oversight. This balance of innovation and caution paves the way for a future where progress and safety go hand in hand.

The stakes are high when it comes to cybersecurity. Breaches in healthcare cost an average of $10.3 million, and a staggering 92% of healthcare organizations have faced cyberattacks. ICU systems, with their interconnected networks and sensitive patient data, are particularly vulnerable. To make matters worse, protected health information is highly valuable on dark web marketplaces - fetching 10 to 50 times the price of credit card data, which incentivizes attackers [2].

"Rigorous validation, responsible implementation, and continuous monitoring by multidisciplinary teams are necessary to address these risks and realize the full potential of AI."

- Patrick Tighe, MD, MS; Bryan M. Gale, MA; Sarah E. Mossburg, RN, PhD [8]

This approach emphasizes the need for clinical, technical, and ethical oversight, aligning with the broader discussion on managing risks effectively.

Strong governance structures are critical to navigating AI's benefits and challenges. Clear validation protocols, strict quality assurance processes, and transparency are non-negotiable. Multidisciplinary teams - including clinicians, data scientists, ethicists, and IT experts - must work together to validate and monitor AI systems throughout their lifecycle [8][16].

The future of ICU care lies in responsible use of AI. Technology should enhance, not replace, human expertise. By prioritizing sound governance, maintaining clinician involvement, and staying vigilant, healthcare organizations can harness AI's potential while safeguarding patient safety and data security.

FAQs

How can hospitals reduce bias in AI systems used in ICUs?

Hospitals can reduce bias in AI systems by using diverse and well-rounded datasets that reflect a wide range of patient populations. This ensures the AI models are trained on data that represents different demographics, leading to more inclusive outcomes. Regular checks of AI performance across various groups and testing in different clinical settings are also crucial to maintain accuracy and fairness.

Incorporating transparency and explainability tools allows healthcare professionals to spot and address biases effectively. By fine-tuning algorithms and verifying their results consistently, hospitals can build confidence in AI systems while ensuring patient care remains fair and equitable.
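A regular check of performance across groups can be as simple as computing the same metric per subgroup and looking at the gap. The Python sketch below does this for sensitivity. The group labels, the sample rows, and the function names are hypothetical, and which gap counts as acceptable is a policy decision this sketch does not settle.

```python
from collections import defaultdict


def sensitivity_by_group(rows):
    """rows: iterable of (group, y_true, y_pred) with 0/1 labels.

    Returns {group: TP / (TP + FN)} for every group with at least one true case.
    """
    tp = defaultdict(int)
    fn = defaultdict(int)
    for group, y_true, y_pred in rows:
        if y_true == 1:
            if y_pred == 1:
                tp[group] += 1
            else:
                fn[group] += 1
    return {g: tp[g] / (tp[g] + fn[g]) for g in set(tp) | set(fn)}


def largest_gap(per_group):
    """Difference between the best and worst performing groups."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical validation rows: (group, actual outcome, model prediction).
rows = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 0, 1),
]
per_group = sensitivity_by_group(rows)
print(per_group, largest_gap(per_group))
```

In this made-up sample the model catches two of three true cases in group A but only one of three in group B, the kind of disparity that would warrant a closer look at the training data.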

How can we safeguard AI systems in ICUs from cyberattacks?

Protecting AI systems in Intensive Care Units (ICUs) from cyberattacks involves a mix of strong security practices and vigilant oversight. Start by using robust endpoint protection and performing regular vulnerability checks to uncover and address potential risks. Implementing a zero trust architecture ensures that every access request is thoroughly verified, adding another layer of security.

To further safeguard these systems, enforce multi-factor authentication to block unauthorized access. Incorporating blockchain technology can also provide a secure way to share sensitive data. Real-time monitoring is crucial for spotting unusual activity, while maintaining detailed metadata helps track the role of AI in patient care, promoting both accountability and safety. Together, these measures strengthen the defenses and dependability of AI-driven ICU systems.

How does AI enhance the accuracy of diagnosing critical conditions in ICUs?

AI is transforming diagnostic practices in ICUs by swiftly processing extensive patient data, spotting subtle patterns or irregularities that could easily be overlooked, and offering data-driven insights to clinicians. This allows for earlier and more accurate identification of critical health conditions, which can lead to better patient care and outcomes.

With the help of machine learning and advanced algorithms, AI tools empower medical teams to make quicker, well-informed decisions while minimizing the chances of human error. These technologies are built to enhance, not replace, clinical expertise, ensuring that patient safety stays at the forefront of care.