AI in Supply Chain Incident Detection

Post Summary

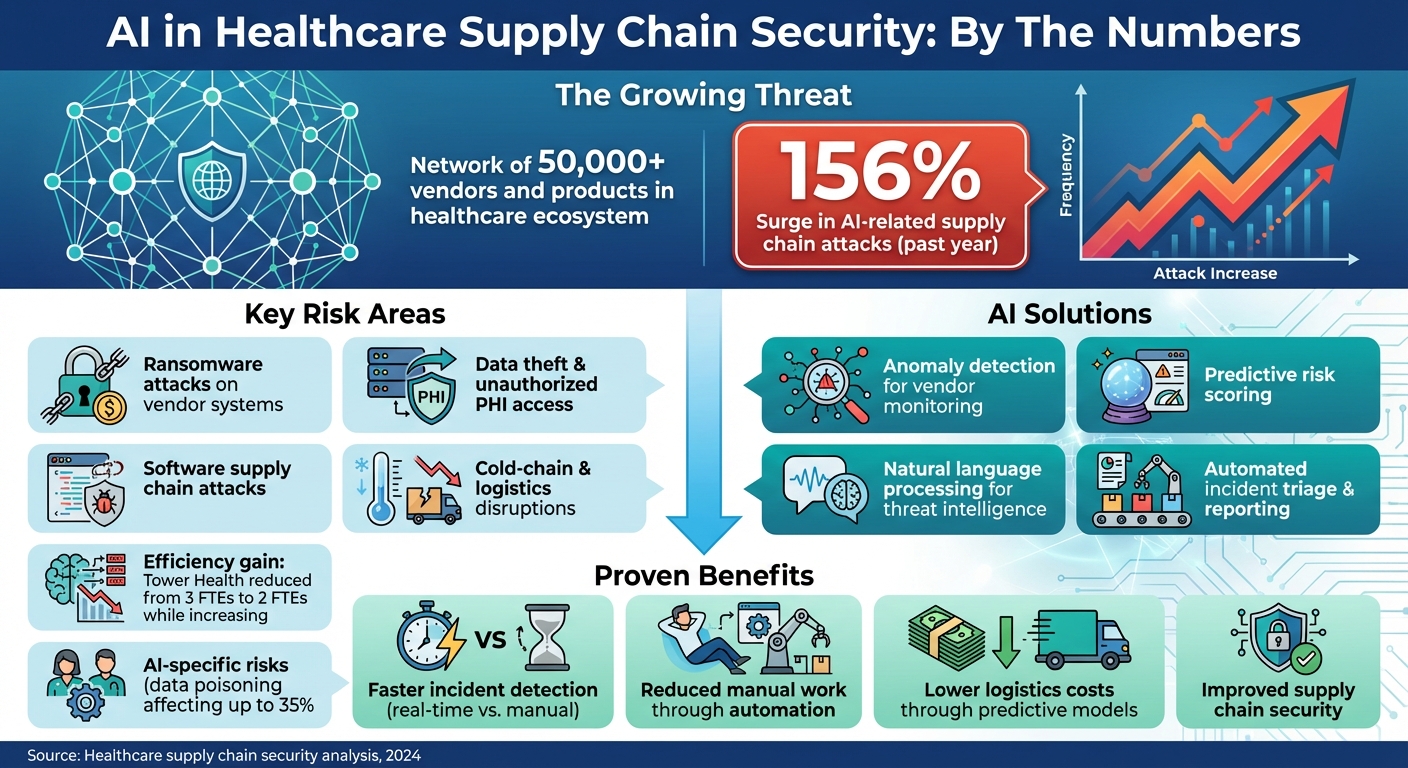

AI is reshaping how healthcare organizations detect and respond to supply chain threats. The healthcare sector faces rising risks, including a 156% surge in AI-related supply chain attacks over the past year. These incidents disrupt vendor networks, delay treatments, and compromise patient safety. Traditional methods like spreadsheets and manual assessments fall short in addressing these challenges.

AI tools, by contrast, provide real-time monitoring, predictive risk scoring, and automated workflows. They analyze vendor behavior, flag anomalies, and enable faster responses to potential threats. Tower Health, for example, has reduced manual effort while completing more risk assessments. Key focus areas include protecting patient data, integrating AI into existing security systems, and maintaining strong governance over AI use.

Key takeaways:

- Challenges: Ransomware, data breaches, and AI-specific risks like data poisoning.

- AI solutions: Predictive models, anomaly detection, and natural language processing for threat intelligence.

- Benefits: Faster incident detection, reduced manual work, and improved supply chain security.

- Implementation: Strong governance, seamless integration with security systems, and privacy safeguards.

AI is transforming healthcare supply chain security, but its success depends on proper oversight, collaboration, and continuous improvement.

AI-Driven Healthcare Supply Chain Security: Key Statistics and Benefits

Understanding the Healthcare Supply Chain Risk Landscape

Components of the Healthcare Supply Chain Ecosystem

The healthcare supply chain is a complex network that stretches far beyond pharmaceutical distributors. It includes clinical applications and EHRs (hosted on-premises or in the cloud); networked medical devices such as infusion pumps, imaging systems, and lab analyzers; and logistics partners responsible for maintaining cold-chain integrity for sensitive drugs and vaccines. Cloud and SaaS providers that host patient data are also part of it, as are non-clinical vendors - such as HVAC, facilities management, and food services - that often have access to healthcare delivery organization (HDO) networks [1][3][4][6].

Each of these components introduces potential vulnerabilities. For example, logistics partners manage shipments that are both time- and temperature-sensitive, where even a small operational or cyber disruption could compromise the integrity of critical products [3]. Similarly, cloud service providers and SaaS vendors handle protected health information (PHI), making them attractive targets for ransomware and data theft.

The ecosystem also increasingly incorporates AI models, data pipelines, third-party AI tools, and hosting platforms into clinical workflows and supply chain forecasting [4][6][7]. These technologies, while powerful, bring new risks. Attackers can tamper with training datasets or manipulate AI-based routing tools, leading to delays or other disruptions [4][7]. A striking example of the ecosystem’s scale is Censinet’s collaborative risk network, which includes over 50,000 vendors and products across the healthcare industry [1]. This interconnectedness underscores the vast attack surface HDOs must monitor and secure.

The diverse components of this ecosystem explain why supply chain incidents can take so many forms.

Common Types of Supply Chain Security Incidents

Given the complexity of the healthcare supply chain, security incidents can vary widely. Ransomware attacks on vendor systems are a major concern. When key vendors - such as those hosting EHRs, providing imaging systems, or distributing pharmaceuticals - are hit, multiple HDOs can lose access to critical services. These attacks often spread through compromised vendor credentials or malicious software updates, allowing attackers to infiltrate further into networks.

Another common issue is data theft and unauthorized access to PHI. Attackers frequently exploit weak access controls, misconfigured cloud storage, or vulnerabilities in vendor applications to steal patient records and clinical data. A single breach at a vendor can expose sensitive information from dozens of HDOs that rely on its services [1].

Software supply chain attacks and AI-specific risks are also on the rise. Adversaries target libraries, APIs, and CI/CD pipelines that are widely used across healthcare organizations [4][7]. A 2024 review highlights how AI systems introduce additional vulnerabilities, such as the risk of data poisoning. In these cases, attackers manipulate training data to degrade AI model performance, often without immediate detection [4]. Reflectiz reports a staggering 156% increase in AI-related supply chain attacks in just one year, with attackers focusing on third-party AI libraries and models used in web applications and SaaS platforms [7]. To combat these threats, AI-driven detection methods are being developed to identify and mitigate risks before they escalate.

Logistics and cold-chain disruptions represent another critical area of concern. Cyberattacks targeting temperature monitoring systems, routing data, or tracking platforms can lead to the spoilage of vaccines, blood products, and other temperature-sensitive medications [3]. These disruptions not only waste valuable resources but also directly endanger patient safety by compromising the quality and availability of essential medical supplies. AI-powered monitoring solutions are proving effective in this area, reducing spoilage and compliance issues by continuously tracking factors like temperature and transit times while providing real-time alerts for anomalies [3].
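The continuous temperature tracking described above can be sketched as a simple excursion check over data-logger readings. This is a minimal illustration, not the method of any product cited here: the 2-8 °C bounds reflect the refrigerated range commonly specified for many vaccines, while the 30-minute tolerance and the `Reading` type are hypothetical and should come from each product's own storage requirements.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Reading:
    at: datetime      # timestamp reported by the shipment's data logger
    temp_c: float     # temperature in degrees Celsius


def excursions(readings, low=2.0, high=8.0, max_minutes=30):
    """Return (start, end) spans where the temperature stayed outside
    [low, high] for longer than max_minutes (hypothetical tolerance)."""
    limit = timedelta(minutes=max_minutes)
    spans, start, last = [], None, None
    for r in sorted(readings, key=lambda r: r.at):
        out_of_range = not (low <= r.temp_c <= high)
        if out_of_range and start is None:
            start = r.at                      # excursion begins
        elif not out_of_range and start is not None:
            if r.at - start > limit:          # back in range: was it too long?
                spans.append((start, r.at))
            start = None
        last = r.at
    # An excursion still in progress at the last reading also counts.
    if start is not None and last - start > limit:
        spans.append((start, last))
    return spans
```

Short blips that return to range within the tolerance are ignored, which keeps brief door openings from generating alerts.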

AI Technologies for Supply Chain Incident Detection

AI has changed how supply chain incidents are identified, offering faster and more precise detection. By analyzing vendor behavior, predicting risks, processing threat intelligence, and automating responses, AI tools strengthen healthcare supply chain security in real time.

Anomaly Detection for Vendor Activity Monitoring

Machine learning models are trained to understand typical vendor behavior by analyzing historical data, such as access logs, API traffic, VPN usage, and data transfers. These models flag anomalies like logins from unusual locations, unexpected PHI transfers, or system access during off-hours. Unlike traditional rule-based systems that rely on predefined thresholds, machine learning adapts by learning patterns from past data, making it capable of spotting subtle, previously unseen irregularities. This adaptability is crucial for managing the ever-changing dynamics of healthcare supply chains. For instance, Censinet RiskOps™ uses AI to monitor risks across a network of healthcare delivery organizations (HDOs) and over 50,000 vendors and products, enabling early detection of suspicious activity before it escalates into a breach [1].
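As a minimal sketch of the baseline-and-deviation idea, the example below learns each vendor's usual login countries, active hours, and transfer volumes from history, then explains why a new event deviates. It is a simple statistical stand-in for the machine learning models described above, not Censinet's method; the `AccessEvent` fields, the 3-standard-deviation threshold, and the 07:00-19:00 business-hours window are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class AccessEvent:
    vendor: str
    country: str          # geolocated source of the login
    hour: int             # 0-23, in the HDO's local time
    mb_transferred: float


class VendorBaseline:
    """Learns each vendor's normal behavior from historical events and
    explains why a new event deviates from it."""

    def __init__(self, z_threshold=3.0, business_hours=range(7, 19)):
        self.z_threshold = z_threshold
        self.business_hours = business_hours
        self.profiles = {}

    def fit(self, history):
        by_vendor = {}
        for ev in history:
            by_vendor.setdefault(ev.vendor, []).append(ev)
        for vendor, events in by_vendor.items():
            volumes = [e.mb_transferred for e in events]
            self.profiles[vendor] = {
                "countries": {e.country for e in events},
                "hours": {e.hour for e in events},
                "mean_mb": mean(volumes),
                # stdev needs at least two samples
                "std_mb": stdev(volumes) if len(volumes) > 1 else 0.0,
            }
        return self

    def explain(self, ev):
        """Return reasons the event looks anomalous (empty list if normal)."""
        profile = self.profiles.get(ev.vendor)
        if profile is None:
            return ["no baseline for vendor"]
        reasons = []
        if ev.country not in profile["countries"]:
            reasons.append(f"login from new country {ev.country}")
        if ev.hour not in profile["hours"] and ev.hour not in self.business_hours:
            reasons.append(f"off-hours access at {ev.hour:02d}:00")
        if profile["std_mb"] > 0:
            z = (ev.mb_transferred - profile["mean_mb"]) / profile["std_mb"]
            if z > self.z_threshold:
                reasons.append(f"transfer volume {z:.1f} std devs above baseline")
        return reasons
```

Returning reasons rather than a bare score keeps each alert explainable for the analyst who has to act on it.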

Predictive Risk Scoring for Third-Party Vendors

AI goes beyond anomaly detection by assigning predictive risk scores to vendors. The scores draw on a range of data sources, including security assessments, vulnerability scans, threat intelligence, and financial health metrics, and estimate both the likelihood and the potential impact of a security incident, helping teams prioritize which vendors need immediate attention. Related healthcare supply chain research shows that machine learning models such as Random Forest can produce more accurate forecasts while reducing costs [2]. Censinet's platform integrates real-time shared risk data, enabling dynamic vendor threat assessments and faster response times across the supply chain [1].
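Likelihood and impact can be combined into a single score in many ways. One minimal sketch, assuming a logistic model for likelihood and a hand-set impact scale, is shown below; the feature names, weights, and bias are hypothetical placeholders that a real program would fit to its own incident history.

```python
import math
from dataclasses import dataclass, field

# Hypothetical weights; in practice these would be learned from past incidents.
WEIGHTS = {
    "open_critical_vulns": 0.6,
    "failed_assessment_controls": 0.3,
    "threat_intel_mentions": 0.8,
    "financial_distress": 1.0,
}
BIAS = -4.0


@dataclass
class Vendor:
    name: str
    handles_phi: bool
    clinical_criticality: int                     # 1 (low) to 5 (life-critical)
    features: dict = field(default_factory=dict)  # keys drawn from WEIGHTS


def likelihood(features):
    """Logistic model: probability-like value between 0 and 1."""
    z = BIAS + sum(WEIGHTS[k] * features.get(k, 0) for k in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def impact(vendor):
    """Criticality scaled to 0.2-1.0, plus 0.2 for PHI access, capped at 1.0."""
    base = vendor.clinical_criticality / 5
    return min(1.0, base + (0.2 if vendor.handles_phi else 0.0))


def risk_score(vendor):
    """0-100 score: likelihood times impact."""
    return round(100 * likelihood(vendor.features) * impact(vendor), 1)


def prioritize(vendors):
    return sorted(vendors, key=risk_score, reverse=True)
```

Multiplying the two factors means a likely but low-impact issue at a non-clinical vendor can rank below a less likely issue at a vendor that hosts PHI.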

Natural Language Processing for Threat Intelligence

Natural language processing (NLP) algorithms streamline the review of regulatory advisories, security notices, vulnerability bulletins, and even contract terms. This automation allows organizations to identify new risks, obligations, or weaknesses far more quickly than manual methods, often within hours instead of days [5]. Sector guidance points the same way: the Health Sector Coordinating Council (HSCC) is crafting AI cybersecurity guidance for 2026, aiming to address "hidden AI risks across layered vendor supply chains." Its approach emphasizes continuous monitoring of AI systems and rigorous vendor oversight [5][8]. By integrating NLP insights into everyday workflows, organizations gain a more comprehensive view of emerging threats, complementing anomaly detection and risk scoring.
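Production NLP pipelines typically rely on trained language models, but the core step, turning free-text advisories into structured, vendor-linked findings, can be illustrated with a rule-based sketch. The regular expressions, the `inventory` mapping, and the first-severity-word heuristic below are simplifying assumptions, not a description of any cited tool.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

CVE_RE = re.compile(r"\bCVE-\d{4}-\d{4,}\b", re.IGNORECASE)
SEVERITY_RE = re.compile(r"\b(critical|high|medium|low)\b", re.IGNORECASE)


@dataclass
class AdvisoryFinding:
    cves: list
    severity: Optional[str]
    affected_vendors: list = field(default_factory=list)


def triage_advisory(text, inventory):
    """Extract CVE IDs and a severity word from an advisory, and match it
    against the vendor inventory (vendor name -> product names in use)."""
    cves = sorted({c.upper() for c in CVE_RE.findall(text)})
    match = SEVERITY_RE.search(text)          # first severity word wins
    severity = match.group(1).lower() if match else None
    lowered = text.lower()
    affected = [
        vendor
        for vendor, products in inventory.items()
        if vendor.lower() in lowered or any(p.lower() in lowered for p in products)
    ]
    return AdvisoryFinding(cves, severity, affected)
```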

Automated Incident Triage and Reporting

When a potential supply chain incident arises, AI can automatically link related alerts, assess severity, and direct findings to the right teams for action. This automation ensures that critical risks and vendor-related incidents are promptly escalated to key stakeholders, including AI governance committees, without requiring manual effort. Tools like Censinet AI enhance collaboration by enabling advanced routing and orchestration across Governance, Risk, and Compliance (GRC) teams, with real-time data displayed in an intuitive risk dashboard [1]. Integrating AI outputs into platforms like SIEM, SOAR, and GRC systems ensures that third-party vendor incidents are handled efficiently, reducing response and triage times.
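The correlate-score-route flow described above might look like the following minimal sketch. The alert kinds, the one-hour correlation window, the severity rules, and the team names in `ROUTES` are all hypothetical; in practice they would come from the organization's incident response runbooks.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Alert:
    vendor: str
    kind: str        # e.g. "anomalous_login", "phi_transfer", "advisory"
    score: float     # 0-1 confidence from the upstream detector
    at: datetime


# Hypothetical routing table mapping severity to receiving teams.
ROUTES = {
    "critical": ["soc_on_call", "ai_governance_committee"],
    "high": ["soc_on_call", "vendor_risk_team"],
    "medium": ["vendor_risk_team"],
    "low": ["weekly_review_queue"],
}


def correlate(alerts, window=timedelta(hours=1)):
    """Group each vendor's alerts into incidents; an alert joins the current
    incident if it arrives within `window` of the previous alert."""
    by_vendor = defaultdict(list)
    for a in sorted(alerts, key=lambda a: a.at):
        by_vendor[a.vendor].append(a)
    incidents = []
    for items in by_vendor.values():
        current = [items[0]]
        for a in items[1:]:
            if a.at - current[-1].at <= window:
                current.append(a)
            else:
                incidents.append(current)
                current = [a]
        incidents.append(current)
    return incidents


def severity(incident):
    kinds = {a.kind for a in incident}
    top = max(a.score for a in incident)
    if "phi_transfer" in kinds and len(kinds) > 1:
        return "critical"   # PHI movement corroborated by another signal
    if top >= 0.8 or len(kinds) > 1:
        return "high"
    if top >= 0.5:
        return "medium"
    return "low"


def triage(alerts):
    """Return (vendor, severity, teams, alert_count) for each incident."""
    results = []
    for incident in correlate(alerts):
        level = severity(incident)
        results.append((incident[0].vendor, level, ROUTES[level], len(incident)))
    return results
```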

Implementing AI-Driven Incident Detection Programs

Using AI to detect supply chain incidents in healthcare requires careful planning, strong governance, seamless technical integration, and strict privacy measures that comply with healthcare regulations.

Governance and AI Oversight Requirements

To effectively oversee AI use, healthcare organizations should establish an AI governance committee. This group should include key stakeholders such as the Chief Information Security Officer (CISO), Chief Information Officer (CIO), Chief Medical Information Officer (CMIO), supply chain leaders, compliance officers, legal experts, and clinical representatives. Their role is to evaluate AI use cases, determine acceptable risk levels, and supervise how AI systems identify and respond to supply chain incidents. The Health Sector Coordinating Council (HSCC) emphasizes the importance of keeping "humans in the loop" for healthcare cybersecurity [5], ensuring that final decisions rest with security analysts and clinicians, not automated systems.

Organizations should also develop a comprehensive AI risk management policy. This policy should outline details like data sources, training methods, model limitations, and testing results. It must also address critical aspects such as explainability, audit logging, and human oversight. AI tools and vendors should be classified as high-risk, requiring thorough due diligence on factors like model supply chains, the integrity of training data, update processes, and vulnerability management. With AI supply chain attacks surging by 156% in a single year [7] and data poisoning potentially impacting up to 35% of model predictions [4], robust governance is essential to prevent AI systems from becoming security risks.
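Due diligence on model supply chains and training-data integrity can include a simple technical control: recording SHA-256 digests of approved model files and datasets, then verifying them before each deployment or retraining run. The sketch below assumes a manifest mapping relative paths to hex digests; the manifest format and file names are hypothetical.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_file(path, chunk_size=1 << 20):
    """Stream a file through SHA-256 so large model files are never
    loaded into memory all at once."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifacts(manifest, root):
    """Compare files under root with a manifest of approved SHA-256 digests
    (relative POSIX path -> hex digest). Returns a list of problems."""
    root = Path(root)
    problems = []
    for rel, expected in manifest.items():
        path = root / rel
        if not path.is_file():
            problems.append(f"missing: {rel}")
        elif not hmac.compare_digest(sha256_file(path), expected.lower()):
            problems.append(f"hash mismatch: {rel}")
    # Files nobody approved (e.g. a swapped-in dataset) are flagged too.
    for path in sorted(root.rglob("*")):
        rel = path.relative_to(root).as_posix()
        if path.is_file() and rel not in manifest:
            problems.append(f"unexpected file: {rel}")
    return problems
```

A digest check detects tampering after approval; it cannot detect data that was already poisoned when the manifest was recorded, so it complements review of data provenance rather than replacing it.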

Once governance structures are in place, the next step is to integrate AI into existing security frameworks.

Integrating AI with Security and Risk Management Systems

AI-generated alerts should feed directly into Security Information and Event Management (SIEM) platforms and automate workflows using Security Orchestration, Automation, and Response (SOAR) systems. This integration allows organizations to trigger playbooks that can isolate compromised vendors or notify relevant teams in real time.
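A minimal sketch of that hand-off, assuming alerts are emitted to the SIEM as JSON lines and that playbooks are simple lists of actions, is shown below. The playbook and action names are hypothetical placeholders rather than any SOAR product's API. Disruptive actions such as suspending a vendor's access wait for analyst approval, in keeping with the HSCC's emphasis on keeping humans in the loop [5].

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class VendorAlert:
    vendor: str
    rule: str
    severity: str   # "critical", "high", "medium", or "low"
    detail: str


# Hypothetical playbooks; action names stand in for whatever actions the
# organization's SOAR platform actually exposes.
PLAYBOOKS = {
    "critical": ["suspend_vendor_vpn_account", "page_soc_on_call", "open_grc_ticket"],
    "high": ["page_soc_on_call", "open_grc_ticket"],
    "medium": ["open_grc_ticket"],
}
# Disruptive actions that wait for an analyst's sign-off.
REQUIRES_APPROVAL = {"suspend_vendor_vpn_account"}


def to_siem_event(alert):
    """Serialize an alert as one JSON line for SIEM ingestion."""
    event = {"timestamp": datetime.now(timezone.utc).isoformat(),
             "source": "ai-vendor-monitor", **asdict(alert)}
    return json.dumps(event, sort_keys=True)


def plan_response(alert, approved_by=None):
    """Split the matching playbook into actions to run now and actions
    held for approval. Severities without a playbook yield no actions."""
    run_now, pending = [], []
    for action in PLAYBOOKS.get(alert.severity, []):
        if action in REQUIRES_APPROVAL and approved_by is None:
            pending.append(action)
        else:
            run_now.append(action)
    return {"run_now": run_now, "pending_approval": pending}
```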

A practical example is Censinet RiskOps™, which consolidates AI findings into a centralized risk dashboard. This streamlines coordination across Governance, Risk, and Compliance (GRC) teams. Terry Grogan, CISO at Tower Health, shared:

"Censinet RiskOps allowed 3 FTEs to go back to their real jobs! Now we do a lot more risk assessments with only 2 FTEs required."[1]

This efficiency comes from embedding AI outputs into existing workflows instead of treating them as isolated alerts. With integration handled, protecting sensitive patient data becomes the next priority.

Protecting Patient Data and Ensuring AI Safety

Safeguarding patient data is a cornerstone of AI-driven incident detection. Organizations should minimize the use of Protected Health Information (PHI) by default, opting for de-identified or pseudonymized datasets whenever possible. When PHI is unavoidable, robust controls must be in place. These include enforcing least-privilege access, encrypting data in transit with TLS 1.2 or higher, encrypting data at rest, logging all PHI access, and establishing Business Associate Agreements (BAAs) with AI vendors that handle sensitive information.
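For the in-transit requirement, Python's standard `ssl` module can enforce a TLS 1.2 floor on outbound connections to AI vendors. A minimal sketch; the function name is illustrative.

```python
import ssl


def make_client_context(cafile=None):
    """TLS client context for calls to AI vendor APIs that carry PHI."""
    ctx = ssl.create_default_context(cafile=cafile)
    # Refuse TLS 1.0/1.1; only TLS 1.2 or newer can be negotiated.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # create_default_context already sets these; restating them documents intent.
    ctx.check_hostname = True
    ctx.verify_mode = ssl.CERT_REQUIRED
    return ctx
```

The resulting context can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=ctx)`.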

Privacy-preserving techniques - such as data aggregation, tokenization, or relying on low-resolution metadata instead of raw clinical data - can further reduce risks while maintaining the analytical value of the data. Additionally, organizations must ensure that data retention and destruction policies comply with HIPAA and state privacy laws. AI training datasets containing PHI should be subject to strict change control and audit procedures to maintain compliance and security.
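The sketch below illustrates two of these techniques under stated assumptions: keyed hashing (HMAC-SHA256) to replace medical record numbers with stable tokens, and aggregation of records into per-facility daily counts. The field names and the list of direct identifiers are hypothetical. Pseudonymized data is not automatically de-identified under HIPAA, so whether it still counts as PHI depends on the organization's de-identification method, and the key must be stored separately from the data.

```python
import hashlib
import hmac
from collections import Counter

# Hypothetical set of direct identifiers dropped before analysis.
DIRECT_IDENTIFIERS = {"patient_name", "mrn", "dob", "address", "phone"}


def pseudonymize(identifier, key):
    """HMAC-SHA256 token, truncated to 16 hex characters: stable for the same
    input and key, and not recomputable by anyone who lacks the key."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def minimize(record, key):
    """Drop direct identifiers, keeping a token so events for the same
    patient can still be linked."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    if "mrn" in record:
        out["patient_token"] = pseudonymize(record["mrn"], key)
    return out


def aggregate(records, by=("facility", "date")):
    """Low-resolution view: record counts per facility per day."""
    return Counter(tuple(r[k] for k in by) for r in records)
```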


Measuring Performance and Improving Detection Programs

Healthcare organizations need to monitor specific metrics to evaluate how well AI-driven incident detection systems perform. These metrics not only validate AI's role in identifying anomalies but also highlight its impact on securing healthcare supply chains. The Health Sector Coordinating Council advises setting clear performance indicators from the start and conducting regular evaluations to ensure AI systems remain safe, effective, and aligned with risk management goals [5].

Key Metrics for AI Detection Programs

To measure success, organizations should focus on two categories: model-level metrics (like precision, recall, and model drift) and process-level metrics (such as the time between alert and triage, triage to containment, and incidents caught before they disrupt patient care or compromise PHI).
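Model drift is often tracked with the Population Stability Index (PSI), which compares the distribution of recent model scores with a baseline. PSI is an illustrative technique chosen here rather than one the cited sources prescribe; the sketch uses equal-width bins for simplicity (quantile bins are also common), and the commonly cited rule of thumb (below 0.1 stable, above 0.25 significant shift) is a convention, not a standard.

```python
import math


def psi(baseline, recent, bins=10, eps=1e-6):
    """Population Stability Index between two non-empty samples of model
    scores. Higher values mean the recent distribution has moved further."""
    lo = min(min(baseline), min(recent))
    hi = max(max(baseline), max(recent))
    width = (hi - lo) / bins or 1.0   # guard against all-identical values

    def shares(values):
        counts = [0] * bins
        for v in values:
            counts[min(int((v - lo) / width), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    b, r = shares(baseline), shares(recent)
    return sum((ri - bi) * math.log(ri / bi) for bi, ri in zip(b, r))
```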

A close watch on false positive and negative rates is crucial, as overreliance on AI predictions can lead to a false sense of security. Experts warn that AI systems may "produce predictions with an illusion of certainty", making it vital to evaluate performance rigorously [4]. Tracking the usefulness of alerts - measured by how many AI-generated alerts lead to real security actions - is another key metric, along with identifying how often incidents go undetected.
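The ratios behind these metrics are straightforward to compute once analysts record a disposition for each alert. A minimal sketch, assuming the counts are collected over a review period; the field names are hypothetical, and reporting 0.0 when a denominator is zero is a design choice.

```python
from dataclasses import dataclass


@dataclass
class AlertOutcomes:
    true_positives: int    # alerts confirmed as real incidents
    false_positives: int   # alerts dismissed after review
    false_negatives: int   # incidents discovered later that raised no alert
    actioned_alerts: int   # alerts that led to a concrete security action
    total_alerts: int


def _ratio(num, den):
    return num / den if den else 0.0   # 0.0 when there is nothing to measure


def detection_metrics(o):
    incidents = o.true_positives + o.false_negatives
    return {
        "precision": _ratio(o.true_positives, o.true_positives + o.false_positives),
        "recall": _ratio(o.true_positives, incidents),
        "miss_rate": _ratio(o.false_negatives, incidents),
        "alert_usefulness": _ratio(o.actioned_alerts, o.total_alerts),
    }
```

For example, 200 alerts with 40 confirmed incidents, 160 dismissals, 10 incidents found later without an alert, and 60 alerts that triggered an action give a precision of 0.2, recall of 0.8, miss rate of 0.2, and alert usefulness of 0.3.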

Financial and operational outcomes are equally important. Healthcare organizations face significant costs from breaches, with supply chain vulnerabilities playing a major role [4]. Metrics like AI's ability to reduce breach risks, lower logistics expenses, and prevent stock shortages help translate technical performance into tangible business benefits. For example, AI-driven predictive models with low Mean Absolute Error (MAE) in demand forecasting have been shown to cut down stock-outs and reduce logistics costs [2].
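Mean absolute error is the average size of the forecast miss, MAE = (1/n) * sum(|actual_i - forecast_i|), expressed in the same units as demand, so lower is better. A minimal sketch with illustrative, made-up numbers:

```python
def mean_absolute_error(actual, forecast):
    """Average absolute forecast miss, in the same units as demand."""
    if not actual or len(actual) != len(forecast):
        raise ValueError("need two non-empty series of equal length")
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)
```

For actual weekly demand of 110, 115, and 90 units against forecasts of 100, 120, and 90, the absolute errors are 10, 5, and 0, so the MAE is 5 units.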

These internal measurements can then be used as a foundation for benchmarking against industry standards.

Using Benchmarks and Feedback for Improvement

External benchmarks and consistent feedback are essential for driving ongoing improvements. By comparing their detection capabilities to industry standards, healthcare organizations can identify weaknesses and secure the resources they need. Brian Sterud, CIO at Faith Regional Health, emphasized the importance of this approach:

"Benchmarking against industry standards helps us advocate for the right resources and ensures we are leading where it matters." [1]

Platforms like Censinet RiskOps facilitate collaboration by connecting healthcare organizations with a network of over 50,000 vendors and products. This setup allows for secure sharing of cybersecurity and risk data [1]. Such networks encourage feedback loops where organizations can exchange insights on detection patterns, threat intelligence, and response strategies. James Case, VP & CISO at Baptist Health, highlighted the benefits of this collaboration:

"Not only did we get rid of spreadsheets, but we have that larger community [of hospitals] to partner and work with." [1]

To maintain AI systems' effectiveness, organizations should conduct quarterly model validations using held-out data and real-world incidents [5]. Feedback from security analysts, supply chain teams, and clinicians - collected through post-incident reviews and regular surveys - can help refine detection thresholds, reduce false positives, and adjust models to address evolving threats. With AI supply chain attacks surging by 156% in a single year [7], this continuous improvement process is critical for staying ahead of threats and ensuring reliable detection capabilities.
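Refining detection thresholds from analyst feedback can be sketched as choosing, on a held-out set of analyst-labeled alerts, the highest score threshold that still meets a recall target. Because raising the threshold can only remove alerts, that choice yields the fewest false positives among thresholds that meet the target. The recall target and data layout are assumptions for illustration.

```python
def tune_threshold(scored, min_recall=0.9):
    """scored: (model_score, confirmed) pairs from held-out alerts, where
    confirmed is True if analysts verified a real incident.
    Returns (threshold, recall, false_positives) for the highest threshold
    whose recall is at least min_recall."""
    if not 0 < min_recall <= 1:
        raise ValueError("min_recall must be in (0, 1]")
    positives = sum(1 for _, confirmed in scored if confirmed)
    if positives == 0:
        raise ValueError("held-out set contains no confirmed incidents")
    # Walk candidate thresholds from strictest to loosest.
    for t in sorted({s for s, _ in scored}, reverse=True):
        tp = sum(1 for s, c in scored if c and s >= t)
        if tp / positives >= min_recall:
            fp = sum(1 for s, c in scored if not c and s >= t)
            return t, tp / positives, fp
    # Not reached: the lowest score flags every alert, giving recall 1.0.
    raise AssertionError("unreachable")
```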

Conclusion

AI is shifting supply chain incident detection from reactive, manual processes to continuous, real-time monitoring that can flag disruptions before they affect patient care. Organizations like Tower Health are already seeing this shift, handling a greater volume of risk assessments with fewer full-time employees [1]. These efficiency gains free staff to focus on protecting patient data, clinical systems, and medical device supply chains, and they set the stage for lasting improvements in security practices.

Achieving long-term success, however, requires strong governance. This includes establishing formal AI oversight committees that bring together CISOs, supply chain leaders, clinicians, and compliance officers. These committees should align their efforts with sector-specific guidance, such as the Health Sector Coordinating Council's AI security principles, which stress transparency, accountability, and ongoing evaluation [4][5]. At the same time, privacy-by-design principles - like data minimization, de-identification, and robust access controls - are essential for ensuring AI-driven monitoring complies with regulations like HIPAA, HITECH, and state privacy laws [4][9].

Collaboration is another key piece of the puzzle. Platforms like Censinet RiskOps enable healthcare organizations to securely share cybersecurity and risk data with a network of over 50,000 vendors and products [1]. This collective intelligence is vital for staying ahead of rapidly evolving threats, especially as AI supply chain attacks have surged by 156% in just one year [7].

The business case for AI in supply chain management is strong: it can reduce the risk of breaches, cut logistics costs, and prevent stock shortages that could jeopardize patient safety [2][4]. By combining accurate demand forecasting with real-time anomaly detection, healthcare organizations can respond quickly, maintain optimal inventory levels, and identify threats early [2]. Regular model validations, feedback from security and clinical teams, and benchmarking against industry standards help keep these AI systems effective and aligned with the mission of safeguarding patients.

FAQs

How does AI enhance incident detection in healthcare supply chains?

AI plays a key role in improving incident detection within healthcare supply chains by processing vast amounts of data in real time. It can spot unusual patterns, risks, or disruptions - like inventory shortages, shipment delays, or supplier issues - much faster than traditional manual methods. This means healthcare organizations can address problems before they escalate.

With enhanced visibility and automated detection, AI helps cut down delays, lower costs, and ensure essential supplies are delivered promptly. This efficiency directly contributes to maintaining reliable patient care.

What are the key risks of using AI in healthcare supply chains?

Integrating AI into healthcare supply chains brings clear benefits, but it also introduces risks that need careful attention. These include cybersecurity threats, data breaches, supply chain interruptions, and AI-specific risks such as data poisoning, in which manipulated training data degrades model performance without immediate detection. Any of these issues can jeopardize patient safety and cause financial losses. In addition, inaccurate or late incident detection can slow response times and create operational bottlenecks.

To address these challenges, healthcare organizations must prioritize monitoring and securing AI systems. Ensuring these systems are dependable and operate as intended is key. By implementing strong risk management strategies, organizations can safeguard sensitive information and maintain confidence in their supply chain operations.

How can healthcare organizations ensure the safe and compliant use of AI?

Healthcare organizations can use AI responsibly and securely by implementing targeted risk management strategies designed for the specific demands of the healthcare field. This often involves using specialized tools that address risks tied to patient data, protected health information (PHI), clinical systems, and supply chain operations.

Here are some important practices to consider:

- Perform regular cybersecurity evaluations to uncover and fix potential weaknesses.

- Apply strict access controls to safeguard sensitive data.

- Stay compliant with privacy laws like HIPAA by maintaining ongoing monitoring and making necessary updates.

- Adopt collaborative risk management approaches to improve how incidents are detected and handled.

Taking these proactive steps and aligning with established privacy regulations helps healthcare organizations protect critical data while effectively leveraging the potential of AI technologies.