The AI Triangle: Balancing People, Process, and Technology for Success

Post Summary

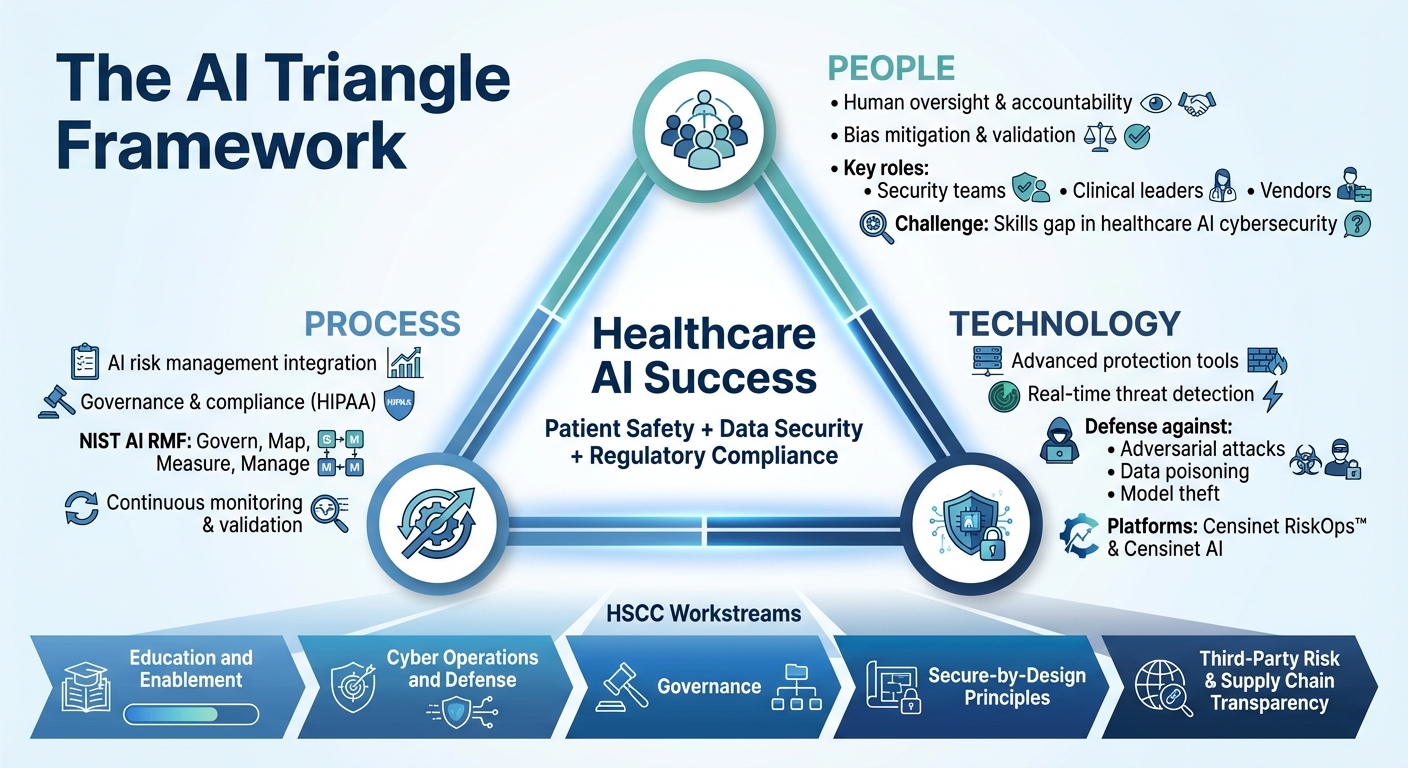

Healthcare organizations are increasingly using AI to improve operations, but this progress comes with cybersecurity risks. The AI Triangle - a framework of people, process, and technology - offers a balanced approach to managing these risks. Here's the core idea:

- People: Human oversight ensures accountability, mitigates bias, and validates AI outputs.

- Process: AI risk management should be integrated into existing workflows, with a focus on governance and compliance with regulations like HIPAA.

- Technology: Advanced tools protect AI systems from threats like adversarial attacks and data poisoning.

With AI's growing role in healthcare, aligning these three components is critical to safeguard patient data, maintain trust, and meet regulatory standards. Tools like Censinet RiskOps™ and Censinet AI streamline risk assessments while maintaining necessary human involvement. Success depends on clear governance, skilled teams, and effective workflows working together.

The AI Triangle Framework: People, Process, and Technology in Healthcare Cybersecurity

The 3 Components of the AI Triangle: People, Process, and Technology

To manage the unique risks AI introduces to healthcare cybersecurity, it’s essential to understand how the three components of the AI Triangle - people, process, and technology - work together. Bias, limited explainability, and compromised model integrity pose real threats to patient safety and data security. When the three components are aligned, they form a strong defense against such vulnerabilities.

The Health Sector Coordinating Council (HSCC) organizes its AI cybersecurity work into five workstreams: Education and Enablement, Cyber Operations and Defense, Governance, Secure-by-Design Principles, and Third-Party AI Risk and Supply Chain Transparency. Together, these workstreams show how people, processes, and technology must be integrated to adopt AI responsibly [1], connecting strategy with execution across governance, operational workflows, and technical safeguards.

People: The Role of Human Oversight

Human oversight is the cornerstone of addressing AI risks in healthcare cybersecurity. When AI systems operate as "black box" models whose decision-making logic isn’t transparent, human judgment plays a critical role [3]. While AI can handle routine tasks, people need to focus on more complex and strategic threat responses. This oversight ensures that biases in AI models are identified and corrected, that outcomes can be explained to both clinicians and patients, and that model integrity is maintained through ongoing validation.

Key players include security teams monitoring AI systems for irregularities, clinical leaders evaluating AI recommendations against patient care standards, and vendors developing AI tools with built-in security and ethical transparency. However, a significant challenge lies in the shortage of professionals with expertise in both healthcare and AI cybersecurity [5]. Clearly defined roles and responsibilities are essential to ensure everyone involved understands how to manage AI-related risks effectively.

With human oversight as the foundation, well-structured processes are the next step in securing AI operations.

Process: Building Risk Management Workflows

Strong processes are essential for managing AI risks and should be rooted in governance frameworks and incident response protocols tailored specifically to AI systems. Rather than being treated as a separate function, AI risk management should be integrated into existing cybersecurity operations. This ensures that organizations have procedures in place to validate AI model outputs, document decision-making for audits, and respond to AI-specific threats such as data poisoning and adversarial attacks.

Rather than relying on reactive measures, organizations need proactive workflows that continuously train, validate, and monitor AI models to meet regulatory requirements [3][4]. These processes should also encourage transparent reporting of AI errors, fostering a no-blame culture that supports accountability and improvement.

Technology: Tools for Managing AI Risks

The technology component includes both the AI systems themselves and the cybersecurity tools designed to protect them. AI-enabled platforms can help address healthcare’s security challenges by offering real-time threat detection, automated risk assessments, and centralized visibility across complex IT environments. Following secure-by-design principles, such tools build security in from the start rather than adding it afterward [1].

Platforms like Censinet RiskOps™ and Censinet AI streamline risk management workflows while maintaining critical human oversight. They help keep AI inventories up to date, map data dependencies clearly, and apply risk-based safeguards. They also support explainability by generating audit trails and documentation that record how AI systems arrive at their conclusions, which is key for regulatory compliance and for earning trust among clinical teams. When combined with skilled professionals and well-designed processes, these technological safeguards complete the AI Triangle, creating a comprehensive approach to healthcare cybersecurity.
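As a rough illustration of what a risk-based AI inventory can look like, the Python sketch below records each AI system with its data dependencies and derives the required safeguards from two attributes: whether it processes PHI and whether its outputs influence clinical care. The `AISystem` fields, the three-tier scheme, the safeguard lists, and the sample entries are all hypothetical choices for illustration; they are not Censinet's data model or a regulatory classification.

```python
from dataclasses import dataclass, field


@dataclass
class AISystem:
    """One entry in a hypothetical AI inventory."""
    name: str
    vendor: str
    processes_phi: bool             # does the system access protected health information?
    influences_clinical_care: bool  # do its outputs feed clinical decisions?
    data_dependencies: list[str] = field(default_factory=list)  # upstream data sources


# Illustrative (assumed) policy: safeguards required at each risk tier.
SAFEGUARDS = {
    "low": ["inventory entry", "annual review"],
    "medium": ["inventory entry", "annual review", "access logging"],
    "high": ["inventory entry", "quarterly review", "access logging",
             "clinician sign-off on outputs", "drift monitoring"],
}


def risk_tier(system: AISystem) -> str:
    """PHI access plus clinical impact -> high; either one alone -> medium."""
    if system.processes_phi and system.influences_clinical_care:
        return "high"
    if system.processes_phi or system.influences_clinical_care:
        return "medium"
    return "low"


def required_safeguards(system: AISystem) -> list[str]:
    return SAFEGUARDS[risk_tier(system)]


if __name__ == "__main__":
    # Hypothetical inventory entries
    inventory = [
        AISystem("sepsis-alert", "ExampleVendor", True, True, ["EHR vitals feed", "lab results"]),
        AISystem("scheduling-optimizer", "ExampleVendor", False, False, ["appointment calendar"]),
    ]
    for s in inventory:
        print(f"{s.name}: {risk_tier(s)} -> {', '.join(required_safeguards(s))}")
```

Keeping the tiering rule in a single function makes the policy easy for a governance committee to review; a real program would take its tiers and safeguards from the organization's own policy.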

People: Building Skills and Governance for AI Risk

Stakeholder Roles and Responsibilities

Effectively managing AI risks in healthcare requires a clear division of responsibilities across all levels of an organization. Executive leadership plays a crucial role in overseeing cybersecurity preparedness and keeping the governing body informed about AI usage, outcomes, and any incidents that arise [6][7]. Leaders must stay up to date on how AI systems are performing and the risks they introduce.

IT teams are at the core of AI governance. Cybersecurity experts, data privacy specialists, and system administrators work together to implement technical safeguards, monitor system behavior, detect anomalies, and respond to potential issues. Meanwhile, clinical leaders provide critical insights into patient care workflows and safety standards. They assess whether AI recommendations align with established clinical practices and flag instances where AI outputs could negatively affect patient outcomes.

To ensure accountability, organizations should appoint dedicated AI experts responsible for overseeing the entire AI lifecycle [6]. Without this focused leadership, accountability can falter, increasing the likelihood of risks going unchecked.

This structured approach to assigning roles lays the groundwork for building the necessary skills across teams.

Required Skills for AI Risk Management

Once roles are defined, the next step is equipping teams with the skills needed to manage AI risks effectively. Addressing the healthcare AI skills gap requires more than just basic cybersecurity knowledge. Staff must gain a thorough understanding of AI fundamentals, its applications in healthcare, and the specific risks it introduces [1]. For instance, teams should learn to identify issues like model drift, adversarial attacks, and vulnerabilities in training data that can lead to bias or security weaknesses.
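Model drift is easier to reason about with a concrete check. One common technique is the Population Stability Index (PSI), which compares how a model's inputs or scores are spread across bins in a baseline sample versus a recent sample. The sketch below assumes fixed bin edges and uses the widely cited 0.1 / 0.25 rule-of-thumb thresholds, which are conventions rather than regulatory requirements; the sample scores are hypothetical.

```python
import bisect
import math
from typing import Sequence


def bin_proportions(values: Sequence[float], edges: Sequence[float], eps: float) -> list[float]:
    """Share of values in each bin; out-of-range values fall into the end bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        i = min(max(i, 0), len(counts) - 1)  # clamp to the first/last bin
        counts[i] += 1
    # eps keeps empty bins from producing log(0) or division by zero
    return [max(c / len(values), eps) for c in counts]


def psi(baseline: Sequence[float], recent: Sequence[float],
        edges: Sequence[float], eps: float = 1e-4) -> float:
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    e = bin_proportions(baseline, edges, eps)
    a = bin_proportions(recent, edges, eps)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


def drift_level(value: float) -> str:
    # Common rule of thumb, not a regulatory threshold
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "moderate"
    return "significant"


if __name__ == "__main__":
    edges = [0.0, 0.25, 0.5, 0.75, 1.0]   # bins for a model risk score in [0, 1]
    baseline = [0.1, 0.3, 0.6, 0.9] * 25  # scores at validation time (hypothetical)
    recent = [0.6, 0.8, 0.9, 0.95] * 25   # scores this month (hypothetical)
    value = psi(baseline, recent, edges)
    print(f"PSI = {value:.3f} ({drift_level(value)})")
```

A PSI check flags that the population has shifted, not that the model is wrong; a "significant" result is a trigger for the human revalidation described above.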

Organizations should invest in ongoing, structured training programs that focus on developing technical expertise, ethical judgment, and compliance know-how [1][8][9][10][11]. It’s not just about understanding how AI functions - it’s about knowing how it can fail and what those failures could mean for patient safety and data security.

Creating AI Governance Structures

Establishing robust governance structures is essential for integrating human oversight into AI processes, and that requires formal accountability frameworks. The Health Sector Coordinating Council (HSCC) is developing AI cybersecurity guidance for 2026, with a Governance subgroup focused on crafting a comprehensive framework for managing AI risks in healthcare [1]. Additionally, in December 2025, the U.S. Department of Health and Human Services (HHS) introduced its AI Strategy, which includes "Ensure governance and risk management for public trust" as a central pillar [2][12].

Healthcare organizations should form AI governance committees that include executives, IT specialists, clinical leaders, and compliance officers. These committees are instrumental in operationalizing AI governance, ensuring accountability, and safeguarding both patients and the organization.

Process: Adding AI to Risk Management Workflows

Aligning AI Risk with U.S. Standards

The NIST AI Risk Management Framework (AI RMF) defines four core functions (Govern, Map, Measure, and Manage) that provide a structured approach to addressing AI risks in healthcare. Paired with the upcoming HSCC 2026 guidance, these functions offer a roadmap for evaluating and mitigating the risks tied to AI use in the industry [1][13][14][15]. The HSCC guidance will focus on five main areas: Education and Enablement, Cyber Operations and Defense, Governance, Secure-by-Design Principles, and Third-Party AI Risk and Supply Chain Transparency [1]. Aligning internal processes with both the NIST AI RMF and the forthcoming HSCC recommendations gives healthcare organizations a standardized, industry-accepted approach to AI risk management and makes it easier to integrate AI into existing cybersecurity workflows.
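To make the four functions concrete, the sketch below tags each AI system's documented risk activities with the NIST AI RMF function they support and reports any function with no recorded activity. NIST defines the four functions; the tagging scheme, the example activities, and the system names here are hypothetical.

```python
from enum import Enum


class RMFFunction(Enum):
    """The four core functions of the NIST AI Risk Management Framework."""
    GOVERN = "Govern"
    MAP = "Map"
    MEASURE = "Measure"
    MANAGE = "Manage"


# Illustrative examples of activities an organization might tag to each function.
EXAMPLE_ACTIVITIES = {
    "AI governance committee charter": RMFFunction.GOVERN,
    "AI inventory and data-dependency map": RMFFunction.MAP,
    "bias and drift testing": RMFFunction.MEASURE,
    "incident response and decommissioning plan": RMFFunction.MANAGE,
}


def coverage_gaps(activities: dict[str, set[RMFFunction]]) -> dict[str, list[str]]:
    """Map each AI system to the RMF functions with no recorded activity."""
    gaps = {}
    for system, covered in activities.items():
        missing = [f.value for f in RMFFunction if f not in covered]  # definition order
        if missing:
            gaps[system] = missing
    return gaps


if __name__ == "__main__":
    register = {  # hypothetical systems
        "radiology-triage": {RMFFunction.GOVERN, RMFFunction.MAP, RMFFunction.MEASURE},
        "ambient-scribe": set(EXAMPLE_ACTIVITIES.values()),
    }
    print(coverage_gaps(register))  # {'radiology-triage': ['Manage']}
```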

Integrating AI into Cybersecurity Processes

Bringing AI into current cybersecurity workflows means carefully assessing how people, processes, and technologies interact. AI risk assessments should be incorporated into both clinical and enterprise risk management frameworks [3]. This includes evaluating third-party vendors that use AI, ensuring they address concerns like model poisoning risks, data privacy protections, and transparency in their AI training methods. Additionally, workflows governing protected health information (PHI) must account for how AI systems access, process, and store sensitive data, ensuring compliance with HIPAA and other U.S. healthcare regulations. By embedding AI risk management within the broader cybersecurity strategy, organizations can maintain a cohesive and comprehensive approach to safeguarding their systems.

Using Censinet Tools for Process Automation

Censinet RiskOps™ and Censinet AI streamline risk assessment and documentation. These tools help vendors complete security questionnaires quickly and automatically summarize evidence and documentation, product integration details, and fourth-party risk exposures. While automation significantly reduces the time these assessments take, human oversight remains integral: configurable rules and review steps help ensure accuracy and accountability.

Censinet AI also offers a centralized dashboard that consolidates critical AI risk findings, routing them to key stakeholders - such as members of the AI governance committee - for review and approval. This dashboard provides real-time data, acting as a central hub for managing AI-related policies, risks, and tasks. By automating routine tasks while maintaining strategic human oversight, these tools help ensure that the appropriate teams address the most pressing issues promptly. This approach supports continuous monitoring and accountability, enabling organizations to manage AI risks effectively across all levels.
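The routing-and-approval pattern described above can be sketched in a few lines of Python. This is not Censinet's API; the severity levels, reviewer groups, sample finding, and the rule that a finding can close only after every required group signs off are assumptions chosen to show how automation can route work while leaving decisions to people.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


# Hypothetical routing policy: reviewer groups that must sign off at each severity.
ROUTING = {
    Severity.LOW: ["security team"],
    Severity.MEDIUM: ["security team", "system owner"],
    Severity.HIGH: ["security team", "system owner", "AI governance committee"],
    Severity.CRITICAL: ["security team", "system owner", "AI governance committee", "CISO"],
}


@dataclass
class Finding:
    system: str
    description: str
    severity: Severity
    approvals: set[str] = field(default_factory=set)

    @property
    def reviewers(self) -> list[str]:
        return ROUTING[self.severity]

    def approve(self, group: str) -> None:
        """Record a human sign-off; only groups the finding was routed to may approve."""
        if group not in self.reviewers:
            raise ValueError(f"{group!r} is not a reviewer for a {self.severity.name} finding")
        self.approvals.add(group)

    @property
    def can_close(self) -> bool:
        # Automation routes the finding, but closure requires every required sign-off.
        return set(self.reviewers) <= self.approvals


if __name__ == "__main__":
    f = Finding("sepsis-alert", "vendor model retrained without notice", Severity.HIGH)
    print(f.reviewers)
    f.approve("security team")
    f.approve("system owner")
    print(f.can_close)  # False: the AI governance committee has not signed off
    f.approve("AI governance committee")
    print(f.can_close)  # True
```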


Technology: Managing AI Systems and Cybersecurity Tools

Types of AI Technologies in Healthcare

Healthcare organizations are increasingly using AI to improve efficiency and support clinical decision-making. Generative AI is a prominent example: it provides clinical decision support, automates patient communication, and drafts documentation. However, as these tools become more capable, they also introduce new challenges that need careful attention.

AI-Specific Cyber Threats

AI systems face unique security risks that traditional cybersecurity tools may not fully address. Data poisoning, for example, involves tampering with training datasets so that a model produces biased or incorrect outputs. Model theft extracts proprietary algorithms through repeated queries, putting valuable intellectual property at risk. Adversarial attacks feed AI systems deceptive inputs to manipulate their behavior, such as tricking a radiology AI into missing a critical diagnosis. The ECRI Institute has even identified AI as the top health technology hazard for 2025, citing the potential for catastrophic failures [16]. Tackling these issues requires constant monitoring, rigorous validation, and technical safeguards that go beyond standard network security measures, and these protections need to be integrated into existing healthcare risk management systems.
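Detecting poisoning in general is hard, but one basic safeguard against tampering with stored training data is a cryptographic hash manifest: record a SHA-256 digest for every file when a dataset is approved, then re-verify before each retraining run. The sketch below uses only the Python standard library; the file names and contents are hypothetical. Note the assumption it rests on: it detects changes made after the manifest was recorded, not data that was already poisoned at approval time.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Record a digest for every file under data_dir, keyed by relative path."""
    return {
        p.relative_to(data_dir).as_posix(): sha256_of(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def verify_manifest(data_dir: Path, manifest: dict[str, str]) -> dict[str, list[str]]:
    """Compare the files on disk against a previously approved manifest."""
    current = build_manifest(data_dir)
    return {
        "modified": sorted(k for k in manifest.keys() & current.keys() if manifest[k] != current[k]),
        "missing": sorted(manifest.keys() - current.keys()),
        "added": sorted(current.keys() - manifest.keys()),
    }


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "labels.csv").write_text("patient_id,label\n1,0\n2,1\n")
        approved = build_manifest(root)  # stored when the dataset is approved
        (root / "labels.csv").write_text("patient_id,label\n1,1\n2,1\n")  # simulated tampering
        print(verify_manifest(root, approved))
        # {'modified': ['labels.csv'], 'missing': [], 'added': []}
```

The manifest itself must be stored somewhere the training pipeline cannot write to; otherwise an attacker who can alter the data can also alter the digests.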

Tools for AI Risk Management

Censinet RiskOps™ offers a centralized platform designed to help healthcare organizations manage AI-related risks. Built on a network of healthcare providers and over 50,000 vendors and products, this tool allows organizations to incorporate AI risk assessments into their broader cybersecurity plans [17]. Another key tool, Censinet AI, streamlines the risk assessment process by automating security questionnaires and summarizing critical evidence, like product integration details and fourth-party risks. Hosted on AWS infrastructure within a dedicated Virtual Private Cloud, the platform keeps customer data off the public internet [18]. According to Censinet, customer data and prompts are not accessed or retained by third parties, nor used for purposes such as model training or content moderation [18].

Balancing the AI Triangle for Long-Term Success

How to Align People, Process, and Technology

Creating harmony between people, processes, and technology is crucial for effective AI governance. Start by setting up clear governance structures that outline responsibilities, from forming dedicated AI oversight committees to empowering frontline staff who interact with AI tools daily. Boosting your team's understanding of AI is equally important: equip staff to understand both the strengths and the limitations of these systems so they can provide essential human oversight. This step is key to using AI ethically and to spotting issues that purely technical approaches might miss [2][6][20].

Next, integrate strong risk management practices into your current operations. This includes regular bias assessments, ongoing monitoring, and incident reporting systems designed to catch problems early [19][21]. Tie these workflows into your existing cybersecurity and risk frameworks. When clear roles, efficient processes, and supportive technology come together, they establish an adaptable and sustainable AI governance model. This alignment lays the groundwork for achieving measurable outcomes and operational efficiency.
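A regular bias assessment can start with something as simple as comparing error rates across patient groups. The sketch below computes the false negative rate (the share of true positive cases the model missed) for each group and reports the largest gap. The group labels, sample records, and the 0.05 tolerance are illustrative assumptions; the right metric and threshold depend on the clinical use case.

```python
from collections import defaultdict
from typing import Iterable, Tuple

Record = Tuple[str, int, int]  # (patient group, true label, predicted label); 1 = condition present


def false_negative_rates(records: Iterable[Record]) -> dict[str, float]:
    """Per group, the share of true positive cases that the model missed."""
    positives: dict[str, int] = defaultdict(int)
    misses: dict[str, int] = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 0:
                misses[group] += 1
    return {g: misses[g] / positives[g] for g in positives}


def fnr_gap(records: Iterable[Record]) -> float:
    """Largest difference in false negative rate between any two groups."""
    rates = false_negative_rates(records)
    if len(rates) < 2:
        return 0.0
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    TOLERANCE = 0.05  # assumed tolerance; set per clinical use case
    sample = [  # hypothetical screening results
        ("group A", 1, 1), ("group A", 1, 1), ("group A", 1, 0), ("group A", 0, 0),
        ("group B", 1, 0), ("group B", 1, 0), ("group B", 1, 1), ("group B", 0, 1),
    ]
    gap = fnr_gap(sample)
    print(false_negative_rates(sample), f"gap={gap:.2f}")
    if gap > TOLERANCE:
        print("Flag for review by the AI governance committee")
```

False negatives are used here because a missed diagnosis is often the costlier error in screening; other use cases may call for comparing false positive rates or calibration instead.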

Measuring Success: Metrics and KPIs

To measure the effectiveness of your AI governance efforts, focus on specific metrics and KPIs. For cybersecurity, track indicators that reveal both security strengths and areas needing improvement [22][23]. For AI systems, prioritize metrics around data privacy, bias, and reliability, the key safety concerns specific to these technologies [24]. Monitoring how long AI risk assessments take reveals process efficiency, while tracking the frequency of AI-related incidents shows whether your safeguards are working. Workforce training completion rates are another critical metric, indicating whether your team is keeping the skills needed to oversee AI systems.

Other valuable metrics include the time it takes to address vulnerabilities in AI systems, compliance rates with U.S. healthcare regulations, and the percentage of AI tools that have undergone formal risk assessments. These indicators not only measure progress but also guide the next step: putting governance into action with dedicated tools.
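Several of these KPIs reduce to simple arithmetic over assessment and remediation records. The sketch below computes the percentage of AI tools with a completed formal risk assessment, mean days to complete an assessment, mean days to remediate AI vulnerabilities, and the training completion rate. The record types, tool names, dates, and staff counts are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean
from typing import Optional


@dataclass
class AIToolRecord:
    name: str
    assessment_started: Optional[date] = None
    assessment_completed: Optional[date] = None


@dataclass
class AIVulnerability:
    reported: date
    remediated: Optional[date] = None  # None while still open


def pct_formally_assessed(tools: list[AIToolRecord]) -> float:
    return 100 * sum(t.assessment_completed is not None for t in tools) / len(tools)


def mean_days_to_assess(tools: list[AIToolRecord]) -> Optional[float]:
    days = [(t.assessment_completed - t.assessment_started).days for t in tools
            if t.assessment_started is not None and t.assessment_completed is not None]
    return mean(days) if days else None


def mean_days_to_remediate(vulns: list[AIVulnerability]) -> Optional[float]:
    days = [(v.remediated - v.reported).days for v in vulns if v.remediated is not None]
    return mean(days) if days else None  # open items are excluded, not counted as zero


def training_completion_rate(completed: int, required: int) -> float:
    return 100 * completed / required


if __name__ == "__main__":
    tools = [  # hypothetical records
        AIToolRecord("sepsis-alert", date(2025, 1, 6), date(2025, 1, 20)),
        AIToolRecord("ambient-scribe", date(2025, 2, 3), date(2025, 2, 13)),
        AIToolRecord("scheduling-optimizer"),
    ]
    vulns = [
        AIVulnerability(date(2025, 3, 1), date(2025, 3, 8)),
        AIVulnerability(date(2025, 3, 10), date(2025, 3, 31)),
        AIVulnerability(date(2025, 4, 2)),
    ]
    print(f"{pct_formally_assessed(tools):.1f}% of AI tools formally assessed")
    print(f"{mean_days_to_assess(tools)} days mean time to assess")
    print(f"{mean_days_to_remediate(vulns)} days mean time to remediate")
    print(f"{training_completion_rate(180, 200):.1f}% training completion")
```

Excluding open vulnerabilities from the mean is a design choice; tracking the count and age of open items separately keeps slow remediation from disappearing from view.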

Operationalizing Governance with Censinet Platforms

Censinet RiskOps™ acts as the central command for managing AI governance. Think of it as air traffic control for AI risk - directing key findings and critical risks to the right stakeholders, such as members of the AI governance committee, for swift review and action. This ensures that issues are addressed by the appropriate teams at the right time, fostering accountability and ongoing oversight across your organization.

The Censinet AI platform simplifies risk assessments and consolidates essential AI risk data while keeping human oversight front and center through customizable rules and review processes. Its real-time AI risk dashboard pulls together policies, risks, and tasks, enabling organizations to manage cyber risks at scale without sacrificing the precision and safety required in healthcare. By streamlining these processes, Censinet ensures that governance remains both effective and manageable.

Conclusion: Key Takeaways for Healthcare AI Risk Management

Bringing AI into healthcare cybersecurity isn’t just about adopting the latest technology - it’s about finding the right balance between people, processes, and tools. The AI Triangle framework highlights the importance of this balance, showing how human oversight, structured workflows, and strong technological safeguards must work in sync. When these elements are misaligned, security gaps can emerge, putting both patient safety and data integrity at risk [3][8].

To integrate AI responsibly, healthcare organizations need to pair innovation with a focus on security [25][26]. This means setting up clear governance structures, using systems that monitor AI performance continuously, and ensuring teams are well-trained to manage and oversee AI tools effectively.

Tracking key metrics - like response times, compliance rates, and training progress - can help identify potential issues before they grow into major problems. Tools like Censinet RiskOps™ and Censinet AI centralize risk data, making it easier to maintain the human oversight that’s critical for managing cyber risks at scale. These tools support a proactive, forward-thinking approach to AI risk management.

Ultimately, success lies in committing to all three pillars of the AI Triangle. Invest in skilled people, refine workflows, and adopt technology that complements human decision-making. This balanced strategy allows organizations to embrace AI’s potential while meeting the high standards of security and compliance the healthcare industry demands.

FAQs

How does human oversight help reduce AI risks in healthcare?

Human involvement is crucial in reducing the risks associated with AI in healthcare. It ensures that critical decisions are made thoughtfully and that ethical guidelines are followed. Humans can recognize irregularities or biases that automated systems might miss, helping to prevent mistakes and misuse.

By assigning clear accountability and encouraging collaboration among everyone involved, human oversight protects patient safety and ensures that AI tools are used in ways that meet both ethical standards and the objectives of healthcare organizations.

What are the essential steps for managing AI risks effectively in healthcare cybersecurity?

Effective AI risk management in healthcare cybersecurity requires a proactive and structured approach to keep systems secure and dependable. The first step is spotting potential threats like data breaches, tampering with models, or biases in algorithms. Conducting regular audits and validations ensures that data remains accurate and models perform as expected over time.

It's also essential to have robust governance frameworks in place to maintain oversight and accountability. Additionally, a clearly outlined incident response plan allows for swift action when unexpected problems arise. Lastly, ongoing monitoring of AI systems plays a critical role in identifying vulnerabilities early, helping to address them before they become larger issues.

What key technologies help protect AI systems in healthcare?

To protect AI systems in healthcare, organizations should rely on real-time monitoring tools that can quickly identify and address potential security threats. Ongoing staff training ensures that people can manage AI-related challenges effectively and follow established safety protocols. Incident response plans allow for immediate action during breaches, minimizing damage. Secure data-sharing methods, such as blockchain-based approaches, can also help safeguard sensitive patient data and preserve the integrity of shared records.