Cyber Resilience in the AI Age: Building Defenses Against Intelligent Threats

Post Summary

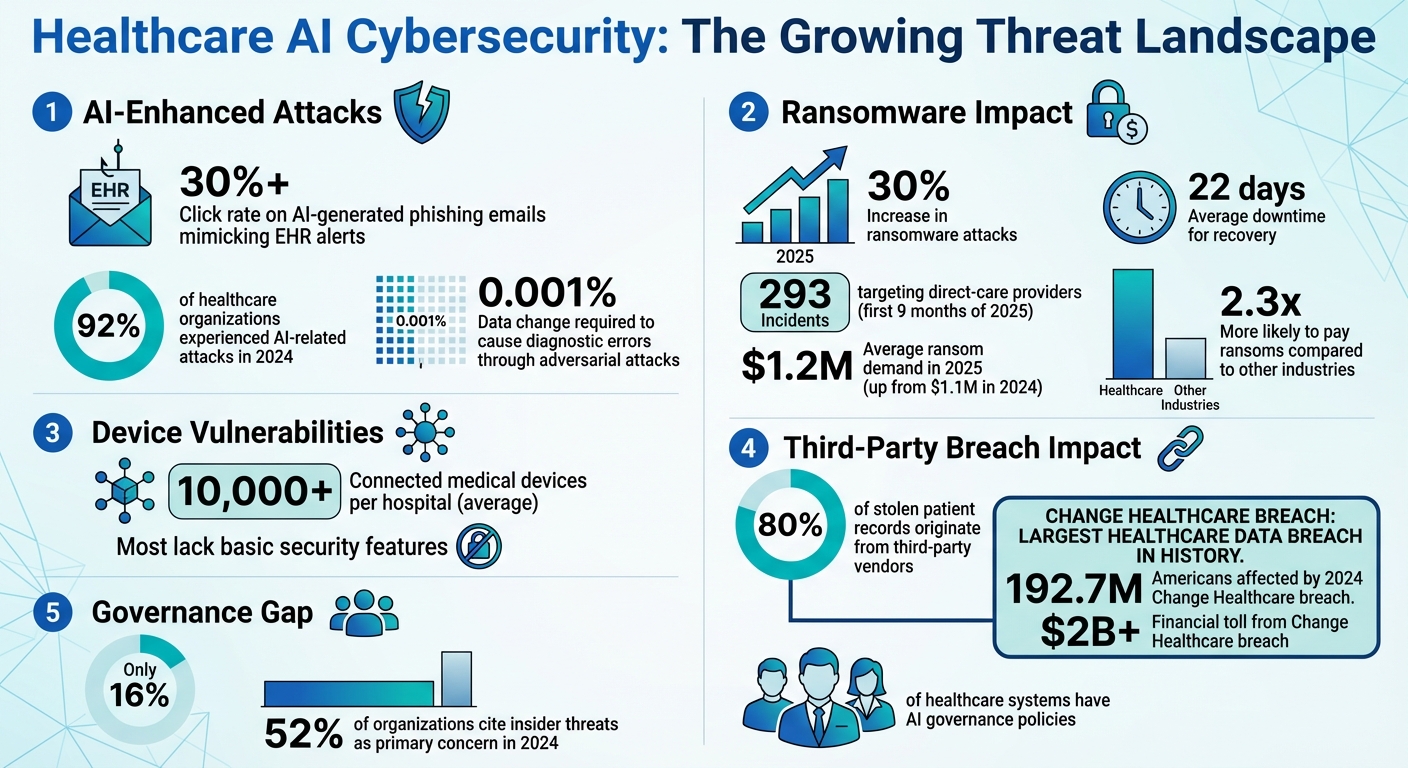

In healthcare, cyber resilience means preparing for and recovering from cyberattacks, especially as AI-driven threats evolve. Cybercriminals now use AI to create phishing scams and deepfakes and to manipulate sensitive systems, putting patient safety and data at risk. Traditional compliance and outdated tools fail to address these threats, leaving healthcare systems vulnerable.

Key takeaways:

- AI-driven threats: Phishing emails, deepfakes, and data poisoning attacks are becoming harder to detect.

- Healthcare vulnerabilities: Connected medical devices and third-party vendors are major weak points.

- Solutions: AI governance, risk assessments, zero-trust security, and workforce training are critical to building resilience.

Healthcare organizations must adopt AI-powered tools, improve oversight, and train staff to counter these evolving risks. Combining advanced technology with human expertise is essential to safeguard patient care and data integrity.

AI-Driven Cyber Risks in Healthcare

Figure: Healthcare AI Cybersecurity Threats: Key Statistics and Impact, 2024-2025

AI-Enhanced Social Engineering and Fraud

AI has taken phishing scams to a whole new level, turning them into highly targeted and convincing attacks. Healthcare workers, for instance, now face phishing emails that mimic electronic health record (EHR) alerts or lab results with alarming accuracy. These emails have a click rate exceeding 30% [5].

Shane Cox, Director of the Cyber Fusion Center at MorganFranklin Cyber, describes the evolution of phishing:

"Traditional phishing scams were once easy to spot, riddled with typos and awkward phrasing. Now, AI can generate flawless, hyper-personalized phishing emails, mimicking the language, tone, and urgency of legitimate communications. Even security-aware employees struggle to tell the difference" [6].

Deepfake technology has made things even more sinister. By 2025, attackers were using AI-generated voices that convincingly imitate CFOs to authorize fraudulent transactions. Cox outlines a disturbing scenario:

"Imagine a hospital finance director receiving a call that sounds exactly like the CFO, authorizing an urgent wire transfer for a medical supply purchase. The voice is eerily familiar, the details check out, and the pressure to act quickly is high. But it's not the CFO. It's an AI-generated deepfake, convincing enough to bypass even the most skeptical professionals" [6].

While social engineering attacks grow more sophisticated, attackers are also directly targeting AI systems, exploiting their vulnerabilities.

Attacks on AI Systems

Adversarial attacks can cause diagnostic errors or treatment failures by altering as little as 0.001% of input data [5]. In 2024, 92% of healthcare organizations reported experiencing AI-related attacks [5].

One particularly dangerous method is data poisoning. Attackers subtly manipulate medical imaging systems by adding or removing tumor indicators in radiological scans, altering ECG results, or modifying pathology images [5][7]. These changes exploit AI's reliance on pattern recognition while evading detection by human operators.

Prompt injection attacks are another growing concern. These attacks target language models in medical chatbots and clinical systems, leading to outcomes such as dangerous medical advice, exposure of sensitive patient data, or the creation of false clinical documentation [5]. Unlike traditional tech failures, compromised AI systems can actively harm patients by providing incorrect diagnoses, inappropriate treatments, or medication errors [5].

Recognizing these risks is crucial for developing effective protection strategies. As AI becomes a primary target, vulnerabilities in connected devices further increase the risks.

Vulnerabilities in Connected Devices and Infrastructure

Healthcare's reliance on connected devices has significantly broadened the attack surface. On average, hospitals manage over 10,000 connected medical devices, many of which lack basic security features [5]. Devices like pacemakers, insulin pumps, imaging equipment, and automatic ventilators - especially those controlled by AI - are vulnerable to ransomware, denial-of-service attacks, and unauthorized modifications [2].

Outdated systems and poor interoperability create additional risks, where a single weak link can compromise an entire network [2][5][7].

The statistics paint a grim picture. Ransomware attacks in healthcare rose by 30% in 2025, with 293 incidents targeting direct-care providers in just the first nine months [5]. The average ransom demand hit $1.2 million in 2025, up from $1.1 million the previous year [5]. Recovery from these attacks is slow, with an average downtime of 22 days, and some facilities taking months to fully restore operations [5]. Due to the critical nature of healthcare, organizations in this sector are 2.3 times more likely to pay ransoms compared to other industries [5].

The 2024 Change Healthcare data breach is a stark reminder of supply chain vulnerabilities. This single vendor compromise affected 192.7 million Americans, disrupting prescription processing nationwide and forcing many practices to revert to paper-based operations for weeks. The financial toll exceeded $2 billion in delayed payments and operational disruptions [5]. Alarmingly, 80% of stolen patient records now originate from third-party vendors rather than hospitals themselves [5].

These challenges underscore the urgent need for stronger defense measures to protect the healthcare industry from escalating cyber threats.

Core Strategies for Building Cyber Resilience

To tackle the challenges posed by AI-driven risks, healthcare organizations are adopting strategies to strengthen defenses and ensure swift recovery.

Healthcare providers are collaborating to create actionable guidelines for managing AI-related cybersecurity threats. These efforts are structured around key areas like Education, Cyber Defense, Governance, Secure by Design, and Third-Party Supply Chain Transparency [1]. Together, these initiatives aim to establish strong governance and risk management practices.

AI Governance and Compliance Frameworks

Establishing formal governance processes is crucial for securing AI in healthcare. This includes defining clear roles, responsibilities, and clinical oversight across the entire AI lifecycle. Maintaining a detailed inventory of AI systems is also essential to understand their functions and associated risks [1].

One effective method is to classify AI tools using a five-level autonomy scale, which matches the level of oversight to the potential risk. For instance, systems making autonomous clinical decisions require stricter controls compared to those offering decision support. Governance measures should align with HIPAA and FDA regulations, while also referencing established frameworks like the NIST AI Risk Management Framework [1]. High-impact AI applications should adhere to practices such as bias mitigation, outcome monitoring, robust security protocols, and human oversight [9].
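One way to make the autonomy scale operational is to record each AI system in the inventory with its level and derive the required oversight from it. The sketch below is illustrative only: the level names, the control lists, and the `AISystem` record are assumptions, not taken from the cited guidance.

```python
from dataclasses import dataclass
from enum import IntEnum


class AutonomyLevel(IntEnum):
    # Hypothetical labels for a five-level scale; the source guidance
    # does not name the levels used here.
    INFORMATIONAL = 1
    DECISION_SUPPORT = 2
    SUPERVISED_ACTION = 3
    CONDITIONAL_AUTONOMY = 4
    AUTONOMOUS_CLINICAL = 5


# Assumed mapping: each level adds controls on top of the levels below it.
CONTROLS_BY_LEVEL = {
    AutonomyLevel.INFORMATIONAL: ["inventory entry"],
    AutonomyLevel.DECISION_SUPPORT: ["outcome monitoring"],
    AutonomyLevel.SUPERVISED_ACTION: ["clinician sign-off"],
    AutonomyLevel.CONDITIONAL_AUTONOMY: ["bias audit", "security review"],
    AutonomyLevel.AUTONOMOUS_CLINICAL: ["governance committee approval"],
}


@dataclass
class AISystem:
    name: str
    function: str
    level: AutonomyLevel


def required_controls(system: AISystem) -> list[str]:
    """Return the cumulative controls for a system's autonomy level."""
    controls: list[str] = []
    for level in AutonomyLevel:
        if level <= system.level:
            controls.extend(CONTROLS_BY_LEVEL[level])
    return controls


sepsis_alert = AISystem("sepsis-alert", "decision support", AutonomyLevel.DECISION_SUPPORT)
print(required_controls(sepsis_alert))
```

Making the controls cumulative means a system can never move up the scale without inheriting every safeguard that applied at the lower levels.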

AI Risk Assessment and Management

AI introduces unique risks that traditional error classifications may overlook. These include algorithmic biases, lack of transparency in predictive models, system malfunctions, and the complexity of human-technology interactions [2]. To address these challenges, healthcare organizations should integrate AI-specific risk assessments into their existing cybersecurity frameworks. This involves creating tailored response procedures for threats like model poisoning, data corruption, and adversarial attacks. Continuous monitoring and maintaining verifiable model backups are also critical [1][2].

Collaboration between cybersecurity and data science teams plays a key role in improving resilience. This partnership supports ongoing testing and learning from incidents. Tools such as Censinet RiskOps™ provide centralized oversight by offering real-time dashboards that track AI-related policies, risks, and tasks, enhancing overall governance.

Technical Controls for AI Security

In addition to governance and risk assessments, technical controls are essential for safeguarding AI systems. A zero-trust approach is a strong starting point, incorporating measures like role-based access, multifactor authentication, and encryption for patient data. Limiting direct internet access and restricting USB port usage further bolsters security [11]. For data sharing, secure cloud storage solutions are recommended [11].
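The deny-by-default logic behind zero trust can be sketched in a few lines. This is a simplified illustration, assuming a hypothetical role-to-permission map and an `AccessRequest` record; a real deployment would delegate these checks to an identity provider or policy engine.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission map, hard-coded here for illustration.
ROLE_PERMISSIONS = {
    "radiologist": {"read_imaging", "annotate_imaging"},
    "billing_clerk": {"read_billing"},
}


@dataclass(frozen=True)
class AccessRequest:
    user_role: str
    permission: str
    mfa_verified: bool
    device_managed: bool


def is_allowed(request: AccessRequest) -> bool:
    """Deny by default; allow only when every check passes."""
    if not request.mfa_verified:
        return False
    if not request.device_managed:
        return False
    # Unknown roles get an empty permission set, so they are denied.
    allowed = ROLE_PERMISSIONS.get(request.user_role, set())
    return request.permission in allowed


print(is_allowed(AccessRequest("radiologist", "read_imaging", True, True)))
print(is_allowed(AccessRequest("radiologist", "read_billing", True, True)))
```

Every request is evaluated on its own, so a valid role alone is never enough: the request must also come from a verified user on a managed device.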

Protecting AI models themselves is equally important. Input validation helps prevent adversarial attacks by screening data before it reaches the models. Drift monitoring can signal when a model's inputs or outputs start deviating from what it saw in training, indicating potential compromise or degradation. Context-aware AI systems can analyze interactions in real time to prevent sensitive data leaks [10].
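As a rough illustration of the first two controls, the sketch below screens incoming records against plausible ranges and computes a simple mean-shift drift score. The feature names, ranges, and the choice of a mean-shift statistic are assumptions made for the example; production systems often use richer tests such as the population stability index.

```python
import statistics

# Assumed plausible ranges for two vital-sign features (illustrative values).
VALID_RANGES = {"heart_rate": (20.0, 250.0), "spo2": (50.0, 100.0)}


def validate_input(record: dict[str, float]) -> list[str]:
    """Return a list of problems; an empty list means the record may proceed."""
    problems = []
    for feature, (low, high) in VALID_RANGES.items():
        if feature not in record:
            problems.append(f"missing {feature}")
        elif not low <= record[feature] <= high:
            problems.append(f"{feature} out of range")
    return problems


def drift_score(baseline: list[float], recent: list[float]) -> float:
    """Shift of the recent mean from the baseline mean, in baseline standard deviations."""
    base_mean = statistics.mean(baseline)
    recent_mean = statistics.mean(recent)
    spread = statistics.stdev(baseline)
    if spread == 0:
        # A constant baseline: any change at all counts as unbounded drift.
        return 0.0 if recent_mean == base_mean else float("inf")
    return abs(recent_mean - base_mean) / spread


print(validate_input({"heart_rate": 400.0}))
print(drift_score([1.0, 2.0, 3.0], [5.0, 5.0]))
```

A record that fails validation never reaches the model, while a rising drift score is a prompt for investigation rather than proof of compromise.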

For AI-enabled medical devices, applying "Secure by Design" principles from the development stage ensures strong protection is built in from the start [1]. Regular updates to both software and hardware help address vulnerabilities, while storing patient data in independent locations supports real-time disaster recovery [11].

Using AI to Strengthen Cyber Defense

AI may bring new challenges, but it also provides healthcare organizations with powerful tools to detect, respond to, and recover from cyberattacks more efficiently. The key lies in using AI wisely - combining its rapid data analysis capabilities with human judgment.

AI-Powered Threat Detection and Response

AI builds on existing security measures by enhancing threat detection through real-time data processing. Machine learning models can learn what normal email behavior looks like and flag unusual patterns, outperforming traditional methods that often struggle to keep up. AI's ability to conduct threat assessments in seconds is invaluable, especially when dealing with previously unknown vulnerabilities. By spotting emerging trends, predicting potential breaches, and automating repetitive tasks, AI-powered systems significantly improve how organizations detect and respond to threats [4][12].
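The idea of learning normal email behavior and flagging departures from it can be shown with a deliberately simple statistical stand-in for a machine learning model. The features (`link_count`, `send_hour`) and the threshold of three standard deviations are assumptions chosen for illustration.

```python
import statistics


def fit_baseline(history: list[dict[str, float]]) -> dict[str, tuple[float, float]]:
    """Learn the mean and standard deviation of each feature from past emails."""
    baseline = {}
    for name in history[0]:
        values = [email[name] for email in history]
        baseline[name] = (statistics.mean(values), statistics.stdev(values))
    return baseline


def anomaly_flags(
    email: dict[str, float],
    baseline: dict[str, tuple[float, float]],
    threshold: float = 3.0,
) -> list[str]:
    """Return the features whose value is more than `threshold` deviations from normal."""
    flags = []
    for name, (mean, spread) in baseline.items():
        if spread == 0:
            deviates = email[name] != mean
        else:
            deviates = abs(email[name] - mean) / spread > threshold
        if deviates:
            flags.append(name)
    return flags


# Hypothetical history for one sender: few links, sent during office hours.
history = [{"link_count": float(1 + i % 2), "send_hour": float(9 + i % 2)} for i in range(6)]
baseline = fit_baseline(history)
print(anomaly_flags({"link_count": 12.0, "send_hour": 9.0}, baseline))
```

Real detection models use far more signals, but the structure is the same: fit a baseline on known-good traffic, then score each new message against it.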

Human-in-the-Loop AI for Cybersecurity

AI's speed and pattern recognition are impressive, but it's not a replacement for security professionals. Instead, it enhances their work. A human-in-the-loop approach allows AI to handle routine tasks like sorting alerts, while experts focus on validating AI's recommendations, investigating complex issues, and making strategic decisions. However, over-relying on AI - known as automation bias - can lead to missed warning signs. To counter this, AI systems should provide clear, transparent reasoning for their decisions. This transparency allows analysts to review and refine the system's logic. Feedback loops further help security teams address errors and improve decision-making [13][14][17].
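A human-in-the-loop triage rule might look like the following sketch. The `Alert` fields, queue names, and score thresholds are all hypothetical; the point is that the AI's score only decides which queue an alert lands in, and that an alert without a stated rationale is never closed automatically.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    alert_id: str
    ai_risk_score: float  # 0.0 (benign) to 1.0 (malicious), produced by a model
    ai_rationale: str     # plain-language reasoning shown to the analyst


def route_alert(alert: Alert, low: float = 0.2, high: float = 0.8) -> str:
    """Route an alert to a queue based on its score (thresholds are assumed)."""
    if not alert.ai_rationale.strip():
        # No explanation means no basis for trusting the score.
        return "analyst_review"
    if alert.ai_risk_score >= high:
        return "analyst_review_urgent"
    if alert.ai_risk_score <= low:
        # Closed automatically, but a sample is audited by humans
        # to catch systematic misses (a guard against automation bias).
        return "auto_close_with_sampling"
    return "analyst_review"


print(route_alert(Alert("a1", 0.95, "matches known ransomware beacon")))
print(route_alert(Alert("a2", 0.05, "routine backup traffic")))
```

Analyst decisions on the reviewed and sampled alerts can then be fed back to tune the thresholds or retrain the scoring model.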

Censinet RiskOps™ demonstrates this approach with its Censinet AI™ solution. This platform speeds up third-party risk assessments by assigning AI-generated tasks and findings to specific stakeholders, including members of an AI governance committee. By incorporating customizable rules and review processes, teams retain control, ensuring that automation supports their work rather than replacing critical decision-making.

"AI isn't a replacement for security professionals. It's a powerful tool that, when properly guided, can make teams faster, sharper, and more effective." - Dropzone AI [13]

Training plays a crucial role. Security teams need ongoing education to interpret AI insights, validate automated actions, and handle escalations appropriately. High-impact AI applications should always involve human oversight. Organizations must also establish clear governance frameworks to define roles, responsibilities, and escalation procedures for AI-related incidents [15][16][18]. This integrated approach ensures swift and effective responses.

AI-Supported Incident Recovery

In the event of a cyberattack, recovering quickly is critical. AI tools can analyze data to contain breaches and predict future threats, reducing downtime and minimizing losses. This is especially important in healthcare, where 92% of organizations have faced cyber intrusions, and individual breaches can cost as much as $4.7 million [3][8].

For incidents involving AI, healthcare organizations must prioritize the rapid containment and recovery of compromised AI models. Maintaining secure, verifiable backups is essential. Response plans should include procedures for identifying AI-driven threats, testing AI-powered response tools, and continuously monitoring AI systems to ensure rapid containment [1][2][3].
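One common way to make a model backup verifiable is to record a cryptographic digest when the backup is created and check it again before restoring. The sketch below uses SHA-256 from Python's standard library; the function names are illustrative, and it assumes the recorded digest is itself stored somewhere an attacker cannot modify.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large model files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1024 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_is_intact(path: Path, expected_digest: str) -> bool:
    """Compare the backup's digest with the one recorded when it was created."""
    return hmac.compare_digest(sha256_of(path), expected_digest)
```

A restore procedure would call `backup_is_intact` first and refuse to load any model file whose digest does not match the recorded value.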

"The adoption of 'AI-first' cybersecurity systems can empower healthcare organizations to detect, respond to and recover from cyberattacks faster than traditional models." - FinThrive [8]

Implementing AI Risk Management in Daily Operations

To effectively manage AI risks, organizations need to weave risk considerations into their daily operations. This includes integrating them into decision-making processes, vendor evaluations, and workforce preparedness. The Health Sector Coordinating Council is set to release guidance in 2026 for managing AI cybersecurity risks, with a phased rollout focusing on policies and best practices for responsible AI adoption [1]. But waiting isn’t an option - organizations should begin embedding AI risk management into clinical safety and business planning now.

Integrating AI Risk into Clinical and Business Decisions

Managing AI risks is essential for ensuring patient safety and sound financial planning. Clinical safety committees should broaden their focus to include AI-related errors like algorithmic biases, lack of transparency in predictive models, system malfunctions, and complex interactions between technology and its users [2]. Addressing these issues requires healthcare leaders to scrutinize how AI tools handle patient data, their training processes, and potential failure scenarios. This approach not only enhances patient safety but also strengthens the organization’s overall cyber resilience by linking clinical oversight with security measures.

A no-blame culture is vital for incident reporting and root-cause analysis. Instead of assigning blame when something goes wrong, organizations should adopt a systems-based approach to identify underlying issues and fortify the entire system [2]. This encourages staff to report anomalies without fear, creating a feedback loop that improves both AI performance and cybersecurity. Maintaining detailed system inventories further helps teams understand operational functions, data dependencies, and security risks [1]. Securing external partnerships is just as critical as refining internal processes.

Managing Third-Party and Vendor AI Risks

Third-party vendors pose one of the biggest vulnerabilities in healthcare cybersecurity. A single vendor breach recently caused widespread disruption, and third-party weaknesses now account for 80% of stolen patient records [5].

"Third-party vendor compromise has become the dominant threat vector in healthcare cybersecurity, fundamentally reshaping how organizations must approach security architecture and risk management." - Vectra AI [5]

Organizations can no longer rely on one-time security assessments. Instead, they need continuous monitoring programs to identify emerging vulnerabilities and active threats [5]. High-risk vendors - such as billing processors, cloud storage providers, medical device manufacturers, telehealth platforms, and IT services - demand extra scrutiny due to their access to sensitive data and critical systems.

Business Associate Agreements should be updated to include mandatory HIPAA technical controls like multifactor authentication, encryption, timely breach reporting, and fourth-party risk management [5][21]. Recent updates to the HIPAA Security Rule remove "addressable" specifications, requiring these controls to be implemented within 240 days for existing agreements [5]. Primary vendors should also ensure their subcontractors meet equivalent security standards and notify organizations of any fourth-party changes [5].
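A vendor review can check these requirements mechanically. The sketch below compares the controls a vendor attests to against the four named above; the identifiers and the `missing_controls` helper are illustrative, not part of any HIPAA tooling.

```python
# Controls named in the text above; the identifiers are illustrative.
REQUIRED_CONTROLS = frozenset({
    "multifactor_authentication",
    "encryption",
    "timely_breach_reporting",
    "fourth_party_risk_management",
})


def missing_controls(attested: set[str]) -> list[str]:
    """Return required controls the vendor has not attested to, sorted for stable output."""
    return sorted(REQUIRED_CONTROLS - attested)


# Hypothetical attestation from a vendor questionnaire.
gaps = missing_controls({"encryption", "multifactor_authentication"})
print(gaps)
```

Running the same check on every reassessment, rather than once at onboarding, turns the agreement's requirements into part of continuous monitoring.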

Currently, only 16% of healthcare systems have a governance policy addressing AI usage and data access [21]. To bridge this gap, organizations must establish clear policies, governance boards, and approval pathways for third-party AI tools, assigning specific roles and responsibilities [1]. Tools like Censinet RiskOps™ streamline this process by channeling AI-related assessments and tasks to designated stakeholders, such as AI governance committees, creating a centralized hub for managing AI policies, risks, and responsibilities. Alongside rigorous vendor controls, a well-trained workforce is essential for identifying and responding to threats quickly.

Workforce Training and Readiness

Insider threats from employee errors or negligence were a major factor in cybersecurity breaches in 2024, with 52% of organizations identifying internal weaknesses as a primary concern [8]. Training employees to recognize AI risks, understand AI’s limitations, and report anomalies is critical [1][21].

Workforce education should cover the basics of AI and healthcare cybersecurity, including AI terminology, its applications in healthcare, and the risks associated with its use [1]. Training should also address ethical considerations, compliance with regulations like HIPAA, HITECH, and FDA requirements, and privacy challenges tied to AI [1][22][23].

Practical skills are equally important. Employees should learn how to identify and respond to AI-specific threats like model poisoning, data corruption, and adversarial attacks. Collaboration between cybersecurity, data science, clinical, and business teams is key to building a strong AI risk management framework [1][20]. Regular training updates ensure staff stay informed about evolving threats and maintain readiness to handle AI-related security challenges. Continuous learning also supports the balance between automation and human oversight, which remains a cornerstone of effective AI risk management.

Conclusion

The landscape of healthcare cybersecurity is shifting rapidly, demanding defenses that are both proactive and interconnected. AI-driven threats are advancing at a pace that traditional measures simply can't keep up with, creating a pivotal moment for the industry. By 2023, ransomware attacks and the costs associated with data breaches had climbed significantly [25].

In December 2025, the U.S. Department of Health and Human Services introduced its AI Strategy [26], while the Health Sector Coordinating Council plans to release updated AI cybersecurity guidance in 2026 [1]. These steps highlight the growing recognition of the challenges ahead and emphasize the need for immediate action.

Building cyber resilience means preparing for threats, minimizing disruptions, and ensuring uninterrupted patient care [24][19]. To achieve this, healthcare organizations must weave AI risk management into every aspect of their operations. This includes clinical teams focusing on patient safety and procurement teams rigorously evaluating risks from third-party vendors. A 2023 survey found that while 75% of healthcare professionals believe their organizations prioritize digital technology, many feel there’s a lack of robust planning to prevent breaches [25]. This underscores the necessity of combining advanced technologies with skilled human oversight.

Human involvement remains a critical factor. Insider threats, often caused by employee mistakes, are still a leading cause of breaches [8]. Employees need to be equipped to identify AI-related threats, understand the limitations of these systems, and confidently report irregularities without fear of repercussions. Regular training not only strengthens security but also supports better patient outcomes.

AI-powered tools can provide a strong line of defense, but they’re most effective when paired with human expertise. A comprehensive approach - incorporating vendor monitoring, real-time threat detection, and swift incident response - creates a multi-layered defense capable of adapting to new risks. Organizations that establish strong AI governance, maintain detailed system inventories, and nurture collaboration across cybersecurity, clinical, and business teams will be better prepared to safeguard patient data and uphold trust in this rapidly evolving era.

FAQs

How does AI governance enhance cybersecurity in healthcare?

AI governance strengthens cybersecurity in healthcare by establishing clear policies to oversee every stage of the AI lifecycle - from development to deployment. These policies ensure adherence to regulations, assign responsibilities, and enforce security measures to protect sensitive patient data.

By identifying potential risks early and staying ahead of emerging threats, AI governance helps healthcare organizations maintain strong security systems. This not only protects critical infrastructure but also fosters trust among patients and stakeholders.

What are the dangers of AI-powered deepfake attacks in healthcare?

AI-driven deepfake attacks pose significant dangers in healthcare, such as spreading false information, committing identity theft, and tampering with sensitive medical records or communications. These risks not only threaten patient trust but also compromise safety and disrupt essential healthcare operations.

Deepfakes can also be used to impersonate healthcare professionals or patients. This could allow unauthorized access to systems or lead to the misrepresentation of medical advice, creating potentially harmful consequences. As AI technology evolves, it’s crucial for healthcare organizations to remain alert and implement strong cybersecurity measures to safeguard both patient information and operational integrity.

Why is zero-trust security essential for protecting connected medical devices?

Zero-trust security plays a critical role in protecting connected medical devices by requiring continuous verification and authentication for every access request - whether it comes from a user or a device. This constant validation helps block unauthorized access, even if a system has already been breached, significantly reducing the risk of cyberattacks.

In the healthcare sector, where networks often rely on older systems and handle highly sensitive patient information, zero-trust security provides an added level of defense against sophisticated threats. By restricting access to only what is absolutely necessary and consistently reassessing trust, healthcare organizations can safeguard their devices, systems, and the invaluable data they oversee.