The Cybersecurity-Clinical Safety Connection: Why Medical Device Security Requires AI

Post Summary

Medical device security is no longer just about protecting data - it directly affects patient safety. Cyberattacks on healthcare systems can disrupt treatments, delay diagnoses, and even contribute to fatalities. As more medical devices connect to hospital networks, the attack surface has grown with them. Manual processes and signature-based tools struggle to keep up with these evolving threats, which is why AI-powered tools are becoming essential for detecting and preventing breaches.

Key Insights:

- Patient Safety Risks: Cyberattacks on devices like infusion pumps or pacemakers can cause life-threatening errors.

- AI’s Role: AI tools analyze real-time data to detect threats faster, automate responses, and prioritize high-risk vulnerabilities.

- Regulatory Changes: The FDA now mandates stronger cybersecurity measures for medical devices, covering both premarket and postmarket stages.

- Challenges: While AI improves security, it introduces risks like data poisoning and model manipulation, requiring careful oversight.

AI is transforming medical device security by enabling faster threat detection, automating risk assessments, and reducing manual workloads. However, balancing its benefits with proper governance and monitoring is critical to ensuring patient safety in today’s interconnected healthcare systems.

Patient Safety Risks from Unsecured Medical Devices

How Device Vulnerabilities Lead to Patient Harm

When medical devices lack proper security, the risks go far beyond data breaches - they can directly endanger patients. Devices with network connectivity can serve as entry points for cyberattacks, leading to disruptions in treatment, inaccurate diagnostics, or delays in critical care [6] [2] [7].

Take infusion pumps as an example. If a hacker gains control, they could manipulate medication dosages, potentially causing overdoses or underdoses. Similarly, a compromised imaging system might produce incorrect diagnostic results, leading to misdiagnosis or inappropriate treatment. Devices like ventilators, pacemakers, and insulin pumps are also at risk when their security is breached.

The problem is magnified by older medical devices still in use. Many of these run on outdated software and lack modern security measures [8]. When connected to hospital networks, they expand the potential attack surface, making the entire system more vulnerable [6].

These examples highlight the urgent need for stronger security measures that align with evolving regulations to protect both devices and patients.

U.S. Regulatory Requirements for Medical Device Cybersecurity

The FDA has stepped up its oversight of medical device cybersecurity. A key development is Section 524B of the Federal Food, Drug, and Cosmetic Act (FD&C Act), added by the Consolidated Appropriations Act, 2023. This provision, which took effect on March 29, 2023, represents a significant change in how manufacturers must approach cybersecurity across a device's lifecycle [7] [9] [10].

The FDA's framework addresses both premarket and postmarket stages of a device's life. Manufacturers are now required to implement strong premarket measures, such as secure design, vulnerability management, and update capabilities. Postmarket responsibilities include ongoing monitoring, timely software patches, and reporting any vulnerabilities. By integrating these practices, the goal is to ensure that protecting devices from cyber threats goes hand in hand with safeguarding patient safety.

Why AI is Necessary for Medical Device Security

Limitations of Conventional Cybersecurity Methods

Traditional cybersecurity methods struggle to keep pace with the complexities of today’s healthcare systems. Hospitals often manage hundreds of interconnected devices, applications, and platforms, and manual processes or signature-based tools simply aren’t equipped to handle this scale. Outdated software, in particular, creates vulnerabilities that attackers can exploit.

On top of that, conventional approaches fall short when dealing with threats unique to AI systems. Risks like data poisoning, model inversion, or prompt injection attacks [1] are outside the scope of traditional methods. Conventional tools also tend to overlook the human element, such as social engineering tactics that exploit human behavior. Because modern AI systems are complex and often opaque, they require more advanced monitoring than traditional tools can provide. Without a "secure by design" strategy, organizations often find themselves reacting to security breaches instead of preventing them.

These limitations explain why AI-based monitoring is needed to move medical device security beyond outdated, reactive approaches.

How AI Enables Proactive Risk Management

AI shifts medical device security from being reactive to proactive. By analyzing real-time data, AI can identify patterns and anomalies that signal potential cyberthreats. This ability to continuously monitor and predict risks is critical for safeguarding patient safety in today’s highly connected healthcare environments.

AI doesn’t just detect threats - it acts on them quickly and with precision. Routine security tasks, like monitoring network traffic or identifying outdated software, can be automated, freeing up cybersecurity teams to focus on more complex challenges that require human expertise.

The stakes are high. Delayed threat detection in healthcare can lead to devastating consequences. AI-driven security tools, by identifying attacks earlier, have the potential to prevent serious harm to patients.

Managing AI's Risks While Using Its Benefits

While AI enhances security, it also comes with its own set of risks. Attackers can exploit AI systems, turning them into targets or even using AI to launch more sophisticated attacks. To address these challenges, a balanced approach is crucial - one that maximizes AI’s strengths while maintaining strong human oversight.

Human involvement remains essential, especially for critical decisions. Risk management teams should implement configurable rules and review processes to ensure that AI tools support human judgment rather than replace it. Transparency is equally important. Healthcare organizations need clear details about AI’s communication interfaces, third-party software components, known vulnerabilities, and secure configuration practices. Without this level of transparency, even the most advanced AI tools can’t guarantee full protection.
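The idea of configurable rules that keep people in the loop can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the action names, the `Recommendation` fields, and the 0.9 confidence threshold are all hypothetical and do not come from any particular product.

```python
from dataclasses import dataclass

# Hypothetical action names, chosen only for illustration.
AUTO_ALLOWED_ACTIONS = {"log_only", "raise_ticket"}
REVIEW_REQUIRED_ACTIONS = {"isolate_device", "block_account"}


@dataclass
class Recommendation:
    device_id: str
    action: str           # what the AI tool proposes
    confidence: float     # model confidence in [0, 1]
    life_critical: bool   # does the device support life-critical care?


def decide(rec: Recommendation, min_confidence: float = 0.9) -> str:
    """Return 'auto', 'human_review', or 'reject' for a proposed action."""
    if rec.action not in AUTO_ALLOWED_ACTIONS | REVIEW_REQUIRED_ACTIONS:
        return "reject"  # unknown actions are never executed
    # Anything touching a life-critical device goes to a person first.
    if rec.life_critical or rec.action in REVIEW_REQUIRED_ACTIONS:
        return "human_review"
    # Low-confidence output is escalated rather than acted on.
    if rec.confidence < min_confidence:
        return "human_review"
    return "auto"


print(decide(Recommendation("pump-1", "isolate_device", 0.99, True)))
print(decide(Recommendation("kiosk-1", "raise_ticket", 0.95, False)))
```

The point of the design is that the rules live in plain, reviewable configuration, so a risk management team can tighten or loosen them without retraining any model.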

The ultimate goal isn’t to remove humans from the equation but to empower them. AI tools should enhance human decision-making by providing the speed, scale, and precision needed to protect patients in an increasingly interconnected healthcare system.

AI Capabilities for Medical Device Security

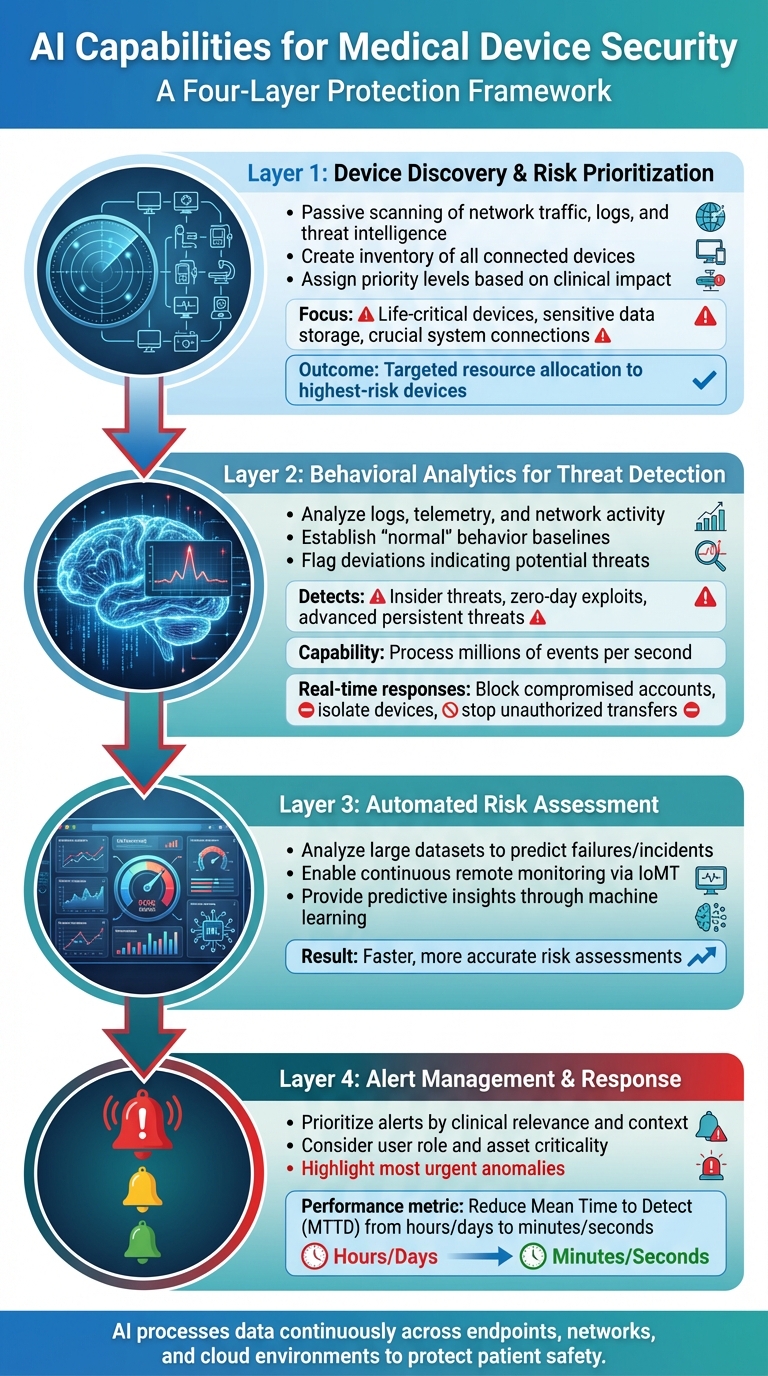

AI-Powered Medical Device Security: 4-Layer Protection Framework

Device Discovery and Risk Prioritization

AI tools play a key role in identifying and assessing vulnerabilities in healthcare networks. By passively monitoring network traffic and analyzing logs and threat intelligence, these tools build an inventory of connected devices and evaluate their risks [11][12]. Once all devices are mapped, AI assigns priority levels based on the potential clinical impact of a security breach. Devices that, if compromised, could endanger patient safety are flagged as top priorities.

This approach is essential in healthcare environments where hundreds or even thousands of devices operate simultaneously. Instead of treating every vulnerability equally, AI identifies the devices supporting life-critical functions, storing sensitive patient data, or connecting to crucial systems. This targeted prioritization ensures resources are directed where they’re needed most, reducing the likelihood of life-threatening device failures. This foundational step also prepares the system for advanced behavioral analysis.
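A rough sketch of this kind of prioritization is shown below. It assumes a simple scoring rule (a clinical-impact weight multiplied by the number of open vulnerabilities); the weights, field names, and sample inventory are hypothetical, and a real program would set them with clinical and biomedical engineering input.

```python
from dataclasses import dataclass

# Hypothetical weights for the three factors named in the text.
WEIGHTS = {"life_critical": 5, "stores_phi": 2, "connects_to_core_systems": 2}


@dataclass
class Device:
    name: str
    life_critical: bool
    stores_phi: bool
    connects_to_core_systems: bool
    open_vulnerabilities: int


def risk_score(d: Device) -> int:
    """Clinical-impact weight multiplied by the count of open vulnerabilities."""
    impact = 1
    if d.life_critical:
        impact += WEIGHTS["life_critical"]
    if d.stores_phi:
        impact += WEIGHTS["stores_phi"]
    if d.connects_to_core_systems:
        impact += WEIGHTS["connects_to_core_systems"]
    return impact * d.open_vulnerabilities


def prioritize(devices):
    """Highest-risk devices first."""
    return sorted(devices, key=risk_score, reverse=True)


inventory = [
    Device("infusion pump", True, False, True, 2),
    Device("badge printer", False, False, False, 6),
    Device("imaging workstation", False, True, True, 3),
]
for device in prioritize(inventory):
    print(device.name, risk_score(device))
```

With these sample values the infusion pump ranks first even though the badge printer has three times as many open vulnerabilities, which is the behavior the paragraph above describes.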

Behavioral Analytics for Threat Detection

AI-driven behavioral analytics takes security a step further by learning what "normal" device behavior looks like. By analyzing logs, telemetry, and network activity, AI establishes baselines and flags deviations that could indicate potential threats [13][15][16]. This method is particularly effective in detecting issues that traditional tools might overlook, such as insider threats, zero-day exploits, and advanced persistent threats [13][14][16]. For instance, it can identify anomalies like unexpected communications with unfamiliar domains or unusual spikes in network traffic [13][14].
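As a minimal illustration of the baseline-and-deviation idea, the sketch below uses a plain z-score test from Python's standard library. Production tools use far richer models; the traffic figures and the threshold of three standard deviations are assumptions made for the example.

```python
from statistics import mean, stdev


def build_baseline(samples):
    """Summarize normal behavior as a mean and standard deviation."""
    return mean(samples), stdev(samples)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical outbound traffic for one device, in kilobytes per minute.
normal_traffic = [120, 118, 125, 130, 122, 119, 127, 124]
baseline = build_baseline(normal_traffic)

print(is_anomalous(126, baseline))  # within the usual range
print(is_anomalous(900, baseline))  # a sudden spike
```

A single-metric test like this would miss many of the threats listed above; it is meant only to show what "learning normal and flagging deviations" means in practice.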

These systems are designed to process vast amounts of data - millions of events per second - and correlate signals across endpoints, networks, and cloud environments. This capability enables real-time automated responses, such as blocking compromised accounts, isolating devices, or stopping unauthorized data transfers [13][16]. Beyond detection, AI also simplifies the risk assessment process, reducing the burden on security teams.

Automated Risk Assessment and Alert Management

AI significantly speeds up risk assessments by analyzing large datasets to predict potential failures or security incidents [18]. Machine learning algorithms process this information, offering predictive insights that enhance security measures [18]. When paired with data from the Internet of Medical Things (IoMT), cloud-based AI systems enable continuous remote monitoring and ongoing assessments [18].

Additionally, AI addresses the challenge of alert fatigue by prioritizing notifications based on their clinical relevance and contextual factors, such as the user’s role or the criticality of the affected asset [13][14][16][17]. By highlighting the most urgent and impactful anomalies, AI allows security teams to act faster. In fact, AI-powered threat detection can cut the mean time to detect (MTTD) from hours - or even days - to just minutes or seconds [16]. This efficiency ensures a quicker and more effective response to potential threats.
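One way to picture context-aware alert prioritization is a small scoring function that combines detector severity with asset criticality and user role. The tiers, the bonus for privileged accounts, and the sample alerts below are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical criticality tiers for the affected asset.
ASSET_CRITICALITY = {"life_support": 3, "diagnostic": 2, "administrative": 1}


@dataclass
class Alert:
    alert_id: str
    asset_type: str
    severity: int          # 1 (low) to 5 (high), as reported by the detector
    privileged_user: bool  # was a privileged account involved?


def triage_score(a: Alert) -> int:
    """Scale severity by asset criticality and add weight for privileged users."""
    score = a.severity * ASSET_CRITICALITY.get(a.asset_type, 1)
    if a.privileged_user:
        score += 2
    return score


def top_alerts(alerts, limit=2):
    """Return the `limit` highest-scoring alerts for immediate review."""
    return sorted(alerts, key=triage_score, reverse=True)[:limit]


alerts = [
    Alert("A1", "administrative", 5, False),
    Alert("A2", "life_support", 3, False),
    Alert("A3", "diagnostic", 2, True),
    Alert("A4", "administrative", 1, False),
]
for alert in top_alerts(alerts):
    print(alert.alert_id, triage_score(alert))
```

Here a moderate alert on life-support equipment outranks a high-severity alert on an administrative system, so analysts see the clinically relevant item first.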


Governance and Operations for AI-Driven Device Security

Building Collaborative Governance Teams

For AI to be effectively governed in healthcare, teamwork across departments is a must. Start by appointing an executive sponsor for each AI initiative to ensure it aligns with both the organization’s goals and patient safety priorities. This requires bringing together key players like CISOs, CMIOs, clinical engineers, biomedical technicians, and patient safety officers into a cohesive governance structure.

To make this work, establish cross-departmental committees that tackle cybersecurity risks and report to a central oversight group. This setup ensures cybersecurity becomes a regular part of organizational discussions and encourages active input from clinicians and other stakeholders. Clearly defining roles and responsibilities for every stage of the AI lifecycle helps avoid gaps in oversight and ensures accountability when challenges arise. This unified approach creates a foundation for smoothly incorporating AI into both clinical and cybersecurity workflows.

Integrating AI into Clinical and Cyber Workflows

Rolling out AI-driven security tools requires careful planning to avoid disruptions to patient care. Regulatory guidelines now emphasize education, secure-by-design principles, and structured cyber operations for healthcare AI systems [5][3]. A key strategy is network segmentation, which isolates medical devices while allowing AI to monitor traffic patterns and device behaviors.
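To make the segmentation idea concrete, the following sketch checks observed traffic flows against an allow-list using Python's standard `ipaddress` module. The address ranges and the allow-list are hypothetical; real values would come from the organization's network design.

```python
import ipaddress

# Hypothetical segment and allow-list, for illustration only.
DEVICE_SEGMENT = ipaddress.ip_network("10.20.0.0/24")
ALLOWED_DESTINATIONS = [
    ipaddress.ip_network("10.20.0.0/24"),   # peers in the same segment
    ipaddress.ip_network("10.50.1.10/32"),  # e.g. a clinical data server
]


def violates_segmentation(src: str, dst: str) -> bool:
    """True if a device in the segment talks to a destination off the allow-list."""
    src_ip = ipaddress.ip_address(src)
    dst_ip = ipaddress.ip_address(dst)
    if src_ip not in DEVICE_SEGMENT:
        return False  # not a device this policy covers
    return not any(dst_ip in net for net in ALLOWED_DESTINATIONS)


print(violates_segmentation("10.20.0.15", "10.50.1.10"))   # allowed server
print(violates_segmentation("10.20.0.15", "203.0.113.7"))  # external address
```

A monitoring tool could feed flows that fail this check into the behavioral analysis described earlier, rather than blocking them outright, so that patient care is not interrupted by a false positive.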

Tools like Censinet RiskOps act as central hubs, directing critical AI risk findings to the appropriate teams for review and action. Think of it as an "air traffic control" system for AI governance, ensuring the right issues are handled by the right people at the right time. By embedding AI into these workflows, organizations not only strengthen their cybersecurity defenses but also enhance patient safety.

Ensuring Ongoing Monitoring and Compliance

Once AI systems are in place, continuous monitoring is essential to maintain security and meet compliance standards. Regulators now require ongoing post-market monitoring for AI-enabled devices [20][21]. This is crucial to address issues like data drift, concept drift, and model drift - real-world challenges that can affect device safety and performance [20][21]. Compliance demands proactive monitoring, transparent metrics, and predefined corrective actions to address deviations [21].
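Data drift can be tracked with simple distribution comparisons. The sketch below uses the population stability index (PSI), a widely used drift statistic, as one possible choice; the source does not prescribe a specific metric. The bin edges, sample values, and the 0.25 alert threshold are assumptions for illustration, and the bin edges are assumed to span all observed values.

```python
import math


def proportions(values, edges):
    """Share of values in each bin defined by consecutive edges."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            last = i == len(counts) - 1
            if edges[i] <= v < edges[i + 1] or (last and v == edges[-1]):
                counts[i] += 1
                break
    total = len(values)
    # A small floor avoids log(0) for empty bins.
    return [max(c / total, 1e-6) for c in counts]


def psi(expected, actual, edges):
    """Population stability index between a reference and a recent sample."""
    e = proportions(expected, edges)
    a = proportions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical model input values at approval time and in recent production.
edges = [0.0, 0.25, 0.5, 0.75, 1.0]
reference = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
recent = [0.7, 0.8, 0.8, 0.9, 0.9, 0.9, 0.6, 0.95]

DRIFT_THRESHOLD = 0.25  # hypothetical trigger for a predefined corrective action
if psi(reference, recent, edges) > DRIFT_THRESHOLD:
    print("drift detected: open a review")
```

Tying the threshold to a predefined action, such as a model review or rollback, is what turns a metric like this into the kind of proactive monitoring regulators expect.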

AI-powered dashboards play a critical role by aggregating real-time data from connected devices. These dashboards provide insights into risk levels, compliance status, and clinical safety metrics, enabling teams to ensure AI models stay aligned with their original purpose. They also help maintain the balance of cost, benefit, and risk established during initial approvals [19]. Keeping an up-to-date inventory of all AI systems is key to understanding an organization’s risk exposure and demonstrating compliance during audits. This reinforces the importance of strong AI governance frameworks to safeguard patient outcomes.

Conclusion

The link between cybersecurity and patient safety has grown more crucial than ever. With healthcare organizations increasingly relying on connected medical devices, they must adopt AI-driven strategies to safeguard patient outcomes and meet strict regulatory standards. The FDA’s updated cybersecurity guidance from June 2025 underscores this point: the quality and security of medical devices are now inseparable, especially as AI becomes a core component of healthcare technologies [22].

Cyberattacks present serious threats to patient care. Traditional methods of cybersecurity fall short in addressing AI-specific challenges like data poisoning, performance drift, and algorithmic biases [1]. These vulnerabilities can lead to dangerous medical errors, eroded patient trust, exposure of sensitive health information, and compromised clinical data. To counter these risks, healthcare organizations need a proactive, flexible security approach that anticipates and mitigates threats before they impact patient care [4][23]. AI-powered tools are uniquely equipped to provide the broad visibility required to identify weaknesses across the software and AI supply chains of medical devices, ensuring vulnerabilities are addressed early [22].

Censinet RiskOps offers a way forward by centralizing risk management processes. It ensures that AI-related findings are routed to the right teams for evaluation and action while maintaining essential human oversight. By blending AI’s analytical capabilities with governance, continuous monitoring, and clear metrics, healthcare organizations can enhance their cybersecurity defenses without compromising patient safety. This integrated approach reinforces the critical bond between robust cyber defenses and the well-being of patients.

As the healthcare landscape grows more connected, the need for innovative solutions paired with vigilant oversight becomes undeniable. Organizations that commit to securing AI-driven medical devices today will not only better protect their patients but also maintain compliance and strengthen trust in this evolving digital era.

FAQs

How does AI enhance the security of medical devices compared to traditional approaches?

AI is transforming medical device security by offering real-time threat detection, automated responses, and continuous monitoring - capabilities that go beyond what traditional methods can provide. It excels at processing massive amounts of data quickly, identifying unusual patterns or vulnerabilities, and stopping potential breaches before they happen.

What makes AI even more effective is its ability to adapt to changing threats. Models can be retrained or updated as new attack patterns emerge, which supports a more proactive defense against cyberattacks. This not only helps keep sensitive patient data secure but also reduces the chances of device malfunctions caused by security breaches, supporting better clinical safety. By integrating AI into their systems, healthcare organizations can strengthen their defenses and protect both their operations and their patients in today’s increasingly connected world.

What risks should healthcare organizations consider when using AI to secure medical devices?

AI brings incredible potential to enhance the security of medical devices, but it’s not without its challenges. One major concern is data breaches, where sensitive patient details could be exposed. Another issue is algorithmic opacity - essentially, the "black box" problem - where it’s hard to understand exactly how AI systems are making their decisions. This lack of clarity can raise trust and safety concerns.

AI systems are also susceptible to cyberattacks, which could include tampering with training datasets, adversarial manipulation, or even malicious attempts to steal source code. Beyond these technical risks, social engineering attacks against the people who build and operate AI systems could disrupt device functionality or, worse, jeopardize patient safety.

To address these vulnerabilities, it’s essential to adopt strong security protocols, actively monitor AI behavior, and prioritize transparency and accountability in how AI solutions are developed and deployed. These steps can help mitigate risks and build trust in AI-driven medical technologies.

How do new regulations shape the use of AI in securing medical devices?

The Consolidated Appropriations Act, 2023 (often called the 2023 omnibus) added Section 524B to the FD&C Act and introduced new requirements for medical device manufacturers, emphasizing the need for strong cybersecurity plans. Companies must now submit detailed risk management strategies, address vulnerabilities during premarket submissions, and ensure continuous postmarket monitoring.

These requirements fall on manufacturers, but they also raise expectations for the healthcare organizations that deploy the devices, which must still meet their own obligations under laws like HIPAA. Many are incorporating AI-driven solutions to keep up. The focus is on improving transparency and implementing proactive security measures. By adhering to these regulations, healthcare providers can strengthen device security while safeguarding patient safety in a more interconnected world.