FDA AI/ML Guidance and Vendor Risk: What Healthcare Organizations Need to Know

Post Summary

AI tools in healthcare are under stricter FDA regulation, and your organization is responsible for ensuring vendor compliance. Here's what you need to know:

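- FDA regulates AI/ML tools as medical devices when they meet the device definition. Tools that inform diagnosis, treatment, or patient safety generally need marketing authorization through a premarket pathway such as 510(k) clearance, De Novo classification, or Premarket Approval (PMA).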

- Vendor compliance is your responsibility. Using non-compliant tools can lead to patient safety risks, operational disruptions, and legal penalties.

- Key FDA guidelines to evaluate vendors:

  - Good Machine Learning Practice (GMLP): Focus on data quality, algorithm transparency, and bias reduction.

  - Predetermined Change Control Plans (PCCPs): Ensure vendors have plans for safe algorithm updates.

  - Post-market performance monitoring: Vendors must track and address issues after deployment.

- Security is critical. Vendors handling patient data must comply with HIPAA, including BAAs and safeguards against AI-specific risks like data poisoning.

- AI evolves - monitor updates. Vendors need robust systems for managing model drift and validating changes.

Use a structured framework to evaluate AI/ML vendors: Confirm FDA compliance, ensure strong security measures, and demand clear update management processes. Tools like Censinet RiskOps™ can help centralize and simplify vendor risk management.

Bottom line: Thorough vendor evaluations and continuous oversight are essential to protect patients and stay compliant. AI in healthcare is powerful but requires careful management.

FDA Requirements for AI/ML Technologies

The FDA has issued guidance for AI/ML technologies intended to ensure safety and effectiveness while still supporting innovation. These requirements are the baseline for assessing vendor compliance, which directly affects your organization's risk management, and they frame the specific assessment criteria discussed in the sections that follow.

Good Machine Learning Practice (GMLP)

Good Machine Learning Practice (GMLP) consists of 10 guiding principles that the FDA, Health Canada, and the UK's Medicines and Healthcare products Regulatory Agency (MHRA) published jointly in October 2021[6][3]. The principles aim to promote AI/ML technologies that are safe, effective, and of high quality. When evaluating vendors, healthcare organizations should focus on several key areas:

- Data Integrity: Ensure training and test data are representative of the intended patient population and that known sources of bias have been identified and mitigated.

- Software Practices: Confirm adherence to sound development methodologies.

- Model Design: Verify alignment with the tool’s intended use.

- Performance Monitoring: Assess processes for ongoing evaluation and updates.

Ask vendors for detailed documentation that illustrates how they’ve implemented these principles. Look for evidence such as diverse expertise in development, use of independent validation datasets, and clearly defined testing protocols. This level of transparency helps gauge a vendor’s commitment to quality. Additionally, it’s essential to understand how vendors handle dynamic changes in AI/ML systems, as these technologies evolve over time.
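To make the four focus areas concrete during a review, the following sketch tracks which pieces of GMLP evidence a vendor has supplied. It is a minimal illustration, assuming vendor documents have been tagged with simple string labels; the dictionary `GMLP_EVIDENCE`, the function `missing_gmlp_evidence`, and every evidence label are hypothetical and are not defined by the GMLP principles themselves.

```python
# Hypothetical GMLP evidence checklist. The four area keys mirror the focus
# areas listed above; the evidence labels are illustrative assumptions, not
# items defined by the GMLP principles.
GMLP_EVIDENCE: dict[str, list[str]] = {
    "data_integrity": [
        "training data representativeness report",
        "bias analysis",
    ],
    "software_practices": ["development methodology documentation"],
    "model_design": [
        "intended-use statement",
        "independent validation dataset results",
    ],
    "performance_monitoring": [
        "testing protocol",
        "post-deployment monitoring plan",
    ],
}


def missing_gmlp_evidence(submitted: set[str]) -> dict[str, list[str]]:
    """Return, per focus area, the evidence items the vendor has not supplied."""
    gaps: dict[str, list[str]] = {}
    for area, items in GMLP_EVIDENCE.items():
        missing = [item for item in items if item not in submitted]
        if missing:  # only report areas that actually have gaps
            gaps[area] = missing
    return gaps


if __name__ == "__main__":
    vendor_docs = {"bias analysis", "intended-use statement", "testing protocol"}
    for area, items in missing_gmlp_evidence(vendor_docs).items():
        print(f"{area}: {', '.join(items)}")
```

Keeping the checklist as data rather than logic lets compliance staff adjust evidence items without changing the review code.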

Predetermined Change Control Plans (PCCPs)

Predetermined Change Control Plans (PCCPs) are plans, reviewed and authorized by the FDA as part of a marketing submission, that detail how a manufacturer will modify its AI/ML device over time without a new FDA submission for each change the plan covers[3][7]. These plans typically include:

- A description of anticipated changes

- A protocol for implementing modifications

- An impact assessment

- Validation processes for the changes

PCCPs offer a roadmap for how AI systems will adapt and evolve. Unlike static devices, AI/ML tools can update algorithms as new data becomes available. When assessing vendors, review their PCCP documentation to understand their approach to managing these updates. A well-thought-out PCCP demonstrates that the vendor has considered the entire lifecycle of their product and has measures in place to maintain safety and effectiveness as the technology evolves[3].
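One way to apply this review is to record each of the four PCCP components listed above and flag any the vendor left blank. This is a sketch under the assumption that each component is captured as free text or a document reference. `PCCPReview` and its field names are hypothetical, and a completeness check like this only shows that a section exists, not that its content is adequate.

```python
from dataclasses import dataclass, fields


@dataclass
class PCCPReview:
    """Hypothetical record of a vendor's PCCP documentation.

    The four fields mirror the components listed above; each holds the
    vendor's text (or a document reference) for that component.
    """

    description_of_changes: str = ""
    modification_protocol: str = ""
    impact_assessment: str = ""
    validation_process: str = ""

    def missing_sections(self) -> list[str]:
        # A section counts as missing if it is empty or whitespace only.
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


if __name__ == "__main__":
    review = PCCPReview(
        description_of_changes="Periodic retraining on additional site data",
        modification_protocol="Retraining and release procedure, document ref TBD",
    )
    print(review.missing_sections())  # ['impact_assessment', 'validation_process']
```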

Post-Market Performance Monitoring

Initial compliance is just the beginning; ongoing monitoring is equally critical for catching emerging risks. The FDA's approach to AI/ML tools pairs premarket evaluation with post-market oversight, expecting manufacturers to keep tracking performance after deployment so the tools remain safe and effective in real-world environments[3].

For healthcare organizations, maintaining a relationship with vendors that prioritize post-market monitoring is essential. During vendor evaluations, investigate their surveillance programs, the frequency of performance reviews, and their criteria for updates. Vendors should have clear processes for tracking system performance in production and addressing any issues that arise. A lack of robust monitoring practices can lead to undetected performance problems, posing significant compliance risks.

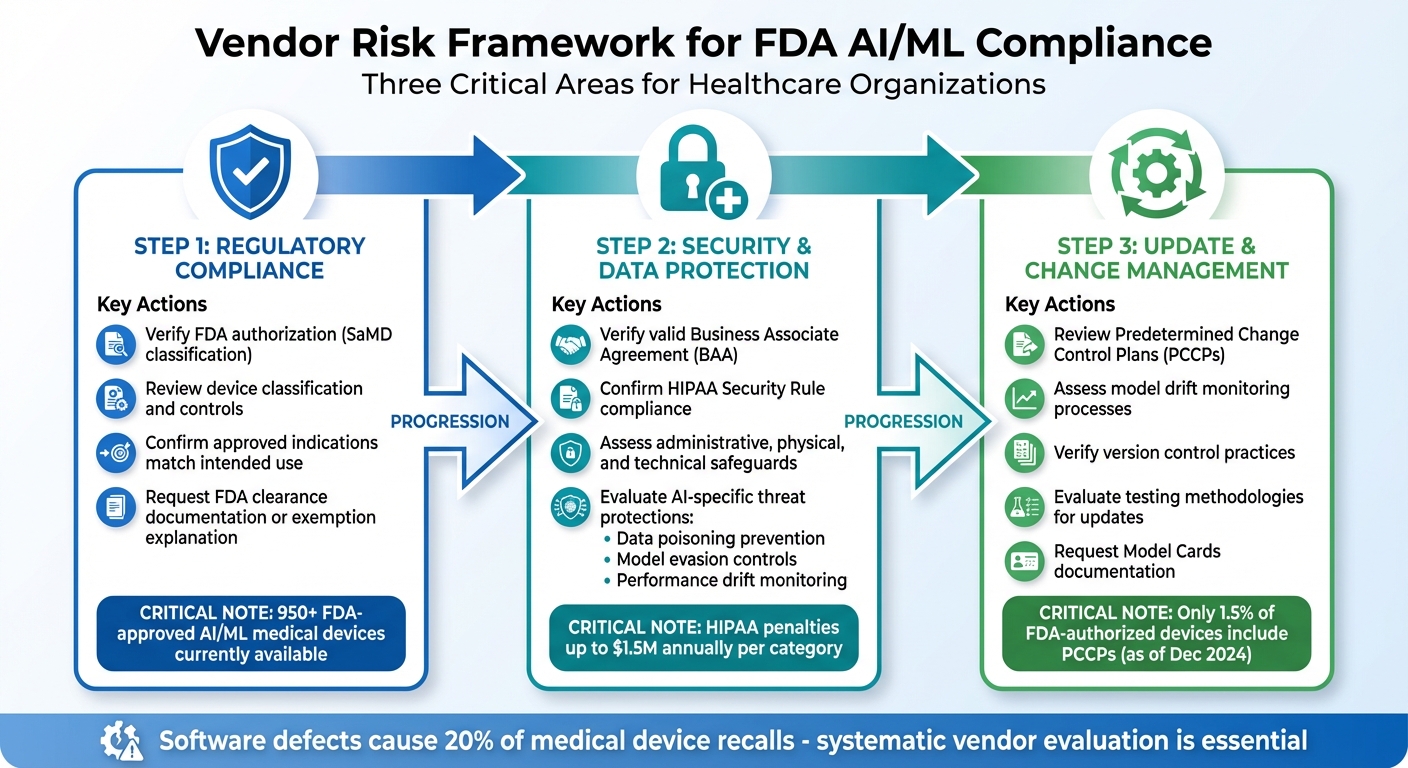

Creating a Vendor Risk Framework for FDA AI/ML Compliance

[Figure: Three-Step Framework for Evaluating FDA AI/ML Vendor Compliance in Healthcare]

Building on the FDA's guidelines, this framework helps you evaluate vendor compliance thoroughly. A vendor risk framework that addresses regulatory compliance, security, and change management helps you avoid pitfalls such as product recalls, liability issues, penalties, or reputational harm[1]. With more than 950 FDA-authorized AI/ML medical devices on the market[1], a systematic approach to assessing these tools is essential.

Your framework should focus on three main areas: confirming regulatory compliance, evaluating security measures, and understanding how vendors manage updates. Each of these areas should include clear documentation requirements and consistent evaluation criteria, ensuring patient safety and adherence to FDA standards.

Checking Vendor Regulatory Status

The first step is to confirm that the vendor complies with FDA requirements before integrating its tools into your workflows. Start by determining whether the AI/ML tool requires FDA authorization at all. The FDA regulates AI as Software as a Medical Device (SaMD) when it is intended for the diagnosis, cure, mitigation, treatment, or prevention of disease[1]. Ask vendors for documentation of FDA clearance or authorization, or for a written legal rationale if they consider the tool exempt or outside the device definition[5].

Make sure the vendor's regulatory pathway matches the tool's complexity and risk. Review the device classification and its regulatory controls, and verify that the authorized indications for use match how you intend to use the tool. Using a tool outside its authorized indications can create compliance exposure for your organization even when the vendor itself holds a valid clearance. Once regulatory compliance is confirmed, the next focus is security and data protection.
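The regulatory checks in this step can be expressed as a short decision routine. This is a hedged sketch, not a compliance tool: `RegulatoryStatus` and `regulatory_findings` are hypothetical names, and matching intended use by exact string comparison is a deliberate simplification of what is really a regulatory and clinical judgment.

```python
from dataclasses import dataclass, field
from typing import Optional

# Premarket pathways named in this article.
KNOWN_PATHWAYS = {"510(k)", "De Novo", "PMA"}


@dataclass
class RegulatoryStatus:
    """Hypothetical summary of what a vendor has documented."""

    pathway: Optional[str]  # None if the vendor says no authorization is needed
    exemption_rationale: str = ""  # vendor's written explanation when pathway is None
    cleared_indications: set[str] = field(default_factory=set)


def regulatory_findings(status: RegulatoryStatus, intended_use: str) -> list[str]:
    """List issues to resolve before deployment (an empty list means none found).

    Exact string matching of intended use is a simplification; in practice
    this comparison needs regulatory and clinical review.
    """
    findings: list[str] = []
    if status.pathway is None:
        if not status.exemption_rationale.strip():
            findings.append("no FDA authorization and no documented exemption rationale")
    elif status.pathway not in KNOWN_PATHWAYS:
        findings.append(f"unrecognized regulatory pathway: {status.pathway}")
    elif intended_use not in status.cleared_indications:
        findings.append("intended use falls outside the authorized indications for use")
    return findings


if __name__ == "__main__":
    status = RegulatoryStatus(
        pathway="510(k)",
        cleared_indications={"triage of adult chest X-rays"},  # hypothetical indication
    )
    print(regulatory_findings(status, "triage of pediatric chest X-rays"))
```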

Reviewing Security and Data Protection

This step ensures compliance with HIPAA regulations and addresses AI-specific security risks. Vendors handling Protected Health Information (PHI) must have a valid Business Associate Agreement (BAA) that outlines access restrictions, security safeguards, breach notification protocols, and audit rights[1]. Without a properly executed BAA, sharing PHI with the vendor is not legally permissible.

Examine the vendor's adherence to the HIPAA Security Rule, which includes administrative, physical, and technical safeguards[1]. For AI/ML systems, pay close attention to protections against threats like data poisoning, model evasion, and performance drift[4]. Request detailed documentation of their vulnerability management processes, secure development practices, and controls to prevent data leakage. Since AI systems introduce unique risks that traditional software evaluations might overlook, your assessment criteria should address these specific challenges. With regulatory and security checks completed, the final step is to manage the ongoing evolution of AI/ML systems.
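The BAA elements and AI-specific threats named in this step can be turned into a repeatable gap list. The sketch below assumes each vendor's documentation has already been reduced to string labels. The constant names and `security_gaps` are hypothetical, and a missing label means only that evidence was not provided, not that a control is absent.

```python
# Hypothetical security-review checklist built from the items named above.
# Representing evidence as plain strings is an assumption for illustration.
REQUIRED_BAA_ELEMENTS = {
    "access restrictions",
    "security safeguards",
    "breach notification",
    "audit rights",
}
AI_THREATS = {"data poisoning", "model evasion", "performance drift"}


def security_gaps(
    baa_executed: bool, baa_elements: set[str], documented_controls: set[str]
) -> list[str]:
    """Return human-readable gaps; an empty list means nothing was flagged."""
    gaps: list[str] = []
    if not baa_executed:
        gaps.append("no executed BAA: do not share PHI")
    # sorted() keeps the output order stable for reports and audits.
    gaps += [f"BAA missing: {e}" for e in sorted(REQUIRED_BAA_ELEMENTS - baa_elements)]
    gaps += [f"no documented control for: {t}" for t in sorted(AI_THREATS - documented_controls)]
    return gaps


if __name__ == "__main__":
    for gap in security_gaps(
        baa_executed=True,
        baa_elements={"access restrictions", "security safeguards", "audit rights"},
        documented_controls={"data poisoning"},
    ):
        print(gap)
```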

Managing AI/ML Updates and Changes

AI/ML systems are dynamic and need careful oversight as they evolve. Evaluate how vendors handle model drift, particularly in deep learning models[8][4]. Request documentation of their drift monitoring processes, the thresholds that trigger corrective action, and their response timelines. As of December 2024, only 1.5% of FDA-authorized AI/ML devices included a Predetermined Change Control Plan (PCCP) in their public authorization summaries, and 73.3% of those were authorized after the FDA issued its draft PCCP guidance in April 2023[8]. This suggests that many vendors have not yet formalized their processes for managing changes.
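Drift thresholds are easier to discuss with a vendor when there is a concrete metric behind them. As an illustrative assumption, the sketch uses the Population Stability Index (PSI), a common way to compare a baseline input distribution with production data. PSI sums, over histogram bins, (actual share minus expected share) times ln(actual share / expected share). The text above does not prescribe PSI. The 0.1 and 0.25 cut-offs are an industry rule of thumb rather than FDA guidance, and the function names and sample counts are hypothetical.

```python
import math


def population_stability_index(
    expected_counts: list[int], actual_counts: list[int], eps: float = 1e-6
) -> float:
    """PSI between a baseline histogram and a production histogram.

    Both inputs are counts over the same bins of one model input (or score).
    `eps` floors empty-bin proportions so the logarithm stays defined.
    """
    if len(expected_counts) != len(actual_counts):
        raise ValueError("histograms must use the same bins")
    e_total, a_total = sum(expected_counts), sum(actual_counts)
    psi = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_pct = max(e / e_total, eps)
        a_pct = max(a / a_total, eps)
        psi += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return psi


def drift_action(psi: float, investigate_at: float = 0.1, act_at: float = 0.25) -> str:
    """Map a PSI value to a response tier.

    0.1 and 0.25 are a common rule of thumb, not FDA thresholds;
    substitute the limits the vendor documents.
    """
    if psi >= act_at:
        return "corrective action"
    if psi >= investigate_at:
        return "investigate"
    return "stable"


if __name__ == "__main__":
    baseline = [100, 200, 400, 200, 100]   # validation-time distribution
    production = [30, 120, 350, 300, 200]  # recent production distribution
    psi = population_stability_index(baseline, production)
    print(f"PSI = {psi:.3f} -> {drift_action(psi)}")  # PSI = 0.242 -> investigate
```

Asking vendors which metric, bins, and thresholds they use, and what happens at each tier, maps directly onto the documentation requests above.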

Ask vendors to provide evidence of their version control practices, testing methodologies for updates, and risk analysis procedures[1][8][4]. They should also supply structured reporting tools like Model Cards, which detail algorithm specifications, training data sources, and planned update pathways[1][8][4]. These measures ensure that your organization stays compliant and prepared for the complexities of AI/ML system updates.
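A Model Card can be kept as structured data so that missing fields and version changes are caught automatically. The sketch below is a minimal, hypothetical schema restricted to the fields this article mentions. `ModelCard`, `needs_rereview`, and the example values are illustrative assumptions, and re-reviewing on every version change is one possible policy, not a regulatory requirement.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Minimal, hypothetical Model Card schema using the fields named above.

    Published Model Card templates contain more sections (for example,
    intended use, metrics, and caveats); this keeps only what the text lists.
    """

    model_name: str
    version: str
    algorithm_specification: str
    training_data_sources: list[str] = field(default_factory=list)
    planned_update_pathway: str = ""  # e.g. a reference to the vendor's PCCP

    def missing_fields(self) -> list[str]:
        # Empty strings and empty lists are treated as "not provided".
        return [name for name, value in asdict(self).items() if not value]


def needs_rereview(card: ModelCard, last_reviewed_version: str) -> bool:
    """Any version change triggers a fresh review under this simple policy."""
    return card.version != last_reviewed_version


if __name__ == "__main__":
    card = ModelCard(
        model_name="example-triage-model",  # hypothetical product
        version="2.1.0",
        algorithm_specification="Convolutional neural network, single-label output",
        training_data_sources=["Site A retrospective images 2018-2022"],
    )
    print(json.dumps(asdict(card), indent=2))
    print("Missing:", card.missing_fields())           # Missing: ['planned_update_pathway']
    print("Re-review:", needs_rereview(card, "2.0.3"))  # Re-review: True
```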


Managing AI/ML Vendor Risk with Censinet

Censinet RiskOps™ brings a practical solution to the table for healthcare organizations aiming to manage vendor risks tied to FDA regulations. It tackles key challenges like regulatory compliance, cybersecurity, and change control, all while simplifying vendor oversight. By centralizing and standardizing compliance with FDA guidelines, this platform helps streamline the often complex process of managing AI/ML vendor risks.

Automating Vendor Reviews

Censinet RiskOps™ takes the hassle out of vendor reviews by automating the collection and organization of essential compliance and security documents. It integrates structured workflows with automated processes, ensuring reviews are efficient while still allowing for human oversight during critical risk assessments.

Coordinating Across Teams

Effective AI/ML oversight requires input from multiple departments, including risk management, compliance, clinical, and IT teams. RiskOps™ ensures seamless collaboration by centralizing vendor risk data, enabling unified evaluations across teams. This shared access to accurate and relevant data ensures that compliance and cybersecurity standards are consistently upheld throughout the organization.

Tracking AI/ML Risks in One Place

With a centralized dashboard, Censinet RiskOps™ simplifies the task of monitoring AI policies, vendor compliance, and risk assessments. This tool helps organizations pinpoint compliance gaps, prioritize remediation efforts, and stay on top of key risk indicators. By doing so, it equips healthcare organizations to confidently handle internal reviews and regulatory audits with greater efficiency and preparedness.

Conclusion

Effectively managing AI and machine learning (ML) vendor risk is crucial to safeguarding patient safety and ensuring regulatory compliance. The figures show why: the FDA has authorized more than 1,000 AI-enabled medical devices[9], and the number of AI-enabled devices grew 83% between 2015 and 2020[2]. With HIPAA penalties reaching $1.5 million per year for each violation category[1], and software defects responsible for 20% of medical device recalls[5], the stakes are high.

To address these risks, organizations need a structured framework. This begins with adhering to key FDA requirements, such as Good Machine Learning Practice (GMLP), Predetermined Change Control Plans (PCCPs), and rigorous post-market performance monitoring. Healthcare providers should confirm vendor regulatory compliance, verify FDA clearance for specific use cases, and establish strong security protocols. Adopting a Total Product Lifecycle (TPLC) approach ensures compliance doesn't stop at initial approval but continues through ongoing monitoring and updates[3][4].

AI/ML compliance should be treated as a core engineering principle, given its direct link to patient safety[1]. This involves setting up clear processes for reviewing vendor documentation, fostering collaboration across risk management, compliance, clinical, and IT teams, and maintaining oversight of updates and changes to AI/ML systems. Tools like Censinet RiskOps™ help by centralizing vendor risk data, automating compliance reviews, and providing a unified dashboard to monitor AI policies and risks. Such solutions enable healthcare organizations to meet FDA requirements while maintaining the vigilance needed to protect patients.

As the healthcare AI landscape evolves, the basics remain unchanged: thorough vendor evaluations, continuous monitoring, and strong cross-functional collaboration are non-negotiable. By committing to these principles, healthcare organizations can ensure that AI/ML technologies deliver on their promise of innovation while staying safe and compliant. This ongoing commitment to evaluation and oversight is what keeps cutting-edge AI solutions both effective and trustworthy.

FAQs

What are the FDA's main requirements for AI/ML tools in healthcare?

The FDA sets requirements for AI and machine learning tools that qualify as medical devices to ensure they are safe and effective. Depending on the product's classification, a tool must go through a premarket review pathway such as 510(k), De Novo, or PMA. FDA guidance also calls for clear labeling that identifies the use of AI and explains plainly how the tool achieves its intended purpose.

For tools that use adaptive algorithms, the FDA emphasizes the importance of ongoing performance monitoring throughout the product's lifecycle. This ensures that the tool consistently meets regulatory and safety standards over time. These guidelines provide healthcare organizations with the confidence to adopt AI/ML technologies while staying compliant and reducing potential risks.

How can healthcare organizations confirm that vendors comply with FDA AI/ML guidelines?

Healthcare organizations aiming to ensure vendor compliance with FDA AI/ML guidelines need a well-structured evaluation process. Start by examining whether the vendor meets FDA premarket requirements, follows cybersecurity standards, and adheres to lifecycle management protocols. Vendors should supply detailed documentation covering model development, validation, risk assessments, and change control strategies, including Predetermined Change Control Plans (PCCPs).

Ongoing audits and performance monitoring play a key role in maintaining compliance. Organizations should also insist that vendors align with FDA's Quality System Regulation (QSR), which FDA has amended into the Quality Management System Regulation (QMSR) effective February 2, 2026, and should write these compliance expectations into their contracts. Risk assessment tools and checklists aligned with FDA recommendations can simplify the process and help address potential risks more effectively.

What risks do healthcare organizations face when using AI/ML tools that don’t comply with FDA standards?

Using AI and machine learning tools that don't align with FDA compliance standards can have serious repercussions for healthcare organizations. These issues can range from regulatory rejections and product recalls to legal troubles - each capable of disrupting operations and tarnishing your organization's reputation.

On top of that, non-compliant tools often come with elevated cybersecurity risks, making them more susceptible to data breaches or malicious attacks. Such vulnerabilities can expose sensitive patient information, endanger patient safety, and weaken the trust between healthcare providers and their patients. Compliance isn't just about adhering to regulations - it's about safeguarding your organization and the individuals who depend on it.
