Federated AI Risk: Managing Machine Learning Across Distributed Systems

Post Summary

Federated AI is changing how healthcare organizations handle machine learning by keeping sensitive data local and sharing only model updates. This approach reduces privacy risks, supports compliance with regulations like HIPAA, and allows collaborative advancements in areas like cancer detection and heart disease prediction. However, it introduces risks of its own, including model poisoning, privacy breaches, and third-party vulnerabilities.

Key Points:

- Privacy Risks: Adversaries can exploit model updates to reverse-engineer sensitive data.

- Data Integrity Issues: Malicious inputs can corrupt models, impacting patient care.

- Third-Party Risks: External vendors may introduce security gaps.

Solutions include:

- Differential Privacy: Adds noise to model updates to protect data.

- Encryption: Ensures secure computation on encrypted data.

- Secure Aggregation: Combines model parameters without exposing individual contributions.

Governance frameworks and tools like Censinet RiskOps help healthcare organizations manage these risks effectively, ensuring that federated AI systems remain secure and compliant.

Key Risks in Federated AI Systems

While federated AI offers privacy benefits by keeping data localized, it also brings a distinct set of risks. The distributed setup of federated learning introduces vulnerabilities that don't exist in centralized systems [3][4]. These risks can directly impact patient safety, compromise privacy, and affect clinical decision-making [3][4][5].

For healthcare IT leaders, understanding these risks is critical. Medical devices and federated identity management systems are highly interconnected, which adds layers of complexity. Without proper safeguards, these systems can become targets for exploitation [3][4]. Below, we break down the primary risks associated with federated AI systems.

Privacy Breaches and Data Leakage

Privacy-related threats are among the most pressing concerns. Even though raw data stays local, adversaries can still extract sensitive information from federated AI systems. One major threat is model inversion attacks, where attackers reverse-engineer the model updates sent from local nodes to the central server in order to reconstruct the data behind them. This process can expose private patient information encoded in the mathematical parameters [1].
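To make the leakage concrete, here is a minimal sketch under simplifying assumptions: a linear model with squared-error loss, and an update computed from a single record. The function names and the patient values are hypothetical, and real attacks on deep networks are considerably more involved. In this toy setting the weight gradient is just the record scaled by the residual, so anyone who sees the update can divide it out.

```python
def local_gradient(weights, bias, features, label):
    """Gradient of 0.5 * (w.x + b - y)^2 for a single record."""
    residual = sum(w * x for w, x in zip(weights, features)) + bias - label
    grad_w = [residual * x for x in features]  # d(loss)/d(weights)
    grad_b = residual                          # d(loss)/d(bias)
    return grad_w, grad_b


def reconstruct_features(grad_w, grad_b):
    """Recover the record from the shared gradient, since grad_w = grad_b * x."""
    if grad_b == 0:
        raise ValueError("zero residual: the gradient is all zeros")
    return [g / grad_b for g in grad_w]


# Hypothetical record: age, systolic blood pressure, cholesterol
patient = [63.0, 145.0, 233.0]
grad_w, grad_b = local_gradient([0.01, 0.02, -0.01], 0.5, patient, 1.0)

# An observer of the update alone recovers the record (up to float rounding)
recovered = reconstruct_features(grad_w, grad_b)
```

Updates computed over larger batches blur individual records, but they do not by themselves guarantee that nothing can be recovered.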

The risks grow when AI vendors use patient data for purposes beyond the agreed scope, potentially violating privacy laws such as HIPAA [6]. Breaches like these can erode patient trust and leave healthcare organizations facing both legal and financial fallout.

Data Integrity and Model Poisoning Attacks

Ensuring the reliability of federated AI models is another significant challenge. These models depend on the quality of the data they learn from, which makes them vulnerable to model poisoning attacks. In these attacks, malicious actors inject biased or corrupted data at local nodes, or tamper directly with the updates those nodes send, which then degrades the global model's accuracy [3][4][5].

Unlike centralized systems, where training data can be directly audited, federated setups make it harder to detect when a single node contributes harmful updates. The consequences can be severe, including misclassified medical images or incorrect treatment recommendations [3][4][5].
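The effect of a single harmful node can be illustrated with a small sketch. It compares plain averaging with a coordinate-wise median, which is one commonly discussed robust aggregation rule. The median is an assumption introduced here for illustration, not a technique named by the cited sources, and the update values are made up.

```python
from statistics import mean, median


def aggregate(updates, reducer):
    """Combine per-site updates coordinate by coordinate using `reducer`."""
    return [reducer(coords) for coords in zip(*updates)]


# Four honest sites send similar updates; a fifth sends an extreme one
honest = [[0.10, -0.20], [0.12, -0.18], [0.09, -0.22], [0.11, -0.21]]
poisoned = honest + [[25.0, 30.0]]

mean_update = aggregate(poisoned, mean)      # dragged far from the honest values
median_update = aggregate(poisoned, median)  # stays at [0.11, -0.2]
```

A median tolerates only a minority of outlying sites and does not stop subtle, well-disguised poisoning, so it complements monitoring and validation rather than replacing them.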

Third-Party and Network Security Vulnerabilities

Collaborations with external vendors often bring additional risks. For example, the training data used by third-party AI applications might violate intellectual property laws or replicate protected processes, creating legal liabilities for healthcare providers [1][6]. Additionally, unmonitored vulnerabilities in vendor systems can expose healthcare organizations to network security threats.

Addressing these challenges requires ongoing evaluation and the adoption of strong security measures specifically designed for the distributed nature of federated AI systems in healthcare. By staying vigilant, organizations can better protect both patient data and the integrity of their clinical tools.

Privacy Protection Techniques for Federated AI

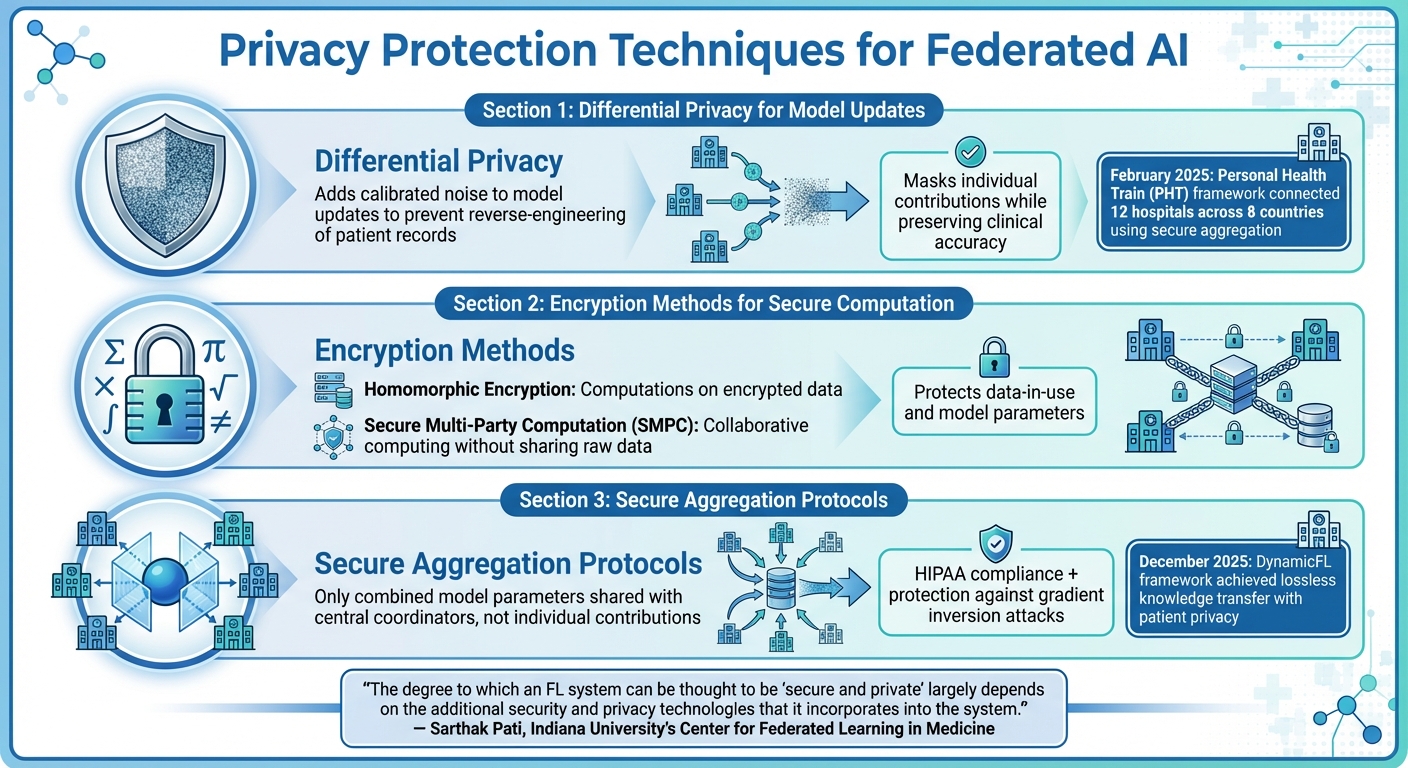

Three Key Privacy Protection Techniques for Federated AI in Healthcare

Safeguarding patient privacy in federated AI systems requires more than just standard security measures. Healthcare organizations need to integrate privacy protections directly into their machine learning workflows to ensure sensitive data remains secure without sacrificing model effectiveness [7]. Here are some key techniques that demonstrate how privacy can be maintained in federated AI systems.

Differential Privacy for Model Updates

Differential privacy plays a crucial role in preventing privacy breaches. By adding carefully calibrated noise to model updates before they are transmitted, it makes it far harder for adversaries to reverse-engineer individual patient records. The noise is scaled to mask any single patient's contribution while preserving as much of the model's clinical accuracy as possible.

A related deployment shows how such safeguards are applied in practice. In February 2025, the Personal Health Train (PHT) framework, powered by the open-source Vantage6 software, connected 12 hospitals across 8 countries and 4 continents. This initiative enabled federated deep learning on lung cancer CT scans using a secure aggregation server. The server performed model averaging in a trusted environment, ensuring that patient data never left the hospital premises during training [9].

"The degree to which an FL system can be thought to be 'secure and private' largely depends on the additional security and privacy technologies that it incorporates into the system."

- Sarthak Pati and colleagues at Indiana University's Center for Federated Learning in Medicine [3].
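The noise-adding step described in this section is often implemented as "clip, then add Gaussian noise". The sketch below assumes that pattern. The names `max_norm` and `noise_multiplier` are illustrative parameters, not values taken from any framework mentioned here, and translating them into a formal privacy guarantee requires separate privacy accounting that is not shown.

```python
import math
import random


def clip_update(update, max_norm):
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in update]


def privatize_update(update, max_norm, noise_multiplier, rng):
    """Clip, then add Gaussian noise with std = noise_multiplier * max_norm."""
    clipped = clip_update(update, max_norm)
    sigma = noise_multiplier * max_norm
    return [v + rng.gauss(0.0, sigma) for v in clipped]


# Fixed seed only so the sketch is repeatable; Python's `random` module is
# not a cryptographically secure noise source and is used here for illustration.
rng = random.Random(0)
raw_update = [3.0, 4.0]  # L2 norm is 5.0, so it will be clipped to norm 1.0
noisy = privatize_update(raw_update, max_norm=1.0, noise_multiplier=1.1, rng=rng)
```

Clipping bounds how much any one site can move the model, which is what lets a fixed noise scale hide individual contributions. A larger `noise_multiplier` gives stronger protection at the cost of accuracy.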

Encryption Methods for Secure Computation

Encryption methods like homomorphic encryption and secure multi-party computation (SMPC) are essential for protecting sensitive data during federated learning. Homomorphic encryption allows computations to occur directly on encrypted data, while SMPC enables multiple parties to collaboratively compute functions without sharing their raw data [11][12]. These methods are increasingly being integrated into federated AI frameworks to secure both data-in-use and model parameters. For healthcare organizations, adopting SMPC is a critical step toward ensuring privacy in collaborative model training [2].
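As a rough illustration of the SMPC idea, the sketch below uses additive secret sharing, one of the simplest building blocks in this family. Three hypothetical hospitals compute the total of their private counts without any party seeing another's value. The modulus and the counts are assumptions chosen for the example, and a production protocol would also need secure channels and protection against misbehaving parties.

```python
import secrets

PRIME = 2**61 - 1  # modulus for the shares; an illustrative choice


def share(value, n_parties):
    """Split an integer into n random shares that sum to value mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def reconstruct(shares):
    """Recombine shares; any incomplete subset looks uniformly random."""
    return sum(shares) % PRIME


# Each hospital holds a private count and splits it into three shares
counts = [120, 75, 310]
all_shares = [share(c, 3) for c in counts]

# Party j receives the j-th share from every hospital and sums them locally
partial_sums = [sum(column) % PRIME for column in zip(*all_shares)]

# Only the combined partial sums reveal the total, never the individual counts
total = reconstruct(partial_sums)
```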

Secure Aggregation Protocols

Secure aggregation protocols are designed to ensure that only combined model parameters are shared with central coordinators, rather than individual contributions. This approach is particularly important for meeting HIPAA compliance standards [8]. In December 2025, the DynamicFL framework showcased how secure aggregation could achieve lossless knowledge transfer while protecting against gradient inversion attacks [10]. For healthcare IT teams, implementing secure aggregation involves creating systems where model updates are combined in isolated, trusted environments.
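One way to get this "only the sum is visible" property without a trusted environment is pairwise masking, sketched below in heavily simplified form. This is not the protocol used by the frameworks cited above. Each pair of sites shares a seed: one site adds the resulting mask and the other subtracts it, so the masks cancel in the server's sum. The seeds, the fixed-point scale, and the site updates are all hypothetical, and the sketch ignores dropouts and key agreement.

```python
import random

MODULUS = 2**32
SCALE = 10**4  # fixed-point scaling so float updates work under modular arithmetic


def encode(update):
    """Convert floats to fixed-point integers mod MODULUS."""
    return [round(v * SCALE) % MODULUS for v in update]


def pair_mask(seed, length):
    # random.Random is NOT a cryptographic generator; illustration only
    rng = random.Random(seed)
    return [rng.randrange(MODULUS) for _ in range(length)]


def masked_update(site, update, pair_seeds):
    """Add the mask for pairs listing this site first, subtract it when listed second."""
    masked = encode(update)
    for (a, b), seed in pair_seeds.items():
        if site not in (a, b):
            continue
        sign = 1 if site == a else -1
        mask = pair_mask(seed, len(masked))
        masked = [(m + sign * k) % MODULUS for m, k in zip(masked, mask)]
    return masked


def server_sum(masked_updates):
    """Sum masked vectors; pairwise masks cancel, leaving only the total."""
    totals = [sum(column) % MODULUS for column in zip(*masked_updates)]
    # Map from modular fixed-point back to signed floats
    return [(t - MODULUS if t >= MODULUS // 2 else t) / SCALE for t in totals]


updates = {0: [0.5, -1.25], 1: [0.25, 0.75], 2: [-0.5, 1.0]}
pair_seeds = {(0, 1): 11, (0, 2): 22, (1, 2): 33}  # hypothetical shared seeds
masked = [masked_update(site, u, pair_seeds) for site, u in updates.items()]
aggregate = server_sum(masked)  # equals the element-wise sum of the raw updates
```

The server only ever handles the masked vectors, which look random on their own. In a real deployment the pairwise seeds would come from a key-agreement step between sites.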

Governance and Risk Management Frameworks

After identifying key vulnerabilities, establishing a strong governance framework becomes essential. This framework helps healthcare organizations manage the unique risks associated with federated AI systems. Without clear protocols for identifying threats and ensuring compliance, organizations expose themselves to potential data breaches, model tampering, and regulatory fines.

Performing a Security Risk Analysis

Conducting a thorough security risk analysis is the first step in safeguarding federated AI systems. Start by defining the scope and objectives of your assessment. Pinpoint the specific federated AI systems under review and clarify what needs protection - whether it’s patient data, the integrity of machine learning models, or system uptime and availability [2][1].

Next, identify all relevant assets, including data repositories, machine learning models, infrastructure, and network connections. Assess potential threats based on three core principles: confidentiality, integrity, and availability. Pay close attention to how data and model updates move between decentralized devices and the central aggregator. This is particularly important when sensitive health information is part of the equation [1]. These risk assessments should feed directly into strategies for regulatory compliance and ongoing oversight.
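The asset inventory and threat assessment described above can be captured in a simple risk register. The sketch below is one hypothetical way to do it. The 1-to-5 scales, the scoring rule (likelihood times the worst of the three impact ratings), and the example assets are assumptions for illustration, not a prescribed methodology.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    confidentiality: int  # impact if disclosed, 1 (low) to 5 (high)
    integrity: int        # impact if tampered with, 1 to 5
    availability: int     # impact if unavailable, 1 to 5
    likelihood: int       # estimated chance of compromise, 1 (rare) to 5 (frequent)

    def risk_score(self):
        """Likelihood multiplied by the highest of the three impact ratings."""
        worst_impact = max(self.confidentiality, self.integrity, self.availability)
        return self.likelihood * worst_impact


assets = [
    Asset("local patient data store", 5, 4, 3, likelihood=2),
    Asset("model update channel to aggregator", 4, 5, 3, likelihood=3),
    Asset("global model at central aggregator", 3, 5, 4, likelihood=2),
]

# Highest-risk assets first, so review effort goes where it matters most
ranked = sorted(assets, key=lambda asset: asset.risk_score(), reverse=True)
```

With these example ratings, the update channel between sites and the aggregator ranks first, which matches the advice to look closely at how model updates move through the system.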

Meeting HIPAA and Regulatory Requirements

Once risks are mapped out, the focus shifts to meeting regulatory standards. Federated learning aligns well with HIPAA guidelines because it keeps patient data stored locally, which reduces the exposure of raw records. Only model parameters or updates are shared, rather than raw data [2][11][12][13]. However, compliance isn’t just about technology; it also demands effective governance structures. Healthcare organizations must adopt frameworks that address procedural, relational, and structural mechanisms to manage the risks tied to federated learning [1].

Implementing Human Oversight in AI Governance

Beyond risk assessments and compliance measures, human oversight plays a pivotal role in AI governance. Establish an AI Governance Board that includes representatives from IT, cybersecurity, data management, and privacy teams. This board should oversee AI operations and provide guidance on major decisions [14]. For systems with significant impact, integrate measures like human oversight, bias detection, and continuous outcome monitoring [14]. The ultimate goal is to empower clinical and IT teams to make well-informed decisions using AI systems [14].


Using Censinet RiskOps for Federated AI Management

Censinet RiskOps™ is designed to simplify the complexities of managing federated AI within healthcare organizations. Built on solid risk governance principles and powered by Censinet AI on AWS AI infrastructure, this tool offers a clear view of risks and supports AI governance. It helps governance committees establish and enforce policies, evaluate third-party and internal risks, and implement AI solutions in line with the NIST AI Risk Management Framework (RMF) [15]. By integrating with existing privacy and risk management protocols, Censinet RiskOps safeguards distributed AI systems effectively.

Censinet AI™ for Faster Risk Assessments

Censinet AI™ streamlines the entire process of third-party risk assessments. It summarizes evidence, documents product integration details, identifies fourth-party risk exposures, and generates detailed risk reports. The platform uses a mix of human-guided and automated processes to handle critical tasks like evidence validation, policy creation, and risk mitigation. Risk teams retain control through customizable rules and review mechanisms. This combination allows healthcare organizations to manage risks more efficiently and address complex third-party challenges with speed and accuracy [15].

Real-Time Dashboards for AI Risk Oversight

Censinet RiskOps serves as a centralized platform featuring a user-friendly AI risk dashboard. This dashboard consolidates real-time data on policies, risks, and tasks, assigning key findings and responsibilities to stakeholders such as AI governance committee members. By facilitating prompt action from Governance, Risk, and Compliance (GRC) teams, the platform ensures continuous oversight and accountability. These features further enhance the risk management strategies discussed earlier.

"Our collaboration with AWS enables us to deliver Censinet AI to streamline risk management while ensuring responsible, secure AI deployment and use. With Censinet RiskOps, we're enabling healthcare leaders to manage cyber risks at scale to ensure safe, uninterrupted care."

- Ed Gaudet, CEO and founder of Censinet [15]

Pricing Plans for Healthcare Organizations

Censinet offers three flexible pricing models to accommodate different federated AI deployment needs. The Platform plan provides full access to the Censinet RiskOps™ software, ideal for organizations managing risks in-house. The Hybrid Mix plan combines platform access with customized managed services, while the Managed Services option offers fully outsourced cyber risk management, including assessments and reporting. Each plan is tailored to meet the specific requirements of the organization.

Conclusion: Managing Federated AI Risks in Healthcare

Navigating the risks of federated AI in healthcare demands a careful balance between progress and security. While federated learning offers the advantage of keeping sensitive data decentralized across institutions [2], it also brings unique challenges that call for both advanced privacy measures and strong governance.

At the heart of secure federated AI lies a commitment to privacy. Techniques like differential privacy, encryption, and secure aggregation protocols are crucial for safeguarding model updates and ensuring patient information remains protected. Beyond technical solutions, healthcare organizations must establish governance practices that address ethical concerns, mitigate biases, and regulate the secondary use of health data. This involves crafting policies that outline acceptable AI applications, setting up oversight committees, and ensuring critical decisions undergo human review.

Strong data governance is equally vital. Organizations should develop clear procedures for model training, validation, and deployment, alongside well-defined roles for IT teams, clinical staff, and compliance officers. Regular risk assessments and adherence to HIPAA regulations further reinforce patient data security.

To tackle these challenges effectively, leveraging advanced tools can make a difference. Platforms like Censinet RiskOps simplify risk management by offering centralized dashboards and faster risk evaluations, tailored to meet healthcare-specific needs.

FAQs

What are the main privacy risks of using federated AI in healthcare?

Federated AI in healthcare introduces several privacy concerns that need careful attention. One major issue is data breaches, which can happen through model inversion or poisoning attacks. In these scenarios, attackers either manipulate the model or extract sensitive patient information. Another challenge is the re-identification of anonymized data - even data stripped of personal identifiers can sometimes be traced back to individuals if not adequately protected. On top of that, unauthorized access poses a threat, especially if there are weaknesses in the security of model-sharing processes or communication protocols.

Addressing these risks requires strong measures. Organizations should prioritize robust encryption, ensure secure communication channels, and enforce strict access controls to keep healthcare data safe.

How does differential privacy improve the security of federated AI in healthcare?

Differential privacy strengthens the security of federated AI systems by adding carefully calibrated noise to model updates. This noise masks individual data points, making it far harder to link sensitive patient information back to a specific person.

By protecting personal data throughout the federated AI training process, differential privacy not only lowers the risk of data breaches but also helps ensure compliance with stringent healthcare regulations like HIPAA. This approach plays a key role in preserving both the security and trust required in distributed healthcare systems.

How does Censinet RiskOps help manage risks in federated AI systems?

Censinet RiskOps offers healthcare organizations a powerful way to manage risks in federated AI systems. It provides a platform designed to handle governance, security, and compliance, ensuring sensitive data stays secure while allowing machine learning to operate across distributed systems.

The platform simplifies critical risk management tasks, including setting up policies, ongoing vulnerability monitoring, and managing third-party risks. By making these processes more efficient, Censinet RiskOps helps healthcare providers protect data integrity and reduce cybersecurity threats commonly associated with federated AI systems.