HIPAA Compliance in the Age of AI: Continuous Monitoring vs. Annual Audits

Post Summary

AI is reshaping healthcare, but HIPAA compliance is struggling to keep up. Because AI systems change constantly, traditional annual audits often miss critical risks. Continuous monitoring offers near-real-time oversight that helps organizations meet HIPAA's stringent requirements and reduces the vulnerabilities that come with AI's dynamic nature.

Here’s what you need to know:

- Annual audits provide a periodic review of compliance but often lag behind AI's rapid changes.

- Continuous monitoring provides 24/7 oversight and flags risks as they occur. Organizations using AI-enhanced identity management report compliance management costs 27% lower on average.

- AI systems handling Protected Health Information (PHI) face challenges like re-identification risks, algorithmic bias, and massive audit logs that manual reviews can’t handle.

- Combining both methods creates a balanced approach: real-time risk management paired with periodic validation.

Tools like Censinet RiskOps™ simplify this process by automating evidence collection, tracking AI-related risks, and integrating real-time monitoring with audit preparation.

Key takeaway: To keep pace with AI's growth in healthcare, adopt a dual strategy of continuous monitoring and annual audits to protect patient data, reduce breaches, and meet evolving regulations.

HIPAA Requirements for AI-Powered Systems

AI systems handling Protected Health Information (PHI) must comply with HIPAA's Privacy, Security, and Breach Notification Rules. However, the dynamic nature of AI operations introduces unique challenges that complicate compliance efforts.

HIPAA Rules That Apply to AI

The HIPAA Privacy Rule governs how AI systems access, use, and disclose PHI. AI training often falls outside Treatment, Payment, and Healthcare Operations (TPO), so it typically requires explicit patient authorization unless the data has been properly de-identified or another exception applies. HIPAA's minimum necessary standard limits each use or disclosure to the least PHI needed for the purpose. That standard can conflict with AI's need for large and varied datasets [9].

The Security Rule mandates robust safeguards, including encryption, strict access controls (like multi-factor authentication and role-based permissions), and detailed audit trails. In January 2025, HHS published proposed updates to the Security Rule that would push healthcare organizations toward more continuous risk assessment and faster breach detection [3][4]. The proposal would also remove the distinction between "required" and "addressable" safeguards and shift the focus to "proven compliance." Organizations would need to provide verifiable evidence of their cybersecurity measures rather than rely on self-assessments.

The Breach Notification Rule requires covered entities to notify affected individuals of a breach of unsecured PHI without unreasonable delay, and no later than 60 calendar days after discovering it. Together, these rules set the baseline for understanding the added risks that AI systems bring to HIPAA compliance.
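The 60-day window runs from discovery, so deadline tracking is simple date arithmetic. The minimal sketch below assumes the discovery date is already established. The function names are hypothetical, and the computed date is a ceiling, not a target, because notice is also due "without unreasonable delay."

```python
from datetime import date, timedelta

# Outer limit for notifying affected individuals: 60 calendar days after the
# breach is discovered. Earlier notice may be required ("without unreasonable
# delay"), so treat the result as a ceiling.
MAX_NOTIFICATION_DAYS = 60


def notification_deadline(discovered_on: date) -> date:
    """Latest permissible date to notify affected individuals."""
    return discovered_on + timedelta(days=MAX_NOTIFICATION_DAYS)


def days_remaining(discovered_on: date, today: date) -> int:
    """Days left before the outer limit (negative once it has passed)."""
    return (notification_deadline(discovered_on) - today).days


discovered = date(2025, 3, 3)  # hypothetical discovery date
print(notification_deadline(discovered))
print(days_remaining(discovered, date(2025, 4, 1)))
```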

AI-Specific Compliance Risks

AI systems introduce new risks beyond traditional HIPAA concerns. One major issue is data re-identification, where advanced algorithms can re-identify individuals from datasets that were initially de-identified. Another concern is algorithmic bias, which could lead to misdiagnoses or unequal treatment for certain groups [10][12]. Compounding these risks is the lack of transparency in some AI systems, often referred to as the "double black box" problem. This makes it harder to verify whether PHI is being processed in compliance with privacy standards [1].
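Before a "de-identified" dataset goes into an AI pipeline, you can gauge its re-identification risk with k-anonymity. This is a standard privacy metric, not a method from the source. It is the size of the smallest group of records that share the same quasi-identifiers, such as partial ZIP code, birth year, and sex. A k of 1 means at least one person is unique on those fields and could be linked to an outside dataset. The minimal sketch below uses hypothetical field names.

```python
from collections import Counter

# Hypothetical quasi-identifiers: not direct identifiers on their own, but
# able to single out a person when combined.
QUASI_IDENTIFIERS = ("zip3", "birth_year", "sex")


def _key(record, quasi_ids):
    return tuple(record[q] for q in quasi_ids)


def equivalence_class_sizes(records, quasi_ids=QUASI_IDENTIFIERS):
    """Count how many records share each combination of quasi-identifier values."""
    return Counter(_key(r, quasi_ids) for r in records)


def k_anonymity(records, quasi_ids=QUASI_IDENTIFIERS) -> int:
    """Smallest equivalence-class size; k == 1 means some record is unique."""
    sizes = equivalence_class_sizes(records, quasi_ids)
    return min(sizes.values()) if sizes else 0


def unique_records(records, quasi_ids=QUASI_IDENTIFIERS):
    """Records whose quasi-identifier combination appears only once."""
    sizes = equivalence_class_sizes(records, quasi_ids)
    return [r for r in records if sizes[_key(r, quasi_ids)] == 1]


dataset = [
    {"zip3": "021", "birth_year": 1980, "sex": "F", "dx": "E11.9"},
    {"zip3": "021", "birth_year": 1980, "sex": "F", "dx": "I10"},
    {"zip3": "945", "birth_year": 1957, "sex": "M", "dx": "C61"},
]
print(k_anonymity(dataset))
print(unique_records(dataset))
```

Generalizing or suppressing quasi-identifiers (for example, coarser birth years) raises k at the cost of detail, the same trade-off the minimum necessary standard asks organizations to weigh.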

Third-party vendors also present significant vulnerabilities. Many AI developers and vendors, particularly those offering general-purpose tools or direct-to-consumer applications, do not qualify as HIPAA Covered Entities or Business Associates. PHI processed by such vendors may therefore fall outside HIPAA's protections. A signed Business Associate Agreement does not shift a healthcare organization's own obligations either: the organization remains accountable for how it selects, contracts with, and oversees its vendors. Data breaches are an ongoing problem, with recent incidents exposing over 275 million healthcare records and costing organizations an average of $10.22 million per breach [13]. Adding to the complexity, 71% of healthcare workers reportedly use personal AI tools at work, often without adequate security measures [13].
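A basic control against this vendor gap is to cross-check every AI tool in use against the contracts on file. The minimal sketch below assumes the compliance team maintains a hypothetical `AIVendor` record; the field names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class AIVendor:
    name: str
    processes_phi: bool  # does the tool receive or generate PHI?
    baa_signed: bool     # is a Business Associate Agreement on file?
    approved: bool       # has it passed the organization's AI/IT review?


def vendor_gaps(vendors):
    """Return (vendor, issue) pairs that need compliance follow-up."""
    issues = []
    for v in vendors:
        if not v.processes_phi:
            continue  # tools that never touch PHI are out of scope here
        if not v.baa_signed:
            issues.append((v.name, "PHI processed without a BAA"))
        if not v.approved:
            issues.append((v.name, "unapproved tool handling PHI"))
    return issues


vendors = [
    AIVendor("ambient-scribe", processes_phi=True, baa_signed=True, approved=True),
    AIVendor("consumer-chatbot", processes_phi=True, baa_signed=False, approved=False),
    AIVendor("grammar-checker", processes_phi=False, baa_signed=False, approved=True),
]
for name, issue in vendor_gaps(vendors):
    print(f"{name}: {issue}")
```

The "unapproved" check matters most for personal AI tools that staff adopt on their own, since those rarely appear in any contract system.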

AI Governance and Oversight Requirements

To address these risks, effective governance is critical. The NIST AI Risk Management Framework (AI RMF 1.0), released in January 2023, provides a structured approach to evaluating AI systems. Its characteristics of trustworthy AI include validity and reliability, safety, security and resilience, accountability and transparency, explainability and interpretability, privacy, and fairness [9][10]. These characteristics help tackle challenges like algorithmic transparency and bias, complementing HIPAA's requirements.

Healthcare organizations should establish AI governance structures, such as risk councils or oversight committees. These groups should include representatives from compliance, IT security, clinical operations, and legal departments to monitor AI deployments and conduct regular risk assessments. Keeping detailed records of AI decision-making processes, data handling practices, and security protocols is equally important [4]. With increasing regulatory scrutiny, proactive governance is no longer optional - it’s a necessity for ensuring AI compliance.

Continuous Monitoring for HIPAA Compliance

What Continuous Monitoring Means

Continuous monitoring transforms traditional, periodic compliance checks into real-time, automated oversight for systems managing Protected Health Information (PHI). Instead of waiting weeks or months between assessments, this approach keeps an eye on system activity 24/7, flagging any violations as they happen. This is especially important in AI-driven environments, where algorithms can generate thousands of automated data access events every minute, creating massive audit logs that can easily overwhelm manual review processes [6].

At its core, the goal is simple: identify and address threats as they arise. As one expert explains, "AI systems continuously verify that access actions comply with HIPAA requirements" [6]. This constant verification provides a dynamic, up-to-date view of compliance, replacing the outdated, static snapshots offered by annual audits.
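In practice, "verifying that access actions comply" can mean evaluating each access event against a policy as it arrives. The minimal sketch below checks two things: whether the actor's role is permitted for the stated purpose, and whether the requested fields exceed the minimum necessary for that purpose. The roles, purposes, and field lists are hypothetical placeholders, not a recommended policy.

```python
from dataclasses import dataclass

# Hypothetical policy: purposes each role may access PHI for...
ROLE_PURPOSES = {
    "clinician": {"treatment"},
    "billing": {"payment"},
    "ai_scribe": {"treatment"},
}
# ...and the PHI fields each purpose needs (a simple "minimum necessary" rule).
PURPOSE_FIELDS = {
    "treatment": {"name", "dob", "diagnoses", "medications"},
    "payment": {"name", "dob", "insurance_id", "procedure_codes"},
}


@dataclass
class AccessEvent:
    actor: str
    role: str
    purpose: str
    fields: set


def check_access(event: AccessEvent) -> list:
    """Return policy violations for one access event (empty list if compliant)."""
    violations = []
    if event.purpose not in ROLE_PURPOSES.get(event.role, set()):
        violations.append(f"role '{event.role}' not permitted for purpose '{event.purpose}'")
    excess = event.fields - PURPOSE_FIELDS.get(event.purpose, set())
    if excess:
        violations.append(f"fields beyond minimum necessary: {sorted(excess)}")
    return violations


event = AccessEvent("svc-scribe-01", "ai_scribe", "treatment", {"name", "diagnoses", "insurance_id"})
print(check_access(event))
```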

To achieve this, continuous monitoring systems focus on several critical functions that are essential for ensuring AI compliance.

Core Functions of Continuous Monitoring for AI

Effective continuous monitoring systems juggle several vital tasks at once. One of the key functions is real-time PHI access tracking, which logs every interaction with sensitive data. This includes recording who accessed the information, when they did it, and why. These logs also document AI decision-making processes, creating a clear audit trail.
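The minimal sketch below shows such an access log entry. It records who, what, when, and why, plus the AI model version when a model acted on PHI. It also chains each entry to the previous one with a SHA-256 hash so tampering is detectable. Hash chaining is a common tamper-evidence technique added here for illustration; the text does not prescribe it, and the field names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only PHI access log. Each entry stores the hash of the previous
    entry, so editing or deleting an earlier entry breaks the chain.
    (Truncating the newest entries is not detectable by the chain alone.)"""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body):
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def record(self, actor, action, resource, purpose, model_version=None):
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),  # when
            "actor": actor,                  # who: user or AI service account
            "action": action,                # what: read, write, export, infer
            "resource": resource,            # which PHI record
            "purpose": purpose,              # why
            "model_version": model_version,  # which model acted, if any
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute the chain; False if an entry was altered or an earlier one removed."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.record("dr.lee", "read", "patient/123", "treatment")
log.record("svc-readmit", "infer", "patient/123", "treatment", model_version="readmit-v4")
print(log.verify())
log.entries[0]["purpose"] = "marketing"  # simulate tampering with an earlier entry
print(log.verify())
```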

Another essential feature is anomaly detection, which leverages machine learning to identify unusual behaviors. This might include odd login patterns, suspicious file transfers, or unexpected geographic activity - any of which could point to potential HIPAA violations [6][14][15].
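Production systems typically use machine-learning models for this. The sketch below swaps in a much simpler statistical baseline to show the underlying idea. It compares each user's PHI access count today against that user's own history and flags counts three or more standard deviations from the mean. The threshold and data are hypothetical.

```python
from statistics import mean, stdev


def zscore_anomalies(history, today, threshold=3.0):
    """Flag users whose PHI-access count today is far from their own history.

    history: user -> list of past daily access counts
    today:   user -> today's access count
    Returns user -> z-score for users at or beyond the threshold.
    """
    flagged = {}
    for user, count in today.items():
        past = history.get(user, [])
        if len(past) < 2:
            continue  # too little history to form a baseline
        mu = mean(past)
        sigma = stdev(past) or 1.0  # flat history: fall back to a unit spread
        z = (count - mu) / sigma
        if abs(z) >= threshold:
            flagged[user] = round(z, 1)
    return flagged


history = {
    "nurse_a": [40, 42, 38, 41, 39],
    "svc-model": [1000, 1020, 980, 1010, 990],
}
print(zscore_anomalies(history, {"nurse_a": 41, "svc-model": 5000}))
```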

Automated control assessments also play a crucial role. These tools track regulatory updates, generate compliance reports, and enforce standardized rules while minimizing human error, and they raise real-time alerts when issues arise [14][16]. The payoff can be substantial: organizations that use AI-enhanced identity management systems have seen security incidents drop by 33% and compliance management costs fall by 27% on average [6].
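At its simplest, automated control assessment means evaluating a catalog of rules against configuration data and reporting the failures. The minimal sketch below uses a hypothetical four-control catalog: encryption at rest and in transit, MFA, and audit logging. A real program would pull configurations from live systems and map each rule to a specific Security Rule standard.

```python
# Hypothetical control catalog: control name -> check over a system's config.
CONTROLS = {
    "encryption_at_rest": lambda cfg: cfg.get("disk_encryption") == "enabled",
    "encryption_in_transit": lambda cfg: cfg.get("min_tls", (0, 0)) >= (1, 2),
    "mfa_required": lambda cfg: cfg.get("mfa") is True,
    "audit_logging": lambda cfg: cfg.get("audit_log_retention_days", 0) > 0,
}


def assess(systems):
    """Run every control against every system; return (system, control) failures."""
    return [
        (name, control)
        for name, cfg in systems.items()
        for control, check in CONTROLS.items()
        if not check(cfg)
    ]


def report(systems):
    """Summary line plus one alert line per failed control."""
    failures = assess(systems)
    total = len(systems) * len(CONTROLS)
    lines = [f"{total - len(failures)}/{total} control checks passed"]
    lines += [f"ALERT {system}: {control} failed" for system, control in failures]
    return "\n".join(lines)


systems = {
    "ehr-summarizer": {"disk_encryption": "enabled", "min_tls": (1, 3), "mfa": True,
                       "audit_log_retention_days": 365},
    "triage-bot": {"disk_encryption": "enabled", "min_tls": (1, 0), "mfa": False,
                   "audit_log_retention_days": 0},
}
print(report(systems))
```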

Lastly, self-learning AI systems help establish normal behavior patterns and flag deviations that could signal compromised accounts or upcoming compliance issues. In fact, AI-powered anomaly detection can identify incidents an average of 37 days earlier than manual methods [6].
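Here, "self-learning" means the baseline updates with every new observation, so "normal" tracks gradual change instead of staying fixed at setup time. The minimal sketch below uses an exponentially weighted moving mean and variance, a standard streaming technique chosen for illustration. The parameter values are hypothetical.

```python
class EwmaBaseline:
    """Self-updating baseline for one metric, e.g. hourly PHI reads by an AI
    service account. Mean and variance are exponentially weighted, so recent
    behavior counts more than old behavior."""

    def __init__(self, alpha=0.1, threshold=4.0, warmup=10):
        self.alpha = alpha          # weight given to the newest observation
        self.threshold = threshold  # deviation, in standard deviations, that flags
        self.warmup = warmup        # observations to see before flagging anything
        self.mean = None
        self.var = 0.0
        self.n = 0

    def update(self, x: float) -> bool:
        """Score x against the current baseline, then learn from it.
        Returns True if x looks anomalous."""
        self.n += 1
        if self.mean is None:
            self.mean = x
            return False
        std = self.var ** 0.5
        anomalous = (self.n > self.warmup and std > 0
                     and abs(x - self.mean) > self.threshold * std)
        # Incremental exponentially weighted mean and variance updates.
        diff = x - self.mean
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)
        return anomalous


baseline = EwmaBaseline()
for i in range(50):
    baseline.update(100 if i % 2 == 0 else 102)  # steady, slightly noisy load
print(baseline.update(500))  # sudden spike
```

This version learns from every value, including flagged ones, so a sustained shift eventually becomes the new normal. Holding flagged values out of the update resists a slow-moving attacker poisoning the baseline, but it keeps re-alerting on legitimate permanent changes.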

How Censinet Enables Continuous Monitoring

Censinet RiskOps™ brings these core functions together, simplifying continuous compliance monitoring for AI-driven environments.

The platform acts as a centralized hub, pulling real-time data into an easy-to-use dashboard that provides a complete view of an organization’s compliance status. It automates risk assessments across third-party vendors, enterprise systems, and AI applications, helping ensure that PHI is handled according to HIPAA standards.

Censinet AI™ further streamlines the process by automatically summarizing vendor evidence and documentation, capturing key integration details, and identifying risks from fourth-party relationships. Its "human-in-the-loop" approach ensures that while automation scales operations, critical oversight is maintained. Risk teams can configure rules and review processes to make thoughtful, informed decisions.

To keep things running smoothly, the platform routes critical findings to the right stakeholders, ensuring timely reviews and actions. This coordination across Governance, Risk, and Compliance teams ensures that issues are handled efficiently and effectively. With Censinet RiskOps™, healthcare organizations can better manage risks while meeting HIPAA’s stringent requirements.
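The routing step can be illustrated generically. The sketch below is not Censinet's implementation or API. It assigns each finding to an owning team by category and sets a review deadline by severity. The categories, team names, and hour targets are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical routing rules, not a real product configuration.
OWNERS = {
    "vendor": "third-party-risk",
    "access": "security-operations",
    "ai_model": "ai-governance-committee",
}
REVIEW_WITHIN_HOURS = {"critical": 24, "high": 72, "medium": 336, "low": 720}


@dataclass
class Finding:
    finding_id: str
    category: str
    severity: str


def route(finding):
    """Pick an owner by category (compliance is the fallback) and a review
    deadline by severity; critical findings also go to leadership."""
    return {
        "finding": finding.finding_id,
        "owner": OWNERS.get(finding.category, "compliance"),
        "review_within_hours": REVIEW_WITHIN_HOURS[finding.severity],
        "notify_leadership": finding.severity == "critical",
    }


print(route(Finding("F-102", "ai_model", "critical")))
print(route(Finding("F-103", "policy", "medium")))
```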

Annual Audits for HIPAA Compliance

How Annual HIPAA Audits Work

Annual HIPAA audits play a crucial role in ensuring healthcare organizations comply with the administrative, physical, and technical safeguards for protecting electronic protected health information (ePHI). These reviews are designed to confirm that organizations meet HIPAA standards. The Office for Civil Rights (OCR) HIPAA Audit Program focuses on assessing compliance, identifying best practices, uncovering risks and vulnerabilities, and offering guidance to strengthen cybersecurity measures [17].

When it comes to AI-driven systems, these audits go beyond traditional IT evaluations. As IBM puts it, "An AI audit is a structured, evidence-based examination of how artificial intelligence (AI) systems are designed, trained and deployed" [18]. This means auditors must examine every stage of the AI lifecycle, from data collection and quality to model architecture, explainability, and decision-making processes. They also scrutinize Business Associate Agreements (BAAs) with AI vendors to ensure that third-party tools handling PHI align with HIPAA requirements [19]. This traditional audit framework provides a foundation for evaluating AI systems, though it has its limitations in these complex environments.

Benefits of Annual Audits for AI Compliance

External audits offer healthcare organizations several advantages when it comes to AI compliance. These audits validate existing safeguards, identify risks that may have been overlooked, and deliver prioritized recommendations to address vulnerabilities tied to AI systems. They establish a baseline for compliance and generate actionable reports backed by evidence of cybersecurity measures - an increasingly critical need for digital health tools managing PHI [4].

Additionally, these audits provide organizations with a clear roadmap for addressing security gaps in AI systems, improving incident response strategies, and demonstrating a strong commitment to safeguarding patient data [20]. They can identify critical issues such as biases in AI models, incomplete inventories of AI assets, and missing audit trails for AI-driven decision-making processes [21].

Where Annual Audits Fall Short for AI

Despite their benefits, annual audits have notable limitations, especially when applied to AI systems. One major drawback is their reliance on historical data, which makes it difficult to account for real-time changes in AI risk profiles. As a result, audits often fail to keep pace with the rapid evolution of AI deployments.
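A back-of-the-envelope model makes the lag concrete. Assume an issue, such as a misconfigured AI integration, appears at a uniformly random moment between reviews, and that each review catches it. The issue then goes unnoticed for half the review interval on average and for the full interval in the worst case. The model ignores detection accuracy and is only meant to compare review cadences.

```python
def detection_delay_days(review_interval_days: float) -> tuple:
    """Return (average, worst-case) days an issue stays undetected under
    periodic review, assuming it appears at a uniformly random time and
    every review finds it."""
    return review_interval_days / 2, review_interval_days


cadences = {"annual audit": 365, "quarterly review": 91, "daily automated check": 1}
for label, interval in cadences.items():
    avg, worst = detection_delay_days(interval)
    print(f"{label}: about {avg:g} days on average, up to {worst:g}")
```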

Consider this: 80% of healthcare organizations reported at least one data breach in the past year [5]. Meanwhile, 62% of these organizations identified "regulatory compliance" as their most significant challenge when adopting AI [5]. The speed at which AI is being integrated into healthcare far outpaces the regulatory updates needed to address it, creating a "compliance gap" that annual audits alone cannot close [5]. These reviews also lack the ability to provide ongoing verification of security measures or adapt to constantly changing regulations [4].


Continuous Monitoring vs. Annual Audits: Direct Comparison

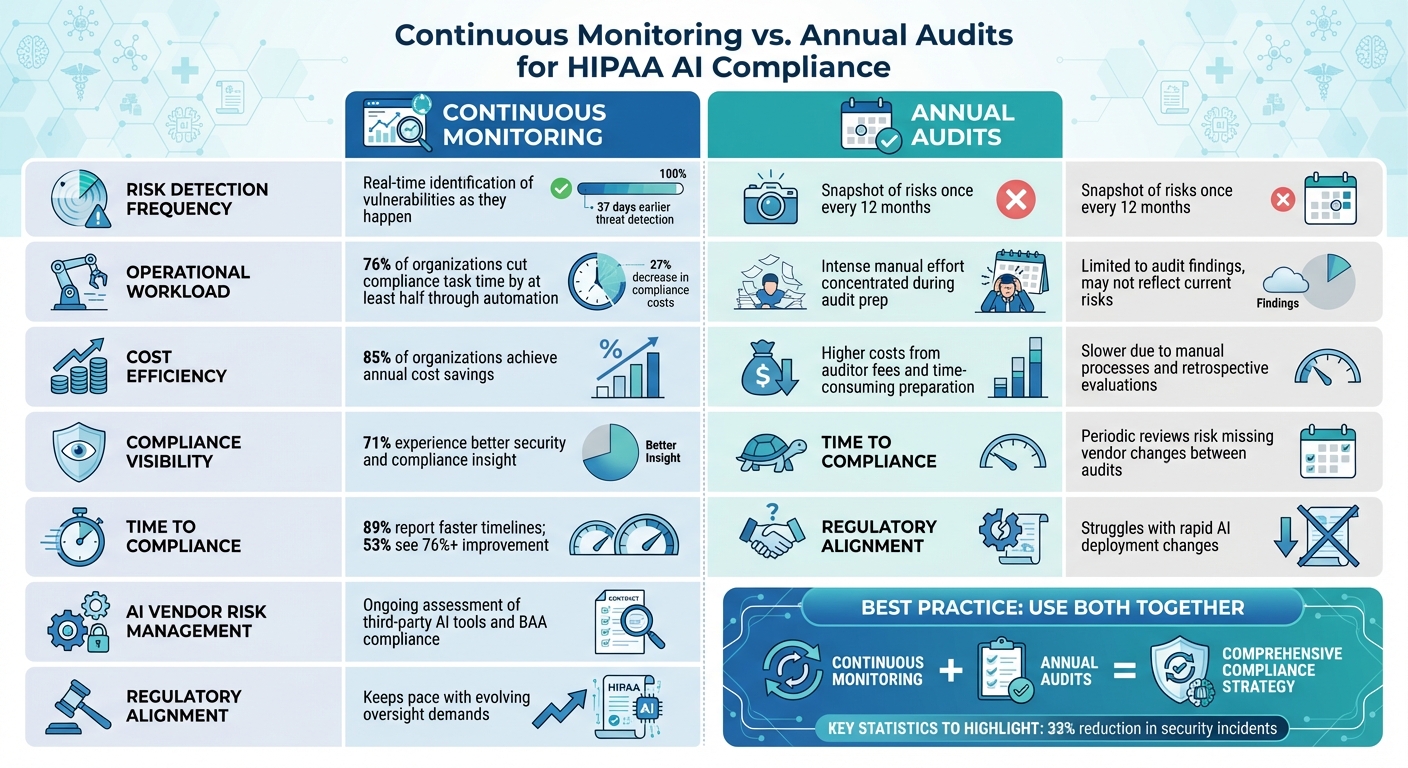

Continuous Monitoring vs Annual Audits for HIPAA AI Compliance

Comparison Table: Continuous Monitoring vs. Annual Audits

When assessing HIPAA compliance strategies for AI, continuous monitoring and annual audits each bring their own advantages and limitations. Here's how they stack up:

| Dimension | Continuous Monitoring | Annual Audits |
|---|---|---|
| Risk Detection Frequency | Real-time identification of vulnerabilities and misconfigurations as they happen | A snapshot of risks, typically conducted once every 12 months |
| Operational Workload | Automated evidence collection significantly reduces manual effort - 76% of organizations report cutting compliance task time by at least half [22] | Requires intense manual effort concentrated during audit preparation |
| Cost Efficiency | Automation delivers annual cost savings for 85% of organizations [22] | Higher costs due to auditor fees and time-consuming preparation |
| Compliance Visibility | 71% of organizations experience better insight into their security and compliance status [22] | Limited to the findings of the audit, which may not reflect current risks |
| AI Vendor Risk Management | Ongoing assessment of third-party AI tools and continuous monitoring of Business Associate Agreement (BAA) compliance | Periodic reviews risk overlooking changes in vendor practices between audits |
| Regulatory Alignment | Keeps pace with evolving regulatory demands for continuous oversight [2] | Struggles to adapt to the rapid changes in AI deployments |
| Time to Compliance | 89% of organizations report faster compliance timelines, with 53% achieving improvements of 76% or more [22] | Slower due to reliance on manual processes and retrospective evaluations |

This comparison highlights the strengths of each method, paving the way for a hybrid approach that capitalizes on their respective benefits.

Using Both Methods Together

The insights from the table suggest that blending continuous monitoring with annual audits creates a more effective compliance strategy. Regulatory bodies are increasingly favoring continuous monitoring as a cornerstone of modern compliance programs, acknowledging that annual audits alone cannot keep up with the fast-paced changes in AI systems [2]. Combining these methods offers a balanced framework: continuous monitoring ensures real-time risk management, while annual audits provide independent validation of the program's effectiveness and overall compliance [8][7].

This dual strategy fills a critical gap. Continuous monitoring addresses the day-to-day detection and resolution of risks, which is especially crucial for rapidly evolving AI systems. Meanwhile, annual audits confirm that the monitoring processes are functioning as intended and that the organization’s compliance efforts remain solid. Together, these methods enable the kind of agile compliance needed to meet HIPAA requirements in the AI age.

How Censinet Connects Both Approaches

Censinet RiskOps™ seamlessly integrates continuous monitoring with annual audits by consolidating all compliance data into a single platform. This setup supports both real-time risk management and periodic audit validation. Throughout the year, the platform automatically gathers and organizes evidence, eliminating the last-minute scramble that often precedes audits. When audit time arrives, organizations can generate thorough reports backed by up-to-date data, avoiding the need to piece together historical information.

The platform also provides ongoing visibility into AI-related risks, policy compliance, and control measures. Auditors gain access to current evidence of HIPAA safeguards, AI governance practices, and remediation efforts without disrupting the organization’s daily operations. By bridging continuous monitoring and periodic audits, Censinet aims to shrink assessment time from weeks to seconds while maintaining rigorous oversight.

How to Implement Continuous Monitoring for AI Compliance

Creating an AI and Data Asset Inventory

The first step in continuous monitoring is knowing exactly what you're working with. Healthcare organizations need to identify every AI system that interacts with Protected Health Information (PHI). This includes understanding what types of data these systems access, how they process that data, and where they integrate into your broader environment. For example, does the AI system handle PHI directly, rely on de-identified data, or use training datasets containing sensitive information? All of this should be documented.

Additionally, it’s essential to map out data flows and integration points across your entire infrastructure. This involves tracking how AI systems interact with electronic health records, medical devices, billing platforms, and third-party applications. Missing even one system in this inventory can leave critical gaps. A detailed inventory like this serves as the foundation for aligning your controls with HIPAA requirements.
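Data-flow mapping also lets you test the inventory for gaps. The minimal sketch below assumes flows can be expressed as source-to-destination edges; all system names are hypothetical. A breadth-first search from the systems that hold PHI finds every system PHI can reach, and anything reachable but not inventoried is a gap.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    kind: str        # "ai" or "data"
    phi_access: str  # e.g. "direct", "de-identified", "training-only"
    owner: str


# Hypothetical inventory and data-flow edges (source -> destinations).
inventory = {
    "sepsis-model": Asset("sepsis-model", "ai", "direct", "clinical-informatics"),
    "claims-coder": Asset("claims-coder", "ai", "direct", "revenue-cycle"),
}
flows = {
    "ehr": ["sepsis-model", "analytics-warehouse"],
    "analytics-warehouse": ["readmission-model"],
    "billing": ["claims-coder"],
}
phi_sources = {"ehr", "billing"}


def systems_receiving_phi(flows, sources):
    """Breadth-first search over data flows starting from the PHI source systems."""
    seen, queue = set(sources), deque(sources)
    while queue:
        node = queue.popleft()
        for nxt in flows.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen - set(sources)


def inventory_gaps(flows, sources, inventory):
    """Systems that PHI can reach but that are missing from the inventory."""
    return sorted(systems_receiving_phi(flows, sources) - set(inventory))


print(inventory_gaps(flows, phi_sources, inventory))
```

The search is transitive on purpose. A model fed from an analytics warehouse still receives PHI, even though it never connects to the EHR directly.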

Aligning HIPAA Safeguards with AI Controls

Once your inventory is complete, the next step is to align HIPAA safeguards with AI-specific controls. HIPAA outlines three categories of safeguards - Administrative, Physical, and Technical - that need to be adapted to address the unique challenges AI brings. These challenges include risks like log leakage, unclear data retention policies, weak data provenance, and opaque decision-making processes [24][23][25]. Continuous monitoring must tackle these vulnerabilities while still upholding standard HIPAA protections.
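One way to make this alignment checkable is to keep it as data. Map each AI-specific risk to a control, file each control under a Security Rule safeguard category (45 CFR 164.308 administrative, 164.310 physical, 164.312 technical), and report which risks lack an implemented control. The control names and category assignments below are illustrative, not an official crosswalk.

```python
# Illustrative controls grouped by HIPAA Security Rule safeguard category.
SAFEGUARD_CONTROLS = {
    "administrative (45 CFR 164.308)": {"ai_risk_analysis", "retention_policy"},
    "physical (45 CFR 164.310)": {"ai_host_inventory"},
    "technical (45 CFR 164.312)": {"log_redaction", "ai_audit_trail", "data_provenance"},
}

# Illustrative mapping from AI-specific risks to the control that addresses them.
RISK_TO_CONTROL = {
    "log leakage": "log_redaction",
    "unclear data retention": "retention_policy",
    "weak data provenance": "data_provenance",
    "opaque decision-making": "ai_audit_trail",
}

ALL_CONTROLS = set().union(*SAFEGUARD_CONTROLS.values())


def category_of(control):
    """Safeguard category a control is filed under."""
    return next(cat for cat, controls in SAFEGUARD_CONTROLS.items() if control in controls)


def uncovered_risks(implemented):
    """(risk, missing control, safeguard category) for each unaddressed risk."""
    return [
        (risk, control, category_of(control))
        for risk, control in sorted(RISK_TO_CONTROL.items())
        if control not in implemented
    ]


for gap in uncovered_risks({"log_redaction", "ai_audit_trail", "ai_risk_analysis"}):
    print(gap)
```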

For instance, audit trails are critical for tracking all AI-related actions involving PHI [6][23][11]. AI systems equipped with anomaly detection can spot security threats significantly faster - up to 37 days earlier - than manual monitoring methods [6]. By establishing baseline behavior patterns for users and AI systems, monitoring tools can flag unusual activity, such as unexpected access patterns, large data retrievals, or geographic anomalies, which could signal compromised accounts or unauthorized access [6][23].
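Once a baseline exists, some of these signals reduce to simple rules. The minimal sketch below assumes per-user history has already been summarized into the countries each user normally connects from and their typical maximum records per retrieval. The 10x multiplier and all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Access:
    user: str
    country: str
    records: int


def flag_unusual(accesses, known_countries, typical_max_records, multiplier=10):
    """Flag accesses from a country the user has never used before, and
    retrievals larger than `multiplier` times the user's typical maximum."""
    alerts = []
    for a in accesses:
        if a.country not in known_countries.get(a.user, set()):
            alerts.append((a.user, f"new location: {a.country}"))
        typical = typical_max_records.get(a.user)
        if typical is not None and a.records > typical * multiplier:
            alerts.append((a.user, f"bulk retrieval: {a.records} records"))
    return alerts


known = {"dr_kim": {"US"}, "svc-summarizer": {"US"}}
typical = {"dr_kim": 40, "svc-summarizer": 500}
events = [
    Access("dr_kim", "US", 35),
    Access("svc-summarizer", "US", 12000),
    Access("dr_kim", "RO", 5),
]
for alert in flag_unusual(events, known, typical):
    print(alert)
```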

Using Censinet for AI Risk Monitoring

Once safeguards are aligned, automation becomes key to effective monitoring. Platforms like Censinet RiskOps™ streamline the process by automating evidence collection and offering a real-time AI risk dashboard. This dashboard consolidates compliance data, highlights vulnerabilities, and ensures that critical findings are routed to the appropriate stakeholders - such as members of an AI governance committee - for timely review and action.

Censinet also automatically logs AI system activities, tracks compliance with Business Associate Agreements for AI vendors, and generates audit-ready documentation. With tools like Censinet AI™, vendors can complete security questionnaires in seconds. The platform summarizes evidence, compiles documentation, and produces risk summary reports, cutting assessment times from weeks to seconds - all while maintaining thorough oversight. This combination of automation and centralized oversight ensures that organizations can stay ahead of potential risks without sacrificing compliance.

Conclusion: HIPAA Compliance in the AI Era

Key Takeaways

Staying HIPAA-compliant in the age of AI requires constant attention. With AI systems handling Protected Health Information (PHI) in real time, organizations need to pair continuous monitoring with periodic audits. Continuous monitoring ensures you can identify risks as they arise, offering quicker threat detection [6]. Meanwhile, annual audits validate the effectiveness of your monitoring systems and provide the detailed documentation regulators expect.

Data on automated oversight points the same way. Organizations using AI-powered identity management, for example, have reported 33% fewer security incidents and 27% lower compliance management costs [6][22]. Tools like Censinet RiskOps™ make it easier to adopt this strategy by automating evidence collection, maintaining real-time risk dashboards, and generating audit-ready documentation.

"AI's potential in healthcare is enormous, but without strong HIPAA compliance, it risks undermining patient trust." - Deven McGraw, Former Deputy Director of Health Information Privacy at the OCR [5]

This approach not only addresses current compliance needs but also positions organizations to handle future regulatory challenges.

Preparing for Future AI Regulations

The regulatory environment for AI in healthcare is changing fast, and waiting for new rules to take effect could leave your organization unprepared. To stay ahead, it's crucial to adopt flexible compliance frameworks now. This includes setting up strong governance, implementing continuous monitoring systems, and conducting risk assessments whenever there are major updates to AI models, data pipelines, or infrastructure [5][9].
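You can automate event-driven reassessment by snapshotting each AI system's key attributes at its last risk assessment and comparing them with the current state. The minimal sketch below assumes a hypothetical configuration record with `model_version`, `data_pipeline`, and `infrastructure` fields.

```python
# Attributes whose change should trigger a fresh risk assessment.
TRACKED = ("model_version", "data_pipeline", "infrastructure")


def changed_since_assessment(current, last_assessed):
    """Tracked fields that differ from the snapshot taken at the last
    assessment; every field counts as changed if no assessment exists."""
    if last_assessed is None:
        return list(TRACKED)
    return [f for f in TRACKED if current.get(f) != last_assessed.get(f)]


def reassessment_queue(systems, snapshots):
    """Map each system needing reassessment to the fields that changed."""
    queue = {}
    for name, current in systems.items():
        changed = changed_since_assessment(current, snapshots.get(name))
        if changed:
            queue[name] = changed
    return queue


systems = {
    "triage-model": {"model_version": "3.0", "data_pipeline": "etl-v7", "infrastructure": "cloud-vpc"},
    "coding-assistant": {"model_version": "1.4", "data_pipeline": "etl-v2", "infrastructure": "on-prem"},
    "new-chatbot": {"model_version": "0.9", "data_pipeline": "etl-v1", "infrastructure": "saas"},
}
snapshots = {
    "triage-model": {"model_version": "2.3", "data_pipeline": "etl-v7", "infrastructure": "on-prem"},
    "coding-assistant": {"model_version": "1.4", "data_pipeline": "etl-v2", "infrastructure": "on-prem"},
}
print(reassessment_queue(systems, snapshots))
```

A system with no snapshot at all, like the newly added chatbot, lands in the queue with every field marked as changed, so unassessed deployments cannot slip through.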

Compliance is no longer a one-time task - it’s becoming an ongoing responsibility [4]. Regulators now expect organizations to provide continuous, verifiable evidence of their cybersecurity measures. By combining automated, real-time monitoring with structured periodic reviews, you can create a compliance strategy that evolves alongside your AI systems. This not only safeguards patient trust but also ensures your organization can innovate responsibly and securely.

FAQs

How does continuous monitoring help ensure HIPAA compliance in AI systems?

Continuous monitoring plays a crucial role in maintaining HIPAA compliance within AI systems. It enables real-time identification of security threats, suspicious behavior, and possible privacy breaches. This approach helps healthcare organizations tackle issues promptly, preventing them from escalating and ensuring patient data stays secure.

Automating compliance oversight through continuous monitoring significantly reduces the chances of human error. It provides reliable tracking of system access, data accuracy, and activity logs. This ensures AI-powered processes remain aligned with HIPAA requirements, even as technology advances and new challenges arise.

What challenges does AI pose to maintaining HIPAA compliance?

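AI brings its own set of challenges to maintaining HIPAA compliance. These include re-identification of supposedly de-identified data, algorithmic bias, opaque "black box" processing of PHI, AI vendors that fall outside HIPAA's protections, and audit logs too large for manual review. Familiar threats also grow as AI systems connect to more data sources: data breaches, unauthorized access, and security gaps in APIs. AI can complicate encryption and make consistent, real-time monitoring harder to achieve. Without proper oversight, AI systems might even mishandle sensitive health information, leading to potential misuse.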

To tackle these issues, healthcare organizations need to adopt strong protective measures. This means using continuous monitoring tools and implementing advanced security protocols to ensure that AI systems adhere to HIPAA's rigorous privacy and security requirements.

Why is it beneficial to use both continuous monitoring and annual audits for HIPAA compliance?

Combining continuous monitoring with annual audits offers a well-rounded approach to staying HIPAA compliant. Continuous monitoring works to spot and address vulnerabilities as they happen, minimizing the chances of breaches and ensuring you're consistently aligned with regulations. On the other hand, annual audits provide a deeper dive into your compliance efforts, confirming that your controls are working as intended and helping you gear up for regulatory inspections.

Together, these strategies allow healthcare organizations to remain proactive in tackling risks while gaining a clear, big-picture view of their overall compliance standing - especially in today's AI-driven landscape.